In this latest article posted at <a href="http://www.gamasutra.com/xna/">Gamasutra's special XNA section</a>, Xbox/Windows sound guru Brian Schmidt discusses the new cross-platform audio library for Windows and Xbox 360, XAudio2, with much insight into the new audio API.

Welcome to the second of a series of articles on game technology from the XNA group at Microsoft. Last month’s article by Frank Savage discussed features of the newly released XNA Game Studio 2.0, the XNA group’s managed code SDK for community-built games. This month, we turn our attention to the work the XNA team has been doing for native code development in the area of audio technology as we discuss the new cross-platform audio library for Windows and Xbox 360: XAudio2.

A bit of history first: The year is 1995. An Intel Pentium processor running at 133 MHz is bleeding-edge and ISA is the standard PC interface. “Google” means “10 to the hundredth power,” Bill Clinton is in his first term and “Braveheart,” the eventual Oscar winner for best picture, is in theaters. In September of that year, Microsoft releases a new technology for Windows games called DirectX.

Since then, DirectX, and its audio component DirectSound, have allowed game programmers to write to a single API set that works across any hardware with a DirectX-compatible driver. The DirectX model continues to be used today and is by far the most popular native-code game development environment.

Back when DirectX began, DirectSound provided two important functions. First, it provided a simple software mixer, allowing a game running on Intel 486 and Pentium-class CPUs to play and mix sounds in software. Second, it provided a means for games to easily take advantage of any DirectX-compatible audio cards to off-load the CPU for audio processing, particularly 3D audio processing. The buffer metaphor used by DirectSound and the on-board sound memory reflected the slow ISA bus and the architecture of the sound cards of the day.

Over the following dozen years, some major changes occurred in game audio. First and most obvious, the power of the main CPU in PCs and game consoles increased dramatically. In addition to the clock speed increases from 133 MHz to 3 GHz, the nature of the CPUs themselves changed. With the introduction of MMX and SSE/SSE2, a single instruction could operate on several data values at once, so the amount of work done per clock cycle also increased. Audio processing is particularly well-suited to take advantage of these parallel vector processing architectures.

The cherry on top, from a processing-power perspective, is the move to multi-core, hyper-threaded systems. Add it all up, and the amount of CPU processing available for audio has increased by nearly a factor of 100 since DirectSound was first introduced. The end result: today’s CPUs are more than capable of creating extremely compelling game audio all on their own.

The second change in the game audio industry is the nature of game audio itself. When DirectSound was introduced, game audio was generally quite simplistic: when a game event happened, such as a gunshot, the game would play a gunshot wave that was loaded into a DirectSound buffer. The game could set pitch and volume, and also define a roll-off curve with distance by using the DirectSound3D API—but that’s about as fancy as things got. Sixteen or so concurrent sounds were considered plenty.

Today, sound designers and composers have moved well beyond the simplistic notion of just playing a wave file in response to a game event. Sounds are now often composed of multiple wave files, played simultaneously, each with their own pitch and volume. Imagine an explosion sound that is not just a simple recording of an explosion, but rather a combination of the initial low boom, a higher-pitched crack, and a longer tail.

By combining these individual elements at run-time instead of pre-mixing them into a single wave file, a game can create much more variety in sound effects by separately varying the pitch and volume of each component as it’s played back during the game. A more extreme example is found in modern racing games, which can use as many as 60 waves for each car.
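As a rough illustration of the idea, the sketch below picks a fresh pitch and volume for each layer of a composite explosion every time it is triggered. It is plain Python rather than XAudio2 code, and the layer names, variation ranges, and the `Layer` and `trigger` helpers are all hypothetical values chosen for the example.

```python
import random
from dataclasses import dataclass


@dataclass
class Layer:
    """One wave in a composite sound, with its allowed variation (hypothetical)."""
    name: str
    pitch_range_semitones: float  # maximum random detune, up or down
    volume_range_db: float        # maximum random attenuation below full level


def semitones_to_ratio(semitones: float) -> float:
    """Convert a pitch offset in semitones to a playback-rate ratio."""
    return 2.0 ** (semitones / 12.0)


def db_to_gain(db: float) -> float:
    """Convert decibels to a linear amplitude multiplier."""
    return 10.0 ** (db / 20.0)


def trigger(layers, rng):
    """Pick a new (pitch ratio, gain) pair for every layer of the sound."""
    result = {}
    for layer in layers:
        detune = rng.uniform(-layer.pitch_range_semitones, layer.pitch_range_semitones)
        attenuation = rng.uniform(-layer.volume_range_db, 0.0)
        result[layer.name] = (semitones_to_ratio(detune), db_to_gain(attenuation))
    return result


# The three elements of the explosion described in the text.
EXPLOSION = [
    Layer("low_boom", 2.0, 3.0),
    Layer("crack", 4.0, 6.0),
    Layer("tail", 1.0, 3.0),
]

if __name__ == "__main__":
    rng = random.Random(1)
    for shot in range(3):
        print(shot, trigger(EXPLOSION, rng))
```

Each call to `trigger` yields a slightly different explosion from the same three waves, which is the variety that a single pre-mixed file cannot provide.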

We’ve certainly come a long way from the “one sound equals one wave file” notion of game sounds! In addition to composite sounds, environmental modeling—the emulation of audio environments—is now a staple in game sound. This requires flexible reverberation for room simulation, as well as filtering for occlusion and obstruction effects.

Although DirectSound supported some of these new audio needs through its property set extension mechanism, its underlying architecture didn’t have the flexibility to support them all by itself. To meet the changing needs of game audio developers, DirectSound was enhanced to provide basic digital signal processing (DSP) support in the 2000 release of DirectX 8. Although DirectSound 8 allowed a developer to add software DSP effects to a DirectSound buffer, the overall buffer-based architecture of DirectSound remained essentially intact.

If DirectSound has been updated, then why create a new game audio API? The answer goes back to both the industry changes and the design planning for Xbox 360.

When Xbox 360 was in its early design phases, we knew that audio processing (except for data compression) was moving to an all-software model. Drastic increases in processing power, combined with the flexibility and ease of simply writing C code to do any arbitrary signal processing, made that clear. What wasn’t clear was how to make it easy and straightforward for developers to harness the flexibility that the game sound designers and composers wanted for modern video games.

We took a hard look at DirectSound and realized that it was really past its prime; another facelift wasn’t going to cut it for Xbox 360. DirectSound’s metaphor of one-buffer-one-sound wasn’t up to the job for today’s game audio any more than a graphics sprite engine would be up to the job for today’s 3D graphics.

We needed a new audio API, designed from the ground up as a programmable software audio engine. Armed with our vision of what we wanted to enable, along with several years’ worth of suggestions from the community, we created XAudio for Xbox 360.

Concurrent with development of Xbox 360, Windows Vista was well into its own development. The Windows team developed a whole new audio architecture, top to bottom. A key component in that audio architecture was LEAP, Longhorn Extensible Audio Processor. (Longhorn was the codename for Windows Vista.)

LEAP is a very powerful low-level graph-building audio architecture that offers submixing, software-based DSP effects, and efficient design—all of the things that XAudio for Xbox 360 was designed to do. Although LEAP itself was not released as an API directly to developers, it serves as the underlying audio engine for both DirectSound on Windows Vista and the Microsoft Cross-Platform Audio Creation Tool (XACT) on both Windows XP and Windows Vista.

Well, Xbox 360 launched, Windows Vista shipped, and we turned ourselves back to the notion of a cross-platform, low-level replacement for DirectSound. We had received good feedback on our XAudio API from Xbox 360 developers.

At the same time, the LEAP architecture lent itself to a more streamlined and efficient engine with a few features that XAudio didn’t provide, like multi-rate graph support. We took the best of both worlds, and XAudio2 was born, taking the philosophy and style of the XAudio API design coupled with the streamlined engine and features of LEAP.

So what is XAudio2? Simply put, it is a flexible, cross-platform, low-level audio API designed to replace DirectSound for game applications on Windows and replace XAudio on Xbox 360. It allows game designers to create audio signal processing paths that range from simple wave playback to complicated audio graphs with submixes and embedded software-based DSP effects.

XAudio2 provides many features necessary for the creation of modern game sound design:

Cross platform between Xbox 360 and Windows-based platforms, including Microsoft Windows XP and Windows Vista

Arbitrary levels of submixing

A simple streaming model

A software-based, dynamic DSP effects model, both local and global

Simple C language code for arbitrary DSP processing using xAPOs (audio processing objects)

Native compressed data support: XMA and xWMA on Xbox 360, ADPCM and xWMA on Windows

A complement of DSP audio effects

Fully transparent surround sound/3D audio processing

Clean separation of voices from data

Non-blocking processing suitable for multi-core, multi-threaded systems

Efficient and optimized for Windows and Xbox 360

Optimized in-line filter on each voice

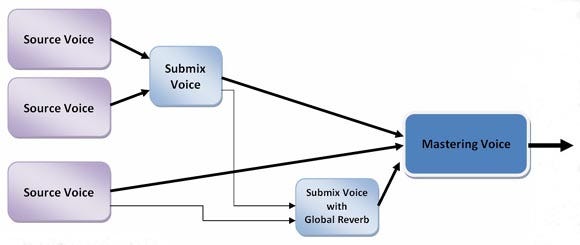

There are three main components in XAudio2: source voices, submix voices, and a mastering voice.

A source voice is most analogous to a DirectSound buffer. You create a source voice when you want to play a sound. You can set parameters on a voice, such as pitch and volume, and specify the volume levels for each speaker for surround effects. You can also dynamically place arbitrary software-based DSP effects on a source voice.

Once you create a source voice, you point it to a piece of sound data in memory and play it. (This data can be in a variety of formats: PCM, XMA, or xWMA for Xbox 360; PCM, ADPCM, or xWMA for Windows.) A source voice can also point to no source data, if it contains software for direct generation of audio data. By default, the output is sent to the speakers via the mastering voice, but a source voice can also send its output to one or more submix voices.

A submix voice is much like a source voice, with two differences. First, the input to a submix voice is not a piece of sound data in memory, but rather the output of another source (or submix) voice. Second, a submix voice can have multiple inputs, each of which is mixed by the submix voice before processing.

As with source voices, you can insert arbitrary software DSP effects into a submix voice—in this case, the DSP will process the aggregate mix of all the inputs. Submix voices also have built-in filters, and can be panned to the speakers just like a source voice can. Submix voices are very useful for creating complex sound effects from multiple wave files. They can also be used to create audio submixes—for example a sound effects mix, a dialog mix, a music mix, and so on—in the way that professional mixing consoles have buses. Submix buses are also used for global effects, such as a global reverb.

The final component is the mastering voice. There is only one mastering voice, and its job is to create the final N-channel (stereo, 5.1, 7.1) output to present to the speakers. The mastering voice takes input from all the source voices and submix voices, combines them and prepares them for output. As with source voices and submix voices, software DSP effects can be placed on the mastering voice. Most typically a 5.1 mastering limiter or global EQ is inserted into the mastering voice for that final, polished sound.

The following figure shows a simple XAudio2 graph playing two sounds with an environmental reverb. The top two source voices are playing sound data to create a single composite sound that is routed to the submix voice.

From the submix voice, a 5.1 send goes to the mastering voice and a mono send goes to another submix voice that hosts a global reverb effect. 3D panning for the composite sound is performed on the first submix voice. The bottom source voice is used to play a single sound. Its 5.1 output goes to the mastering voice and also has a mono send to the global reverb. Of course, many more options are possible, but this shows a common case.
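To make the routing concrete, here is a toy model of that graph in plain Python. It is only a sketch of the signal flow, not the XAudio2 API: the `Voice` and `SourceVoice` classes, the send levels, and the `toy_echo` stand-in for the reverb are all assumptions made for the example, and every signal is mono where the real graph would carry 5.1 and mono sends.

```python
class Voice:
    """Submix or mastering node: mixes its inputs, runs an effect, applies volume."""

    def __init__(self, name, volume=1.0, effect=None):
        self.name = name
        self.volume = volume
        self.effect = effect  # optional callable: list of floats -> list of floats
        self.inputs = []      # (upstream voice, send level) pairs

    def send_to(self, destination, level=1.0):
        """Route this voice's output into another voice at the given level."""
        destination.inputs.append((self, level))

    def generate(self, frames, cache):
        """Sum the rendered output of every upstream voice."""
        mixed = [0.0] * frames
        for upstream, level in self.inputs:
            block = upstream.render(frames, cache)
            for i in range(frames):
                mixed[i] += level * block[i]
        return mixed

    def render(self, frames, cache):
        """Render one block; the cache makes each voice run once per block."""
        if self.name not in cache:
            block = self.generate(frames, cache)
            if self.effect is not None:
                block = self.effect(block)
            cache[self.name] = [self.volume * s for s in block]
        return cache[self.name]


class SourceVoice(Voice):
    """Leaf node that plays sample data held in memory."""

    def __init__(self, name, samples, volume=1.0, effect=None):
        super().__init__(name, volume, effect)
        self.samples = samples
        self.position = 0

    def generate(self, frames, cache):
        chunk = self.samples[self.position:self.position + frames]
        self.position += frames
        return chunk + [0.0] * (frames - len(chunk))  # pad with silence at the end


def toy_echo(block):
    """Stand-in for a reverb: a half-volume copy delayed by one sample."""
    return ([0.0] + [0.5 * s for s in block[:-1]])[:len(block)]


def build_demo_graph():
    """Wire up the graph described in the text and return the mastering voice."""
    boom = SourceVoice("boom", [1.0, 0.0, 0.0, 0.0])
    crack = SourceVoice("crack", [0.0, 1.0, 0.0, 0.0])
    single = SourceVoice("single", [0.0, 0.0, 1.0, 0.0])
    composite = Voice("composite_submix")
    reverb = Voice("reverb_submix", effect=toy_echo)
    master = Voice("master")

    boom.send_to(composite)
    crack.send_to(composite)
    composite.send_to(master)
    composite.send_to(reverb, level=0.5)
    single.send_to(master)
    single.send_to(reverb, level=0.5)
    reverb.send_to(master)
    return master


if __name__ == "__main__":
    print(build_demo_graph().render(4, {}))  # prints [1.0, 1.25, 1.25, 0.25]
```

The mastering voice pulls from everything upstream of it, so the dry signals and the shared effect bus end up summed in one place, much as buses do on a mixing console.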

DSP effects in XAudio2 are performed using software audio processing objects (xAPOs). An xAPO is a lightweight wrapper for audio signal processing combined with a standard method for getting and setting appropriate DSP effects parameters.

Since xAPOs are cross-platform, it is easy to write software-based audio DSP effects that can be run on both Windows and Xbox 360. Typical software effects might include reverb, filtering, echo or other effects, but can also include physical modeling synthesis, granular synthesis, or any kind of wacky audio DSP you might come up with! You can write processor-specific optimizations for Xbox and Windows, but that’s not required.
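The shape of such an object can be sketched in a few lines. The class below is an illustrative Python stand-in, not the real xAPO interface: the method names `process`, `set_parameters`, and `get_parameters` and the choice of a one-pole low-pass filter are assumptions made for the example.

```python
import math


class OnePoleLowPass:
    """Toy effect object: signal processing plus a get/set parameter interface.

    Implements y[n] = y[n-1] + a * (x[n] - y[n-1]), a basic one-pole low-pass.
    """

    def __init__(self, cutoff_hz=1000.0, sample_rate=48000.0):
        self._sample_rate = sample_rate
        self._state = 0.0  # previous output sample, carried across blocks
        self.set_parameters({"cutoff_hz": cutoff_hz})

    def set_parameters(self, params):
        """Update the cutoff and recompute the smoothing coefficient."""
        self._cutoff_hz = float(params["cutoff_hz"])
        self._coeff = 1.0 - math.exp(-2.0 * math.pi * self._cutoff_hz / self._sample_rate)

    def get_parameters(self):
        """Return the current parameters in the same form they were set."""
        return {"cutoff_hz": self._cutoff_hz}

    def process(self, block):
        """Filter one block of mono samples and return the result."""
        out = []
        for x in block:
            self._state += self._coeff * (x - self._state)
            out.append(self._state)
        return out


if __name__ == "__main__":
    effect = OnePoleLowPass(cutoff_hz=1000.0)
    print(effect.process([1.0, 1.0, 1.0, 1.0]))
    effect.set_parameters({"cutoff_hz": 200.0})  # the game can change this at run-time
    print(effect.get_parameters())
```

Keeping the parameter interface separate from the processing call is what lets a game adjust an effect while the audio engine keeps running it block by block.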

3D and surround sound in XAudio2 is perhaps the biggest departure from DirectSound’s model. In DirectSound, DirectSound3D buffers and the DirectSound3DListener were used to take a sound emitter’s x, y, z position in 3D space and cause the sound to seem to come from the appropriate location. In XAudio2, there is no inherent notion of 3D—there is nothing analogous to a DirectSound3D buffer.

Instead, an application specifies individual volume levels for each speaker, typically 5.1 or 7.1. A game can put a sound only in a specific speaker (for example, the center channel for dialogue), or it can calculate the appropriate volume level for each speaker given the x, y, z positions of sound source and camera.

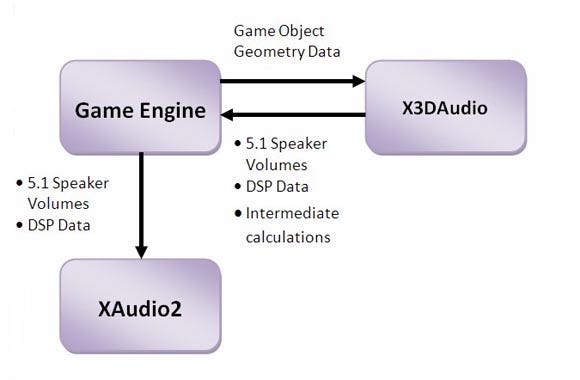

To make this easy, a complementary API, X3DAudio, is used. X3DAudio converts simple game geometry data into signal processing parameters suitable for passing to XAudio2. It takes x, y, z coordinates for sound sources and listeners, and outputs a vector of speaker volumes for each output speaker.
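The kind of calculation involved can be sketched with a generic pairwise constant-power panner. This is not X3DAudio's actual algorithm: the four-speaker layout, the fixed listener orientation (facing +z, with +x to the right), and the `speaker_gains` helper are assumptions for the example, and height (y) is ignored.

```python
import math

# Hypothetical quad layout: speaker name and azimuth in degrees (0 = straight ahead).
QUAD_LAYOUT = [
    ("front_left", -45.0),
    ("front_right", 45.0),
    ("back_right", 135.0),
    ("back_left", -135.0),
]


def speaker_gains(emitter, listener, speakers=QUAD_LAYOUT):
    """Return a per-speaker volume for an emitter at (x, y, z).

    The two speakers that bracket the emitter's direction share the signal
    with constant total power; all other speakers stay silent.
    """
    dx = emitter[0] - listener[0]
    dz = emitter[2] - listener[2]
    azimuth = math.degrees(math.atan2(dx, dz))  # positive angles are to the right

    ordered = sorted(speakers, key=lambda s: s[1])
    gains = {name: 0.0 for name, _ in ordered}
    for i, (name_a, angle_a) in enumerate(ordered):
        name_b, angle_b = ordered[(i + 1) % len(ordered)]
        span = (angle_b - angle_a) % 360.0     # angular width of this speaker pair
        offset = (azimuth - angle_a) % 360.0   # emitter angle measured from speaker a
        if offset < span:
            t = offset / span
            gains[name_a] = math.cos(t * math.pi / 2.0)
            gains[name_b] = math.sin(t * math.pi / 2.0)
            break
    return gains


if __name__ == "__main__":
    listener = (0.0, 0.0, 0.0)
    print(speaker_gains((0.0, 0.0, 5.0), listener))   # straight ahead
    print(speaker_gains((3.0, 0.0, 0.0), listener))   # directly to the right
```

The resulting dictionary plays the role of the vector of speaker volumes: the game would hand values like these to the voice rather than asking the audio engine to understand 3D positions.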

X3DAudio has only two functions, X3DAudioInitialize and X3DAudioCalculate. X3DAudio allows for multiple sound sources, multiple listeners, cones, and a flexible mapping model. X3DAudio also returns the results of some of its own internal calculations, such as source-to-listener distance, internal data from Doppler calculations and other information valuable to the game engine itself.
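For a sense of what those by-products look like, the sketch below computes a source-to-listener distance and a Doppler pitch ratio from positions and velocities using the textbook formula. It does not reproduce X3DAudio's internals: the function name, the tuple-based vectors, and the 343 m/s speed of sound are assumptions for the example.

```python
import math

SPEED_OF_SOUND = 343.0  # metres per second in air at roughly 20 degrees C (assumed)


def distance_and_doppler(emitter_pos, emitter_vel, listener_pos, listener_vel,
                         speed_of_sound=SPEED_OF_SOUND):
    """Return (distance, pitch ratio) for a moving emitter and listener.

    Only the velocity components along the emitter-to-listener line matter.
    Assumes both objects move well below the speed of sound.
    """
    to_listener = [l - e for e, l in zip(emitter_pos, listener_pos)]
    distance = math.sqrt(sum(c * c for c in to_listener))
    if distance == 0.0:
        return 0.0, 1.0  # direction is undefined, so apply no pitch shift

    unit = [c / distance for c in to_listener]
    # Positive when the emitter is closing in on the listener.
    emitter_speed = sum(v * u for v, u in zip(emitter_vel, unit))
    # Positive when the listener is moving away from the emitter.
    listener_speed = sum(v * u for v, u in zip(listener_vel, unit))

    ratio = (speed_of_sound - listener_speed) / (speed_of_sound - emitter_speed)
    return distance, ratio


if __name__ == "__main__":
    # A car 10 m ahead, driving toward a stationary listener at 34.3 m/s.
    print(distance_and_doppler((0.0, 0.0, 10.0), (0.0, 0.0, -34.3),
                               (0.0, 0.0, 0.0), (0.0, 0.0, 0.0)))
```

A ratio above 1 means the sound should be pitched up, and below 1 pitched down; the distance is the natural input to a roll-off curve, and both are values a game engine may want for its own logic as well.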

By separating the signal processing library, XAudio2, from the geometry library, X3DAudio, 3D audio becomes much more flexible and transparent. The following figure shows how X3DAudio and XAudio2 are used together by the game engine to create 3D/Surround sound.

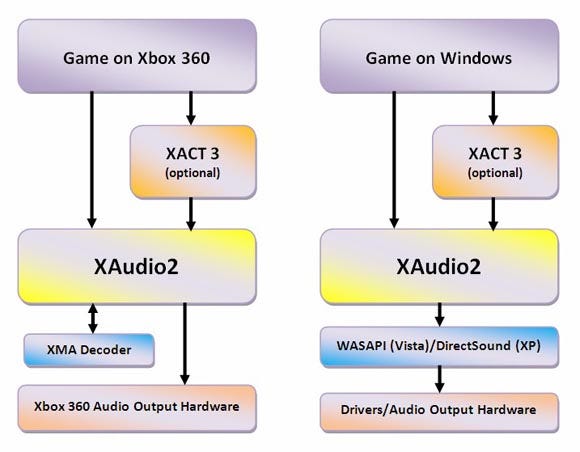

On Xbox 360, XAudio2 sits on top of the XMA hardware decoder and the audio output system of Xbox 360. On Windows Vista, XAudio2 streams directly into the lowest audio API, WASAPI, while on Windows XP, it streams its output to a single DirectSound buffer. For games that use XACT3, XAudio2 is the audio signal processing engine that runs underneath the high-level XACT3 library.

As a low-level audio engine, XAudio2 is under the hood in several other APIs. XAudio2 is the low-level engine driving XACT3. For Windows developers, this is transparent. Xbox 360 developers will notice a change in the way that audio DSP effects are used, which requires some code changes to reflect the new xAPO model.

On Xbox 360, the XHV and XMV APIs now have XAudio2 counterparts: XHV2 and XMV2. These are versions of the Xbox 360 voice and full-motion video player libraries that use XAudio2 under the hood.

XAudio2 is available today as part of the November Xbox 360 XDK and DirectX SDK. If you haven’t taken a look, now’s a great time to do so. The first approved version for Xbox 360 and release version for Windows will be available in the March 2008 XDK/SDK, with updates and enhancements following in June.

The audio team at XNA is tremendously excited about XAudio2 for both Xbox 360 and Windows. It completes a major piece of our cross-platform initiative for audio technologies that we’ve had in the making for some time, and it provides a strong foundation for game audio well into the future.
