
Experienced localization producer Le Dour (Splinter Cell: Chaos Theory) discusses the "high maintenance" job of localizing game audio, mapping out the specifics in this fascinating in-depth article.

“We didn't need dialogue. We had faces!” claims Norma Desmond (Gloria Swanson) in Billy Wilder’s Sunset Blvd. (1950). Except that now we do, and we have a lot in video games.

You snipe a guard in a murky alley, there goes the AI triggered cue; you’ve just started a brand new adventure game, there goes the pre-rendered exposition scene; you’ve defeated a level boss, there goes the scripted event hinting at what to do next. In terms of audio, a big game in the past couple of years had over 10,000 recorded lines. With next-gen platforms and publishers banking on massive content, those figures are likely to grow by a factor of 3, if not 5 or 10, in the medium or long run (and we know the long run isn’t very long in this industry), depending on how effectively AI will manage audiobases [1] and cue variations.

Localizing in-game and video dialogue is a hot topic: it is complicated and expensive and it usually takes place at a time when you have a million other fish to fry (the first being the game you need to finish). This difficulty originates from our games’ high level of sophistication, but also from worldlier principles: the intricacy of level design, programming, animation, script writing (and many other yet to be described tasks) results in what one could describe as a real house of cards. Add pressure from publishers for last minute changes and your production calendar is offset by four weeks while a cold wind of anxiety blows over your already sleep-deprived teams.

Finally, localizing dialogue means dealing with external resources that will carry out translation, casting, recordings and linguistic testing, all tasks that also need to fit into your schedule while your submission date isn’t likely to change.

Localizing audio and video is high maintenance. To paraphrase Harry Burns (Billy Crystal) in “When Harry Met Sally” [2], it’s the worst kind: it’s high maintenance that people misconstrue as low maintenance. It has become even more so in the age of “sim ship” [3]: more and more games, at least AAA titles that need international markets to break even and start generating revenue faster, are localized while the original game is still in its final (or not so final) stage; well, that is exactly like trying to build a house without the final blueprint.

The purpose of this article is to shed some light on original and localized version entanglement and hopefully offer some advice to prevent things from escalating to DEFCON 1.

Figure 1: audio process

Your design is locked, your character brief is ready and you have a “final” recording script for the “original” version (we’ll assume it’s American English). Simultaneously animation is being created, based sometimes on temporary voices. A voice director is hired and briefed on the game mechanics and “feel”.

U.S. casting begins, goes through in-house and/or licensor / publisher approval (if applicable).

U.S. recordings begin (you can do this in-house if you have the facilities or contract a studio if you don’t). Cues are delivered in raw sessions and need to be cut, cleaned (of clicks, scratches and mouth noises) and named [4]. Keepers [5] need to be selected. Lines that will be integrated in cinematic scenes are delivered to the animation department for editing. That’s it: you have a U.S. audiobase that is your reference for localization recording, and here’s why you should definitely wait for U.S. recordings to be over:

U.S. lines provide time references (see 1.3). Animation cannot adapt to five languages [6]. Even if it could, your disc space is limited. If you record “blind”, each foreign file will have a different length. You need the actual U.S. recorded lines so that their localized equivalents match.

Each language “sound databank” will also be the same size – which of course will facilitate data and memory optimization. Before local actors render their lines, they listen to the U.S.’s, then give theirs while checking the wave’s physical form on screen so they can adapt.

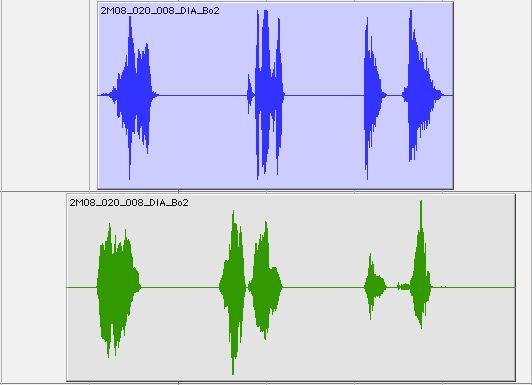

Figure 2: audio waves

You don’t always have the time to select keepers (a lengthy process). Working from the full U.S. recordings allows countries to “mirror” all alternative takes in all languages so that later you can pick the right ones (which frequently happens when animation is not final).

Original recordings provide additional artistic direction and help reduce recording time. U.S. recording times are by and large longer than localizations.

You get back mirrored localized audiobases that are consistent: same folder architecture, identical file naming, matching number of files. This comes in very handy when it’s time for post production and integration.

If U.S. recordings are done, chances are retakes [7] (if deemed necessary) won’t be too numerous. Remember: all retake costs (studio, engineer, actors and voice director) are multiplied by the number of languages.

Localization begins: casting then script translation. Once localization agencies [8] are all set (translated script and U.S. audiobase) they start recording.

Localized audiobases are delivered back to the developer where they are checked for integrity and quality (everything is there and meets the standards). Files are post produced the same way U.S. were. You can then move on with integration.
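The integrity part of this check (same folder architecture, identical file naming, matching number of files) lends itself to a small script. The following is a minimal Python sketch, not a description of any studio's actual tooling: the function names, the `.wav` filter and the sample paths are all hypothetical.

```python
from pathlib import Path


def list_audio_files(root):
    """Return the set of .wav paths under root, relative to root (POSIX style)."""
    root = Path(root)
    return {p.relative_to(root).as_posix() for p in root.rglob("*.wav")}


def compare_audiobases(reference, localized):
    """Compare two sets of relative file paths.

    Returns (missing, extra): files present in the reference but absent from
    the localized set, and files present only in the localized set.
    """
    missing = sorted(set(reference) - set(localized))
    extra = sorted(set(localized) - set(reference))
    return missing, extra


# Hypothetical sample data; real sets would come from list_audio_files().
us_files = {
    "ai/GUARD1_Alert_000.wav",
    "ai/GUARD1_Alert_001.wav",
    "cine/BOB_Intro_000.wav",
}
fr_files = {
    "ai/GUARD1_Alert_000.wav",
    "cine/BOB_Intro_000.wav",
    "cine/BOB_Intro_000b.wav",
}

missing, extra = compare_audiobases(us_files, fr_files)
print("Missing from localized audiobase:", missing)
print("Unexpected in localized audiobase:", extra)
```

A mirrored audiobase should produce two empty lists. This only covers integrity; quality still has to be judged by ear.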

QA and debug can start. Note: if post production is significant and requires a lot of special effects on voices, you can integrate the dry files [9] and proceed with linguistic testing to save some time. The dry files will eventually be swapped with the final ones (voices with effects, final videos) which of course you can’t afford not to check in actual gaming conditions.

1. Audiobase: ensemble of all lines recorded for the game.

2. He was of course referring to women.

3. Simultaneous shipping: original and localized versions ship at the same time.

4. Raw session is cut into single files that need naming, ex: CHAR1_Intro_001

5. When you have alternative takes, you then need to select the keeper (the one that meets your demands).

6. FIGS (French, Italian, German, Spanish) + US. Those are generally the 5 languages you find on PAL discs. Of course the number of languages can go up.

7. Additional recording session (lines were overlooked, quality was poor, lines were added in between etc.)

8. Local service providers that will translate, cast and record the game in each language.

9. Special effects are not done yet.

Localizing audio is expensive. It happens to be the lion’s share of your localization budget, nearing 50 to 70% (depending on the size and number of platforms), whereas translation is around 10 to 15%.

Linguistic QA is not easily quantifiable: it depends on the game size and sophistication and on the number of platforms (but let’s settle for an estimated 10% of the main platform localization budget). The rest of the localization budget is allotted to in-house resources (project management, audio and video integration and debugging, additional programming etc.).

Note: Of course, you translate, record and do post-production once (unless you have platform-specific lines) while you need to test all platforms.

Let’s take a look at factors that make localizing audio pricey. Recording sessions involve specialized people – actors, voice directors, sound engineers, recording managers etc. – and require very sophisticated facilities and equipment. In addition, those people and facilities (studios) are usually on the other side of the world and you cannot bypass labour legislation or trade unions if you want to work with professionals.

You absolutely need native and skilled dubbing actors, a voice director who understands video game mechanics as well as a support / tech team that will come up with a solid schedule and of course the best quality for the best price. Cheap acting and poor quality recording will reflect badly on your game no matter how many special effects and how much music you throw on dialogue. There is really a threshold under which you cannot go (your sound team and publisher should be able to give you standards).

Agencies need to be local: they have bigger talent pools and a good grip on the market. You don’t want your German actors to sound like they’ve been living in Orange County for the past 25 years. Last but not least, localization recording is expensive because you ask vendors to work very fast. U.S. recordings usually take place three to four months before first submission (if all goes well). Depending on how long and late they are, on potential retake sessions, on post-production workload and number of audio bugs, you need localization recordings to be wrapped up very fast. U.S. recordings are commonly three times longer than localizations.

To draft a schedule and a budget you need to provide your vendors with figures so they can send you documented quotes. Each audio category has specificities: lip synch recording is more expensive and requires a specific setup; recording AI cues is very tiring for actors; lines with a strict time constraint will take longer to record. Here’s a quick breakdown of essential information to help your vendors assess needs and costs.

Please note that cost variations depend on countries’ labour costs and fixed union fees.

- Total Number of Lines and Total Words to Record (all categories)

This information helps to create a general timeframe. Averages are never very reliable but here are some (rough) references: recording 2,500 files (15,000 words) is a six-day job for a professional studio including two days for preparation and formatting before the loc audiobase is sent back to you.

Daily studio costs (facilities and staff) vary from 450 € to 900 € ($585 to $1,170) for recording while harmonization (cut & clean, naming) ranges from 250 € to 600 € per day ($325 to $780). Some studios offer per file rates, but it’s rarely to your advantage (that usually means they are using a subcontractor on their end).
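To see how these daily rates turn into a figure, here is a rough worked estimate in Python for the 2,500-file job mentioned above. It rests on an assumption that is not stated in the text: the six days are split into four recording days and two days billed at the harmonization rate. It covers studio costs only (no actors, voice director, casting or translation).

```python
# Daily rate ranges in euros, taken from the figures quoted above.
RECORDING_RATE_EUR = (450, 900)
HARMONIZATION_RATE_EUR = (250, 600)


def estimate_studio_cost(recording_days, harmonization_days):
    """Return a (low, high) studio cost estimate in euros."""
    low = (recording_days * RECORDING_RATE_EUR[0]
           + harmonization_days * HARMONIZATION_RATE_EUR[0])
    high = (recording_days * RECORDING_RATE_EUR[1]
            + harmonization_days * HARMONIZATION_RATE_EUR[1])
    return low, high


# Assumed split of the six-day job: 4 recording days + 2 harmonization days.
low, high = estimate_studio_cost(recording_days=4, harmonization_days=2)
print(f"Studio cost for one language: {low} to {high} euros")
```

Under that assumed split the range works out to 2,300 € to 4,800 € per language. Multiply by the number of languages to get a first order of magnitude before asking vendors for real quotes.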

- Breakdown of Lines and Words Per Category

You need more specific figures because they affect the schedule and the budget.

AI cues (AI triggered as opposed to scripted or pre-rendered dialogues) include:

“Barks” or “organics” - These are non verbal lines, onomatopoeias if you will. They are screams, surprise, pain reactions etc. They do not require translation per se (in rare cases some will need adaptation because they’re not applicable for all languages – particularly for Asian languages).

In a perfect world, you wouldn’t need to record them (i.e. you would use the U.S. in all localized versions) but annoying voice discrepancy issues happen. If there are a lot and you are behind schedule and running out of money you can skip recording these: sound designers are eager to offer players diversity but it’s not uncommon to use only three out of the ten recorded variations. So if you cut, you want to cut where there’s meat.

Note: They are also extremely tedious and tiring to record. Actors can’t record barks for more than one or two hours in a row without putting their voice at risk (some actors will ease up if they're on stage or have extra recording work scheduled for the same day).

One liners - Short sentence lines pertaining to various reactions from non playing characters and playing characters in predetermined AI conditions. They can be taunts used in boss fights, warnings from your SWAT commander, etc. They are extensively used in multiplayer games. They need translation and recording in all languages.

“Intruder! I repeat intruder in HQ!”

“Send back-up!”

“Friendly fire!”

“Fire in the hole!”

“Is this the best you can do?”

Note: AI cues are almost never subtitled: they usually play while gamers are focusing on action rather than reading, plus lines can play simultaneously and create subtitle overlapping.

Ambient Dialogue

This type of dialogue brings lively background to environments: two mercenaries discuss how low their wage is or whine about their superior, merchants and customers bargain in a market, etc. Usually they are location triggered: the player reaches a zone and the dialogue starts – the closer the player gets to the scene the louder it is. This isn’t generally subtitled.

Scripted Events

Scripted events are in-game scenes where the camera and point of view are usually blocked or semi blocked so that players are forced to watch the whole scene as it is essential to story development. The scene and dialogues are programmed to play under certain conditions. If your game is subtitled this type of dialogue (also called “critical path”) is your number one priority.

Forms: it can be a single file (dialogue has been edited on one track) and if so, you want to make sure it has been edited correctly in all languages. That is why you want each individual file to be named the same so that your editor who doesn’t understand Japanese or Italian can edit dialogue efficiently and with a low risk factor. Another solution consists of using different tracks playing one after the other; in that case, errors are easier to fix (you don’t have to edit the whole dialogue again) but also more likely to happen, e.g. a file doesn’t play, lags or plays in the wrong language.

High Resolution Cinematics (AKA cut scenes)

The Rolls Royce of audio / video production: they generally intro and outro levels, and you want the quality to be top notch. Scenes feature animated close-ups and sometimes require lip synch. If they do, you need the image to record, meaning the animation has to be locked (you can record with temporary textures and visual special effects).

The main problem is you get to that stage pretty late, which forces you more often than you’d want to hold a separate recording session for cinematics. It's a bit more expensive – you need to book studios, voice directors and actors again – and a bit stressful – your post production team will have less time to finish the final mix – but it’s a good bet.

Producing cinematics is very expensive: they are usually short and don’t have a lot of dialogue, so hopefully if you need to hold a late recording session it won’t take long. Once scenes are final, it’s almost a “can’t go wrong” scenario aside from format and subtitle issues.

Figure 3: Breakdown for a medium sized FPS

- Number of Characters to Cast

Depending on what you want, you either ask your vendors for already recorded samples (the price of which is usually included in the project management fee) or for a live casting.

Live casting is expensive because you use actors and a voice director in studio facilities. They come and give audition lines. Casting costs vary greatly depending on actors’ minimum fees. A 10-part casting can reach 3,000 € ($3,900) in countries where labor is expensive. But it’s very practical when you want to ensure chemistry between actors, and comes in handy when you need to speed up your licensor or publisher’s approval if they lack imagination.

- Number of Characters

Unless you’ve robbed a bank or have Steven Spielberg’s budget, it’s unrealistic to hire one actor per role. Studios will cast one actor for several roles (usually a 1 to 4 ratio).

Legal fees and labor legislation are different from one country to another, but unless your only dialogue is a single voice over, actors’ salaries are always the bulk of your localization audio budget (around 30%).

Be specific about what you want and list your recordings specs:

- Distance between actors and microphone

- Type and / or brand of microphone

- Levels and volume settings

- Studio environment (rooming etc.)

- Cut and clean rules: what and where to cut to avoid unnecessary silences, acceptable clicks or other parasite sounds etc.

- Format (e.g. wav 48/24) and folder structure for delivery: individual files sorted by character and categories, Pro Tools or Pyramix sessions for videos with tracks synched on image etc.

These technicalities are not trivial: they help overall consistency and save a lot of time during post production; levels adjustment will be light and the final M&E (music and effects) will not require much fine tuning.

Make sure this list is sent early enough overseas so that it can be translated if needed.

Time constraints are important to manage disc space and streaming but also to optimize animation: the more rigid they are, the more U.S. and localized audio waves will look alike (and the longer it will take to record). These are decided by your lead sound person in coordination with animation. Finally, translators need to know how much they need to twist the number of syllables or bend their translated text so that it matches the constraint.

- Voice Over (A.K.A. VO)

Oddly enough, it means no constraint and usually applies to AI barks and one liners whose dependency on image is weak. You don’t get to see close-ups on characters delivering lines. In reality you want lines to be roughly the same length but that doesn’t need to be precise.

- Time Constraint (A.K.A. TC)

Localized files can be up to 10% longer or shorter than the U.S. files. It’s mostly a safety net to prevent movement synch issues: you’ve just stabbed a guard in the back and he collapses. It’s nicer if he stops moaning after he’s dead. The difference is quite detectable for collisions, jumps, hits and impacts you can actually see on screen. Unless you’ve got bionic ears you won’t be able to tell a TC line from an STC one, but if you zoom in on the wave you’ll definitely see a difference.

Figure 4: Blue graph is U.S., green is localized file.

- Strict Time Constraint (A.K.A. STC)

Length of U.S. and localized files match (exactly). Those are mainly used in scripted dialogues that do not feature close-ups.

Figure 5: STC files

- Sound Synch (A.K.A. SS)

The SS is an overzealous STC that mirrors silences in its corresponding U.S. file. It’s well illustrated if you compare two waves: length and form are identical. It’s used in scenes where animation and dialogue are intricate, i.e. you see the back of one character yelling on the phone: you don’t want the arms to wave on silence. If it’s a close-up of a giant speaking mouth you want words to come out of it when it’s open. Sound synch, commonly used in games for kids, can also be considered a (cheap) variant of lip synch. Note that the bigger the constraint is, the tougher on translators and actors.

- Lip Synch (A.K.A. lip)

High resolution cinematics call for lip synch but not for all lines (thank God) mainly because face animation is super long. The best setup is to prepare U.S. sessions in which dialogue and image are already synched (locked on time codes). Localization studios just need to mirror the dialogue tracks to get an identical print. Spanish (purple) mirrors US (blue). You get almost ready to mix material on which M&E will adjust fine (see Figure 6: synch session).

Figure 7: 2 lip synch files
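The three length-based constraints described above (VO, TC and STC) can be checked automatically once both audiobases are in hand. The Python sketch below is only an illustration under stated assumptions: the 0.05 second tolerance for an "exact" STC match is a hypothetical value, the function names are made up, and sound synch and lip synch are left out because they require comparing the wave shape or the image, not just the duration.

```python
import wave


def wav_duration_seconds(path):
    """Duration of a PCM .wav file in seconds, read with the standard wave module."""
    with wave.open(str(path), "rb") as wav_file:
        return wav_file.getnframes() / wav_file.getframerate()


def meets_constraint(us_seconds, loc_seconds, constraint, stc_tolerance=0.05):
    """Check a localized line's length against its U.S. reference.

    VO:  no constraint, always accepted.
    TC:  localized length within 10% of the U.S. length.
    STC: lengths match, within stc_tolerance seconds (assumed value).
    """
    difference = abs(loc_seconds - us_seconds)
    if constraint == "VO":
        return True
    if constraint == "TC":
        return difference <= 0.10 * us_seconds
    if constraint == "STC":
        return difference <= stc_tolerance
    raise ValueError(f"Unsupported constraint: {constraint}")


# Hypothetical durations in seconds for one U.S. line and its localized take.
print(meets_constraint(2.0, 2.15, "TC"))   # within 10%
print(meets_constraint(2.0, 2.15, "STC"))  # too far off for a strict match
```

Running such a check on delivery flags lines that need a retake or a light edit before they reach integration.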

You need detailed quantities and rates because you will then initiate a purchase order. If the quantity is huge you may be able to negotiate rates. Here is what a sound quote looks like (for one language):

Note that some companies may ask for a 30% or so installment. Should quantities change significantly you need to ask for a new quote and adapt your budget and schedule. This is why the person in charge of the localization budget and overall organization must be kept in the loop at all times.

But your audio budget is not yet final. The following costs may be added (they may be in-house or external).

Check on localized audiobases (overall quality, integrity): n days of work x languages

Post production on localized audiobases (special effect, editing)

Additional recording session for cinematics: n languages

Video editing: n days of work x languages

Subtitling: n days of work x languages

Integration: n days of work x languages

Sound debug: n days of work x languages

Well-organized documentation offers various advantages: information is presented in a consistent way, data can be processed more easily and you don’t start from scratch for each new project. It’s also quite helpful when you want to run post production analysis: you can only compare what is comparable.

Character Brief

If you want localization agencies to cast actors they need food for thought. But you’re lucky on this one: this document should already exist for the US version. It just needs a bit of revamping so that it answers questions relevant to localization. (You also probably want to have visuals a bit more elaborate than mine.)

Let’s take a closer look at the table’s categories:

It goes without saying: characters are not always humans; they may be animals, aliens, monsters etc. Voice ages are for voice range reference only. Remember: in most countries casting children is a real headache due to strict labor regulation and because young actors are less flexible and tire out faster. Studios know that and most of them have a pool of actors who will do a good job impersonating kids or very old persons.

Accents are trickier for several reasons: some work well for languages, but others don’t and good actors who won’t sound artificial are hard to find. I remember discarding all mercenaries’ Asian accents in all European versions of a first person shooter because it simply didn’t work. Tests showed it was way too cartoony for this type of game.

In other situations, accents need to be rethought because they are meaningless: in the U.S. version of Finding Nemo (Disney / Pixar 2003), Jacques the cleaner shrimp was French and spoke “of cooorse wiz a verry heavy French accent”. Obviously that wasn’t going to work for either the French movie or the French localized game. In the end, Jacques was given a south of France accent (Marseille) that kept the character's specificity and "cuteness".

Some games feature foreign language lines to give local color. That works nicely in war and action games set in realistic environments like Call of Duty or Splinter Cell. But if you have German lines what about the German game (where all characters should speak German)? Es klappt nicht! (It doesn’t work!)

If you decide not to localize those lines (mainly because you are not Croesus and are running out of smart ideas), sound integration might get complicated: you can swap mirrored audiobases because the number of files is identical. It won’t be the case with hybrid localized audiobases including original lines. Finally, you might not need recordings but remember you may need translation if you have subtitles (if it’s critical path dialogue) so do not take the lines out of the script.

Accents and cultural references work hand in hand. They are relevant if sonorities and tones are discernible in different languages: French people can tell Scots from Irish when they speak English (well some of us can) but it’s much harder when they speak French (I will eat my laptop if you prove me wrong). So in this case, you might want to go for a simple British accent.

Devil’s advocate: “So why bother? We should focus on the original version and subtitle everything for foreign markets.” These countries are – like it or not – dubbing countries and altogether they represent huge markets (for some franchises the main market). So you really want to be cautious with accent choices. Some can even be offensive for local minorities. The right Commonwealth pick may be totally irrelevant for the rest of the world. Discuss these issues with your publisher and local managers and poll your localization agencies: they have good expertise in dubbing. [10]

Don’t overindulge in Hollywood references. They help convey the character’s feel but they can be misleading if you are looking for something very specific. Dubbing cultures are different. Localization does not necessarily imply copying; it’s very much about adaptation.

I remember near-death experiences listening to Spanish voices because our hero created a new testosterone standard and sounded 15 years older than in the US version. When I checked with some of our Castilian crew, they were enthusiastic. Standards are different. That is the very purpose of standards. Bruce Willis’ Spanish voice doesn’t sound like Bruce Willis. We might as well get used to it if we want to sell movies or games in Madrid.

Casting Lines

If you need a live casting, ask your game designer or creative director to select three or four lines archetypal of each character (it needs to cover a certain range of emotions unless you are looking for replicants). Even better, select a whole scene. Once lines are translated and recorded (usually three or four actors will test per role), edit a few files together so you can test chemistry between actors (it’s important if they share a lot of scenes; think buddy movies and good cop / bad cop routine).

Character Breakdown

This sheet assigns roles to actors. Because in big games there might be over a hundred parts and you can’t pay for a hundred actors, you need to make sure that the breakdown is done in a way that no actor ends up speaking to himself. If Guard1, Guard5 and Colonel1 are assigned to Actor1, it means that Guard1, Guard5 and Colonel1 can’t have scenes together. You can use Excel’s pivot table function. This will greatly help in organizing recording sessions.
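The "no actor speaks to himself" rule can also be verified outside a spreadsheet. Here is a minimal Python sketch of that check; the data structures, scene names and the function name are hypothetical, chosen only to mirror the Guard1 / Guard5 / Colonel1 example.

```python
from itertools import combinations


def find_casting_conflicts(role_to_actor, scenes):
    """Return (scene, actor, role_a, role_b) for every pair of roles that
    share a scene while being assigned to the same actor."""
    conflicts = []
    for scene, roles in scenes.items():
        for role_a, role_b in combinations(sorted(set(roles)), 2):
            actor = role_to_actor.get(role_a)
            if actor is not None and actor == role_to_actor.get(role_b):
                conflicts.append((scene, actor, role_a, role_b))
    return conflicts


# Hypothetical character breakdown: role -> actor.
role_to_actor = {
    "Guard1": "Actor1",
    "Guard5": "Actor1",
    "Colonel1": "Actor1",
    "Bob": "Actor2",
}

# Hypothetical scene list: scene -> roles that speak in it.
scenes = {
    "Barracks": ["Guard1", "Bob"],
    "Briefing": ["Colonel1", "Guard5", "Bob"],
}

conflicts = find_casting_conflicts(role_to_actor, scenes)
for scene, actor, role_a, role_b in conflicts:
    print(f"{scene}: {actor} plays both {role_a} and {role_b}")
```

In this sample the Briefing scene is flagged because Colonel1 and Guard5 are both given to Actor1, so one of the two roles has to be reassigned.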

10. Regarding specific licenses and movie tie-ins you may check my earlier article: Localizing Brands and Licenses.

Because many people (script writer, sound design, recording studios, translators, post production etc.) are going to work on the recording script and use it for different purposes, you need a layout that allows data sorting and painless formatting.

Excel works well if all fields are consistent and if you can play with columns without chaos-inducing consequences. A well-organized script gives you instant grasp on the big picture (storyline) without neglecting details. It’s a good idea to keep a master version of the script at all times (and to notify and document each important change) so that no one is kept out of the loop.

Recording Script

Category tabs are a good arrangement. It’s important that columns are the same (and ordered in the same way) in each tab because studios won’t use sequential order to record but rather proceed by characters, one after the other. They will create recording sheets for each actor using information gathered in several tabs and you want to make their lives easier. In each tab, there are a number of fields important for translation and recording and you don’t want to lose information while copy/pasting.

Characters (column B): if your character’s name is Jo, make sure there is no entry where it’s spelled Joe or Joseph; otherwise, so much for data sorting and character breakdown. Same logic for Guard1 and Soldier1. Make choices and stick to them.

Location (column C) helps convey the general context; it also pinpoints where the line should play for testing purposes. There can be sublevels for extra accuracy (stating for example in which conditions the line plays).

The context info (column D) provides details on premises, action, protagonists etc. It’s important for translation and crucial to acting. Let’s take a very plain line: “Man, I can’t see a thing.” The delivery will be very different whether the character is in stealth mode in the dark or about to skydive. You don’t want this information in the text column (F) because it can be quite lengthy and will disrupt actors’ reading plus you need an accurate word count for translation and for recording.

File naming (column E) a.k.a. Pandora’s Box. The naming convention must be identical for all languages and you should be able to increment it if lines are added while recording (alternative takes or new lines) without offsetting the whole thing. Keep it logical: type, character, generic location (abbreviation should also be logical) and number (starting with 000 is essential for sorting).

Bear in mind that people around the world and across the universe work on different platforms (Mac, PC, Linux), so try avoiding file names that are too long: they shouldn't exceed 31 characters including the extension. Use letters (you can stick to the 26-letter English alphabet) or numbers, plus a three-character extension.

One last thing: often, generic characters have identical lines (for the sake of variety) and to wrap a recording session faster they’re sometimes named the same. Each line must have its own file name (even if they are located in different folders/directories). Eventually you’ll need to name them (for post production and debugging purposes) and renaming several thousand audio files (each will need one "in" and one "out" name) is highly time consuming, expensive and risky with foreign languages.
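The naming rules above (31 characters at most including the extension, English letters or digits, a three-character extension, and one unique name per line even across folders) are easy to test before a script goes out to vendors. The Python sketch below is a hedged illustration: allowing underscores is an assumption based on the CHAR1_Intro_001 example, and the function name and sample paths are hypothetical.

```python
import re
from collections import Counter
from pathlib import PurePosixPath

MAX_NAME_LENGTH = 31  # including the extension
# Letters, digits and underscores (underscore is an assumption), then a
# dot and a three-character extension.
NAME_PATTERN = re.compile(r"[A-Za-z0-9_]+\.[A-Za-z0-9]{3}")


def check_file_names(paths):
    """Return a list of (file_name, problem) tuples for the given paths."""
    problems = []
    names = [PurePosixPath(path).name for path in paths]
    for name in names:
        if len(name) > MAX_NAME_LENGTH:
            problems.append((name, "longer than 31 characters"))
        if not NAME_PATTERN.fullmatch(name):
            problems.append((name, "unexpected characters or extension"))
    # The same name must not appear twice, even in different folders.
    for name, count in Counter(names).items():
        if count > 1:
            problems.append((name, f"used {count} times"))
    return problems


# Hypothetical delivery list.
paths = [
    "ai/guard1/AI_GUARD1_HQ_000.wav",
    "ai/guard2/AI_GUARD1_HQ_000.wav",
    "cine/CINE_BOB_Récif_001.wav",
    "se/SE_COLONEL1_HEADQUARTERS_LEVEL03_000.wav",
]

problems = check_file_names(paths)
for name, problem in problems:
    print(f"{name}: {problem}")
```

Here the accented name, the over-long name and the duplicated name are each reported once, which is cheaper to fix at script stage than after several thousand files have been recorded.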

Using the column H, I, J, K filters you can sort out lines by time constraint, isolate those that are not to be recorded (organics or native), lines that have subtitles (this will help the person in charge of linking text and audio) etc. Filters will save your life when it’s time to budget.
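The same filtering can be reproduced on an exported copy of the script when it is time to budget. The following Python sketch assumes the script has been exported to CSV; the column names (`constraint`, `to_record`, `subtitled`) and the sample rows are hypothetical stand-ins for the filter columns, not the actual layout.

```python
import csv
import io
from collections import defaultdict

# Hypothetical CSV export of a few recording script rows.
SCRIPT_CSV = """file_name,character,text,constraint,to_record,subtitled
AI_GUARD1_HQ_000,Guard1,Intruder! I repeat intruder in HQ!,VO,yes,no
AI_GUARD1_HQ_001,Guard1,Send back-up!,VO,yes,no
AI_GUARD1_HQ_002,Guard1,(pain scream),VO,no,no
SE_BOB_REEF_000,Bob,"Man, I can't see a thing.",STC,yes,yes
"""


def summarize_by_constraint(rows):
    """Count lines and words to record, grouped by time constraint."""
    totals = defaultdict(lambda: {"lines": 0, "words": 0})
    for row in rows:
        if row["to_record"] != "yes":
            continue  # organics or native lines that are not recorded
        bucket = totals[row["constraint"]]
        bucket["lines"] += 1
        bucket["words"] += len(row["text"].split())
    return dict(totals)


rows = list(csv.DictReader(io.StringIO(SCRIPT_CSV)))
summary = summarize_by_constraint(rows)
subtitled = [row["file_name"] for row in rows if row["subtitled"] == "yes"]

print(summary)
print("Lines with subtitles:", subtitled)
```

The per-constraint line and word counts are the figures vendors need for a quote, and the subtitled list is what the person linking text and audio works from.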

You can add as many columns as you see fit as long as the column order remains identical, i.e. a column for integration that would read: integrated / work in progress / post production in progress, a column for special effect and video etc...

Note: script writers usually hate writing in Excel (who doesn’t?). They are much more comfortable with screenplay formats like Final Draft for instance. Name someone to take care of copy/pasting your writer’s script into a format that works for recording and translation. Then have your lead animator, lead sound design person enter their own specs, info etc.

As Recorded Script

The ARS is a commented script as recorded. It documents all lines whose content was modified and / or for which alternate takes were recorded.

In our U.S. mini script, two lines were added: one onomatopoeia line was recorded with different projection and tone so we have two types of volume and distance effects we can later choose from (animation isn’t locked and we still don’t know how far Bob and Cal are going to swim from each other). One line was duplicated using a different word (seaweed instead of weed). Of course, changes are highlighted in a different colour and file names have been incremented.

Adding comments is really helpful. You can’t tell the difference unless you actually listen to the files (which of course you need to do) and read a quick description. If you have hundreds of files to listen to, it’s best to know what you’re looking for. Because you need to clear the as recorded script of stricken-through words and other comments, run a last spell and typo check; that way you already correct minor (but annoying) issues. Finally, you end up with subtitles that are a perfect match with audio (Sony Computer Entertainment really cares for that): your testers will have more time to focus on important bugs.

Localization agencies need to send you an as recorded script as well for the same reasons. Plus, it’s of great help during post production. Even if your team doesn’t understand a word of Dutch, they can follow the script (of course, forget about this with other alphabets, Cyrillic for instance, or writing systems such as Kanji, Katakana etc.; the world is an imperfect place).

Here’s an example:

We subtitle games for hearing impaired players and so that gamers can give their ears a break. Also because you won’t sell many games in Europe or Asia if they’re not at least partially localized (meaning original dialogue with subtitles).

In some countries, French Canada and France for example, you need a French version if you want your game on shelves. Partial localization is also way cheaper than full (because of the 50 to 70% budget). So you’d better anticipate and have your programming team adapt the engine (tags linking text and audio so when dialogue plays, its corresponding text is displayed on screen). Additionally, it needs to adapt to all writing systems depending on markets you target.

So if you plan on having subtitles, it’s best to let your user interface lead know. You need sufficient space for the text, horizontally and vertically (don’t forget there are a lot of diacritical characters and that some writing systems use 2,000-year-old ideograms). Some languages also read vertically or from right to left; think Hebrew or Arabic for instance (do not mock small markets). Be positive: it could be worse if hieroglyphs were still used. Check that all the alphabets you need are supported in the fonts you use (TTF or bitmaps, uppercase AND lowercase).

Á | É | Í | Ó | Ú | Ü | Ñ | ¡ | ¿ |

á | é | í | ó | ú | ü | ñ |

Figure 7: Castilian diacritical characters (caps and lowercases)
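A font coverage check of this kind is easy to automate before testers ever see a build. The sketch below is an assumption-laden illustration: `missing_glyphs` and `FONT_CHARS` are hypothetical names, and in practice the set of supported characters would be read from your TTF or bitmap font rather than typed in by hand. Here the made-up font has the uppercase accented letters from Figure 7 but not the lowercase ones or the inverted punctuation.

```python
import string

# Hypothetical font: ASCII letters, digits, ASCII punctuation,
# plus only the UPPERCASE Castilian letters from Figure 7.
FONT_CHARS = set(string.ascii_letters + string.digits + string.punctuation + "ÁÉÍÓÚÜÑ")


def missing_glyphs(text, supported):
    """Return the sorted list of non-space characters the font cannot display."""
    return sorted({ch for ch in text if not ch.isspace() and ch not in supported})


print(missing_glyphs("¿Dónde está Bob?", FONT_CHARS))
```

Running every translated string through a check like this catches the classic case where uppercase accents were drawn and lowercase ones were forgotten.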

High res video subtitling works a little bit differently. To start with, you don’t have enough disc space to physically duplicate videos (per language) nor can they remain in their original format (huge files): you will use a smaller format set to play different channels depending on the selected language (like in Bink).

Unfortunately, this (very practical) option doesn’t work for subtitles, and text cannot be burned into the image as it is in traditional subtitling (it would create as many duplicated files as you have languages). So the engine needs to call one text file per video that displays text based on time code (as opposed to single audio files calling single bits of text in-game). Again, high res videos do not feature too much dialogue, so we’re not talking Twelve Labours.
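A time code lookup of this kind could look like the sketch below. It assumes a cue list of (start, end, tag) tuples in seconds, sorted by start time and not overlapping; the cue values, the `INTRO_00x` tags and the `active_cue` function are invented for the example, and the tag would then be resolved to text in the selected language.

```python
from bisect import bisect_right

# Hypothetical cue sheet for one video: (start_seconds, end_seconds, subtitle_tag).
CUES = [
    (0.0, 2.5, "INTRO_001"),
    (3.0, 6.0, "INTRO_002"),
    (6.5, 9.0, "INTRO_003"),
]


def active_cue(cues, t):
    """Return the tag of the cue on screen at playback time t, or None."""
    starts = [start for start, _end, _tag in cues]
    i = bisect_right(starts, t) - 1   # last cue that started at or before t
    if i >= 0 and t < cues[i][1]:     # still before that cue's end time
        return cues[i][2]
    return None


print(active_cue(CUES, 1.0))   # inside the first cue
print(active_cue(CUES, 2.7))   # in the gap between two cues
```

One cue sheet serves every language, since only the text behind each tag changes; the video file itself is never duplicated.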

You also want to be careful with annoying things such as PAL vs. NTSC (if settings are not right, image or sound can lag). Last but not least, run some tests on the font you’re planning to use, the minimum being a legibility check (all the more if you develop for or port to PSP: its 16:9 screen remains small).
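To see why the PAL vs. NTSC setting matters for timed text, here is a small arithmetic sketch. It assumes, purely for illustration, that cues are stored as frame numbers; PAL video runs at 25 frames per second and NTSC at 30000/1001 (roughly 29.97), so the same frame number does not land at the same moment. The function names are hypothetical.

```python
from fractions import Fraction

PAL_FPS = Fraction(25)            # PAL video frame rate
NTSC_FPS = Fraction(30000, 1001)  # NTSC video frame rate, roughly 29.97


def frame_to_seconds(frame, fps):
    """Exact playback time of a frame index at the given frame rate."""
    return Fraction(frame) / fps


def retime_frame(frame, from_fps, to_fps):
    """Nearest frame index at to_fps for the moment `frame` marks at from_fps."""
    return round(frame_to_seconds(frame, from_fps) * to_fps)


# A cue authored on frame 750 of a PAL video sits at 30 seconds...
print(float(frame_to_seconds(750, PAL_FPS)))
# ...but frame 750 read at the NTSC rate is only about 25 seconds in.
print(float(frame_to_seconds(750, NTSC_FPS)))
# The equivalent NTSC frame for the same moment:
print(retime_frame(750, PAL_FPS, NTSC_FPS))
```

Storing cues in seconds rather than frames sidesteps the conversion altogether, if your pipeline allows it.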

Remember: video game subtitling is different from movies. Only a fraction of dialogue is actually subtitled in movies or television (again, the critical path), mainly because our ears differentiate between syllables and words and associate meaning with them faster than our eyes can read.

Developers don’t have the time to create real subtitles, because the adaptation time is significant. So they came up with text that comes and goes (or rolls) along with the audio, creating a rhythm that is not very natural. Don’t make it harder to read: renounce fancy fonts that will display poorly or bleed on regular TVs.

Organization & Process

We’ll go through this quickly as there’s enough to cover on linguistic testing for a standalone article (hopefully soon to be ready!). However tempting this may be, skipping linguistic testing is not a good idea. No matter how well organized you were and how stable your build is, you will have localization specific bugs and other functionality issues impacting all versions to deal with. There is no such thing as a bug free build and eventually you’ll need to waive some.

Linguistic QA requires a lot of expert resources, equipment and a bullet-proof process so that testing and debugging move along smoothly. Very few developers or publishers are set up to carry it out in-house (I believe only Nintendo is). You need testers who are not only natives of all the countries where your game will be released but also fine grammarians and proofreading specialists, and that’s no piece of cake. Then you need to sit everyone next to a debug kit and a PC to enter bugs in a database, burn copies for them, and give them guidance and documentation.

Let’s assume your game is small (if so, one tester per language is enough) and localized in only four languages; that’s still four fewer debug kits and PCs for functionality testing (times the number of platforms). It also requires a lot of flexibility (late or night shifts are not unusual), so unless you have C-3PO droids working around the clock, you will need to outsource at some point.

Working with a QA contractor means coming up with a solid QA plan so that testing time is optimized. You might want to continue working with the localization agencies that ran translations and recordings in the first place, but they are not necessarily staffed to meet urgent requests. One of the biggest challenges for outsourcers is responsiveness: things rarely turn out as expected in this line of work and you cannot expect every company to gather a SWAT team of 15 German testers within two hours.

And you don’t want those 15 German testers to idle (while getting paid) because you’re late on your next build or because it crashes. Plus, staying with your localization agencies means dealing with as many vendors as you have languages, and sending x builds to the other side of the world will make your security and data managers go bananas.

You may also choose to hire a company specialized in QA: they have bigger resources, provide tech support, consoles and PCs (and chairs…) and deal more easily with emergency demands (and with some clients’ lunacy). You also get results for all languages at the same time which is helpful.

In either case, vendors need to be prepared: they must get their hands on a U.S. version to get familiar with the game’s content and mechanics. They need to know what you want them to do and they need a rough schedule so they can arrange for resources.

That said, you also need to clear all security issues beforehand. Have them sign a non-disclosure agreement and check their facilities and setup (remember you may also work on an IP that’s not yours or on a movie tie-in, and you don’t want the plot to leak). Provide them with a detailed walkthrough (with cheats) and other support documentation such as the as recorded script.

If your game is multiplatform, you might just as well test the main platform first. Chances are that fixing those bugs will prevent duplicates from multiplying in your database. Have the other platforms tested once those first bugs have been fixed. Arrange your schedule so that you have enough debugging time and resources between testing rounds. Linguistic QA is expensive: between 20 and 50 euros an hour depending on the language (late and night shifts cost more).
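To get a feel for the numbers, here is a back-of-the-envelope sketch. Only the hourly rate range of 20 to 50 euros comes from the figures above; the function name and the example of four languages, one tester each and 40 hours per testing round are assumptions made up for the illustration, and late or night shift surcharges are left out because they vary by vendor.

```python
def lqa_cost_range(languages, testers_per_language, hours_per_tester,
                   rate_low=20, rate_high=50):
    """Return (low, high) cost in euros for one round of linguistic QA.

    Rates default to the 20 to 50 euros an hour range; shift surcharges
    are not modeled.
    """
    tester_hours = languages * testers_per_language * hours_per_tester
    return tester_hours * rate_low, tester_hours * rate_high


# Hypothetical small game: 4 languages, 1 tester each, 40 hours per round.
low, high = lqa_cost_range(languages=4, testers_per_language=1, hours_per_tester=40)
print(f"One round, one platform: {low} to {high} euros")
```

Multiply by the number of testing rounds and platforms you plan for, and the case for fixing the main platform first becomes obvious.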

Bug Categories

Explain how you want the bugs to be referenced and how you wish them to be described. Here are a few examples of audio bugs:

- Audio file not playing:

This might happen for several reasons: a file name issue, a file that was overlooked during integration or is still in post production, etc. It can also be a volume issue if the mix isn’t final yet. If it’s an insignificant one-liner, it’s not really a problem, but all of your critical path must play.

- Audio not playing in a pre-rendered video:

Check channels assigned to languages etc.

- Audio playing in English or in the wrong language:

Again, this may be an integration issue or a setup problem in the sound data.

- Wrong audio:

This one is annoying, especially if the wrong line is totally out of context or if the video dialogue has not been correctly edited.

Please note that the categories listed above are likely to jeopardize your submission. But other joyful issues will arise, such as lags (usually in the US version as well), scratches and volume problems (due to negligence or a simple build burn issue). Subtitles will bring headaches too: they display their ID tag instead of the text, don’t display at all, or display out of sync. You need to prioritize, especially at this time when your dev team is already buried under work: the last thing they want to hear about is another localization overload.
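Some of these bugs can be caught by a script before a build ever reaches the testers. The sketch below is a rough illustration under assumptions: the `audit_language` function, the `TAG_language.wav` naming convention and the sample data are all hypothetical, so adapt them to your own file naming and text database. It flags lines whose audio file is absent and subtitles that are empty or still equal to their ID tag.

```python
def audit_language(tags, audio_files, subtitles, language):
    """List (tag, problem) pairs for one language before the build is burned.

    tags        -- dialogue tags from the as recorded script
    audio_files -- set of audio file names actually delivered
    subtitles   -- dict mapping tag -> translated subtitle text
    """
    issues = []
    for tag in tags:
        expected = f"{tag}_{language}.wav"  # hypothetical naming convention
        if expected not in audio_files:
            issues.append((tag, "audio file missing"))
        text = subtitles.get(tag, "")
        if not text.strip() or text == tag:
            issues.append((tag, "subtitle missing or showing its ID tag"))
    return issues


# Hypothetical French delivery: one file missing, one subtitle left untranslated.
TAGS = ["BOB_0001", "CAL_0002"]
AUDIO = {"BOB_0001_fr.wav"}
TEXT = {"BOB_0001": "Attention aux algues !", "CAL_0002": "CAL_0002"}

for tag, problem in audit_language(TAGS, AUDIO, TEXT, "fr"):
    print(tag, "->", problem)
```

A check like this won’t tell you whether a line is the wrong take or out of context (only a tester listening can), but it keeps the obvious integration slips out of your bug database.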

Assess if fixing the bug is essential: some are really not worth it and you’re better off with a “will not fix.” All the more once you have a solid master candidate, ready to go through its last functionality testing before it’s sent for approval.

***

Audio plays a big part in how a localization is received. Well translated lines, adapted jokes and sound casting will show local gamers that you care for them. The market has to grow and we need to fight to get the best quality for all countries.

Studios and publishers need to get ready: with the expected inflation of storyline content and the sky-high costs of next-gen development, localizing audio will get riskier and much more expensive. Investing time and money in tools, process and fruitful business relationships with outsourcers will become mandatory. Royalties and fee issues will also generate tensions with actors’ unions, and eventually a deal will have to be brokered. Best if we address those issues now.

Should you have any comments or questions, feel free to email me.
