Where's game audio heading? Veteran audio designer Bridgett (Scarface) gathers mixing case studies on titles from LittleBigPlanet to Fable II, and concludes by looking at the next 5-10 years in the field.

[In the second installment of Rob Bridgett's series on the future of game audio, following his suggestion that audio mixing is primed for a revolution, the Scarface and Prototype audio veteran gathers mixing case studies on titles from LittleBigPlanet to Fable II, and concludes by looking at the next 5-10 years in the field.]

Case Studies

In this installment, I would like to look in depth at several video game audio mix case studies. I think it important for these studies not to contain just examples of projects I have been involved with here at Radical Entertainment, but also the varying tools and techniques that are used on other titles by other developers.

In this way we can begin to see common ground -- as well as the differences in approach from game to game and from studio to studio. So, as well as comments on two of the games that I worked on, I reached out to the audio directors of titles including Fable II, LittleBigPlanet, and Heavenly Sword to explain the mixing process in their own words, as follows:

Scarface: The World Is Yours (2006) Xbox, PS2, Wii, PC

Rob Bridgett, audio director, Radical Entertainment

"Scarface was the first game we had officially 'mixed' at Radical, and we developed mixer snapshot technology to complement some of the more passive techniques, such as fall-off value attenuation, which we already had.

The mixer system was able to connect to the console and show the changes of the faders as they occurred at run-time. We could also edit these values live, while listening to the changes.

The entire audio production (tools and sound personnel -- myself and sound coder Rob Sparks) was taken off site to Skywalker Ranch for the final few weeks of game development. We used an experienced motion picture mixer, Juan Peralta, who worked with us on a mix stage at Skywalker Ranch to balance the final levels of the in-game and cinematic sound.

Mixing time took a total of three weeks: two weeks on the PS2 version (our lead SKU) in Dolby Pro Logic II surround, and a further week exclusively on the Xbox version in Dolby Digital. We also hooked up a MIDI control surface, the Mackie Control, to our proprietary software to further enable the film mixer to work in a familiar tactile mixing environment.

In terms of methodology, we played through the entire game, making notes and/or tweaks as we went. One of the first things we needed to do was to get the output level down, as everything during development had been turned up to maximum volume, in order to make certain features audible above everything else. Once we had established our default mixer level, we tweaked generic mixer events such as dialogue conversation ducks and interior ambience ducks which carried through the entire game.

We also spent time tweaking mixer snapshots for every specific event in the game; for example, each and every NIS cinematic had its own individual snapshot, so we could tweak levels accordingly. In total we had somewhere in the region of 150 individual mixer snapshots for the entire game, covering mission-specific events, generic events and cinematics. Skywalker also has a home listening room with a TV and stereo speaker set-up, and we would often take the game there to check the mix."

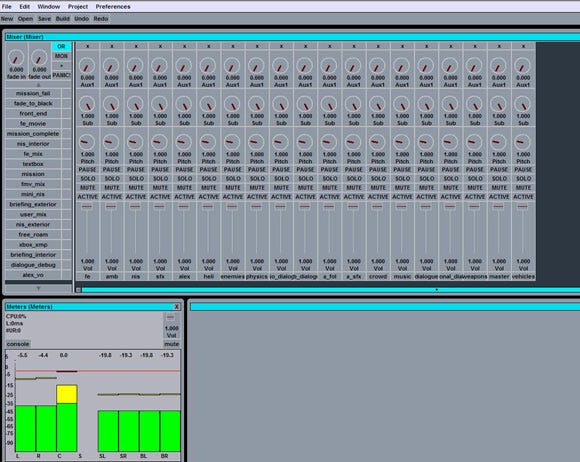

Above: A screenshot of Radical's mixer snapshot technology in our proprietary engine 'Audiobuilder' as used on Prototype. Shown in the main window are the various buses, to the left of which are the lists of predefined snapshots. At the bottom left is a run-time level meter that shows the output levels from the console (whether it be the 360, PS3 or PC).
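To make the snapshot idea concrete, here is a minimal Python sketch. It assumes a snapshot is nothing more than a named set of bus levels in dB and that moving between snapshots is a linear fade in dB over a set time. The bus names, levels and the `blend_snapshots` function are hypothetical illustrations, not details of Audiobuilder.

```python
from typing import Dict

# Hypothetical representation: bus name -> level in dB.
Snapshot = Dict[str, float]


def blend_snapshots(current: Snapshot, target: Snapshot,
                    elapsed_s: float, fade_s: float) -> Snapshot:
    """Move each bus level from `current` toward `target` over `fade_s` seconds.

    Buses that the target snapshot does not mention keep their current level.
    """
    if fade_s <= 0:
        t = 1.0  # no fade time: jump straight to the target
    else:
        t = min(max(elapsed_s / fade_s, 0.0), 1.0)
    blended = dict(current)
    for bus, target_db in target.items():
        start_db = current.get(bus, target_db)
        blended[bus] = start_db + (target_db - start_db) * t
    return blended


# Illustrative values only.
default_mix = {"dialogue": 0.0, "music": -6.0, "ambience": -9.0}
conversation_duck = {"music": -14.0, "ambience": -15.0}

# Halfway through a half-second fade into the conversation duck.
halfway = blend_snapshots(default_mix, conversation_duck,
                          elapsed_s=0.25, fade_s=0.5)
```

In this sketch a "duck" is just a snapshot that names only the buses it wants to pull down, which is one simple way to model generic events such as a dialogue conversation duck.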

Prototype (2009) Xbox 360, PS3, PC

Scott Morgan, audio director, Radical Entertainment

"Scott Morgan and I spent a total of six weeks mixing the in-game content for this title. Three weeks were spent on the Xbox 360 mix; we also spent a further week on the PS3 with the cloned values from the 360. The extra PS3 week was needed because we used a different audio codec on this platform and the frequencies of the sounds that cut through were noticeably different.

We also spent a further two weeks tweaking the mix, as final (late) cinematic movie content drifted in, and we integrated this into the game-flow. In many ways we started mixing a little early -- four weeks prior to beta. At this point in development the game code was fairly fragile and we experienced a lot of crashes and freezes which hindered the mix progress. This meant, unfortunately, that on some days we got very little done, because of these stability issues. These issues tended to disappear after beta, when the game code was considerably more solid.

The methodology used was that we played through the entire game, making notes and tweaking buses as we went. The first day was spent getting the overall levels adjusted to a reasonable output level; we mixed at a reference listening level of 79dB. We compared the game with both Gears of War 2 (a much louder title) and GTA IV (a much quieter title) in order to hit a comfortable mid-ground.

From then on, we played through the game in its entirety, tweaking individual channels as we went, with particular attention on the first two to three hours of gameplay. One of the newer techniques we adopted for the mix was to record the surround output into Nuendo via one of the 8-channel pre-outs on the back of the receiver.

What this allowed us to do was to see the waveform and compare levels with earlier moments in the game for internal consistency. It also gave us instant playback of any audio bugs that we encountered, such as glitches or clicks, which we could get a coder to listen to and debug much faster than having them play through and reproduce. This also gave us instant playback of any sounds that were too quiet, such as dialogue lines for mission-specific events, so we could quickly identify the line and correct the volume.

The game was mixed in a newly constructed 7.1 THX approved mix stage built at Radical Entertainment in 2008. We used our proprietary technology (Audiobuilder), much improved from the Scarface project, but using many of the same mix features and techniques (passive fall-off and reverb tuning with reasonably complex mixer snapshot behavior and functionality). We again used hardware controllers, a Mackie Control Pro + 3 Extenders, that were able to display and give us tactile tuning control over all the bus channels on fader strips.

It has to be said that mixing a game at a reference listening level can be a fatiguing experience, especially over three or four weeks. It is true that the sheer quantity of action and destruction in Prototype adds to this, so we devised a routine of regular breaks and also took whatever opportunity we could to test the mix on smaller TV speakers.

One of the most useful things about the way Radical's mix studio is equipped is that we have RTW 10800X-plus 7.1 surround meters, which allow us to see clearly and instantly what is coming from what speaker and very quickly debug any surround sound issues or double-check the game's positional routing.

It is interesting to note that we used no LFE in the Prototype game whatsoever. Knowing how much LFE is over-used in video games, it was decided early on that we would rely on crossover to the sub channel from the main speakers to provide the entire low end. This gave us a far more controlled and clean low frequency experience."
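The level comparison described above, checking a recorded passage against an earlier moment in the game, can also be done numerically. The following Python is a rough sketch that assumes the captured audio has been exported as floating-point samples normalized to the range -1.0 to 1.0. The function names and sample values are hypothetical, and a real workflow would more likely rely on the meters in the recording software.

```python
import math
from typing import Sequence


def rms_dbfs(samples: Sequence[float]) -> float:
    """RMS level of the samples in dB relative to full scale (1.0)."""
    if not samples:
        return float("-inf")
    mean_square = sum(s * s for s in samples) / len(samples)
    if mean_square == 0.0:
        return float("-inf")  # digital silence
    return 10.0 * math.log10(mean_square)


def level_difference_db(earlier: Sequence[float],
                        later: Sequence[float]) -> float:
    """How much louder (positive) or quieter (negative) `later` is than `earlier`."""
    return rms_dbfs(later) - rms_dbfs(earlier)


# Illustrative data: the later passage has half the amplitude of the earlier one.
earlier_moment = [0.5, -0.5, 0.5, -0.5]
later_moment = [0.25, -0.25, 0.25, -0.25]
difference = level_difference_db(earlier_moment, later_moment)  # roughly -6 dB
```

Halving the amplitude gives a difference of about 6 dB, which is the kind of jump that would stand out when checking internal consistency.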

Fable II (2008) Xbox 360

Kristofor Mellroth, audio director, Microsoft Game Studios

"Kristofor Mellroth and Guy Whitmore flew out to Lionhead studios on Fable II to mix the game along with the dialogue supervisor Georg Backer and the composer/audio director Russ Shaw, who were already on site in the UK. Using a very effective three-plus person team, they got a game that is very cleanly mixed.

We tend to mix games over two-plus days. Set aside a minimum of two days, because you need time to digest the changes from the previous day. Ear fatigue and exhaustion do factor in when you spend long hours mixing. Ideally you'd have a week.

The process is sort of painstaking and we try to play through the "golden path" during the final mix, but even then it's usually too long to accomplish in our travel time-frame. Instead, we know the key moments to test-mix. We also test the entire range of game mechanics against each other. With Fable, we knew what the key moments were. We made sure to use debug to skip to them and play through as real players would.

One of the keys of game mixing, especially in the system for Fable II, is that radius is as important as, or more important than, volume modifiers. Since we plan and chart radius mathematically we know how to change the values on one element of one game object and how that should stay in relation to other sounds. This is a roundabout way of saying: If radius sword hit = 60 meters while radius sword scrape = 30 meters, and we want the sword to have a bigger radius by five meters, it's really easy to change all sword elements in one pass without noodling too much. The math solves it for you. This is very important when mixing for two player co-op.

Dynamic range is something else we push hard for. We figure out what our loudest sound is and what our baseline quiet sound is. Then we start to stack-rank sounds between. For instance, I know in gameplay that X should be louder than Y unless factor Z is in play. This gets really complicated but it is a good reality check when you're getting lost in the piles of assets. For Fable II it was Troll slam attack vs. player footsteps. That is our maximum dynamic range. That means when a troll is slamming his fists, even your legendary gunshot should be quieter.

This edge-case is also a good check if you're wondering what your mix should sound like when you're at another edge case, say two players on the same screen with one at max distance shooting at the troll in the foreground.

Should you be able to hear that max distance player reload? If you do, does it take you out of the emotional experience of fighting a giant monster? We wrestle with these questions and sometimes make compromises but in general I'm happy with the results. It worked well on Crackdown and it's worked pretty well on Fable II.

It'd be a lot easier if we could draw our own falloff curves! If I had that I could have "cheated" the radius in just the perfect amount instead of relying on linear or equal energy falloffs. This is something we need to add to the Lionhead tools for future titles and I'd consider it a mandatory feature for all tool sets."
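The sword radius arithmetic in the quote above can be sketched in a few lines of Python. The quote does not say whether the extra five meters is added to every element or whether the elements are scaled together, so this sketch assumes the second reading: the largest element grows by five meters and the others keep their ratio to it. The `scale_radii` function and the element names are hypothetical.

```python
from typing import Dict


def scale_radii(radii_m: Dict[str, float], reference: str,
                increase_m: float) -> Dict[str, float]:
    """Grow the reference element by `increase_m` meters and scale every
    other element by the same factor, so their relative sizes are preserved."""
    factor = (radii_m[reference] + increase_m) / radii_m[reference]
    return {name: radius * factor for name, radius in radii_m.items()}


# Radii taken from the example in the text.
sword = {"sword_hit": 60.0, "sword_scrape": 30.0}
bigger_sword = scale_radii(sword, reference="sword_hit", increase_m=5.0)
```

Under this assumption the hit radius works out to 65 meters and the scrape radius to 32.5 meters, so the scrape is still audible at half the distance of the hit.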

LittleBigPlanet (2008) PS3

Kenneth Young, audio director, Media Molecule

"LBP uses FMOD, so we had to roll much of our own mixer functionality. It uses FMOD's channel groups to specify what is being mixed. It doesn't have a control UI (other than notepad), but we do have real-time update of mix settings (though stuff needs to be re-triggered to get the new values, so I'd usually restart a level to get them). We also have an in-game debug visualization of group levels.

We have an overall parent (master) snapshot where the level of every channel group is specified. You can only have one of these active at any given time, but you can change it. Then we have child (secondary) snapshots which override the parent -- these can contain one or as many channel group specifications as is required for that mix event.

It is hard-coded so that when two child snapshots are in place, and they both act upon a given channel group, the second snapshot cannot override the settings of the first. (This works for LBP, but I can see why you'd want explicit control over that -- or perhaps have a priority system in place).

We can specify level and also manipulate any exposed effect settings. In the code which calls the snapshot we specify a fade-in and fade-out time for the smooth addition and subtraction of the child snapshot. This is mainly used to cope with rather high level changes in the game's context; entering the start menu, a character speaks etc.

Interestingly, despite the fact the characters speak with gibberish voices, it sounded weird not ducking other sounds for them. Before the fact I assumed it wouldn't matter what with their voices not containing any explicit information, but not focusing on their voices whilst they are "speaking" makes what they are saying (i.e. what you are reading) feel inconsequential. I guess that's a nice example of sound having an impact on your perception, and highlights the importance of mixing.

Other functionality which has an effect on the mix is auto-reduce on specified looping sounds so that after, say, 15 seconds from initial event trigger a loop will be turned down by a given amount over, say, 30 seconds so that it makes an impact and then disappears. That's hard-coded.

In terms of mixing time, as is typical, it was just me, and I mixed in the same room as the audio was developed in. I tested the mix on other setups in the studio as well as taking a test kit home to try it out in a real-world environment, taking notes on things that could be improved. The mix was a constant iterative process throughout development with a couple of days during master submission dedicated to final tweaks."
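Two of the behaviors described above lend themselves to a short Python sketch: child snapshots overriding a parent (with the first child to touch a channel group winning), and the auto-reduce on looping sounds. This is only an illustration under stated assumptions. The function names, group names, level values and the -12 dB reduction are hypothetical, and none of this is FMOD API or Media Molecule's code. The 15 and 30 second figures are the ones given in the text.

```python
from typing import Dict, List


def resolve_levels(parent: Dict[str, float],
                   children: List[Dict[str, float]]) -> Dict[str, float]:
    """Apply child snapshots (in activation order) on top of the parent.

    Once a child has set a channel group, later children cannot override it.
    """
    levels = dict(parent)
    claimed = set()
    for child in children:
        for group, level in child.items():
            if group not in claimed:
                levels[group] = level
                claimed.add(group)
    return levels


def auto_reduce_db(seconds_since_trigger: float, hold_s: float = 15.0,
                   ramp_s: float = 30.0, reduction_db: float = -12.0) -> float:
    """Gain offset for a loop: untouched during the hold, then a linear ramp down."""
    if seconds_since_trigger <= hold_s:
        return 0.0
    if ramp_s <= 0:
        return reduction_db
    progress = min((seconds_since_trigger - hold_s) / ramp_s, 1.0)
    return reduction_db * progress


# Illustrative levels (1.0 = full level).
parent = {"music": 1.0, "sfx": 1.0, "voice": 1.0}
speech_duck = {"music": 0.4}
start_menu = {"music": 0.2, "sfx": 0.3}
levels = resolve_levels(parent, [speech_duck, start_menu])
```

Here the start menu snapshot still sets the sfx group, but it cannot change the music level because the speech duck claimed that group first. A priority value per snapshot would be one way to make that ordering explicit.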

LittleBigPlanet's mixer debug screen

Heavenly Sword (2007) PS3

Tom Colvin, audio director, Ninja Theory

"Heavenly Sword also used FMOD. FMOD provides the ability to create a hierarchical bus structure as described in the section above. Each bus can have its own volume and pitch values, which can be modified in real-time. At the time, FMOD had some performance constraints related to the number of sub-buses within the bus structure, so we tried to keep the hierarchy as simple as possible.

The Ninja Theory tools team built our own proprietary GUI, which allowed us to configure mix snapshots, and adjust the mix in real-time. We were able to prevent the game from updating the mix if desired, so we could play with a mix template without the game suddenly changing the mix on us while we were working. We also had an in-game onscreen debug UI that showed us what mix templates were active, their priorities and so on.

Mixer snapshots were largely activated and deactivated by scripted events. This was one of the weaknesses of the mix system -- the game scripts were not the easiest things to work with -- they obviously couldn't be changed while the game was running, and reboot times were long, so it was pretty time consuming getting the mix templates to switch on and off in the right places.

We decided to set up a blanket set of empty templates before the mix session, so we wouldn't have to spend time actually getting the templates to switch on and off whilst mixing. This constrained the scope of the mix somewhat.

NT's audio coder (Harvey Cotton) devised a snapshot priority system, which simplified implementation a great deal. The priority system made sure that the snapshot with the highest priority was the one you actually hear. Here's an example of how this would work. You're in a combat section of the game, and you perform one of the special moves, and then pause the game during this special move -- what would happen with the mix system?

A default low priority mix would always be active. When the special move was initiated, a higher priority "special moves" template would be activated (we used scripted events fired from animations to do this.) When the user pressed pause, the pause mix would activate, and having the highest priority, would then take over from the special move mix.

At this point, three different mix templates are "active", but only one is audible. The game is un-paused, and we move back to the next lowest priority template, the special move ends, and we move back to the default. The priority system is important because it prevents you from needing to store the game's previous mix state, in order to return to it once any given scenario has finished.

Throughout development we constantly checked the mix on as many setups as possible -- a reasonable spec TV, our own dev-monitors, crappy PC speakers, etc. During mastering, we had a week to set up the final mix templates. The mixing environment was a calibrated mix room. Our one week of mixing didn't really feel like enough. There were all sorts of content changes we wanted to make at the mix stage, which just weren't possible due to time constraints."
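The priority system described above can be illustrated with a small Python sketch. It assumes each active mix template carries a numeric priority and that the audible mix is simply the active template with the highest priority. The class name, template names and priority numbers are hypothetical and are not taken from Ninja Theory's tools.

```python
from typing import Dict, Optional


class MixTemplateStack:
    """Tracks active mix templates; the highest priority one is audible."""

    def __init__(self) -> None:
        self._active: Dict[str, int] = {}  # template name -> priority

    def activate(self, name: str, priority: int) -> None:
        self._active[name] = priority

    def deactivate(self, name: str) -> None:
        self._active.pop(name, None)

    def audible(self) -> Optional[str]:
        """Name of the active template with the highest priority, if any."""
        if not self._active:
            return None
        return max(self._active, key=lambda name: self._active[name])


# The pause-during-a-special-move scenario from the text.
mix = MixTemplateStack()
mix.activate("default", priority=0)
mix.activate("special_move", priority=10)
mix.activate("pause", priority=20)
heard_while_paused = mix.audible()       # "pause"

mix.deactivate("pause")
heard_after_unpause = mix.audible()      # "special_move"

mix.deactivate("special_move")
heard_after_move_ends = mix.audible()    # "default"
```

Because every active template stays registered, nothing needs to remember the previous mix state: removing the top template automatically exposes whichever one is next.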

Game Mixing: The Next Five (to Ten) Years

I was going to call this section 'The Next Five Years', but looking back over the last five years, I am reminded how glacial progress seems to be in these areas. If all this stuff happened in the next three years, our jobs as sound designers would be awash with exciting new possibilities and endless high-quality sonic options, but wait... slow down, slow down....

I would like to explore some of the areas where I see video game mixing heading in the next few years. There are certainly a great many opportunities, and many ways to go about integrating new features and techniques. In many respects it is the types of games themselves that will push these requirements. The richer the visuals become, and the more control over them is gained in the coming years, the greater the focus on sound will undoubtedly be.

Establishing a Reference Listening Level for Games

Work is already underway in this area. For some years now it has been impossible to know the recommended reference listening level to mix a game at. It could be either 85dB, the same as that of theatrical movie releases, or 79dB, the same as DVD remixes or TV, or just match the output of a competitive game.

The first is designed specifically for films heard in a theatrical context. The second reconfigures the mix for a home environment, specifically so that dialogue, which can be heard clearly when played loud in a theatre, is not lost at home. Typically there is slightly less dynamic range in a home entertainment mix for this reason, and a great deal of dynamic range in a theatrical mix, but both mixing systems depend solely on the content being played back at the intended level of either 85dB or 79dB.

Common sense would suggest that games should match the same output levels of DVD movies. However, games tend to have much longer moments of loudness, or action, in them than movies, which typically have a story dynamic of dialogue, action, dialogue. With racing games or action games in particular, the narrative dynamic is far more intense for longer, and so it is arguable that 79dB could be established for game reference listening levels.

The higher the reference listening level, the more dynamic range is available and the quieter certain sounds can be made for dramatic effect. The lower the reference level, the louder the output levels will be. Currently, games are incredibly loud and very mismatched in terms of output levels -- not only from console to console, but from game to game. Even games released by the same studio have inconsistent output levels.

As mentioned before, there is even inconsistency within a single game to contend with, as levels differ between cinematics and gameplay. Once a recommendation for a standard is published, it will be much easier to know where the output levels of the game need to be.

Enrichment of Software Tools, Both Third-Party and Proprietary

In-house tools and third-party solutions will solidify on a basic feature set that is solid, robust and reliable enough to ship many games. It is onto this basic core feature set that additional systems and add-ons will be developed. Audiokinetic's Wwise has a particular focus on interactive mixing technology and has already proven a solid basic mixing structure with its bus ducking and bus hierarchy.

The more enhanced and developed that third-party tools become, the more pressure there is on in-house tools to compete with these solutions and to have the same, if not more features. This subsequent climate then puts pressure on in-house technology to be more agile and versatile, which in turn results in further innovation, eventually spreading out to the wider industry.

I asked Simon Ashby, product director of Wwise at Audiokinetic, to share some of his thoughts on both the limitations and future directions of mixing for video games:

"The barrier for high quality mix in games is mainly caused by the fact that we are still not really good with storytelling in our games. We have trouble mapping and controlling the emotional response of the player in order to pace the story with the right intensity progression and a larger emotional palette. We still ask the end user to execute repetitive actions and because of this, several games end up offering a monotonous experience. As long as games are produced this way, the mix quality will remain inferior to that which films achieve, despite the quality of the tools.

Wwise offers both active and passive systems for the developer. Passive mixing is achieved by effects such as the peak limiter or auto-ducking system. The active system on the other hand is represented by the 'state mechanism', which operates like mixer snapshots with custom interpolation settings between them. The event system also offers an active system with a series of actions such as discrete volume attenuation, LPF and effect bypass, and these can be applied to any object in the project.

Video game mixes have further complexities, as the game experience can last four to 10 times longer than a movie and games have far more unpredictable assets to mix. The main complexity remains the interactivity, where the mixer has to take into account various different styles of gameplay; the soundtrack emerging out of a single game played by a Rambo-kamikaze gamer is way different than the one from a stealth type of gamer even though it is the same game using the same ingredients.

In terms of new mixing features for Wwise, we usually don't reveal our mid and long term plans since we cannot vouch for the future. That said, you can be sure that we have a series of new features in our roadmap covering both passive and active systems that will help bring mixing technology for games to a previously unforeseen pro level."

Dedicated Mix Time at the End of Production

In many cases, the mix of a game is a constant iterative process that goes on throughout the entirety of development, with perhaps some dedicated time at the end of the project to make final tweaks. That amount of time is often very short, due to the proximity of the game's beta date to the gold master candidate date, but I expect it to grow as mixing tech improves and as it becomes better understood that sound needs time to iterate after design and art have finished tweaking.

Taking games off-site to be mixed, or to dedicated in-house facilities, is also something that will increase the amount of time needed to complete a mix. Who should and who shouldn't attend the mix is also not currently fully understood. One thing is for sure, though: it is often a minimum two-person job. Lots of questions and doubts come up in a mix (is something too loud? is it loud enough?), and being able to bounce these questions off another set of ears is very important as a sanity check.

Established Terminology

Right now, particularly with proprietary tech, there is a huge variety of naming conventions that are very different from one mixing solution to the next. The third-party market does not suffer so much from the differing terminology as there are only a couple of leading solutions on the market, both with similar terminology.

Over the coming years, there will certainly be a more established vernacular for interactive mixing. Terms such as snapshots (sound modes), side-chains (auto ducking), ducking, buses, defaults, overrides, events and hierarchical descriptions like parents and children will become more established and solid -- referring to specific interactive mixing contexts. Once this happens, a lot more creative energy can be spent in using and combining these features in creative ways, rather than worrying about what they are called and explaining them and their functionality to others.

Mapping to Hardware Control Surfaces & Specially Designed Control Surfaces

The world of post-production mixing is all about taking your project to a reference level studio and sitting down in front of a mixing board and tweaking levels using a physical control panel. The days of changing the volumes of sounds or channels using a mouse pointer on a screen, or worse, a number in a text document, are almost behind us.

The ability to hook up the audio tools to a hardware control surface, such as the Mackie Control Pro, via MIDI has enabled physical, tactile control of game audio levels and has opened up the world of video game mixing to professional mixers from the world of motion picture mixing.

There are several big players in the control surface market, all of which have their own communication protocols. Pro film devices such as Digidesign's Pro-Control line of mixing boards, not to mention Neve products, have a presence in the majority of the world's finest studios. Once access to these control surfaces is unlocked by video game mixing tools, a huge leap will be made into the pro audio world.
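At its simplest, driving a bus level from a hardware fader means translating an incoming controller value into a gain. The Python sketch below assumes a generic 7-bit MIDI controller value (0 to 127) mapped linearly onto a dB range. The function name and the -60 dB to 0 dB range are hypothetical choices for illustration, and real control surfaces may use other message types, resolutions and fader laws.

```python
def midi_to_gain_db(value: int, min_db: float = -60.0,
                    max_db: float = 0.0) -> float:
    """Map a 7-bit MIDI controller value (0-127) linearly onto a dB range."""
    if not 0 <= value <= 127:
        raise ValueError("MIDI controller values must be in the range 0-127")
    return min_db + (max_db - min_db) * (value / 127.0)


# Fader fully down, roughly in the middle, and fully up.
fader_down = midi_to_gain_db(0)
fader_mid = midi_to_gain_db(64)
fader_up = midi_to_gain_db(127)
```

A linear-in-dB mapping is only one option; a tool could just as easily apply a curve that gives finer control around the levels a mixer adjusts most often.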

Right now, it is still quite an intense technical and scheduling challenge to mix a game at a Hollywood studio, hauling proprietary mixing tech and consoles (such as Mackie controls) along to the studio in question. The ability to mix a game on a sound stage in the world's best post-production environments, without having to compromise the control surface, will enable huge shifts in the quality and nuances of mixing artistry.

Again, the technology is only the facilitator to the artistic and creative elements that will become available. Putting video game sound mixes into the hands of Hollywood sound personnel and facilities will allow a really interesting merging of audio talent from the worlds of video game and movie post production.

Of course there may also emerge a need for a very customizable "game-only" mixing surface, which accommodates many of the parameters and custom control objects that I have described. Something along the lines of the JazzMutant multi-touch Dexter control surface, which can easily display 3D sound sources and allow quick and complex editing of EQ or fall-off curves, may actually end up leading the way in this kind of mixing and live tuning environment.

Specialized Game Mixers (for-hire personnel)

Once the technology and terminology is in place and is well understood by game sound designers and mixers, it will only be a matter of time before real masterpieces of video game sound mixing begin to emerge. It is only when the technical limitations have been effaced and effective and graceful mixing / tuning systems are in place that artistic elements can be more freely explored.

Ultimately, the mix of a game should be invisible to the consumer. They should not recognizably hear things being "turned down" or changing volume, in much the same way as a convincing movie score or sound design does not distract you from the story. The mix is ultimately bound by these same rules -- to not get in the way of storytelling.

Ironically for games, the hardest scenes to mix in movies are prolonged action scenes, and to some extent many video games boil down to one long protracted fire-fight. The focus of attention changes constantly and mixing needs to help the player to navigate this quickly changing interactive world by focusing on the right thing at the right time, be that elements of dialogue, sound effects or music.

It is easy, then, to envisage a situation where a specialized game mixer is brought on to a project near to the end of development to run the post-production and to mix the game. As a fresh and trusted pair of ears, this person will not only be able to finesse all the technical requirements of a mix such as reference output levels and internal consistency, but will also work with the game director and sound director on establishing point-of-view and sculpting the mix to service the game-play and storytelling.

Industry Recognition for Game Mixes

In order for video game mixes to be recognized and held up as examples of excellence, there need to be audio awards given out for 'best mix' on a video game. The Academy Awards, for instance, recognize only two sound categories, Sound Editing and Sound Mixing, which should encourage game awards ceremonies that want parity with Hollywood to do the same.

Once awards panels begin to recognize the artistry and excellence in the field of mixing for games, there will be greater incentive for developers to invest time and energy in the mix.

Unforeseen Developments: Game Audio Culture

As with any speculative writing, there is always some completely left-field factor, either technological or artistic, that cannot ever be predicted. I am certain that some piece of technology or some innovation in content will also come along, either in film sound, in game sound or from a completely different medium altogether, that will influence the technological and artistic notions of what a great game mix will be.

Often it is a revolutionary movie, such as Apocalypse Now or Eraserhead, that redefines the scope and the depth to which sound can contribute to the story-telling medium, the repercussions of which are still being felt in today's media.

These are areas that I like to define as game or film "sound culture" -- often enabled by technology, such as Dolby, but pushed in an extreme direction by storytellers. These are the kinds of games that I expect to emerge over the next 10 years given the technological shifts that are occurring today: essentially, experiences that define game audio culture.

