How and why you should be budgeting 5% of your development funds on these 5 player-centric activities

Question: What is your studio doing to deliver a better player experience? More than ever, the end-user’s experience of a commercial game is central to its critical and commercial success. Indie teams like Hipster Whale can compete with high-budget development teams in quality and mind-share - it is their player experience that sets titles apart from their peers, rather than budget, IP or marketing spend. So what tools is your team using to ensure you deliver these experiences to players?

Recently, telemetry-based variable-twiddling has often been cited as ‘focusing on the player’, but it is becoming clear to many that while analytics are an important part of the answer, they don’t offer the complete picture.

So what are you really doing to address the market's new-found focus on experience, playability and accessibility? How have your development practices changed to accommodate player-centric design: focusing on usability and understandability, delivering a polished player experience, and ensuring that your intended experience is realised by players?

Here are 5 practical tasks that every studio - large or small - should be including in their game development cycle in order to improve their player experience right from the start of the project. This research will require around 5% of development budget, delivering return on investment by spotting assumptions and mistakes, shortening coding time, and ultimately reducing development risk, toward producing a genre-leading experience.

Competitor analysis is a commonly-used tool in evaluating game features, but competitor titles are a goldmine of more than just game mechanics. When it comes to implementing helpful animations, standardisation of UI layouts, interactions and teaching the player, developers are often reinventing the wheel - and badly. By not taking the time to step back and understand the finer points of competitors’ implementations, teams often miss their opportunity to understand and improve upon the little details that often make all the difference. Taking a few minutes to play some less-successful Clash of Clans or Boom Beach competitors provides spades of evidence for this.

My last article for Gamasutra considered the differences between a successful game and a less-successful clone, and uncovered a number of key differences that contributed to the latter title’s lack of success; but this isn’t about mindlessly copying, this is about formalising differing approaches to well-understood design challenges, and ensuring that your next title is at least as good as the best-in-genre.

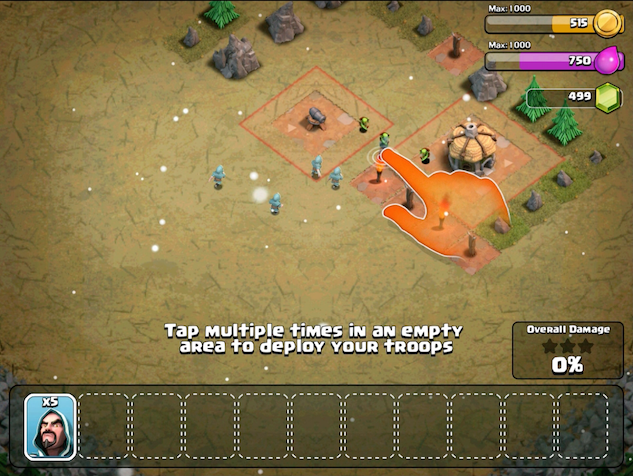

For example: Clash of Clans’ first tutorial uses ghost troops to demonstrate placement - but did you notice that the ghost hand places a troop between the buildings (see the screenshot)? This nifty teaching trick (and hundreds more) would likely be missed unless you were specifically analysing the title for ‘player experience best practices’. It’s the little things.

The CoC tutorial demonstrates the extremes of troop placement [Image credit: Supercell]

So how should one approach a formalised competitor analysis? The simplest method is to perform a cognitive walkthrough - a step-by-step comparison of games, made by breaking down the players’ tasks (based on each of the features your game offers). Take the genre-leader and break it down into its different UI elements and critical player tasks. Assume every element and interaction is specifically designed, and take time to understand the reasoning for every design decision. Without critique of this depth, studios risk missing the smaller touches that have helped players understand and successfully onboard in a competitor's title, making their own comparatively harder to understand, and less accessible.

In software and website development, wireframe prototypes are edited, tested and iterated extensively before committing to code. In gamedev, the focus on getting a minimum viable product up and running is often at odds with time spent wireframing UI and user flows, but this period is absolutely vital for making player-centric design choices.

On one of our clients' recently-released iOS titles, we completed 4 rounds of review of the wireframes on paper, catching an average of 11 usability issues per screen, per round. Some were minor (inconsistent button labelling, unclear instructions), and others would have developed into complete show-stoppers had they not been caught. In free-to-play, every small trip-up is a potential financial risk, and poorly-designed UI is often cited by both journalistic review and players’ ratings.

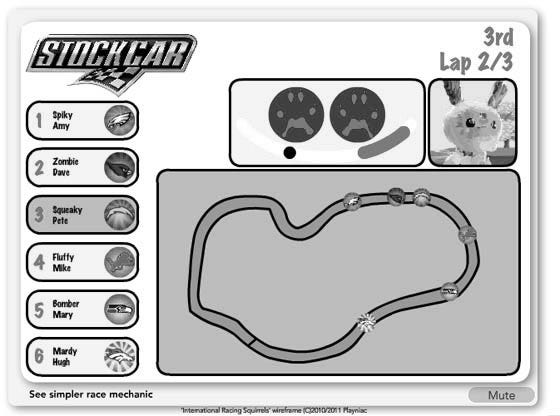

Evaluating wireframes can catch a wide array of problems. [Image credit: International Racing Squirrels, Playniac]

There is a great deal of value in getting these wireframes in front of players, not just designers - they’re a cinch to make interactive at a basic level, allowing you to explore different UI layouts, label text and UI element positions cheaply and quickly through small iterative playtests. Letting simple usability mistakes make it to the art and code stage is one of the biggest pitfalls of developing an MVP as fast as possible.

Playtesting is the mainstay of player-centric development. Games analytics will generate no end of data about what players are doing, but knowing why they’re doing it is a matter that needs finer exploration - this is where playtests kick in. Data on understandability, usability and the player experience is best generated by playtesting, yet entire development teams are doing without formalised playtesting as part of development. I see a definite correlation between the games that are being playtested properly, and those games performing well critically and financially. No major game development studio is doing without playtests run by experienced games user researchers, on mobile or PC/console; from indie teams like ustwo, who have “invested heavily in playtesting [Monument Valley]”, to AAA console teams like Monolith, who were “continually bringing in playtest[ers] of all ability levels [in Shadow of Mordor]”.

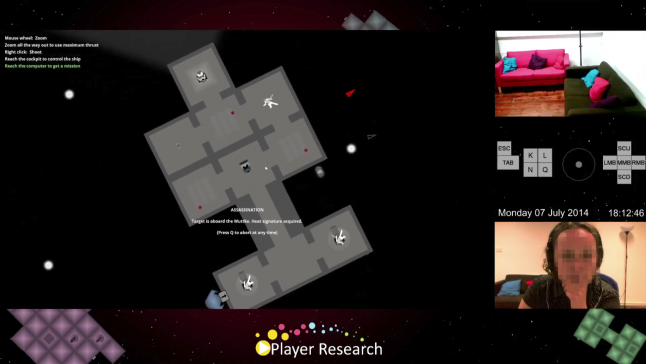

Observing and understanding player behaviour during playtest [Image credit: Player Research and Heat Signature]

Playtests should be included in two capacities. Firstly, short and iterative playtests should focus on usability, i.e. checking that players understand their goals and the menus, and that the control schemes are suitable. Playtests like this should be small-scale and quick, with 6 to 12 players, starting from the wireframe stage and not stopping until all players are meeting success metrics chosen by the team, be that in terms of accessibility (“non-gamers should be able to pick-up and play my title, and understand the game within 3 matches”), or perhaps raw timing (“players should be able to customise a character completely in under a minute”). Setting these success metrics is another player-centric task that can help drive your vision.
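To make the idea of success metrics concrete, here is a minimal sketch of how a team might check one playtest round against the two example metrics quoted above. The `Session` record, the function names and the sample data are all hypothetical, invented for illustration; a real study would record whatever measures the team has chosen.

```python
from dataclasses import dataclass


@dataclass
class Session:
    """One player's results from a usability playtest (hypothetical record)."""
    player: str
    customise_seconds: float    # time taken to fully customise a character
    matches_to_understand: int  # matches played before the player understood the game


# Thresholds taken from the two example metrics in the text.
MAX_CUSTOMISE_SECONDS = 60      # "in under a minute"
MAX_MATCHES_TO_UNDERSTAND = 3   # "within 3 matches"


def failing_players(sessions):
    """Return the names of players who missed at least one success metric."""
    return [
        s.player
        for s in sessions
        if s.customise_seconds >= MAX_CUSTOMISE_SECONDS
        or s.matches_to_understand > MAX_MATCHES_TO_UNDERSTAND
    ]


def round_passes(sessions):
    """A round passes only when every player meets every metric."""
    return not failing_players(sessions)


# Made-up sample data for a three-player round.
sessions = [
    Session("P1", 42.0, 2),
    Session("P2", 75.5, 3),
    Session("P3", 58.0, 4),
]
print(failing_players(sessions))  # ['P2', 'P3']
print(round_passes(sessions))     # False
```

A failed round like this one would mean another design iteration and another small playtest, repeated until no players are left on the failing list.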

Once you’ve got usability under control with small iterative playtests, it is time to consider the player experience, and ramp up playtesting efforts. Larger cohort groups of 30+ should play the game under observation, watching out for progression blockers and times where players’ experiences don’t meet design intent. To omit either one of these types of playtesting would cause significant issues, with latent usability issues tainting the experiences of the larger groups, or player experience issues like sluggish pacing or imperfect balancing not being caught before launch.

Feedback from early access customers can give some insight into these factors, but actually observing players and understanding them directly - rather than a few players’ own limited interpretations - generates better-structured and more objective data that makes all the difference.

Playtests should begin at the wireframe stage and not stop - they help give context and meaning to analytics data throughout soft-launch and beyond. Monolith were even using playtest data to compare against competitor games, benchmarking against their own title throughout development. As an added bonus, once the game is launched you can use analytics to target specific problem areas in playtest, directed by player behaviour at a larger scale, or even playtest with players who tried your game and chose not to stick around.

Engagement. Retention. Motivation. Difficult concepts to explore, but getting actionable and objective data on players’ actual experiences in the long-term isn’t as hard as it sounds. By organising a small, controlled distribution of a pre-release version of the game with selected (and NDA'd) players, and providing a carefully-written Play Diary to fill out, studios can capture players’ feelings and experiences over weeks or even months, feeding these insights back into design. You can identify late-game frustrations and loads of potential pitfalls that playtests (lasting a few hours at most, under an hour typically) just won’t catch. Play Diaries are a lot of work, but they’re incredibly insightful - adding rich, qualitative data over an extended period that complements analytics perfectly.

So, there you are. 5 activities that will help better your game by gaining actionable insight from the competitors, bettering your UI before code, playtesting with small and large groups, and finishing with a long-term perspective in exploring long-term engagement. All of these tasks will improve the player experience, and also serve to reduce financial risk by avoiding surprises on launch day.

Conducting all of this research should come in at around 5% of the project budget, which may sound like a fair chunk, but consider that website and software development studios routinely set aside double that percentage for ‘user experience testing’ - and they anticipate a doubling of the relevant quality metrics as ROI.
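As a quick worked example of that arithmetic, the sketch below computes the research allocation for a given budget. The function name and the 200,000 budget figure are hypothetical, chosen only to make the numbers easy to read; the 5% and 10% shares are the ones discussed above.

```python
def research_budget(total_budget, percent=5):
    """Return the amount set aside for player research.

    `percent` defaults to the 5% suggested in this article.
    """
    if total_budget < 0 or not 0 <= percent <= 100:
        raise ValueError("budget must be non-negative and percent within 0-100")
    return total_budget * percent / 100


# Hypothetical project budget of 200,000 (any currency).
print(research_budget(200_000))      # 10000.0 at the suggested 5%
print(research_budget(200_000, 10))  # 20000.0 at double that share
```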

Could bettering your player experience using these tools double your player life-time value?

About The Author

Seb Long is a User Researcher at Player Research, an award-winning playtesting and user research studio based in Brighton, UK. Player Research help developers deliver their design vision through playtesting, user research and understanding the player experience, helping bring dozens of titles to the front page of the App Store, and genre-leading experiences to more than 500 million gamers worldwide.