
Featured Blog | This community-written post highlights the best of what the game industry has to offer. Read more like it on the Game Developer Blogs or learn how to Submit Your Own Blog Post

This article tries to cover everything you need to know about 3D scanning using photogrammetry, including the choice of software, equipment, and computer, as well as guidelines for properly capturing photos.

In my previous blog posts, I always skipped the introduction to photogrammetry because there are plenty of introductory tutorials, but I recently noticed that most of them don’t go into depth on how to properly capture your images. So I decided to write my own guide covering everything I know about photogrammetry.

This article is meant for three kinds of readers:

Those who have never used photogrammetry or 3D scanned before

Those who have used photogrammetry before, have read the introductory tutorials, and want to improve their scan quality

Those who probably know all of this and are just checking whether they missed something

To keep it simple and easy to read, I’ve separated each point into its own section; feel free to skip any you feel confident in. If you are a beginner, I highly recommend checking out the blog posts I mention. I sometimes link to multiple articles that cover the same topic, and you might want to read all of them if you have time. These blog posts are meant to be independent of each other, so if you have read some of my previous posts, please excuse the repetition.

Special thanks to Jugoslav Pendić for going over this article and adding some of his knowledge to it, and another thanks to the 3D scanning group who helped me fill some gaps in my knowledge.

What is Photogrammetry?

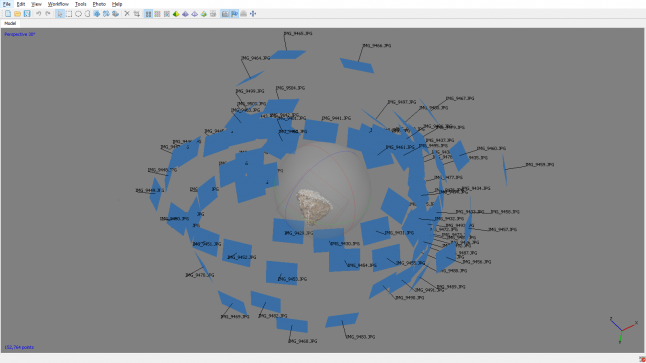

If you are reading this article, hopefully you already know what photogrammetry is, but in short, photogrammetry is the process of creating 3D models from multiple images of the same object taken from different angles.
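To make the idea concrete, here is a minimal sketch of the core geometric step, assuming you already know each camera’s position and the direction in which it sees the same feature (real packages estimate these during image alignment). It uses the classic midpoint method: find the closest points on the two viewing rays and return the point halfway between them. The function and helper names are illustrative only, not any package’s API.

```python
"""Two-view triangulation sketch (midpoint method), pure Python."""

Vec = tuple[float, float, float]


def _sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _add(a: Vec, b: Vec) -> Vec:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def _scale(a: Vec, s: float) -> Vec:
    return (a[0] * s, a[1] * s, a[2] * s)


def _dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def triangulate_midpoint(c1: Vec, d1: Vec, c2: Vec, d2: Vec) -> Vec:
    """Estimate a 3D point seen from two cameras.

    c1, c2: camera centres; d1, d2: viewing directions towards the same feature.
    Returns the midpoint of the shortest segment joining the two rays.
    """
    w0 = _sub(c1, c2)
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w0), _dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("viewing rays are parallel; the point cannot be triangulated")
    s = (b * e - c * d) / denom  # position along ray 1
    t = (a * e - b * d) / denom  # position along ray 2
    p1 = _add(c1, _scale(d1, s))
    p2 = _add(c2, _scale(d2, t))
    return _scale(_add(p1, p2), 0.5)


# Two cameras 4 units apart, both looking at the feature (1, 2, 5).
print(triangulate_midpoint((0, 0, 0), (1, 2, 5), (4, 0, 0), (-3, 2, 5)))  # (1.0, 2.0, 5.0)
```

If both rays start from the same spot, as when you stand still and only turn the camera, there is no baseline between the views and the result collapses to the camera position itself, which is why the capture guidelines in this article insist on moving between shots.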

This technique is not recent at all: it is much older than the machine-vision-derived workflow we have today, and it was widely used as part of the mapping/geodetic toolset. It has become more popular lately because the growing processing power of consumer machines made it more accessible, which let it spread to other markets like VFX and game development.

If you want a quick start with photogrammetry, I highly recommend checking out the following:

PhotoScan Guides and Tests (Short Videos) by James Candy.

The Poor Man’s Guide to Photogrammetry by Bertrand Benoit.

Although these links mention Agisoft PhotoScan, the concepts they explain should apply to any other photogrammetry software out there.

The Software

There are many photogrammetry packages you can use to process your captured images, and most of them will give you decent results, though there are some areas where one might shine over another. It is worth noting, however, that while the rules of capturing photos are universal, each package processes the data differently, so there are software-specific guidelines that let you take full advantage of a given solution. I recommend investing some time in getting to know your software.

For example, with Agisoft, because your processing power limits how many images you can use, you might want to squeeze as much detail as possible into each image; Agisoft is also good at reconstructing background details. Reality Capture, on the other hand, tends to filter out those background details because they might introduce noise, but it is faster, so you can simply take more photos.

One thing to note: these descriptions are based on my personal experience with these packages, which might differ from yours on your own projects. I recommend exploring these options (or others) yourself and forming your own opinion.

Autodesk Remake: You might have heard of the Autodesk 123D Catch mobile app, a cloud-based photogrammetry solution for mobile that is limited to 50 images per project, downgraded to 3 MPx resolution. It was followed by the PC solution Memento, later renamed Remake, which has both offline and cloud processing options. Remake is a one-click, generate-everything process that gives you minimal control over how your data is processed. It is available in free and Pro versions: the free version is limited to 125 images per project and to cloud processing only, while the Pro version is a $30/month subscription, limited to 250 images per project, with the offline option available. Once the scan is processed, you can edit and clean it inside Remake, or import meshes from other software, which is a nice option that I keep making use of, though more control over the mesh generation process would be nice. It also has a poly cruncher (which reduces mesh polycount) and texture baking options, which can be a quick way to create placeholder meshes for your level. You might want to check the licensing terms for scans processed in the cloud, though, as they may give Autodesk access to use them as promotional material.

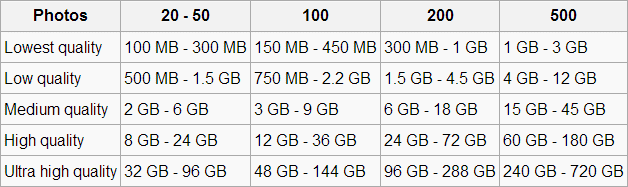

Agisoft Photoscan: This is a very popular solution that is widely used in the entertainment industry. Agisoft gives great scan results, gives you control over the mesh generation process, and has a friendly, well-documented user interface. The Standard version costs $180; however, if you need ground control points, which are a very useful feature for large scans, you need the Professional version, which costs $3,500. And while Agisoft does not have an image/project limit, the more images you use, the more powerful your rig has to be to process them, not to mention that processing can be quite slow. You can check out the Agisoft Support website for tutorials and tips and tricks. Here’s a helpful guide to PhotoScan memory requirements, where you can find useful tables, like this RAM requirement table for the model-building step with 12 MPx images.

Reality Capture: It can process data much faster than its competitors and can handle a huge number of images on a regular desktop, as long as it has an NVidia GPU. A cool feature Reality Capture excels in is quick align, which lets you align images in a matter of seconds on your laptop; it’s an awesome tool for testing your dataset on site and making sure you have enough images. You can get similar alignment speeds in Agisoft using the lower settings, but it’s the point cloud reconstruction step where RC excels and is significantly faster than Agisoft. It costs €99 for 3 months; however, this version only handles 2,500 images per project, which is more than enough to scan anything other than large structures (castles, terrains, etc.). If you need to process more images, you need the CLI version, which costs €7,500 per year. Both versions have the same features, including ground control points. It is worth noting that, as of right now, Reality Capture does not support 16-bit output; nonetheless, it is relatively recent software that is constantly being updated, so 16-bit support will probably be implemented eventually.

Pix4D: If you want to process more than 2,500 images and it is a one-time project, you might be better off with Pix4D’s monthly subscription of $350. It can handle large datasets on a powerful computer and is slightly faster than Agisoft. This software is mostly used in the industrial and agricultural sectors, due to its specialized set of tools. Pix4D has some interesting webinars on their YouTube channel, where they go over how to make the most of their software and discuss tips and tricks for photogrammetry. It is worth noting that during my testing I was not quite satisfied with the resulting texture quality, though this can be improved by tweaking the settings a little.

Free Solutions: There are many free alternatives, though unfortunately I haven’t tested any myself. I reached out to the community and asked their opinion, and here’s what I got. VSFM is a nice solution, though it is outdated and cannot be used commercially. There are more recent solutions you can use, like Micmac, MVS, or Python Photogrammetry Toolbox (PPT), though these options don’t have a GUI (graphical user interface), and given the limited documentation, they might be challenging for new adopters without a programming background. Nonetheless, these solutions are powerful, accurate, and very flexible, and Micmac even has a wiki page. There is a new solution named Graphos that has a GUI and is supposed to be free or cheap, though it has not been released yet, and we haven’t heard of an update recently.

There is also SuRe, which is interesting because just dealing with it teaches you a lot. It does have a GUI (only the very first versions had a command-line workflow), and its authors are very open about what they are offering. That being said, SuRe is almost entirely focused on large-format cameras and/or on selling its code for inclusion in more user-friendly environments. SuRe does not even have an 'alignment' step; it relies on data exported from Pix4D, Agisoft, or other products. It excels at generating dense point clouds quickly and at amazingly accurate texturing, but it has insanely large RAM demands, bigger than Agisoft’s. The meshes it creates have amazing detail, but they are hard to work with unless you have a good machine. Also, it is not free, but it is available for scientific purposes by contacting the authors.

PC

Depending on what software you decide to use, you might need different specs; however, after some research and experimentation, I found these to be the commonly recommended minimum requirements for most software:

CPU: I recommend a Core i7 with a minimum of 4 physical cores. While Xeons are nice, some software, like Reality Capture, prefers faster cores over a higher core count. A single-CPU rig is also recommended.

GPU: An NVidia GTX card with at least 4 GB of VRAM (though you might not need more than 4 GB either). A Quadro GPU offers little performance gain over a GTX, and a GTX is much cheaper. You can also use AMD cards, which have shown somewhat better performance with Agisoft; unfortunately, Reality Capture only supports NVidia. A dual-GPU setup, such as two GTX 1060s or two GTX 1080s, can also be beneficial, while a TITAN might be overkill. If you are using Agisoft, though, you will need to disable one LOGICAL (not physical) CPU core per GPU. Here’s a link to some GPU benchmarks for Agisoft; you can always search for more recent GPU and CPU benchmarks.

RAM: 32 GB is a good amount. After testing different amounts of RAM and reading some threads, I found that 32 GB is a safe bet, though most scans will work with 16 GB. Pix4D and Reality Capture will run with no issues on 32 GB, while Agisoft may require more, depending on the image count and resolution of the dataset you are processing. It’s worth noting that most i7 CPUs support up to 64 GB of RAM.

Storage: Most software caches results on your drive to reduce the required amount of RAM, so an SSD will reduce your processing time. Never go below 240 GB; 512 GB is recommended. You can always use a cheaper secondary HDD to store your images; this will only slow down the first processing step, when the software loads the images into memory.

Cooling System: Let’s not forget that processing the images takes a lot of time, sometimes days or even weeks, so you should definitely invest in a good cooling system to reduce the chance of burning out your hardware. Also, please don’t use a laptop. Even a good, well-ventilated gaming laptop like an MSI or an Alienware is not built to handle such a load. (I understand that you might be on a budget and can’t afford a desktop; we’ve all been there. In fact, I completely ignored my own advice and used my laptop, but you shouldn’t, because you will be shortening its lifespan.)

Equipment

Here are the most common tools you might use for photogrammetry:

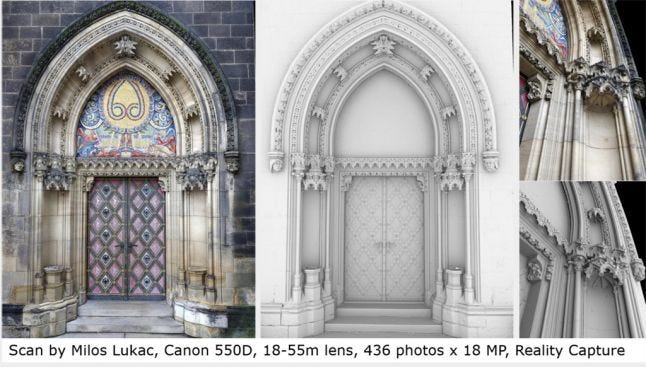

Camera: This is the most obvious one; you can’t take photos without one, duh. The ideal camera is the one that produces the sharpest images (e.g. Nikon D810, Canon 5D). Pixel count is important, but not that important: you can still do some incredible scans with a $300 DSLR, you just have to take more pictures. In fact, more and more people are doing decent scans with their phone cameras. Also, always shoot in RAW when possible; a little noise removal and sharpening in post-processing can give you a resolution boost. Here’s an example of a scan that was taken by Milos Lukac using a Canon 550D and Reality Capture.

Lens: Use a fixed focal length lens; the sharper the better. If your camera came with a zoom lens and you want to use it, you should be fine as long as you use either end of its focal range (the upper or lower limit) and stick with it for the entire capture session.

According to Agisoft users, the ideal camera settings are the following:

ISO: as low as possible, preferably not higher than 400.

Shutter speed: as fast as possible, preferably not slower than 1/125 s.

Focal length: use a 50 mm lens to minimize distortion.

Aperture: f/8 is recommended. Note that a higher f-number does not guarantee better results: objects in the background might introduce noise, and sometimes it’s good to blur them out so the software can ignore them.

White balance: set it manually, and do not change it during the entire capture session.
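If you want to catch mistakes before you leave the location, the recommendations above can be turned into a quick checklist. This is a sketch only: it assumes you have already read ISO, exposure time, focal length, and white-balance mode for each shot (for example from EXIF data with a library of your choice), and the `Shot` class and function names are hypothetical. Aperture is deliberately not checked, since a higher f-number is not automatically better.

```python
from dataclasses import dataclass

# Thresholds from the Agisoft-user recommendations above; tune them for your own gear.
MAX_ISO = 400
SLOWEST_SHUTTER_S = 1 / 125
SUGGESTED_FOCAL_MM = 50


@dataclass(frozen=True)
class Shot:
    iso: int
    shutter_s: float     # exposure time in seconds, e.g. 1/250
    focal_mm: float
    white_balance: str   # "auto" or a fixed setting such as "5600K"


def check_shot(shot: Shot) -> list[str]:
    """Return warnings for a single shot."""
    warnings = []
    if shot.iso > MAX_ISO:
        warnings.append(f"ISO {shot.iso} is above {MAX_ISO}")
    if shot.shutter_s > SLOWEST_SHUTTER_S:
        warnings.append(f"exposure of {shot.shutter_s:.4g} s is slower than 1/125 s")
    if shot.focal_mm < SUGGESTED_FOCAL_MM:
        warnings.append(f"{shot.focal_mm} mm is wider than {SUGGESTED_FOCAL_MM} mm; expect more distortion")
    if shot.white_balance == "auto":
        warnings.append("white balance is on auto; set it manually")
    return warnings


def check_session(shots: list[Shot]) -> list[str]:
    """Check every shot, then make sure focal length and white balance never changed."""
    warnings = [f"shot {i}: {w}" for i, shot in enumerate(shots) for w in check_shot(shot)]
    if len({s.focal_mm for s in shots}) > 1:
        warnings.append("focal length changed during the session")
    if len({s.white_balance for s in shots}) > 1:
        warnings.append("white balance changed during the session")
    return warnings


session = [Shot(100, 1 / 250, 50, "5600K"), Shot(800, 1 / 60, 35, "auto")]
for warning in check_session(session):
    print(warning)
```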

Camera remote: You can get one for a couple of bucks on Amazon, so why not? It’s useful when your own shadow appears in the scan and you have to take the picture from a distance, or when your camera is out of reach, like when it’s attached to a pole to get higher angles. Plus, you can use it to take selfies.

SD Card: If you plan to take a lot of RAW photos, a good SD card with a large capacity is a good investment; just make sure you don’t get a card larger than your camera can handle.

Monopod: It’s useful, especially if you have shaky hands and can’t use a faster shutter speed. And it only costs $10, so why not?

Tripod: Yes, you need one, especially in low-light situations where you can’t use a flash and have to take long-exposure images.

Color Checker: This can be useful if you want to capture accurate colors. Check out this short free tutorial by eat3D on how to use one: Color Correct Reference Workflow.

Drone: This is mostly useful if you are trying to scan terrain, in which case you can fly a nadir path (camera pointing straight down). Pix4D automates this process for you with their mobile app, which is well integrated with DJI’s all-in-one drones; you can also use a drone with a GoPro. Another use of a drone is scanning buildings or other big structures, especially to take photos from locations that are out of reach. You can always combine your aerial dataset with the ground data you took with your DSLR. In fact, this is recommended, since there is a limit to the type of camera you can attach to an affordable drone, so combining the two will boost the quality of your scan.

CPL: Cross-polarized photography is a useful technique that consists of attaching a polarizing filter to your light source (e.g. a flash) and to your camera lens, in order to light your model without creating specular highlights, which helps you create more consistent, flat textures.

Special De-Light Rig: This special rig is usually used for de-lighting, which means removing the lighting information (i.e. direct lighting, ambient lighting, bounce lighting) that belongs to the original environment the scanned object was in. This lets us re-apply lighting that matches the new environment the scan will be placed in. Epic Games used this rig in their Kite demo and wrote a blog post about how they created the assets for the open-world demo, another about their choice of equipment, and another explaining their de-lighting process. The rig consists of 3 elements: a reflective chrome ball, a matte grey ball, and a color checker, which I already covered above. It is usually accompanied by some method of capturing a panoramic HDR image of the environment the rig is placed in. The rig is then used to recreate the lighting conditions of the scanned item: we take the captured panoramic image and orient it so that its reflection on a virtual chrome ball matches the reflection on the real-life chrome ball. The matte grey ball is used to measure the light level. With this information, we can calculate the original lighting, bake it down onto the scanned object, and then subtract it from the original texture, leaving us with a de-lit object. If you can’t get this rig, there is a software-based technique you can use to remove lighting information from an object; it was used in Star Wars Battlefront. The team behind the game was kind enough to explain their workflow at GDC in a well-presented talk titled “Photogrammetry and Star Wars Battlefront”, which is available for free on the GDC Vault. I highly recommend you check it out; really, go watch it. I also discussed this “Delighting a Texture” technique in this article.
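The arithmetic behind the final de-lighting step can be sketched as follows. This is a simplified, single-channel illustration, not the pipeline from Epic’s or the Battlefront team’s write-ups. It assumes linear (not gamma-encoded) pixel values, a purely diffuse surface whose captured color equals albedo times incoming light, an 18% grey ball (use your own ball’s actual reflectance), and a baked lighting map expressed relative to the light that reached the grey ball. Under that model, “subtracting” the lighting means dividing it out, which is the same as subtracting in log space. All names are hypothetical.

```python
GREY_BALL_ALBEDO = 0.18  # assumption: an 18% grey ball; measure or look up your own


def light_level_from_grey_ball(observed_grey: float, ball_albedo: float = GREY_BALL_ALBEDO) -> float:
    """Incoming light level implied by the grey ball's observed linear value."""
    return observed_grey / ball_albedo


def delight(texture: list[float], relative_lighting: list[float], light_level: float,
            eps: float = 1e-6) -> list[float]:
    """Estimate albedo per texel by dividing out the baked lighting.

    texture: captured linear values; relative_lighting: baked lighting per texel,
    where 1.0 means "as much light as reached the grey ball".
    """
    albedo = []
    for colour, lighting in zip(texture, relative_lighting):
        irradiance = max(lighting * light_level, eps)  # avoid division by zero in black areas
        albedo.append(min(colour / irradiance, 1.0))   # clamp: albedo cannot exceed 1
    return albedo


level = light_level_from_grey_ball(0.36)  # grey ball reads 0.36 -> light level 2.0
# A sunlit texel and a half-shadowed texel of the same material:
print(delight([1.0, 0.5], [1.0, 0.5], level))  # both come out as 0.5
```

The point of the example is the second print: two texels that looked different because of shadowing end up with the same albedo once the lighting is removed.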

Scale bar or Coded Targets: Coded targets are printed markers that can be placed in the scene before photos are taken. In PhotoScan Professional, they can be used as reference points for defining the coordinate system and scale, or as valid matches between images to help the camera alignment procedure. They are best used when you have a small object to scan that needs accurate scaling (e.g. because a customer demands it). They also work very well for structures you want to document in a similar fashion, though then you would look for a bigger scale bar.
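Once the software has reconstructed the two ends of a scale bar (or two coded targets) in its own arbitrary units, scaling the model is a single ratio. A minimal sketch, assuming you can read the reconstructed target positions out of your software and that the known distances are in meters; with several bars, averaging them and checking how much they disagree gives a rough sanity check. The function names are hypothetical.

```python
import math

Point = tuple[float, float, float]


def scale_from_bars(bars: list[tuple[Point, Point, float]]) -> tuple[float, float]:
    """Return (mean scale factor, worst relative deviation from the mean).

    Each bar is (reconstructed end A, reconstructed end B, real distance in meters).
    """
    if not bars:
        raise ValueError("need at least one scale bar")
    factors = []
    for a, b, real_distance in bars:
        measured = math.dist(a, b)  # distance in the model's arbitrary units
        if measured == 0:
            raise ValueError("scale bar ends coincide in the model")
        factors.append(real_distance / measured)
    mean = sum(factors) / len(factors)
    worst = max(abs(f - mean) / mean for f in factors)
    return mean, worst


def apply_scale(vertices: list[Point], factor: float) -> list[Point]:
    """Scale every vertex about the origin so the model is in meters."""
    return [(x * factor, y * factor, z * factor) for x, y, z in vertices]


bars = [((0, 0, 0), (2, 0, 0), 1.0), ((0, 0, 0), (0, 4.04, 0), 2.0)]
factor, deviation = scale_from_bars(bars)
print(f"scale {factor:.4f}, bars disagree by up to {deviation:.1%}")
```

A large disagreement between bars usually points to a misplaced marker or a warped reconstruction rather than something to average away.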

Ground Control Points: These represent actual 3D points in your scene that belong to your scan; you mark each one on multiple images in order to manually align photos that the software failed to align, usually because of a lack of overlap between pictures. The software requires at least 3 control points to align 2 entities. Technically this is not equipment, but when combined with a high-accuracy GPS used to record the points’ coordinates in the field, you can place them in your project to help properly align and scale your scan. It is recommended that you pick control points as far away from each other as possible in order to reduce error.

In tech terms, for a high-accuracy positioning device you would use either a DGPS (differential GPS) rover unit or a total station. This is mostly related to using drones for terrain mapping, and it is a geodetic surveying practice. Three is the bare minimum, and you will probably place more. You should position the GCPs so that they are distributed evenly around the documented area, with a few of them in the center too. These devices are very expensive to get into the field and are usually associated with well-funded projects. Scaling is not the issue here, positioning is, since the main product is a surveying one, meaning that some kind of plans, analyses, or controls will be derived from it. If your work needs something like this, it usually means you will be using it in a GIS workspace.
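The advice to spread control points as far apart as possible can be turned into a simple heuristic when you have more candidate locations than you can survey: pick the three candidates that enclose the largest triangle. This is only an illustrative sketch with hypothetical names, using 2D map coordinates. Real surveys place more than three points, spread evenly and with a few in the center, so treat the triplet as a starting frame rather than a full layout.

```python
from itertools import combinations

Point2D = tuple[float, float]


def triangle_area(a: Point2D, b: Point2D, c: Point2D) -> float:
    """Area of triangle abc via the 2D cross product."""
    return abs((b[0] - a[0]) * (c[1] - a[1]) - (c[0] - a[0]) * (b[1] - a[1])) / 2


def widest_triplet(candidates: list[Point2D]) -> tuple[Point2D, Point2D, Point2D]:
    """Pick the three candidates that span the largest area (brute force, O(n^3))."""
    if len(candidates) < 3:
        raise ValueError("at least 3 control points are required")
    best = max(combinations(candidates, 3), key=lambda tri: triangle_area(*tri))
    if triangle_area(*best) == 0:
        raise ValueError("all candidates are collinear; they cannot fix the model's orientation")
    return best


site = [(0, 0), (120, 5), (60, 90), (58, 40), (10, 80)]
print(widest_triplet(site))
```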

Spray: Photogrammetry does not handle translucent and reflective surfaces well; one way to overcome that is to cover your object with a harmless matte spray. If you want to know more, check out this tutorial by studio ten24 on 3D Scanning Reflective Objects with Photogrammetry. You can use something like Krylon 11-Ounce Dulling Spray, or, if you can’t get it, try an airbrush with water-soluble paint.

Turntable: Sometimes it might be hard to rotate around an object, and it would be easier to just rotate the object itself while the camera stays fixed. Quick tip, use a newspaper to cover your turntable base, this will provide you with additional tie points (identifiable patterns) that will make the alignment easier.

Guidelines

This section contains some general tips on how to properly capture your images and what to look out for.

First, go read this excellent article titled The Art of Photogrammetry: How to Take Your Photos, it’s really well explained, read it.

Second, here are some general tips collected from the Reality Capture forums, the 3D Scanning User Group, and personal experience.

Do not limit the number of images; Reality Capture can handle any number (Agisoft can too, but you will need more processing power).

Use the highest resolution possible.

Each point on the scene surface should be clearly visible in at least two high-quality images. The more the better: aim for at least 3 images, since most programs use triangulation to compute the result. In Agisoft, you definitely need more than 3 to reduce noise.

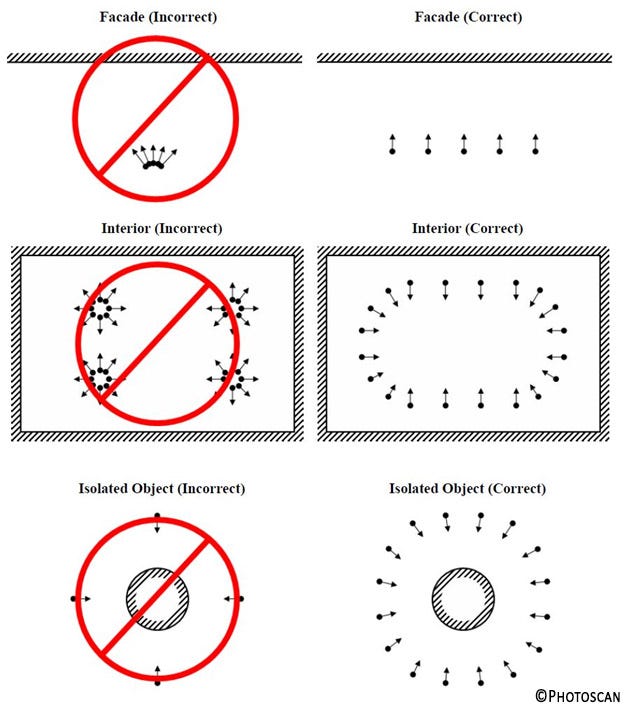

Always move when taking photos. Standing at one point produces just a panorama, which does not contribute to creating a 3D model; in fact, it will introduce errors into your scan. Move around the object in a circle while trying to ensure 80% overlap between photos.

Do not change the viewpoint by more than 30 degrees between shots.

Start with taking pictures of the whole object, move around it and then focus on details. Beware of jumping too close at once, make it gradual.

Complete loops. For objects like statues, buildings, and others, you should always move around and end up where you started.

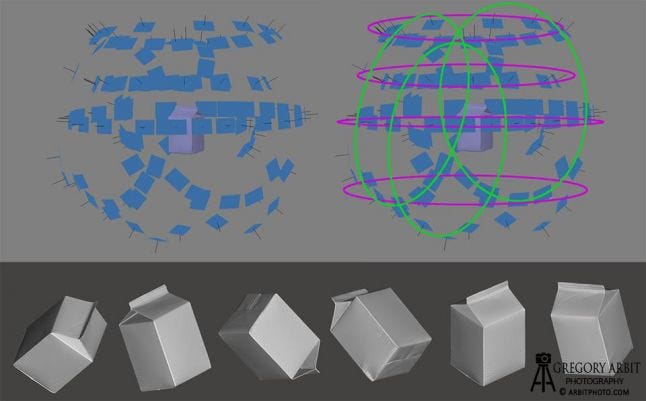

Don’t just take one loop; take multiple loops from different heights.

Rotate the camera (horizontal and vertical orientations) to ensure better calibration.

Trust your instincts, experiment, and feel free to break some rules if you think it fits.
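The loop-related tips above (keep steps under 30 degrees, close every loop, repeat at several heights) can be turned into a rough shot plan. A minimal sketch, assuming the object sits at the origin and you walk a circle of fixed radius around it. It only enforces the angular step; if your lens and distance need smaller steps to reach roughly 80% overlap, pass a smaller `max_step_deg`. The function name and parameters are hypothetical.

```python
import math


def plan_loops(radius_m: float, heights_m: list[float],
               max_step_deg: float = 30.0) -> list[tuple[float, float, float, float]]:
    """Return (x, y, z, yaw_deg) camera poses for closed loops around the origin.

    Shots are evenly spaced so that no step exceeds max_step_deg, and the last
    shot of each loop is one step before the first, closing the circle.
    """
    if not 0 < max_step_deg <= 360:
        raise ValueError("max_step_deg must be in (0, 360]")
    shots_per_loop = math.ceil(360.0 / max_step_deg)
    step = 360.0 / shots_per_loop
    poses = []
    for z in heights_m:
        for i in range(shots_per_loop):
            angle = math.radians(i * step)
            x, y = radius_m * math.cos(angle), radius_m * math.sin(angle)
            yaw = (i * step + 180.0) % 360.0  # point the camera back at the origin
            poses.append((round(x, 3), round(y, 3), z, yaw))
    return poses


plan = plan_loops(radius_m=2.0, heights_m=[0.5, 1.2, 1.9], max_step_deg=25)
print(len(plan), "shots, first pose:", plan[0])  # 45 shots, first pose: (2.0, 0.0, 0.5, 180.0)
```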

Third, even if you are not planning to use Agisoft, I highly recommend reading Chapter 2, Capturing photos, of their PDF user manual. It is short, easy to read, and beginner friendly, and it covers topics like equipment, camera settings, object/scene requirements, image preprocessing, capturing scenarios, and restrictions. Here are a few pictures taken from that PDF:

Fourth, it’s always a good idea to define your target resolution from the beginning. You might not need as much resolution as you think, and knowing it will help you reduce your processing time. Resolution depends on the processing parameters, the image resolution, and the number of photos, and all of these can and should be taken into account. If you are scanning rocks for games, you can get away with as few as 20 pictures and still get high-resolution models, especially if you apply a procedural detail texture on top of your scanned texture. If you are scanning a castle, you might not need that much resolution either: scan the castle in low res, identify the key repetitive features, scan those in high res, and then generate the rest. Then again, it might be the opposite, and you might need to take 500 images of a single pebble.
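One way to put numbers on a target resolution is ground sampling distance (GSD): the size of one image pixel on the object’s surface. This formula is not from the sources above; it is a standard pinhole-camera approximation that ignores lens distortion and assumes the surface faces the camera. The sensor and lens values below are example inputs, not recommendations.

```python
def gsd_mm_per_px(sensor_width_mm: float, focal_mm: float, image_width_px: int,
                  distance_mm: float) -> float:
    """Size of one pixel on a surface facing the camera at distance_mm."""
    return (sensor_width_mm * distance_mm) / (focal_mm * image_width_px)


def distance_for_gsd_mm(target_gsd_mm: float, sensor_width_mm: float, focal_mm: float,
                        image_width_px: int) -> float:
    """Largest shooting distance that still reaches target_gsd_mm per pixel."""
    return target_gsd_mm * focal_mm * image_width_px / sensor_width_mm


# Example: a 36 mm wide full-frame sensor, 6000 px wide images, 50 mm lens.
print(gsd_mm_per_px(36, 50, 6000, 1000))       # about 0.12 mm per pixel at 1 m
print(distance_for_gsd_mm(0.5, 36, 50, 6000))  # about 4167 mm for 0.5 mm per pixel
```

Because GSD scales linearly with distance, moving twice as close doubles the detail per pixel, but each photo then covers only a quarter of the area, so you need more photos for the same surface.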

Most of the time you might not need to process in Ultra High Settings in Agisoft or High settings in Reality Capture.

Practice

Now that you know a few things about photogrammetry, here are a few interesting cases you might want to try:

Scan a Rock, this is an easy target and a good start. Try to take as few images as possible to reconstruct a full mesh, then start adding images to increase the detail resolution.

Scan a Statue, a statue is similar to a rock, with the added challenge of some interesting concave shapes.

Scan a Shoe, not sure why but everyone seems to be doing it, it might be an initiation ritual or something.

Scan a Tight Tunnel or some Stairs, the challenge here is that you won’t have enough room to move and take images from different angles; the trick is to walk through the tunnel, taking one photo of what’s in front of you each time you take a step forward.

Scan an Interior Scene, Valve has an interesting approach you can check out.

Scan a Building or even a Castle, try to do it with and without a drone; you can climb, or, as some people do, attach your camera to a long pole. Hint

Scan a Reflective Surface, check out this tutorial by studio ten24 on 3D Scanning Reflective Objects with Photogrammetry.

Scan a Head using One Camera, this is challenging, try not to move, and maybe use a turntable. Enjoy :)

Scan an Insect, here’s a good scan example of a scanned insect by 2cgvfx.

Scan a Terrain, check out this other approach by Valve, though I highly recommend researching the nadir flight path approach for drones.

Other Scanning Methods

Please note that photogrammetry is not the solution for everything, and there are other scanning methods that can work better in some cases.

LIDAR is a surveying method that measures the distance to a target by illuminating it with laser light. LIDAR can be a faster way to collect 3D data and is definitely more efficient for scanning vegetation and fields, though this method can be expensive. A LIDAR scanner is heavy, so it is hard to attach one to a drone, yet some companies have managed to build their own custom drones. Here is a quick video posted by Capturing Reality using Reality Capture to combine laser data and photo data into an accurate model, while explaining the advantages of using both methods.
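In a pulsed (time-of-flight) LIDAR, the distance measurement comes down to timing: the pulse travels to the target and back, so the distance is half the round-trip time multiplied by the speed of light. A minimal sketch that also shows why these devices need extremely precise clocks; it ignores the slight slowdown of light in air, and it does not cover phase-shift scanners, which measure distance differently.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # in vacuum


def distance_from_round_trip_m(round_trip_s: float) -> float:
    """Target distance for a pulse that returned after round_trip_s seconds."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2


def timing_needed_s(distance_resolution_m: float) -> float:
    """Clock resolution needed to tell apart targets distance_resolution_m apart."""
    return 2 * distance_resolution_m / SPEED_OF_LIGHT_M_S


print(distance_from_round_trip_m(200e-9))  # a 200 ns echo: roughly 30 m away
print(timing_needed_s(0.001))              # about 6.7 picoseconds for millimeter steps
```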

David Laser Scanning is a much cheaper DIY alternative that also uses a laser to measure and scan an object. It can be used to scan small to medium-sized objects (e.g. boats). It is worth noting that this solution was recently acquired by HP.

Artec Scanner is a handheld 3D scanner that delivers remarkable results and, depending on the model, can be used to scan small items, cars, or buildings. Their site has some good resources on getting started.

Microsoft Kinect can also be used to scan objects and people, though its resolution is limited. The Kinect has been discontinued, but you can still find them on eBay. Microsoft recently announced the Azure Kinect. Intel’s RealSense is also worth a look.

RTI is a computational photographic method that captures a subject’s surface shape and color and enables the interactive re-lighting of the subject from any direction. RTI also permits the mathematical enhancement of the subject’s surface shape and color attributes.

Finally

Just do it: don’t overthink it, keep practicing, and try to scan with whatever equipment you can find.

If you read this, and all the articles I linked to, and you’re wondering what to read next for some reason, you can check out one of my other articles “The Workflows of Creating Game Ready Textures and Assets using Photogrammetry”. You can also check out the rest of the articles by visiting my game’s website World Void, and checking out the Devlog page.

If you have any questions, or if you think I missed anything, please let me know in the comments below, and feel free to reach out to me on Twitter @JosephRAzzam
