
In this Sponsored Feature, part of <a href="http://www.gamasutra.com/visualcomputing">Intel's Visual Computing microsite</a>, Shrout and Davies examine the 'shift in processor architecture and design over the last few years' that has changed once simple rules regarding CPUs and computer chips in general into a 'much more complicated scenario'.

October 19, 2009

Author: by Ryan Shrout & Leigh Davies


No matter what platform you work on, developing a game can be a lot like trying to build something on quicksand: Somewhere between the time you start designing the game and the day it launches, the landscape you thought you were developing for shifts.

The shift might be a simple change to the game design, the inclusion of a new graphics technique, or something more drastic, such as a move to a completely new hardware platform. On the PC, meanwhile, the installed hardware base is constantly evolving. That is, while you were busy writing game code for the current hardware in the studio, the hardware industry has been aggressively increasing processor performance and adding new features to your customers' PCs.

Until as recently as 2005, the main processor advances were targeted at improving single-threaded performance, with each generation offering higher operating frequencies and better instruction-level parallelism. Developers assumed that as newer processors were released they would "just be faster," and that code and applications would scale accordingly. Code was frequently designed on the assumption that the top-end PC at the time of release would be faster than the development system the original code was written on.

However, a shift in processor architecture and design over the last few years has changed this one-time rule into a much more complicated scenario. With the introduction of the Intel Core i7 processor, game developers can now use up to eight times the number of computing threads that were once available.

Processor speed and features are no longer increasing in a simple linear fashion, and new technologies such as turbo boost can have a significant impact on the system's performance. This makes it critical that the code written for any application not only scale from processor generation to generation but also reliably adapt to future architectures down the road.

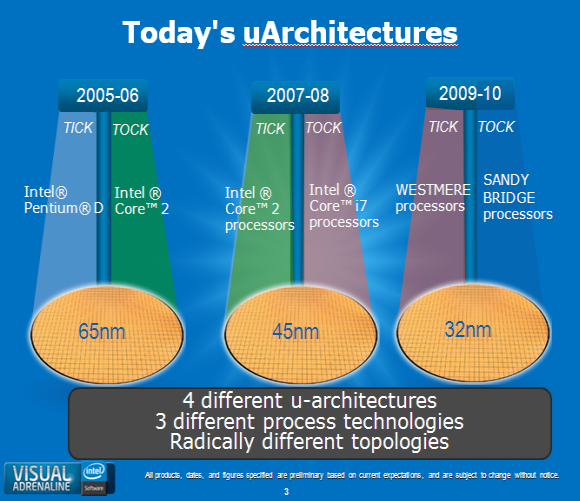

This fundamental shift in processor performance is a result of the many technical innovations in the world of processor architecture. Even in the last three years we have seen at least two cycles of the Intel "tick-tock" model of improvements: The "tick" introduces a die shrink process technology that allows designers to fit more transistors in the same physical die space, while the "tock" introduces a new microarchitecture with performance and feature enhancements.

During the "tick" years the process technology has evolved from 65nm-generation products introduced in 2005 to 45nm generation in 2007 and will become 32nm by the end of 2009. During the "tock" years the original Intel Pentium processor microarchitecture evolved into the Intel Core microarchitecture in 2006, leading to the introduction of the Intel Core i7 processor and a new microarchitecture in 2008. Figure 1 illustrates this progression.

Figure 1. Intel's "tick-tock" model of architecture progression.

The upcoming Sandy Bridge microarchitecture will be introduced in 2010 and, in keeping with the other architectures shown in Figure 1, will differ dramatically from previous-generation processors. Although code written and optimized for one architecture will run on succeeding architectures, developers can frequently improve performance going forward and avoid potential performance pitfalls as new processors are released by ensuring their code can enumerate the hardware it's running on and adapt to it.

For many years the hardware and software development cycles were mostly independent of each other. Programmers of compilers and other low-level applications needed intricate knowledge of the underlying hardware in order to extract the best performance from the platform. Games, by contrast, relied on the compiler to optimize the game code for the hardware while the coder concentrated on finding the best algorithm for the job. Today the same level of hardware expertise is required for more general computing tasks, such as game development. And it's easy to understand why.

Average game development takes from about one to three years, and any new core engine technology must be programmed to last even longer on the market as it will likely span multiple shipping titles; it is not unusual for a large-scale game engine to take up to five years total development time from inception to shipping. Intel's rapid-development tick-tock model means the average game engine will need to span many different processor architectures.

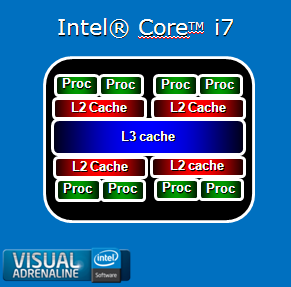

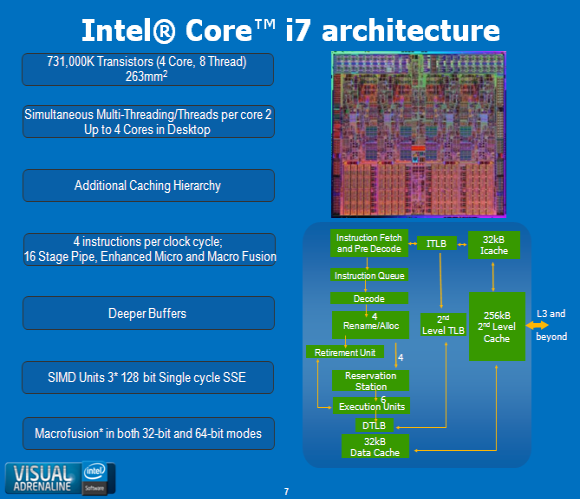

The introduction of multi-core processors (rather than simply higher clocked processors) means that developers need to write code that scales to make use of that hardware. With the increasing emphasis on improving processor power through larger core counts, the hardware can no longer speed up existing code easily unless the designer has purposely built the software with multi-threading in mind. Consider the Intel Core i7 processor in Figure 2: to fully utilize this processor, the game would need to use eight threads communicating through a hierarchical cache.

Programming decisions made today will dramatically impact the performance of a game on future architectures. So although processors are continually getting faster and more powerful, developers must make sure they are properly using the computing power at their disposal.

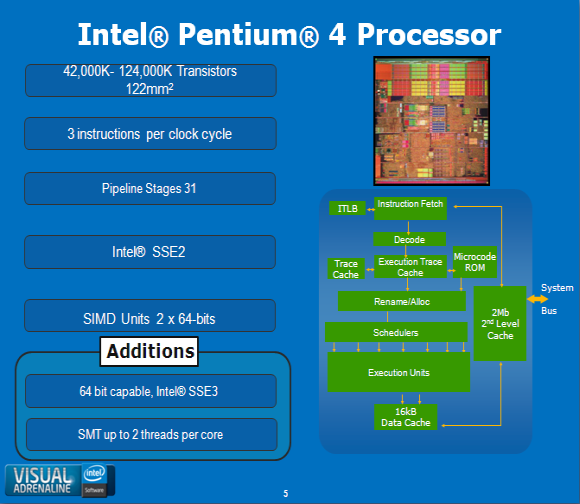

To place this issue into context, let's look at the evolution of the most recent Intel architectures, starting with the Intel Pentium 4 processor (Figure 3).

Figure 3. The features of the Intel Pentium 4 processor microarchitecture.

In 2005 the Intel Pentium 4 processor architecture was the top-end consumer processor from Intel on the market. It featured from 42 to 124 million transistors depending on the exact model and had a die size of about 122 mm². The architecture allowed three instructions per clock cycle to be issued, and the pipeline was comparatively deep at 31 total stages in later years.

The processor integrated Intel Streaming SIMD Extensions 2 (Intel SSE2) instructions and had 64-bit Single Instruction Multiple Data (SIMD) units; thus, every 128-bit SIMD instruction took two cycles to execute. Toward the end of this architecture's life cycle, Intel added support for 64-bit processing, Intel SSE3 instructions, and simultaneous multi-threading (SMT) in the form of Intel Hyper-Threading Technology (Intel HT Technology). Low-level programmer optimizations tended to focus either on improving branch prediction behavior or on using Intel SSE to parallelize data algorithm hot spots.

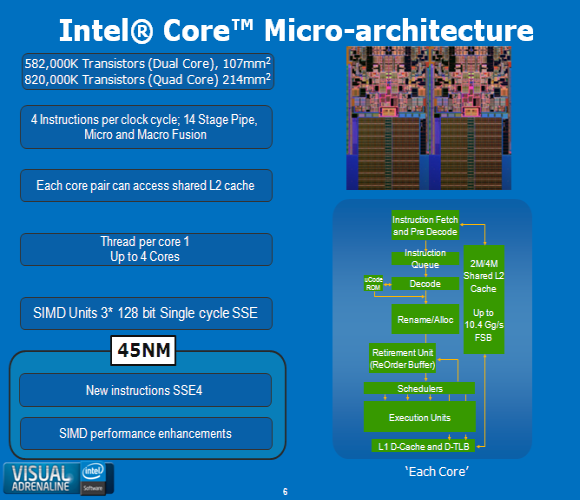

Figure 4. The features of Intel Core microarchitecture.

Figure 4 shows the Intel Core microarchitecture, the foundation for a family of high-performing multi-core processors. Transistor counts increased by up to seven times compared to Intel Pentium 4 processors, with 582 million for a dual-core processor and 820 million for a quad-core processor. One important development was the move to a 14-stage pipeline that reduces the performance hit when a branch misprediction occurs, while the instructions issued per clock were increased to four.

This was also the first desktop Intel architecture to have a shared L2 cache between two cores on a single die, while a quad-core processor actually has two shared L2 caches that need to communicate over the comparatively slower front-side bus. Game developers were faced with the need to scale to up to four threads of processing just to make efficient use of the transistors on the die. Functional parallelism was common with two to three main game threads, with additional lightweight operating system (OS) and middleware threads, such as sound.

In addition to the increased core count and more efficient pipeline, the Intel Core microarchitecture doubled the performance of the SIMD units because 128-bit processing can be done in a single processor clock cycle. The "tick," which brought 45nm technology, also introduced the Intel SSE4 instruction set and a shuffle unit that is three times faster, which speeds up SIMD setup and provides increased opportunities for optimizing floating-point-intensive algorithms.

Algorithms that previously had been difficult to optimize using SIMD because of data structures that were unsuitable for fast SOA (structure of arrays) processing became feasible due to the much improved performance of the shuffle unit and a wider range of instructions for reordering data.

Figure 5. The next-generation Intel Core microarchitecture exemplified by the Intel Core i7 processor.

In 2008 the latest processor microarchitecture from Intel was introduced with the Intel Core i7 processor (Figure 5). It featured a native, monolithic quad-core processor for the first time from Intel and used fewer transistors than the Intel Core 2 Quad processor (731 million versus 820 million). Intel HT Technology, last seen on the Pentium 4, was reintroduced on the quad-core processor, giving the Intel Core i7 processor support for up to eight simultaneous threads, together with increased resources in the out-of-order engine to support the larger number of threads.

Despite the reduction in transistors, the die size increased to 263 mm², thanks in large part to the more complex architecture design that includes three levels of cache hierarchy. The pipeline was extended by only two stages, while both the microfusion and macrofusion in the decoder were enhanced. The amount of architectural change from the Intel Core microarchitecture to the Intel Core i7 processor microarchitecture is as dramatic as the change from the Intel Pentium processor microarchitecture to the Intel Core microarchitecture, though the differences are not as obviously laid out.

Figure 6. The core and uncore diagrams of the Intel Core i7 processor design.

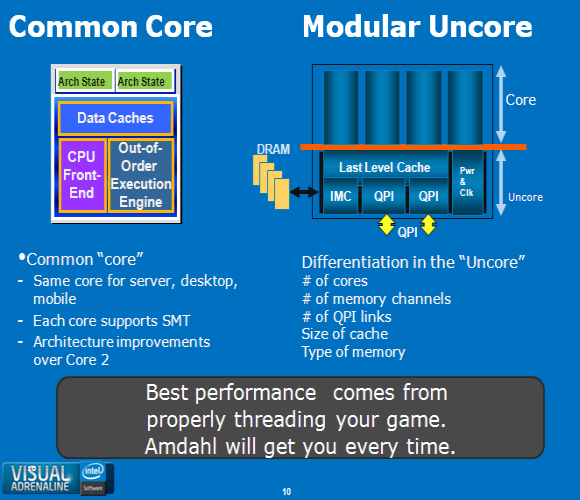

The Intel Core i7 processor architecture is designed from the ground up to be modular, allowing for a variable number of cores per processor. The cores on the Intel Core i7 processor are identical, and each supports better branch prediction, improved memory performance when dealing with unaligned loads and cache splits, and improved stores compared to previous architectures. Each common core has two register sets to support SMT; these two register sets share the L2 and L1 data caches and the out-of-order execution units. Each core appears as two logical processors to the OS.

The Intel Core i7 processor microarchitecture also introduced an uncore portion to the processor design. The uncore contains the other processor features, such as the memory controller, power, and clocking controllers. It runs at a frequency that differs from that of the common cores. The architecture allows for different numbers of cores to be attached to a single uncore design and for the uncore to vary based on its intended market, such as adding additional QPI links to allow for multi-socket servers and workstations.

To get the best performance benefit from the Intel Core i7 processor, developers need to aggressively implement multithreading. Game engines that have been written for four or fewer threads will utilize only a fraction of the available processing power and may see only limited performance gains over previous quad-core processors. Developers have to go beyond functional parallelism, which merely separates out systems such as physics or AI, and move to data-level parallelism with individual functions distributed across multiple threads.
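As a rough illustration of data-level parallelism, the sketch below splits one function's workload across several threads instead of giving each subsystem its own thread. The names `parallel_for` and `integrate` are hypothetical helpers invented for this example, and creating threads per call is a simplification; a real engine would more likely hand the chunks to a persistent worker pool.

```cpp
#include <algorithm>
#include <cstddef>
#include <thread>
#include <vector>

// Hypothetical helper: splits [0, count) into contiguous chunks and runs
// body(begin, end) for each chunk on its own thread.
template <typename Body>
void parallel_for(std::size_t count, unsigned thread_count, Body body) {
    if (thread_count == 0) thread_count = 1;
    const std::size_t chunk = (count + thread_count - 1) / thread_count;
    std::vector<std::thread> workers;
    for (unsigned t = 0; t < thread_count; ++t) {
        const std::size_t begin = std::min(count, t * chunk);
        const std::size_t end = std::min(count, begin + chunk);
        if (begin == end) break;  // no work left for the remaining threads
        workers.emplace_back([=] { body(begin, end); });
    }
    for (auto& w : workers) w.join();
}

// Example workload: advance particle positions, one slice per thread.
void integrate(std::vector<float>& pos, const std::vector<float>& vel,
               float dt, unsigned threads) {
    parallel_for(pos.size(), threads, [&](std::size_t b, std::size_t e) {
        for (std::size_t i = b; i < e; ++i) pos[i] += vel[i] * dt;
    });
}
```

Because each thread touches a disjoint, contiguous slice, no locking is needed inside the loop, and the thread count can be chosen at run time to match the hardware.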

One of the key requirements of any graphically intensive game will be ensuring the rendering engine itself scales across multiple threads and the graphic pipeline between the game and video card doesn't become the bottleneck limiting the use of both the processor and graphics processing unit (GPU). Hopefully the support for multi-threaded rendering contexts in DirectX 11 will significantly benefit this area of games programming.

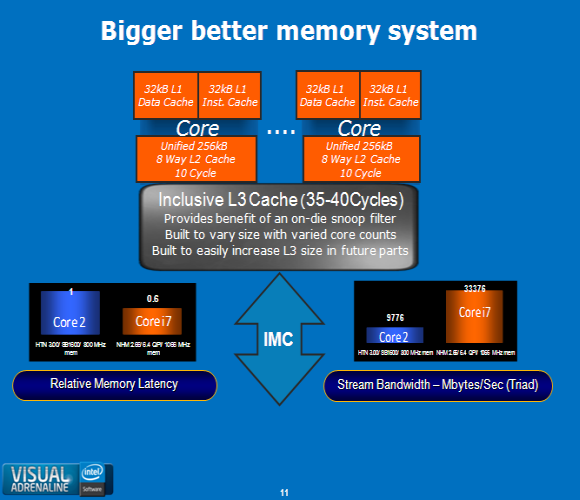

Figure 7. The memory hierarchy on the Intel Core i7 processor.

Figure 7 shows the memory hierarchy on the Intel Core i7 processor, which is significantly different from previous Intel desktop designs. Each core has a 32 KB L1 cache accessible inside of four cycles, which is incredibly fast. Compared to the Intel Core processor family, the 256 KB L2 cache is significantly smaller, even though it is much faster at a 10-cycle access time and runs at a higher clock rate. The smaller L2 cache is offset by an additional, much larger L3 cache that is part of the uncore and shared by all of the common cores.

The new L3 cache has a 35-40 cycle access time depending on the variable ratio between the core and uncore clock speeds. The L3 cache has a shared, inclusive cache design that mirrors the data in the L1 and L2 caches. While the inclusive cache design might at first glance seem a less efficient use of the total available cache on the die, the inclusive design allows for much better memory latency.

If one core needs data that it can't find in its own local L1 and L2 cache stores, it need only query the inclusive L3 cache before accessing the main memory, instead of probing the other cores for cache results. The L3 cache is connected to the new integrated memory controller, which in turn is connected to the DRAM in the system and offers much higher bandwidth than the front-side bus used on Core 2 processors. In a multi-socket system the memory controller can communicate with another socket via the QuickPath Interconnect (QPI), which replaces the front-side bus.

Compared to the previous generation of high-end desktop systems, main memory performance improved in both bandwidth (by a factor of three) and latency (reduced to roughly half). When deciding whether to run eight threads of computing, it is important to have memory bandwidth available to keep from starving the processor cores.

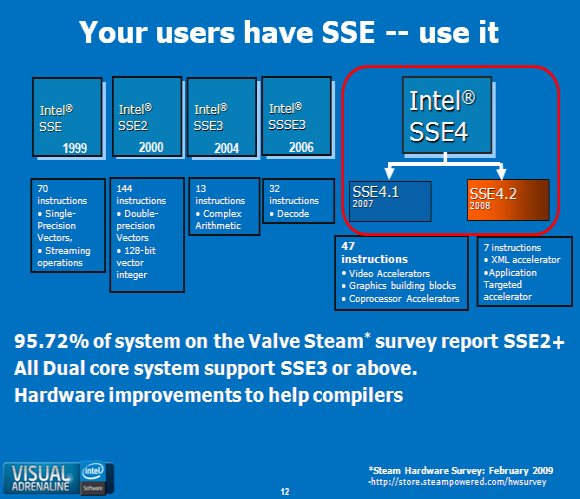

The Intel Core i7 processor also introduced SSE4.2, an extension to the various SIMD instruction sets supported on Intel processors. The new instructions were targeted at accelerating specific algorithms, and while they themselves may have limited benefits in games (depending on whether you use an algorithm that benefits from them), the support of SSE in general is very important to getting the most from the PC. Game developers are frequently surprised by just how widespread the support for previous versions of SSE is.

The Intel SSE instruction set was originally introduced in 1999, and the Intel SSE2 set soon followed in 2000 (Figure 8). It seems reasonable to assume that most game developers today are targeting hardware built after 2000, and thus developers can essentially guarantee support for Intel SSE2 at a minimum. Targeting at least a dual-core configuration as a minimum specification will guarantee support for Intel SSE3 on both Intel platforms and AMD platforms.
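Where a minimum specification cannot be assumed, SSE support can be checked at run time. The sketch below only decodes the feature bits that CPUID leaf 1 returns in ECX and EDX; obtaining those register values is compiler-specific (for example through an intrinsic such as `__cpuid` on MSVC or `__get_cpuid` on GCC and Clang) and is left out so the example stays portable. The `SimdSupport` struct and `decode_simd_support` function are hypothetical names used for illustration.

```cpp
#include <cstdint>

// Hypothetical result type for this example.
struct SimdSupport {
    bool sse, sse2, sse3, ssse3, sse41, sse42;
};

constexpr bool bit(std::uint32_t reg, unsigned n) {
    return ((reg >> n) & 1u) != 0;
}

// Decodes the ECX and EDX values returned by CPUID leaf 1 (EAX = 1).
constexpr SimdSupport decode_simd_support(std::uint32_t ecx, std::uint32_t edx) {
    return SimdSupport{
        bit(edx, 25),  // SSE
        bit(edx, 26),  // SSE2
        bit(ecx, 0),   // SSE3
        bit(ecx, 9),   // SSSE3
        bit(ecx, 19),  // SSE4.1
        bit(ecx, 20)   // SSE4.2
    };
}
```

A game could run this once at startup and select a code path from the result rather than testing the processor model.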

Figure 8. The evolution of Intel Streaming SIMD Instructions.

In addition to hand-coded optimizations, one of the goals of each new architecture is to allow compilers more flexibility in the types of optimizations they can automatically generate. Improvements allowing better unaligned loads and more flexible SSE load instructions enable the compiler to apply optimizations that previously could not be done safely with the context information available at compile time. Thus it's always advisable to test the performance with the compiler flags set to target the specific hardware. Some compilers also allow multiple code paths to be generated at the same time, providing backward compatibility together with fast paths for targeted hardware.

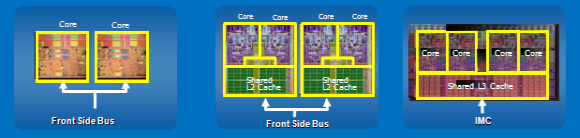

One of the more important aspects of threading for game development is the interaction of the various threads with the cache. As previously mentioned, the cache configurations on the Intel Pentium 4 processor, the Intel Core processor family, and the Intel Core i7 processor differ (Figure 9).

Figure 9: Cache layouts on different microarchitectures

The Intel Pentium 4 processor has to communicate between threads over the front-side bus, thus requiring at least a 400-500 cycle delay. The Intel Core processor family allowed for communication over a shared L2 cache with a delay of only 20 cycles between pairs of cores, falling back to the front-side bus between multiple pairs on a quad-core design. The use of a shared L3 cache in the Intel Core i7 processor means that going across a bus to synchronize with another core is unnecessary unless a multiple-socket system is being used.

And because cache sizes are not increasing as rapidly as the number of cores, developers need to think about how best to use the available finite resources. Techniques such as cache blocking, which limits inner loops to smaller sets of data, and streaming stores can help to avoid cache line pollution, thereby reducing cache thrashing and minimizing the communication required over the last level of shared cache.
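To make cache blocking concrete, here is a minimal sketch that transposes a square matrix one tile at a time so that the working set of the inner loops stays small. The function name and the default tile size of 32 are assumptions chosen for illustration (a 32 x 32 tile of floats is 4 KB each for source and destination); the best tile size depends on the target cache and should be measured.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Transposes an n x n row-major matrix tile by tile. Each pair of inner
// loops touches at most block x block elements of src and dst.
void transpose_blocked(const std::vector<float>& src, std::vector<float>& dst,
                       std::size_t n, std::size_t block = 32) {
    for (std::size_t bi = 0; bi < n; bi += block) {
        for (std::size_t bj = 0; bj < n; bj += block) {
            const std::size_t i_end = std::min(n, bi + block);
            const std::size_t j_end = std::min(n, bj + block);
            for (std::size_t i = bi; i < i_end; ++i) {
                for (std::size_t j = bj; j < j_end; ++j) {
                    dst[j * n + i] = src[i * n + j];
                }
            }
        }
    }
}
```

The result is identical to a naive transpose; only the order of memory accesses changes.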

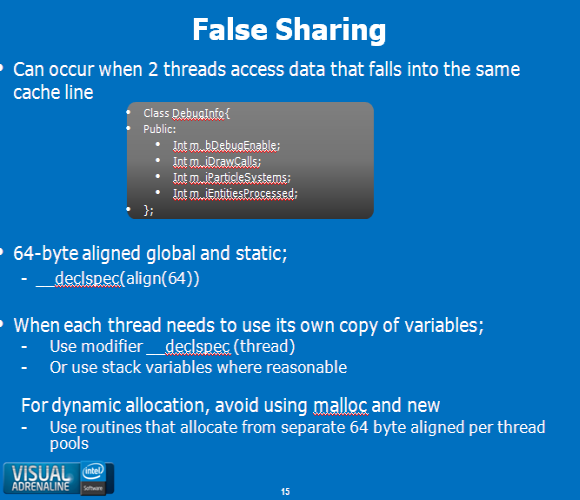

One important potential cache pitfall is the false sharing that occurs when two threads access data that falls into the same cache line.

Figure 10. An example of false sharing.

Figure 10 shows an example of false sharing, in which the developer created a simple global object to store debug data. All four of the integers created easily fit into a single line of cache. However, multiple threads can access different portions of the structure. When any thread updates its relevant variable, such as the main render thread submitting a new draw call, it pollutes the entire cache line for any other thread accessing or updating information in that object. The cache line must be resynchronized across all the caches, which can decrease performance.

If those two threads are communicating via the front-side bus of an Intel Core processor or Intel Pentium 4 processor, the user can experience as much as a 400-500 cycle delay for that simple debug code update. Care should be taken when laying out variables to ensure their layout accounts for typical usage patterns. Tools such as the Intel VTune Performance Analyzer can be used to locate cache issues, such as false sharing.
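One common remedy is to give each independently updated variable its own cache line. The sketch below assumes a 64-byte line, and the field names are hypothetical stand-ins for the four debug integers described above; they are not taken from Figure 10.

```cpp
#include <cstddef>

// Assumed cache line size for this example; check the target hardware.
constexpr std::size_t kCacheLine = 64;

// Problem layout: four counters updated by different threads share one line.
struct DebugCountersShared {
    int draw_calls;
    int physics_steps;
    int ai_updates;
    int audio_mixes;
};

// One counter per cache line: alignas pads each element out to a full line.
struct alignas(kCacheLine) PaddedCounter {
    int value = 0;
};

struct DebugCountersPadded {
    PaddedCounter draw_calls;
    PaddedCounter physics_steps;
    PaddedCounter ai_updates;
    PaddedCounter audio_mixes;
};

static_assert(sizeof(DebugCountersShared) <= kCacheLine,
              "the unpadded counters fit in a single cache line");
static_assert(sizeof(PaddedCounter) == kCacheLine,
              "each padded counter occupies exactly one cache line");
```

The padded layout trades a little memory for the guarantee that a write by one thread does not invalidate the line another thread is using.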

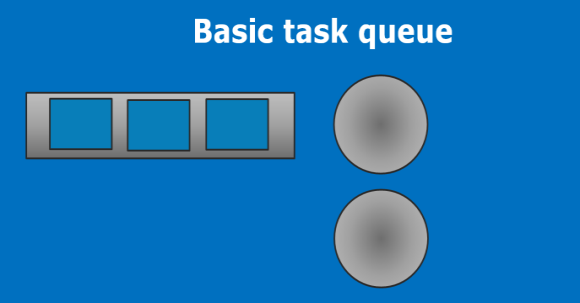

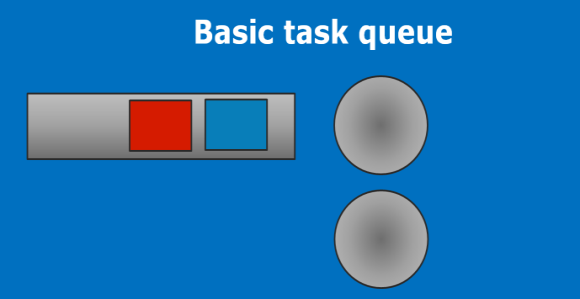

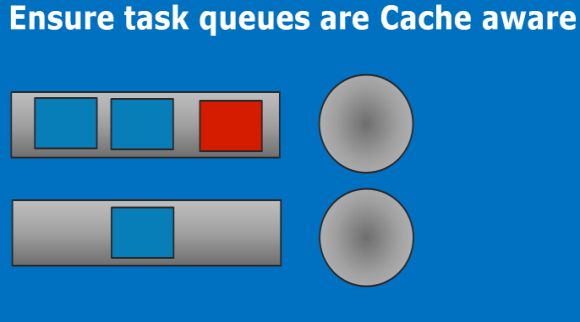

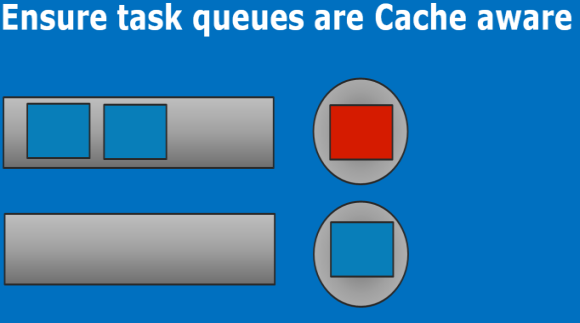

As an example of how cache design can significantly affect the performance of an algorithm, we can use a basic task manager that distributes a collection of tasks to worker threads as they become available after completing their previous jobs. This approach is a common way of adding scalable threading into an application by breaking the workload into discrete tasks that can be executed in parallel. However, as Figure 11 shows, several issues can arise from using this approach. First, all of the threads have to access the same data structure as they pull information off the queue.

Figure 11a. Simple task queue. Figure 11b. Tasks assigned to threads

Depending on how the program synchronizes the data, this delay can be anywhere from a few cycles with atomic operations on shared cache, to a few hundred cycles when using the front-side bus, to as much as a few thousand cycles if using a mutex or any other operation that requires a context switch. The overhead of pulling small tasks off the basic task queue can be quite significant and can even outweigh the benefits gained from parallelizing them.

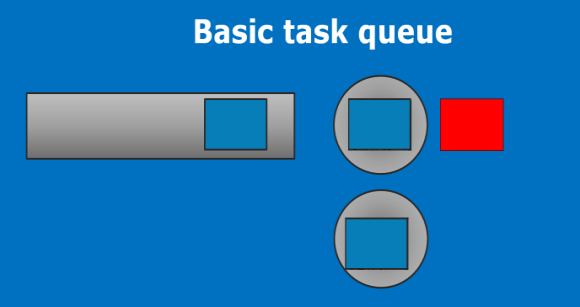

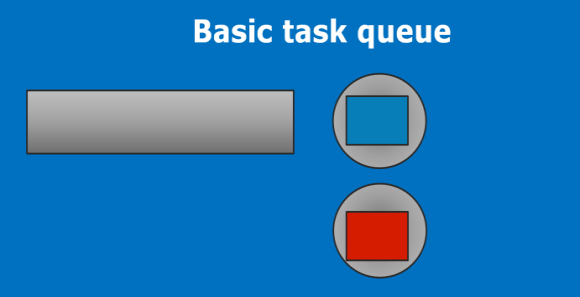

Figure 11c. Procedural task added to queue. Figure 11d. New task executed on different core

Another problem with this queue design is that there is no correlation between the threads and the data processed on them. Tasks that require the same data can be executed on different threads, increasing memory bandwidth and potentially causing sharing issues similar to those outlined above. Problems can also occur when developers put procedurally generated tasks (Figures 11b and 11c) back into the queue without correlating the thread the new task executes on with the thread the parent job originally executed on.

The developer is fortunate if the task happens to hit the same core and thread, because much of the data it requires may still reside in the cache from the previous task; otherwise, the task will need to be completely resynchronized, with the delays mentioned above (Figure 11d). And as the number of available threads increases, the complexity of such a system increases dramatically and the chances of "lucky" cache hits decrease.

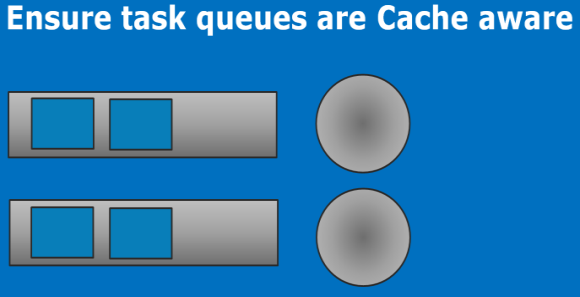

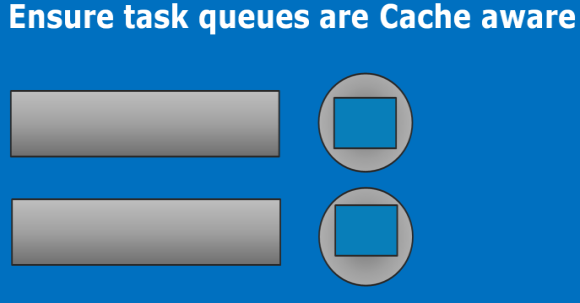

One approach that is gaining favor is to build individual task queues per thread (Figure 12), thereby limiting the synchronization points between threads, and to implement a task-stealing approach similar to that used in the Intel Threading Building Blocks.

Instead of placing procedurally generated tasks on the end of a single queue, this method uses a last-in-first-out order in which the new task is pushed to the front of the same queue its parent task came from. Now the developer can make good use of the caches because there is a high likelihood that the parent task has warmed the cache with most of the data the thread needs to complete the new task.

The individual queues also mean tasks can be grouped by the data they share.

Figure 12a. Per-thread Figure 12b. Threads independently pull data from queues

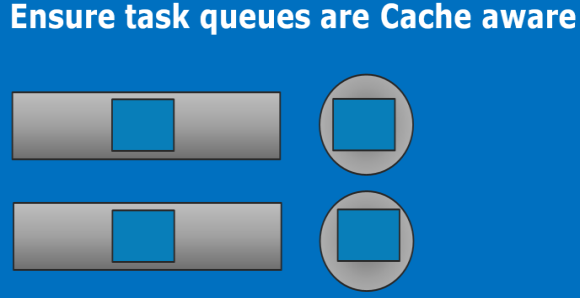

Figure 12c. LIFO for procedural tasks Figure 12d. task used hot cache

Taking a task from another queue occurs only when a thread-specific queue is empty. Although this is not the most efficient use of the cache, compared to sitting idle it may be the lesser of the two evils and provides a more balanced approach.
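The per-thread queue and stealing policy described above can be sketched as follows. This is a simplified illustration, not the Intel Threading Building Blocks implementation: `WorkerQueue` and `next_task` are hypothetical names, a plain mutex per queue stands in for the lock-free structures a production scheduler would use, and taking the oldest task when stealing is an assumption made here so that the owner keeps its cache-warm work.

```cpp
#include <cstddef>
#include <deque>
#include <functional>
#include <mutex>
#include <utility>
#include <vector>

using Task = std::function<void()>;

// One queue per worker thread. The owner pushes and pops at the front (LIFO),
// so a freshly spawned child task runs next on the thread whose cache is warm.
class WorkerQueue {
public:
    void push_front(Task t) {
        std::lock_guard<std::mutex> lock(m_);
        tasks_.push_front(std::move(t));
    }
    bool pop_front(Task& out) {
        std::lock_guard<std::mutex> lock(m_);
        if (tasks_.empty()) return false;
        out = std::move(tasks_.front());
        tasks_.pop_front();
        return true;
    }
    // Other workers steal from the back, taking the oldest (coldest) task.
    bool steal_back(Task& out) {
        std::lock_guard<std::mutex> lock(m_);
        if (tasks_.empty()) return false;
        out = std::move(tasks_.back());
        tasks_.pop_back();
        return true;
    }
private:
    std::mutex m_;
    std::deque<Task> tasks_;
};

// Fetches the next task for worker `self`: its own queue first, and only
// when that is empty does it try to steal from the other workers' queues.
bool next_task(std::vector<WorkerQueue>& queues, std::size_t self, Task& out) {
    if (queues[self].pop_front(out)) return true;
    for (std::size_t i = 1; i < queues.size(); ++i) {
        if (queues[(self + i) % queues.size()].steal_back(out)) return true;
    }
    return false;
}
```

Each worker thread would loop on `next_task` with its own index, so contention on another thread's queue occurs only when a worker has run out of local work.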

Figure 12e. thread task steal when queue empty

In addition to an intelligent task queue, the algorithms being parallelized may also need to be aware of the underlying hardware. Intelligent task assignment and grouping of when jobs get executed can greatly improve cache utilization and therefore overall performance. The best optimization tool is always the programmer's brain.

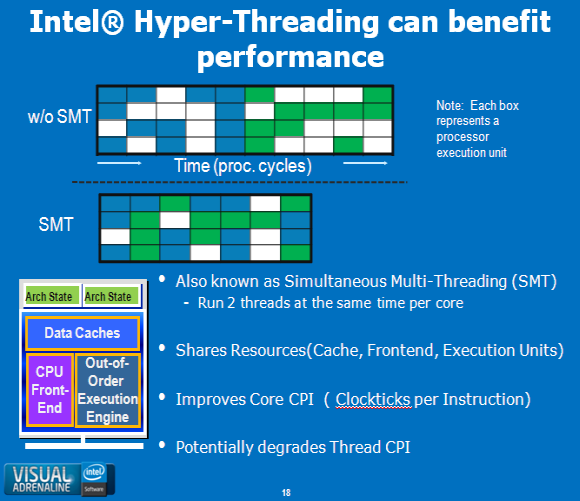

Introduced with the Intel Pentium 4 processor, Intel HT Technology enables a second thread of computing on a single-core processor at a small cost of transistors (Figure 13). In theory it is a power-efficient way to increase performance because the two threads would be sharing the same execution cores and resources. In many cases a core has "bubbles" in which a single thread cannot execute the maximum four instructions per clock due to dependencies; these bubbles can be filled in with instructions and work from another thread, thereby completing earlier than they would otherwise.

The implication here is that while overall core clock ticks per instruction improves, individual thread performance can go down slightly as the processor juggles the data and instructions for each thread. This is normally something that can be ignored, but occasionally it can create an issue when a performance-critical thread executes alongside a thread doing low-priority work because SMT will not distinguish between them and treats both threads as equal. Where a thread executes depends on the OS; newer OSs improve how multiple threads are distributed, maximizing hardware performance.

Figure 13. Intel Hyper-Threading Technology removes "bubbles" from process threads improving core clock ticks per instruction.

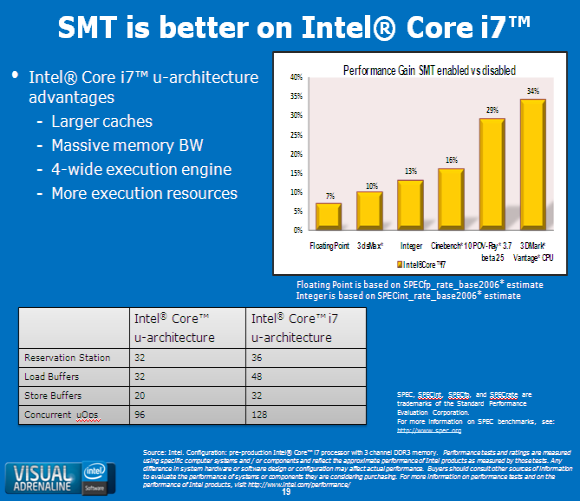

SMT support has been improved in its implementation on the Intel Core i7 processor by removing many of the bottlenecks that limited its efficiency on earlier architectures. With the increase in memory bandwidth by a factor of three, the processor is much less likely to be bandwidth limited and can keep multiple threads fed with data. Resources are no longer split at boot time when Intel HT Technology is enabled, as was the case with the Intel Pentium 4 processor; the Intel Core i7 processor logic is designed to be totally dynamic.

When the processor notices that the user is running only a single thread, the application gains access to all the compute resources, and they are shared only when the software and OS are running more than one thread on that physical core. The actual number of resources available to the threads has also increased (Figure 14) relative to the previous generation.

Figure 14. Simultaneous multi-threading improvements on the Intel Core i7 processor microarchitecture.

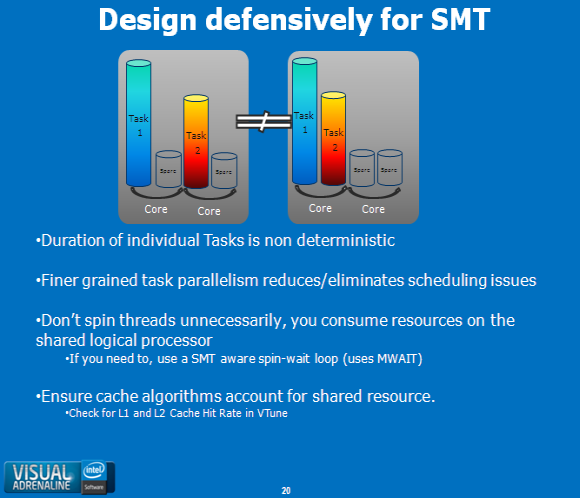

Figure 15. Programming for SMT can avoid performance penalties.

There are also some simple approaches to the application coding that will allow SMT to help performance or, at the very least, prevent potential problems (Figure 15) in situations where the work is unevenly distributed across logical and physical cores. The first fix is to break up the tasks into more granular pieces that can be shuffled between logical processors more often. This will effectively lower the likelihood that the program will end up with the two biggest tasks running on the same physical core.

Another issue arises in cases where a developer is trying to spin threads for scheduling purposes. A thread spinning on a logical processor uses execution resources that could be better utilized by the other thread on the core. The solution is to use SMT-aware methods such as an EnterCriticalSectionSpinwait, which can put the actual thread to sleep for 25 cycles before a recheck, or to use events to signal when a thread can actually run rather than relying on Sleep.

A similar effect can occur if a developer is using "sleep zero" to make a background task yield to the primary threads in an application. If there are fewer threads than logical processors on the system, the yield won't stop the background task from running because the OS thinks it has a completely free processor. The yield essentially gets ignored, and the single thread that was supposed to run only occasionally gets run over and over, causing a slowdown in performance for any thread sharing the same physical core. In this case more explicit synchronization is needed, such as a Windows event, to ensure threads get run only once and at an appropriate time.
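The idea of waking a background thread with an explicit signal instead of letting it poll with a zero-length sleep can be sketched portably as below. This uses standard C++ `std::condition_variable` as a stand-in for a Windows event object; the `Event` class and `run_once_when_signaled` function are hypothetical names for this example.

```cpp
#include <condition_variable>
#include <mutex>
#include <thread>

// Minimal auto-reset event built on a condition variable. A waiting thread
// blocks inside the OS and consumes no execution resources on the core.
class Event {
public:
    void signal() {
        {
            std::lock_guard<std::mutex> lock(m_);
            signaled_ = true;
        }
        cv_.notify_one();
    }
    void wait() {
        std::unique_lock<std::mutex> lock(m_);
        cv_.wait(lock, [this] { return signaled_; });
        signaled_ = false;  // reset so the next wait blocks again
    }
private:
    std::mutex m_;
    std::condition_variable cv_;
    bool signaled_ = false;
};

// The background task runs exactly once, when the main thread says so.
int run_once_when_signaled() {
    Event work_ready;
    int runs = 0;
    std::thread background([&] {
        work_ready.wait();
        ++runs;
    });
    work_ready.signal();
    background.join();
    return runs;
}
```

Because the flag is checked under the lock, the background thread still runs if the signal arrives before it starts waiting.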

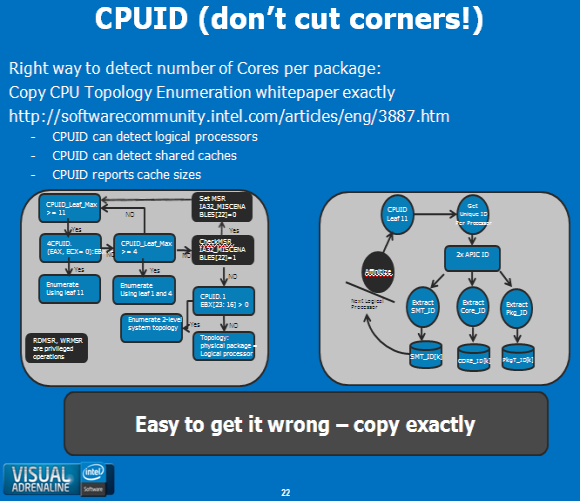

One seemingly straightforward way to program and plan for these different cache hierarchies and Intel HT Technology scenarios is to find the topology of the specific processor on the user's system and then tailor the algorithms to specifically target the exact hardware. The problem lies in exactly defining a processor topology even with knowledge of the CPUID (derived from CPU IDentification) specifications.

The use of CPUID has changed over time as additional leaves have been added. The incorrect but common way used in the past to calculate the number of available processors was to use CPUID leaf 4. This accidentally worked on previous architectures because it provided the maximum number of addressable IDs in the physical package, and on earlier hardware this was always the same as the actual number of processors. However, this assumption doesn't hold true on the Intel Core i7 processor.
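To show what that legacy calculation actually reads, the sketch below decodes the relevant field from the EAX value returned by CPUID leaf 4 (with ECX = 0). Bits 31:26 hold the maximum number of addressable core IDs in the package, minus one. The function name is hypothetical, and fetching the register value itself is compiler-specific and omitted here.

```cpp
#include <cstdint>

// Returns the maximum number of addressable core IDs in the physical package,
// as encoded in EAX[31:26] of CPUID leaf 4. This is an upper bound on the ID
// space, not a count of the cores actually present.
constexpr unsigned max_addressable_core_ids(std::uint32_t leaf4_eax) {
    return ((leaf4_eax >> 26) & 0x3Fu) + 1u;
}
```

For example, a hypothetical EAX value whose top six bits encode 7 yields 8 addressable IDs, regardless of how many cores the package really contains, which is why treating this number as a core count is unreliable.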

Figure 16. Testing for exact processor topology is very complex.

There are ways to make CPUID work to your advantage. However, they are extremely complex and involve a long multistep process (Figure 16). Several good white papers with concrete examples of CPUID detection algorithms can be found on Intel's Web site. Once in place, the CPUID information can tell you not only how many cores the user's processor has but also which cores share which caches. This opens up the possibility of tailoring the algorithms for specific architectures, though the performance and reliability of the applications can easily be downgraded if corners are cut during this process.

Even more complications arise when it comes to using thread affinitization in a game engine. Masks generated by topology enumeration can vary with the OS, OS versions (x64 versus x86), and even between service packs. This can make setting thread affinity a very risky thing to do in a program. The order in which the cores appear in a CPUID enumeration on the Intel Pentium 4 processor is completely different from how they appear on the Intel Core i7 processors, and the Intel Core i7 processor can show up in reverse order between OSs. The affinitization of the code will likely cause more headaches than prevent them if these differences aren't accounted for through careful enumeration and algorithm design.

Developers must also consider the entire software environment of the game and the OS; middleware within an application can create its own threads and may not provide an API that allows them to be scheduled well with the user's code. Graphics drivers also spawn their own threads that are assigned to processor cores by the OS, and thus the work that went into properly coding the affinity for the game threads can be polluted. Setting the affinity in the application is basically betting on short-term gains against a world of potential headaches down the development road.

If hard-coded thread affinity with incorrect CPUID information is used, the application performance will in fact get hit twice! In the worst case scenario, applications can fail to run on newer hardware because of the assumptions made on previous architectures. For gaming the advice is to avoid using thread affinity because it provides a short-term solution that can cause long-term headaches if things go wrong, far outweighing any gains. Instead, use processor hints such as SetIdealProcessor rather than a hard binding.

If a developer decides to go down this road, a safety feature should be built in allowing the user to disable affinitization if future processor architectures and hardware greatly impact the performance or stability, or better still allow an opt-in approach and ensure the application performs as well as possible without affinitization in its default configuration. If the software requires affinitization to run, there is probably a nasty bug waiting to happen on some combination of the hardware and software environments.

Each version of the Microsoft Windows* OS handles thread scheduling slightly differently; even Windows 7 has improvements over how Windows Vista* handles it. The scheduler is a priority-based, round-robin system that is designed to be fair to all tasks currently running on the system. The time allotment that Windows gives threads to execute before being pushed out is actually quite large, around 20-30 milliseconds, unless an event triggers a voluntary switch. For a game this is a very large span of time, potentially lasting more than a frame.

Given the coarse-grained OS scheduling, it's important for a game to correctly schedule its own threads to ensure tasks execute for an appropriate amount of time. Incorrect thread synchronization can mean that minor background or low-priority threads run far longer than expected, significantly affecting the game's critical threads. Unfortunately, too much synchronization can also be a bad thing because changing between threads will cost thousands of cycles.

Too many context switches between threads sharing data can lower performance. To avoid the performance penalty of context switching, it's beneficial to execute a short spin-lock on resources that are likely to be used again very soon. Of course, performance degradation will occur if the waits are too long, so monitoring thread locks closely is important.
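One way to picture the short spin described above is a lock that spins for a bounded number of attempts and then yields to the scheduler instead of spinning indefinitely. The class below is a minimal sketch in portable C++; the class name and the default spin limit of 1000 are assumptions chosen for illustration, not tuned values. On Windows, InitializeCriticalSectionAndSpinCount provides a similar spin-before-wait behavior.

```cpp
#include <atomic>
#include <thread>

// Illustrative hybrid lock: spin briefly, then yield the time slice.
class SpinThenYieldLock {
public:
    explicit SpinThenYieldLock(int spin_limit = 1000) : spin_limit_(spin_limit) {}

    void lock() {
        int spins = 0;
        // exchange returns the previous value: true means another thread holds the lock.
        while (locked_.exchange(true, std::memory_order_acquire)) {
            if (++spins >= spin_limit_) {
                // The wait is no longer "short": stop burning cycles and let
                // another thread run before trying again.
                std::this_thread::yield();
                spins = 0;
            }
        }
    }

    // Single attempt; returns true if the lock was acquired.
    bool try_lock() {
        return !locked_.exchange(true, std::memory_order_acquire);
    }

    void unlock() { locked_.store(false, std::memory_order_release); }

private:
    std::atomic<bool> locked_{false};
    int spin_limit_;
};
```

The class exposes lock, try_lock and unlock, so it can be used with std::lock_guard like any other mutex type. The spin limit is the knob to watch: if profiling shows threads regularly reaching the yield path, the protected section is probably too long for spinning to pay off.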

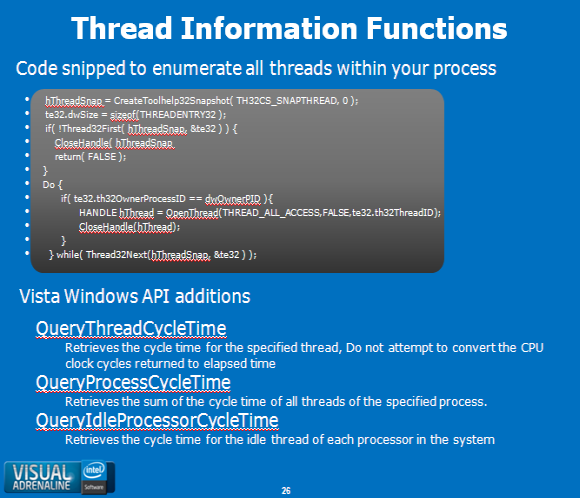

Figure 17. Self-monitoring the threads allows easy location of performance bottlenecks.

Figure 17 shows a way to enumerate the threads created by the application and by the middleware it uses. Windows Vista introduced a few new API additions that, once the threads are enumerated, can tell the developer how much time a thread has spent on a particular core, how long a particular thread has been running, and so on. With this information, it would be easy to build an on-screen diagnostic breakdown of threading performance for developers or even end users.

Two interesting technologies come to mind when thinking about the future of multi-threading, microarchitectures and gaming. The next "tock," the Sandy Bridge architecture, will introduce the Intel Advanced Vector Extensions (Intel AVX). Expanding on the 128-bit instructions in Intel SSE4 today, Intel AVX will offer 256-bit instructions that are drop-in replacements for current 128-bit SSE instructions. In much the same way as current processors that support 64-bit registers can run 32-bit code by using half the register space, AVX-capable processors will support Intel SSE instructions by using the lower half of each register.

Vectors will run eight-wide rather than the four-wide that architectures can handle today, thus increasing the potential for data-level parallelism and providing improvements for gaming performance. Sandy Bridge will also provide higher core counts and better memory performance, allowing for more threading at a fine-grained, task-based level.
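The four-wide and eight-wide figures follow from dividing the register width by the element width: 128 / 32 = 4 and 256 / 32 = 8 single-precision floats. The sketch below works through that arithmetic and shows a loop written against a lane count rather than a fixed width. It is plain C++ with no intrinsics; the function names are hypothetical, the inner loop merely stands in for one vector operation, and whether a compiler actually vectorizes it is not guaranteed.

```cpp
#include <cstddef>

// Number of elements that fit in one vector register.
constexpr std::size_t lanes_per_register(std::size_t register_bits,
                                         std::size_t element_bits) {
    return register_bits / element_bits;
}

// Adds b into a in blocks of Lanes elements, then handles the leftover
// elements one at a time. Lanes is a template parameter so the same code
// can be instantiated for a four-wide or an eight-wide target.
template <std::size_t Lanes>
void add_in_blocks(float* a, const float* b, std::size_t count) {
    std::size_t i = 0;
    for (; i + Lanes <= count; i += Lanes) {
        // Stand-in for a single vector add over Lanes elements.
        for (std::size_t lane = 0; lane < Lanes; ++lane) {
            a[i + lane] += b[i + lane];
        }
    }
    for (; i < count; ++i) {
        a[i] += b[i];  // scalar remainder
    }
}
```

Keeping the width as a parameter means data layouts and loops do not need to be redesigned when the vector width doubles; only the instantiation changes.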

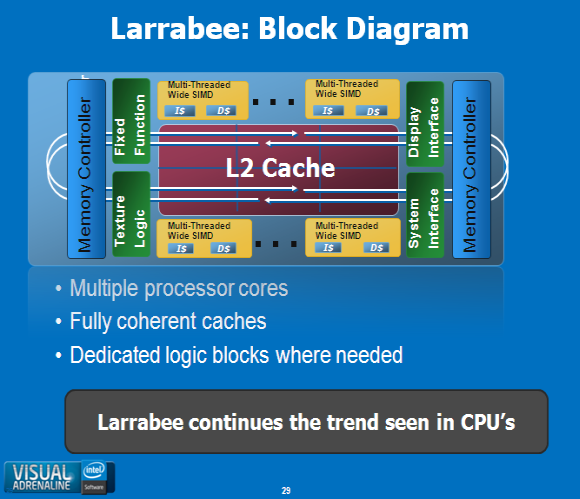

Figure 18. Larrabee will bring processor-type architectures to the graphics community.

The multi-core Larrabee architecture (Figure 18) is the other upcoming technology of interest. Though designated as a GPU, the design basically takes the current multi-core processor to the next level. Everything mentioned in regard to threading performance, programming models, and affinity will likely apply to this architecture, though scaled to a much higher core count. Developers planning for the future should consider how to scale beyond the Intel Core i7 processor and even Sandy Bridge, and look at Larrabee in terms of cache and communication between threads.

The desktop processor is evolving at a rapid rate, and although the performance enhancements are offering developers a huge opportunity to improve realism and fidelity, they have also created new problems requiring different programming models. Developers can no longer make the assumption that the code they write today will automatically run better on next year's hardware. Instead they need to plan for upcoming hardware architectures and write code that easily adapts to the changing processor environments, especially in regard to the number of available threads.
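A small example of adapting to the number of available threads is to size a worker pool from what the machine reports at run time instead of hard-coding a count. The sketch below uses the standard std::thread::hardware_concurrency call; the function names and the choice to reserve one thread for the main game loop are assumptions made for illustration.

```cpp
#include <thread>

// Picks a worker count from the reported number of hardware threads,
// keeping some threads free for other work (for example the main loop).
// Always returns at least 1.
unsigned choose_worker_count(unsigned hardware_threads, unsigned reserved_threads) {
    // hardware_concurrency() is allowed to return 0 when the value is unknown.
    if (hardware_threads == 0) {
        return 1;
    }
    if (hardware_threads <= reserved_threads) {
        return 1;
    }
    return hardware_threads - reserved_threads;
}

// Convenience wrapper that queries the current machine.
unsigned default_worker_count() {
    return choose_worker_count(std::thread::hardware_concurrency(), 1);
}
```

With this policy, a machine reporting 8 hardware threads gets 7 workers and a dual-core machine gets 1, so the same build scales across both without a code change.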

One side effect of the evolving hardware is the increasing importance of performance testing. Using the correct tools and developing a structured performance testing suite with defined workloads is critical to making sure an application performs its best on as wide a range of hardware as possible.

Testing early in a game's design cycle on a variety of hardware is the best possible way to ensure design decisions offer the maximum long-term performance. Many of the issues outlined in this article can be identified using tools such as the Intel VTune Performance Analyzer, which can monitor cache behavior and the instruction throughput in a particular thread; in addition, there are tools such as the Intel Thread Profiler designed to measure thread concurrency.

Optimization guidelines for the processors mentioned in the article as well as other Intel processors can be found at http://www.intel.com/products/processor/manuals/.
