Part 2 in a 5-part series analyzing the results of the Game Outcomes Project survey, which polled hundreds of game developers to determine how teamwork, culture, leadership, production, and project management contribute to game project success or failure.

This article is the second in a 5-part series.

Part 1: The Best and the Rest is available here: (Gamasutra) (BlogSpot) (in Chinese)

Part 2: Building Effective Teams is also available here: (Gamasutra) (BlogSpot) (in Chinese)

Part 3: Game Development Factors is available here: (Gamasutra) (BlogSpot) (in Chinese)

Part 4: Crunch Makes Games Worse is available here: (Gamasutra) (BlogSpot) (in Chinese)

Part 5: What Great Teams Do is available here: (Gamasutra) (in Chinese)

For extended notes on our survey methodology, see our Methodology blog page.

Our raw survey data (minus confidential info) is now available here if you'd like to verify our results or perform your own analysis.

The Game Outcomes Project team includes Paul Tozour, David Wegbreit, Lucien Parsons, Zhenghua “Z” Yang, NDark Teng, Eric Byron, Julianna Pillemer, Ben Weber, and Karen Buro.

The Game Outcomes Project, Part 2: Building Effective Teams

As developers, we all spend many years and unfathomable amounts of effort building game projects, from tiny indie teams to massive, sprawling, decades-long development efforts with teams of dozens to hundreds of developers. And yet, we have no good way of knowing with certainty which of our efforts will pay off.

In a certain sense, game development is like poker: there’s an unavoidable element of chance. Sometimes, tasks take far longer than expected, technology breaks down, and design decisions that seemed brilliant on paper just don’t work out in practice. Sometimes external events affect the outcome – your parent company shutters without warning, or your promised marketing budget disappears. And sometimes, fickle consumer tastes blow one way or the other, and an unknown indie developer can suddenly find that the app he made in two days reached the top of the app charts overnight.

But good poker players accept that risk is part of the game, and there are no guaranteed outcomes. Smart poker players don’t beat themselves up over a risk that didn’t work out; they know that a good decision remains a good decision even if luck interfered with the outcome of one particular hand.

But some teams clearly know how to give themselves better odds. They seem consistently able to craft world-class games, grow their audience, and improve their chances year after year.

It’s an oft-cited statistic that the best programmers are 10 times as productive as mediocre ones. It seems obvious to anyone who has worked on more than a few teams that there must be similarly broad differences between teams (especially if these teams include the 10x-more-productive programmers!).

What makes the best teams so much better than the rest? If we could find out what makes some teams consistently able to execute more successfully, that would be extraordinarily useful, wouldn't it?

And most importantly: are we, as an industry, actually trying to figure that out?

Are we even asking the right questions?

The Game Outcomes Project was a systematic, large-scale study designed to deduce the factors that make the most effective game development teams different from the rest. We ran a large-scale anonymous survey in October and November of 2014 and collected responses from several hundred developers. We described the background of the survey in our first article and explained the technical details of our approach on the Methodology blog page.

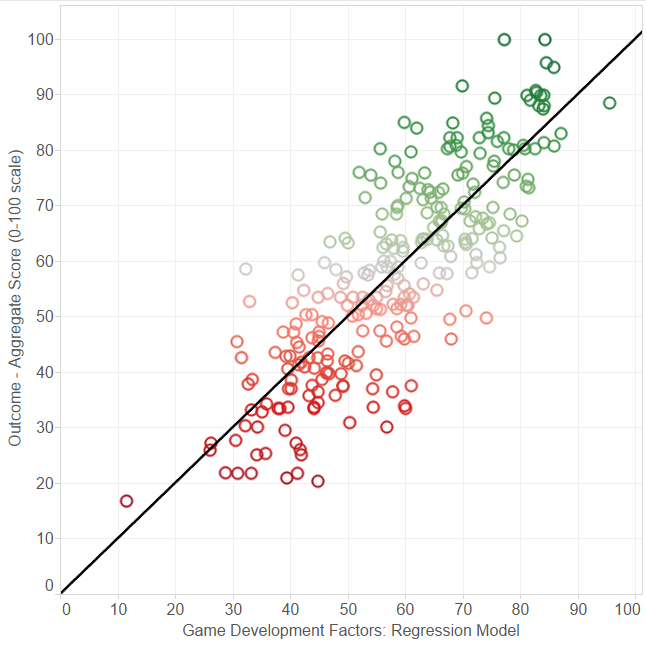

Take a look at Figure 1 below, which illustrates some of our results.

Figure 1. Correlation of our game development linear regression model (horizontal axis) with aggregate project outcome score (vertical axis). 273 data points are shown.

This scatter plot shows the correlation of the predictive model we created (based on roughly 30 factors) with the aggregate game development project outcome score we described in Part 1. The model has a very strong correlation of 0.82 and a p-value below 0.0001 (which we interpret as strong evidence against the assumption that the model's predictions and the outcome scores are unrelated).

In other words, each point represents one game development project reported by one of our survey respondents. The vertical axis is how successful each game was, and the horizontal axis is how successful our model predicts each game would be.

What this chart tells us is that the teams in the upper-right (green) are doing things very differently from the teams in the lower left (red), and we can all benefit from learning what those differences are.
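For readers who want to run this kind of check on the published raw data, here is a minimal sketch of how a Pearson correlation and its test statistic can be computed. The function names and the toy numbers are illustrative assumptions, not the project's actual analysis code; only the values 0.82 and 273 come from Figure 1.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 3:
        raise ValueError("need two equal-length sequences with at least 3 points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

def t_statistic(r, n):
    """t statistic for testing r against a null hypothesis of zero correlation."""
    return r * math.sqrt((n - 2) / (1 - r * r))

# Hypothetical toy data, NOT taken from the survey.
predicted = [1.0, 2.0, 3.0, 4.0, 5.0]
actual = [1.2, 1.9, 3.4, 3.8, 5.1]
r = pearson_r(predicted, actual)

# Values reported for Figure 1: r = 0.82 over 273 data points.
t = t_statistic(0.82, 273)
```

With 271 degrees of freedom, a t statistic in the low twenties corresponds to a p-value far below 0.0001, which is consistent with the significance reported for Figure 1.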

Here are four more charts, shown as an animated GIF on a 4-second timer. Only the vertical axis changes in each case.

Figure 2. Correlation of the predictive model (horizontal axis) with each of the 4 outcomes from our survey: return on investment, aggregate review/MetaCritic scores, project delays, and internal satisfaction. This is an animated GIF with a 4-second interval. Color (from red to green) is tied to each individual outcome, and the points in between the rows are interpolated values for missing data points.

This is the same model as in Figure 1, mapped against the four individual outcomes:

internal team satisfaction with the project (correlation 0.47)

timeliness / severity of project delays (correlation 0.55)

return on investment (correlation 0.52)

aggregate reviews/MetaCritic scores (correlation 0.69)

(All of these correlations are statistically significant, with p-values below 0.0001.)

As with a poker game, there is an unavoidable element of risk involved in game development. But learning better strategies will raise our game and improve our odds of a better outcome. If we move ourselves further to the right side of the correlation graph above, that should also improve our odds of moving upwards, i.e., experiencing better project outcomes.

Most of us on the Game Outcomes Project team are game developers ourselves. We initiated this study because we wanted to learn the factors that will allow us to make better games, help YOU make better games, and allow all of us to be happier and more productive while doing it.

If you want to find out what those factors are, keep reading.

Hackman’s Model: Setting the Stage

The core of our survey was based around three separate models of team effectiveness, each of which was derived from one of the three bestselling books on team effectiveness shown below.

The first model was built from J. Richard Hackman’s Leading Teams: Setting the Stage for Great Performances. This book describes a model for enhancing team effectiveness built around five key enabling conditions that allow a team to function optimally, drawing on extensive research and validated management science. This approach is unique in that rather than seeing leaders and managers as drivers of team success, it views leaders as facilitators, setting up the proper conditions to maximize the team’s effectiveness.

Briefly, Hackman’s enabling conditions are:

It must be a REAL TEAM. The task must be appropriate for a team to work on; the members must be interdependent in task processes and goals; there must be clear boundaries in terms of who IS and who is NOT on the team; team members must have clear authority to manage their own work processes and take charge of their own tasks; there must be stable membership over time, with minimal turnover; and the team's composition must be based on a combination of technical skills, teamwork skills, external connections, team size, functional and cultural diversity, and experience.

Compelling Direction. There must be a motivating goal or important objective that directs attention, energizes and sustains effort, and encourages development of new strategies.

Enabling Structure. Tasks, roles, and responsibilities must be clearly specified and designed for individual members.

Supportive Context. The team needs a shared belief that it's safe to take interpersonal risks (“psychological safety”), which includes a deep level of team trust that leads to a willingness to regularly point out errors, admit mistakes, and warn of potential problems or risks. The team also needs incentives encouraging desirable behaviors and discouraging undesirable behaviors, ample feedback and data to inform team members toward improving their work, and the tools and affordances to get their jobs done.

Expert Coaching. The team needs access to different types of mentors outside the team boundaries helping members perform tasks more effectively. This includes motivators (individuals who enhance effort and minimize social loafing), consultants (individuals who improve performance strategy, avoid mindless adoption of routines, and make sure work matches task requirements) and educators (people who can enhance knowledge and skill).

In our developer survey, we attempted to capture each of these broad categories with a set of several questions. Due to space constraints in the survey, we had to limit ourselves to 2-4 questions for each of Hackman’s five enabling conditions.

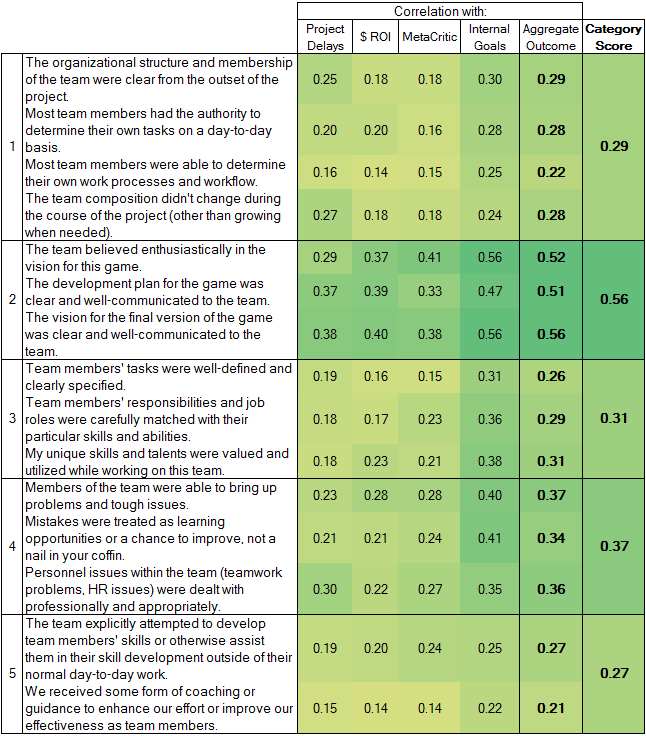

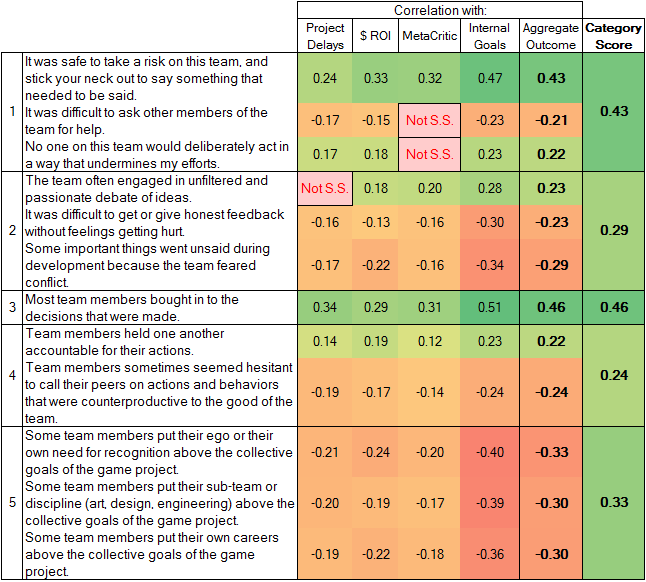

The results are in Figure 3 below. The columns at right show the correlation of more positive answers to each of these questions with our four individual outcome questions at the end of the survey (“delays,” “ROI,” “MetaCritic,” and “Internal”), as well as our aggregate outcome score (“Aggregate Outcome”). The “Category” column lists the highest aggregate-outcome correlation among the questions in each category as the overall value of that category.

Figure 3. The Hackman model results, showing the correlation of each question in the model's 5 categories with each outcome question and the aggregate outcome score. The "Category Score" is the highest aggregate-outcome correlation among the questions in a category.

These results are simply staggering. Every single question correlates significantly with game project outcomes. The second category in particular – designed around Hackman’s “compelling direction” enabling condition – has a correlation over 0.5 for every single question! This tells us in no uncertain terms that project leaders would be well-advised to achieve clarity around the product vision, communicate it clearly to the team, and seek buy-in from all team members when beginning a new project, and be very careful about subsequent shifts in direction that might alienate their team.

(Note also that all of these correlations have p-values well under 0.01, indicating a clear statistical significance. For the remainder of these articles, all correlations can be assumed to have statistical p-values below our significance threshold of 0.05, and we use the text "Not S.S." to clearly indicate all cases where they do not.)

Compare these results to article 1, where we often struggled to find any correlation between the questions we listed and project outcomes. Even our question about production methodologies showed no meaningful correlation.

Lencioni’s Model: The Five Dysfunctions

The second team effectiveness model was based on Patrick Lencioni’s famous management book The Five Dysfunctions of a Team: A Leadership Fable. Unlike Hackman’s model, this model is stated in terms of what can go wrong on a team, and is described in terms of five specific team dysfunctions, which progress from one stage to the next like a disease as teams grow increasingly dysfunctional.

Of course, there’s no substitute for reading the book, but briefly, we can paraphrase the factors as follows:

Absence of Trust. The fear of being vulnerable with team members prevents the building of trust within the team. (Note the uncanny resemblance of this factor to “psychological safety” described in part 4 of Hackman’s model: they are almost identical).

Fear of Conflict. The absence of trust and the desire to preserve artificial harmony stifle the occurrence of productive conflict on the team.

Lack of Commitment. The fear of conflict described above makes team members less willing to buy in wholeheartedly to the decisions that are made, or to make decisions that they can commit to.

Avoidance of Accountability. The factors above – especially the lack of commitment and fear of conflict – prevent team members from holding one another accountable.

Inattention to Results. Without accountability, team members ignore the actual outcomes of their efforts, and focus instead on individual goals and personal status at the expense of collective success.

As with Hackman’s model, we composed 1-3 questions for each of these factors to try to determine how well this popular management book actually correlated with project outcomes.

Figure 4. Correlations for the Five Dysfunctions model. “Not S.S.” stands for “not statistically significant,” meaning we cannot infer any correlations for those relationships.

Note that there is a lot of red in the chart above, and many negative correlations. This is to be expected, and it’s not a bad thing. Most of the questions are asked in a negative frame (for example, “It was difficult to ask other members of this team for help”), so a negative correlation indicates that respondents who agreed more strongly with the statement reported worse project outcomes overall, which is exactly what you would expect.

What’s important is the absolute value of each of the correlations above. The greater the absolute value, the greater the correlation, whether positive or negative. And in every single case above, the sign of the correlation perfectly matches the frame with which the question was asked, and matches what the Five Dysfunctions model says you should expect.

In the Category column at the right, we looked only at the highest absolute value in the "aggregate outcomes" category, and these scores tell us that these five factors are roughly equal to Hackman’s model in terms of their correlations with game project outcomes.
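The category score described above can be sketched in a few lines. The function name `category_score` and the correlation values below are hypothetical placeholders for illustration, not figures from the survey tables.

```python
def category_score(aggregate_correlations):
    """Return the correlation with the largest absolute value, keeping its sign."""
    if not aggregate_correlations:
        raise ValueError("category has no questions")
    return max(aggregate_correlations, key=abs)

# Hypothetical aggregate-outcome correlations for the questions in one category.
negatively_framed = [-0.31, -0.47, -0.22]
positively_framed = [0.29, 0.41]

strongest_negative = category_score(negatively_framed)
strongest_positive = category_score(positively_framed)
```

For a category whose questions are all positively framed, as in the Hackman results, this reduces to taking the plain maximum.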

This is another remarkable result. Clearly, a separate and quite different model of team effectiveness has shown a remarkable correlation with game project outcomes.

There are a few cases where the correlation is weak or not statistically significant (with p-values over 0.05). Two of the questions in the first category show no relationship with the game’s critical reception / MetaCritic scores, for reasons that are not clear. Also, engaging in unfiltered and passionate debate shows no statistically significant relationship with project delays specifically. We speculate that while unfiltered and passionate debate has an overall positive effect on the game’s quality, the debate itself can be time-consuming. It helps the team identify ways to improve product quality, but it sometimes leads them to trade schedule for quality, which counteracts any positive effect it would otherwise have had on the schedule.

It seems clear at this point that both of these models are incredibly useful. It’s particularly nice that while Hackman’s model looks at the enabling factors and supportive context surrounding a team, Lencioni’s model looks more closely at the internal team dynamics, giving us two complementary and equally effective models for analyzing team effectiveness.

A Closer Look at Correlation

But what do these correlations actually mean? How powerful is a “0.5 correlation?”

For the sake of comparison, in Part 1, we found that a team’s average level of development experience has a correlation right around 0.2, so it’s not unreasonable to assume that any factor above 0.2 is more important than experience.
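One rough way to read these numbers, offered as a general statistical rule of thumb rather than anything computed in the survey analysis: for a simple linear relationship, the square of the correlation coefficient gives the share of variance in one variable that is linearly associated with the other.

```latex
\begin{align*}
r = 0.2  &\;\Rightarrow\; r^2 = 0.04 \\
r = 0.5  &\;\Rightarrow\; r^2 = 0.25 \\
r = 0.82 &\;\Rightarrow\; r^2 \approx 0.67
\end{align*}
```

On that reading, a 0.5 correlation corresponds to roughly six times as much shared variance as a 0.2 correlation, although shared variance by itself still says nothing about which way any causation runs.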

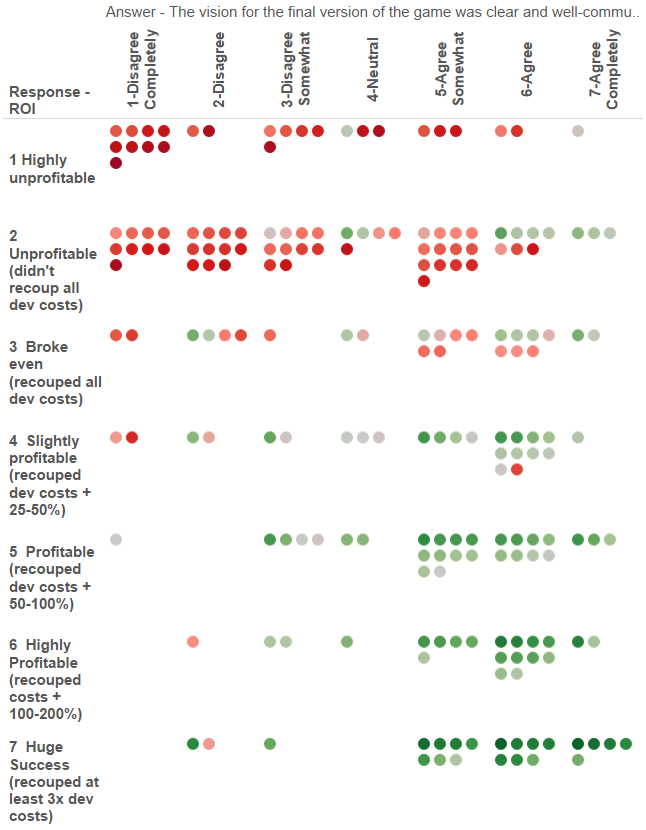

Let’s put it in context with a few graphs. We’ll take one of the highest-scoring questions from our section on Hackman’s model above and graph it against the answers to the question about the game’s return on investment (since some might argue that our aggregate outcome score is more subjective). We’ll pick the question “The vision for the final version of the game was clear and well-communicated to the team,” with a 0.4 correlation with ROI (although its correlation with our aggregate score is even higher, at 0.56). For clarity, we’ve colored each entry with its corresponding composite game outcome score, where green indicates a higher aggregate score and red indicates a lower aggregate score.

Figure 5. "The vision for the final version of the game was clear and well-communicated to the team" (horizontal axis), graphed against the return on investment question (vertical axis). Each dot represents one survey response. Each dot's color indicates that game's aggregate game outcome score. 202 data points are shown.

Notice a pattern? The green is all along the bottom right, the upper-left is all red, and there’s a mix in the top right. 19 out of the 22 respondents in the “Huge Success” category (86%) answered somewhere between “Agree Somewhat” and “Agree Completely,” with similar responses in the “Highly Profitable” category. In “Unprofitable,” however, 30 of 58, or 52%, answered between “Disagree Somewhat” and “Disagree Completely.”

More than anything, the emptiness of the lower-left quadrant is quite revealing – this is the quadrant where we would expect to see teams that somehow achieved a reasonable ROI without a clear and well-communicated vision, but there are almost none.

This seems to be telling us something: teams with a clear vision don’t always succeed, but teams without a clear vision almost always fail.

In other words, you can’t guarantee success, but you can certainly guarantee failure.
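The percentages quoted for Figure 5 are simple within-category shares. A minimal sketch of that arithmetic follows, using only the counts quoted in the text; the helper name `share` is an illustrative assumption.

```python
def share(count, total):
    """Return count/total as a whole-number percentage."""
    if total <= 0:
        raise ValueError("total must be positive")
    return round(100 * count / total)

# Counts quoted in the text for Figure 5.
huge_success_agree = share(19, 22)     # "Huge Success" respondents on the agree side
unprofitable_disagree = share(30, 58)  # "Unprofitable" respondents on the disagree side
```

Running the same calculation on each ROI category of the raw data would show how the share of "agree" answers changes from one category to the next.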

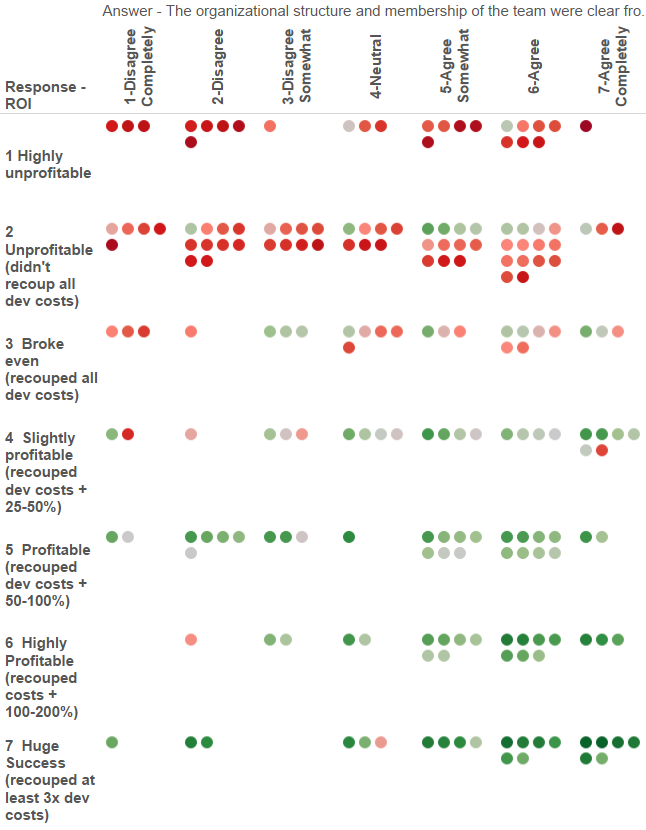

We would see a similar pattern with any other question we had chosen. All of them get similar results. Here’s another example, looking at the results for the question “The organizational structure and membership of the team were clear from the outset of the project.” This has a significantly lower correlation of 0.18 with ROI (and 0.29 with the aggregate outcome), but the pattern is still clear.

Figure 6. Clarity of organizational structure (horizontal axis) graphed against ROI (vertical axis), with each dot's color indicating the aggregate game outcome score for that project.

Correlation is not causation, and it’s impossible to prove that these factors directly caused these differences in outcomes. In particular, one could object that some other, “hidden” factors caused the differences.

Playing devil’s advocate, one could argue that perhaps the more effective teams just hired better people. Better employees could have then caused both the differences in the team character (as revealed by the questions above) and the improved game outcomes, without the two having anything to do with one another.

We won’t try to rigorously debunk this theory (although our raw data is available to anyone who wants to study it). However, when we look only at individual tiers of team experience level – say, only the most experienced teams, or only the least experienced – all of these results still hold, which we would not expect to happen if it all came down to the skill levels of the individual team members.
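A tier-by-tier check of this kind can be sketched as follows. This is an illustration under stated assumptions, not the analysis code used for the survey: the rows, the tier labels, and the 1-7 answer coding are all hypothetical.

```python
import math
from collections import defaultdict

def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)

def correlation_by_tier(rows):
    """Group (tier, answer, outcome) rows by tier and correlate within each group."""
    groups = defaultdict(lambda: ([], []))
    for tier, answer, outcome in rows:
        groups[tier][0].append(answer)
        groups[tier][1].append(outcome)
    return {tier: pearson_r(xs, ys) for tier, (xs, ys) in groups.items()}

# Hypothetical rows: (experience tier, answer coded 1-7, outcome score).
rows = [
    ("junior", 2, 30), ("junior", 4, 45), ("junior", 6, 50), ("junior", 7, 70),
    ("senior", 1, 40), ("senior", 3, 55), ("senior", 5, 60), ("senior", 7, 85),
]
per_tier = correlation_by_tier(rows)
```

If a relationship were driven entirely by differences in experience between teams, the within-tier correlations would be expected to shrink toward zero; correlations that stay clearly positive inside each tier point away from that explanation.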

Finally, let’s take a look at our third model and see how it fared.

Gallup’s Model: ‘12’

The third and final team effectiveness model was based on Wagner & Harter’s book 12: The Elements of Great Managing, which is derived from aggregate Gallup data from 10 million employee and manager interviews. This model, as you may have guessed from the name, is based on 12 factors:

Knowing What’s Expected

Materials & Equipment

I Have the Opportunity to Do What I Do Best

Recognition and Praise

Someone at Work Cares About Me as a Person

Someone at Work Encourages My Development

My Opinions Seem to Count

I Have a Connection With the Mission of the Company

My Co-Workers are Committed to Doing Quality Work

I Have a Best Friend at Work

Regular, Powerful, Insightful Feedback

Opportunities to Learn and Grow

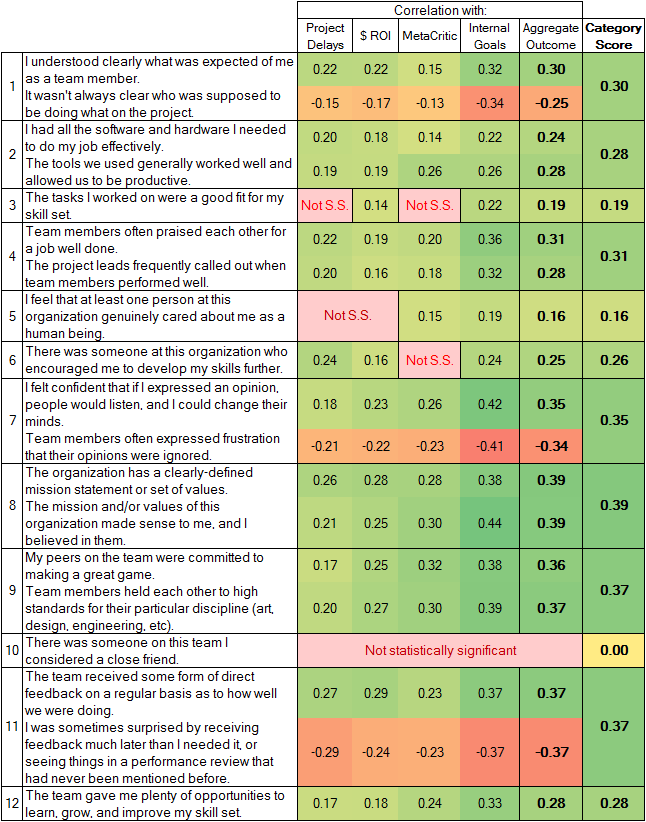

As this model has a larger list of factors than the other two, we limited ourselves to one or two questions per factor for fear of making the survey too long. Our correlations were as follows:

Figure 7. Correlations for the Gallup model, based on the book "12" by Wagner & Harter.

These findings are somewhat more mixed than the Hackman and Lencioni models. Factor 10 – having a best friend at work – showed no statistical significance, and appears to be irrelevant. Factors 3 and 5 – whether the tasks were a good fit for one’s skill set, and whether one person on the team cared about the respondent as a person – both showed relatively weak positive correlations, almost at the point of making them irrelevant, and each showed no statistical significance with 2 of the 4 outcome factors. Factor 6 was also relatively weak, with a modest correlation and no statistical significance when correlated with critical reception / MetaCritic scores.

However, several of the other factors – especially 7, 8, 9, and 11 – showed remarkably strong correlations with project outcomes. Moreover, nearly all of these factors are fundamentally orthogonal to the factors in Hackman and Lencioni’s models. Clearly, listening to team members' opinions, having a connection with the mission of the company, a shared commitment to doing quality work, and regular, powerful, insightful feedback are a big part of what separates the best dev teams from the rest. Factors 1, 2, 4, and 12 also showed healthy and statistically significant correlations.

The very strong correlation of the 8th category – a connection with the mission of the company – is particularly noteworthy. The correlation of this category is the strongest of all the Gallup questions, and it’s distinct from anything in the Hackman and Lencioni models.

There are many organizations that have no clearly-defined mission statement or values statement, or that don’t actually act in accordance with their own stated values. So it’s very easy to be cynical about values and write them off as a pointless corporate flag-waving exercise that doesn’t actually relate to anything.

But our results show clearly that organizational values have a substantial impact on outcomes, and organizations that think deeply about their values, take them seriously, and carefully work to ingrain them into their culture seem to have a measurable advantage over those that do not. A few hugely successful companies like Valve and Supercell are noted for taking culture unusually seriously and viewing it as the centerpiece of their business strategy, and both have their own unique and very well-defined approaches to defining and organizing their internal culture.

Our results suggest that this is no coincidence.

We see the Gallup results as not only a partial validation of the Gallup model, but also a validation of the ability of our survey and our analytical approach to pinpoint which factors are not actually relevant to game project outcomes.

Conclusions

When you look at an individual game project, it can be difficult to see what made it a success or a failure. It’s all too easy to jump to conclusions about the way a single project turned out, and give all the credit or blame for the outcome to its design, its leadership, its business model or unique market environment, the team’s many hours of crunch, or the game’s technology.

Put many projects side-by-side, however, and a dramatically different picture emerges.

Although there's an unavoidable element of risk, as with our poker player example, and external factors (such as marketing budgets) certainly matter, the overwhelming conclusion is that the outcome is in your hands. Teams have control over the overwhelming majority of their own destiny.

Our results lead us to the inescapable conclusion that most of what separates the most effective teams from the least effective is the careful and intentional cultivation of effective teamwork, and this has an absolutely overwhelming impact on a game project’s outcome. The factors described in this article, along with those we will describe in our next article, are sufficient to describe a 0.82 correlation with aggregate outcome scores, as shown in Figure 1.

The famed management theorist Peter Drucker is widely credited with the quote, “culture eats strategy for breakfast.” This is generally interpreted to mean that as much as we may believe that our strategies – our business models, our technologies, our game designs, and so on – give us an edge, the lion’s share of an organization’s destiny, and its actual ability to fulfill its strategy, are determined by its culture.

Team trust and “psychological safety” lay the groundwork to allow team members to openly point out problems, admit mistakes, and warn of impending problems and take corrective action. An embrace of creative conflict, a compelling direction that includes commitment to a clearly-defined shared vision, acceptance of accountability, the availability of the necessary enabling structure, the availability of expert coaching, a connection with the mission of the company, a belief that your opinions count and your colleagues are committed to doing quality work, and regular, powerful, insightful feedback all allow teams to work together more effectively. Put together, all of these make an enormous part of the difference between successful teams and failed ones.

It’s tempting to say that the secret sauce is teamwork – but this would be a trivialization of what is actually a far more subtle issue.

The actual secret sauce seems to be a culture that continually and deliberately cultivates and enables good teamwork, gives it all the support it needs to flourish, and carefully and diligently diagnoses it and fixes it when it begins to go astray.

If you take anything from the Game Outcomes Project, take this: the clearest finding from our survey is that culture is by far the greatest contributor to differences in project outcomes.

As developers, we spend an enormous amount of effort optimizing our code and our data. There’s no reason we shouldn’t put an equivalent amount of effort into optimizing our teams.

We encourage developers who may be interested in further reading on the subject to pick up the books that inspired our models and learn directly from the source.

In Part 3, we examine a raft of additional factors our team investigated that are specific to game development, including design risk management, crunch / overtime, project planning, respect, team focus, technical risk management, outsourcing, communication, and the organization’s perceptions of failure.

In Part 4, we offer a detailed analysis on the topic of crunch specifically, and we show that the data makes a surprisingly strong case on this topic.

Finally, we summarize our findings in Part 5.

The Game Outcomes Project team would like to thank the hundreds of current and former game developers who made this study possible through their participation in the survey. We would also like to thank IGDA Production SIG members Clinton Keith and Chuck Hoover for their assistance with survey design; Kate Edwards, Tristin Hightower, and the IGDA for assistance with promotion; and Christian Nutt and the Gamasutra editorial team for their assistance in promoting the survey.

For further announcements regarding our project, follow us on Twitter at @GameOutcomes
