
While 3D interfaces are just taking off for consumers, years of research have been poured into the field. Dr. Joe LaViola of the University of Central Florida shares detailed academic findings about building useful and fun ways to interact with games.

April 22, 2010

Author: Joseph LaViola Jr.


Over the last few years, we have seen motion controller technology take the video game industry by storm. The Sony EyeToy and the Nintendo Wii have shown that game interfaces can go beyond the traditional keyboard and mouse or game controller to create more realistic, immersive, and natural gameplay mechanics.

In fact, in the next few months, with the Sony Move, Microsoft's Natal, and Sixense's TrueMotion, every major console and the PC will have input peripherals that support 3D spatial interaction. This paradigm shift in interaction technology holds great potential for game developers to create game interfaces and strategies never before thought possible. These interfaces bring players closer to the action and afford more immersive user experiences.

Although these devices and this style of interaction seem new, they have actually existed in the academic community for some time. In fact, the fields of virtual reality and 3D user interfaces have been exploring these interaction styles for the last 20 years.

As an academic working in this field since the late '90s, I have seen a significant amount of research done in developing new and innovative 3D spatial interface techniques that are now starting to be applicable to an application domain that can reach millions of people each year.

As a field, we have been searching for the killer app for years, and I believe we have finally found one with video games.

I believe that the game industry needs to take a good look at what the academic community has been doing in this area. There is a plethora of knowledge that the virtual reality and 3D user interface communities have developed over the years that is directly applicable to game developers today.

Although it is important for game developers to continue to develop innovative interaction techniques to take advantage of the latest motion sensing input devices, it is also important that they not have to reinvent the wheel.

Thus, the purpose of this article is to provide a high-level overview of some of the 3D spatial interaction techniques that have been developed in academia over the last 10 to 15 years. It is my hope that game developers will use this article as a starting point to the rich body of work the virtual reality and 3D user interface communities have built.

As part of this article, I am also providing a short reading list that has more detailed information about research in 3D spatial interfaces as well as their use in video games.

What is a 3D spatial interaction anyway? As a starting point, we can say that a 3D user interface (or 3D spatial interface) is a user interface involving human-computer interaction in which the user's tasks are carried out in a 3D spatial context, using either 3D input devices or 2D input devices with direct mappings to 3D. In other words, 3D UIs involve input devices and interaction techniques for effectively controlling highly dynamic 3D computer-generated content, and video games are no exception.

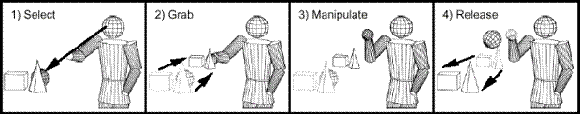

There are essentially four basic 3D interaction tasks found in most complex 3D applications: navigation, selection, manipulation, and system control. (There is actually a fifth task, symbolic input, which is the ability to enter alphanumeric characters in a 3D environment, but we will not discuss it here.) Obviously, there are other tasks that are specific to an application domain, but these basic building blocks can often be combined to let users perform more complex tasks.

Navigation is the most common virtual environment (VE) task, and it consists of two components. Travel is the motor component of navigation and simply refers to physical movement from place to place. Wayfinding is the cognitive or decision-making component of navigation, and it asks the questions, "where am I?", "where do I want to go?", "how do I get there?", and so on.

Selection is simply the specification of an object or a set of objects for some purpose.

Manipulation refers to the specification of object properties (most often position and orientation, but also other attributes). Selection and manipulation are often used together, but selection may be a stand-alone task. For example, the user may select an object in order to apply a command such as "delete" to that object.

System control is the task of changing the system state or the mode of interaction. This is usually done with some type of command to the system (either explicit or implicit). Examples in 2D systems include menus and command-line interfaces. It is often the case that a system control technique is composed of the other three tasks (e.g. a menu command involves selection), but it's also useful to consider it separately since special techniques have been developed for it and it is quite common.

There are two contrasting themes that are common when thinking about 3D spatial interfaces: the real and the magical. The real theme or style tries to bring real world interaction into the 3D environment. Thus, the goal is to try to mimic physical world interactions in the virtual world.

Examples include direct manipulation interfaces, such as swinging a golf club or baseball bat or using the hand to pick up virtual objects. The magical theme or style goes beyond the real world into the realm of fantasy and science fiction. Magical techniques are only limited by the imagination and examples include spell casting, flying, and moving virtual objects with levitation.

Two technical approaches used in the creation of both real and magical 3D spatial interaction techniques are referred to as isomorphism and non-isomorphism. Isomorphism refers to a one-to-one mapping between the motion controller and the corresponding object in the virtual world.

For example, if the motion controller moves 1.5 feet along the x axis, a virtual object moves the same distance in the virtual world. On the other hand, non-isomorphism refers to the ability to scale the input so that the control-to-display ratio is not equal to one. For example, if the motion controller is rotated 30 degrees about the y axis, the virtual object may rotate 60 degrees about the y axis.

Non-isomorphism is a very powerful approach to 3D spatial interaction because it lends itself to magical interfaces and can potentially give the user more control in the virtual world.
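As a minimal sketch of the difference, assuming a single scalar gain applied to controller deltas (the names `map_translation`, `map_rotation_deg`, and `gain` are hypothetical), the two approaches differ only in whether that gain is one. Here `gain` is display motion divided by control motion, i.e. the inverse of the control-to-display ratio.

```python
def map_translation(controller_delta, gain=1.0):
    """Map a controller translation (dx, dy, dz) to a virtual-object translation.

    gain is display motion / control motion (the inverse of the C/D ratio):
    1.0 gives an isomorphic mapping, anything else is non-isomorphic.
    """
    return tuple(gain * c for c in controller_delta)


def map_rotation_deg(controller_angle_deg, gain=1.0):
    """Map a controller rotation about one axis (degrees) to a virtual rotation."""
    return gain * controller_angle_deg


# Isomorphic: the controller moves 1.5 feet along x, and so does the object.
print(map_translation((1.5, 0.0, 0.0)))   # (1.5, 0.0, 0.0)
# Non-isomorphic: a 30-degree controller rotation about y becomes 60 degrees.
print(map_rotation_deg(30.0, gain=2.0))   # 60.0
```

Real games often make the gain depend on hand speed or distance rather than keeping it constant; the Go-Go technique discussed later is one such nonlinear mapping.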

The key to using many of the 3D user interface techniques found in the VR and 3D UI literature is having an input device that supports six degrees of freedom (6 DOF). In this case, 6 DOF means the device provides both position (x, y, and z) and orientation (roll, pitch, and yaw) of the controller or user in the physical world.

In some cases, it is also important to be able to track the user's head position and orientation. This will be somewhat problematic with current motion controller hardware, but these techniques can certainly be modified when no head tracking is available.

6 DOF is essentially the Holy Grail when it comes to 3D spatial interfaces and, fortunately, this is the way the video game industry is going (e.g., Sony Move, Sixense TrueMotion, and Microsoft Natal).

One can still support 3D spatial interfaces with a device like the Nintendo Wii Remote, but it is more challenging, since the device provides only some of these DOF, and only under certain conditions. As part of the reading list at the end of the article, I provide some papers that discuss how to deal with the issues surrounding the Nintendo Wii Remote and 3D spatial interaction.

Navigation: Travel

The motor component of navigation is known as travel (i.e., viewpoint movement). There are several issues to consider when dealing with travel in 3D UIs.

One such issue is the control of velocity and/or acceleration. There are many methods for doing this, including gesture, speech controls, sliders, etc. Next, one must consider whether motion should be constrained in any way, for example by maintaining a constant height or by following the terrain.

Finally, at the lowest level, the conditions of input must be considered; that is, when and how does motion begin and end (click to start/stop, press to start, release to stop, stop automatically at target location, etc.)? Four of the more common 3D travel techniques are gaze-directed steering, pointing, map-based travel, and "grabbing the air".

Gaze-Directed Steering

Gaze-directed steering is probably the most common 3D travel technique and was first discussed in 1995, although the term "gaze" is really misleading. Usually no eye tracking is being performed, so the direction of gaze is inferred from tracking the user's head orientation.

This is a simple technique, both to implement and to use, but it is somewhat limited in that you cannot look around while moving. Potential examples of gaze-directed steering in video games would be controlling vehicles or traveling around the world in a real-time strategy game.

To implement gaze-directed steering, typically a callback function is set up that executes before each frame is rendered. Within this callback, first obtain the head tracker information (usually in the form of a 4x4 matrix). This matrix gives you a transformation between the base tracker coordinate system and the head tracker coordinate system.

By also considering the transformation between the world coordinate system and the base tracker coordinates (if any), you can get the total composite transformation. Now, consider the vector (0,0,-1) in head tracker space (the negative z-axis, which usually points out the front of the tracker).

This vector, expressed in world coordinates, is the direction you want to move. Normalize this vector, multiply it by the speed, and then translate the viewpoint by this amount in world coordinates. Note: current "velocity" is in units/frame. If you want true velocity (units/second), you must keep track of the time between frames and then translate the viewpoint by an amount proportional to that time.
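The per-frame steps above can be sketched as follows, assuming the composite head-to-world matrix is a row-major 4x4 list of lists using the column-vector convention (p_world = M p_head); the function names are hypothetical. The sketch uses true velocity (units/second) by scaling with the frame time `dt`.

```python
import math


def rotate_direction(m, v):
    """Apply the upper-left 3x3 (rotation) part of a 4x4 matrix to a direction.

    m is row-major and uses the column-vector convention, p_world = m @ p_local.
    Directions have w = 0, so the matrix's translation column is ignored.
    """
    return tuple(sum(m[row][col] * v[col] for col in range(3)) for row in range(3))


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def gaze_directed_step(viewpoint, head_to_world, speed, dt):
    """Translate the viewpoint along the head tracker's -z axis for one frame.

    head_to_world: composite 4x4 matrix (world <- tracker base <- head tracker).
    speed: units per second; dt: seconds since the previous frame, so the
    motion is frame-rate independent rather than measured in units/frame.
    """
    forward = normalize(rotate_direction(head_to_world, (0.0, 0.0, -1.0)))
    return tuple(p + f * speed * dt for p, f in zip(viewpoint, forward))


identity = [[1.0, 0.0, 0.0, 0.0],
            [0.0, 1.0, 0.0, 0.0],
            [0.0, 0.0, 1.0, 0.0],
            [0.0, 0.0, 0.0, 1.0]]
print(gaze_directed_step((0.0, 0.0, 0.0), identity, speed=2.0, dt=0.5))  # (0.0, 0.0, -1.0)
```

Passing a hand tracker's matrix instead of the head tracker's turns the same function into the pointing technique.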

Pointing

Pointing is another steering technique (one in which the user continuously specifies the direction of motion) that was developed in the mid-1990s. In this case, the hand's orientation is used to determine direction.

This technique is somewhat harder for some users to learn, but it is more flexible than gaze-directed steering. Pointing is implemented in exactly the same way as gaze-directed steering, except that a hand tracker is used instead of the head tracker. Pointing could be used to decouple line of sight and direction of motion in first- and third-person shooter games.

Map-Based Travel

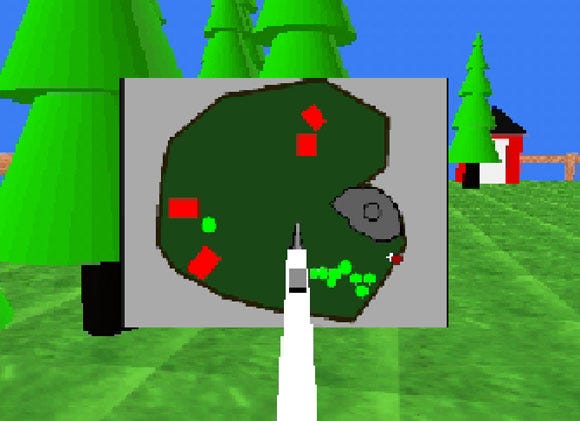

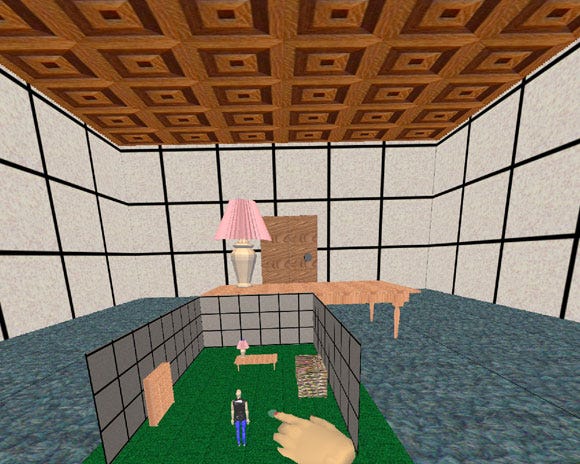

The map-based travel technique is a target-based technique. The user is represented as an icon on a 2D map of the environment. To travel, the user drags this icon to a new position on the map (see Figure 1). When the icon is dropped, the system smoothly animates the user from the current location to the new location indicated by the icon. Map-based travel could be used to augment the 2D maps already found in many game genres.

Figure 1. Dragging a user icon to move to a new location in the world. This image was taken in 1998.

To implement this technique, two things must be known about the way the map relates to the world. First, we need to know the scale factor, the ratio between the map and the virtual world. Second, we need to know which point on the map represents the origin of the world coordinate system. We assume here that the map model is originally aligned with the world (i.e. the x direction on the map, in its local coordinate system, represents the x direction in the world coordinate system).

When the user presses the button while the stylus intersects the user icon on the map, the icon needs to be moved with the stylus each frame. One cannot simply attach the icon to the stylus, because we want the icon to remain on the map even if the stylus does not.

To do this, we first find the position of the stylus in the map coordinate system. This may require a transformation between coordinate systems, since the stylus is not a child of the map. The x and z coordinates of the stylus position are the point to which the icon should be moved. We do not cover here what happens if the stylus is dragged off the map, but the user icon should "stick" to the side of the map until the stylus is moved back inside the map boundaries, since we don't want the user to move outside the world.
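A minimal sketch of the per-frame drag, assuming (hypothetically) that the map's local frame differs from the world frame only by a translation, so the coordinate-system change reduces to a subtraction. Clamping to the map's bounds gives the "sticky edge" behavior described above. The function names and parameters are illustrative.

```python
def clamp(value, lo, hi):
    return max(lo, min(hi, value))


def icon_position_on_map(stylus_world, map_origin_world, map_min, map_max):
    """Return the user icon's (x, z) position in map-local coordinates.

    Hypothetical simplification: the map's local frame differs from the world
    frame only by a translation (map_origin_world), so converting the stylus
    position is a subtraction. map_min / map_max are the (x, z) corners of the
    map in its local coordinates.
    """
    local_x = stylus_world[0] - map_origin_world[0]
    local_z = stylus_world[2] - map_origin_world[2]
    # If the stylus leaves the map, the icon "sticks" to the nearest edge.
    return (clamp(local_x, map_min[0], map_max[0]),
            clamp(local_z, map_min[1], map_max[1]))
```

A rotated or scaled map would need the full inverse of the map's world transform instead of the subtraction.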

When the button is released, we need to calculate the desired position of the viewpoint in the world. This position is calculated using a transformation from the map coordinate system to the world coordinate system, as follows.

First, find the offset in the map coordinate system from the point corresponding to the world origin. Then, divide by the map scale (if the map is 1/100 the size of the world, this corresponds to multiplying by 100). This gives us the x and z coordinates of the desired viewpoint position.

Since the map is 2D, we can't get a y coordinate from it. Therefore, the technique should have some way of calculating the desired height at the new viewpoint. In the simplest case, this might be constant. In other cases, it might be based on the terrain height at that location or some other factors.
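The map-to-world conversion can be sketched as below, assuming the map is aligned with the world as stated above. `height_at` is a hypothetical callback standing in for whatever height policy the game uses (constant eye height, terrain lookup, and so on).

```python
def map_to_world(icon_xz, world_origin_on_map, map_scale, height_at):
    """Convert the dropped icon's map-local (x, z) into a world-space viewpoint.

    map_scale: map size / world size, e.g. 0.01 for a map 1/100 the size of the
    world (dividing by 0.01 multiplies the offset by 100).
    height_at: hypothetical callback (x, z) -> y, since the 2D map carries no
    height; it could return a constant eye height or sample the terrain.
    """
    x = (icon_xz[0] - world_origin_on_map[0]) / map_scale
    z = (icon_xz[1] - world_origin_on_map[1]) / map_scale
    return (x, height_at(x, z), z)


EYE_HEIGHT = 1.7  # hypothetical constant-height policy
target = map_to_world((1.5, 0.75), (0.5, 0.25), 0.25, lambda x, z: EYE_HEIGHT)
print(target)  # (4.0, 1.7, 2.0)
```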

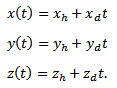

Once we know the desired viewpoint, we have to set up the animation of the viewpoint. The move vector $\vec{m}$ represents the amount of translation to do each frame (we are assuming a linear path). To find $\vec{m}$, we subtract the current position $\vec{p}_c$ from the desired position $\vec{p}_d$ (the total movement required), divide this by the distance between the two points (calculated using the distance formula), and multiply by the desired velocity $v$, so that

$$\vec{m} = v \, \frac{\vec{p}_d - \vec{p}_c}{\lVert \vec{p}_d - \vec{p}_c \rVert}$$

gives us the amount to move in each dimension each frame.

The only remaining calculation is the number of frames this movement will take: distance/velocity frames. Note that again velocity is measured here in units/frame, not units/second, for simplicity.
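The animation setup can be sketched as follows: the move vector is the unit direction from the current to the desired position, times a velocity in units/frame. Rounding the frame count up and snapping the last frame onto the target is a design choice of this sketch, not something the text specifies. It avoids overshooting when distance/velocity is not a whole number. Function names are hypothetical.

```python
import math


def plan_linear_move(current, desired, velocity):
    """Return (move_vector, frame_count) for a straight-line viewpoint animation.

    velocity is in units per frame, as in the text.
    """
    delta = [d - c for c, d in zip(current, desired)]   # total movement required
    distance = math.sqrt(sum(c * c for c in delta))
    if distance == 0.0:
        return (0.0, 0.0, 0.0), 0
    move = tuple(c / distance * velocity for c in delta)
    frames = math.ceil(distance / velocity)
    return move, frames


def animate(current, desired, velocity):
    """Yield one viewpoint position per frame, ending exactly on the target."""
    move, frames = plan_linear_move(current, desired, velocity)
    pos = tuple(current)
    for _ in range(frames - 1):
        pos = tuple(p + m for p, m in zip(pos, move))
        yield pos
    if frames:
        yield tuple(desired)  # snap the final frame to avoid overshoot


move, frames = plan_linear_move((0.0, 0.0, 0.0), (3.0, 0.0, 4.0), 1.0)
print(move, frames)  # (0.6, 0.0, 0.8) 5
```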

Grabbing the Air

The "grabbing the air" technique, also first discussed in 1995, uses the metaphor of literally grabbing the world around you (usually empty space), and pulling yourself through it using hand gestures. This is similar to pulling yourself along a rope, except that the "rope" exists everywhere, and can take you in any direction. The grabbing the air technique has many potential uses in video games including climbing buildings or mountains, swimming, and flying.

To implement the one-handed version of this technique (the two-handed version can get complex if rotation and world scaling are also supported), when the initial button press is detected, we simply obtain the position of the hand in the world coordinate system.

Then, every frame until the button is released, get a new hand position, subtract the old position from it, and move the objects in the world by this amount.

Alternately, you can leave the world fixed, and translate the viewpoint by the opposite vector. Before exiting the callback, be sure to update the "old" hand position for use on the next frame.

Note that it is tempting to implement this technique by attaching the world to the hand, but this will have the undesirable effect of also rotating the world when the hand rotates, which can be quite disorienting. You can also do simple constrained motion by ignoring one or more of the components of the hand position (e.g. only consider x and z to move at a constant height).
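Here is a sketch of the "fixed world, moving viewpoint" variant. It assumes hand positions are reported in tracker space, relative to a tracker base that travels with the viewpoint. That way, moving the viewpoint does not feed back into the measured hand motion. The class and method names are hypothetical. Only translation is used, so hand rotation never rotates the world, and `constrain_y` shows the constant-height constraint.

```python
class GrabTheAir:
    """One-handed 'grabbing the air' travel, translation only.

    Hand positions are assumed to be in tracker space (relative to a tracker
    base that moves with the viewpoint).
    """

    def __init__(self, viewpoint, constrain_y=False):
        self.viewpoint = list(viewpoint)
        self.constrain_y = constrain_y
        self.old_hand = None          # None while the button is not pressed

    def press(self, hand):
        self.old_hand = tuple(hand)

    def release(self):
        self.old_hand = None

    def update(self, hand):
        """Call once per frame; returns the (possibly moved) viewpoint."""
        if self.old_hand is None:
            return tuple(self.viewpoint)
        delta = [n - o for n, o in zip(hand, self.old_hand)]  # new - old
        if self.constrain_y:
            delta[1] = 0.0            # ignore vertical motion: constant height
        for i in range(3):
            # The world would move by +delta; equivalently the viewpoint moves by -delta.
            self.viewpoint[i] -= delta[i]
        self.old_hand = tuple(hand)   # remember for the next frame
        return tuple(self.viewpoint)


nav = GrabTheAir((0.0, 1.7, 0.0))
nav.press((0.0, 0.0, -0.5))          # reach forward and grab
print(nav.update((0.0, 0.0, -0.1)))  # pulling the hand back moves the viewpoint forward (-z)
```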

Selection

3D selection is the process of accessing one or more objects in a 3D virtual world. Note that selection and manipulation are intimately related, and that several of the techniques described here can also be used for manipulation.

There are several common issues for the implementation of selection techniques. One of the most basic is how to indicate that the selection event should take place (e.g. you are touching the desired object, now you want to pick it up). This is usually done via a button press, gesture, or voice command, but it might also be done automatically if the system can infer the user's intent.

One also has to have efficient algorithms for object intersections for many of these techniques. We'll discuss a couple of possibilities. The feedback given to the user regarding which object is about to be selected is also very important. Many of the techniques require an avatar (virtual representation) for the user's hand.

Finally, consider keeping a list of objects that are "selectable", so that a selection technique does not have to test every object in the world, increasing efficiency. Four common selection techniques include the virtual hand, ray-casting, occlusion, and arm extension.

The Virtual Hand

The most common selection technique is the simple virtual hand, which does "real-world" selection via direct "touching" of virtual objects. This technique is also one of the oldest and dates back to the late 1980s. In the absence of haptic feedback, this direct manipulation is done by intersecting the virtual hand (which is at the same location as the physical hand) with a virtual object.

The virtual hand has great potential in many different video game genres. Examples include selection of sports equipment, direct selection of guns, ammo, and health packs in first person shooter games, as a hand of "God" in real-time strategy games, and interfacing with puzzles in action/adventure games.

Implementing this technique is simple, provided you have a good intersection/collision algorithm. Often, intersections are only performed with axis-aligned bounding boxes or bounding spheres rather than with the actual geometry of the objects.
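A sketch of the simple virtual hand using bounding spheres, one of the cheap proxies mentioned above. The hand is modeled as a small sphere, and only the selectable-objects list is tested. The `Selectable` type and `touched_objects` function are hypothetical names for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Selectable:
    name: str
    center: tuple    # bounding-sphere center, world coordinates
    radius: float    # bounding-sphere radius


def touched_objects(hand_pos, hand_radius, selectables):
    """Simple virtual hand: objects whose bounding sphere overlaps a sphere of
    radius hand_radius around the virtual hand, nearest first.

    Only the selectable list is tested, not every object in the world.
    """
    hits = []
    for obj in selectables:
        d = math.dist(hand_pos, obj.center)
        if d <= hand_radius + obj.radius:
            hits.append((d, obj))
    hits.sort(key=lambda pair: pair[0])
    return [obj for _, obj in hits]
```

The nearest touched object is a natural candidate to highlight as feedback before the selection event (button, gesture, or voice) fires.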

Ray-Casting

Another common selection technique is ray-casting. This technique was first discussed in 1995 and uses the metaphor of a laser pointer -- an infinite ray extending from the virtual hand. The first object intersected along the ray is eligible for selection.

Experimental results show this technique to be efficient, and it only requires the user to vary 2 degrees of freedom (pitch and yaw of the wrist) rather than the 3 DOF required by the simple virtual hand and other location-based techniques. Ideally, ray-casting could be used whenever special powers are required in the game or when the player has the ability to select objects at a distance.

There are many ways to implement ray-casting. A brute-force approach would calculate the parametric equation of the ray, based on the hand's position and orientation. First, as in the pointing technique for travel, find the world coordinate system equivalent of the vector (0,0,-1). This is the direction of the ray. If the hand's position is represented by $(x_h, y_h, z_h)$ and the direction vector is $(x_d, y_d, z_d)$, then the parametric equations are given by

$$x(t) = x_h + x_d\,t, \qquad y(t) = y_h + y_d\,t, \qquad z(t) = z_h + z_d\,t.$$

Only intersections with $t > 0$ should be considered, since we do not want to count intersections "behind" the hand. Ultimately we need to know whether the actual geometry has been intersected, but testing the intersection with each object's bounding box first lets many cases be trivially rejected.
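For the bounding-box pre-test, one standard choice is the "slab" ray/axis-aligned-box intersection. It is swapped in here as an illustration and is not something the article prescribes. The sketch below uses the same parametric ray p(t) = origin + t * direction and returns the nearest box hit in front of the hand. A hand already inside a box yields t = 0. Names such as `ray_cast_select` are hypothetical, and a real implementation would follow a box hit with a test against the actual geometry.

```python
import math


def ray_aabb(origin, direction, box_min, box_max):
    """Slab test: smallest t >= 0 where origin + t*direction enters the box,
    or None if the ray misses it or the box lies entirely behind the hand."""
    t_near, t_far = -math.inf, math.inf
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        if d == 0.0:
            if o < lo or o > hi:
                return None            # parallel to this slab and outside it
            continue
        t1, t2 = (lo - o) / d, (hi - o) / d
        t_near = max(t_near, min(t1, t2))
        t_far = min(t_far, max(t1, t2))
    if t_near > t_far or t_far < 0.0:
        return None
    return max(t_near, 0.0)            # hand inside the box gives t = 0


def ray_cast_select(origin, direction, boxes):
    """Name of the first box hit along the ray, or None.

    boxes: dict name -> (box_min, box_max), e.g. built from the selectable list.
    """
    best = None
    for name, (lo, hi) in boxes.items():
        t = ray_aabb(origin, direction, lo, hi)
        if t is not None and (best is None or t < best[0]):
            best = (t, name)
    return best[1] if best else None
```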

Another method might be more efficient. In this method, instead of looking at the hand orientation in the world coordinate system, we consider the selectable objects to be in the hand's coordinate system, by transforming their vertices or their bounding boxes. This might seem quite inefficient, because there is only one hand, while there are many polygons in the world. However, we assume we have limited the objects by using a selectable objects list. Thus, the intersection test we will describe is much more efficient.

Once we have transformed the vertices or bounding boxes, we drop the z coordinate of each vertex. This maps the 3D polygon onto a 2D plane (the xy plane in the hand coordinate system).

Since the ray is (0,0,-1) in this coordinate system, we can see that in this 2D plane, the ray will intersect the polygon if and only if the point (0,0) is in the polygon. We can easily determine this with an algorithm that counts the number of times the edges of the 2D polygon cross the positive x-axis. If there is an odd number of crossings, the origin is inside; if even, the origin is outside.
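The crossing-count test can be sketched as below, assuming the polygon's vertices have already been transformed into the hand's coordinate system. The half-open comparison on y avoids double-counting an edge endpoint that lies exactly on the x-axis. As a simplification, this sketch does not reject polygons that lie behind the hand (positive z), which a real implementation should check separately.

```python
def origin_in_polygon(vertices_hand):
    """Crossing-number test for the hand-space ray-casting method.

    vertices_hand: (x, y, z) vertices already in the hand's coordinate system,
    where the ray is the -z axis. Dropping z projects the polygon onto the
    hand's xy plane; the ray hits the polygon iff (0, 0) lies inside it.
    """
    pts = [(x, y) for x, y, _z in vertices_hand]     # drop z
    crossings = 0
    n = len(pts)
    for i in range(n):
        (x1, y1), (x2, y2) = pts[i], pts[(i + 1) % n]
        # Does this edge straddle the x-axis? (half-open rule on y)
        if (y1 > 0.0) != (y2 > 0.0):
            # x coordinate where the edge crosses y = 0
            x_at_zero = x1 + (0.0 - y1) * (x2 - x1) / (y2 - y1)
            if x_at_zero > 0.0:                      # positive x-axis only
                crossings += 1
    return crossings % 2 == 1                        # odd: origin is inside
```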

Occlusion Techniques

Occlusion techniques (also called image plane techniques), first proposed in 1997, work in the plane of the image; objects are selected by "covering" them with the virtual hand so that they are occluded from your point of view.

Geometrically, this means that a ray is emanating from your eye, going through your finger, and then intersecting an object. Occlusion techniques could be used for object selection at a distance, but instead of the laser-pointer metaphor that ray-casting affords, players could simply "touch" distant objects to select them.

These techniques can be implemented in the same ways as the ray-casting technique, since they also use a ray. If you are using the brute-force ray intersection algorithm, you can simply define the ray's direction by subtracting the eye position from the finger position.

However, if you are using the second algorithm, you require an object to define the ray's coordinate system.

This can be done in two steps. First, create an empty object, and place it at the hand position, aligned with the world coordinate system. Next, determine how to rotate this object/coordinate system so that it is aligned with the ray direction. The angle can be determined using the positions of the eye and hand, and some simple trigonometry. In 3D, two rotations must be done in general to align the new object's coordinate system with the ray.
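A sketch of both steps, under the assumption that the two rotations are a yaw about y followed by a pitch about x, composed as R_y(yaw) R_x(pitch), so that the new frame's -z axis lies along the eye-to-finger direction. The function names are hypothetical, and `minus_z_after` exists only to check the result.

```python
import math


def occlusion_ray(eye, fingertip):
    """Ray from the eye through the fingertip.

    Direction = fingertip - eye (pointing away from the user). The ray starts
    at the fingertip so objects between the eye and the hand are not picked.
    """
    d = [f - e for f, e in zip(fingertip, eye)]
    length = math.sqrt(sum(c * c for c in d))
    return tuple(fingertip), tuple(c / length for c in d)


def yaw_pitch_for_ray(direction):
    """Yaw (about y) and pitch (about x), composed as R_y(yaw) @ R_x(pitch),
    that rotate a frame's -z axis onto the unit vector `direction`."""
    dx, dy, dz = direction
    pitch = math.atan2(dy, math.hypot(dx, dz))
    yaw = math.atan2(-dx, -dz)
    return yaw, pitch


def minus_z_after(yaw, pitch):
    """Where (0, 0, -1) ends up after R_y(yaw) @ R_x(pitch); used as a check."""
    return (-math.sin(yaw) * math.cos(pitch),
            math.sin(pitch),
            -math.cos(yaw) * math.cos(pitch))
```

With the rotated frame in place, the polygon crossing test from the ray-casting section applies unchanged.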

Arm-Extension

The arm-extension technique (e.g., Go-Go), first described in 1996 and inspired by the '80s cartoon Inspector Gadget, is based on the simple virtual hand, but it introduces a nonlinear mapping between the physical hand and the virtual hand, so that the user's reach is greatly extended.

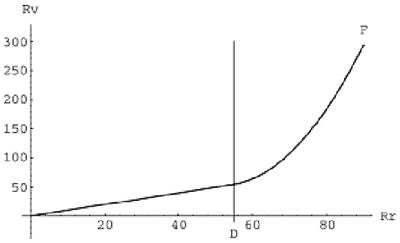

Go-Go is useful not only for object selection at a distance; it could also be used for traveling through an environment with Batman's grappling hook or Spider-Man's web. The graph in Figure 2 shows the mapping between the physical hand distance from the body on the x-axis and the virtual hand distance from the body on the y-axis.

There are two regions. When the physical hand is at a depth less than a threshold 'D', the one-to-one mapping applies. Outside D, a non-linear mapping is applied, so that the farther the user stretches, the faster the virtual hand moves away.

Figure 2. The nonlinear mapping function used in the Go-Go selection technique.

To implement Go-Go, we first need the concept of the position of the user's body. This is needed because we stretch our hands out from the center of our body, not from our head (which is usually the position that is tracked). We can implement this using an inferred torso position, which is defined as a constant offset in the negative y direction from the head. A tracker could also be placed on the user's torso.

Before rendering each frame, we get the physical hand position in the world coordinate system, and then calculate its distance from the torso object using the distance formula. The virtual hand distance can then be obtained by applying the function shown in the graph in Figure 2.

Beyond D, the Go-Go mapping adds a quadratic term, $R_v = R_r + k\,(R_r - D)^2$ for $R_r \ge D$ (with $R_v = R_r$ below D), where $R_r$ and $R_v$ are the physical and virtual hand distances and $k$ is a coefficient. This is a useful function in many environments, but the exponent used depends on the size of the environment and the desired accuracy of selection at a distance. Once the distance at which to place the virtual hand is known, we need to determine its position.

The most common implementation is to keep the virtual hand on the ray extending from the torso and going through the physical hand. Therefore, if we get a vector from the torso to the physical hand, normalize it, multiply it by the distance, and then add this vector to the torso point, we obtain the position of the virtual hand. Finally, we can use the virtual hand technique for object selection.
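Putting the Go-Go steps together, here is a sketch that uses the quadratic form R_v = R_r + k(R_r - D)^2 beyond the threshold D and the inferred torso described above. `torso_drop`, `d_threshold`, and `k` are hypothetical tuning parameters, and the values in the usage line are illustrative only.

```python
import math


def gogo_distance(r_real, d_threshold, k):
    """Go-Go mapping from physical to virtual hand distance (Figure 2):
    one-to-one below D, then R_v = R_r + k * (R_r - D)**2."""
    if r_real < d_threshold:
        return r_real
    return r_real + k * (r_real - d_threshold) ** 2


def gogo_virtual_hand(head, hand, d_threshold, k, torso_drop=0.5):
    """Virtual hand position on the ray from the inferred torso through the hand.

    torso_drop is a hypothetical constant offset in -y from the tracked head.
    """
    torso = (head[0], head[1] - torso_drop, head[2])
    offset = [h - t for h, t in zip(hand, torso)]       # torso -> physical hand
    r_real = math.sqrt(sum(c * c for c in offset))
    if r_real == 0.0:
        return tuple(hand)
    r_virtual = gogo_distance(r_real, d_threshold, k)
    # Normalize, scale by the virtual distance, and add to the torso point.
    return tuple(t + c / r_real * r_virtual for t, c in zip(torso, offset))


virtual = gogo_virtual_hand((0.0, 1.5, 0.0), (0.0, 1.0, -0.6), d_threshold=0.4, k=10.0)
```

With these numbers the physical hand is 0.6 units from the torso, and the virtual hand lands roughly 1.0 unit away along the same ray.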

Manipulation

As we noted earlier, manipulation is connected with selection, because an object must be selected before it can be manipulated. Thus, one important issue for any manipulation technique is how well it integrates with the chosen selection technique. Many techniques, as we have said, do both: e.g. simple virtual hand, ray-casting, and Go-Go.

Another issue is that when an object is being manipulated, you should take care to disable the selection technique and the feedback you give the user for selection. If this is not done, then serious problems can occur if, for example, the user tries to release the currently selected object but the system also interprets this as trying to select a new object.

Finally, thinking about what happens when the object is released is important. Does it remain at its last position, possibly floating in space? Does it snap to a grid? Does it fall via gravity until it contacts something solid? The application requirements will determine this choice.

Three common manipulation techniques include HOMER, Scaled-World Grab, and World-in-Miniature. For each of these techniques, the manipulation of objects in the game world could be used in many different genres including setting traps in first and third person shooter games, completing puzzles in action/adventure games, and supporting new types of rhythm games.

HOMER

The Hand-Centered Object Manipulation Extending Ray-Casting (HOMER) technique, first discussed in 1997, uses ray-casting for selection and then moves the virtual hand to the object for hand-centered manipulation. The depth of the object is based on a linear mapping.

The initial torso-physical hand distance is mapped onto the initial torso-object distance, so that moving the physical hand twice as far away also moves the object twice as far away. Also, moving the physical hand all the way back to the torso moves the object all the way to the user's torso as well.

Like Go-Go, HOMER requires a torso position, because you want to keep the virtual hand on the ray between the user's body (torso) and the physical hand. The problem here is that HOMER moves the virtual hand from the physical hand position to the object upon selection, and it is not guaranteed that the torso, physical hand, and object will all line up at this time.

Therefore, we calculate where the virtual hand would be if it were on this ray initially, then calculate the offset to the position of the virtual object, and maintain this offset throughout manipulation.

When an object is selected via ray-casting, first detach the virtual hand from the hand tracker. This is necessary because, if it remained attached while the virtual hand model was moved away from the physical hand location, a rotation of the physical hand would cause both a rotation and a translation of the virtual hand. Next, move the virtual hand in the world coordinate system to the position of the selected object, and attach the object to the virtual hand in the scene graph (again, without moving the object in the world coordinate system).

To implement the linear depth mapping, we need to know the initial distance between the torso and the physical hand, $D_h$, and between the torso and the selected object, $D_o$. The ratio $D_o / D_h$ will be the scaling factor.

For each frame, we need to set the position and orientation of the virtual hand. The selected object is attached to the virtual hand, so it will follow along. Setting the orientation is relatively easy. Simply copy the transformation matrix for the hand tracker to the virtual hand, so that their orientation matches.

To set the position, we need to know the correct depth and the correct direction. The depth is found by applying the linear mapping to the current physical hand depth. The physical hand distance is simply the distance between it and the torso, and we multiply this by the scale factor $D_o / D_h$ to get the virtual hand distance. We then obtain a normalized vector from the torso to the physical hand, multiply this vector by the virtual hand distance, and add the result to the torso position to obtain the virtual hand position.
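A sketch of HOMER's position handling, set up at the moment ray-casting selects an object. It computes the scale factor D_o / D_h and the fixed offset between the on-ray virtual hand and the object. Each frame, it returns the virtual-hand (and therefore attached-object) position. Orientation is left out, since the text simply copies the hand tracker's rotation. The class and helper names are hypothetical.

```python
import math


def vsub(a, b): return tuple(x - y for x, y in zip(a, b))
def vadd(a, b): return tuple(x + y for x, y in zip(a, b))
def vmul(a, s): return tuple(x * s for x in a)
def vlen(a): return math.sqrt(sum(x * x for x in a))


class Homer:
    """Position part of HOMER; orientation is copied from the hand tracker."""

    def __init__(self, torso, hand, obj):
        d_h = vlen(vsub(hand, torso))    # initial torso-to-physical-hand distance
        d_o = vlen(vsub(obj, torso))     # initial torso-to-object distance
        self.scale = d_o / d_h           # linear depth mapping D_o / D_h
        # Where the virtual hand would be if it started on the torso-hand ray,
        # and the offset from there to the object, kept for the whole drag.
        self.offset = vsub(obj, self._on_ray(torso, hand))

    def _on_ray(self, torso, hand):
        v = vsub(hand, torso)            # torso -> physical hand
        d_physical = vlen(v)
        if d_physical == 0.0:            # hand at the torso: depth collapses to 0
            return tuple(torso)
        d_virtual = d_physical * self.scale
        return vadd(torso, vmul(v, d_virtual / d_physical))

    def update(self, torso, hand):
        """Per frame: position of the virtual hand (and the attached object)."""
        return vadd(self._on_ray(torso, hand), self.offset)
```

Doubling the torso-to-hand distance doubles the on-ray depth, and pulling the hand back to the torso brings the on-ray point back to the torso, matching the behavior described for HOMER.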

Scaled-World Grab

The scaled-world grab technique (see Figure 3) is often used with occlusion selection and was first discussed in 1997.

The idea is that since you are selecting the object in the image plane, you can use the ambiguity of that single image to do some magic. When the selection is made, the user is scaled up (or the world is scaled down) so that the virtual hand is actually touching the object that it is occluding.

If the user does not move (and the graphics are not stereo), there is no perceptual difference between the images before and after the scaling.

However, when the user starts to move the object and/or his head, he realizes that he is now a giant (or that the world is tiny) and he can manipulate the object directly, just like the simple virtual hand.

To implement scaled-world grab, correct actions must be performed at the time of selection and release. Nothing special needs to be done in between, because the object is simply attached to the virtual hand, as in the simple virtual hand technique. At the time of selection, scale the user by the ratio (distance from eye to object / distance from eye to hand).

This scaling needs to take place with the eye as the fixed point, so that the eye does not move, and should be uniform in all three dimensions. Finally, attach the virtual object to the virtual hand. At the time of release, the opposite actions are done in reverse. Re-attach the object to the world, and scale the user uniformly by the reciprocal of the scaling factor, again using the eye as a fixed point.
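A sketch of the selection-time scaling, assuming the user's geometry can be scaled uniformly about an arbitrary fixed point. Scaling the hand about the eye by |eye - object| / |eye - hand| carries a hand that occludes the object onto the object itself, while the eye stays put. Release applies the reciprocal. The function names and the example coordinates are hypothetical.

```python
import math


def scale_about_point(p, fixed, s):
    """Uniformly scale point p by s about `fixed` (which therefore does not move)."""
    return tuple(f + s * (c - f) for c, f in zip(p, fixed))


def grab_scale(eye, hand, obj):
    """User scale factor at selection: |eye - object| / |eye - hand|."""
    return math.dist(eye, obj) / math.dist(eye, hand)


# The hand lies on the line from the eye to the object, so it occludes it.
eye, hand, obj = (0.0, 1.7, 0.0), (0.0, 1.7, -0.5), (0.0, 1.7, -10.0)
s = grab_scale(eye, hand, obj)
grabbed_hand = scale_about_point(hand, eye, s)      # user scaled up about the eye
print(s, grabbed_hand)                              # 20.0 (0.0, 1.7, -10.0)
released_hand = scale_about_point(grabbed_hand, eye, 1.0 / s)  # undo on release
```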

Figure 3. An illustration of the scaled-world grab technique.

World-in-Miniature

The world-in-miniature (WIM) technique uses a small "dollhouse" version of the world to allow the user to do indirect manipulation of the objects in the environment (see Figure 4). Each of the objects in the WIM is selectable using the simple virtual hand technique, and moving these objects causes the full-scale objects in the world to move in a corresponding way. The WIM can also be used for navigation by including a representation of the user, in a way similar to the map-based travel technique, but including the third dimension.

Figure 4. An example of a WIM. This image was taken in 1996.

To implement the WIM technique, first create the WIM. Consider, for example, a room with a table object in it. The WIM is represented as a scaled-down version of the room and is attached to the virtual hand. The table object does not need to be scaled, because it will inherit the scaling from its parent (the WIM room). Thus, the table object can simply be copied within the scene graph.

When an object in the WIM is selected using the simple virtual hand technique, first match this object to the corresponding full-scale object. Keeping a list of pointers to these objects is an efficient way to do this step. The miniature object is attached to the virtual hand, just as in the simple virtual hand technique.
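Building the WIM can be sketched with a hypothetical scene-graph `Node` that stores only a translation and a uniform scale (no rotation), copying one level of children for brevity. The miniature table keeps the full-scale table's local position and inherits the WIM's scale from its parent. The returned dictionary plays the role of the list of pointers from each miniature object to its full-scale counterpart. `Node` and `build_wim` are illustrative names, not an API from the article.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass(eq=False)  # eq=False keeps identity hashing so nodes can be dict keys
class Node:
    """Hypothetical scene-graph node: local translation plus uniform scale."""
    name: str
    position: tuple = (0.0, 0.0, 0.0)  # translation relative to the parent
    scale: float = 1.0                  # uniform scale relative to the parent
    parent: Optional["Node"] = field(default=None, repr=False)
    children: list = field(default_factory=list)

    def add(self, child):
        """Attach child under this node and return it."""
        child.parent = self
        self.children.append(child)
        return child

    def world_scale(self):
        parent_scale = self.parent.world_scale() if self.parent else 1.0
        return self.scale * parent_scale

    def world_position(self):
        if self.parent is None:
            return self.position
        origin = self.parent.world_position()
        s = self.parent.world_scale()
        # A child's offset is scaled by everything above it.
        return tuple(o + s * p for o, p in zip(origin, self.position))


def build_wim(room, hand, wim_scale):
    """Attach a scaled-down copy of `room` to `hand`.

    Children are copied with their original local position and scale and
    inherit the miniature scale from the WIM room. Returns the WIM room and
    a dict mapping each miniature object to its full-scale counterpart."""
    wim_room = hand.add(Node("wim:" + room.name, scale=wim_scale))
    mini_to_full = {}
    for obj in room.children:
        mini = wim_room.add(Node("wim:" + obj.name, obj.position, obj.scale))
        mini_to_full[mini] = obj
    return wim_room, mini_to_full
```

When the user selects a miniature, a single dictionary lookup finds the full-scale object to move.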

While the miniature object is being manipulated, simply copy its position matrix (in its local coordinate system, relative to its parent, the WIM) to the position matrix of the full-scale object. Since we want the full-scale object to have the same position in the full-scale world coordinate system as the miniature object does in the scaled-down WIM coordinate system, this is all that is necessary to move the full-scale object correctly.
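The copy step can be sketched with 4x4 row-major matrices. Following the text, the sketch assumes the miniature's matrix is kept relative to its parent, the WIM room, and that the full-scale room's frame corresponds to the WIM room's frame. `SceneNode` and `sync_from_miniature` are hypothetical names.

```python
def mat_mul(a, b):
    """Multiply two 4x4 row-major matrices (lists of rows)."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def translation(x, y, z):
    return [[1.0, 0.0, 0.0, x],
            [0.0, 1.0, 0.0, y],
            [0.0, 0.0, 1.0, z],
            [0.0, 0.0, 0.0, 1.0]]


def uniform_scale(s):
    return [[s, 0.0, 0.0, 0.0],
            [0.0, s, 0.0, 0.0],
            [0.0, 0.0, s, 0.0],
            [0.0, 0.0, 0.0, 1.0]]


class SceneNode:
    """Hypothetical scene-graph node holding a matrix relative to its parent."""

    def __init__(self, name, local, parent=None):
        self.name = name
        self.local = local
        self.parent = parent

    def world(self):
        # Compose parent transforms from the root down to this node.
        if self.parent is None:
            return self.local
        return mat_mul(self.parent.world(), self.local)


def sync_from_miniature(mini, mini_to_full):
    """Copy a miniature's local matrix (relative to the WIM room) onto the
    matching full-scale object (relative to the full-scale room)."""
    full = mini_to_full[mini]
    full.local = [row[:] for row in mini.local]
```

The WIM room's transform adds only the hand offset and the miniature scale on top of the same local matrix. As a result, the two objects stay in corresponding places, which is why the copy is all that is needed.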

System Control

System control provides a mechanism for users to issue a command to either change the mode of interaction or the system state. In order to issue the command, the user has to select an item from a set. System control is a wide-ranging topic, and there are many different techniques to choose from, such as graphical menus, gestures, and tool selectors.

For the most part, these techniques are not difficult to implement, since they mostly involve selection. For example, virtual menu items might be selected using ray-casting. For all of the techniques, good visual feedback is required, since the user needs to know not only what he is selecting, but also what will happen when he selects it. In this section, we briefly highlight some of the more common system control techniques.
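Ray-casting selection of menu items can be sketched by approximating each virtual menu item with a bounding sphere. The item hit closest along the ray is the one under the pointer. The sphere approximation, `pick_menu_item`, and the `(label, center, radius)` tuples are assumptions made for illustration. Calling the function every frame and highlighting its result would give the user visual feedback before the selection is confirmed.

```python
import math


def ray_sphere_t(origin, direction, center, radius):
    """Distance along a unit-length ray to its first hit with a sphere, or None."""
    oc = [o - c for o, c in zip(origin, center)]
    b = sum(d * v for d, v in zip(direction, oc))
    c = sum(v * v for v in oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return None  # the ray misses the sphere
    root = math.sqrt(disc)
    t = -b - root
    if t < 0:
        t = -b + root  # ray origin is inside the sphere
    return t if t >= 0 else None


def pick_menu_item(origin, direction, items):
    """Return the label of the closest menu item hit by the ray, or None.

    items is a list of (label, center, radius) bounding spheres."""
    length = math.sqrt(sum(d * d for d in direction))
    direction = [d / length for d in direction]
    best = None
    for label, center, radius in items:
        t = ray_sphere_t(origin, direction, center, radius)
        if t is not None and (best is None or t < best[0]):
            best = (t, label)
    return None if best is None else best[1]
```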

Graphical Menus

Graphical menus can be seen as the 3D equivalent of 2D menus. Placement influences how easily the menu can be accessed (correct placement can give a strong spatial reference for retrieval) and how much the menu may occlude the user's field of attention. Placement can be categorized into surround-fixed, world-fixed, and display-fixed windows.

This subdivision of placement can, however, be made more subtle. World-fixed and surround-fixed windows can be subdivided into menus that are either placed freely in the world or connected to an object.

Display-fixed windows can be renamed, and made more precise, by referring to their actual reference frame: the body. Body-centered menus, either head-referenced or body-referenced, can supply a strong spatial reference frame.

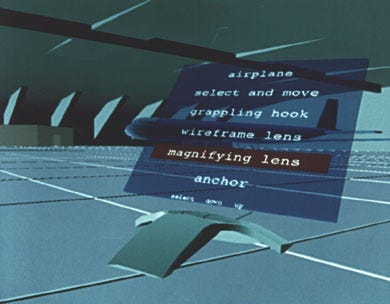

One particularly interesting possible effect of body-centered menus is "eyes-off" usage, in which users can perform system control without having to look at the menu itself. The last reference frame is the device: device-centered placement provides the user with a physical reference frame (see Figure 5).

Figure 5. The Virtual Tricorder: an example of a graphical menu with device-centered placement. This image was taken in 1995.

We can subdivide graphical menus into hand-oriented menus, converted 2D menus, and 3D widgets. Hand-oriented menus fall into two major groups. The first, 1DOF menus, use a circular object on which several items are placed. After initialization, the user can rotate his/her hand about one axis until the desired item on the circular object falls within a selection basket.
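A 1DOF ring menu reduces selection to a single angle. The sketch assumes three things: the items are evenly spaced, item 0 sits in the selection basket when the menu opens, and the ring turns by the same angle as the hand's rotation about the chosen axis. `ring_menu_item` is a hypothetical name.

```python
def ring_menu_item(items, roll_deg, start_roll_deg=0.0):
    """Return the item currently inside the selection basket of a ring menu.

    roll_deg is the hand's current rotation about the menu's axis, and
    start_roll_deg is the rotation recorded when the menu was opened."""
    step = 360.0 / len(items)
    # Rotation since the menu opened, wrapped into [0, 360).
    delta = (roll_deg - start_roll_deg) % 360.0
    # Each item owns a sector of +/- half a step around its own position.
    index = int((delta + step / 2.0) // step) % len(items)
    return items[index]
```

For example, with four items, a quarter turn of the wrist brings the next item into the basket.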

User performance is highly dependent on physical hand and wrist movement, so the primary rotation axis should be chosen carefully. 1DOF menus have been made in several forms, including the ring menu, sundials, spiral menus (a spiral-shaped ring menu), and a rotary tool chooser. The second group of hand-oriented menus is hand-held widgets, in which menus are stored at a body-relative position.

The second group of graphical menus, converted 2D widgets, is the most often applied group of system control interfaces. These widgets basically function the same as in desktop environments, although one often has to deal with more DOFs when selecting an item in a 2D widget. Popular examples are pull-down menus, pop-up menus, flying widgets, toolbars, and sliders.
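One common way to tame the extra DOFs is to cast a ray from the hand onto the menu's plane and reduce the hit point to 2D panel coordinates. From there, the menu behaves much like its desktop counterpart. This approach is an assumption made for illustration, not a method from the article. `ray_to_panel_uv` and `pick_row` are hypothetical helpers for a vertical list menu.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def ray_to_panel_uv(origin, direction, corner, u_axis, v_axis):
    """Intersect a ray with a planar menu panel and return panel (u, v) coords.

    corner is the panel's top-left corner; u_axis (rightwards) and v_axis
    (downwards) are assumed to be orthonormal vectors in the panel plane.
    Returns None if the ray is parallel to the panel or points away from it."""
    normal = cross(u_axis, v_axis)
    denom = dot(normal, direction)
    if abs(denom) < 1e-9:
        return None
    to_corner = tuple(c - o for c, o in zip(corner, origin))
    t = dot(normal, to_corner) / denom
    if t < 0:
        return None
    hit = tuple(o + t * d for o, d in zip(origin, direction))
    rel = tuple(h - c for h, c in zip(hit, corner))
    return dot(rel, u_axis), dot(rel, v_axis)


def pick_row(uv, width, row_height, labels):
    """Map panel coordinates to a row of a vertical 2D menu, or None."""
    if uv is None:
        return None
    u, v = uv
    if not 0.0 <= u <= width or v < 0.0:
        return None
    row = int(v // row_height)
    return labels[row] if row < len(labels) else None
```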

The final group of graphical menus is 3D widgets. In a 3D world, widgets often involve moving system control functionality into the world or onto objects. This can also be thought of as "moving the functionality of a menu onto an object."

A very important issue when using widgets is placement. 3D widgets differ from the previously discussed menu techniques (1DOF and converted 2D menus) in the way the available functions are mapped: most often, the functions are co-located near an object, thereby forming a highly context-sensitive "menu".

Gestures and Postures

When using gestural interaction, we apply a "hand-as-tool" metaphor: the hand literally becomes a tool. The gesture is both the initialization and the issuing of a command.

By gestural interaction, we refer here to gestures and postures, not to the gestural input used with a Tablet PC or interactive whiteboard. There is a significant difference between gestures and postures: postures are static hand configurations (like a pinch), whereas gestures include a change of position and/or orientation of the hand. Sign language is a good example of the use of gestures.
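The posture/gesture distinction can be sketched as a check on a short window of tracked hand samples. If neither position nor orientation changes beyond a tolerance, the input is treated as a posture. Several parts are illustrative assumptions: the thresholds, the single yaw angle standing in for full orientation, and the name `classify_hand_input`. Recognizing which posture is being held (a pinch, say) would additionally require finger data.

```python
import math


def classify_hand_input(samples, pos_tol=0.02, angle_tol_deg=5.0):
    """Label a window of (position, yaw_deg) hand samples.

    Returns "posture" if the hand stays within pos_tol metres and
    angle_tol_deg degrees of the first sample, otherwise "gesture"."""
    start_pos, start_yaw = samples[0]
    for pos, yaw in samples[1:]:
        moved = math.dist(pos, start_pos) > pos_tol
        # Smallest signed angle between the two yaws, in [-180, 180).
        turned = abs((yaw - start_yaw + 180.0) % 360.0 - 180.0) > angle_tol_deg
        if moved or turned:
            return "gesture"
    return "posture"
```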

Gestural interaction can be a very powerful system control technique, and it is also useful for navigation and for selection and manipulation. In fact, gestures have almost limitless potential uses in video games: they can be used to communicate with other players, cast spells in a role-playing game, call pitches or give signs in a baseball game, and issue combination attacks in action games. However, one problem with gestural interaction is that the user needs to learn all the gestures.

Since users normally cannot remember more than about seven gestures (due to the limited capacity of working memory), inexperienced users can have significant problems with gestural interaction, especially when the application is more complex and requires a larger number of gestures.

Users often do not have the luxury of referring to a graphical menu when using gestural interaction; the structure underneath the available gestures is completely invisible. To make gestural interaction easier for less advanced users, strong feedback, like visual cues after a command is initiated, might be needed.

Tools

We can identify two different kinds of tools, namely physical tools and virtual tools. Physical tools are context-sensitive input devices, which are often referred to as props. A prop is a real-world object which is duplicated in the virtual world.

A physical tool might be space-multiplexed (the tool performs only one function) or time-multiplexed (the tool performs multiple functions over time, like a normal desktop mouse). One accesses a physical tool by simply reaching for it, or by changing the mode on the input device itself.

Virtual tools are best exemplified by a toolbelt. Users wear a virtual toolbelt around the waist and access specific functions by grabbing at particular places on the belt, as in the real world. Virtual toolbelts could potentially restructure how items and weapons are stored and accessed in game genres including first- and third-person shooters, role-playing games, and action/adventure games.
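Grabbing at a place on a virtual toolbelt can be sketched by measuring where the hand sits around the waist. Everything about the layout is a hypothetical choice: the slots are evenly spaced, slot 0 is directly in front, and the slots proceed counter-clockwise when viewed from above. The frame is y-up, with the user facing -z at yaw 0. The reach and height tolerances and the name `toolbelt_slot` are also hypothetical.

```python
import math


def toolbelt_slot(hand, waist, body_yaw_deg, slots, reach=0.25, height_tol=0.15):
    """Return the tool in the belt slot the hand is grabbing at, or None.

    body_yaw_deg is the torso's counter-clockwise rotation about the
    vertical axis (viewed from above), so the belt turns with the body."""
    dx, dy, dz = (h - w for h, w in zip(hand, waist))
    if abs(dy) > height_tol or math.hypot(dx, dz) > reach:
        return None  # hand is not near the belt
    # Azimuth of the hand around the body: 0 = front, 90 = user's left.
    azimuth = math.degrees(math.atan2(-dx, -dz)) - body_yaw_deg
    step = 360.0 / len(slots)
    index = int(((azimuth % 360.0) + step / 2.0) // step) % len(slots)
    return slots[index]
```

Because the azimuth is measured relative to the torso, each tool stays at the same place on the body as the player turns, which is what lets players reach for it from memory.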

Sometimes, functions on a toolbelt are accessed via the same principles as graphical menus, where the user has to look at the menu itself. The structure of tools is often not complex: as stated before, physical tools are either dedicated devices for one function, or they give access to several (but not many) functions. Sometimes, a physical tool is the display medium for a graphical menu; in this case, it has to be developed in the same way as graphical menus. Virtual tools often use proprioceptive cues for structuring.

The techniques I have discussed in this article only scratch the surface of what has been done in the virtual reality and 3D user interface research communities over the years. As the video game industry incorporates more and more motion-based interfaces into its games, work done by researchers in this space will become increasingly important.

I hope that the video game industry will take advantage of what we, as academics, have to offer: a plethora of techniques and the lessons learned from using them. Given the popularity of video games, academics from the virtual reality and 3D user interface research areas will continue to explore and develop new interface techniques specifically devoted to games. It is my hope that the game industry and academics can work together to symbiotically push the envelope in game interfaces and gameplay mechanics.

Here is a short reading list for anyone interested in learning about work academics have done with 3D spatial interaction.

Bott, J., Crowley, J., and LaViola, J. "Exploring 3D Gestural Interfaces for Music Creation in Video Games", Proceedings of The Fourth International Conference on the Foundations of Digital Games 2009, 18-25, April 2009.

Bowman, D., Kruijff, E., LaViola, J., and Poupyrev, I. 3D User Interfaces: Theory and Practice, Addison Wesley, July 2004.

Charbonneau, E., Miller, A., Wingrave, C., and LaViola, J. "Understanding Visual Interfaces for the Next Generation of Dance-Based Rhythm Video Games", Proceedings of Sandbox 2009: The Fourth ACM SIGGRAPH Conference on Video Games, 119-126, August 2009.

Chertoff, D., Byers, R., and LaViola, J. "An Exploration of Menu Techniques using a 3D Game Input Device", Proceedings of The Fourth International Conference on the Foundations of Digital Games 2009, 256-263, April 2009.

Wingrave, C., Williamson, B., Varcholik, P., Rose, J., Miller, A., Charbonneau, E., Bott, J., and LaViola, J. "Wii Remote and Beyond: Using Spatially Convenient Devices for 3DUIs", IEEE Computer Graphics and Applications, 30(2):71-85, March/April 2010.
