Featured Blog | This community-written post highlights the best of what the game industry has to offer.

First of a three-part series on combat-focused game balance design, exploring its evolution and showcasing successful case studies.

November 18, 2024

I'm Breno Azevedo, and I've been working professionally in game balance design and development since 2006. This is the first part of a three-part series on combat-focused game balance design, exploring its evolution and showcasing successful case studies to illustrate key concepts and techniques.

As multiplayer gaming revenues continue to grow, recent research reveals that 47% of studios struggle to analyze and respond to the overwhelming volume of player feedback. In mobile games, nearly half of in-app purchases are for virtual currencies, which are deeply integrated into both the in-game economy and core gameplay. This raises a crucial question: how can developers effectively balance these increasingly complex multiplayer ecosystems to deliver the best possible player experience? Let’s shed some light on this topic. It is often seen either as black magic reserved for the initiated or as the end product of years of brute-force trial and error, something we've witnessed even in well-funded triple-A games.

Until 2010, I worked as a contract-based Game Balance Designer for EA Los Angeles' RTS (real-time strategy) games division. In that role, I had the opportunity to lead balance patch development for some of their most renowned titles, such as Lord of the Rings: Battle for Middle Earth I & II, and to take part in the pre-production phases of Command and Conquer 3 and 4. Those were exciting times, and during them I introduced what I called the 'Battletest system'. The system has two stages. First, it gathers feedback from controlled tests involving top players across each of the game's factions. Then, the balance designer combines statistical analysis with good old 'gut feeling' to rapidly iterate on patches and fine-tune these complex games into a state of perceived balance.
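The statistical half of that two-stage loop can be sketched roughly as follows. This is a hypothetical illustration, not the original Battletest tooling. It assumes battletest results are logged as `(winner, loser)` faction pairs, and it flags any faction whose win rate drifts from 50% by more than an arbitrary tolerance. Deciding what to change remains the designer's "gut feeling" step.

```python
from collections import defaultdict


def faction_win_rates(matches):
    """Return each faction's win rate across 1v1 battletest matches.

    `matches` is a list of (winner_faction, loser_faction) tuples (an assumed
    log format). Mirror matches are skipped because they carry no signal
    about balance between factions.
    """
    wins = defaultdict(int)
    games = defaultdict(int)
    for winner, loser in matches:
        if winner == loser:
            continue  # mirror match: ignore
        wins[winner] += 1
        games[winner] += 1
        games[loser] += 1
    return {faction: wins[faction] / games[faction] for faction in games}


def flag_outliers(rates, tolerance=0.05):
    """Return factions whose win rate is more than `tolerance` away from 50%."""
    return sorted(f for f, rate in rates.items() if abs(rate - 0.5) > tolerance)
```

With a handful of made-up results such as `[("Rohan", "Mordor"), ("Rohan", "Isengard"), ("Mordor", "Rohan"), ("Isengard", "Mordor")]`, Rohan ends up at about 67% and Mordor at about 33%, so both are flagged. Real battletest samples are small, so a flag like this prompts a closer look rather than an automatic nerf.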

I began working on Battle for Middle Earth (BfME1) patch 1.03 as a collaborator, with no guarantee it would ever see an official release. EA’s strict review and approval process made patching expensive: around $50k per patch. That was not a small investment for a game that had already launched, especially with another title in development burning through its own budget. There were also concerns within the studio that relying on top players' feedback might produce a gameplay style that alienated lower-skilled players, who formed the base of the player pyramid. I argued that top players were the ones everyone else followed. They set the trends and actually created the optimal strategies, which would naturally trickle down to the broader player population over time.

On gamereplays.org and other public forums, top players began openly praising and promoting the private "battletest" modded versions they had been testing in pre-arranged sessions, which were later released publicly as mods. To our team's surprise, EA embraced the project and decided to turn it into an official patch, something unprecedented at the time. After a month of rigorous back-and-forth with their internal and external QA teams, BfME patch 1.03 was officially released on February 25, 2006, to widespread acclaim. As anticipated, the general player base took months to master the strategies the top players had developed while the patch was being made, which led to an unusually long period of balance stability. And let's be honest: high-skill players tend to be the most vocal and influential members of the community, for good reason. When they praise a game’s balance, it's hard to argue against.

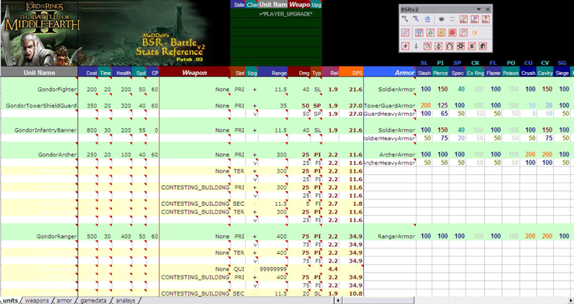

Next came Battle for Middle Earth II (BfME2), which posed an extreme balance challenge. With six unique factions and over 1,600 unique data entries, balancing the game manually was practically impossible. To do it on time and within budget, I developed a specialized tool: the Battle Stats Reference (BSR) automated spreadsheet. Bear in mind that Excel reigned supreme in that era, and there were no cloud-based alternatives. So I taught myself VBA (Visual Basic for Applications) and, within two months, completed version two of BSR, just in time for the actual battletest sessions. Opening the spreadsheet in a modern (2021) version of Excel “kinda works,” but some features, like the original floating control bar, no longer function properly. You can, however, see a screenshot of it in its full original glory below:

[[ BSR v2 Screenshot ]]

BSR managed the multiple "ini" data files used by EA's proprietary SAGE engine through separate, cross-referenced sheets. It could seamlessly import and export game data to and from these sheets without breaking internal data connections. This capability enabled rapid comparison and bulk editing of game parameters, leading to a tenfold increase in productivity.
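BSR itself was written in VBA and is not reproduced here. To illustrate the import, bulk-edit, and export cycle it enabled, here is a minimal Python sketch built on a deliberately simplified, hypothetical INI layout: flat `Object <name> ... End` blocks containing `Key = Value` lines. Real SAGE files nest sub-blocks, which this parser does not handle. The object names and the `BuildCost` field in the usage example are assumptions chosen for illustration.

```python
def parse_objects(text):
    """Parse flat 'Object <name> ... End' blocks into {name: {key: value}}.

    Simplified, hypothetical layout: nested sub-blocks are NOT supported.
    Values are kept as strings so an export writes back what was read.
    """
    objects, current = {}, None
    for raw in text.splitlines():
        line = raw.split(";", 1)[0].strip()  # drop ';' comments and padding
        if not line:
            continue
        if line.startswith("Object "):
            current = {}
            objects[line.split(None, 1)[1]] = current
        elif line == "End":
            current = None
        elif current is not None and "=" in line:
            key, value = (part.strip() for part in line.split("=", 1))
            current[key] = value
    return objects


def scale_field(objects, field, factor, names=None):
    """Bulk edit: multiply a numeric field on all (or the named) objects."""
    for name, fields in objects.items():
        if (names is None or name in names) and field in fields:
            fields[field] = f"{float(fields[field]) * factor:g}"


def export_objects(objects):
    """Write the objects back out in the same simplified layout."""
    lines = []
    for name, fields in objects.items():
        lines.append(f"Object {name}")
        lines.extend(f"    {key} = {value}" for key, value in fields.items())
        lines.append("End")
    return "\n".join(lines) + "\n"
```

For example, `scale_field(objs, "BuildCost", 1.1, names={"RohanRohirrim"})` raises one hypothetical unit's cost by 10% and leaves the others untouched. Exporting and re-parsing then returns the same data, which is the "without breaking internal data connections" property in miniature.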

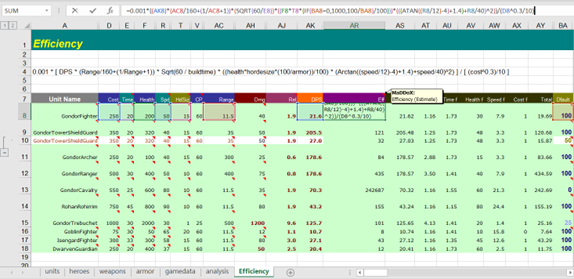

Simply editing and inputting numbers into the game wasn’t enough to achieve an initial, roughly balanced version. I needed a solid (guess what?) strategy. My approach involved running a series of synthetic tests on two networked machines, each fielding a different set of units with roughly the same total cost, and letting them battle it out. I also defined a "base" or reference unit for each class, like the Rohan Rohirrim for cavalry and the Elven Lorien Archer for ranged units. By balancing these base units against each other, I could extend the results to the other units within the same class, provided those units were "internally balanced". Internal balance is achieved among the different units within a single class, and it requires a separate, later balancing pass.

The real challenge was crafting an "efficiency" formula to distill a unit's battlefield effectiveness into a single number. Not only is there no standard formula for this somewhat subjective parameter, but RTS games also feature a wide variety of classes, from swordsmen to pikemen to heroes, with tens or even hundreds of attributes for each unit type. Last but not least, factors like build time, movement speed, and weapon range each carry a different weight in efficiency, depending on the game's economy, map scale, and pace. At the end of the day, as with most complex systems, the key was to observe the synthetic game tests and back-fit an equation to match reality. This led to the creation of this monstrous formula:
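To make the "roughly the same total cost" setup concrete, here is a hypothetical helper that picks unit counts for two single-type armies so their total costs come out as close as possible. It is not BSR's actual logic. The 30-unit cap and the example costs are assumptions for illustration.

```python
def matchup_counts(cost_a, cost_b, max_units=30):
    """Pick unit counts for two single-type armies of roughly equal cost.

    For each count of unit A (1..max_units), pair it with the count of unit B
    whose total cost is closest, then keep the pairing with the smallest
    relative cost gap. Ties go to the smaller army, which is quicker to test.
    Returns (count_a, count_b, relative_gap).
    """
    best = None
    for n_a in range(1, max_units + 1):
        n_b = max(1, round(n_a * cost_a / cost_b))
        if n_b > max_units:
            break  # B's army would exceed the cap from here on
        total_a, total_b = n_a * cost_a, n_b * cost_b
        gap = abs(total_a - total_b) / max(total_a, total_b)
        if best is None or gap < best[2]:
            best = (n_a, n_b, gap)
    return best
```

For example, units costing 300 and 450 pair up as 3 versus 2 (900 each), and units costing 1000 and 350 pair up as 7 versus 20 (7000 each). When no exact match exists within the cap, the smallest relative gap wins, which mirrors the "roughly" in the original setup.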

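$$
\text{Efficiency} = 0.001 \cdot \frac{\mathrm{DPS} \cdot \left(\frac{\mathrm{Range}}{160} + \frac{1}{\mathrm{Range}} + 1\right) \cdot \sqrt{\frac{60}{\mathrm{buildtime}}} \cdot \frac{\mathrm{health} \cdot \mathrm{hordesize} \cdot \left(100/\mathrm{armor}\right)}{100} \cdot \left(\arctan\left(\frac{\mathrm{speed}}{12} - 4\right) + 1.4 + \frac{\mathrm{speed}}{40}\right)^{2}}{\mathrm{cost}^{0.3}/10}
$$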

[[ Efficiency Calculation for BfME 2 ]]
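As a sanity check, the formula can be transcribed into a small function. This is a sketch under two assumptions. First, the squared speed factor is read as the whole sum (arctan(speed/12 − 4) + 1.4 + speed/40), because the original parentheses are unbalanced. Second, Arctan works in radians, as Excel's `ATAN` does. The parameter names are hypothetical, and the units of range and speed are whatever the game data used.

```python
import math


def efficiency(dps, rng, build_time, health, horde_size, armor, speed, cost):
    """Single-number unit efficiency, transcribed from the BfME2 formula.

    Assumes the speed term is squared as a whole and atan() is in radians.
    `rng`, `build_time`, and `armor` must be positive (they are divisors).
    """
    range_term = rng / 160 + 1 / rng + 1
    build_term = math.sqrt(60 / build_time)  # faster builds score higher
    toughness_term = health * horde_size * (100 / armor) / 100
    speed_term = (math.atan(speed / 12 - 4) + 1.4 + speed / 40) ** 2
    cost_term = cost ** 0.3 / 10  # sub-linear cost penalty
    return 0.001 * dps * range_term * build_term * toughness_term * speed_term / cost_term
```

Two properties are easy to read off the code. The score is linear in DPS and horde size. Cost enters only as cost^0.3, so doubling a unit's price cuts its score by about 19% rather than half. The toughness term reduces algebraically to health × horde size / armor.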

The formula didn’t start out that complex, of course. Initially, drawing on my own in-game experience, I prioritized range and speed as the key parameters for efficiency. Gradually, I compiled results from the synthetic tests into a separate chart and refined the formula to match those results as closely as possible, much like fitting a trendline in a spreadsheet. Once the formula was reasonably aligned with the ninety-or-so base unit test results, I compiled the first patch version and shared it with our VIP testers. The formula continued to evolve based on feedback from those incredibly high-skill matches. Remember, tournaments weren’t as common back then, so top players rarely faced each other. Their main goal was climbing the quickmatch ranks while avoiding tough matches that could hurt their ratings. Still, after venting for so long about the weaknesses and imbalances of their favorite factions, these players were eager to give their all with a new, improved, and (temporarily) exclusive patch. I even created the "Palantir" series of videos to showcase some of the best moments from those intense, close games. You can watch one of them here:
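The "trendline" step is curve fitting. The article describes refining the formula by hand against charted results. As a minimal sketch of the same idea, assume one constant is isolated at a time: the hypothetical helper below grid-searches the range divisor (160 in the final formula) against measured strength factors. The observation data is synthetic, and a least-squares solver would scale better once several constants are fitted together.

```python
def range_term(rng, divisor):
    """Range factor from the efficiency formula, with its divisor left free."""
    return rng / divisor + 1 / rng + 1


def fit_divisor(observations, candidates):
    """Return (divisor, squared_error) that best matches the observations.

    `observations` pairs a unit's weapon range with a measured strength
    factor (hypothetical data from equal-cost tests with other stats held
    equal). Each candidate divisor is scored by its sum of squared errors.
    """
    best = None
    for divisor in candidates:
        err = sum((range_term(r, divisor) - measured) ** 2 for r, measured in observations)
        if best is None or err < best[1]:
            best = (divisor, err)
    return best
```

Feeding it observations generated with a divisor of 160 and searching `range(100, 301, 10)` recovers 160 with zero error. With real, noisy test data, the best candidate simply minimizes the error, just as a trendline does.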

[[ BFME Patch 1.03 - "Palantir" Episode III ]]

BfME 1.03 and BfME2 1.05 were met with an enormously positive reception and were the last official patches released for those games. The community was so pleased that many players argued no further balance patches were needed. That's a testament to the incredible work done with the help of a dedicated group of players from around the world. Many of them leveraged their well-deserved recognition to kickstart careers in the games industry, such as HERO (Larry King), prepare (Jan Richter), and James Fielding. I also want to give a special shoutout to Aaron Kaufman, "the" community manager, who championed the community leads within the studio and made all of this possible. And yes, he even flew us out to Los Angeles for some unforgettable "in-person battletests"! Amazing times.

[[ One of the many in-person battletest teams, this one for C&C4 ]]

In the next article, we’ll explore how real-time combat game balance design evolved throughout the late 2010s and how cloud-based solutions literally changed the game. The third and final installment will delve into how F2P mobile gaming introduced yet another layer of complexity to this critical and ever-evolving aspect of game design. Comments and suggestions are always welcome, of course. Cheers!
