Unity’s Visual Effect Graph system can be a powerful tool. But if you plan to use it for spawning a large number of effects at the same time, performance quickly becomes an issue.

Say, for instance, you want to use Visual Effect Graph for bullet impacts. If two bullet impacts need to be created on the same frame, normally you would spawn two separate impacts, each contained within a game object.

Creating multiple instances of the effect over different game objects is inefficient, and there is a better way to handle spawning multiple systems at the same time.

We could update the system’s position and then trigger an event that creates a new particle system. This works but only for a single particle system per frame.

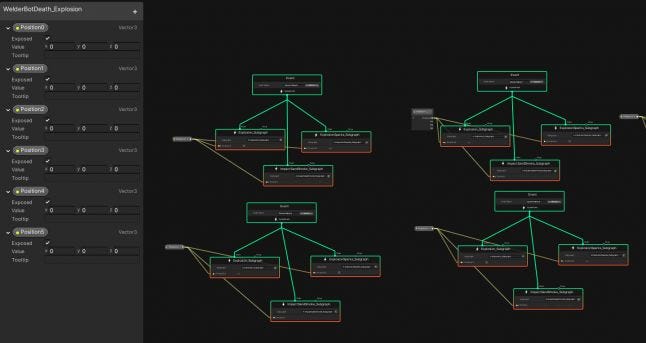

One way to get around this would be to make an array of systems inside the same Visual Effect Graph, but that would require managing a separate event trigger and positional data variable for each system. It works, but it is cumbersome to maintain.

Visual Effect Graph does not accept an array or list of positions as input, so positional data can only be uploaded one position per variable. So we look to the other allowed inputs to see if there is another way to get the positional data into the graph.

Visual Effect Graph DOES allow 2D texture maps as input, and there is a way to convert a list of 3D positional data into a 2D texture map. This way the positional data can be loaded into the graph and used to spawn multiple particle effect systems at the same time.

To do this we need a way to convert this data into a 2D texture map. This can be done with the Property Binder component.
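As a rough, language-neutral illustration of that conversion (written in Python rather than Unity C#, and not the author's or Unity's actual implementation), each position can be stored as one pixel of a one-row texture, with x, y and z in the red, green and blue channels. The names `pack_positions` and `impacts`, and the unused alpha value of 1.0, are assumptions made for this sketch.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Pixel = Tuple[float, float, float, float]  # RGBA channels


def pack_positions(positions: Sequence[Vec3]) -> Tuple[List[Pixel], int]:
    """Pack 3D positions into a one-row RGBA pixel buffer.

    Pixel i holds position i as (R=x, G=y, B=z). Alpha is unused here
    and set to 1.0 (an assumption of this sketch).
    Returns the pixel row and the number of valid entries.
    """
    pixels = [(x, y, z, 1.0) for (x, y, z) in positions]
    return pixels, len(pixels)


# Two hypothetical bullet impacts that happen on the same frame.
impacts = [(0.0, 1.0, 2.0), (-3.5, 0.25, 8.0)]
pixels, count = pack_positions(impacts)
```

In Unity the pixel buffer would presumably need a floating-point texture format so that coordinates outside the 0 to 1 range are preserved. The count travels alongside the texture so the graph knows how many pixels hold real data.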

From the Unity Visual Effect Graph manual: “Property Binders are C# Behaviors you can attach to a GameObject with a Visual Effect Component: these behaviors enable making connections between scene or gameplay values and Exposed Properties for this Visual Effect instance.”

Property binders have a bunch of built-in data types you can use, and it’s pretty easy to create your own, which lets you define the data that is available to the Visual Effect instance.

The Visual Effect Graph package comes with the following built-in property binders:

- Audio
  - Audio Spectrum to AttributeMap: Bakes the Audio Spectrum to an AttributeMap and binds it to Texture2D and uint Count properties
- GameObject
  - Enabled: Binds the Enabled flag of a Game Object to a bool property
- Point Cache
  - Hierarchy to AttributeMap: Binds positions and target positions of a hierarchy of transforms to Texture2D AttributeMaps and a uint Count
  - Multiple Position Binder: Binds positions of a list of transforms to a Texture2D AttributeMap and a uint Count
- Input
  - Axis: Binds the float value of an Input Axis to a float property
  - Button: Binds the bool value of a button press state to a bool property
  - Key: Binds the bool value of a keyboard key press state to a bool property
  - Mouse: Binds the general values of a mouse (Position, Velocity, Clicks) to exposed properties
  - Touch: Binds the input values of a Touch Input (Position, Velocity) to exposed properties
- Utility
  - Light: Binds Light Properties (Color, Brightness, Radius) to exposed properties
  - Plane: Binds Plane Properties (Position, Normal) to exposed properties
  - Terrain: Binds Terrain Properties (Size, Height Map) to exposed properties
- Transform
  - Position: Binds game object position to a vector exposed property
  - Position (previous): Binds previous game object position to a vector exposed property
  - Transform: Binds game object transform to a transform exposed property
  - Velocity: Binds game object velocity to a vector exposed property
- Physics
  - Raycast: Performs a Physics Raycast and binds its result values (hasHit, Position, Normal) to exposed properties
- Collider
  - Sphere: Binds properties of a Sphere Collider to a Sphere exposed property
- UI
  - Dropdown: Binds the index of a Dropdown to a uint exposed property
  - Slider: Binds the value of a float slider to a float exposed property
  - Toggle: Binds the bool value of a toggle to a bool exposed property

In this case, we are interested in the Point Cache built-in property binder. You will find this in the dropdown like so:

The two fields need to be set to exposed properties of the Visual Effect Graph attached to the same game object. They will be automatically populated for you.

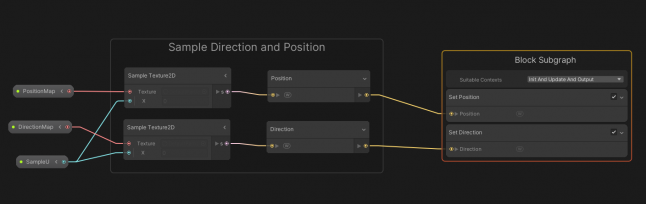

Next, you just need to convert that data inside the Visual Effect Graph back into positional data that can be used to spawn independent Visual Effect systems.

This is done by a simple sub-graph shown below:

This will take the texture map data and convert it into positional and directional data. The data can be referenced in the VFX Graph by using the SampleU variable.
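A minimal sketch of the lookup side, again in Python for illustration only and not a reproduction of the sub-graph itself. It assumes a one-row texture with one position per pixel, and it assumes the sample coordinate is a normalized value in the 0 to 1 range aimed at the center of a pixel. `sample_u` and `sample_position` are hypothetical helper names, and only the positional part is shown.

```python
from typing import Sequence, Tuple

Pixel = Tuple[float, float, float, float]  # RGBA channels


def sample_u(index: int, count: int) -> float:
    """Normalized horizontal coordinate of the center of pixel `index`."""
    return (index + 0.5) / count


def sample_position(pixels: Sequence[Pixel], u: float) -> Tuple[float, float, float]:
    """Point-sample a one-row texture at u and read RGB back as (x, y, z)."""
    # Clamp so that u == 1.0 still maps to the last pixel.
    i = min(int(u * len(pixels)), len(pixels) - 1)
    r, g, b, _alpha = pixels[i]
    return (r, g, b)


# A one-row texture holding three hypothetical impact positions.
row = [(0.0, 1.0, 2.0, 1.0), (-3.5, 0.25, 8.0, 1.0), (4.0, 0.0, -1.0, 1.0)]

# Each spawned system uses its own index to recover its own position.
recovered = [sample_position(row, sample_u(i, len(row))) for i in range(len(row))]
```

Sampling at the pixel center rather than at its edge keeps each lookup on a single texel, which matters if the texture is filtered.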

Now that the data is in the graph, we can use it to spawn each system individually. Just add the block that we made to convert the data back to positional and rotational values:

This is powerful because now the effects are all drawn in the same draw call. And because they are created in Visual Effect Graph, it is all done on the GPU.

Doing this allowed the creation of tens of thousands of particle systems with very little effect on the frame rate!

By Jake Jameson

Tags: big rook games, Component, Entities, Entity, Entity Component System, game development, gamedev, havok physics, indiegamedev, point cache, property binder, property binder component, raycast, System, Unity DOTS, Unity ECS, Unity Gamedev, Unity Shader Graph, Unity Visual Effects Graph, Unity.Physics, Unity.Physics raycast, visual effect graph
