Historians reflect on the game industry's bumpy track record of accurately crediting developers, and make a case for why standardizing game credits is critical if we want to understand the industry.

You'd think that game credits would be simple.

It's just a list of names and roles, after all. How hard can that be to get right?

But credits are rarely simple, because neither is game development. And yet credits are an invaluable, underappreciated aspect of game making.

They're our best — and often only — record of the human labor that goes into game development, serving not only as a reminder that games are made by people — sometimes lots of them — but also as a tool for developers to advance their careers.

For studios, crediting can be a tool for leverage; amid the recent furor over Rockstar's bad labor practices, for example, we were reminded that the studio has long maintained a policy of not crediting people who worked on a game unless they were present when it shipped, to encourage the team "to get to the finish line."

For historians and journalists, meanwhile, they're a way to begin to peel back the layers and uncover the stories of the people and companies behind the games.

Despite their importance, however, it's not unusual for the credits published with games to be inaccurate, incomplete, overly vague, or even (on rare occasions) downright misleading. This is a problem with many causes, but one of the big reasons, Fun Bits CEO Chris Millar told me in an interview earlier this year, is simply that credits in games aren't standardized.

"While they're much better than they used to be we're still not anywhere near the movie industry," he said, "in terms of giving people credit for all of their work on creative endeavors."

Indeed, the IGDA published the last version of its crediting guidelines back in 2014, after multiple high-profile instances of bad crediting in the decade before (including an entire team of 55 people being wiped off the credits for Manhunt 2) and a years-long discussion about the importance of credit standards. But those guidelines are hard to find, and with no union agreements in place, they're for publishers to follow (or not) at their own discretion, provided they're even aware that the guidelines exist.

"[Atari not crediting game makers] was an attempt to dis-empower designers by removing the bargaining power associated with explicit authorship."

In order to get a proper understanding of how credits can help, hinder, contort, and otherwise affect games history and archiving, and to start to puzzle out how much of a difference credits standardization would actually make, I asked four historians and a few developers about the issue. Their stories reveal a complex relationship between labor, authorship, ownership, and recognition in game development throughout the history of the medium — and no doubt long into the future.

"The fact that credits exist in games reflects human concerns about authorship and ownership with regard to creative production," notes Laine Nooney, an assistant professor of media industries at NYU Steinhardt who has spent years researching and writing about the history of Sierra On-Line. The role of credits is to provide a factual record of this creative production but, as Nooney argues, they are also political.

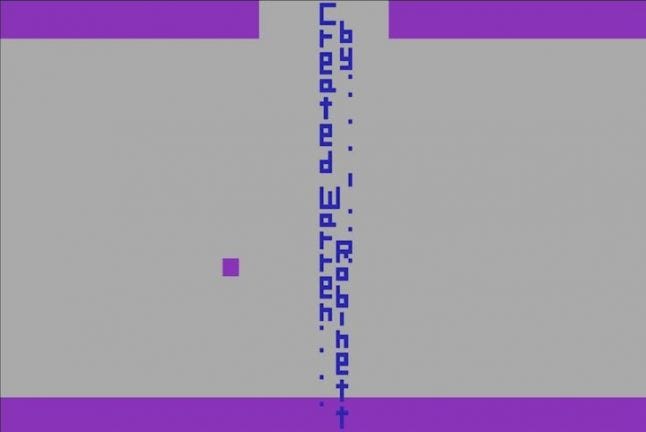

"When Atari management made it policy to not list designers' names on their games, this was an attempt to dis-empower designers by removing the bargaining power associated with explicit authorship," she explains. It backfired. Warren Robinett hid his name in a secret room in 1978 Atari VCS game Adventure, and five other star programmers soon left in protest of the policy to start Activision — ironically taking power away from Atari as a result.

Warren Robinett's famous hidden credit room, tucked away in Adventure

Games historian Jimmy Maher, who runs the Digital Antiquarian blog, points to other examples: "Radio Shack was also notorious for refusing to credit the people who made the TRS-80 games they carried in their stores," he says. "Even at a progressive publisher like Infocom, there was a lot of debate over whether and to what extent the authors of the games should be highlighted, as opposed to the Infocom brand and the so-called 'matrix' of genres and difficulty levels."

Some, Maher explains, thought their names should be on the box. Others "really couldn't care less, and just wanted to make the Infocom brand successful."

The historical relationship between credits and branding gets more intriguing as you dig deeper. MicroProse head Wild Bill Stealey — acting on a comment by the late comedian/actor Robin Williams about the games industry lacking recognizable stars — was responsible for Sid Meier's name becoming a branding tool. The Sid Meier's prefix soon came to decorate not only the titles of games that the Civilization designer led creatively but also the ones that he barely more than consulted on.

Maher adds that Origin's Worlds of Ultima: The Savage Empire similarly listed Richard Garriott in the credits as an executive producer despite his "having absolutely nothing to do with the game that I could discern." (And meanwhile Warren Spector was left off the credits despite reportedly creating the concept, setting, and plot outline for the game.)

Credits can be as much a reflection of internal politics as they are of actual project and company roles. While this gives historians interesting threads to explore, they must first become aware of which names are omitted or included because of politics.

This can result in history vanishing, as in the case of Arthur Abraham, who developed the prototype scripting language and game logic for Sierra's King's Quest and what would become the Adventure Game Interpreter (AGI) engine. "Abraham was fired part way through the development of King's Quest," explains Nooney, "and his name was left out of the credits of every King's Quest port (with the exception of the Apple IIe version), as well as every future Sierra release that used AGI."

Because of this, it took several interviews and extensive archival research, spread across several years, for Nooney to discern that Abraham was a key figure in AGI's development. "He died in prison before I could make an attempt to contact him," she continues. "Had I known at the start that he was foundational to AGI, I might have been able to correspond with him earlier and shed some light on the development of King's Quest — a game which is shrouded in misinformation about its development."

Many publishers have (or had) set policies of not crediting developers for their role on a project if they leave before it ships. I learned while conducting interviews for an Assassin's Creed oral history at Polygon, for instance, that several people who appeared in the credits under the title "additional" were in fact core team members who left before the game's four-year development concluded. StarCraft's original designer Ron Millar was similarly relegated to "special thanks" in the game's credits when he left to join Activision (which ironically now owns the entire company) while the game was in testing.

Sometimes entire studios go uncredited for their work on a game. Games journalist and author of the Untold History of Japanese Game Developers book series John Szczepaniak notes that Namco does not allow anybody in Japan to disclose the names of staff who worked on any of its games. (Szczepaniak, however, has nothing preventing him from sharing those names outside Japan, and as such he has obtained a spreadsheet listing credits for the Pac-Land arcade game.) The original Castlevania was likewise published without credits, he adds. After extensive investigation, the best Szczepaniak and his colleagues can gather is that the main creator was Hitoshi Akamatsu — who they've been unable to contact.

Meanwhile, the practice of "white label" outsourcing, whereby companies are contractually bound to keep quiet about their work on a game, has been around for decades. One Japanese studio, TOSE, reportedly works on 30 to 50 games per year and only receives credit for a handful of those (curiously, this only happens at the request of their clients, as TOSE has business reasons of its own to want to stay anonymous).

A clip of the Castlevania credits

Szczepaniak, who wrote about this world of "ghost developers" like TOSE for The Escapist back in 2006, believes there should be some sort of international regulatory body preventing this from happening. "Every staff member should be credited for their work," he argues.

Even tiny indie and amateur games can wind up with names omitted entirely. "For small independent games, like fangames or freeware, one of the most difficult things is a total lack of credits," says Phil Salvador, a librarian and digital archivist who runs a blog about little-known and forgotten games called The Obscuritory.

"Sometimes developers will only go by a pseudonym or a company name, or they'll intentionally leave their name off. That's an understandable problem without much of a fix. Not everyone wants to use their real name on all their work or to be associated with a weird game they threw together when they were 14."

But when they do this — whether we're talking commercial efforts made by professionals or non-commercial games by amateurs and hobbyists — they also cause a huge headache for historians, who might want to learn more about how/when/why a game was made or to build up a more complete catalog of games released. "Even minimal credits can be helpful for asking around and starting the research process," notes Salvador. "With the companies often gone and their records presumably lost, anyone listed in a game's credits is a potentially helpful source."

If it seems like a tough challenge to use credits as a jumping-off point to uncovering more of the history behind Western-developed games, spare a thought for the people digging into the Japanese industry. "You cannot even begin to imagine the Herculean task of disentangling Japanese credit listings," says Szczepaniak. "And once you find a thread and follow it down the rabbit hole, you just bring up more questions than answers."

"Naoto Ohshima said Sega wouldn't allow staff to attribute their real name since it meant Sega had a stronger hold over the rights to any work created in-house."

Like the other historians interviewed for this article (and in my anecdotal experience, nearly everyone else), Szczepaniak uses MobyGames as a key reference guide for checking game and individual developer credits. He says its quick cross-referencing capabilities are invaluable for research, and it's been making great strides with both listing kanji for Japanese names and disentangling different people with the same name.

But it's an incredibly complex problem. Any fully accurate staff crediting system for Japanese games, Szczepaniak argues, needs to support native kanji, phonetic hiragana and furigana (phonetic symbols that appear above kanji), and correct romanizations of these symbols. It also needs a means of differentiating between first and family names (in his book, Szczepaniak chose to put surnames in ALL CAPS), because Japanese convention puts the surname first whereas Western convention puts it last, and neither culture is always consistent.

Szczepaniak points to Naoto Ohshima as an example of problems with naming conventions. "There's actually three people with the same phonetically pronounced name, all in the games industry, who all worked on different series at different companies," he explains. "The Sega guy [who designed Sonic], another at ASCII who worked on the Wizardry series, and a graphics guy at Konami who worked on Silent Hill. And for a very long time a lot of websites mixed up the Sega and Konami, thinking they were the same person."

Even Sega-16, one of the leading sources on all things Sega, made this mistake. This then had knock-on consequences: the misattribution spread to Wikipedia and then across the Internet.

"This misattribution is due to lack of consistency with regards to listing kanji for non-English names," says Szczepaniak. "All three men have the same phonetic name, 'Naoto Ohshima,' but the OHSHIMA part uses different kanji for each of them — that is, different Japanese symbols, which have different pictographic meanings, but all sound exactly the same."

It gets more confusing. "This problem can also be inverted, with different people having exactly the same kanji symbols, but in each case using a different phonetic pronunciation," says Szczepaniak. Shigeru Miyamoto, for instance, was miscredited as Shigeru Miyahon in early NES games. And Szczepaniak adds that even Japanese people find this pronunciation issue confusing — to the point where many business cards use hiragana to explicitly state the pronunciation of someone's name, and at least one developer, Masatoshi Mitori of Human Entertainment, asks that the kanji for his surname not be listed because his family name uses archaic symbols that nobody recognizes.
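To make the two collision cases concrete, here is a minimal sketch of the kind of name record Szczepaniak describes. It is not how MobyGames or any real database stores credits. The `CreditedName` class, its field names, and the `group_by` helper are all assumptions made for illustration, and the four people listed are hypothetical rather than any of the developers named in this article.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class CreditedName:
    """One person's name as a credits database might store it (hypothetical schema)."""
    family_kanji: str
    given_kanji: str
    family_kana: str    # phonetic reading of the family name
    given_kana: str     # phonetic reading of the given name
    family_romaji: str
    given_romaji: str

    def display(self, surname_first: bool = True) -> str:
        """Romanized name with the surname in ALL CAPS, in either order."""
        family = self.family_romaji.upper()
        if surname_first:
            return f"{family} {self.given_romaji}"
        return f"{self.given_romaji} {family}"


def group_by(names, key):
    """Group records by key(record); keep only keys shared by two or more distinct records."""
    groups = defaultdict(set)
    for name in names:
        groups[key(name)].add(name)
    return {k: v for k, v in groups.items() if len(v) > 1}


def romaji_key(n):
    return (n.family_romaji.lower(), n.given_romaji.lower())


def kanji_key(n):
    return (n.family_kanji, n.given_kanji)


# Hypothetical people, chosen only to show both kinds of collision.
names = [
    CreditedName("大島", "太郎", "おおしま", "たろう", "Oshima", "Taro"),
    CreditedName("大嶋", "太郎", "おおしま", "たろう", "Oshima", "Taro"),
    CreditedName("東", "花子", "ひがし", "はなこ", "Higashi", "Hanako"),
    CreditedName("東", "花子", "あずま", "はなこ", "Azuma", "Hanako"),
]

same_sound = group_by(names, romaji_key)  # same romanization, different kanji
same_kanji = group_by(names, kanji_key)   # same kanji, different reading

for record in names:
    print(record.display(), "/", record.display(surname_first=False))
```

In this sketch, a lookup on the romanized name alone merges the first two people, and a lookup on kanji alone merges the last two. Only a record that keeps the kanji and the phonetic reading together tells all four apart.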

Then you have the lack of gendered pronouns in Japanese conversation, which means interviews that mention a colleague named "Suzuki-san" could be referring to a man or a woman with the surname Suzuki, and if it's an archived interview then you can't necessarily just ask for clarification. As if that weren't enough complexity, Japanese credits have also sometimes used nicknames in lieu of real names.

Szczepaniak explains that this was not always a case of programmers trying to be cool. Sometimes the publisher ordered it. "Sega was especially notorious for this, and Tecmo as well," he says. "The reason was to 'prevent headhunting,' since companies were terrified that skilled programmers would be snatched up by rivals, and also to prevent later copyright claims for work they had done. Naoto Ohshima said Sega wouldn't allow staff to attribute their real name since it meant Sega had a stronger hold over the rights to any work created in-house."

The reality is that credits, even as a snapshot, could never properly encapsulate the messiness of games history — the complicated power dynamics that form within companies and teams and between individuals, as well as the collaborative nature of the medium and the vast formal and informal support structures that lie beneath each company and project.

Roles are often fluid or informal. One person might start out on programming but finish as a writer or composer, or something else. When I was researching my book, The Secret History of Mac Gaming, I learned that the final design of the very first Mac game, Alice aka Through the Looking Glass, owed as much to the informal requests and complaints of Macintosh marketing rep Joanna Hoffman (who was the best player in the office) as to the work of its creator Steve Capps.

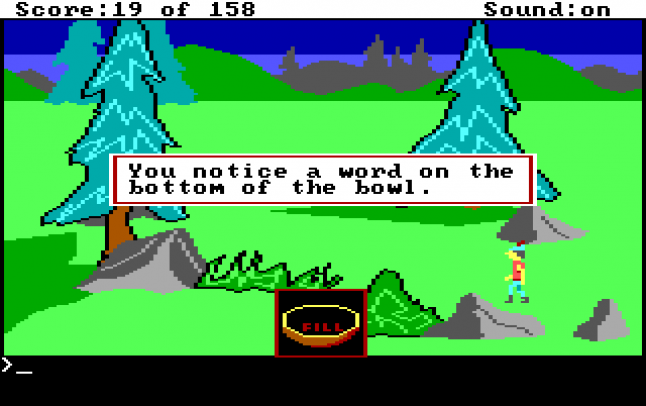

Similarly, Salvador gives an example where the de facto director of 1994 game Millennium Auction "was actually the company's vice president of business development, and they only received special thanks in the credits."

Millennium Auction in action

Nooney says that informal cross-pollination of roles was common at Sierra, too, whereby people with specific titles pitched in with work on other aspects of a game but weren't credited for that additional labor due to interpersonal politics.

This can go both ways. People might get a "thanks" credit for non-development labor, or perhaps benefit from a role title that oversells their actual contribution, then try to leverage that to get ahead in their career. Veteran developer Noah Falstein has come across the full spectrum of crediting issues during his 30-plus years in games, and he says he even once received a resumé from someone who said they'd worked on Sinistar — an arcade game project led and co-designed by Falstein.

"I didn't recognize his name," says Falstein. "I asked others I knew who had been at the company at the time, and it turned out he had helped load the games onto trucks, so technically it was correct, but had nothing to do with the role he was applying for."

The sad truth of the matter, as Maher says, is that because of their inconsistencies and lack of standardization across the breadth of games history, credits must be looked at skeptically. They are a wonderful resource, no matter what, but their failures to properly document the history of labor perhaps reveal a need for something more than just credits as a high-level document record.

"It would certainly be interesting, and helpful for future historians, for companies to credit the entirety of their staff," says Nooney. "But I think a more provocative way to think about this issue is to recognize the limitations of the 'authorship model' as a basis for historical research on games. What else is worth knowing about the game industry beside who worked on a game?"

For Nooney — and indeed for anyone else doing macro-level histories of the different parts of the industry — internal organizational charts are often more valuable than credits because they provide insight into company-wide power relations. More valuable still, she says, is documentation of large corporate or economic events such as mergers, buyouts, layoffs, key hiring decisions that trigger internal re-organization, stock market crashes, and IPOs.

"We tend to miss this critical historical phenomena when we look at the game as our primary source of knowledge about the industry," she concludes.

In short: Credits matter, and we need to get them right, but if we want to have a good understanding of the history of this medium, and the industries built around it, they're actually just the tip of the iceberg. We need to do better, across the board, at documenting how we make and sell games.
