This Dance Central postmortem was first published in Game Developer Magazine, January 2011. It has been republished here for the first time in November 2024.

Over 5 years ago, Harmonix developers were brainstorming about all the great ways that people interact with music and wondering how we might transform those interactions into authentic gameplay experiences. Dancing, with its visceral connection to rhythm, was the most potent and promising new idea. We knew that some day the time and tech would be right for us to make a fully immersive, authentic dance game. The opportunity to offer non-dancers the same type of approachable experience that exposed millions of non-musicians to the joy of rock music was a huge incentive to make this dream a reality. Serendipity struck when Microsoft showed Kinect to Harmonix, providing us with the perfect opportunity to develop an authentic dance game for a population fascinated with dancing shows and itching to get off the couch and join in.

For the dev team, this was the perfect opportunity to break from the Rock Band paradigm and craft a new universe of characters and venues. We were confident that the controller-free, body tracking, and fully immersive capabilities of Kinect made it the right technology for our game. Further, the opportunity to work with choreographers and dancers on a daily basis injected a new energy into an experienced and seasoned team. However, like any team working on a new IP, we made mistakes along the way, many of which were important learning experiences for us. The following is a selection of our most notable successes and missteps.

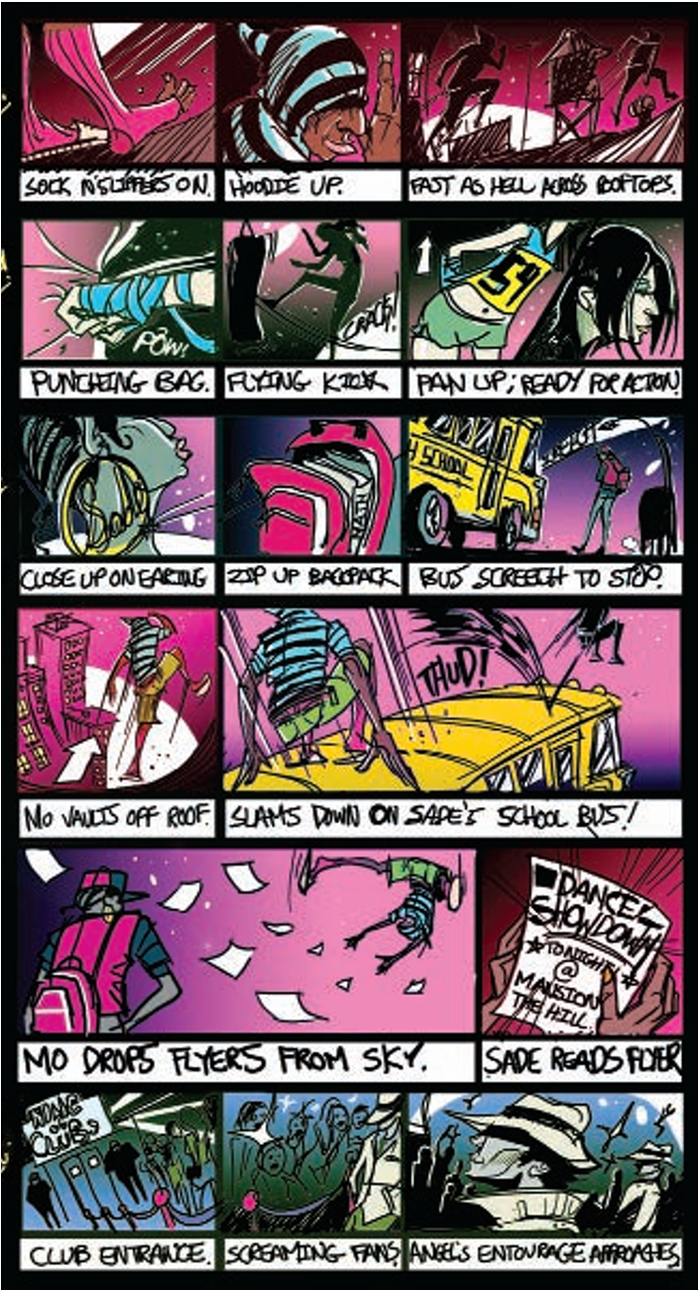

Image via Game Developer Magazine, January 2011.

Dance Central’s design started with a lofty aim—to create a game that will teach players real dance moves. This goal served as a compass during early prototyping, leading the team toward designs that gave prominence to dancing above all else. We utilized the instantly recognizable choreography from Soulja Boy’s “Crank Dat” as a litmus test for potential mechanics, throwing out a number of pose matching and gem hitting prototypes when they didn’t stand up to the challenge of communicating moves like the “Lean n’ Rock” or the “Supaman.” After a few months and several iterations, we settled on our move names and flashcards approach, which handily communicated the entirety of “Crank Dat.”

Although we had identified core gameplay mechanics that would teach real dance, we were still unsure of the makeup of our development team. In working on the Rock Band series, we’ve always considered our staff’s intimate knowledge of rock music a vital element of the franchise. Our developers’ experience touring, playing, and writing for their bands imbues Rock Band with an authenticity that we believe sets it apart from other music games. In order to inject the same type of authenticity into a dance game, we felt we had to assemble a team with a love for hip-hop, pop, and, most of all, dance.

At the dawn of the development of Dance Central, we held companywide tryouts to determine who would mocap the first prototype routine. We asked each prospective choreographer to both dance the routine to “Crank Dat” and develop a routine of his or her own design. While most Harmonix developers ran away screaming, a handful of Harmonix artists proved to be outstanding dancers and were promptly sent to mocap the first routines. This choreography proved essential during prototyping, but we quickly realized it would be critical to add professional dancers to the staff, both to create original professional-level choreography for the game and to teach the rest of the team how to dance.

We recruited local choreographers, eventually hiring Marcos Aguirre and Francisca “Frenchy” Hernandez as our internal choreography team. Marcos and Frenchy occupied various roles during development: creating choreography for game levels, holding dance classes for the team, and consulting on everything from voice over to song list. Their knowledge and excitement were a huge boost for the team. Through the team-wide dance classes, our choreographers imparted vital lessons about dance instruction, while exposing the team to the music, language, and culture of hip-hop dance.

As we tasked our choreographers with developing the first routines for the game, we refrained from imposing gameplay-driven choreographic restrictions so we could better understand what made up an authentic routine. This somewhat open approach was risky, but led to a refined focus on the game’s core mission, as we shaped our mechanics around actual dances rather than limiting or shoehorning routines into a rigid structure.

Given the complexity of the choreographed routines, it became clear that Dance Central would require an in-depth learning mode where players could spend hours learning more complicated combinations. We set out to create an experience that was not only effective at delivering instruction, but also fun to play. We knew that if it weren’t fun, no one would play it, and therefore no one would learn.

Our first step in designing what is now known as “Break it Down” was to observe Marcos and Frenchy. Their weekly dance lessons provided insight into how people master dance moves and routines. By observing their natural method of instruction, we narrowed the possibility space for BiD’s design, quickly discarding less fruitful ideas. We recognized that our play structure should emulate their teaching style, building on established elements such as the use of “recaps,” “slow-downs” (allowing the player to choose to slow down the action to give them a better look at the current move), and “verb barks” (short bursts of VO that step players through new moves, such as “Cross! Back! Step! Together!”). These elements formed the foundation of the shipping version of BiD and were critical to its success.

The same cross-discipline team responsible for developing BiD was also responsible for developing all other key gameplay features. This could have been an unmanageable amount of work, but we constructed a development process to mitigate the impact of the numerous iterations BiD required. Instead of continuous iteration, the development team worked with our internal playtesting department in a leapfrog fashion. A few key BiD features would be implemented, and while awaiting playtest feedback, the team would switch to development on something unrelated to BiD. Once playtest results came back, the team would refocus on BiD, with iteration driven by the playtest results. Other new non-BiD features, implemented in the meantime, were then sent to playtest. This alternating cycle continued throughout development.

We’re very satisfied with the final design of Break it Down. Its dynamic and detailed instruction methodology is unique to Dance Central, and is a critical component of the overall experience we wanted to construct. While there is plenty of room for improvement, the mode’s variable pacing successfully pairs game-like motivation with effective teaching methods. This would not have been possible without the inspiration provided by the choreographers paired with an effective process of playtest-driven iteration.

Kinect doesn’t just enable new ways of playing games; it also demands new approaches to making games. At the outset of development, it was immediately clear that our existing workspaces lacked the physical space for developers to easily play and test the game. After reorganizing our office layout, we were able to give everyone on the team the room they needed to stand up, get down, and move around.

Next, we realized that it was difficult for coders, designers, and artists to maintain an efficient workflow if they frequently needed to stand up, wait for their skeleton to be tracked, and then sit back down at their workstation. Our first solution was decidedly low-tech: Because Kinect is also capable of tracking skeletons for non-human dummies, we constructed makeshift mannequins to stand in for real human developers. Our UI coder even went as far as building a functional mannequin with a movable right arm for testing our “swipe” shell gesture (it became affectionately known as “Swipey”).

Of course, a mannequin can’t perform a dance routine, so testing efficiency remained an issue for many on the team. To remedy this, we made an important investment in a recording and playback system for Kinect’s skeletal data. We developed a custom solution within our internal engine, making it easy for anyone on the team to record, play, loop, and analyze clips of skeletal data. We used our graphics engine to create debug visualizations, using geometric shapes and color. Because each recording was stored within our standard object files, it was straightforward to organize the hundreds of recordings into a reasonable database-like structure by annotating each clip with metadata.

Our recording tools proved invaluable for coders, artists, and designers working on any system reliant on skeletal data, including our dance detection, shell navigation, and freestyle “visualizer.”
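The record, play, loop, and annotate workflow described above can be illustrated with a minimal Python sketch. This is not Harmonix's engine code: `SkeletonClip`, `find_clips`, the joint names, and the metadata keys are all hypothetical, and a frame is assumed to be a simple mapping from joint name to an (x, y, z) position.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterator, List, Tuple

# Assumption: a joint is an (x, y, z) position and a frame maps joint names to joints.
Joint = Tuple[float, float, float]
Frame = Dict[str, Joint]


@dataclass
class SkeletonClip:
    """A recorded run of skeleton frames plus free-form metadata (hypothetical type)."""
    frames: List[Frame] = field(default_factory=list)
    metadata: Dict[str, str] = field(default_factory=dict)

    def record(self, frame: Frame) -> None:
        # Store a copy so later changes to the live frame do not alter the clip.
        self.frames.append(dict(frame))

    def play(self, loops: int = 1) -> Iterator[Frame]:
        # Yield every frame in order, repeating the whole clip `loops` times.
        for _ in range(loops):
            for frame in self.frames:
                yield frame


def find_clips(clips: List[SkeletonClip], **tags: str) -> List[SkeletonClip]:
    """Return the clips whose metadata matches every given tag."""
    return [c for c in clips
            if all(c.metadata.get(key) == value for key, value in tags.items())]


# Usage: record two frames, tag the clip, then loop it and query by tag.
clip = SkeletonClip(metadata={"song": "Crank Dat", "dancer": "tester_a"})
clip.record({"hand_right": (0.1, 1.2, 2.0)})
clip.record({"hand_right": (0.2, 1.3, 2.0)})
looped = list(clip.play(loops=2))
matches = find_clips([clip], song="Crank Dat")
```

Keeping the metadata beside the frames is what makes a simple tag query enough to act as the "database-like structure" mentioned above. A real system would also need timestamps and a serialization format, which are omitted here.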

As a Kinect launch title, we were faced with the challenge of building a game for a completely new input device while that device itself was still in development. The challenges were similar to developing for a new console, but in some ways the process was even more fluid as the Kinect software platform evolved alongside the hardware.

Given Dance Central’s reliance on dance detection, Kinect’s skeleton tracking was the key technology we needed to harness. Preliminary versions of the tracking pipeline were less precise and reliable than the final shipping system. As we designed our user interface and dance choreography, we uncovered gestures and poses that were difficult for the early tracking to reliably detect. It was impossible for us to predict exactly how robust tracking would be by launch, and there was some anxiety as to whether we would need to simplify or remove moves and gestures that were undetectable.

Rather than putting development off while the tracking improved, we accepted the situation and tried, whenever possible, to handle noisy skeletal data and cases of skeleton “crumpling” gracefully. We also began investing in sophisticated in-game detection technology that could better handle the possibility of imperfect tracking. The payoff was a more consistently playable and fun game throughout development. Even as the Kinect skeletal tracking was refined to its more robust shipping state, we realized that this wasn’t wasted work. Every Kinect game needs to handle cases where skeletal data isn’t reliable (e.g. if the player drifts out of the sensor’s field of view). Dealing with the design ramifications of noisy and unreliable data early on helped us better understand the capabilities of the technology and mitigate risk.
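One common way to handle noisy or momentarily unreliable joint data gracefully is to smooth trusted samples and hold the last trusted value when tracking drops out. The Python sketch below shows that general idea only. It is not the detection technology described above, and the per-joint `confidence` value, the `JointFilter` class, and its default thresholds are assumptions made for illustration rather than part of any Kinect API.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


class JointFilter:
    """Smooths one joint and ignores samples flagged as unreliable (hypothetical helper)."""

    def __init__(self, alpha: float = 0.5, min_confidence: float = 0.5) -> None:
        self.alpha = alpha                    # weight given to the newest sample
        self.min_confidence = min_confidence  # samples below this are discarded
        self.value: Optional[Vec3] = None     # last trusted, smoothed position

    def update(self, position: Vec3, confidence: float) -> Optional[Vec3]:
        # Unreliable sample: keep reporting the last trusted position.
        if confidence < self.min_confidence:
            return self.value
        if self.value is None:
            # First trusted sample: nothing to blend with yet.
            self.value = position
        else:
            # Exponential smoothing between the new sample and the previous value.
            a = self.alpha
            x, y, z = (a * p + (1.0 - a) * v
                       for p, v in zip(position, self.value))
            self.value = (x, y, z)
        return self.value


# Usage: the third sample is a low-confidence outlier and is ignored.
hand = JointFilter(alpha=0.5)
hand.update((0.0, 0.0, 0.0), confidence=1.0)
hand.update((2.0, 2.0, 2.0), confidence=1.0)
held = hand.update((100.0, 100.0, 100.0), confidence=0.1)
```

The trade-off is latency: a lower `alpha` suppresses more jitter but makes the reported position lag further behind the player, which matters in a rhythm game.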

Early on, Microsoft provided us with detailed resource costs for using Kinect, including the CPU, GPU, and memory costs for skeletal tracking and other features of the platform. These costs are non-trivial, but because we had plenty of advance warning, we were able to plan and budget accordingly. The Kinect platform team also provided great resources for tackling some of the technical challenges unique to Kinect, such as smoothing algorithms, image processing, and latency reduction.

Creating new IP isn’t easy, especially when your studio is well known for one type of genre-defining game. We knew that the Dance Central team would need the drive to do something completely new while also quickly reaching consensus on feature scope. We looked back to the work our studio had done developing the original Guitar Hero as a reference point. At that time, Harmonix was a much smaller developer. We had to be prudent about our ambitions while remaining flexible and innovative. Our imperative for GH was first and foremost to completely nail the core fun experience. Naturally, it felt like Dance Central should share that approach.

We made the decision to keep the team small, composed of key members who had proven themselves on other Harmonix titles over the years. This was no easy task, given that we were simultaneously developing Rock Band 3! This core Dance Central group was able to break into agile sub-teams that rapidly iterated on gameplay prototypes. There was a conscious effort to focus the design on strengthening the core dance experience rather than adding breadth and complexity. Adding a character creator, in-depth single player campaign, or other ancillary feature would have detracted from achieving our core goals. Instead, we spent most of our time perfecting the dance gameplay—learning how to best handle difficulty, building a teaching mode, and making sure our flashcard and spotlight HUD were conveying the right information at the right time, all in the service of keeping the dancing as fun and entertaining as possible. Our strike teams consisted of a designer, coder, artists, sound designer, QA tester, and a producer, each empowered to scope, design, and prototype the main gameplay modes.

As noted earlier, we also made sure the team had a genuine interest in dancing and dance culture. That dedication shows in the final product. Having a common background, knowledge of club music, and passion for dancing meant we were able to approach all design choices knowing that they were grounded in the world of dance. By “speaking the same language,” we moved rapidly through iteration, since we didn’t suffer from off-the-mark decisions. We felt validated in our approach when we showed the game to professional dancers who were impressed with the authenticity of both the dancing and approach to teaching.

The combination of a small, powerful team and a core feature set unhindered by feature bloat enabled us to execute a sophisticated game based on Microsoft’s brand-new motion tracking tech in just 12 months.

Our move detection system relies on Kinect’s skeletal tracking to score hundreds of unique dance moves across our 32 songs. Delivering immediate and accurate feedback to the player about how well they are dancing is at the heart of our game. As a result, we knew that the quality of our detection would make or break the experience. We also knew it would be one of our biggest technical challenges.

Eager to start, we began prototyping our move detection with modest expectations in terms of how well it would work. It didn’t take us long to develop reasonably good detection. While our prototype wasn’t at shipping quality, it worked well enough to facilitate gameplay and choreography testing while our engineering team continued to research better methods.

Unfortunately, getting from reasonably good to shipping-quality move detection proved much harder than our initial efforts led us to believe. Our two subsequent approaches demonstrated incremental improvements over our early prototype, but were ultimately deemed unacceptable and scrapped. We had learned a lot from our efforts, but we were running out of time. With only a few months left, we settled on what we believed was a solid technical solution.

To prove that this system was capable, we focused on authoring and tuning detection for one example song with the goal of getting it all the way to shipping-quality. The positive outcome was that the detection worked well and we finally had a system we were confident would do our dance moves justice. That said, we had an experience that remained unpolished until the final throes of development. This made it more challenging to evaluate our overall progress and led to other production woes as we closed in on GM.

After we got detection we were happy with for one of our songs, we began the process of applying that method to the entire title. It was immediately clear that we had greatly underestimated how much time it would take to author, tune, and validate this new detection for each song. With 31 more songs to go and rapidly approaching deadlines, this miscalculation created an arduous three-week “detection crunch” at the end of the project. This “detection crunch” bled over into a time when we were expecting to be focused on bug fixing. It was an immense challenge for the design team to revisit all the material in the game and to painstakingly prepare every routine for review and testing while we integrated other last minute additions like voice-over.

Furthermore, as each song was tuned, our QA team had to learn, master, and continually repeat demanding expert-level choreography so they could confirm the detection was working as intended. Dancing for hours a day for several weeks, our testers became physically and mentally exhausted. In order to acquire all the necessary test data, we enlisted dozens of additional staff from Harmonix to learn and perform routines. As our deadline loomed, it took a Herculean effort from our design, QA, and playtesting teams, with an assist from other studio volunteers over the final stretch, to make it to shipping quality detection for all 32 of our routines.

Dance Central’s choreography covers a substantial range of difficulty, from simple two-steps to challenging top rock moves. We knew that having a broad range of choreography was necessary for the game to appeal to both novices and experts, but as the project unfolded we were unsure about the qualities that define the difficulty of a given move. We knew that delivering an accessible experience on easy would be vital to the title’s broad appeal, but throughout development, we tried and failed to establish an appropriate “low bar” numerous times.

Our first attempt began with our choreographers developing a few complex routines and presenting them to the design team. The design team, a group with a range of dance skills, tried out each move and discussed which were easy, medium, and hard. Using those ratings, we derived easy and medium combinations and videotaped the choreographers performing them. We presented these videos to various playtesters and had each try to dance along, rating the difficulty of the moves and the routines. Unfortunately, our playtesters weren’t good judges of their own skill level or performance. Once the songs were integrated into the game and players were scored, we found playtesters struggling with moves they had previously rated as easy. This problem was compounded by the fact that we had already motion-captured the routines and couldn’t reshoot, given the release schedule. We were stuck with some very challenging hard routines.

We tried again, this time encouraging our choreographers to come up with a few very simple routines. This time, our easy and medium levels turned out much easier. Some of our more talented playtesters were able to pick up hard levels without much effort. We thought we had reached an acceptable easy, but then tried presenting these levels to some key high-level staff who struggled, unable to perform the majority of the moves. With important members of the Harmonix brain trust unable to comment on the mechanics of the game, we knew we had yet to find universally accessible choreography.

With a few months to go, we finally figured out how to use staff members with minimal dance skills to our advantage. We asked our choreographers to generate a number of very easy moves and set up a dance class to teach these moves to the self-described “bad dancers” at Harmonix. The choreographers went through each move, asking the novices to follow along as the designers watched and noted which moves they picked up quickly. Using this information, we crafted four new easy songs, which make up the first tier of Dance Central. Although we succeeded in making these first songs very approachable, the difficulty ramp across all songs is not as smooth as we would have liked.

For many years, the process of licensing and integrating songs into our music games has been a well-oiled machine. The turnaround time from the moment a song is licensed to when it’s playable and bug-free in Rock Band is relatively quick and well-understood internally. For Dance Central, we initially thought we knew which production practices would work and which new processes would be needed. We quickly found out how easy it was to derail an unproven process and how complicated and interdependent all the steps of bringing a song to completion were.

Unlike Rock Band’s process of licensing, stem prep, authoring, and testing, Dance Central’s process became licensing, choreographing and vetting, song editing, difficulty creation, mocap shooting and cleanup, animation integration, clip authoring, filter tuning, testing, and a handful of other steps along the way. Our two-to-three-week process became a two-to-three-month process. This new process was much more fragile than we initially thought. Early missteps, however small, would ripple through the months-long pipeline. A single delay in song licensing or a mocap dancer being out sick for a day could end up putting an on-disc song at risk of being cut. With this high-wire act performed by a small team with finite deadlines, we had an extreme production balancing act on our hands. Our producers became very adept at shifting schedules to keep things on track.

It was also easy to underestimate the physical demands of making a dance game. Both our choreographers and our QA testers were constantly being pushed to their physical limits in order to get their jobs done and stay on schedule. This physical burden on the testing and development teams was something we had never considered when developing previous games.

Nearly all of Harmonix’s games over the past decade have placed a heavy focus on creating a deep connection between the player and the music. Elements that distract from this have always been given less attention; we’ve devoted little focus to developing complex narratives or fleshing out character backgrounds. Only one of our games, antiGrav, featured characters that spoke, and while Guitar Hero 1 and 2 had selectable prefab characters, they never spoke, allowing the game to hint at a narrative context rather than inhabit a developed story. The Rock Band series has a very loose “rise to fame” narrative backbone focused on player-created band members, who start as unknowns and finish as superstars. However, all of this is presented in a lightweight fashion: characters don’t speak and are essentially blank slates onto which players can project their own personality.

For Dance Central, the decision was made early on to create a world inhabited by an ensemble cast of unique characters. As with our previous rock-focused games, we would keep things simple; any narrative would be loosely implied and supported by a lightweight text-based player ranking system. Our characters would have unique personalities, but these would be communicated through their dance styles, body language, and fashion sense. They would not speak.

Quite late in development it became clear that the decision to mute our characters had been the wrong one. We reached a point where the animated mocap sequences that bookend each song were fleshed out, and featured our characters doing crazy dance moves and strutting their stuff. The moves looked killer! However, with no voice, our characters were coming across as lifeless puppets. So we made the decision to add character VO to the game. We felt that this would bring our characters to life in ways that the visual cues and animation had not. In doing this, we underestimated both the risks and amount of work involved, in large part due to our inexperience in this area of development.

We kickstarted the process of giving each of our characters a voice and immediately encountered a slew of execution hindrances further compounded by our tight deadlines. Our staff writer was able to flesh out characters and turn around quality dialog quickly, but was not afforded the luxury of time to iterate. We located a company to secure and record VO talent, but due to time constraints our choice of available actors was limited and our opportunity for pickup recordings and line tweaks was almost non-existent. We had to roll new code in to support lip synch animation, and as a result our already strapped animation department now had more work thrown onto the pile. Then there was localization to deal with... the list of complications went on and on. The mad scramble that ensued to resolve these issues and meet our already aggressive deadline added stress and distractions to an already taxed team.

In the end, some of the characters came out fun and relatable and others missed the mark completely. We’ve learned that creating fully-fledged characters is not an undertaking that should be taken lightly or added late to the process; the risks in doing so cannot be underestimated.

It wasn’t until Dance Central’s reveal at E3 that we truly understood how our decisions would pay off. It was an amazing moment to see people engage with our game for the first time, cast off their inhibitions and realize that anyone could step up and shake it. We always knew that this title would push the Kinect technology to its limits and we’re incredibly proud to have made a game that is getting people up off their couches, moving around and feeling good. In true Harmonix style, we took on risky work in an unfamiliar space with a short amount of time, and delivered a game that fits perfectly within the Harmonix universe.

Lastly, a sincere and heartfelt thank you to Microsoft and the teams there that worked closely with us, all of whom stepped up and delivered resources and advice throughout development that kept us on track and on schedule. Beyond that, we thank them for creating Kinect itself, through which we’re bringing new and authentic experiences to gamers and non-gamers, dancers and non-dancers!

When developing a Kinect title, you also need to create a way to navigate menus using gestural input. Microsoft hadn’t yet released any UI libraries or guidelines, so despite the fact that none of our team members had any expertise in this field, we set out to design a solution from scratch.

Our approach to working in this unfamiliar and evolving field was to iterate quickly through rapid prototyping. This allowed us to fail quickly, identify what didn’t work, and eventually whittle down to what did. We formed a team to focus on the UI that met twice a week. The principal workers in this team were a programmer and a UI artist; the remainder of this team included the project director, producer, lead programmer, lead designer, and other senior members of the project. Generally these meetings had about 8 attendees total.

Here’s how we structured the team meetings: We’d begin by taking a look at the latest prototype, noting its deficiencies and limitations, and brainstorming ways to improve it. If someone had an idea, they would have to sell it to the rest of the team. Eventually, we’d reach a consensus and leave with a list of action items. Those action items alone determined the subsequent work for the programmer and UI artist. With this method we were able to allow a large group of individuals to have creative input while removing dependencies or changes in direction for the principal workers in between meetings.

As we ran through many prototypes, we learned some important lessons about full body gestural input. One major hurdle that separated this type of input from, say, a touchscreen, was the lack of an obvious way to signal engagement/disengagement. With an iPhone, if you want to press a button, flick between pages, or scroll a list, you can just touch your finger to the screen. When navigating a UI with Kinect, it’s essentially like your finger is always on the screen. Kinect doesn’t detect whether your hands are open or closed, so we couldn’t determine engagement that way. We tried using the player’s hand position in the depth axis and arm extension to determine engagement, and while it seemed like it would be an intuitive way to interact with this sort of UI, in practice it was unsatisfying and strange.

We decided to ditch using the depth axis, electing to use only the hand’s horizontal and vertical position to navigate. We prototyped a UI using this concept: a vertical list of buttons where a specific button was highlighted based on your hand height, and that highlighted button could be slid across the screen in order to select it. After the prototype concept was initially dismissed because it didn’t feel right, our UI artist had an idea for a treatment and created a video in Maya to mock up how he envisioned us using this navigation paradigm. He altered the look and animation of these buttons, while still retaining the same underlying user interaction. This added aesthetic polish elucidated the concept to the team, and the shipping version of our UI matches that mockup video very closely.
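The navigation idea described above (hand height picks the highlighted button, a sideways slide selects it) can be sketched in a few lines of Python. This is an illustrative reconstruction, not the shipping implementation: the `SwipeMenu` class, the normalized 0-to-1 hand coordinates, the leftward slide direction, and the `select_distance` threshold are all assumptions.

```python
from typing import List, Optional


class SwipeMenu:
    """Vertical button list driven by hand height and a horizontal slide (hypothetical)."""

    def __init__(self, labels: List[str], select_distance: float = 0.3) -> None:
        self.labels = labels
        self.select_distance = select_distance    # slide needed to select, in screen widths
        self.highlighted: Optional[int] = None    # index of the highlighted button
        self.anchor_x: Optional[float] = None     # hand x when the highlight last changed

    def update(self, hand_x: float, hand_y: float) -> Optional[str]:
        """Feed one hand sample (x, y in 0..1, y = 0 at the top); return a label on selection."""
        # Map hand height to a button index, clamping to the list bounds.
        count = len(self.labels)
        index = min(int(max(hand_y, 0.0) * count), count - 1)
        if index != self.highlighted or self.anchor_x is None:
            # Highlight moved: restart the slide measurement from the current hand x.
            self.highlighted = index
            self.anchor_x = hand_x
            return None
        # Assumption: selecting means sliding the hand left far enough from the anchor.
        if self.anchor_x - hand_x >= self.select_distance:
            self.anchor_x = hand_x  # re-anchor so one slide selects only once
            return self.labels[index]
        return None


# Usage: hover over the middle button, then slide left to select it.
menu = SwipeMenu(["Play", "Options", "Quit"])
menu.update(0.80, 0.5)           # highlights "Options"
menu.update(0.60, 0.5)           # slide not yet far enough
choice = menu.update(0.45, 0.5)  # slide passes the threshold
```

Because selection depends only on horizontal and vertical hand position, no depth-based "press" is required, which sidesteps the engagement problem described earlier in this section.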
