Provides background information and many practical tips on C# memory management in Unity. Part 2 introduces tools and techniques for avoiding memory leaks; part 3 discusses object pooling.

[Note: This post presupposes 'intermediate' knowledge of C# and Unity.]

I'm going to start this post with a confession. Although raised as a C/C++ developer, for a long time I was a secret fan of Microsoft's C# language and the .NET framework. About three years ago, when I decided to leave the Wild West of C/C++-based graphics libraries and enter the civilized world of modern game engines, Unity stood out with one feature that made it an obvious choice for me. Unity didn't require you to 'script' in one language (like Lua or UnrealScript) and 'program' in another. Instead, it had deep support for Mono, which meant all your programming could be done in any of the .NET languages. Oh joy! I finally had a legitimate reason to kiss C++ goodbye and have all my problems solved by automatic memory management. This feature had been built deeply into the C# language and was an integral part of its philosophy. No more memory leaks, no more thinking about memory management! My life would become so much easier.

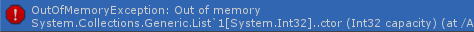

Don't show this to your players.

If you have even the most casual acquaintance with Unity and/or game programming, you know how wrong I was. I learned the hard way that in game development, you cannot rely on automatic memory management. If your game or middleware is sufficiently complex and resource-demanding, developing with C# in Unity is therefore a bit like walking a few steps backwards in the direction of C++. Every new Unity developer quickly learns that memory management is a problematic issue that cannot simply be entrusted to the Common Language Runtime (CLR). The Unity forums and many Unity-related blogs contain several collections of tips and best practices concerning memory. Unfortunately, not all of these are based on solid facts, and to the best of my knowledge, none of them are comprehensive. Furthermore, C# experts on sites such as Stack Overflow often seem to have little patience for the quirky, non-standard problems faced by Unity developers. For these reasons, in this and the following two blog posts, I try to give an overview and hopefully some in-depth knowledge on Unity-specific memory management issues in C#.

This first post discusses the fundamentals of memory management in the garbage-collected world of .NET and Mono. I also discuss some common sources of memory leaks.

The second looks at tools for discovering memory leaks. The Unity Profiler is a formidable tool for this purpose, but it's also expensive. I therefore discuss .NET disassemblers and the Common Intermediate Language (CIL) to show you how you can discover memory leaks with free tools only.

The third post discusses C# object pooling. Again, the focus is on the specific needs that arise in Unity/C# development.

I'm sure I've overlooked some important topics - mention them in the comments and I may write them up in a post-script.

Most modern operating systems divide dynamic memory into stack and heap (1, 2), and many CPU architectures (including the one in your PC/Mac and your smartphone/tablet) support this division in their instruction sets. C# supports it by distinguishing value types (simple built-in types as well as user-defined types that are declared as enum or struct) and reference types (classes, interfaces and delegates). Local variables of value types are allocated on the stack, instances of reference types on the heap. The stack has a fixed size which is set at the start of a new thread. It's usually small - for instance, .NET threads on Windows default to a stack size of 1 MB. This memory is used to load the thread's main function and local variables, and subsequently load and unload functions (with their local variables) that are called from the main one. Some of it may be mapped to the CPU's cache to speed things up. As long as the call depth isn't excessively high or your local variables huge, you don't have to fear a stack overflow. And you see that this usage of the stack aligns nicely with the concept of structured programming.
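The practical difference between the two kinds of types shows up as soon as you assign one variable to another. Here is a minimal sketch, assuming two hypothetical types PointStruct and PointClass that exist only for this illustration:

```csharp
using System;

// Hypothetical types for illustration only.
public struct PointStruct { public int X; }   // value type
public class PointClass { public int X; }     // reference type

public static class ValueVsReferenceDemo
{
    // Returns true if assigning a struct copies it while assigning a class shares it.
    public static bool CopySemanticsDiffer()
    {
        PointStruct s1 = new PointStruct();
        s1.X = 1;
        PointStruct s2 = s1;   // copies the whole value
        s2.X = 2;              // s1.X is still 1

        PointClass c1 = new PointClass();   // the object lives on the heap
        c1.X = 1;
        PointClass c2 = c1;    // copies only the reference
        c2.X = 2;              // c1.X is now 2 as well

        return s1.X == 1 && c1.X == 2;
    }
}
```

Only the PointClass instance is an object the garbage collector has to track; the two struct locals disappear with the method's stack frame.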

If objects are too big to fit on the stack, or if they have to outlive the function they were created in, the heap comes into play. The heap is 'everything else' - a section of memory that can (usually) grow on request to the OS, and over which the program rules as it wishes. But while the stack is almost trivial to manage (just use a pointer to remember where the free section begins), the heap fragments as soon as the order in which you allocate objects diverges from the order in which you deallocate them. Think of the heap as a Swiss cheese where you have to remember all the holes! Not fun at all. Enter automatic memory management. The task of automated allocation - mainly, keeping track of all the holes in the cheese for you - is an easy one, and supported by virtually all modern programming languages. Much harder is the task of automatic deallocation, especially deciding when an object is ready for deallocation, so that you don't have to.

This latter task is called garbage collection (GC). Instead of you telling your runtime environment when an object's memory can be freed, the runtime keeps track of all the references to an object and is thereby able to determine - at certain intervals - when an object cannot possibly be reached anymore from your code. Such an object can then be destroyed and its memory gets freed. GC is still actively researched by academics, which explains why the GC architecture has changed and improved significantly in the .NET framework since version 1.0. However, Unity doesn't use .NET but its open-source cousin, Mono, which continues to lag behind its commercial counterpart. Furthermore, Unity doesn't default to the latest version (2.11 / 3.0) of Mono, but instead uses version 2.6 (to be precise, 2.6.5 on my Windows install of Unity 4.2.2 [EDIT: the same is true for Unity 4.3]). If you are unsure about how to verify this yourself, I will discuss it in the next blog post.

One of the major revisions introduced into Mono after version 2.6 concerned GC. New versions use generational GC, whereas 2.6 still uses the less sophisticated Boehm garbage collector. Modern generational GC performs so well that it can even be used (within limits) for real-time applications such as games. Boehm-style GC, on the other hand, works by doing an exhaustive search for garbage on the heap at relatively 'rare' intervals (i.e., usually much less frequently than once-per-frame in a game). It therefore has an overwhelming tendency to create drops in frame-rate at certain intervals, thereby annoying your players. The Unity docs recommend that you call System.GC.Collect() whenever your game enters a phase where frames-per-second matter less (e.g., loading a new level or displaying a menu). However, for many types of games such opportunities occur too rarely, which means that the GC might kick in before you want it to. If this is the case, your only option is to bite the bullet and manage memory yourself. And that's what the remainder of this post and the following two posts are about!
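Triggering a collection at a quiet moment could look roughly like the sketch below. System.GC.Collect() and System.GC.CollectionCount() are real .NET calls; the class GcAtQuietMoments and its method CollectNow() are hypothetical, and where you call it from (a level-loaded handler, a menu screen) is up to your game:

```csharp
using System;

public static class GcAtQuietMoments
{
    // Hypothetical helper: call it when a hitch will not be noticed,
    // e.g. right after a level has finished loading or when a menu opens.
    // Returns how many collections ran during the call.
    public static int CollectNow()
    {
        int before = GC.CollectionCount(0);
        GC.Collect();   // requests an immediate collection of all generations
        return GC.CollectionCount(0) - before;
    }
}
```

The return value is only there so you can log or verify that a collection really took place.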

Let's be clear about what it means to 'manage memory yourself' in the Unity / .NET universe. Your power to influence how memory is allocated is (fortunately) very limited. You get to choose whether your custom data structures are class (always allocated on the heap) or struct (allocated on the stack unless they are contained within a class), and that's it. If you want more magical powers, you must use C#'s unsafe keyword. But unsafe code is just unverifiable code, meaning that it won't run in the Unity Web Player and probably some other target platforms. For this and other reasons, don't use unsafe. Because of the above-mentioned limits of the stack, and because C# arrays are just syntactic sugar for System.Array (which is a class), you cannot and should not avoid automatic heap allocation. What you should avoid are unnecessary heap allocations, and we'll get to that in the next (and last) section of this post.

Your powers are equally limited when it comes to deallocation. Actually, the only process that can deallocate heap objects is the GC, and its workings are shielded from you. What you can influence is when the last reference to any of your objects on the heap goes out of scope, because the GC cannot touch them before that. This limited power turns out to have huge practical relevance, because periodic garbage collection (which you cannot suppress) tends to be very fast when there is nothing to deallocate. This fact underlies the various approaches to object pooling that I discuss in the third post.

A common suggestion which I've come across many times in the Unity forums and elsewhere is to avoid foreach loops and use for or while loops instead. The reasoning behind this seems sound at first sight. Foreach is really just syntactic sugar, because the compiler will preprocess code such as this:

foreach (SomeType s in someList) s.DoSomething();

...into something like the following:

    using (SomeType.Enumerator enumerator = this.someList.GetEnumerator())
    {
        while (enumerator.MoveNext())
        {
            SomeType s = (SomeType)enumerator.Current;
            s.DoSomething();
        }
    }

In other words, each use of foreach creates an enumerator object - an instance of the System.Collections.IEnumerator interface - behind the scenes. But does it create this object on the stack or on the heap? That turns out to be an excellent question, because both are actually possible! Most importantly, almost all of the collection types in the System.Collections.Generic namespace (List<T>, Dictionary<K, V>, LinkedList<T>, etc.) are smart enough to return a struct from their implementation of GetEnumerator(). This includes the version of the collections that ships with Mono 2.6.5 (as used by Unity).
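If you want to check a particular collection type, reflection can tell you whether its public GetEnumerator() returns a struct or a class. This is a minimal sketch; the class EnumeratorKind and its method are hypothetical names, while Type.GetMethod() and Type.IsValueType are standard reflection members:

```csharp
using System;
using System.Reflection;

public static class EnumeratorKind
{
    // True if the public, parameterless GetEnumerator() of the given
    // collection type returns a value type (i.e. a struct enumerator).
    public static bool ReturnsStructEnumerator(Type collectionType)
    {
        MethodInfo method = collectionType.GetMethod("GetEnumerator", Type.EmptyTypes);
        return method != null && method.ReturnType.IsValueType;
    }
}
```

For example, ReturnsStructEnumerator(typeof(List<int>)) reports a struct, whereas the non-generic System.Collections.ArrayList returns the IEnumerator interface and therefore a heap object.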

[EDIT] Matthew Hanlon pointed my attention to an unfortunate (yet also very interesting) discrepancy between Microsoft's current C# compiler and the older Mono/C# compiler that Unity uses 'under the hood' to compile your scripts on-the-fly. You probably know that you can use Microsoft Visual Studio to develop and even compile Unity/Mono compatible code. You just drop the respective assembly into the 'Assets' folder. All code is then executed in a Unity/Mono runtime environment. However, results can still differ depending on who compiled the code! foreach loops are just such a case, as I've only now figured out. While both compilers recognize whether a collection's GetEnumerator() returns a struct or a class, the Mono/C# compiler has a bug which 'boxes' (see below, on boxing) a struct-enumerator to create a reference type.

So should you avoid foreach loops?

Don't use them in C# code that you allow Unity to compile for you.

Do use them to iterate over the standard generic collections (List<T> etc.) in C# code that you compile yourself with a recent compiler. Visual Studio as well as the free .NET Framework SDK are fine, and I assume (but haven't verified) that the one that comes with the latest versions of Mono and MonoDevelop is fine as well.

What about foreach-loops to iterate over other kinds of collections when you use an external compiler? Unfortunately, there is no general answer. Use the techniques discussed in the second blog post to find out for yourself which collections are safe for foreach. [/EDIT]
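For a List<T>, the foreach loop shown earlier can be replaced by an indexed loop, which needs no enumerator at all and therefore behaves the same regardless of the compiler. A minimal sketch (IndexedLoop and Sum are hypothetical names):

```csharp
using System.Collections.Generic;

public static class IndexedLoop
{
    // Sums a list with an indexed for loop; no enumerator object is involved.
    public static int Sum(List<int> values)
    {
        int total = 0;
        for (int i = 0; i < values.Count; i++)
        {
            total += values[i];
        }
        return total;
    }
}
```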

You probably know that C# offers anonymous methods and lambda expressions (which are almost but not quite identical to each other). You can create them with the delegate keyword and the => operator, respectively. They are often a handy tool, and they are hard to avoid if you want to use certain library functions (such as List<T>.Sort()) or LINQ.

Do anonymous methods and lambdas cause memory leaks? The answer is: it depends. The C# compiler actually has two very different ways of handling them. To understand the difference, consider the following small chunk of code:

    int result = 0;

    void Update()
    {
        for (int i = 0; i < 100; i++)
        {
            System.Func<int, int> myFunc = (p) => p * p;
            result += myFunc(i);
        }
    }

As you can see, the snippet seems to create a delegate myFunc 100 times each frame, using it each time to perform a calculation. But Mono only allocates heap memory the first time the Update() method is called (52 Bytes on my system), and doesn't do any further heap allocations in subsequent frames. What's going on? Using a code reflector (as I'll explain in the next blog post), one can see that the C# compiler simply replaces myFunc by a static field of type System.Func<int, int> in the class that contains Update(). This field gets a name that is weird but also revealing: f__am$cache1 (it may differ somewhat on your system). In other words, the delegate is allocated only once and then cached.

Now let's make a minor change to the definition of the delegate:

System.Func<int, int> myFunc = (p) => p * i++;

By substituting 'i++' for 'p', we've turned something that could be called a 'locally defined function' into a true closure. Closures are a pillar of functional programming. They tie functions to data - more precisely, to non-local variables that were defined outside of the function. In the case of myFunc, 'p' is a local variable but 'i' is non-local, as it belongs to the scope of the Update() method. The C# compiler now has to convert myFunc into something that can access, and even modify, non-local variables. It achieves this by declaring (behind the scenes) an entirely new class that represents the reference environment in which myFunc was created. An object of this class is allocated each time we pass through the for-loop, and we suddenly have a huge memory leak (2.6 KB per frame on my computer).
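One way to keep the delegate without the per-iteration allocation is to stop capturing the outer variable and pass it in as an argument instead. The following is a minimal sketch under that assumption; ClosureFreeDelegate, Multiply and Run are hypothetical names, and the example deliberately drops the i++ side effect of the closure above, since modifying the loop variable is exactly what forces the capture:

```csharp
using System;

public static class ClosureFreeDelegate
{
    // This lambda captures nothing, so one delegate instance can be
    // created up front and reused for every call.
    private static readonly Func<int, int, int> Multiply = (p, factor) => p * factor;

    // Same loop shape as before, but 'i' is passed as an argument
    // rather than being captured from the enclosing scope.
    public static int Run()
    {
        int result = 0;
        for (int i = 0; i < 100; i++)
        {
            result += Multiply(i, i);
        }
        return result;
    }
}
```

Whether this really allocates nothing per call on your target is something to confirm with a profiler rather than take on faith.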

Of course, the chief reason why closures and other language features were introduced in C# 3.0 is LINQ. If closures can lead to memory leaks, is it safe to use LINQ in your game? I may be the wrong person to ask, as I have always avoided LINQ like the plague. Parts of LINQ apparently will not work on operating systems that don't support just-in-time compilation, such as iOS. But from a memory aspect, LINQ is bad news anyway. An incredibly basic expression like the following:

    int[] array = { 1, 2, 3, 6, 7, 8 };

    void Update()
    {
        IEnumerable<int> elements = from element in array
                                    orderby element descending
                                    where element > 2
                                    select element;
        ...
    }

... allocates 68 Bytes on my system in every frame (28 via Enumerable.OrderByDescending() and 40 via Enumerable.Where())! The culprit here isn't even closures but extension methods to IEnumerable: LINQ has to create intermediary arrays to arrive at the final result, and doesn't have a system in place for recycling them afterwards. That said, I am not an expert on LINQ and I do not know if there are components of it that can be used safely within a real-time environment.
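A hand-written replacement for the query above could filter into a list that the caller keeps around and then sort it in place. This is a minimal sketch, not a measured result: FilterAndSortNoLinq and Fill are hypothetical names, and I have not verified how List<T>.Sort() behaves allocation-wise on Mono 2.6, so profile it before relying on it:

```csharp
using System;
using System.Collections.Generic;

public static class FilterAndSortNoLinq
{
    // Created once so that sorting does not need a new delegate per call.
    private static readonly Comparison<int> Descending = (a, b) => b.CompareTo(a);

    // Clears 'results' and refills it with the elements of 'source' that are
    // greater than 'threshold', sorted in descending order.
    public static void Fill(int[] source, int threshold, List<int> results)
    {
        results.Clear();   // keeps the list's capacity for reuse
        for (int i = 0; i < source.Length; i++)
        {
            if (source[i] > threshold) results.Add(source[i]);
        }
        results.Sort(Descending);
    }
}
```

The idea is to create the results list once (for instance as a field) and pass the same instance to Fill() in every frame.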

If you launch a coroutine via StartCoroutine(), you implicitly allocate both an instance of Unity's Coroutine class (21 Bytes on my system) and an Enumerator (16 Bytes). Importantly, no allocation occurs when the coroutine yields or resumes, so all you have to do to avoid a memory leak is to limit calls to StartCoroutine() while the game is running.

No overview of memory issues in C# and Unity would be complete without mentioning strings. From a memory standpoint, strings are strange because they are both heap-allocated and immutable. When you concatenate two strings (be they variables or string-constants) as in:

    void Update()
    {
        string string1 = "Two";
        string string2 = "One" + string1 + "Three";
    }

... the runtime has to allocate at least one new string object that contains the result. In String.Concat() this is done efficiently via an external method called FastAllocateString(), but there is no way of getting around the heap allocation (40 Bytes on my system in the example above). If you need to modify or concatenate strings at runtime, use System.Text.StringBuilder.
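As a rough illustration of the StringBuilder route, the sketch below keeps a single builder alive and resets it before each use. ReusableStringBuilder and Join are hypothetical names; StringBuilder.Length, Append() and ToString() are the standard members:

```csharp
using System.Text;

public static class ReusableStringBuilder
{
    // One builder, allocated once and reused for every call.
    private static readonly StringBuilder Builder = new StringBuilder(64);

    public static string Join(string a, string b, string c)
    {
        Builder.Length = 0;   // reset the contents without creating a new builder
        Builder.Append(a).Append(b).Append(c);
        return Builder.ToString();   // the resulting string is still a new heap object
    }
}
```

Note that this saves the intermediate strings and the builder itself, not the final string: ToString() has to allocate the result.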

Sometimes, data have to be moved between the stack and the heap. For example, when you format a string as in:

string result = string.Format("{0} = {1}", 5, 5.0f);

... you are calling a method with the following signature:

public static string Format( string format, params Object[] args )

In other words, the integer "5" and the floating-point number "5.0f" have to be cast to System.Object when Format() is called. But Object is a reference type whereas the other two are value types. C# therefore has to allocate memory on the heap, copy the values to the heap, and hand Format() a reference to the newly created int and float objects. This process is called boxing, and its counterpart unboxing.
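Boxing can be made visible with a plain assignment to object. A minimal sketch (BoxingDemo is a hypothetical name):

```csharp
using System;

public static class BoxingDemo
{
    // Shows that a boxed value is an independent copy living in its own object.
    public static bool BoxIsACopy()
    {
        int number = 5;
        object boxed = number;     // boxing: a heap object is allocated and 5 is copied into it
        number = 6;                // does not affect the boxed copy
        int unboxed = (int)boxed;  // unboxing: the value is copied back out
        return unboxed == 5 && boxed is int;
    }
}
```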

This behavior may not be a problem with String.Format() because you expect it to allocate heap memory anyway (for the new string). But boxing can also show up at less expected locations. A notorious example occurs when you want to implement the equality operator "==" for your home-made value types (for example, a struct that represents a complex number). Read all about how to avoid hidden boxing in such cases here.
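The usual remedy is to give the struct a strongly typed Equals() by implementing IEquatable<T> and to let the operators call that overload. Here is a minimal sketch, assuming a hypothetical ComplexNumber struct invented for this illustration:

```csharp
using System;

// Hypothetical complex-number struct, for illustration only.
public struct ComplexNumber : IEquatable<ComplexNumber>
{
    public readonly float Re;
    public readonly float Im;

    public ComplexNumber(float re, float im) { Re = re; Im = im; }

    // Strongly typed comparison: the argument is passed by value, nothing is boxed.
    public bool Equals(ComplexNumber other) { return Re == other.Re && Im == other.Im; }

    // Kept for callers that only have an object; here the argument arrives boxed.
    public override bool Equals(object obj) { return obj is ComplexNumber && Equals((ComplexNumber)obj); }

    public override int GetHashCode() { return Re.GetHashCode() ^ (Im.GetHashCode() * 397); }

    // The operators forward to the typed Equals() rather than to Object.Equals().
    public static bool operator ==(ComplexNumber a, ComplexNumber b) { return a.Equals(b); }
    public static bool operator !=(ComplexNumber a, ComplexNumber b) { return !a.Equals(b); }
}
```

With this in place, a == b resolves to Equals(ComplexNumber) at compile time, so neither operand needs to be converted to System.Object.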

To wind up this post, I want to mention that various library methods also conceal implicit memory allocations. The best way to catch them is through profiling. Two interesting cases which I've recently come across are these:

I wrote earlier that a foreach-loop over most of the standard generic collections does not result in heap allocations. This holds true for Dictionary<K, V> as well. However, somewhat mysteriously, Dictionary<K, V>.KeyCollection and Dictionary<K, V>.ValueCollection are classes, not structs, which means that "foreach (K key in myDict.Keys)..." allocates 16 Bytes. Nasty!

List<T>.Reverse() uses a standard in-place array reversal algorithm. If you are like me, you'd think that this means that it doesn't allocate heap memory. Wrong again, at least with respect to the version that comes with Mono 2.6. Here's an extension method you can use instead which may not be as optimized as the .NET/Mono version, but at least manages to avoid heap allocation. Use it in the same way you would use List<T>.Reverse():

    public static class ListExtensions
    {
        public static void Reverse_NoHeapAlloc<T>(this List<T> list)
        {
            int count = list.Count;
            for (int i = 0; i < count / 2; i++)
            {
                T tmp = list[i];
                list[i] = list[count - i - 1];
                list[count - i - 1] = tmp;
            }
        }
    }
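For the Dictionary<K, V> case mentioned above, one possible workaround is to skip the Keys collection entirely and walk the dictionary's own struct enumerator by hand. This is a minimal sketch with hypothetical names (DictionaryKeys, SumKeys); it should also sidestep the foreach issue in Unity's compiler because no foreach is involved, but verify that with a profiler on your setup:

```csharp
using System.Collections.Generic;

public static class DictionaryKeys
{
    // Sums all keys without touching dict.Keys.
    public static int SumKeys(Dictionary<int, string> dict)
    {
        int total = 0;
        // Dictionary<K, V>.Enumerator is a struct; a typed local keeps it unboxed.
        Dictionary<int, string>.Enumerator e = dict.GetEnumerator();
        try
        {
            while (e.MoveNext())
            {
                total += e.Current.Key;
            }
        }
        finally
        {
            e.Dispose();   // mirrors what foreach would do
        }
        return total;
    }
}
```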

***

There are other memory traps which could be written about. However, I don't want to give you any more fish, but teach you how to catch your own fish. That's what the next post is about!

Part 2

Part 3
