Using value = lerp(value, target, speed) to smoothly animate a value is a common pattern. A trivial change can make it frame rate independent and easier to tune.

A lot of game developers will instantly recognize this line of code:

value = lerp(value, targetValue, 0.1)

It’s super useful, and can be used for all sorts of things. With a higher constant, it’s a good way to smooth out jittery input from a mouse or joystick. With a low constant, it’s a nice way to smoothly animate a progress bar or a camera following a player. It will never overshoot the target value even if it’s changing, and it changes the speed based on how far away it is so it will always quickly converge on the target. Pretty good for a one liner!

Unfortunately, it has a couple of problems that are often ignored:

The first is that it’s highly dependent on frame rate. The common advice is to use a fixed time step, but that's not great advice. If it's for player or camera animation, you will likely end up with visible jitters. If you change your fixed time step, you need to retune.

The second is that the lerp() constant that you need to use is relatively hard to tune. It’s not uncommon to start adding some extra 0's or 9's to the front of the lerp() constant to get the desired smoothing amount. Assuming 60 fps, if you want to move halfway towards an object in a second you need to use a lerp() constant of 0.0115. To move halfway in a minute, you need to use 0.000193. On the other end of the spectrum, if you use a lerp() constant of 0.9 you will run out of 32-bit floating point precision within 7 frames, and the value will be exactly at the target. That is insanely sensitive to hand tune with a UI slider, or even a hand typed value.
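To see where those tiny constants come from, here is a minimal sketch that solves (1 - coef)^frames = 0.5 for coef. The helper name coef_for_half_life is made up for this example, and the 60 fps default is just the assumption used above.

```ruby
# Per-frame lerp() constant that moves halfway to the target in `seconds`
# when running at a fixed frame rate. (Hypothetical helper for illustration.)
def coef_for_half_life(seconds, fps = 60.0)
  frames = seconds * fps
  # After `frames` steps the remaining distance is (1 - coef)**frames.
  # Setting that equal to 0.5 and solving for coef gives:
  1.0 - 0.5**(1.0 / frames)
end

puts coef_for_half_life(1.0)   # halfway in one second
puts coef_for_half_life(60.0)  # halfway in one minute
```

The results land very close to the 0.0115 and 0.000193 quoted above, which shows how little room there is between "a bit slow" and "barely moving".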

Fortunately, with a little math, both issues are easy to fix!

The key is to replace the simple lerp() constant with an exponential function that involves time. The choice of exp2() is arbitrary. Any base will work fine; you'll just end up with a different rate value.

value = lerp(target, value, exp2(-rate*deltaTime))

In this version, rate controls how quickly the value converges on the target. With a rate of 1.0, the value will move halfway to the target each second. If you double the rate, the value will converge twice as fast. If you halve the rate, it will converge half as fast. This is much more intuitive.

Even better, it’s frame rate independent. If you lerp() this way 60 times with a delta time of 1/60 s, it will be the same result as 30 times with 1/30 s, or once with 1 s. No fixed time step is needed, and you avoid the jittery movement it causes.
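Here is a small Ruby sketch of that claim. The lerp, smooth, and simulate helpers are names I made up for the example, and since Ruby's Math module has no exp2, it uses 2.0**x instead.

```ruby
# Standard linear interpolation from a to b.
def lerp(a, b, t)
  a + (b - a) * t
end

# One smoothing step. 2.0**x stands in for exp2(x).
def smooth(value, target, rate, delta_time)
  lerp(target, value, 2.0**(-rate * delta_time))
end

# Run `steps` smoothing steps of `delta_time` seconds each.
def simulate(steps, delta_time, rate: 1.0, value: 0.0, target: 10.0)
  steps.times { value = smooth(value, target, rate, delta_time) }
  value
end

puts simulate(60, 1.0 / 60)  # one second at 60 fps
puts simulate(30, 1.0 / 30)  # one second at 30 fps
puts simulate(1, 1.0)        # one second in a single step
```

With a rate of 1.0, all three runs should end up halfway between 0 and 10, give or take a few bits of floating point rounding.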

If you are worried about the performance, don't be. Even mobile CPUs have instructions to help calculate exponents and logarithms in a few CPU cycles. In 2018, you should be much more worried about cache misses than math lib costs, but that's a post for another day.

So this new version is frame rate independent, and easier to tune. How do you convert your old lerp() statements into the new ones without changing the smoothing coefficients that already work well at 60 fps? Math to the rescue again. The following formula will convert them: (Note: Make sure to change the log base if you didn't use exp2())

rate = -fps*log2(1 - coef)

Recalculate them and don't look back!
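As a quick sanity check, this sketch converts an old coefficient and confirms that one 1/60 s frame with the new rate behaves like the old constant. rate_from_coef is a hypothetical helper name, and the 60 fps default is the assumption from the text.

```ruby
# Convert an old per-frame lerp() constant into a rate for the exp2 version.
def rate_from_coef(coef, fps = 60.0)
  -fps * Math.log2(1.0 - coef)
end

rate = rate_from_coef(0.1)

# Fraction of the old value kept after one 1/60 s frame with the new formula.
kept = 2.0**(-rate / 60.0)

puts rate
puts 1.0 - kept  # should come out at about the original 0.1
```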

Adjusting the numbers by hand is much easier now, but it's still not slider friendly. Negative rates cause the math to blow up, and small rates are very sensitive to adjust. Just add more exponents!

rate = exp2(logRate)

value = lerp(target, value, exp2(-rate*deltaTime))

This will make sliders more intuitive. Moving the slider a certain amount will always change the rate by the same fraction, and you can precompute rate if you want.
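A tiny sketch of the slider mapping, assuming a slider that hands you a plain number in some range like -2 to 2. The name rate_from_slider is invented for the example.

```ruby
# Map a slider position (logRate) to a rate. The result is always positive,
# and each +1.0 on the slider doubles the rate.
def rate_from_slider(log_rate)
  2.0**log_rate
end

[-2.0, -1.0, 0.0, 1.0, 2.0].each do |slider|
  puts "slider #{slider}: rate #{rate_from_slider(slider)}"
end
```

Since the rate only changes when the slider does, you can compute it once and reuse it every frame.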

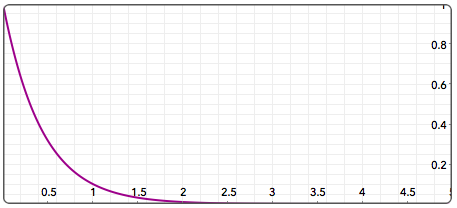

Say you are lerping by 0.9 each frame. That means you are leaving (1 - 0.9) = 0.1 = 10% of the remaining value. After 2 frames, there will be (1 - 0.9)*(1 - 0.9) = 0.01 = 1% of the remaining value. After 3, (1 - 0.9)*(1 - 0.9)*(1 - 0.9) = 0.001 = 0.1%. After n frames you’ll have (1 - 0.9)^n of the remaining value. In graph form:

So really all you are doing by repeatedly lerping is evaluating an exponential curve, and that's why you can replace it with an actual exponential curve based on time.
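If you want to convince yourself, this sketch compares the remaining distance after n repeated lerps against the closed form (1 - coef)^n. The helper names are made up for the example.

```ruby
# Standard linear interpolation from a to b.
def lerp(a, b, t)
  a + (b - a) * t
end

# Distance left to the target after lerping `frames` times from 0.0 to 1.0.
def remaining_after(frames, coef)
  value = 0.0
  target = 1.0
  frames.times { value = lerp(value, target, coef) }
  target - value
end

(1..5).each do |n|
  puts "#{n}: iterated=#{remaining_after(n, 0.9)} closed_form=#{(1 - 0.9)**n}"
end
```

The two columns should agree apart from floating point noise in the last few digits.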

As an interesting aside, since you are moving partway towards the target value each frame, you'll never actually arrive at the target... or will you? A float can store ~7 significant digits, and a double ~16. Here’s a quick snippet of Ruby code to test what happens:

    value = 0.0
    target = 1.0
    alpha = 0.9

    100.times do |i|
      # One lerp step: keep (1 - alpha) of the old value, take alpha of the target.
      value = (1 - alpha)*value + alpha*target
      puts "#{i + 1}: #{value == target}"
    end

And the output?

    1: false
    2: false
    ... (more false values)
    15: false
    16: false
    17: true

It shouldn't be too surprising that precision runs out after the 16th iteration. (1 - 0.9)^17 is quite small. 1e-17 to be exact. That is so small that in order to store 1 - 1e-17 you would need 17 significant digits, and doubles can only store ~16! More interestingly, no matter what your starting and ending values are, it will always run out of precision after about 16 iterations. Most game engines use floats instead of doubles, and those can only store ~7 significant digits. So you should expect precision to run out after only the 7th iteration. What about for other constants? (Keep in mind I’m using doubles, and floats would run out in roughly half as many iterations.) For 0.8 you run out of precision after 23 iterations, 53 for 0.5.
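Ruby only has doubles, but you can get a rough feel for the float case by rounding every intermediate result to single precision. This is a sketch under that assumption: f32 and frames_until_equal are names I made up, and the pack/unpack round trip is just one way to emulate 32-bit floats, not what a game engine actually does.

```ruby
# Round a Ruby Float (a double) to the nearest single precision value
# by packing it as a 32-bit float and reading it back.
def f32(x)
  [x].pack('f').unpack1('f')
end

# Number of lerp steps from 0.0 until the value compares equal to 1.0,
# with every intermediate result rounded to single precision.
# Returns nil if that never happens within `limit` steps.
def frames_until_equal(alpha, limit = 1000)
  alpha = f32(alpha)
  value = f32(0.0)
  target = f32(1.0)
  limit.times do |i|
    kept = f32(f32(1 - alpha) * value)
    value = f32(kept + f32(alpha * target))
    return i + 1 if value == target
  end
  nil
end

puts frames_until_equal(0.9)
```

It should report a count in the single digits, in line with the ~7 significant digits a float can hold.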

With constants less than 0.5 the pattern changes and something curious happens. Say you keep lerping with a constant of 0.5. Eventually, you will run out of precision and the next possible floating point number after value will be target. When you compute the new value halfway between them, it rounds up to target. If you use a constant smaller than 0.5, it will round down to value instead. So rather than arriving at target, it will get permanently stuck at the floating point number immediately before it. For a constant of 0.4, the value gets stuck at 70 iterations, or 332 for 0.1.
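Here is a sketch that checks the "stuck one float short" behavior with doubles. It uses the same update as the snippet above and stops once a step no longer changes the value. lerp_until_settled is a made-up helper name, and Float#prev_float is used to get the double immediately below the target.

```ruby
# Lerp from 0.0 towards 1.0 until a step no longer changes the value.
# Returns the settled value and the number of steps that changed it.
def lerp_until_settled(alpha, limit = 10_000)
  value = 0.0
  target = 1.0
  limit.times do |i|
    next_value = (1 - alpha) * value + alpha * target
    return [value, i] if next_value == value
    value = next_value
  end
  [value, limit]
end

value, iterations = lerp_until_settled(0.4)
puts "settled after #{iterations} iterations"
puts "reached target: #{value == 1.0}"
puts "one float below target: #{value == 1.0.prev_float}"
```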
