When I get an idea for a new game, it often starts with a fantasy. For my first VR game, Darknet, it was the fantasy of being an elite hacker, flying through an abstract neon cyberspace, breaking through the layers of security and racing against the clock. My next game idea also started with a Hollywood power fantasy: I wanted to be the General in a sci-fi war movie, using a high-tech 3D interface to command my troops to victory. I wanted to see the whole battlefield, displayed in miniature as a glowing blue hologram, where I could tactically maneuver my troops and outwit my hapless enemy. That’s how Tactera was born.

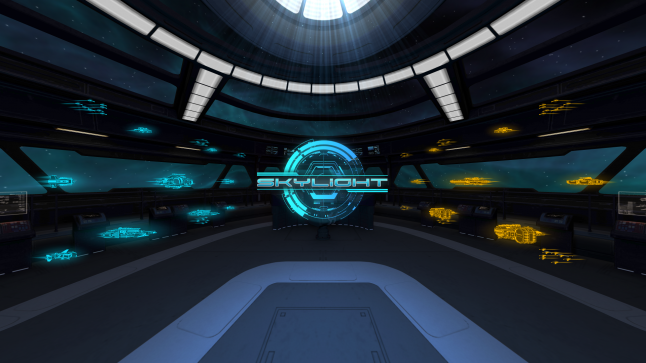

Today, I’m launching my third VR title, a turn-based tactics game called Skylight on Gear VR. This time, the fantasy was to be the admiral of a vast fleet of starships, carefully plotting our campaigns through space and trying to stay two steps ahead of the enemy. I wanted to feel like Admiral Ackbar, orchestrating one side of a massive battle from space, or Ender Wiggin calculating his next move against the Formics.

There was one problem: to do it right, I would need to be able to display a giant fleet of dozens of starships, from big capital ships to squadrons of tiny fighters, all flying around, with engine trails and blaster shots and explosions and lasers shooting everywhere. And all of this action would need to run in VR at a solid 60 FPS on a cell phone.

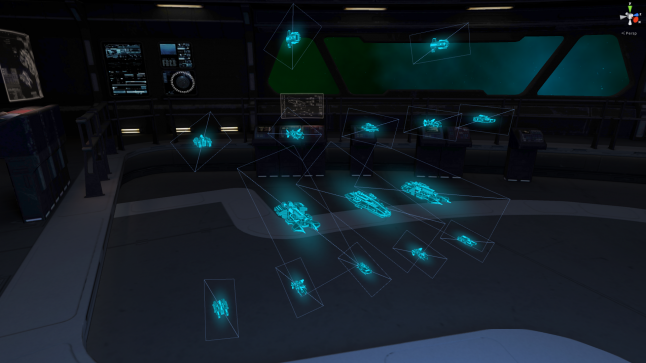

To face this challenge, I drew heavily on my experience with my earlier games. I had faced a similar issue when I made Tactera, and Skylight is built on the same tech. Both games were designed with performance limits in mind from the very start. To make a solid frame rate a realistic possibility, I decided to aim for an abstract, low-poly aesthetic for all of the gameplay elements. Both Skylight and Tactera are set inside a futuristic command center, and you control your units through a holographic interface.

Those stylized graphics let me keep the rendering process simple and straightforward. Using real-time dynamic lighting, shadows, and full-screen image effects can make for a prettier picture, but those techniques require the game to process each pixel many times over, which can become very slow. In my games, I used only unlit materials, which kept the rendering quick and easy. (I did use a lot of overlaid transparent objects, which can also cause fillrate issues, but I found that the Gear VR could handle those in moderation.) Also, because Skylight is set in a single room, the environment stayed naturally simple. There was no need to render sprawling open areas filled with extraneous props, which kept the rendering cost down further.

Next, I drafted a performance budget. What would I need to compute or render each frame? How many of each object might there be? How many polygons would that involve? How many draw calls? I knew that Oculus recommends an upper limit of about 50K polygons and 50 draw calls for a Unity game, and I made sure that the math worked out.

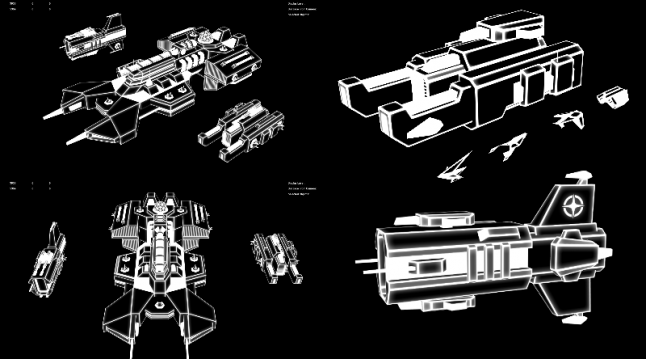

At this point, I knew that the polygon count would be a challenge, but I was luckily able to find a talented artist who could make ultra-low-poly models that still looked fantastic. I asked him to build ships ranging from 2,500 polygons (for a capital ship) to as few as 60 polygons (for a fighter). For the room itself, which required a more realistic style, he was able to make something that looked amazing with just a few thousand polygons. Since the player couldn’t freely move around and inspect the walls up close, this worked perfectly.
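The budget arithmetic described above can be sketched in a few lines. This is only an illustration: the 50K-polygon and 50-draw-call limits and the per-ship polygon counts (2,500 and 60) come from this post, while the instance counts, the room's polygon count, and the draw-call figures per group are made-up assumptions, as are the names `BudgetLine`, `totals`, and `fits`.

```python
from dataclasses import dataclass

# Limits quoted in this post for a Unity game on Gear VR.
POLYGON_LIMIT = 50_000
DRAW_CALL_LIMIT = 50


@dataclass
class BudgetLine:
    name: str
    count: int          # worst-case instances on screen (assumed)
    polygons_each: int  # polygons per instance
    draw_calls: int     # draw calls for the whole group (assumed)


def totals(lines):
    """Sum the worst-case polygon and draw-call cost of every budget line."""
    polygons = sum(line.count * line.polygons_each for line in lines)
    draw_calls = sum(line.draw_calls for line in lines)
    return polygons, draw_calls


def fits(lines):
    """True if the worst case stays within both limits."""
    polygons, draw_calls = totals(lines)
    return polygons <= POLYGON_LIMIT and draw_calls <= DRAW_CALL_LIMIT


# Hypothetical worst-case scene; only the 2,500 and 60 figures are from the post.
budget = [
    BudgetLine("capital ship", count=4, polygons_each=2_500, draw_calls=4),
    BudgetLine("fighter", count=200, polygons_each=60, draw_calls=25),
    BudgetLine("room", count=1, polygons_each=5_000, draw_calls=10),
    BudgetLine("glow quads and particles", count=1, polygons_each=2_000, draw_calls=4),
]

if __name__ == "__main__":
    print(totals(budget), fits(budget))
```

Writing the budget down this way makes it easy to see which line item to cut when a new feature pushes the total over a limit.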

My next step, and perhaps the most useful, was to create a stress test. This was a simple Unity scene filled with placeholders for all the different objects in my budget. It looked like a big box crammed full of random models, glowing orbs, and particle effects shooting in every direction. To test CPU limits, I added some dummy scripts that ran through empty loops and randomly moved the models every frame, turning the scene into even more of a crazy mess. Then I stuck it onto a phone and tested it on Gear VR.

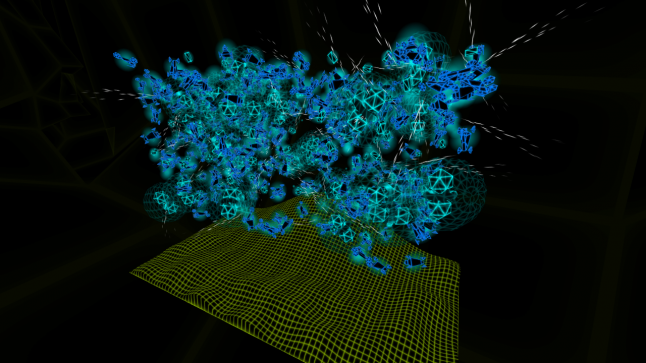

This was the start of a long process of discovery, with lots of experimentation, testing, and iteration. Unity had a few surprises for me. I found that it was sometimes much more efficient to manually combine objects into a single mesh, even if those objects could be statically or dynamically batched. I ended up creating my own system for combining lots of simple billboarded quads into a single object. Thus, large numbers of common spherical objects can be rendered in one low-poly mesh, like the bombs being dropped by this squadron of bombers:

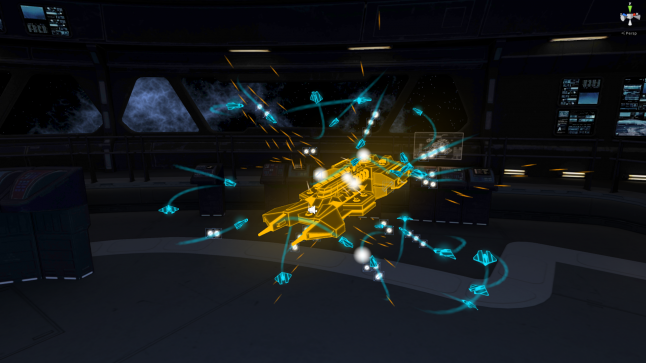

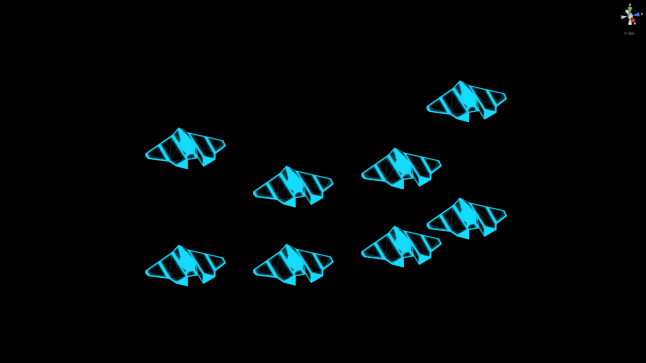
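As a rough, engine-agnostic sketch of the combined-quad idea (not the actual system used in Skylight), the function below builds one vertex list and one triangle index list containing a camera-facing quad for every object. The function name and parameters are hypothetical, and the winding order and per-frame rebuild strategy are left open. In Unity, arrays like these would presumably be assigned to a single mesh.

```python
def build_billboard_mesh(centers, size, cam_right, cam_up):
    """Return (vertices, triangles) for one mesh holding a quad per center.

    centers   -- list of (x, y, z) world positions, one per object
    size      -- edge length of each quad
    cam_right -- camera's right vector (unit length)
    cam_up    -- camera's up vector (unit length)
    """
    half = size / 2.0
    vertices = []
    triangles = []
    for cx, cy, cz in centers:
        base = len(vertices)  # index of this quad's first vertex
        # Four corners, offset along the camera axes so the quad faces the camera.
        for sx, sy in ((-1, -1), (1, -1), (1, 1), (-1, 1)):
            vertices.append((
                cx + half * (sx * cam_right[0] + sy * cam_up[0]),
                cy + half * (sx * cam_right[1] + sy * cam_up[1]),
                cz + half * (sx * cam_right[2] + sy * cam_up[2]),
            ))
        # Two triangles per quad, indexed into the shared vertex list.
        triangles += [base, base + 1, base + 2, base, base + 2, base + 3]
    return vertices, triangles


if __name__ == "__main__":
    bombs = [(0, 0, 0), (10, 0, 0), (0, 5, 0)]
    verts, tris = build_billboard_mesh(bombs, 2, (1, 0, 0), (0, 1, 0))
    print(len(verts), len(tris))
```

Because every quad lives in the same vertex and index buffers, the whole group costs one draw call no matter how many objects it contains.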

Similarly, I found that it was sometimes better to combine groups of units into one skinned and rigged mesh rather than keep them as separate objects (even though separate meshes could be batched and skinned meshes can’t). Each squadron of eight fighters in Skylight, like the one shown below, is actually a single skinned mesh, with each unit controlled via bones. Remarkably, in my tests this turned out to be the faster approach, apparently because rendering one skinned mesh costs less than batching eight separate meshes on the fly.

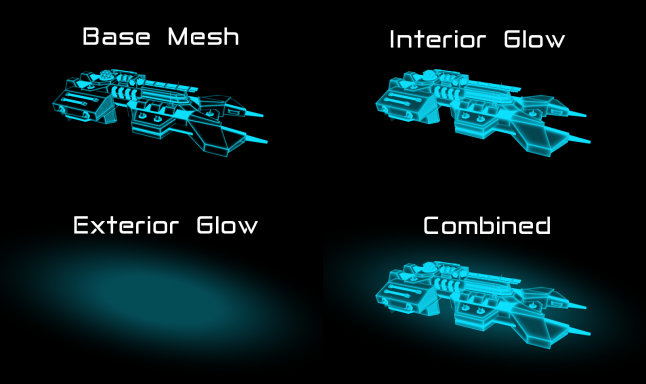

I also used some tricks to get cool graphical effects without too great a cost to performance. For one thing, I wanted my holograms to glow, so I implemented a “fake glow” effect for all the holographic ships. First, I added a glow effect to the model’s texture and rendered it as a normal opaque object. Then, I rendered a simple blurred circle (a billboarded quad) on each unit with a lower renderQueue value, so it was drawn first and appeared behind the model.

Later, I upgraded the system to make the quads stretch and rotate based on the angle of the ship, so the glow would be in proper proportion to the rest of the model. Best of all, these “glow quads” worked great with the aforementioned system of combined billboarded quads. As a result, every glow effect for every unit in the game is rendered with just one mesh!

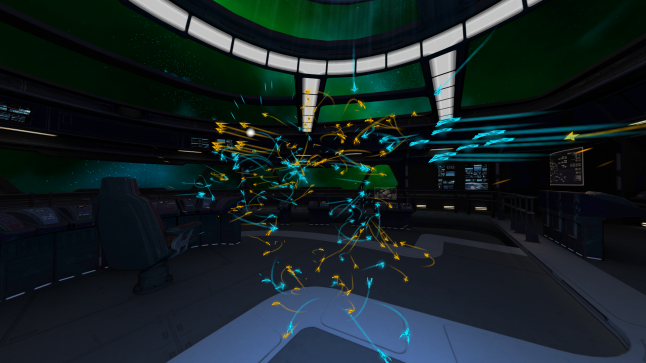

Another of my favorite tricks involved Unity’s built-in particles. I knew I would need to use Unity’s particle systems to render the laser shots, the twisting engine trails of fighters, and the giant blaster shots fired by the capital ships. However, I wanted to be able to render hundreds of fighters at once, each with its own trails and weapons, and each particle system requires its own draw call (i.e., particle systems can’t be batched).

My solution was to create a single particle system for each of my purposes (lasers, blasters, engine trails) and let all of the ships share it. Every frame, each ship would take control of the particle system, move it into the correct position, manually emit a single particle, and pass it on to the next ship. (To make this work, you have to set the system’s Simulation Space to World. That way, each particle can move independently.) As a result, I can have hundreds of engine trails (or lasers, or blasters) all rendered in a single draw call.
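The hand-off described above can be modeled without any engine code. The sketch below is a simplified stand-in, not Unity's particle API: `SharedEmitter`, `Particle`, and `emit_trails` are hypothetical names, and the simulation is reduced to position, velocity, and age. The key point it illustrates is the world-space behavior: each particle records where the emitter was at the moment of emission and is unaffected when the emitter moves on to the next ship.

```python
from dataclasses import dataclass, field


@dataclass
class Particle:
    position: tuple
    velocity: tuple
    age: float = 0.0


@dataclass
class SharedEmitter:
    """Stand-in for one world-space particle system shared by every ship."""
    lifetime: float
    position: tuple = (0.0, 0.0, 0.0)
    particles: list = field(default_factory=list)

    def emit(self, velocity=(0.0, 0.0, 0.0)):
        # World space: the particle copies the emitter's current position
        # and is independent of the emitter from then on.
        self.particles.append(Particle(self.position, velocity))

    def simulate(self, dt):
        """Age and move every particle, dropping the ones that have expired."""
        survivors = []
        for p in self.particles:
            p.age += dt
            if p.age < self.lifetime:
                p.position = tuple(c + v * dt for c, v in zip(p.position, p.velocity))
                survivors.append(p)
        self.particles = survivors


def emit_trails(emitter, ship_positions):
    """Each ship in turn moves the shared emitter to itself and emits one particle."""
    for pos in ship_positions:
        emitter.position = pos
        emitter.emit()


if __name__ == "__main__":
    trails = SharedEmitter(lifetime=1.0)
    emit_trails(trails, [(0.0, 0.0, 0.0), (5.0, 0.0, 0.0)])
    trails.simulate(1 / 60)
    print(len(trails.particles))
```

In Unity, the equivalent step would presumably be moving the system's transform and calling `ParticleSystem.Emit(1)` once per ship per frame, with one such shared system per effect type.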

There were a lot of other, more standard optimizations as well, many of which I’ve discussed before with respect to my previous games. I used the Unity Profiler to figure out where my game was slowing down, and I did my best to speed up those sections of code. In particular, I found that there were a lot of computationally expensive tasks that didn’t need to be executed every frame. (For example, ships need to figure out if they ought to be shooting at a new enemy target, but it’s okay to evaluate that every five frames or so.) Beyond that, I worked hard to batch draw calls, simplify meshes, and plug memory leaks. Chris Pruett has written about how to use the profiler and improve performance in Gear VR games, and I found his tips to be invaluable.
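The "every five frames or so" idea for target evaluation can be sketched as follows. The `Ship` class and `run_frames` helper are hypothetical, and offsetting each ship by its ID is an added assumption (not something described in this post) so that the expensive checks are spread evenly across frames instead of all landing on the same one.

```python
class Ship:
    def __init__(self, ship_id):
        self.ship_id = ship_id
        self.target_checks = 0

    def update(self, frame, interval=5):
        """Run once per frame; return True if the expensive check ran."""
        # Cheap per-frame work (movement, animation) would go here.
        # Offsetting by ship_id staggers the checks across frames (assumption).
        if (frame + self.ship_id) % interval == 0:
            self.evaluate_target()
            return True
        return False

    def evaluate_target(self):
        # Placeholder for the expensive "should I pick a new target?" logic.
        self.target_checks += 1


def run_frames(ships, frame_count):
    """Simulate frames and return how many expensive checks ran on each one."""
    return [sum(ship.update(frame) for ship in ships) for frame in range(frame_count)]


if __name__ == "__main__":
    fleet = [Ship(i) for i in range(10)]
    print(run_frames(fleet, 10))
```

With ten ships and an interval of five, only two ships run the expensive check on any given frame, rather than all ten doing it every frame.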

After all that work, and even after hitting 60 FPS, I can still think of ways to improve the performance further. And, as it says in the Oculus documentation, “no optimization is ever wasted” in mobile VR games due to heat and battery concerns. You can always make it faster.

Getting Skylight up to speed was a challenge, but I believe it was worthwhile in the end. Most of the time, I don’t like to emphasize the technical side of game development. Programming is neither my talent nor my passion, and smooth performance isn’t what making games is really about—it’s a means to an end. But, in this case, I’m very proud that I was able to make it work. I started out with a vision of a certain game experience, and I wanted to share that experience on Gear VR. A high frame rate isn’t what gets me out of bed in the morning, but it enabled me to make a better game and to share my vision with the world. And that’s what making games is about.
