My current personal project, Bestiarium, depends heavily on procedural character generation. In this post, I talk about some of the techniques that I've experimented with to create a prototype where you can invoke procedural creatures.

After generating procedural chess pieces, the obvious next step would be full-blown creatures. There’s this game idea I’m playing around with which, like every side project, I may or may not be doing - I’m an expert in Schrödinger’s gamedev. This one even has a name, so it might go a longer way.

Bestiarium is deeply dependent on procedural character generation, so I prototyped a playable Demon Invocation Toy - try it out before reading! In this (quite big) post, I’ll talk about some of the techniques I’ve experimented with. Sit tight and excuse the programmer art!

Part 1: size matters

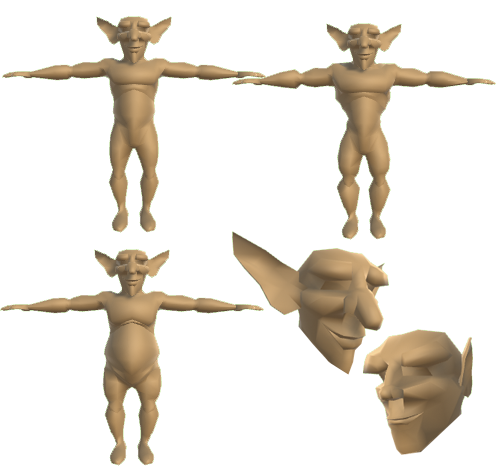

One thing I always played around with in my idle times when I worked on the web version of Ballistic was resizing bones and doing hyper-deformed versions of the characters (and I’m glad apparently I’m not the only one that has fun with that kind of thing). Changing proportions can completely transform a character by changing its silhouette, so the first thing I tried out was simply getting some old models and rescaling a bunch of bones randomly to see what came out of it.

One thing you have to remember is that your bones will usually be in a hierarchy, so if you resize the parent at runtime, you will also scale the children accordingly. This means you have to apply the inverse scale to the children to make sure they stay the same size as before. So you end up with something like

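    // Scales each bone uniformly, then applies the inverse scale to its direct
    // children so that they keep their original size.
    private float ScaleLimb(List<Transform> bones, float scale)
    {
        for (int i = 0; i < bones.Count; i++)
        {
            bones[i].localScale = new Vector3(scale, scale, scale);

            // Iterating a Transform enumerates its direct children.
            foreach (Transform t in bones[i])
            {
                t.localScale = Vector3.one * 1 / scale;
            }
        }

        return scale;
    }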

(redundant operations for clarity)
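To see why the inverse scale on the children does the trick, here is a minimal engine-free sketch in Python (not Unity code). It assumes uniform scale only, and `Bone`, `world_scale` and `scale_limb` are hypothetical names made up for illustration: a bone's effective size is the product of the local scales along its parent chain.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Bone:
    """Hypothetical stand-in for a Transform, tracking uniform scale only."""
    name: str
    local_scale: float = 1.0
    parent: Optional["Bone"] = None
    children: List["Bone"] = field(default_factory=list)

    def add_child(self, child: "Bone") -> "Bone":
        child.parent = self
        self.children.append(child)
        return child


def world_scale(bone: Bone) -> float:
    """Effective scale: product of local scales up the parent chain."""
    scale = bone.local_scale
    while bone.parent is not None:
        bone = bone.parent
        scale *= bone.local_scale
    return scale


def scale_limb(bone: Bone, scale: float) -> None:
    """Scale one bone and cancel the change out on its direct children."""
    bone.local_scale = scale
    for child in bone.children:
        child.local_scale = 1.0 / scale


upper_arm = Bone("upper_arm")
forearm = upper_arm.add_child(Bone("forearm"))
hand = forearm.add_child(Bone("hand"))

scale_limb(upper_arm, 2.0)
```

After the call, the upper arm is twice as big while the forearm and hand end up at their original effective size, because the forearm's inverse scale cancels the parent's scale for everything below it.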

But that leads to another problem: you’re making legs shorter and longer, so some of your characters will either have their feet up in the air, or under the ground level. This is a thing that I could struggle with in two ways:

1. Actually research a proper way of repositioning the character’s feet via IK and adjust the body height based on that.
2. Kludge.

I don’t know if you’re aware, but gambiarras are not only a part of Brazilian culture, but dang fun to talk about if they actually work. So I had an idea for a quick fix that would let me go on with prototyping stuff. This was the result:

Unity has a function for SkinnedMeshRenderers called BakeMesh, which dumps the current state of your mesh into a new one. I was already using that for other things, so while I went through the baked mesh’s vertices, I cached the one with the bottom-most Y coordinate, and then offset the root transform by that amount. Dirty, but done in 10 minutes and worked well enough, so it allowed me to move on. Nowadays I’m not using the bake function for anything else anymore, so I could probably switch it to something like a raycast from the foot bone. Sounds way less fun, right?
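The arithmetic behind the kludge is tiny. Here is a rough Python sketch of it (not the actual Unity code), assuming the baked vertices are available as plain `(x, y, z)` tuples in a space where Y points up and lines up with the axis the root moves along. `ground_offset` and the sample data are hypothetical.

```python
from typing import Iterable, Tuple

Vertex = Tuple[float, float, float]  # (x, y, z), with y pointing up


def ground_offset(vertices: Iterable[Vertex], ground_y: float = 0.0) -> float:
    """Return how far to move the root along Y so the lowest vertex rests on the ground."""
    lowest_y = min(y for _, y, _ in vertices)
    return ground_y - lowest_y


# A character whose scaled-up legs poke 0.25 units below the floor.
sunken = [(0.0, -0.25, 0.0), (0.1, 0.9, 0.0), (0.0, 1.8, 0.1)]
# A character whose shortened legs leave it floating 0.5 units above the floor.
floating = [(0.0, 0.5, 0.0), (0.1, 1.2, 0.0), (0.0, 1.6, 0.1)]
```

A positive result means "lift the character", a negative one means "lower it". The obvious weakness is that any vertex can win, so a dangling tail or a weapon would be treated as the feet.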

Part 2: variations on a theme

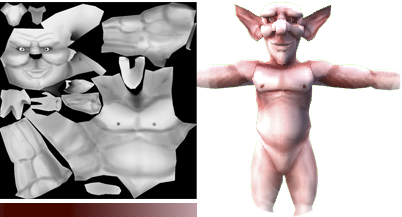

I started looking into modular characters, but I ran into a problem: modularity creates seams, so it only looks great on clothed characters (e.g. it’s easy to hide a seam for the hands in shirt sleeves, for the torso in the pants, etc.). In Bestiarium, however, I want naked, organic creatures, so the borders between parts have to look seamless.

This is probably the problem I poured most of my time into, and yet, I couldn’t find a good solution, so I timed out on that. The basics are easy: make sure you have “sockets” between the body parts that are the same size so you can merge the vertices together. But merging vertices together is way more complicated than it seems when you also have to take care of UV coordinates, vertex blend weights, smoothing groups etc. Usually, I ended up either screwing up the model, the extra vertices that create the smoothing groups or the UV coordinates; I even tried color coding the vertices before exporting to know which I should merge, but no cigar. I’m pretty sure I missed something very obvious, so I’ll go back to that later on - therefore, if you have any pointers regarding that, please comment!

However, since I wanted to move on, I continued with the next experiment: blend shapes. For that, I decided it was time to build a new model from scratch. I admit that the best thing would be trying out something that wasn’t a biped (since I’ve been testing with bipeds all this time), but that would require a custom rig, and not having IK wouldn’t be an option anymore, so I kept it simple.

The shapes were also designed to alter the silhouette, so they needed to be as different from the base as possible. From the base model, I made a different set of ears, a head with features that were less exaggerated, a muscular body and a fat one.
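For readers who haven't used blend shapes, the underlying math is just a weighted sum of per-vertex offsets. Below is a small Python sketch of that idea, not the prototype's code: the function names and the two-vertex "mesh" are made up, and weights are in [0, 1] here (Unity's own blend shape weights use a 0 to 100 range).

```python
import random
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


def apply_blend_shapes(base: List[Vec3],
                       shapes: Dict[str, List[Vec3]],
                       weights: Dict[str, float]) -> List[Vec3]:
    """Move each base vertex toward each target shape by that shape's weight."""
    result = []
    for i, vertex in enumerate(base):
        blended = list(vertex)
        for name, target in shapes.items():
            w = weights.get(name, 0.0)
            for axis in range(3):
                blended[axis] += w * (target[i][axis] - vertex[axis])
        result.append(tuple(blended))
    return result


def random_weights(shape_names, rng: random.Random) -> Dict[str, float]:
    """Pick an independent weight in [0, 1] for every shape."""
    return {name: rng.random() for name in shape_names}


# Two-vertex toy "mesh" with hypothetical shapes named after the ones in the post.
base = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
shapes = {
    "muscular": [(0.0, 0.0, 0.0), (2.0, 0.0, 0.0)],
    "fat": [(0.0, -1.0, 0.0), (1.0, -1.0, 0.0)],
}
creature = apply_blend_shapes(base, shapes, random_weights(shapes, random.Random(7)))
```

Because each shape contributes its own offset, shapes that touch different areas (ears versus body) combine cleanly, while shapes that move the same vertices add up and can overshoot when both weights are high.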

Part 3: there is more than one way to skin an imp

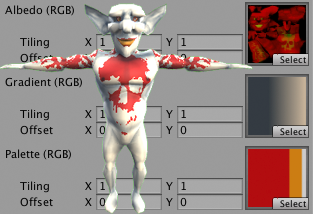

In the kingdom of textures, a good cost/benefit variation will usually come from colors. Biggest problem is, if you simply tint, you will probably lose a lot of color information - because usually tinting is done via multiplication, your whites will become 100% the tinted color, so you lose the non-metallic highlights.
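A quick way to convince yourself of the problem, sketched in plain Python with colors as RGB tuples in [0, 1] (the names and values are only illustrative):

```python
from typing import Tuple

Color = Tuple[float, float, float]  # RGB, each channel in [0, 1]


def multiply_tint(pixel: Color, tint: Color) -> Color:
    """Classic tint: multiply each channel of the pixel by the tint color."""
    return tuple(p * t for p, t in zip(pixel, tint))


tint = (0.8, 0.2, 0.1)          # a reddish tint
highlight = (1.0, 1.0, 1.0)     # pure white highlight in the source texture
midtone = (0.5, 0.5, 0.5)
```

Tinting the white highlight returns exactly the tint color, and no pixel can ever come out brighter than the tint in any channel, which is why the highlights disappear.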

Ok, so, let’s focus on those two things: multiplications and percentages. As I’ve said before, all the cool stuff that computers do is just a bunch of numbers in black boxes. There are a lot of ways to represent a color: RGB, HSL, HSV… but in all of them, you can always extract a 0 to 1 value, which is basically a percentage from “none” to “full value” in a color channel. Whatever that represents in the end, it’s still a [0,1] that you can play around with.

There’s a tool that you can use to texture stuff which is gradient mapping. Basically what you do is provide a gradient and sample it based on a value from 0 to 1. You can do a lot of cool stuff with it, including… texturing your characters!

Granted, that’s pretty easy to do in Photoshop, but how do you do that at runtime? Shaders to the rescue! There’s another thing that goes from 0 to 1, and that’s UV coordinates. This means we can directly translate a color channel value to the UV coordinate of a ramp texture, sampling the pixel in the secondary (ramp) texture based on the value of the main (diffuse) one. If you’re not familiar with the concept, go see this awesome explanation from @simonschreibt in his article analyzing a Fallout 4 explosion effect.

In your shader, you’ll have something like

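    // Read the red channel of the diffuse texture as a greyscale value...
    float greyscale = tex2D(_MainTex, IN.uv_MainTex).r;
    // ...and use it as the U coordinate into the ramp texture.
    float3 colored = tex2D(_Gradient, float2(greyscale, 0.5)).rgb;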

Which roughly means “let’s read the red channel of the main diffuse texture into a float using the UV coordinates from the input, then let’s use that value to create a 'fake' UV coordinate to read out the ramp texture and get our final color”.
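The same lookup can be mimicked on the CPU. The Python sketch below is a simplification rather than a faithful model of GPU sampling (it ignores texel centers and treats the ramp as a plain list of colors), and `sample_ramp` and `gradient_map` are hypothetical helper names.

```python
from typing import List, Tuple

Color = Tuple[float, float, float]


def sample_ramp(ramp: List[Color], u: float) -> Color:
    """Sample a 1D ramp at u in [0, 1], linearly interpolating between texels."""
    u = min(max(u, 0.0), 1.0)
    position = u * (len(ramp) - 1)
    left = int(position)
    right = min(left + 1, len(ramp) - 1)
    t = position - left
    return tuple(a + (b - a) * t for a, b in zip(ramp[left], ramp[right]))


def gradient_map(greyscale_pixels: List[float], ramp: List[Color]) -> List[Color]:
    """Replace every greyscale value with the ramp color found at that position."""
    return [sample_ramp(ramp, value) for value in greyscale_pixels]


# Dark purple shadows, red midtones, white highlights.
ramp = [(0.2, 0.0, 0.3), (0.8, 0.1, 0.1), (1.0, 1.0, 1.0)]
pixels = [0.0, 0.5, 1.0]
colored = gradient_map(pixels, ramp)
```

Unlike a multiplicative tint, the brightest greyscale values map to whatever sits at the end of the ramp, so white highlights survive as long as the ramp ends in white.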

Neat! We have a color tint and we don’t necessarily lose our white highlight areas. But… that still looks kind of bland. That’s because we have a monochromatic palette, and no colors that complement it. This is the point where I really started missing the old school graphics, where you could have 50 ninjas in Mortal Kombat by simply switching their palettes. So this is where I got kind of experimental: how could we have palettes in our 3d texture?

It would be easy if I just wanted to have 1 extra color: creating a mask texture and tinting the pixels using that mask would suffice. But what if we wanted to have several different colors? So far we have 1 diffuse texture and 1 for the ramp - would we then have to add 1 extra texture for each mask?

What I did in the end was to put a multi-mask in a single channel: each area gets a different shade of gray, and that value is also used to read from a ramp texture. Since there’s filtering going on, we can’t really use all 256 different values, because the graphics card will blend neighboring pixels, but we can have more than 1. I tested with 3 and it looked decent, although there was some leaking, so I have to look a bit more into it.
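Here is one possible way to picture the single-channel multi-mask and where the leaking might come from, sketched in Python. This is a guess at the mechanics, not the prototype's shader: it assumes evenly spaced grey levels per region and models filtering as a simple average of two neighboring texels. All names are hypothetical.

```python
def region_from_mask(mask_value: float, region_count: int) -> int:
    """Map a mask value in [0, 1] to the nearest of region_count evenly spaced grey levels."""
    clamped = min(max(mask_value, 0.0), 1.0)
    return int(clamped * (region_count - 1) + 0.5)


def grey_level(region: int, region_count: int) -> float:
    """Grey value an artist would paint for a given region."""
    return region / (region_count - 1)


def bilinear_midpoint(a: float, b: float) -> float:
    """What filtering returns halfway between two neighboring texels."""
    return (a + b) / 2.0


REGIONS = 3  # e.g. skin, belly, horns
skin, belly, horns = (grey_level(i, REGIONS) for i in range(REGIONS))

# On the border between skin (0.0) and horns (1.0) the filtered value is 0.5,
# which decodes as belly: one plausible source of leaking between areas.
leaked = region_from_mask(bilinear_midpoint(skin, horns), REGIONS)
```

Under these assumptions, a border between two regions whose grey levels are far apart passes through every level in between, so a thin line of the "middle" region's color can show up along the seam. Keeping adjacent areas on adjacent grey levels, or using point filtering for the mask, are two things that could be tried.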

So we’re down to 1 diffuse, 1 mask and 2 ramps (4 against 6 if we had 3 different masks), right? Wrong. Remember: we’re talking a single channel, so this means we can actually write both the diffuse AND the masks into 1 RGBA texture, simply using 1 channel for each. And that even leaves us 1 extra channel to play with, if we want to keep Alpha for transparency! This means if we wanted to only have 2 masked parts, we could even ditch the second ramp texture, the same if we had no transparency and wanted 3 masks.
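As a rough illustration of the channel packing, here is a Python sketch that treats each single-channel image as a flat list of 8-bit values. The channel assignment (red for diffuse, green for the mask, blue left free, alpha for transparency) is an assumption made for this example, and the function names are hypothetical.

```python
from typing import List, Tuple

RGBA = Tuple[int, int, int, int]


def pack_channels(diffuse: List[int], mask: List[int], alpha: List[int]) -> List[RGBA]:
    """Pack three single-channel 8-bit images into one RGBA image; blue stays free."""
    if not (len(diffuse) == len(mask) == len(alpha)):
        raise ValueError("all channels must have the same number of pixels")
    return [(d, m, 0, a) for d, m, a in zip(diffuse, mask, alpha)]


def unpack_channels(pixels: List[RGBA]):
    """Split the packed image back into (diffuse, mask, alpha), as a shader would with .r/.g/.a."""
    diffuse = [p[0] for p in pixels]
    mask = [p[1] for p in pixels]
    alpha = [p[3] for p in pixels]
    return diffuse, mask, alpha


diffuse = [12, 200, 255]
mask = [0, 128, 255]
alpha = [255, 255, 0]
packed = pack_channels(diffuse, mask, alpha)
```

One texture fetch then gives the shader everything it needs for the ramp lookups, and the unused blue channel is available for a second mask.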

Ok, we have a shader that we can cram stuff into, so all we must do is make sure we have enough ramps to create variety. This means either having a bunch of pre-made ramp textures or…

Part 4: gotta bake ‘em all!

Unity has a very nice Gradient class, which is also serializable. This leaves us the option of actually generating our gradients at runtime as well, and even randomizing them. Then we simply have to bake a ramp texture from the Gradient, which is quite simple:

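    // Bakes a Gradient into a ramp texture. FinalTexSize is a Vector2 field
    // holding the ramp's width and height.
    private Texture2D GetTextureFromGradient(Gradient gradient)
    {
        int width = (int)FinalTexSize.x;
        int height = (int)FinalTexSize.y;

        Texture2D tex = new Texture2D(width, height);
        tex.filterMode = FilterMode.Bilinear;
        // Keep the two ends of the ramp from bleeding into each other.
        tex.wrapMode = TextureWrapMode.Clamp;

        // SetPixels32 expects width * height entries, so every row gets a copy of the ramp.
        Color32[] rampColors = new Color32[width * height];
        for (int x = 0; x < width; x++)
        {
            // Sample so that the last column lands exactly on the end of the gradient.
            Color32 color = gradient.Evaluate(width > 1 ? (float)x / (width - 1) : 0f);
            for (int y = 0; y < height; y++)
            {
                rampColors[y * width + x] = color;
            }
        }

        tex.SetPixels32(rampColors);
        tex.Apply();
        return tex;
    }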
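The randomizing part is not shown above, so here is a loose Python sketch of the whole idea: build a gradient from random color keys, then bake it into a row of texels. It does not use Unity's Gradient class; `random_gradient`, `evaluate` and `bake_ramp` are hypothetical stand-ins, the keys are evenly spaced for simplicity, and both `key_count` and `width` are assumed to be at least 2.

```python
import random
from typing import List, Tuple

Color = Tuple[float, float, float]
Key = Tuple[float, Color]  # (position in [0, 1], color)


def random_gradient(rng: random.Random, key_count: int = 3) -> List[Key]:
    """Build a gradient with evenly spaced keys and random colors."""
    return [
        (i / (key_count - 1), (rng.random(), rng.random(), rng.random()))
        for i in range(key_count)
    ]


def evaluate(gradient: List[Key], t: float) -> Color:
    """Linearly interpolate between the two keys surrounding t."""
    t = min(max(t, 0.0), 1.0)
    for (t0, c0), (t1, c1) in zip(gradient, gradient[1:]):
        if t <= t1:
            f = (t - t0) / (t1 - t0)
            return tuple(a + (b - a) * f for a, b in zip(c0, c1))
    return gradient[-1][1]


def bake_ramp(gradient: List[Key], width: int) -> List[Color]:
    """Sample the gradient into width texels, covering both ends."""
    return [evaluate(gradient, x / (width - 1)) for x in range(width)]


gradient = random_gradient(random.Random(42))
ramp = bake_ramp(gradient, 16)
```

Fully random colors tend to produce muddy ramps, which is a good argument for sourcing palettes from somewhere with better taste than a random number generator.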

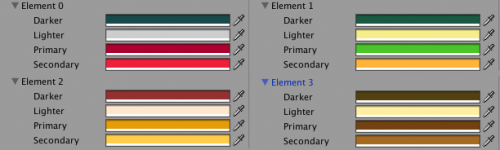

Here’s where I had to make a decision of where to go next: researching procedural palettes, or simply getting a bunch of samples from somewhere. For the purpose of this prototype, I just needed good palettes to test my shader, so I wondered where I could find tons of character palettes.

Even though I’m a SEGA kid, there’s no discussion about Nintendo being great at character color palettes. Luckily, right about the time I was doing the prototype, someone at work sent a link to pokeslack.xyz, where you can get Slack themes from Pokémon. Even better: the page actually created the palettes on the fly based on the ~600 Pokémon PNGs it used as source.

I wrote a Python script that downloaded a bunch of PNGs from that site and ported the palette creation code to C#, then made a little Unity Editor to extract the palettes and make them into gradients I could use to create ramp textures. Ta-da! Hundreds of palettes for me to randomize from!
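For anyone who wants to try something similar, below is a very rough Python sketch of one way to pull a palette out of a sprite. To be clear, this is not the pokeslack algorithm or the C# port: it swaps in a simple "most frequent colors after coarse quantization" approach, works on a plain list of RGBA tuples instead of a real PNG, and orders the result from dark to light so it could feed a ramp. All function names are hypothetical.

```python
from collections import Counter
from typing import List, Tuple

RGBA = Tuple[int, int, int, int]
RGB = Tuple[int, int, int]


def extract_palette(pixels: List[RGBA], size: int = 4, step: int = 32) -> List[RGB]:
    """Return the `size` most common opaque colors after coarse quantization."""
    counts = Counter(
        (r // step * step, g // step * step, b // step * step)
        for r, g, b, a in pixels
        if a > 0  # skip the transparent background of the sprite
    )
    return [color for color, _ in counts.most_common(size)]


def luminance(color: RGB) -> float:
    """Rec. 601 luma, used here only to order colors from dark to light."""
    r, g, b = color
    return 0.299 * r + 0.587 * g + 0.114 * b


def palette_to_ramp_keys(palette: List[RGB]) -> List[RGB]:
    """Sort dark to light so shadows in the diffuse map to the darkest color."""
    return sorted(palette, key=luminance)


# Tiny fake sprite: mostly orange, some cream, a little dark outline, transparent background.
sprite = (
    [(240, 130, 40, 255)] * 6
    + [(250, 230, 180, 255)] * 3
    + [(30, 20, 20, 255)] * 2
    + [(0, 0, 0, 0)] * 5
)
keys = palette_to_ramp_keys(extract_palette(sprite, size=3))
```

Sorting by luminance is a design choice for this sketch: it keeps dark colors at the shadow end of the ramp, at the cost of ignoring how dominant each color was in the source image.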

The result of all of this is the Invocation prototype, so make sure you check it out and tell me what you think!

Prologue

If you read all the way here, I hope some part of this wall of text and the links within it are of any use to you! In case you have any questions and/or suggestions (especially regarding properly welding vertices at runtime without ruining everything in the mesh), hit me up in the comments section or on twitter @yankooliveira!

I’ll keep doing experiments with Bestiarium for now, and might even post about some of it (probably on my blog). Who knows, maybe this is a creature I’m supposed to invoke.
