
This is a demo of my university project. It includes a short video demonstration as well as a link to the GitHub project page.

The HTC Vive was used as the VR device because my company agreed to lend it to me for the duration of this project, so I decided to pair it with Unreal Engine 4, which was already installed on most of our machines. The initial setup ended up being a cakewalk.

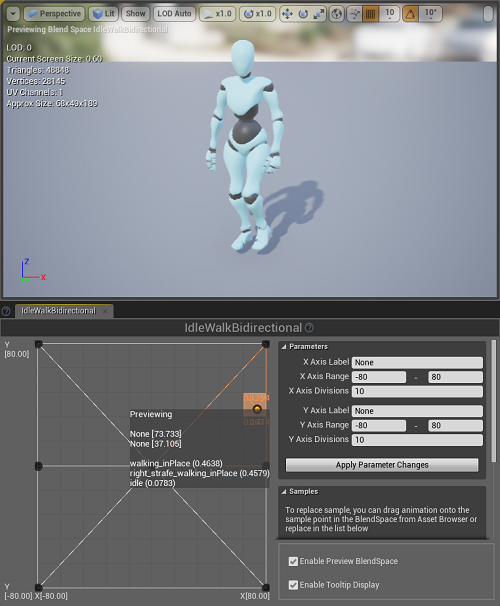

Besides the HTC Vive and Unreal Engine 4, it should also be mentioned that the main skinned model (the one you see in the figure below) was downloaded from mixamo.com.

Figure 1. Flipping the table

The problem with today's VR devices is that, although they provide an excellent way for a user to interact with the nearby environment, they do a poor job of representing the user's body. In practice, this means only parts like the head and fists are visible to the user. This project addresses the issue by complementing those parts with the rest of the body, resulting in a full virtual body experience (with some expected mismatches in the position and orientation of some bones). Three main techniques are used:

Animation blending

Forward kinematics

Inverse kinematics

The system is built so that the user can rotate their body left or right and translate it in all four directions. There are no animations for rotating, since rotation is less significant for this demo, but there are animations for each of the four translation directions. The input for the translation is the absolute position in space of the HTC Vive headset.

Animations are blended together as shown in Figure 2. Barycentric interpolation is used to compute the weights of the individual animations, and the weighted combination gives the final result.

Figure 2. Animation blending
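To make the weighting step more concrete, here is a minimal sketch of barycentric interpolation over a single triangle of a 2D blend space. It is not the code from the project (the project does this with Unreal Engine's built-in tools); the function name, the placement of the idle, forward, and right animations at the triangle corners, and the sample input are all assumptions made for illustration.

```python
def barycentric_weights(p, a, b, c):
    """Return (wa, wb, wc) with p = wa*a + wb*b + wc*c and wa + wb + wc = 1.

    p is the 2D blend input; a, b, c are the 2D positions assigned to three
    animations (hypothetical layout, not taken from the project).
    """
    (px, py), (ax, ay), (bx, by), (cx, cy) = p, a, b, c
    det = (by - cy) * (ax - cx) + (cx - bx) * (ay - cy)
    if det == 0:
        raise ValueError("degenerate triangle: the three points are collinear")
    wa = ((by - cy) * (px - cx) + (cx - bx) * (py - cy)) / det
    wb = ((cy - ay) * (px - cx) + (ax - cx) * (py - cy)) / det
    wc = 1.0 - wa - wb
    return wa, wb, wc


# Assumed layout: idle at the origin, "forward" on +y, "right" on +x.
idle, forward, right = (0.0, 0.0), (0.0, 1.0), (1.0, 0.0)
print(barycentric_weights((0.25, 0.5), idle, forward, right))
# prints (0.25, 0.5, 0.25)
```

Each weight says how much its animation contributes to the final pose. If the input falls outside the triangle, one of the weights becomes negative, so a full blend space would first pick the triangle that contains the input.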

The inverse kinematics part is built entirely in the Animation Blueprint. Two important variables need explaining before we go deeper. The first is the effector location, which is the goal location for the end bone. If this location is within reach (given the bone lengths and assuming the root bone does not move), the end bone (e.g. a fist) will be placed at that position. The second is the joint target location, which is the location the middle joint will gravitate towards.

For the legs, the joint target location of each knee is set in front of the corresponding foot. This way the knees always bend in the right direction (no grasshopper-looking mutants). The effector location for each foot is left unchanged except for the y component (height).

Hands are a little different: the effector location for each fist is set to the position of the corresponding Vive controller, and the joint target location is set away from the body so that the elbows always bend in that direction.
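The roles of the effector location and the joint target location can be illustrated with a small analytic two-bone solver. This is only a sketch under my own assumptions, not the implementation of Unreal Engine's IK node and not code from the project: the function name `solve_two_bone_ik`, the tuple-based vectors, and the sample values are hypothetical. It places the end bone at the effector if reachable (otherwise as close as the bone lengths allow) and bends the middle joint towards the joint target.

```python
import math


def sub(a, b): return tuple(x - y for x, y in zip(a, b))
def add(a, b): return tuple(x + y for x, y in zip(a, b))
def scale(a, s): return tuple(x * s for x in a)
def dot(a, b): return sum(x * y for x, y in zip(a, b))
def length(a): return math.sqrt(dot(a, a))


def solve_two_bone_ik(root, upper_len, lower_len, effector, joint_target):
    """Return (joint_position, end_position) for a two-bone chain.

    root is assumed fixed (e.g. shoulder or hip); the joint is the elbow or knee.
    """
    to_effector = sub(effector, root)
    dist = length(to_effector)
    if dist < 1e-9:
        raise ValueError("effector coincides with the root")
    direction = scale(to_effector, 1.0 / dist)

    # Clamp the distance to what the two bone lengths can actually reach.
    dist = min(max(dist, abs(upper_len - lower_len)), upper_len + lower_len)

    # Law of cosines: how far along the root-effector line the joint projects,
    # and how far off that line it sits.
    along = (upper_len ** 2 - lower_len ** 2 + dist ** 2) / (2.0 * dist)
    height = math.sqrt(max(upper_len ** 2 - along ** 2, 0.0))

    # Bend direction: the part of (joint_target - root) perpendicular to the line.
    to_target = sub(joint_target, root)
    bend = sub(to_target, scale(direction, dot(to_target, direction)))
    bend_len = length(bend)
    if bend_len < 1e-9:
        raise ValueError("joint target lies on the root-effector line")
    bend = scale(bend, 1.0 / bend_len)

    joint = add(add(root, scale(direction, along)), scale(bend, height))
    end = add(root, scale(direction, dist))
    return joint, end


# Hypothetical arm: shoulder at the origin, both bones of length 1.
joint, end = solve_two_bone_ik((0, 0, 0), 1.0, 1.0, (1.2, 0, 0), (0.5, 0, 5))
print(joint, end)  # joint is approximately (0.6, 0, 0.8), end is (1.2, 0, 0)
```

Moving the joint target to the other side of the root-effector line flips the bend, which is why placing it in front of the feet or away from the body keeps the knees and elbows bending the expected way.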

Once the entire model has been placed in the scene and the limbs are positioned correctly, it is time for the final touch: rotating the head so that it matches the orientation of the Vive headset, and doing the same for the fists and the controllers.
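In forward kinematics terms, a bone's world rotation is its parent's world rotation combined with its own local rotation, so matching the head to the headset amounts to solving for the local rotation. The sketch below shows that relation with quaternions stored as (w, x, y, z) tuples. It is an illustration under my own assumptions rather than the project's code: the engine can also set a bone's rotation in world or component space directly, and the function names and sample rotations here are hypothetical.

```python
import math


def quat_mul(q1, q2):
    """Hamilton product of two quaternions given as (w, x, y, z)."""
    w1, x1, y1, z1 = q1
    w2, x2, y2, z2 = q2
    return (
        w1 * w2 - x1 * x2 - y1 * y2 - z1 * z2,
        w1 * x2 + x1 * w2 + y1 * z2 - z1 * y2,
        w1 * y2 - x1 * z2 + y1 * w2 + z1 * x2,
        w1 * z2 + x1 * y2 - y1 * x2 + z1 * w2,
    )


def quat_conjugate(q):
    """Conjugate, which equals the inverse for a unit quaternion."""
    w, x, y, z = q
    return (w, -x, -y, -z)


def local_rotation_for(parent_world, target_world):
    """Local rotation that makes parent_world * local equal target_world."""
    return quat_mul(quat_conjugate(parent_world), target_world)


# Hypothetical values: the neck is turned 90 degrees about the z axis,
# while the headset reports 180 degrees about the same axis.
s = math.sqrt(0.5)
neck_world = (s, 0.0, 0.0, s)
headset_world = (0.0, 0.0, 0.0, 1.0)

head_local = local_rotation_for(neck_world, headset_world)
print(head_local)  # approximately (0.707, 0, 0, 0.707): another 90 degrees about z
```

The same relation applies to the fists, with the forearm as the parent and the controller orientation as the target.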

This is not a complete or robust solution, and there are many possible improvements, but it got me through the semester and I hope it can be useful to you in some way.

Below is a link to this project on GitHub (you will need an HTC Vive to try it out, of course).

Project GitHub page: https://github.com/Bojanovski/Virtual-body-ownership-UE4-HTC-Vive

Twitter: https://twitter.com/BojanLovrovic

Email: [email protected]
