Featured Blog | This community-written post highlights the best of what the game industry has to offer.

With VR devices like the Oculus Rift, the player can finally gaze into the worlds you create. He is IN the world and can freely look around. However, something is missing: his eyes are in the world, but what about the rest of his body? How does the player interact once he has slipped into virtual reality?

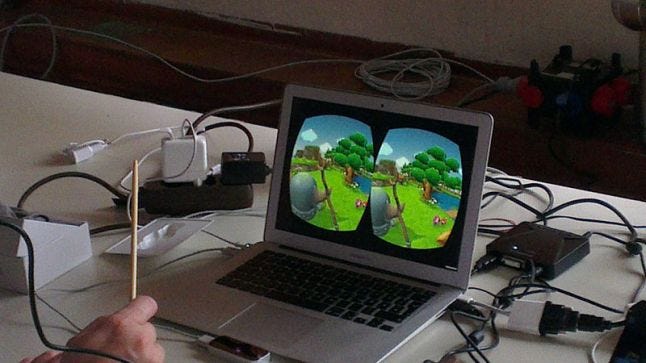

Rift + Leap Motion: It's Magic - One of our prototypes

Hi, we are Marc & Richard from Beltfed-Interactive, and we’d like to share our experiences with various input methods for VR applications, ranging from the bare Rift to the Razer Hydra, the Leap Motion, Wii controllers and more.

We are VR enthusiasts and avid collectors of all kinds of gadgets, and we have introduced hundreds of people to the Rift by showing two of our demos at the Ars Electronica Festival and GameCity Vienna.

Here we would like to share our findings and kick off a discussion with fellow VR developers. We mainly focus on integration with Unity, but we hope the information is also useful for those who are not using Unity.

Let’s get started with the easiest and perhaps most overlooked input device: the Rift itself.

Everyone who does not live under a rock should know that the Oculus Rift has built-in low-latency head tracking. At the moment the head tracking is limited to orientation, but positional tracking will be included in the 2014 consumer version (and potentially in DevKit 2).

What input schemes or game controls are possible with these sensor readings alone, without any additional controller?

A straightforward way to control your game with the Rift alone is to control the position of an object via head movement.

A good example of this method is the Breakout-inspired “Proton Pulse” by Pushy Pixels, which lets you control a paddle’s x and y position with your head movement.
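A minimal sketch of such a head-to-paddle mapping, assuming the engine hands you head yaw and pitch in degrees (positive yaw = turning right, positive pitch = looking up). The function name and the "comfortable range" defaults are hypothetical placeholders, not taken from Proton Pulse:

```python
def head_to_paddle(yaw_deg, pitch_deg, max_yaw_deg=30.0, max_pitch_deg=20.0,
                   half_width=1.0, half_height=0.75):
    """Map head yaw/pitch (degrees) to a paddle position (x, y) on a plane.

    Turning the head by max_yaw_deg moves the paddle to the edge of the
    play field. Larger angles are clamped, so players never have to crane
    their neck further than that to reach a corner.
    """
    def clamp(value, low, high):
        return max(low, min(high, value))

    # Normalize each angle to [-1, 1] relative to its comfortable range.
    nx = clamp(yaw_deg / max_yaw_deg, -1.0, 1.0)
    ny = clamp(pitch_deg / max_pitch_deg, -1.0, 1.0)
    # Scale to the half-extents of the play field.
    return nx * half_width, ny * half_height


print(head_to_paddle(15.0, 10.0))  # (0.5, 0.375)
```

Keeping the angle ranges small is one way to address the neck strain listed in the cons below. A linear mapping is just one choice; projecting the gaze ray onto the paddle plane would be another.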

Another wonderful game following this approach is “Dumpy: Going Elephants”, in which you control the trunk of an elephant by looking around. The trunk is attached to the simulated head, and by using a physics engine, Dumpy fulfills your dream of hitting police cars with your massive trunk.

Of course, instead of using only the orientation, you could use the change in orientation over time (angular velocity or acceleration) as input. Imagine an Alien facehugger that you have to get rid of by shaking your head.
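A minimal sketch of such a "shake it off" meter, assuming you sample head yaw every frame. The class name, thresholds and drain rate are hypothetical tuning values. It estimates angular speed from the change in yaw between frames and only counts motion that is faster than ordinary looking around:

```python
import math


class ShakeMeter:
    """Fills up while the head is shaken vigorously and drains otherwise.

    update() takes the current head yaw in degrees and the frame time in
    seconds and returns True once the meter is full (facehugger shaken off).
    """

    def __init__(self, threshold=100.0, drain_per_second=50.0, min_speed=90.0):
        self.threshold = threshold
        self.drain_per_second = drain_per_second
        self.min_speed = min_speed  # deg/s; slower motion counts as looking around
        self.meter = 0.0
        self._last_yaw = None

    def update(self, yaw_deg, dt):
        # Drain first so that calm frames slowly empty the meter.
        self.meter = max(0.0, self.meter - self.drain_per_second * dt)
        if self._last_yaw is not None and dt > 0:
            # Wrap the difference into [-180, 180) so crossing +-180 deg is no jump.
            delta = (yaw_deg - self._last_yaw + 180.0) % 360.0 - 180.0
            speed = abs(delta) / dt  # angular speed in deg/s
            if speed > self.min_speed:
                self.meter += (speed - self.min_speed) * dt
        self._last_yaw = yaw_deg
        # Cap the meter so it never takes long to drain after a shake.
        self.meter = min(self.meter, self.threshold)
        return self.meter >= self.threshold


# Simulate 3 s of shaking (+-20 deg at 3 Hz) sampled at 60 Hz.
meter = ShakeMeter()
dt = 1.0 / 60.0
shaken_off = any(meter.update(20.0 * math.sin(2 * math.pi * 3 * i * dt), dt)
                 for i in range(180))
```

The drain makes the player keep shaking for a moment instead of triggering on a single quick head turn.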

UI navigation can be implemented by displaying a 2D cursor in the middle of the field of view. The cursor is controlled by the player's head movement, and a UI element (e.g. a button) is activated by hovering the cursor over it and keeping it focused for a given amount of time.

The UI system has to clearly communicate to the user which element is focused and how long it will take until it is activated. Most games using this method do this by displaying a loading bar near the element. To save time, you could also combine this approach with keyboard & mouse input.
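A minimal sketch of the dwell timer behind such a cursor, assuming the engine tells you each frame which element (if any) is under the center-of-view cursor, for example via a raycast. `DwellSelector` and its parameters are hypothetical. The returned progress value is what would drive the loading bar:

```python
class DwellSelector:
    """Gaze-dwell activation: a UI element fires after it has stayed under
    the center-of-view cursor for dwell_time seconds."""

    def __init__(self, dwell_time=1.5):
        self.dwell_time = dwell_time
        self.focused = None
        self.elapsed = 0.0

    def update(self, hovered, dt):
        """hovered: id of the element under the cursor, or None.

        Returns (progress in [0, 1], activated element or None).
        """
        if hovered != self.focused:
            # Focus changed: restart the timer for the new element.
            self.focused = hovered
            self.elapsed = 0.0
        if self.focused is None:
            return 0.0, None
        self.elapsed += dt
        progress = min(1.0, self.elapsed / self.dwell_time)
        if progress >= 1.0:
            self.elapsed = 0.0  # require a fresh dwell before firing again
            return 1.0, self.focused
        return progress, None
```

Resetting the timer on every focus change means a player glancing across several buttons never triggers one by accident.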

Head orientation tracking enables simple head gesture recognition for intuitive controls: simply shake your head for no or nod for yes in answer to a question. (Take care with cultural differences in head gestures, which is a fascinating topic in itself.) Chime in on the discussions about head gesture recognition in the Rift forums.
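A minimal sketch of nod/shake detection over a short window of recent head angles (for example the last second of samples). It counts back-and-forth swings on the yaw and pitch axes. The function names, the 5 degree minimum swing and the two-reversal rule are hypothetical tuning choices, not taken from any SDK:

```python
def count_reversals(angles, min_swing=5.0):
    """Count direction changes in a sequence of angles (degrees).

    A reversal only counts once the angle has moved back by at least
    min_swing degrees from the last extreme, which filters out sensor
    jitter and tiny involuntary movements.
    """
    reversals = 0
    direction = 0          # +1 rising, -1 falling, 0 not moving yet
    extreme = angles[0]    # most extreme angle of the current swing
    for angle in angles[1:]:
        if direction == 0:
            if abs(angle - extreme) >= min_swing:
                direction = 1 if angle > extreme else -1
                extreme = angle
        elif direction * (angle - extreme) > 0:
            extreme = angle  # still moving the same way: extend the swing
        elif abs(angle - extreme) >= min_swing:
            direction = -direction  # swung back far enough: a reversal
            extreme = angle
            reversals += 1
    return reversals


def classify_head_gesture(yaws, pitches, min_swing=5.0, min_reversals=2):
    """Return "shake", "nod" or None for a short window of head angles."""
    yaw_reversals = count_reversals(yaws, min_swing)
    pitch_reversals = count_reversals(pitches, min_swing)
    if yaw_reversals >= min_reversals and yaw_reversals > pitch_reversals:
        return "shake"  # side to side: "no" in many, but not all, cultures
    if pitch_reversals >= min_reversals and pitch_reversals > yaw_reversals:
        return "nod"    # up and down: "yes" in many, but not all, cultures
    return None
```

Requiring several reversals keeps a single look to the side (or up) from being read as an answer.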

The Rift tracks the user's head orientation using three sensors: an accelerometer, a magnetometer and a gyroscope. Through a process called sensor fusion, plus some handy movement prediction, the SDK provides accurate low-latency orientation values. However, the low-level API also allows you to read each of these sensor values individually.
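The SDK does the fusion for you, and its exact algorithm is not covered here. Purely to illustrate the idea, here is a classic complementary filter, a much simpler technique than a production fusion pipeline, that blends gyroscope and accelerometer readings into a single pitch estimate. The class name, the axis convention and `alpha` are assumptions. A magnetometer can play a similar drift-correcting role for yaw, which gravity cannot observe:

```python
import math


class ComplementaryPitchFilter:
    """Fuses gyroscope and accelerometer readings into one pitch estimate.

    Assumed axis convention (hypothetical, check your device): the
    accelerometer reads +g on its y axis when the head is level, and the
    z component grows as the head pitches up.
    """

    def __init__(self, alpha=0.98):
        self.alpha = alpha  # how much to trust the integrated gyro per step
        self.pitch = 0.0    # degrees

    def update(self, gyro_pitch_rate, accel_y, accel_z, dt):
        # Gyro: integrate angular rate (deg/s). Smooth and fast, but drifts.
        gyro_estimate = self.pitch + gyro_pitch_rate * dt
        # Accelerometer: tilt relative to gravity. Noisy, but does not drift.
        accel_estimate = math.degrees(math.atan2(accel_z, accel_y))
        # Blend: high-pass the gyro, low-pass the accelerometer.
        self.pitch = self.alpha * gyro_estimate + (1.0 - self.alpha) * accel_estimate
        return self.pitch
```

With a constant gyro bias of 1 deg/s, pure integration would be off by 10 degrees after 10 s at rest; with `alpha = 0.98` and 100 Hz updates, the blended estimate settles at about half a degree.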

So far we have not needed to dig that deep, but it might come in handy one day. A headbanging simulator with simulated waving hair might not be the best idea … or would it?

Cost: Oculus Rift 300USD + tax + shipping

Pro:

People can finally look around in the virtual world. Looking around and turning your head is one of the most natural things most people are capable of. Why not use it in your game design?

No other input device needed. Basically you sit someone down and tell them “Just look around”.

Out-of-the-box Unity integration; low-level interface available

Con:

Limited input possibilities. On the other hand, sometimes limitations are good.

Fast movement or awkward head positions might lead to neck strain. Not everyone is a metalhead, and we received some comments about neck strain for our Space Invaders demo.

UI navigation is slow when the progress bar/loading time approach is used

Future iterations of the Oculus Rift will also include sensors for positional tracking (What's new with Oculus), which could also be integrated into your game design.

Let's move on to one darling of the Oculus Rift scene:

The Razer Hydra adds positional tracking with two controllers to the Rift and opens up amazing concepts, as shown by the UDK Hydra Cover Shooter and other demos.

Unity integration for Windows is as easy as it could be with a bundle from the asset store.

However, you have to jump through a series of burning hoops to get the Hydra to play nicely with the Rift & Unity on Mac OS. After registering in the Sixense forums you will be able to download an alpha driver for Mac OS, but the Hydra will refuse to work most of the time. After excessive debugging we found out that the problem is most likely related to initializing both devices at the same time. When you initialize the Rift after the Hydra has been initialized, everything works fine.

Once this is done, you are ready to use positional tracking. Most likely you will need some form of inverse kinematics (IK) solver if you want to track the user's hands. At the moment we are just testing the waters in this area, so stay tuned for more. In the meantime, take a look at the nice Oculus Maximus demo (UDK).
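The Hydra gives you hand positions, but the arm joints in between still have to be solved. A minimal planar two-bone solver based on the law of cosines shows the core idea. The function names are hypothetical, and a real 3D arm additionally needs a hint (pole) direction for where the elbow should point, which engine-provided or third-party IK solvers usually handle:

```python
import math


def two_bone_ik(target_x, target_y, upper_len, lower_len):
    """Analytic planar two-bone IK (e.g. shoulder -> elbow -> hand).

    The shoulder sits at the origin. Returns (shoulder_angle, elbow_bend) in
    radians: the upper arm points along shoulder_angle (measured from +x) and
    the forearm along shoulder_angle - elbow_bend (0 = straight arm).
    """
    # Clamp the reach so unreachable targets make the arm point at them.
    dist = math.hypot(target_x, target_y)
    dist = max(abs(upper_len - lower_len) + 1e-9,
               min(upper_len + lower_len - 1e-9, dist))

    def safe_acos(value):
        return math.acos(max(-1.0, min(1.0, value)))

    # Law of cosines: interior angle at the elbow.
    elbow_inner = safe_acos((upper_len ** 2 + lower_len ** 2 - dist ** 2)
                            / (2 * upper_len * lower_len))
    # Law of cosines: angle between the shoulder->target line and the upper arm.
    shoulder_offset = safe_acos((upper_len ** 2 + dist ** 2 - lower_len ** 2)
                                / (2 * upper_len * dist))
    shoulder_angle = math.atan2(target_y, target_x) + shoulder_offset
    return shoulder_angle, math.pi - elbow_inner


def forward_kinematics(shoulder_angle, elbow_bend, upper_len, lower_len):
    """Hand position for given joint angles (useful to check the solver)."""
    ex = upper_len * math.cos(shoulder_angle)
    ey = upper_len * math.sin(shoulder_angle)
    forearm = shoulder_angle - elbow_bend
    return ex + lower_len * math.cos(forearm), ey + lower_len * math.sin(forearm)
```

Adding `shoulder_offset` puts the elbow on one side of the shoulder-to-hand line; subtracting it instead gives the mirrored solution, which is exactly the choice a 3D pole direction makes for you.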

One big factor to consider is that by integrating the Hydra into your Rift projects, you will be able to get them ready for STEM, the wireless positional trackers by Sixense, which have been announced for 2014.

Cost: from approx. 150 USD + shipping (amazon.com)

Pro:

Robust positional tracking

Two controllers with lots of buttons and analog sticks

Easy Unity Integration

Get your games ready for STEM (wireless!)

Con:

Wired

Availability (as far as we know, it is no longer produced)

Mac OSX alpha support & Unity integration problems on Mac OSX

Next contender:

The Leap Motion is an interesting device: it is small, rather affordable, and provides fast and accurate finger tracking.

In our magic demo we use it to track fingertips, but while playing around with the SDK we uncovered what is possibly the best-kept secret of the Leap: it is also able to track tools!

Tools, you say? Yes, tools! Everything shaped like a pen (smooth, straight, …) is detected as a tool by the Leap SDK. This enabled us to track wooden wands and hand them to the players: they now have a “real” magic wand in the virtual world and are able to shoot fireballs and perform gestures to change the current spell. This was an instant hit with everyone at our booth.

We showed this prototype to hundreds of people, and the Leap adds significantly to the immersion of the whole experience. Lots of people dream of being a wizard, and this was as close to the “real” thing as it could get at the moment.

The biggest issue is the relatively small tracking area of the Leap. The area extends out of the Leap as an inverted pyramid, and it’s very easy to leave it with the Rift on. Using long sticks, which poke into the tracking area, helped a lot, but it would be awesome to have a bigger tracking area.
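To warn players before they drift out of that inverted pyramid, a game can compute how far the tracked point is from its walls. A minimal sketch, assuming the sensor sits at the origin with y pointing up; the half-angles and height limits are placeholder values, not the Leap's actual specifications:

```python
import math


def tracking_margin(x, y, z, half_angle_x_deg=60.0, half_angle_z_deg=60.0,
                    min_height=25.0, max_height=600.0):
    """Signed margin (same units as the input, e.g. mm) between a point and
    the walls of an inverted-pyramid tracking volume.

    The sensor sits at the origin with y pointing up. Positive values mean
    the point is inside (and equal its distance to the nearest wall);
    negative values mean it is outside.
    """
    ax = math.radians(half_angle_x_deg)
    az = math.radians(half_angle_z_deg)
    # Distance to each slanted side wall: the wall is |x| = y * tan(angle).
    side_x = (y * math.tan(ax) - abs(x)) * math.cos(ax)
    side_z = (y * math.tan(az) - abs(z)) * math.cos(az)
    # Distance to the floor (too close to the sensor) and the ceiling.
    floor = y - min_height
    ceiling = max_height - y
    return min(side_x, side_z, floor, ceiling)
```

A game could, for instance, fade in a glowing boundary or dim the wand once the margin drops below a few centimeters, so players notice before tracking is actually lost.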

In the Leap Motion forums, officials told us that they are working on multi-Leap support: just connect multiple Leaps to one computer, or maybe “daisy chain” them, to increase the tracking area.

Inspired by people in the Oculus forums, we tried mounting the Leap on the Rift. It works to some extent, but the tracking is much worse and you lose the built-in gesture recognition.

Other issues include occlusion of fingers and the fact that the Leap loses tracking if you close your fingers. In addition, the Leap will enter a “robust” mode with reduced accuracy when an external infrared source interferes with it (sunlight, light bulbs, …). If you have a booth, test the lighting before you run into trouble.

Unity integration of the Leap is really easy with the Leap SDK from the Leap Motion website (including sample code). It comes with simple gesture recognition (key tap, screen tap, circle, swipe) and tool recognition.

We extended the SDK's gesture recognition by using the C# version of the $P point-cloud recognizer for arbitrary gestures like hearts, waves or triangles to cast different spells. This works very well, except that lots of players leave the tracking area while performing a gesture.
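For readers who have not seen $P (by Vatavu, Anthony and Wobbrock) before, here is a condensed single-stroke sketch in Python for illustration only. It is not the C# port mentioned above, multi-stroke handling is omitted, and the function names are our own. It resamples the stroke, normalizes scale and position, and compares point clouds with a greedy weighted matching. One option is to feed it the 2D projection of the tracked fingertip or tool tip:

```python
import math


def resample(points, n):
    """Resample a stroke into n points spaced evenly along its path."""
    pts = list(points)
    path_len = sum(math.dist(pts[i - 1], pts[i]) for i in range(1, len(pts)))
    interval = path_len / (n - 1)
    out = [pts[0]]
    acc = 0.0
    i = 1
    while i < len(pts):
        d = math.dist(pts[i - 1], pts[i])
        if d > 0 and acc + d >= interval:
            t = (interval - acc) / d
            q = (pts[i - 1][0] + t * (pts[i][0] - pts[i - 1][0]),
                 pts[i - 1][1] + t * (pts[i][1] - pts[i - 1][1]))
            out.append(q)
            pts.insert(i, q)  # q becomes the start of the next segment
            acc = 0.0
        else:
            acc += d
        i += 1
    while len(out) < n:  # rounding can leave us one point short
        out.append(pts[-1])
    return out[:n]


def normalize(points, n=32):
    """Resample, scale uniformly into the unit box and center on the centroid."""
    pts = resample(points, n)
    min_x = min(p[0] for p in pts)
    min_y = min(p[1] for p in pts)
    size = max(max(p[0] for p in pts) - min_x, max(p[1] for p in pts) - min_y) or 1.0
    pts = [((x - min_x) / size, (y - min_y) / size) for x, y in pts]
    cx = sum(p[0] for p in pts) / n
    cy = sum(p[1] for p in pts) / n
    return [(x - cx, y - cy) for x, y in pts]


def cloud_distance(a, b, start):
    """Greedily match each point of a (beginning at start) to its nearest
    unmatched point of b; earlier matches are weighted more heavily."""
    n = len(a)
    matched = [False] * n
    total = 0.0
    i = start
    while True:
        best_j, best_d = -1, math.inf
        for j in range(n):
            if not matched[j]:
                d = math.dist(a[i], b[j])
                if d < best_d:
                    best_j, best_d = j, d
        matched[best_j] = True
        weight = 1.0 - ((i - start + n) % n) / n
        total += weight * best_d
        i = (i + 1) % n
        if i == start:
            return total


def greedy_cloud_match(a, b, epsilon=0.5):
    """Try several starting points and both matching directions."""
    n = len(a)
    step = max(1, int(n ** (1.0 - epsilon)))
    return min(min(cloud_distance(a, b, s), cloud_distance(b, a, s))
               for s in range(0, n, step))


def recognize(stroke, templates, n=32):
    """Return (name, score) of the best-matching template (lower is better)."""
    candidate = normalize(stroke, n)
    return min(((name, greedy_cloud_match(candidate, tpl))
                for name, tpl in templates.items()), key=lambda item: item[1])
```

Templates are stored pre-normalized, e.g. `templates = {"triangle": normalize(triangle_points)}`, and `recognize(stroke, templates)` returns the best name together with its score, which can be thresholded to reject sloppy gestures.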

Cost: 79.99 USD + shipping + taxes / 76.99 € + shipping + taxes

Pro:

Affordable

Intuitive use

Adds significantly to the immersion

Swift and accurate tracking of fingertips

Tool recognition (perfect for our magic demo)

Out of the box gesture recognition (key tap, screen tap, circle, swipe)

Con:

Tracking area is too small when used in combination with the rift

Occlusion problems

IR interference from sun and certain light bulbs

No identification of individual fingers (is it a thumb or the little finger?)

Now let's get casual:

Rift + Wiimote: Tribute to Space Invaders - Another of our prototypes

One of the biggest benefits of most Nintendo Wii controllers is that they are rather cheap and lots of people already own some (chances are you have some lying around or know someone who is willing to lend you an unused one).

Integration with Unity is as easy as it gets with the $25 WiiBuddy package available in the Unity Asset Store. If you are more into fiddling around, you could try the free UniWii package.

A major pitfall is that the Windows Bluetooth stack seems to cause lots of trouble, and you might have to connect your Wii input device several times before it works properly.

After jumping through the integration hoops you are now free to use all the sensor inputs of your Wii-input devices.

We played around with a Rift-enabled Fruit Ninja variant using the Wiimote with MotionPlus, but found that it was too unreliable as an input control for our virtual ninja sword. Maybe some sensor smoothing could have helped; however, for us it was not good enough “out of the box”.
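One simple form of such smoothing is an exponential moving average over the raw sensor axes. A minimal sketch; the class name and default `alpha` are hypothetical, and whether this would have rescued the sword is untested:

```python
class ExponentialSmoother:
    """Exponential moving average over multi-axis sensor samples.

    alpha in (0, 1]: higher values follow the raw signal more closely,
    lower values smooth more but add more lag.
    """

    def __init__(self, alpha=0.2):
        self.alpha = alpha
        self.value = None

    def update(self, sample):
        if self.value is None:
            self.value = tuple(sample)  # first sample: nothing to blend with
        else:
            self.value = tuple(self.alpha * s + (1.0 - self.alpha) * v
                               for s, v in zip(sample, self.value))
        return self.value
```

The trade-off is lag: the smoother the output, the further it trails the real motion, and for a fast sword swing even a few frames of delay may feel wrong.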

Some people have reported successfully using the Wiimote as a pointing device with the orientation sensor alone (no sensor bar) for aiming a gun, but we did not try this option.

Surprisingly, the Balance Board is the most fun device when integrated with the Rift. Standing on it without positional tracking for the head causes some unease, but the real fun starts when you sit on it.

By using the four weight sensors of the device, you can estimate the direction in which the player is leaning, which opens up lots of gameplay ideas. Who is up for a Magic Carpet ride, a sledging simulator, a hoverboard or a Segway simulator?
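The lean direction is essentially the board's center of pressure. A minimal sketch, assuming one sensor near each corner; how you read the four values depends on your plugin, so the parameter names, the minimum weight and the dead zone are hypothetical:

```python
import math


def lean_direction(top_left, top_right, bottom_left, bottom_right,
                   min_total=5.0, dead_zone=0.1):
    """Estimate lean direction from the four corner weight sensors.

    Returns (x, y) in [-1, 1]: x > 0 means leaning right, y > 0 means leaning
    toward the "top" edge of the board. Weights can be in any unit (e.g. kg).
    """
    total = top_left + top_right + bottom_left + bottom_right
    if total < min_total:
        return 0.0, 0.0  # nobody (or almost nothing) on the board
    # Center of pressure, normalized by the total weight.
    x = ((top_right + bottom_right) - (top_left + bottom_left)) / total
    y = ((top_left + top_right) - (bottom_left + bottom_right)) / total
    # Ignore small shifts so that simply sitting still does not steer.
    if math.hypot(x, y) < dead_zone:
        return 0.0, 0.0
    return x, y
```

Because the result is divided by the total weight, light and heavy players produce the same range of values, which keeps a magic carpet equally steerable for everyone.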

Pro:

Affordable

You probably have one

Your players might have one

Easy Unity integration

Con:

Windows Bluetooth stack problems

Inaccurate positional tracking

Only really usable for acceleration tracking

After our disappointment with the inaccurate tracking of the Wiimote, we tried to integrate our trusty PlayStation Move controller. Unfortunately, there is no out-of-the-box solution like WiiBuddy for the Move controller.

After diving into the C API available at https://github.com/thp/psmoveapi (including SWIG bindings for many languages), we got it to work, but lost interest on the last mile and stopped the whole experiment.

There's also a C# Unity wrapper by the developers of JS Joust which exposes accelerometer data (no positional tracking) over at the UniMove project page.

Cost: approx. 55USD (Move Wand + Eye Cam)

Pro:

You might already own one

Better positional tracking (optical) if you get it to work

Orientation tracking

Con:

No "out of the box" support in game engines

Cumbersome calibration/pairing process

No navigation controller support with the above mentioned libraries

The classics:

This is a no-brainer: since the Oculus Rift needs a PC to operate, you can be pretty sure that your target audience already has access to a keyboard and mouse, and a good deal of PC gamers are well versed in the art of controlling their games with both devices.

The first thing you have to decide is whether you want to decouple the looking direction from the movement direction or let the player always move in the direction he is facing. Also take care to reduce turning speeds significantly from what you are used to, or you will set your players up for a severe case of motion sickness.
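A minimal sketch of both movement options plus a capped turning speed. The coordinate convention is an assumption (y up, yaw 0 facing +z, positive yaw turning right), the function names are hypothetical, and the 45 deg/s default is only a placeholder to tune with playtesters:

```python
import math


def move_vector(stick_x, stick_y, body_yaw_deg, head_yaw_deg, move_with_head=False):
    """Turn 2D movement input into a world-space (x, z) direction.

    Assumed convention: y is up, yaw 0 faces +z, positive yaw turns right
    (toward +x). stick_x strafes right, stick_y moves forward.
    """
    # Decoupled: move relative to the body; coupled: move where the head looks.
    yaw = math.radians(head_yaw_deg if move_with_head else body_yaw_deg)
    forward = (math.sin(yaw), math.cos(yaw))
    right = (math.cos(yaw), -math.sin(yaw))
    return (stick_y * forward[0] + stick_x * right[0],
            stick_y * forward[1] + stick_x * right[1])


def turn_body(body_yaw_deg, turn_input, dt, max_turn_speed_deg=45.0):
    """Rotate the body at a capped speed; turn_input is clamped to [-1, 1]."""
    turn_input = max(-1.0, min(1.0, turn_input))
    return (body_yaw_deg + turn_input * max_turn_speed_deg * dt) % 360.0
```

With `move_with_head=False`, the player can look around freely while walking straight ahead; with `True`, looking sideways also steers, which is simpler to learn but makes sightseeing while walking impossible.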

The downside of using the traditional keyboard and mouse combo is that they are usually placed stationary on a desk in front of the player, which means he has to remain seated in the same position while playing. We showed our demos to hundreds of people at GameCity Vienna, and lots of people stopped looking around once they had their fingers on the keyboard: they got “rooted” in place and used the controls to change the player orientation instead.

Another obvious drawback is that players are not able to see the mouse and keyboard while wearing the headset, which may lead to desperate search attempts once they let go of one of them. In case of the keyboard the player not only has to find the device itself, but also the keys he needs to control the game.

This breaks immersion, and you might be better off relying on other means of input. Some stickers on the keyboard, or a gamer keyboard with only the most important keys, might help if you want to show your demo to an audience.

Pro:

Nearly everyone owns a mouse and keyboard

PC gamers are already used to the most common control schemes

Easy to integrate in most game engines

Con:

Player has to be able to navigate mouse and keyboard while being practically blind

Player is “rooted” by the keyboard and is less likely to look around.

Conventional mouse & keyboard controls seem complicated to non-PC gamers

Navigating the environment with this approach does not feel natural

Last but not least:

Have you heard that PC gamers use gamepads nowadays? Yes, they do, and you should consider this an option for your games, especially since the Steam Controller might greatly improve the usability of gamepads for VR.

Compared to the keyboard & mouse combo, gamepads (especially wireless ones) let the player move more freely, so the problem of “rooting” a player in a fixed position is reduced.

Pro:

You might have a gamepad (Xbox 360, Dualshock controller, …)

A lot of gamers have access to gamepads

A gamepad is held in both hands at all times which means that the player can’t “lose” it while playing

Problem of “rooting” the player is reduced/removed

Gamepads are affordable

Can give the player feedback via rumble

Con:

The typical gamepad is still a complicated device for non-gamers

Navigating VR space still feels unnatural with a gamepad

Controller cable might restrict freedom of movement when using a wired gamepad

If you are on a budget, our personal recommendation is to first recall which gadgets you already own (Wiimotes, Xbox controller) and dive into the world of VR with them. If you have a Wii Balance Board, we would love to see some wacky experiments with it.

On the other hand, if you want to be ready for STEM, you should definitely get a Razer Hydra and get to know your IK toolset.

The Leap Motion is a wonderful and affordable device with some drawbacks. However it opens up various game design options and was the most fun to play around with in our experience.

Always keep in mind what purpose you are developing for. If you develop a prototype or demo that is shown at conventions, festivals or similar events, it is perfectly fine to integrate esoteric hardware. However, if you want people to download your game and it requires a Rift, a Hydra, a Leap and a Wii Balance Board, you might find that no one but you is able to play it.

We are also very excited about upcoming VR input devices like the Virtuix Omni and STEM.

Especially since we found out that another omnidirectional treadmill project, the Cyberith Virtualizer, is being developed right around the corner from us.

We hope this overview was informative and that you will join in the discussion below, on Twitter (@RichardKogelnig, @bitpew), or get in touch with us via our website.
