
Featured Blog | This community-written post highlights the best of what the game industry has to offer.

One of the biggest challenges in VR development is the combined requirements of high framerate with high resolution. By transforming vertices into 'lens-space' we remove the need for full-screen render textures, massively improving mobile performance.

Cardboard Design Lab has been open-sourced by Google; you can download it from Google's GitHub repository: https://github.com/googlesamples/cardboard-unity/tree/master/Samples/CardboardDesignLab

Brian is an independent game developer and graphics programmer. He was lead developer on Cardboard Design Lab, where he spent a lot of time fiddling with shaders.


The following technique was developed and employed on Cardboard Design Lab (CDL) using Google's Cardboard Unity SDK. However, the same technique could apply to any VR system that uses a lens distortion effect, provided the appropriate distortion coefficients are available to construct the vertex shader and the SDK permits disabling the render texture.

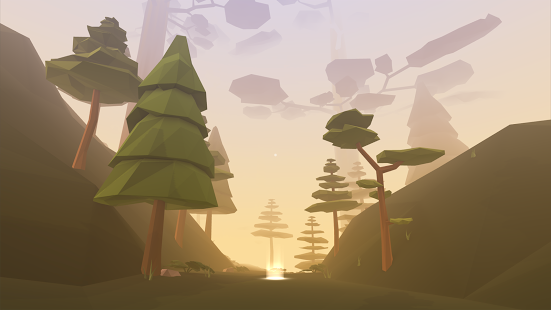

Cardboard Design Lab, by Google, Inc. Available on Google Play

This scene may use a low-poly aesthetic, but is actually highly tessellated

Before examining this technique, it's important to understand how most VR rendering is handled. For background on why we need lens distortion, and how it is typically implemented, see the relevant section of the Oculus Rift documentation.

Lens distortion in VR apps counteracts the physical distortion of the glass lenses in head-mounted displays (HMDs), yielding a wide field of view without a visibly distorted image.

In most VR software, this reverse distortion is performed by rendering each eye-camera into a separate render target and then warping that image in a post process effect - either in a pixel shader, or by projecting the render target onto a warped mesh - and rendering the final output to the screen.

This article demonstrates a technique that performs the distortion ahead of time, in the vertex shader of each object in the scene, thereby eliminating the need for a separate render target. On CDL, removing the render target virtually eliminated thermal issues on mobile devices, and allowed us to add 2x-4x MSAA at full resolution without noticeably impacting thermals or the target framerate.

Of note, I'm not the first person to think of this, but as far as I know, Cardboard Design Lab is the first VR application to have been developed using this technique. Furthermore - at the beginning of the project, the team at Google had already prototyped a version of vertex distortion which would eventually become the code that is included in the SDK today.

So, full disclosure: all I can take credit for is insisting that vertex-distortion-based lens correction could work in a production application, and that it would be a boon for performance. Some smart engineers at Google had already written the .cginc file. I'm writing this article because (A) it did work, (B) hopefully more developers will use it and VR applications will become a little bit better, and (C) I hope engine developers realize forward rendering is still awesome, MSAA is still awesome, and relying on full-screen post-process effects for core functionality stinks.

In developing a mobile VR application, there are 3 major considerations that dominate engineering effort, and more or less constrain what is possible.

Framerate

Apparent Resolution

Thermals

Framerate is something we are all familiar with. In normal game development, 30-60 fps is generally acceptable. In VR, 60 fps is a bare minimum, and there is some consensus that a good VR experience demands a framerate between 90 and 120 fps.

Of course, on mobile, we do the best we can. Many devices clamp the maximum framerate to 60 fps, so I'll be using 60 as the benchmark for the rest of this article.

When a VR experience drops below 60 fps, the effects are immediate. Head tracking no longer appears to 'keep up' with the display; users become disoriented and are much more likely to experience simulator sickness. Momentary performance hiccups (such as background asset loading, OS notifications, and garbage collection) cause brief but extreme discomfort, while a persistently low framerate (between 30 and 60 fps) may manifest as discomfort over time. Many users cannot consciously tell whether an experience is running at 45 fps or 60 fps; however, in our testing at lower framerates, users were much more likely to experience nausea, which they attributed to a wide variety of features other than framerate. For this reason, in my opinion, VR experiences are terrible at 30 fps, and pointless below 30 fps.

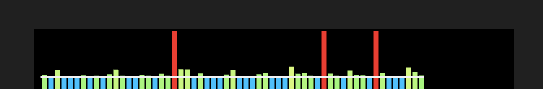

Integrating a visual frame time display into test builds is essential for debugging. Just make sure the display itself isn't implemented in a way that causes GC allocations or unnecessary overhead. Above: a framerate display implemented using a ring buffer and a single draw call with raw GL.Vertex calls in Unity. Don't use the GUI systems!
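The caption mentions a ring buffer. Below is a minimal sketch of the bookkeeping half of such a display, written in C rather than Unity C#. It keeps a fixed-size buffer of frame times that never allocates after startup, and reports the worst frame and the number of frames over budget. The names and the 120-frame window are hypothetical. Drawing the graph (the caption's single GL.Vertex draw call) is left out.

```c
#include <stddef.h>

/* Hypothetical window: 120 frames is about two seconds at 60 fps. */
#define FRAME_HISTORY 120

typedef struct {
    float ms[FRAME_HISTORY]; /* frame times in milliseconds */
    size_t next;             /* slot the next sample overwrites */
    size_t count;            /* valid samples, at most FRAME_HISTORY */
} FrameTimes;

/* Record one frame time. Nothing is allocated: once the buffer is full,
   the oldest sample is overwritten. */
static void frame_times_push(FrameTimes *ft, float ms) {
    ft->ms[ft->next] = ms;
    ft->next = (ft->next + 1) % FRAME_HISTORY;
    if (ft->count < FRAME_HISTORY) ft->count++;
}

/* Worst frame in the window; in VR a single spike is what users feel. */
static float frame_times_worst(const FrameTimes *ft) {
    float worst = 0.0f;
    for (size_t i = 0; i < ft->count; i++)
        if (ft->ms[i] > worst) worst = ft->ms[i];
    return worst;
}

/* Frames in the window that missed the budget (1000/60 ms at 60 fps). */
static size_t frame_times_missed(const FrameTimes *ft, float budget_ms) {
    size_t missed = 0;
    for (size_t i = 0; i < ft->count; i++)
        if (ft->ms[i] > budget_ms) missed++;
    return missed;
}
```

Tracking the worst frame and the missed-frame count, rather than only an average, matches the point above: brief hiccups are what cause the most discomfort.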

Unfortunately, very few currently available mobile VR apps run consistently at 60fps, and desktop apps have outrageous hardware requirements. This has the side effect of leading to overly conservative views on best-practices in VR. I've read quite a few articles that claim 'moving the user in VR is always bad' - and while certain types of motion such as motion tangential to the view direction are problematic, well designed motion running at 60fps can be enjoyable for a majority of users. The popularity of roller coaster VR apps should be proof enough that motion is worth exploring.

Key takeaway: A less-than-60 framerate can turn a good experience into a nauseating one - and even experienced users and developers may not be able to correctly identify the cause.

Apparent resolution is how detailed a scene 'appears' to be. In VR, this is a combination of physical screen resolution, render buffer resolution, MSAA level, physical lens magnification, and any post-process effects (such as lens warp).

How much resolution do we need? I've read a lot of different numbers - but I'll use Michael Abrash's approximation of something like 4k x 4k per eye (at 90 degree fov) to match current monitors, and 16k x 16k to match retinal resolution (at 180 degree fov).

Obviously, no mobile device can do that, but a few land in the same county, if not the ballpark. The Samsung Galaxy S6 pushes 2560 x 1440 pixels, or 1280 x 1440 pixels per eye. Accounting for some loss around the edges, that's roughly 1024 x 1024 (1K) per eye, which is 1/16th the pixel count of 4k x 4k.
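The per-eye arithmetic is easy to check. Here is a small C sketch. It assumes a side-by-side split of the panel and reads "4k" as 4096 pixels. Note that the 1/16th figure is a ratio of pixel counts, which is 1/4 along each axis.

```c
/* Side-by-side stereo: each eye gets half of the panel's width. */
typedef struct { long w, h; } Resolution;

static Resolution per_eye(Resolution panel) {
    Resolution eye = { panel.w / 2, panel.h };
    return eye;
}

static long pixel_count(Resolution r) { return r.w * r.h; }

/* How many times more pixels `target` has than `actual`. */
static long pixel_ratio(Resolution target, Resolution actual) {
    return pixel_count(target) / pixel_count(actual);
}
```

For example, `per_eye((Resolution){2560, 1440})` yields 1280 x 1440. `pixel_ratio((Resolution){4096, 4096}, (Resolution){1024, 1024})` yields 16.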

So, without a time machine, the only thing we can do is crank up the MSAA. If you are unfamiliar with what MSAA does beyond removing jaggies, it is worth a quick read on the subject; otherwise, just accept that turning on 4x MSAA is a little bit like rendering on a screen from the future.

Now, I'm pretty sure when Michael Abrash was giving those figures, he meant 4k x 4k with MSAA turned on. At 4x MSAA that is four samples per pixel, or double the sample density along each axis: an insane 8k x 8k worth of edge samples per eye. Either way, the principle is the same: MSAA is your friend.

Key takeaway: Anti-aliasing is extremely important, if expensive. I like to aim for 4x MSAA on high end mobile phones. Break out your forward renderer.

Brief aside: I know the deferred rendering folks out there have all kinds of post-process anti-aliasing techniques. Personally, I think the move to post-process anti-aliasing made a generation of games look horrible, but back on topic: the point of this article is to remove full-screen render textures from the mobile pipeline because of how expensive they are. Post-process AA requires a full-screen render texture, defeating the purpose. In Unity, forward rendering is very fast. Last time I checked, UE4 didn't have a forward rendering mode, and worse, when I asked about Cg-level shader customization I was told sarcastically "You have source", as though recompiling the engine were a viable development strategy for an individual with deadlines. That said, I wouldn't be surprised if the folks at Epic are working on it.

Thermals refers to the heat output of the device. As a game developer, I'm accustomed to a few common performance bottlenecks on mobile - in order of frequency: GPU fill rate, CPU (typically draw calls), CPU-GPU memory bandwidth, GPU vertex ops, and crazy device specific driver issues, like how NaN or infinity is treated on the GPU.

Much of the time these are unrelated problems. Even if the GPU is fully utilized - a developer can continue adding functionality on the CPU. However, phones are small, and the processors are mostly in the same place, meaning heat is a global problem.

In order to keep the phone from melting, or exploding, or melting AND exploding - when too much heat is produced the phone will reduce power to the processor, slowing everything down. That's really amazing when you think about the hardware engineering involved to reliably make that happen, but goodbye framerate. Thermal throttling is not designed to smoothly balance performance and heat - it's designed to save your phone. When it first kicks in, a nice smooth 60fps will usually drop to 40fps or less. When it realizes the phone is still hot it will continue decreasing the power in fairly large increments. If your VR app is thermally limited, it will not be a good experience.

What makes heat extremely challenging is that it is very hard to know what is causing it; suddenly everything is related. If you could reduce every function call to assembly instructions, maybe you could assume some constant heat factor per instruction, but that doesn't take into account hardware efficiency, the physical construction of the inside of your device, or factors beyond your application's control, like background processes or Wi-Fi.

Why is this unique to VR? Few other mobile applications demand high-resolution 3D rendering at a constant 60fps with 4x MSAA - but additionally, VR applications render scenes twice (once for each eye) - and most SDKs default to rendering to a high resolution render texture to perform distortion. As it turns out, that render texture is very expensive in terms of performance and heat generation.

John Carmack mentioned the problems related to thermals when the Gear VR was announced. Unfortunately, this isn't a problem that is going away soon.

Key takeaway: If your performance is fine for a few minutes, then seems to plummet inexplicably (while your phone is hot to the touch) - check for thermal warnings in the logs.

When the project began, the Cardboard Unity SDK by default rendered into a 16-bit render texture without MSAA. Even at those settings, many low-end devices hit thermal limits within minutes. Gear VR uses a similar pipeline and encounters the same limitations. Rendering to full-resolution render textures on mobile phones is a performance hog, regardless of the SDK being used.

After switching to vertex-displacement VR, CDL rendered into the full 32-bit screen buffer (reducing color banding), used 2x to 4x MSAA depending on the device, and generally maintained 60 fps.

Additionally, by distorting the scene into 'lens space', we avoid the loss of resolution associated with warping a render target (or the corresponding waste of render time from rendering into a higher-resolution buffer). This is effectively like NVIDIA's recently announced multi-resolution shading, but it's free, and it works on a 6-year-old smartphone.

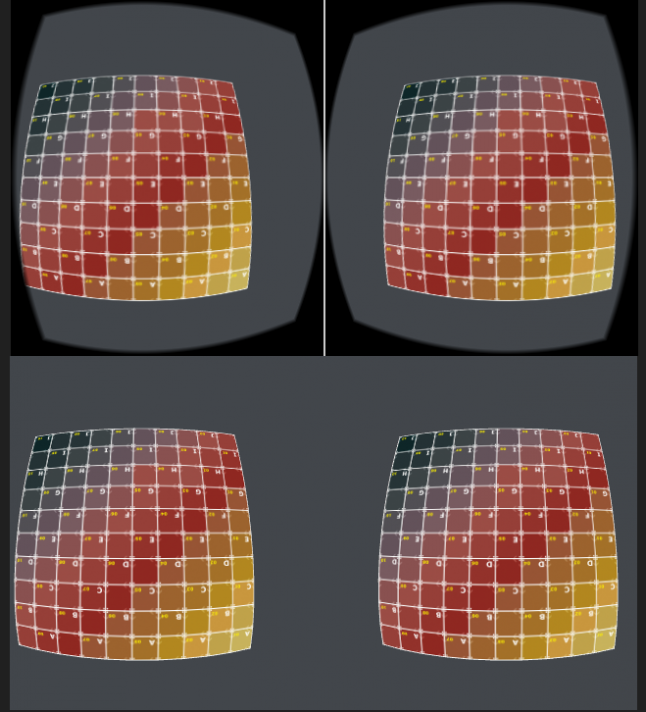

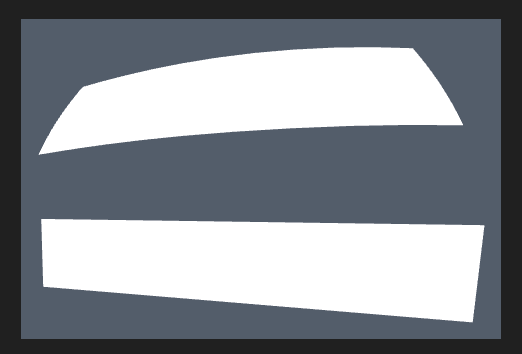

Above: A 121 vertex plane rendered using the standard render-texture technique.

Below: A 121 vertex plane rendered using vertex displacement, 2x MSAA, and no render textures.

UV Image from http://www.pixelcg.com/blog/?p=146

A bilinear magnification of the center of the two renders from above.

Left: Standard render-texture technique

Right: Vertex displacement

The current Cardboard SDK for Unity contains a Cg include file named CardboardDistortion.cginc.

It contains a function, undistortVertex, that converts an object-space vertex position into inverse-lens-distorted screen space ('lens space'), using the Brown–Conrady model for radial distortion correction.
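For reference, the radial part of the Brown–Conrady model scales a point's distance r from the lens center by a polynomial in r². The sketch below is not the SDK's code. It is a standalone C version of the math, with hypothetical coefficients k1 and k2 and only two radial terms.

Pre-distorting a vertex means applying the inverse of the lens mapping, and that polynomial has no closed-form inverse. The sketch therefore inverts it numerically with Newton's method. Which direction counts as "the lens" depends on how the coefficients were measured.

```c
/* Radial term of the Brown-Conrady model with two coefficients:
   r_out = r * (1 + k1*r^2 + k2*r^4). r is measured from the lens center
   (e.g. in tangent-of-angle units). k1 and k2 are hypothetical here. */
static double distort_radius(double r, double k1, double k2) {
    double r2 = r * r;
    return r * (1.0 + k1 * r2 + k2 * r2 * r2);
}

/* Inverse of distort_radius: find r with distort_radius(r) == target.
   There is no closed form, so use Newton's method, starting from the
   undistorted guess r = target. This assumes the polynomial is monotonic
   over the radii in use, which holds for positive k1 and k2. */
static double undistort_radius(double target, double k1, double k2) {
    double r = target;
    for (int i = 0; i < 20; i++) {
        double r2 = r * r;
        double f = r * (1.0 + k1 * r2 + k2 * r2 * r2) - target;
        double df = 1.0 + 3.0 * k1 * r2 + 5.0 * k2 * r2 * r2; /* derivative */
        double step = f / df;
        r -= step;
        if (step > -1e-12 && step < 1e-12) break;   /* converged */
    }
    return r;
}
```

A vertex program would then scale each projected point (x/z, y/z) by the ratio of the new radius to the old one. Running Newton iterations per vertex is wasteful on a GPU. A cheaper option is to fit a second polynomial to the inverse ahead of time and evaluate only that in the shader.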

Here is a simple solid-color shader that uses this function. Note that I've disabled the effect on desktop and in the editor using conditional compilation, because performing inverse lens distortion in the editor makes editing the scene quite challenging.

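```shaderlab
// Shader/Properties/SubShader/Pass wrapper added so this compiles as a
// standalone Unity shader; the shader name is illustrative.
Shader "Custom/LensSpaceSolidColor" {
    Properties {
        _Color ("Color", Color) = (1, 1, 1, 1)
    }
    SubShader {
        Pass {
            CGPROGRAM
            #pragma vertex VertexProgram
            #pragma fragment FragmentProgram

            #include "CardboardDistortion.cginc"

            fixed4 _Color;

            struct VertexInput {
                float4 position : POSITION;    // object-space position
            };

            struct VertexToFragment {
                float4 position : SV_POSITION; // full precision for clip-space position
            };

            VertexToFragment VertexProgram (VertexInput vertex) {
                VertexToFragment output;
            #if defined(SHADER_API_MOBILE)
                // easy as that: project straight into lens-distorted screen space
                output.position = undistortVertex(vertex.position);
            #else
                // this is how a standard shader works (desktop and editor)
                output.position = mul(UNITY_MATRIX_MVP, vertex.position);
            #endif
                return output;
            }

            fixed4 FragmentProgram (VertexToFragment fragment) : SV_Target {
                return _Color;
            }
            ENDCG
        }
    }
}
```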

Note - when enabling vertex distortion mode in the Cardboard SDK, be sure to turn traditional Distortion Correction off!

This looks so easy you might wonder why everyone isn't doing it on every platform. Why did anyone bother with render textures? Why don't we render everything in 'lens space'?

Lens distortion is non-linear, and therefore the reverse lens distortion is also non-linear. When we distort vertices in the vertex shader, the edge between two vertices remains a straight line, when in fact it should be a curved arc. When you look at a curved arc through a curved lens, it looks like a straight line again. When you look at straight lines through a curved lens, they appear curved, so a cube will have curved edges. Neat, but probably not what you wanted.
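The problem can be measured directly. This C sketch distorts the two endpoints of an edge and lets the "rasterizer" draw the straight line between them. It then compares the drawn midpoint with where the distorted midpoint should actually land. It uses the radial polynomial 1 + k1·r² + k2·r⁴ with hypothetical coefficients. Only r² is needed, so no square root is involved.

```c
typedef struct { double x, y; } Vec2;

/* Radially distort a 2D point: scale it by 1 + k1*r^2 + k2*r^4.
   Only r^2 is needed, so no square root. */
static Vec2 distort(Vec2 p, double k1, double k2) {
    double r2 = p.x * p.x + p.y * p.y;
    double s = 1.0 + k1 * r2 + k2 * r2 * r2;
    Vec2 out = { p.x * s, p.y * s };
    return out;
}

/* Squared screen-space error at the midpoint of edge a-b: the GPU draws the
   straight line between the distorted endpoints, but the true image of the
   edge passes through the distorted midpoint. */
static double midpoint_error_sq(Vec2 a, Vec2 b, double k1, double k2) {
    Vec2 da = distort(a, k1, k2), db = distort(b, k1, k2);
    Vec2 drawn = { 0.5 * (da.x + db.x), 0.5 * (da.y + db.y) };
    Vec2 mid = { 0.5 * (a.x + b.x), 0.5 * (a.y + b.y) };
    Vec2 want = distort(mid, k1, k2);
    double dx = drawn.x - want.x, dy = drawn.y - want.y;
    return dx * dx + dy * dy;
}
```

For a smooth mapping like this, the midpoint error of linear interpolation shrinks roughly with the square of the edge length. That is why subdividing edges hides the curvature so effectively; compare the 121-vertex plane with the 4-vertex quad.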

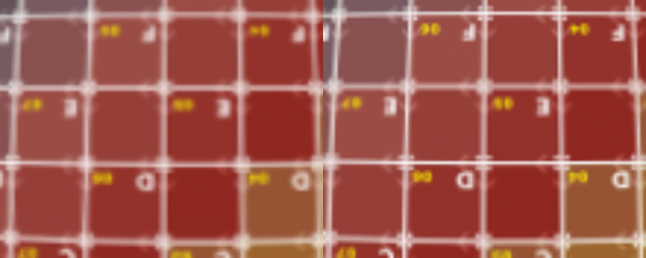

Top: A plane with 121 vertices.

Bottom: A quad with 4 vertices.

Note the non-linear distortion is preserved with the plane, but not with the quad.

The result of this is that if vertices are spaced far apart (in screen space), we won't end up correcting much distortion, and the final image will appear to 'warp' as we turn our head. This also unsurprisingly has a tendency to make users sick.

Perhaps worse, all that vertex motion tends to exaggerate depth-fighting issues between objects.

Fortunately, the solution is straightforward: we need to add enough vertex tessellation that the distortion is no longer noticeable. As it turns out, most high-end mobile devices are capable of rendering between 200,000 and 800,000 vertices per frame, depending on the device. Unfortunately, we still need to render the scene twice, so our per-eye vertex budget is between 100,000 and 400,000 vertices, again depending on the devices you want to support.
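Tessellation itself is mechanical. Here is a minimal C sketch that builds a grid of n x n cells. With n = 10 it produces the 121-vertex plane from the comparison images. It uses 16-bit indices, a common mobile index format. The function name, the memory layout, and the triangle winding are assumptions; flip the winding if faces are culled.

```c
#include <stdlib.h>

typedef struct { float x, y, z; } Vertex;

/* Build a flat grid of n x n cells, i.e. (n+1)^2 vertices, spanning
   [-size/2, size/2] on the XY plane, with two triangles per cell.
   16-bit indices limit n to 255 ((n+1)^2 <= 65536 vertices).
   Returns 1 on success; the caller frees *verts and *indices. */
static int make_grid(int n, float size, Vertex **verts, unsigned short **indices,
                     int *vert_count, int *index_count) {
    if (n < 1 || n > 255) return 0;
    int side = n + 1, nv = side * side, ni = n * n * 6;
    Vertex *v = malloc(sizeof *v * (size_t)nv);
    unsigned short *idx = malloc(sizeof *idx * (size_t)ni);
    if (!v || !idx) { free(v); free(idx); return 0; }

    for (int j = 0; j < side; j++)
        for (int i = 0; i < side; i++) {
            Vertex *p = &v[j * side + i];
            p->x = size * ((float)i / (float)n - 0.5f);
            p->y = size * ((float)j / (float)n - 0.5f);
            p->z = 0.0f;
        }

    int k = 0;
    for (int j = 0; j < n; j++)
        for (int i = 0; i < n; i++) {
            unsigned short a = (unsigned short)(j * side + i); /* lower left */
            unsigned short b = (unsigned short)(a + 1);        /* lower right */
            unsigned short c = (unsigned short)(a + side);     /* upper left */
            unsigned short d = (unsigned short)(c + 1);        /* upper right */
            idx[k++] = a; idx[k++] = c; idx[k++] = b;  /* winding is an assumption */
            idx[k++] = b; idx[k++] = c; idx[k++] = d;
        }

    *verts = v; *indices = idx;
    *vert_count = nv; *index_count = ni;
    return 1;
}
```

With a per-eye budget of a few hundred thousand vertices, a scene can hold a large number of such planes. The cell count per plane is the knob to trade against the budget.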

You might think adding all these vertices would put us back in thermal trouble, but modern phones have extremely dense screens, with 1920x1080 being fairly common. 1920*1080 is about 2 million pixels; with some overdraw, we could be executing 10 million shader fragments per frame. If we need to render into a separate render target for distortion correction, that adds another 2 million pixels, plus the non-negligible cost of context switching and a lot of texture memory access. Next to that, a few hundred thousand vertices is a modest workload.
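Here is the fragment side of the budget in numbers: a rough C estimate of fragments per frame. It assumes a fixed average overdraw and counts the render-target approach's distortion pass as one extra full-screen pass. It ignores that pass's texture fetches and render-target switches, so it understates the pass's true cost.

```c
/* Rough fragment-shader invocations per frame: every pixel shaded
   `overdraw` times on average, plus one extra full-screen pass when the
   distortion is applied by sampling a render target. */
static long fragments_per_frame(long width, long height, double overdraw,
                                int distortion_pass) {
    long pixels = width * height;
    long scene = (long)(pixels * overdraw);
    return scene + (distortion_pass ? pixels : 0);
}
```

At 1920 x 1080, the ~10 million figure corresponds to about five fragments per pixel (10,368,000). The distortion pass brings that to 12,441,600.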

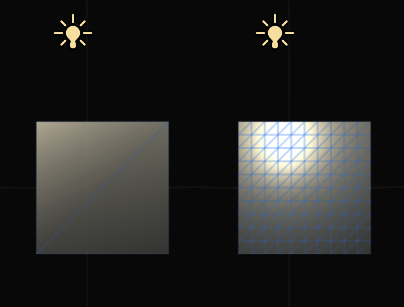

Since we just had to massively increase the vertex count of the scene, we can use that to another advantage. Instead of calculating lighting in the fragment program, which gets run ~10 million times, we can calculate lighting in the vertex program, which gets run ~400,000 times. That is a 25x reduction in computation (and hopefully heat). Since our vertices need to be dense enough for non-linear lens distortion, they are probably dense enough for lighting (which is also generally non-linear).
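To see why vertex density matters for lighting, consider a single edge. This C sketch evaluates a light only at n+1 evenly spaced vertices, as a vertex program would. It then interpolates linearly between them, as the rasterizer would, and compares the result against the exact value. The falloff 1/(1 + k·d²) is a hypothetical stand-in for a point light, and the 1D edge stands in for a 2D plane.

```c
/* Hypothetical point-light falloff along a 1D edge: the light hangs
   `height` above position lx, and intensity = 1 / (1 + k * d^2). */
static double light_at(double x, double lx, double height, double k) {
    double dx = x - lx;
    double d2 = dx * dx + height * height;
    return 1.0 / (1.0 + k * d2);
}

/* Vertex lighting on the edge [x0, x1] split into n segments: light_at is
   evaluated only at the n+1 vertices, and the value at x is the linear
   interpolation the rasterizer would produce. */
static double vertex_lit(double x, double x0, double x1, int n,
                         double lx, double height, double k) {
    double t = (x - x0) / (x1 - x0) * n;   /* position in segment units */
    int i = (int)t;
    if (i < 0) i = 0;
    if (i > n - 1) i = n - 1;              /* x == x1 uses the last segment */
    double f = t - i;
    double xa = x0 + (x1 - x0) * i / n;
    double xb = x0 + (x1 - x0) * (i + 1) / n;
    return (1.0 - f) * light_at(xa, lx, height, k)
         + f * light_at(xb, lx, height, k);
}
```

With n = 1 (the edge of a 4-vertex quad), a light hovering over the middle barely registers. With n = 10 (one row of a 121-vertex plane), the interpolated value tracks the exact curve closely.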

Just like non-linear distortion, vertex lighting quality depends heavily on the vertex count.

Left: A 4-vertex quad using vertex lighting can't display much detail from the point light

Right: A plane with 121 vertices is a decent approximation at this distance.

Of note here: I don't use any of Unity's shaders, ever. I never use their skybox. I only use their lighting engine for prototyping. Every shader in CDL was authored in Cg / ShaderLab (not using Surface Shaders), and the fragment programs either read the color value from the vertex shader and display it, or read two color values, sample a texture, multiply the texture by one color and add the other. Keeping fragment programs stupidly simple, removing render textures, and minimizing overdraw keeps GPU fill rate in check.

There is one more catch to implementing a vertex-displacement pipeline - and that is dealing with 2D elements, such as UI.

Since we're in VR, UI is already a tricky subject. To maintain proper stereo convergence, UI needs to be rendered in 3D world space, preferably at the same depth as the 3D objects it references (e.g. a reticle needs to be rendered at the 3D depth of the object it occludes).
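Here is a minimal C sketch of placing a reticle at the depth of whatever it overlaps. It assumes the hit distance comes from a raycast along a unit-length gaze direction. It also scales the reticle linearly with distance so its angular size stays constant; that is one common choice, not something the text prescribes. `size_per_meter` is a hypothetical tuning value: the reticle's width at 1 m, equal to 2·tan of half its angular size.

```c
typedef struct { float x, y, z; } Vec3;

/* Put the reticle where the gaze ray hits the scene so it converges at the
   same stereo depth as the object under it. gaze_dir must be unit length;
   hit_distance comes from a raycast (not shown). Scaling the quad linearly
   with distance keeps its angular size constant; size_per_meter is the
   reticle's width at 1 m (2 * tan(half its angular size)), a tuning value. */
static void place_reticle(Vec3 eye, Vec3 gaze_dir, float hit_distance,
                          float size_per_meter, Vec3 *pos, float *world_size) {
    pos->x = eye.x + gaze_dir.x * hit_distance;
    pos->y = eye.y + gaze_dir.y * hit_distance;
    pos->z = eye.z + gaze_dir.z * hit_distance;
    *world_size = size_per_meter * hit_distance;
}
```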

UI needs to be undistorted as well - and to ensure it has a sufficient number of vertices, it makes sense to draw UI elements such as letters and textures on tessellated planes instead of quads. The exact amount of tessellation will depend on the maximum size of the UI element on screen.
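One way to turn "maximum size on screen" into a tessellation level is to choose enough cells that no edge spans more than some pixel threshold. The threshold in this C sketch is a hypothetical tuning value. The right number depends on the lens and on how much warping is acceptable.

```c
/* Cells per axis for a UI plane so that, at its largest on-screen size,
   no edge spans more than max_edge_px pixels (rounded up). */
static int ui_cells(int max_size_px, int max_edge_px) {
    if (max_edge_px < 1) max_edge_px = 1;
    if (max_size_px < 1) return 1;
    return (max_size_px + max_edge_px - 1) / max_edge_px;
}
```

Take a UI element that can grow to 300 px wide with a 32 px edge limit. It gets 10 cells per axis, i.e. the same 121 vertices as the test plane.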

Of course any UI that is head-locked will be distorted, but the distortion will not change as a user rotates their head - so the effect may not be noticeable.

In general - interfaces in VR are best handled diegetically anyway - so hopefully this doesn't present a large barrier to utilization of vertex-displacement lens correction.

Minimizing error in the vertex-displacement approach means minimizing the distance between vertices in screen space - meaning that as objects get further away from the camera, they do not need as much tessellation - and so standard LoD techniques are useful.

This offers a clear opportunity for optimization, particularly for fixed position rendering. In cases where the camera can move throughout a scene, optimization becomes much more challenging.
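Here is a sketch of distance-based LoD selection in C under the same error criterion: keep the coarsest level whose edges still project to at most a given number of pixels. `focal_px` is the projection scale: screen width in pixels divided by 2·tan(fov/2). For example, a 1280 px wide view with a 90 degree field of view gives 640. The edge lengths and thresholds are hypothetical. With a moving camera, you would likely add some hysteresis so objects don't flicker between levels.

```c
/* Pick the coarsest LoD whose edges still project to at most max_edge_px.
   edge_len[i] is a typical edge length (world units) of level i, finest
   first and increasing. focal_px = screen width in pixels divided by
   2 * tan(horizontal_fov / 2). Returns 0 (finest) if nothing qualifies. */
static int pick_lod(const float *edge_len, int levels, float distance,
                    float focal_px, float max_edge_px) {
    if (distance <= 0.0f) return 0;
    int best = 0;
    for (int i = 0; i < levels; i++) {
        float projected_px = edge_len[i] * focal_px / distance;
        if (projected_px <= max_edge_px) best = i;
    }
    return best;
}
```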

Frankly, I'm still shocked this isn't a technique that is being widely employed, given the extremely high hardware requirements of the announced desktop HMDs. Vertex-displacement-based lens correction enables smartphones to render VR to QHD (2560x1440) displays at 60 fps with up to 4x MSAA. Most modern integrated GPUs should be able to muster at least that much performance, and a low to mid-range dedicated graphics card could do much better. I'm not expecting to play Battlefront VR on my Intel HD 5000, but I suspect the current crop of HMD hardware could be easily adapted to reach a much broader audience, without requiring SLI'd Titans.
