
Deaf Accessibility in Video Games 2

We strive for players of all backgrounds to enjoy our games, including those who are d/Deaf and hard of hearing. So how can we make our games more accessible?

When/How to use Deaf Accessibility Tools

According to the World Health Organization (2016), over 360 million people in the world have mild to profound hearing loss. To narrow down further, approximately 48 million Americans have some degree of hearing loss. That is roughly 15% of the U.S. population who are hard of hearing or deaf. And that’s a lot.

Within the video game industry, we strive for players of all backgrounds to enjoy our games, including but not limited to those who are d/Deaf and hard of hearing (d/Deaf/HoH). So how can we make our games more accessible?

The two major tools to promote deaf accessibility in video games are (1) subtitles/captions and (2) visual cues. This basic guide will define the tools and outline when/how to integrate them into game design (tl;dr at the end).

Table of Contents

Subtitles and Closed Captions:

Subtitles vs. Closed Captions

Best Practices

Visual Cues

Integrating Deaf Accessibility Tools into Video Games

Why should the tools be added?

When should the tools be added?

How should the tools be added?

Subtitles and Closed Captions

Summary: Subtitles = Dialogue // Closed Captions = Dialogue + Sound Effects

Subtitles

Subtitles derive from text within a written script or spoken dialogue. As a tool, subtitles are designed under the assumption that the user is hearing. Still, there are many benefits to subtitles for both hearing and d/Deaf/HoH individuals. For example, if the dialogue is in another language, subtitles can provide a thorough and accurate translation for non-native speakers. Here is a quick example.

Hearing individuals will use subtitles for varying purposes, including but not limited to understanding poor audio quality, heavy accents, and dialogue in a noisy environment. Additionally, subtitles serve a functional purpose for those who are watching a video on their lunch break at work or trying not to wake a baby.

Generally, subtitles can also assist in comprehension. If content is designed to be informative (e.g. a lecture or “how-to” video), subtitles can provide extensive technical information in written form to assist users in following along with the dialogue. Additionally, users find that subtitles help with following a story by alleviating concentration fatigue. They also improve literacy, which is an added plus.

A friend recently joked that once he turned on subtitles in Red Dead Redemption 2, he found that he could never go back to playing games with the subtitles off. Subtitles complemented the game audio and dialogue, and assisted him in comprehension.

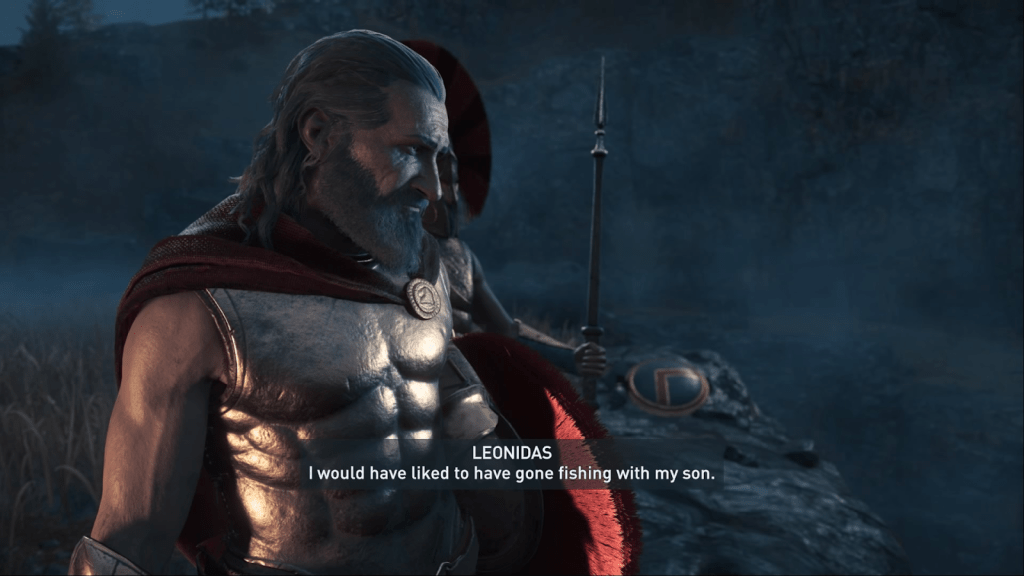

His experience is reflected by recent data released by Ubisoft, which shows that 95% of Assassin’s Creed Odyssey and 97% of Far Cry: New Dawn players kept subtitles on.

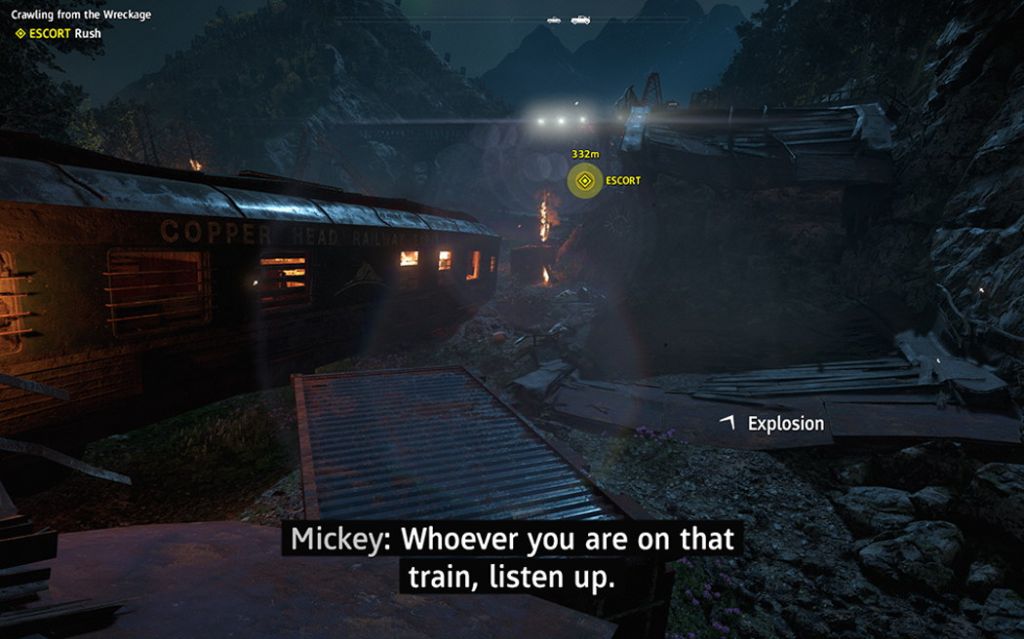

Red Dead Redemption 2 (2018)

However, though subtitles are helpful for deaf and hard of hearing individuals, they do not immediately equate to equal access.

Closed Captions

The term “closed captions” is often used interchangeably with subtitles, but the two features are different. Closed captions, often displayed as [cc], are intended to cover not only dialogue but also other sound effects. Oftentimes, closed captions will include indicators for music or background noise (e.g. explosions, gunshots, breaking glass). As a tool, closed captions are designed under the assumption that the user is deaf or hard of hearing. The intent of the tool is to provide equal access to d/Deaf/HoH individuals.

It’s a Dog’s Life With Bill Farmer (2020): Two examples of closed captions from a Disney film.

If there is a sound effect or if something occurs off-screen, then captions will include a written cue to notify deaf and hard of hearing individuals.

The main benefit of closed captions is that they provide additional context and therefore improve immersion for d/Deaf/HoH individuals. Users are able to focus more on the story and content, rather than spending their time trying to decipher subtitles and wondering what they might have missed.

NOTE: In the entertainment industry, captions are categorized as either open or closed. What makes them “closed” is that a user can toggle the captions on and off, whereas “open” captions are embedded in the product and cannot be turned off. In the video game industry, captions are almost always categorized as “closed.”

Best Practices for Subtitles and Closed Captions

Now that we know the difference between subtitles and closed captions, how do we add them to a game? The rule of thumb is to consider the following:

Accuracy: The FCC closed captioning rules state that all spoken words in dialogue need to be accurate to the fullest extent possible. Keep in mind that scripts may not be the end product, as voice actors may add their own dialogue. If the team is strapped for time, Rev, GoTranscript, and Ai-Media are popular closed captioning services. However, game companies are still responsible for formatting.

Labels: Given the nature of video games, the text should always label the speaker(s). Typically, it’s recommended that speakers are labelled using all capital letters. Other designers will use color-coded names to indicate speakers. Just make sure that it is consistent. Additionally, if the speaker is off screen, use an arrow to indicate dialogue is occurring out of view.

Here are some examples:

< SUSANA: When I know more, I’ll let you know.

JAMES: What are you doing?

MAN: I don’t want to do that now.

BOTH: We want to play too!

ALL: Happy birthday, Timmy!

If the same speaker is continuing on to the next line or in a fresh set of subtitles/captions, the speaker does not need to be labelled.
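As a rough illustration of the labelling rules above, here is a minimal Python sketch that formats a single caption line. The function name `format_caption` and its parameters are hypothetical (they do not come from any engine or from the guidelines). The sketch only assumes upper-case speaker labels, a `<` marker for an off-screen speaker, and no repeated label when the same speaker continues.

```python
from typing import Optional


def format_caption(speaker: str, text: str,
                   previous_speaker: Optional[str] = None,
                   off_screen: bool = False) -> str:
    """Return one caption line, adding a speaker label only where needed."""
    label = speaker.upper()
    # The same speaker continuing onto the next line needs no new label.
    if previous_speaker is not None and previous_speaker.upper() == label:
        return text
    # Hypothetical convention: "<" marks dialogue spoken out of view.
    prefix = "< " if off_screen else ""
    return f"{prefix}{label}: {text}"


print(format_caption("Susana", "When I know more, I'll let you know.", off_screen=True))
print(format_caption("James", "What are you doing?"))
print(format_caption("James", "Put that down.", previous_speaker="James"))
```

Whatever convention the team picks, routing every line through one function like this is an easy way to keep labels consistent.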

Intonation and emotion: When applicable to the dialogue, intonation and emotion can be labelled within the text. The format is as follows:

(SLURRED): But I love you!

JAN (WHISPERS): Don’t let him near you.

Another option is to integrate intonation and emotion into the displayed text. For example, rather than labelling text as (STUTTERS), it can be displayed as JOHN: I’m g-g-going home. Here are some other examples:

Everything that matters…is a mystery

What do you think you’re…?!

You mean you’re going to marry him?!

It’s a BOOK, damnit!

Lastly, a single subtitle/caption can indicate critical intonation that is not affiliated with a speaker.

APPLAUSE DROWNS SPEECH

LONG PAUSE

For additional technical examples, please check out the BBC Subtitle Guidelines.

Accents: Only indicate an accent when it is critical to the dialogue. Text can be displayed as

Steve [AMERICAN ACCENT]: I don’t know what you mean.

The Office (2005)

Music: Always label music and its source. For instance, if someone is whistling a tune, text should read HE WHISTLES AN UPBEAT TUNE. When applicable, describe incidental music using the following format:

MUSIC: “God Save The Queen”

Designers can also combine music source with incidental music, such as JOHN HUMS “God Save The Queen”. If there isn’t a source and the song name is not necessary, music can be labelled: EERIE MUSIC or UPBEAT MUSIC. Label mood music only when required.

Sound Effects: For closed captions, critical sound effects should be properly labelled in the text. The BBC rule of thumb:

Sound effects should be capitalized

Describe the sounds, not actions (e.g. GUNFIRE)

Subject + verb should be brief (e.g. FLOOR CREAKS)
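The music and sound effect conventions above are simple enough to capture in a couple of helper functions. This is only a sketch: `sound_effect_caption` and `music_caption` are hypothetical names, and the fallback of a bare `MUSIC` label is an assumption of this example rather than something the BBC guidance states.

```python
from typing import Optional


def sound_effect_caption(description: str) -> str:
    """Capitalize a brief description of the sound itself, e.g. 'floor creaks'."""
    return description.strip().upper()


def music_caption(title: Optional[str] = None,
                  source: Optional[str] = None,
                  mood: Optional[str] = None) -> str:
    """Label music by source and title when known, otherwise by mood."""
    if title and source:
        return f'{source.upper()} "{title}"'   # e.g. JOHN HUMS "God Save The Queen"
    if title:
        return f'MUSIC: "{title}"'
    if mood:
        return f"{mood.upper()} MUSIC"         # e.g. EERIE MUSIC
    return "MUSIC"                             # assumption: generic fallback label


print(sound_effect_caption("floor creaks"))
print(music_caption(title="God Save The Queen"))
print(music_caption(title="God Save The Queen", source="John hums"))
print(music_caption(mood="eerie"))
```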

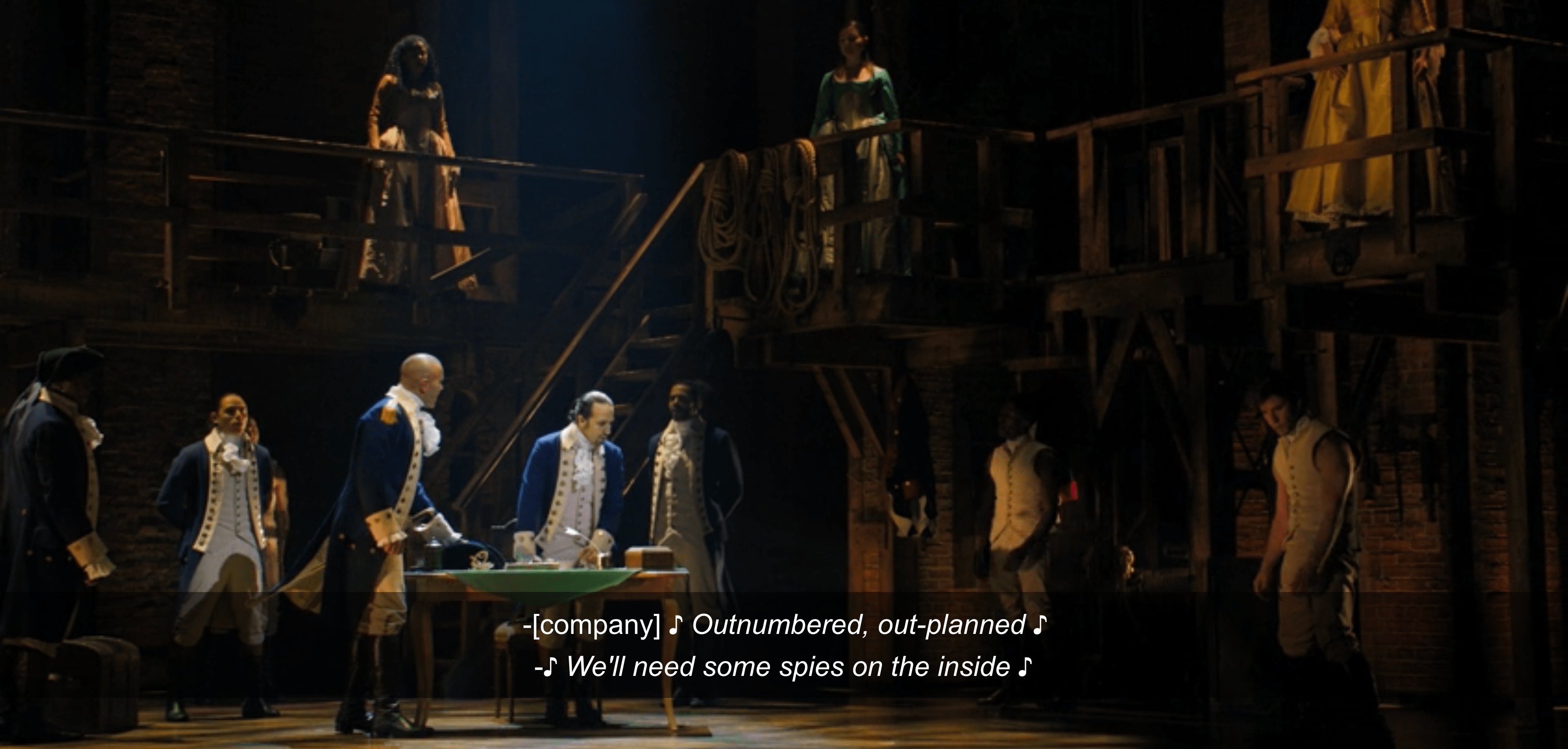

Hamilton (2020): The speakers are indicated and the text is properly labelled as being sung.

Font: The font should be simple and legible. Rev recommends the following fonts: Lucida Grande, Arial, STIXGeneral, Verdana, Helvetica Neue, Times, and Futura. Essentially, any standard document-style font.

Size: Make sure the size is appropriate (and can be properly viewed from a couch). Well-researched standards state that the font size needs to fit within a minimum line height of 8% of the active video height. Other game experts recommend size to be a minimum of 46px for a 1080p screen and allowing scaling both up and down from there.
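To make the sizing numbers concrete, here is a small Python sketch that scales them to other resolutions. It assumes the 46px figure scales proportionally with screen height, which is my simplification rather than something the cited recommendations spell out. All function names and the `user_scale` parameter are hypothetical.

```python
import math

REFERENCE_HEIGHT = 1080        # the 1080p screen from the recommendation above
REFERENCE_FONT_PX = 46         # recommended size at 1080p
LINE_HEIGHT_PERCENT = 8        # minimum line height, percent of active video height


def baseline_font_px(screen_height: int) -> int:
    """Scale the 46px-at-1080p figure to another resolution (assumed proportional)."""
    return math.ceil(REFERENCE_FONT_PX * screen_height / REFERENCE_HEIGHT)


def min_line_height_px(video_height: int) -> float:
    """Minimum line height the font needs to fit within."""
    return video_height * LINE_HEIGHT_PERCENT / 100


def subtitle_font_px(screen_height: int, user_scale: float = 1.0) -> int:
    """Apply a player-chosen scale factor on top of the baseline size."""
    return round(baseline_font_px(screen_height) * user_scale)


for height in (720, 1080, 2160):
    print(height, baseline_font_px(height), min_line_height_px(height))
```

Deriving the size from the display height keeps the text readable from a couch on a 4K TV without hard-coding a pixel value per platform.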

BBC Guidelines (2020): 0.5em = 8px

Text background: To improve legibility, the text should always contrast with the background. To assist with contrast, it is recommended to include a text background (opacity 50%) to accentuate text. On both sides of each line, the rectangular background should have an 8px gap (as seen above).

Color: BBC guidelines recommend that subtitles/captions should be white, yellow, cyan, or green (in order of priority). Colors can also assist in distinguishing names and other core concepts within combat. Just make sure to not go overboard and be mindful of colorblindness.
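Contrast can be checked with numbers rather than by eye. The guidelines quoted here do not name a metric, so the sketch below borrows the contrast ratio formula from WCAG 2.x as one common choice. The `blend` helper, which approximates a semi-transparent text background drawn over a scene color, is a hypothetical simplification.

```python
def _linear(channel: int) -> float:
    """Convert an 8-bit sRGB channel to linear light (WCAG 2.x definition)."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb) -> float:
    r, g, b = (_linear(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(foreground, background) -> float:
    """Ratio from 1 (no contrast) to 21 (black on white)."""
    l1, l2 = relative_luminance(foreground), relative_luminance(background)
    lighter, darker = max(l1, l2), min(l1, l2)
    return (lighter + 0.05) / (darker + 0.05)


def blend(overlay, scene, opacity: float):
    """Approximate a text background of the given opacity drawn over a scene color."""
    return tuple(round(opacity * o + (1 - opacity) * s) for o, s in zip(overlay, scene))


white, black = (255, 255, 255), (0, 0, 0)
bright_scene = (255, 255, 255)                 # worst case for white text
backed = blend(black, bright_scene, 0.5)       # 50% black text background
print(contrast_ratio(white, bright_scene), contrast_ratio(white, backed))
```

Running a check like this against the brightest and darkest scenes in the game is a quick way to see whether a 50% background is enough or whether players need the option of a more opaque one.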

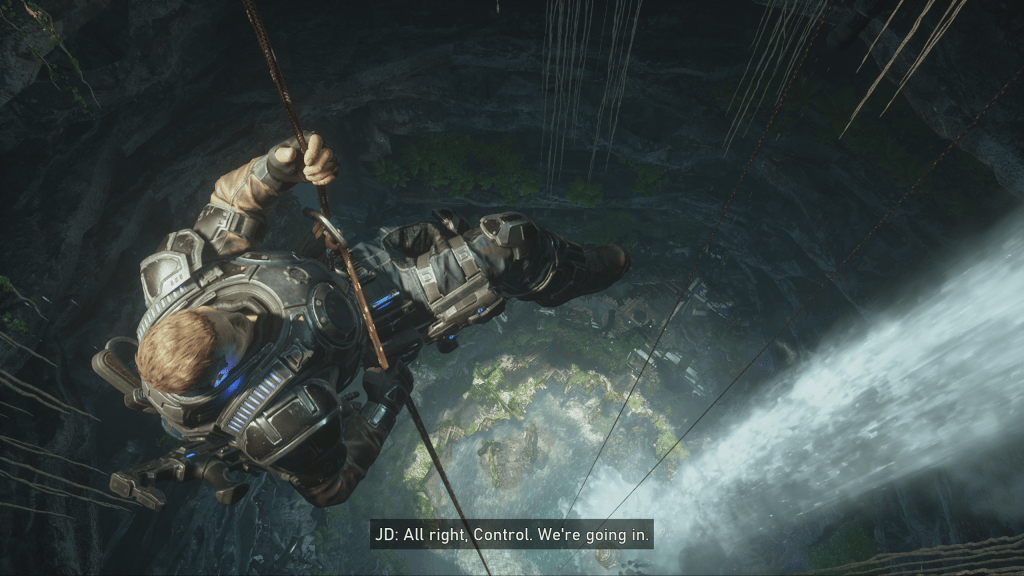

Gears of War 5 (2019): An example where the text is an appropriate size and the background properly contrasts with the text to improve legibility.

Wolfenstein II: The New Colossus (2017): An example where text is too small, the contrast between the background and text makes it illegible, and the speaker is not labelled.

Darkest Dungeon (2016): The text is not a standard color and does not contrast with the background, making it illegible.

Positioning: Traditionally, text is horizontally displayed on the bottom of the screen. Depending on the interface, it may be helpful to move the text to another location. Just make sure that the text is not obstructed by the gameplay and vice versa, in line with FCC guidelines on positioning.

Length: d/Deaf/HoH users utilize active reading skills, as they constantly need to flick their eyes between text and the gameplay. Therefore, do not present too much data all at once, as users should be able to easily read along with the dialogue. Again, BBC states that only two (at most three) lines should be displayed at a time and a single line should not exceed 38 characters.

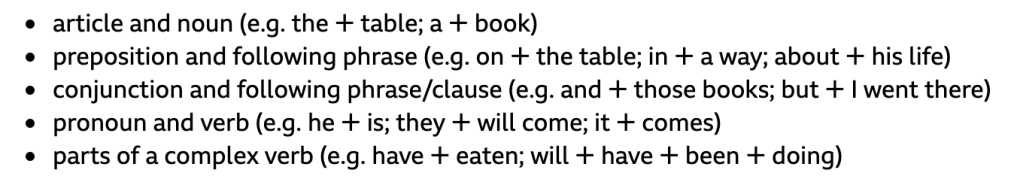

Break at natural points: Text should always break at logical points. Ideally, a line should be broken at punctuation or at a change of speaker. If the game requires split speech, experts advise against splitting in the event of a(n):

BBC Guidelines (2020)

Left, right, and center justification can be used to identify sound location or speaker. Just make sure that line breaks also account for eye movement.
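The length and line-break advice can be combined into a simple wrapping routine. The sketch below uses the 38-character and two-line limits quoted from the BBC. The rule "end a line right after punctuation once it is at least half full" is a simplifying assumption of this example, not a BBC rule. The function names are hypothetical, and a single word longer than the limit is left on its own line.

```python
MAX_CHARS = 38   # per-line limit quoted above
MAX_LINES = 2    # lines displayed at a time


def wrap_caption(text: str, max_chars: int = MAX_CHARS):
    """Greedy word wrap that prefers to end a line right after punctuation."""
    lines, current = [], []
    for word in text.split():
        candidate = " ".join(current + [word])
        if current and len(candidate) > max_chars:
            # The word does not fit: close the line and start a new one.
            lines.append(" ".join(current))
            current = [word]
            continue
        current.append(word)
        # Break early at punctuation once the line is at least half full.
        if word[-1] in ".,;:!?" and len(candidate) >= max_chars // 2:
            lines.append(candidate)
            current = []
    if current:
        lines.append(" ".join(current))
    return lines


def to_cues(lines, max_lines: int = MAX_LINES):
    """Group wrapped lines into captions of at most max_lines lines each."""
    return [lines[i:i + max_lines] for i in range(0, len(lines), max_lines)]


for cue in to_cues(wrap_caption(
        "Jonah I'm here. Just a little stuck. Uh-- a rock pinned my leg.")):
    print("\n".join(cue))
```

A purely width-based wrap would happily strand a single word on its own line. Preferring punctuation keeps each sentence together where the limit allows.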

BBC (2020) example of an unnatural line break.

![A woman holding a walkie talkie, stating Jonah I'm here. Just a little stuck. Uh-- a rock pinned my [UNNATURAL BREAK IN LINE] leg.](https://leahybaker.files.wordpress.com/2020/07/pasted-image-0-5.png?w=1024&width=700&auto=webp&quality=80&disable=upscale)

Shadow of the Tomb Raider (2018): An example where the text is properly contrasted and easy to read, but includes an arbitrary, unnatural line break.

Time: When text appears on the screen, users should have an ample amount of time to read the text. Generally, users can read 160-180 words per minute. Channel 4 recommends a rough estimate of 2 seconds per line. But when adding combat or character movement to the equation, it is safe to say that readers may have a decreased reading rate. Since there isn’t data on this topic yet, be ready to have playtesters available. Additionally, as users cognitively adjust to the game’s subtitles/captions, make sure that the display time is consistent.
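Display time can be estimated from the figures above. This sketch takes the longer of two estimates: reading time at 160 words per minute (the low end of the quoted range) and 2 seconds per line. The `gameplay_slowdown` multiplier is purely hypothetical. Since there is no data on reading speed during combat, treat it as a knob to tune with playtesters rather than a known value.

```python
def display_seconds(text: str, line_count: int,
                    words_per_minute: int = 160,
                    seconds_per_line: float = 2.0,
                    gameplay_slowdown: float = 1.0) -> float:
    """Estimate how long a caption should stay on screen."""
    words = len(text.split())
    reading_time = words * 60 / words_per_minute
    per_line_floor = seconds_per_line * line_count
    # Hypothetical multiplier (> 1.0) for moments when attention is split.
    return max(reading_time, per_line_floor) * gameplay_slowdown


print(display_seconds("Run!", line_count=1))
print(display_seconds("one two three four five six seven eight", line_count=1))
print(display_seconds("Run!", line_count=2, gameplay_slowdown=1.5))
```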

Synchronization: The text within the subtitles should always match what is happening in the gameplay. According to FCC guidelines, text should be displayed with corresponding dialogue and sound effects “to the greatest extent possible.” It wouldn’t be fun to read a spoiler before it happens on the screen.

Outcast – Second Contact (2017): The text is too long for users to read and comprehend in an appropriate amount of time, thus slowing down gameplay.

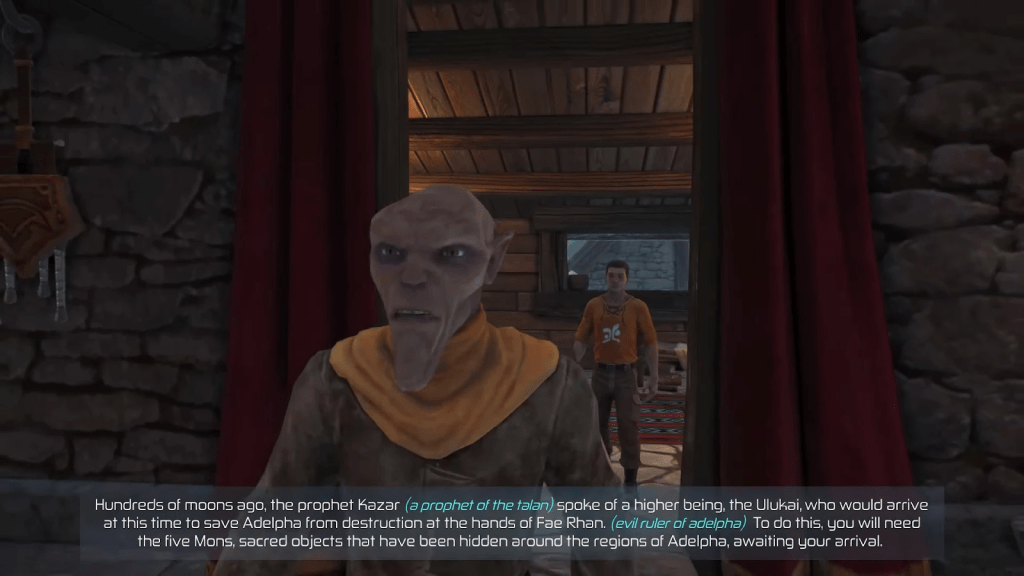

Assassin’s Creed Odyssey (2018): An example of text that is presented at an appropriate length, uses a clear text color with a suitable background contrast, and properly identifies the speaker.

Directionality: When possible, always indicate the direction of the dialogue, music, and sound. It can be as simple as an arrow pointing left or right.

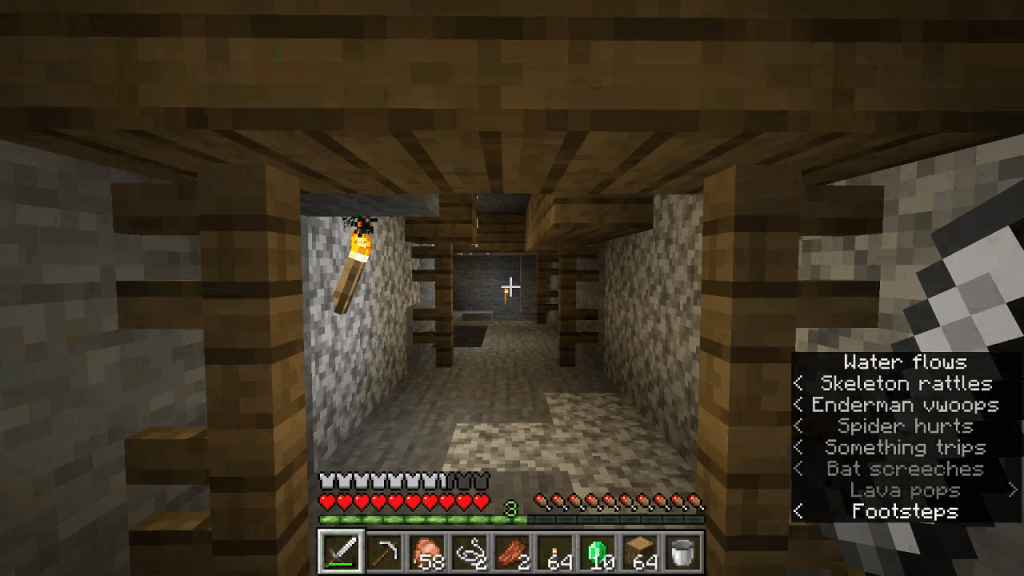

Minecraft (2020): An example of closed captions indicating the location of the sound using < or >.
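One way to decide between `<` and `>` is to compare the sound's position with the direction the player is facing. The sketch below is a top-down 2D approximation with x pointing right and y pointing forward. It is not how Minecraft or any particular engine implements it, and the function names and the 30-degree "in front" cone are assumptions.

```python
import math


def direction_marker(listener_pos, listener_forward, source_pos,
                     front_cone_degrees: float = 30.0) -> str:
    """Return '<', '>' or '' for a sound relative to where the listener faces."""
    dx = source_pos[0] - listener_pos[0]
    dy = source_pos[1] - listener_pos[1]
    fx, fy = listener_forward
    dot = fx * dx + fy * dy      # positive when the source is ahead
    cross = fx * dy - fy * dx    # positive when the source is to the left
    angle = math.degrees(math.atan2(cross, dot))  # signed angle from forward
    if abs(angle) <= front_cone_degrees:
        return ""                # roughly in view: no arrow needed
    return "<" if angle > 0 else ">"


def caption_with_direction(text: str, marker: str) -> str:
    """Attach the arrow on the side of the caption that the sound comes from."""
    if marker == "<":
        return f"< {text}"
    if marker == ">":
        return f"{text} >"
    return text


marker = direction_marker((0, 0), (0, 1), (5, 0))   # sound to the player's right
print(caption_with_direction("FOOTSTEPS", marker))
```

A sound directly behind the player resolves arbitrarily to one side in this sketch. A fuller version might add a dedicated "behind" marker.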

Consistency, consistency, CONSISTENCY: Whether the team decides to use colors or brackets, make sure text is consistent. Doing so will ensure intuitive use for the product.

Visual cues

Games require a large amount of information to be translated to the user. The most commonly utilized method for helping a user understand and react to a game’s mechanics or intent is through the use of visual cues. Essentially, visual cues are used within game design to notify users of pertinent gameplay information so that the user may make informed decisions.

For deaf and hard of hearing users, visual cues are the key to success.

Valorant (2020): An example of a user taking damage and the game notifying the user through a damage directional indicator.

Visual cues can come in many shapes, sizes, colors, and forms. More often than not, designers will use visual elements that users are already familiar with, as doing so will make gameplay intuitive for users. Depending on the gameplay and designer’s intent, visual cues are often accompanied by a sound cue and at times can be subtle.

Some common examples of visual cues include:

Damage directional indicators

Pathfinding hints

Timing cues during combat (e.g. glint on weapon before a swing)

Highlights over loot and drops.

Remnant: From the Ashes (2019): An example of a user being visually notified of drops.

Integrating Deaf Accessibility Tools in Video Games

Now that we know about the tools to promote deaf accessibility, what do we do with them? It’s hard to say “these types of games should have these types of features” since genres are very much blended together. However, here are some general design suggestions.

Why should the tools be added to the game?

When it comes to Deaf Accessibility, there is no single solution. It’s easy to add closed captions and call it a day. But accessibility involves interactive and integrated design.

For example, only adding closed captions does not immediately equate to Deaf Accessibility. Hearing people’s processing speed index benefits from both visual and auditory processing. However, for d/Deaf/HoH individuals, auditory processing is decreased or removed from the picture.

To create a more accessible product, designers will need to add additional visual information to assist with d/Deaf/HoH processing. The downside of only including closed captions is that active reading takes up a large portion of cognitive flexibility. This might work for TV or movies, but video games require player engagement. What if the gameplay is unpredictable and includes lots of sounds? d/Deaf/HoH users are forced into a multitasking dilemma and often experience cognitive overload. They do not benefit from the interconnection between auditory and visual processing.

This is why the Deaf Accessibility tools are critical and, more so, why there needs to be a healthy balance of both subtitles/captions and visual cues.

When should the tools be added to the game?

Accessibility should always be considered in the early stages of development. The reasoning is that oftentimes, foundational design choices prevent the application of accessible features.

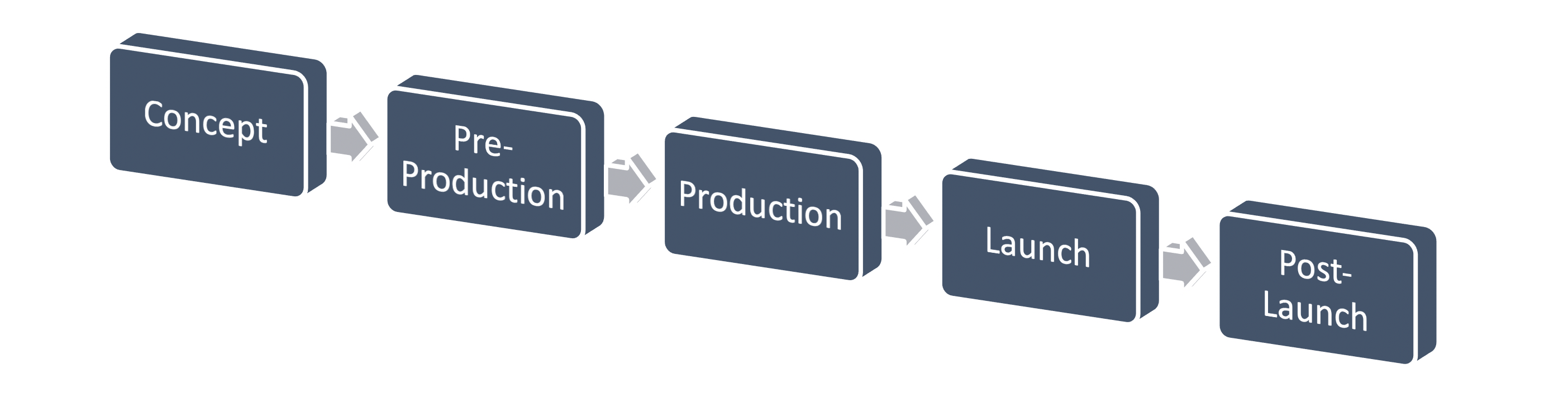

Within the game development pipeline, a game typically goes through five stages: concept, pre-production, production, launch, and post-launch. In pre-production, timelines are made, storylines are drafted, gameplay mechanics are determined, and level design is established. The game is taking shape and details are being defined.

Deaf accessibility tools need to be integrated during this pre-production phase. Once the game enters production, it is extremely difficult to go back and say, “Hey remember all those sound effects we made? Turns out they aren’t accessible to deaf people.” Sure, it’s easy to add some subtitles, but what about all the other sound cues? There will be a lot of backtracking and last-minute, imperfect solutions might be implemented.

To hold the development team accountable, it is advised to hire a full-time accessibility manager or specialist. Doing so will create a common language, understanding, and discourse that strives towards lasting changes and informed decisions. Of course, if the studio is small, then there is the option of hiring an accessibility consultant. Additionally, it may be helpful to designate one designer who actively researches accessibility and is in charge of holding others accountable. However, a full-time specialist is the ideal route.

How should the tools be added to the game?

To answer this question, I will walk through some examples and scenarios.

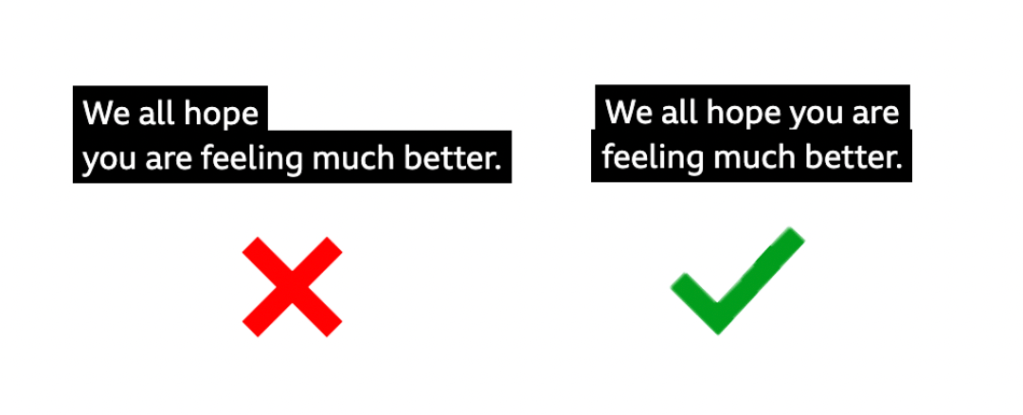

What to do with a story-driven game.

If the gameplay is intended to be story-driven with extensive dialogue, then the designers need to create thorough, comprehensive closed captions.

Assassin’s Creed Odyssey (2018)

What to do if dialogue happens off-screen.

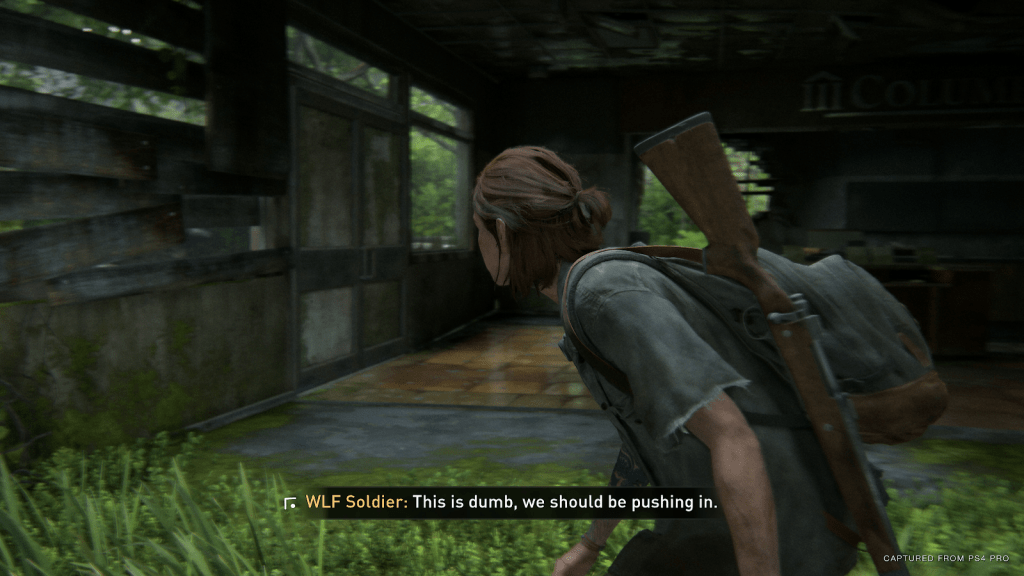

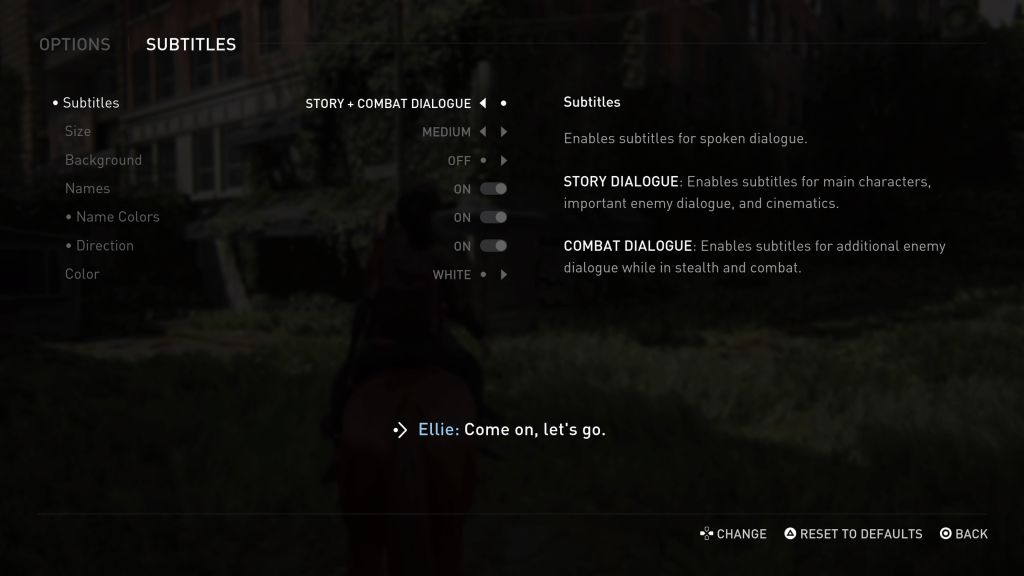

If dialogue occurs off-screen, closed captions can include a directional indicator to alert users of the source. For example, in The Last of Us Part II, combat is subtitled and includes a directional indicator to let d/Deaf/HoH users know their enemies’ location.

The Last of Us Part II (2020): The subtitles include a directional indicator.

What to do with a combat-driven game.

A major issue in combat-driven games is that off-screen events/alerts will occur, but will only notify the user through an audio cue.

To make combat-driven games accessible, there are generally three choices:

Make a visual cue

Caption it

Make a visual cue and caption it

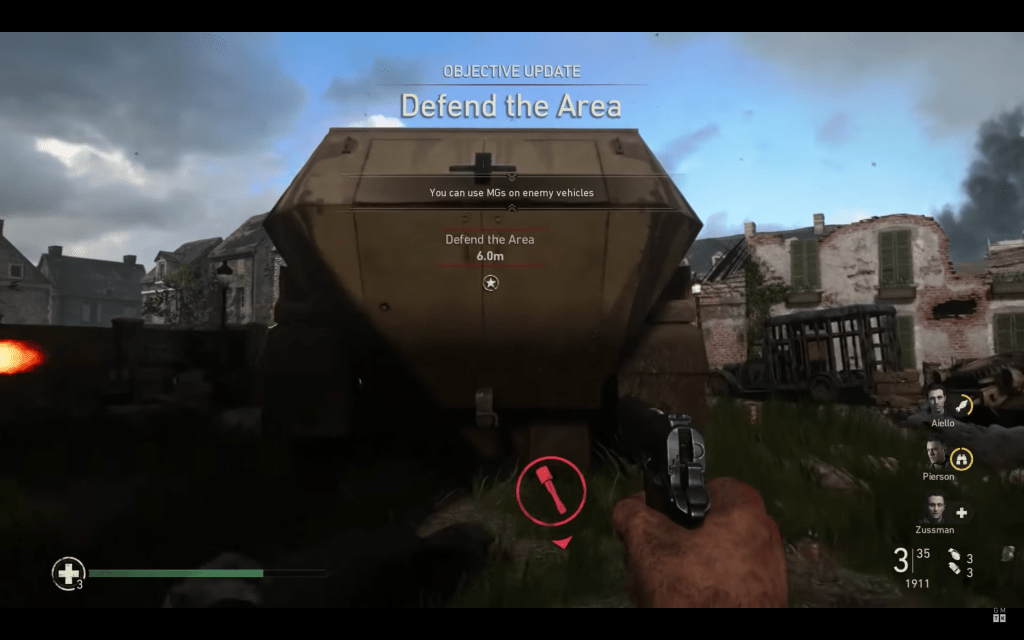

Making a visual cue is a quick and simple solution. For example, Sledgehammer Games includes a directional visual cue to alert users that there is a grenade off-screen that could be dangerous.

Call of Duty: WWII (2017): Includes a visual cue that indicates that a grenade is behind the user.

If events/alerts can be expressed through text, closed captions are a viable route. To optimize gameplay, closed captioning should include directional indicators. However, be mindful of “info dumping” text on the screen, as too many closed captions during combat may cause cognitive overload.

Overwatch (2018): Key voice lines are included as closed captions to notify users that an ability is being used.

In other instances, a cue can be both visual and captioned. The benefit is that the user is alerted of off-screen events, while also receiving direct knowledge of what is causing the sound.

Far Cry New Dawn (2019): The off-screen “Explosion” visual cue is also captioned.
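One practical way to apply the three choices above is to keep a list of sound events and flag any gameplay-critical sound that has neither a visual cue nor a caption. The sketch below is a hypothetical audit of that kind. The `SoundEvent` fields and the sample event names are made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SoundEvent:
    name: str
    gameplay_critical: bool
    has_visual_cue: bool = False
    has_caption: bool = False


def inaccessible_events(events):
    """Names of critical sounds that are conveyed through audio only."""
    return [e.name for e in events
            if e.gameplay_critical and not (e.has_visual_cue or e.has_caption)]


events = [
    SoundEvent("grenade_nearby", True, has_visual_cue=True),
    SoundEvent("explosion", True, has_visual_cue=True, has_caption=True),
    SoundEvent("enemy_reload", True),          # audio only: needs attention
    SoundEvent("ambient_wind", False),         # not critical to gameplay
]
print(inaccessible_events(events))
```

Running a check like this during pre-production, as new sounds are added, is much cheaper than backtracking once the game is in production.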

What to do with a combat-driven competitive game.

During a competitive game, users need to receive information as quickly and efficiently as possible. Typically, designers will use audio cues, as hearing individuals can obtain information through subconscious auditory processing. However, this does not work for d/Deaf/HoH users.

The best course of action is visualizing sound effects. Fortnite uses a revolutionary visualization for all sound effects. Within the visualization, there are visual cues such as treasure chests, gliders, gunshots, and footsteps. Additionally, colors within the visualization help distinguish which sounds are neutral vs. dangerous. Opacity is also used to indicate the distance of the sound.

Fortnite (2017): Includes a directional indicator for treasure, gunshots, footsteps, and more.
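To show how direction, color, and opacity could be derived from a sound event, here is a minimal sketch. It is not Fortnite's implementation. The split into dangerous and neutral sounds, the colors, the 50-unit range, and the choice that nearer sounds are more opaque are all assumptions made for illustration.

```python
from dataclasses import dataclass

# Assumption: which sound kinds count as dangerous is a guess for this example.
DANGEROUS_KINDS = {"gunshot", "footsteps"}


@dataclass
class SoundIndicator:
    icon: str
    bearing_degrees: float   # where to draw the icon around the player
    color: str
    opacity: float           # 1.0 = very close, 0.0 = at or beyond max range


def make_indicator(kind: str, distance: float, bearing_degrees: float,
                   max_range: float = 50.0) -> SoundIndicator:
    """Turn one sound event into the data a HUD would need to draw it."""
    color = "red" if kind in DANGEROUS_KINDS else "white"
    # Fade linearly with distance, clamped to the 0..1 range.
    opacity = max(0.0, min(1.0, 1.0 - distance / max_range))
    return SoundIndicator(kind, bearing_degrees % 360, color, opacity)


print(make_indicator("gunshot", distance=25.0, bearing_degrees=370.0))
print(make_indicator("chest", distance=80.0, bearing_degrees=90.0))
```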

How to improve player communication.

Team-based, first-person shooters are extremely popular these days, and many of these online games require player communication. However, voice chat is an obvious barrier for d/Deaf/HoH individuals. Users can use the text chat to communicate with each other or clarify information, but given the fast-paced nature of online games, this is neither efficient nor ideal. More so, text chat can inhibit users or place them at a disadvantage, as they spend more time reading/typing rather than searching for gear or engaging in combat.

One option is to include an integrated ping system. Having a precise and flexible ping system improves player communication, as users are able to quickly and efficiently provide information to their teammates. Plus, there are plenty of reasons people can’t or prefer not to speak in-game. Maybe their family is asleep, or they can’t afford a high-quality mic? Therefore, this is a universal solution that would not only benefit d/Deaf/HoH users, but also improve the game’s quality of life.

Apex Legends (2019): Showcases a three-dimensional, fully integrated ping system.

Another option is to convert speech to text. Though this solution is functional, there is still a chance the conversion is inaccurate due to poor microphone quality, background noises, accents, and incoherent speech. However, companies have proven it is good enough to show a substantial benefit for the d/Deaf/HoH communities.


Apex Legends (2019): Users are able to convert incoming voice to chat text.

What to do if the game relies on music.

Music can be visually represented in gameplay. Though Cadence of Hyrule is a rhythm game, Brace Yourself Games cleverly integrates a visual-heavy interface that relies on visual-audio cues. Notice that enemies pulse with the music, creating a visual cue that alerts users of the next move. Here is a sample of the game.

Cadence of Hyrule (2019): The rhythm is visually illustrated in the below bar.

How to accommodate for different types of hearing disabilities.

There is no such thing as one d/Deaf/HoH diagnosis. Hearing can range from mild to profound hearing loss. Some people use hearing devices such as hearing aids or cochlear implants, while others do not. Some were born with hearing loss, whereas others lose their hearing later in life. Therefore, as designers, it is difficult to predict user experience and the needs of the user base.

To accommodate varying deaf backgrounds, it is recommended to include:

Customization

Hearing Accessibility Presets

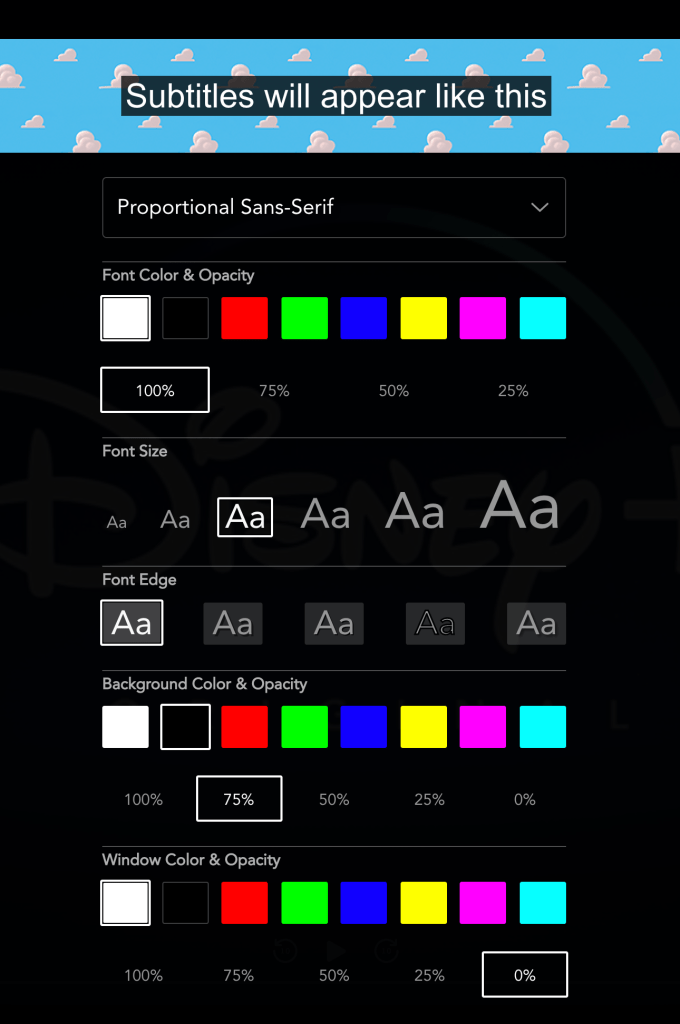

Customization: In the entertainment industry, it is always recommended to allow users to customize their subtitles/captions. Every individual has their own abilities and needs, which a designer is not always able to predict. For example, some people need a fully solid black text background with no transparency, particularly when dyslexia comes into it. Meanwhile, others may find font edging intrusive or distracting.

By adding customization to the interface, the designer is enabling users to adjust their interface to meet their needs. Recent advocates support the use of a full slider, as it allows fine-tuned adjustments.

It is essential that a customization menu includes a preview so that users can identify pain points and shift settings, as needed.

Disney+: An example of extensive customization for subtitles/captions.

Here is a recent example: Naughty Dog’s game has extensive customization options within their accessibility settings. Users are able to adjust the size, background, names, directions, and colors of the subtitles.

The Last of Us Part II (2020): Subtitles with extensive customization options.
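The customization options discussed above (size, background, names, directions, colors, presets, and a preview) map naturally onto a small settings object. The sketch below is hypothetical throughout: the field names, slider ranges, and preset values are mine and are not taken from The Last of Us Part II or Disney+.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class SubtitleSettings:
    enabled: bool = True
    size_scale: float = 1.0           # slider, assumed range 0.5 to 2.0
    background_opacity: float = 0.5   # slider, 0.0 (none) to 1.0 (solid)
    show_speaker_names: bool = True
    show_direction: bool = True
    text_color: str = "white"


def clamp(value: float, low: float, high: float) -> float:
    return max(low, min(high, value))


def with_slider_values(settings: SubtitleSettings, size_scale: float,
                       background_opacity: float) -> SubtitleSettings:
    """Apply slider input, keeping values inside the supported ranges."""
    return replace(settings,
                   size_scale=clamp(size_scale, 0.5, 2.0),
                   background_opacity=clamp(background_opacity, 0.0, 1.0))


# Hypothetical preset: larger text on a solid background.
HEARING_ACCESSIBILITY_PRESET = SubtitleSettings(size_scale=1.25, background_opacity=1.0)


def preview_line(settings: SubtitleSettings, speaker: str = "JAMES",
                 text: str = "What are you doing?") -> str:
    """Sample caption a settings menu could render as a live preview."""
    line = f"{speaker}: {text}" if settings.show_speaker_names else text
    return f"< {line}" if settings.show_direction else line


print(preview_line(HEARING_ACCESSIBILITY_PRESET))
```

Keeping the preview driven by the same settings object that the in-game renderer uses means that what players see in the menu matches what they get in the game.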

When to use controller vibration.

If the game uses a controller, designers have the opportunity to utilize vibration as an accessibility feature. However, note that vibration does not immediately equate to equal access. The reasoning is that not everyone is able to use controller vibration (e.g. players with peripheral neuropathy).

Controller vibration should only be used to emphasize the significance of a visual cue or closed caption that already exists, and should not be used as a core feature. For example, if there is an explosion in the background, the game can caption [EXPLOSION] and have the controller vibrate at the same time. Doing so will not only assist with immersion, but will also allow the player to prioritize during the decision-making process.
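The "vibration only reinforces an existing cue" rule can be enforced in code. In this hypothetical sketch, rumble is never emitted on its own: it only fires when a caption or visual cue accompanies it and the player has left vibration enabled. The names `CueOutput` and `present_sound` are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CueOutput:
    caption: Optional[str] = None
    visual_cue: Optional[str] = None
    rumble: bool = False


def present_sound(caption: Optional[str] = None,
                  visual_cue: Optional[str] = None,
                  want_rumble: bool = False,
                  rumble_enabled: bool = True) -> CueOutput:
    """Decide which channels convey a sound; rumble is secondary only."""
    has_primary_cue = caption is not None or visual_cue is not None
    # Rumble reinforces a caption or visual cue but never replaces one.
    rumble = want_rumble and rumble_enabled and has_primary_cue
    return CueOutput(caption, visual_cue, rumble)


print(present_sound(caption="[EXPLOSION]", want_rumble=True))
print(present_sound(want_rumble=True))   # no caption or visual cue: no rumble
```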

What to do with in-game stealth mechanics.

Whether it be the user completing a stealth mission or an enemy sneaking up on the user’s character, a common pain point for d/Deaf/HoH users is in-game stealth mechanics.

If a user is having difficulty remaining stealthed, designers can add a noise indicator. For example, Nintendo added a noise indicator in their most recent Zelda installment (the circle with pink on the bottom left), which notifies users of their noise levels. Users may be unaware that one weapon is quieter than another. However, the noise indicator will notify deaf and hard of hearing users of their character’s noise levels, and therefore serves as an extremely effective tool.

The Legend of Zelda: Breath of the Wild (2017): Stealth indicator assists with controlling character noise levels.

However, what do we do if an enemy is sneaking up on a user? In addition to integrating visual cues, designers can add awareness indicators. Awareness indicators will warn users when an enemy is about to spot them and which direction the enemy is coming from. Awareness indicators assist in both stealth and combat, as they let users know if an enemy is sneaking or charging up from behind them.

The Last of Us Part II (2020): Includes awareness indicators to notify the player that they are no longer in stealth.

Another overall solution is adding a mini-map that identifies all enemy locations through visual cues. Doing so will paint a clear picture of all enemy locations, leaving less room for interpretation and more room for tactical decision-making.

Concluding thoughts

Between subtitles/captions and visual cues, there are so many creative ways to promote accessibility in video games. This piece only scratches the surface of what we, as designers and developers, can do to make our games more accessible to deaf and hard of hearing gamers. I will continue to add to this piece as additional questions and examples surface. If you have a question, comment, or concern, please reach out to [email protected] or connect with me on Twitter @momoxmia.

Republished from leahybaker.com (includes additional examples). Reviewed by Ian Hamilton (Accessibility Specialist, Independent), Anthony Norcott (Designer, Gunfire Games), Michael Anthony (Developer, Microsoft), and Jordan Colbert, MMFT (Assistive Technology Professional, USC).

tl;dr

The two tools are:

1. Subtitles/captions

Subtitles = Dialogue

Closed Captions = Dialogue + Sound Effects

2. Visual cues = Visually notifies users of pertinent gameplay information

Why should the tools be added to the game? d/Deaf/HoH individuals require accessibility in order to fully enjoy the video game. More so, they do not benefit from the interconnection between auditory and visual processing.

When should the tools be added to the game? In the early stages of the pipeline, ideally in the pre-production stage.

How should the tools be added to the game? Integrate subtitles/captions and visual cues into your game. Any audio cue should always have a corresponding visual cue.

Examples of integrating tools:

What to do with a story-driven game: Include detailed and comprehensive closed captions.

What to do if dialogue happens off-screen: Include directional indicators for closed captions.

What to do with a combat-driven game: If there is a sound effect, (1) make a visual cue, (2) caption it, or (3) make a visual cue and caption it. If applicable, rumble pack can additionally be used.

What to do with a combat-driven competitive game: Create a visualization for all sound effects (e.g. Fortnite).

How to improve player communication: Include an integrated ping system and voice chat transcriptions.

What to do if the game relies on music: Visually represent rhythm within the interface and through visual cues.

How to accommodate for different types of hearing disabilities: Include customization options and a Hearing Accessibility Preset.

When to use controller vibration: Use controller vibration to emphasize the significance of a visual cue or closed caption.

What to do with in-game stealth mechanics: Add noise indicators, awareness indicators, and a comprehensive mini-map.

Additional reading

For other helpful resources and tips, I highly recommend reading Ian Hamilton’s piece on How to do subtitles well: basics and good practices. Max Deryagin also wrote a great piece on What Video Game Subtitling Got Wrong In 2017, which identifies common pain points. CanIPlayThat includes reviews and resources as complementary reading material. Should videos be your preferred method of learning, you can also check out Making Games Better for the Deaf and Hard of Hearing by Game Maker’s Toolkit.

If you are hoping to broaden your understanding in accessibility, Game Accessibility Guidelines can be found here: http://gameaccessibilityguidelines.com/
