Featured Blog | This community-written post highlights the best of what the game industry has to offer. Read more like it on the Game Developer Blogs or learn how to Submit Your Own Blog Post

The 1st of a 4-part series. Video game composer Winifred Phillips shares ideas from her GDC 2018 talk, Music in Virtual Reality. Part 1: The role of music in VR, with discussion of Presence, positional audio technologies, & tips for using ambient music.

By Winifred Phillips

Hey everybody! I'm video game composer Winifred Phillips. At this year’s Game Developers Conference in San Francisco, I was pleased to give a presentation entitled Music in Virtual Reality (I've included the official description of my talk at the end of this article). While I've enjoyed discussing the role of music in virtual reality in previous articles that I've posted here, my GDC talk gave me the opportunity to pull a lot of those ideas together and present a more concentrated exploration of the practice of music composition for VR games. It occurred to me that such a focused discussion might be interesting to share in this forum as well. So, with that in mind, I’m excited to begin a four-part article series based on my GDC 2018 presentation!

Virtual Reality is one of the newest platforms for gaming, and it’s an exciting time to be a video game composer. We’re entering a brand new phase in the history of game development. For the audio side of the equation, it can seem like the most alien frontier we've yet encountered, with lots of unique challenges. Since VR first burst onto the commercial scene with the Oculus Rift in 2016, I’ve had the pleasure of composing the music for a bunch of awesome VR games. When working in VR, I’ve noticed that there are some important issues for video game music composers to address. During my GDC talk, I concentrated on three of these top questions:

Do we compose our music in 3D or 2D?

Do we structure our music to be Diegetic or Non-Diegetic?

Do we focus our music on enhancing player Comfort or Performance?

During my GDC talk, I presented the best examples from some of my recent VR projects. These four very different VR games are the Bebylon: Battle Royale arena combat game from Kite & Lightning, the Dragon Front strategy game from High Voltage Software, the Fail Factory comedy game from Armature Studio, and the Scraper: First Strike shooter/RPG game from Labrodex Inc. During my presentation, I shared video excerpts from these games in order to demonstrate concepts related to the role of music in VR. In these articles, I'll be embedding those same video clips so that we can further discuss concrete executions of some fairly abstract concepts.

But first, let’s pause so we can ask ourselves an important question. What does Virtual Reality mean for us game audio folks? How is it different from traditional game audio, and how is it the same?

Virtual Reality is all about presence, about making players feel as if they exist inside the VR space. Everything in VR works in tandem to enhance that feeling of presence, including the audio content. Let's first take a look at one practical example of how music functions in a VR game, and then we’ll step back and look at the bigger picture.

One of my projects over this past year was the music for Scraper, a first-person VR shooter with RPG elements set inside colossal skyscrapers in a futuristic city. While playing Scraper, when we’re not shooting at enemies, we’re exploring the massive buildings. Ambient music sets the tone for this, but how to introduce it so that it feels natural in VR? After all, if virtual reality is all about presence, about making players feel as if they exist in a real place - where is the music coming from? Does it also exist in the VR world, and if it doesn't, how do we introduce it so that it doesn't disconcert the player and interfere with the realism of the VR experience?

At the beginning of music production for Scraper, I had a long meeting with the project director, and we were particularly concerned about this issue in connection with the ambient score. So we decided that the ambient music would come and go in subtle, gradual ways. This works really well in VR because it shifts the player's focus away from the music when it first begins playing. Hopefully, by the time players notice the music, it will already have been playing long enough to integrate itself into the environment in an unobtrusive way. To that end, I composed the ambient tracks in Scraper so that they gently float into existence and then build steadily. Here’s an example of what it was like when ambient music began playing during exploration gameplay in Scraper:

And here's another video clip in which some time has elapsed, and the ambient music is now gently fading away during continued exploration in the Scraper VR game:

So we've taken a brief look at a simple example of music implementation in VR, but the issues surrounding audio content in Virtual Reality become increasingly complex as we further consider how audio differs in a VR space.
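
The come-and-go behavior of Scraper's ambient score can be illustrated with a small volume envelope. The Python sketch below is a hypothetical example, not the game's actual implementation. The `AmbientLayer` class, its fade durations, and the choice of a smoothstep curve are all assumptions made for illustration. The S-shaped curve rises very slowly at first, which loosely mirrors the idea of music that floats into existence before players consciously notice it.

```python
class AmbientLayer:
    """Hypothetical ambient-music layer whose volume drifts in and out gradually.

    Fade durations are illustrative assumptions, not values from any shipped game.
    """

    def __init__(self, fade_in_s: float = 20.0, fade_out_s: float = 30.0) -> None:
        self.fade_in_s = fade_in_s
        self.fade_out_s = fade_out_s
        self.gain = 0.0        # current linear gain: 0.0 (silent) to 1.0 (full)
        self._from = 0.0       # gain at the moment the current fade began
        self._to = 0.0         # gain the current fade is heading toward
        self._elapsed = 0.0
        self._duration = 0.0

    def start(self) -> None:
        """Begin a slow fade-in from whatever the current gain is."""
        self._begin_fade(1.0, self.fade_in_s)

    def stop(self) -> None:
        """Begin a slow fade-out toward silence."""
        self._begin_fade(0.0, self.fade_out_s)

    def _begin_fade(self, target: float, duration: float) -> None:
        self._from = self.gain
        self._to = target
        self._elapsed = 0.0
        self._duration = duration

    def update(self, dt: float) -> float:
        """Advance the current fade by dt seconds and return the new gain."""
        self._elapsed += dt
        t = 1.0 if self._duration <= 0.0 else min(self._elapsed / self._duration, 1.0)
        # Smoothstep: the slope is zero at both ends, so the fade has no audible "edge".
        s = t * t * (3.0 - 2.0 * t)
        self.gain = self._from + (self._to - self._from) * s
        return self.gain


if __name__ == "__main__":
    layer = AmbientLayer(fade_in_s=20.0, fade_out_s=30.0)
    layer.start()
    for second in range(1, 21):
        print(f"t={second:2d}s gain={layer.update(1.0):.3f}")
```

A game loop would call `update()` once per frame and apply the returned gain to the music bus. Because a new fade always starts from the current gain, stopping halfway through a fade-in simply turns it around without a jump in volume.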

Generally speaking, in order for audio to behave in convincing ways within VR, all sounds should emanate faithfully from their positional sources in the fully 3-dimensional VR world. Music can be spatialized as well, although decisions about music spatialization are more complicated. We'll be exploring that question in more detail later in this article series, but for now let's pause to think about what 3D sound means for us in VR. While it might seem like audio spatialization in VR presents an exceptional challenge, it’s actually a discipline with a long history in game development – including some controversies. Since it’s useful to understand how we got to this point, let’s quickly review a bit of that history.

The whole discipline of positional audio began in the 1930s with English engineer and inventor Alan Blumlein’s invention of the famous stereo audio format. We can thank Blumlein (pictured right) for those two very familiar sound channels: left and right. But for the purposes of our discussion, it’s more interesting to consider what Alan Blumlein initially called his newly invented technology – because he didn’t name it ‘stereo.’ He called it ‘binaural sound.’

We now understand the concept of binaural sound as a two-channel recording technique that’s much more complex and immersive than Blumlein’s simple stereo technology, but for a long time, the two terms (binaural and stereo) were used interchangeably.

Meanwhile, the late 1960s brought another big leap forward in sound spatialization. We understand that Blumlein’s stereo format allows us to localize audio content across a horizontal axis from left to right – but what about vertical positioning? The ambisonic format makes that possible. Using specialized multi-channel microphones capturing audio from all directions, an ambisonic recording can be decoded into lots of ‘virtual’ audio channels that can be spread out and localized. Unfortunately, the ambisonic format failed to become popular with consumers, so it languished in obscurity for decades.
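
The idea of decoding "virtual" channels can be made more concrete with a minimal Python sketch of first-order ambisonics. It assumes the traditional B-format convention (often called Furse-Malham), in which the omnidirectional W channel is scaled by 1/√2. The sketch encodes a mono sample arriving from a given direction into four channels, W, X, Y, and Z. It then derives one virtual channel by "pointing" a virtual cardioid microphone in any direction, including straight up. The function names are hypothetical. Real ambisonic decoders for speaker arrays use more carefully designed decoding matrices.

```python
import math


def encode_first_order(sample: float, azimuth_deg: float, elevation_deg: float):
    """Encode one mono sample into traditional first-order B-format (W, X, Y, Z).

    Azimuth is measured counterclockwise from straight ahead (positive = left);
    elevation is measured upward from the horizontal plane.
    """
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    w = sample / math.sqrt(2.0)                # omnidirectional component
    x = sample * math.cos(az) * math.cos(el)   # front/back figure-of-eight
    y = sample * math.sin(az) * math.cos(el)   # left/right figure-of-eight
    z = sample * math.sin(el)                  # up/down figure-of-eight: the height axis
    return w, x, y, z


def virtual_cardioid(bformat, azimuth_deg: float, elevation_deg: float) -> float:
    """Decode one 'virtual' channel by aiming a virtual cardioid mic at a direction."""
    w, x, y, z = bformat
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    return 0.5 * (math.sqrt(2.0) * w
                  + x * math.cos(az) * math.cos(el)
                  + y * math.sin(az) * math.cos(el)
                  + z * math.sin(el))


if __name__ == "__main__":
    overhead = encode_first_order(1.0, azimuth_deg=0.0, elevation_deg=90.0)
    for label, (az, el) in {"front": (0, 0), "left": (90, 0), "above": (0, 90)}.items():
        print(label, round(virtual_cardioid(overhead, az, el), 3))
```

For a sound placed directly overhead, the virtual microphone aimed upward picks it up at full level. Those aimed at ear height pick up only half, which is the vertical information a left/right format cannot carry.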

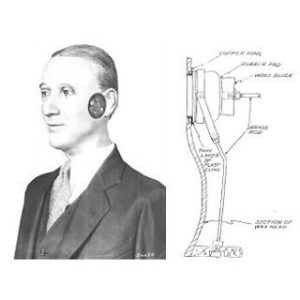

Meanwhile, Blumlein’s two-channel audio format was getting a big upgrade. Remember, Blumlein’s stereo gives us two audio channels that correspond with our two ears… but to localize sound, we need more than just a pair of ears. We also need… a head. So AT&T provided one, as a part of a very successful exhibit at the 1933 World's Fair in Chicago. Author Cheryl R. Ganz described the exhibit in her book about the 1933 Chicago World's Fair.

"In AT&T's most popular attraction, Oscar, a mechanical man with microphone ears, sat in a glass room surrounded by visitors wearing head receivers. Amazed, they heard exactly what Oscar heard. Flies buzzing, footsteps, or whispers all seemed to surround each listener." Due to this amazing auditory phenomenon, the 'Oscar' display was "by far the most popular attraction in the exhibit."

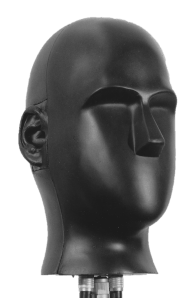

'Oscar' (pictured right) was, in fact, a crude precursor to the dummy head binaural microphone that would later emerge. Even though it astounded visitors to the AT&T exhibit at the World's Fair, the basic idea behind 'Oscar' didn't really take off until 1975. That's when the microphone manufacturer Neumann released its fully realized version of the famous dummy head binaural microphone.

The principle of the dummy head binaural microphone is simple. Sound reaches the head. It interacts with the shape of the head and the shape of the outer ears and ear canals until it finally reaches the two microphones inside. The binaural dummy head does a great job of replicating how human beings perceive sound in real life. Our heads and ears act as built-in resonators and mufflers, and all that bouncing around provides loads of data to our brains. It’s how we get both horizontal and vertical info about where sounds are coming from. The way a head and its ears filter sound arriving from a given direction is described by a Head-Related Transfer Function (HRTF). The Verge multimedia magazine produced a video that does a great job of demonstrating the principles behind HRTF – let’s take a look at that:
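
An HRTF bundles several directional cues together:

- The interaural time difference (ITD): sound reaches the nearer ear first.
- The interaural level difference: the head shadows the farther ear.
- Spectral coloring from the outer ear, which helps with judging elevation.

The Python sketch below is a deliberately simplified illustration that models only the first of these. It uses Woodworth's classic spherical-head approximation. The head radius, the speed of sound, and the function names are assumptions chosen for illustration. A full binaural renderer would instead convolve the signal with measured head-related impulse responses for each ear.

```python
import math

SPEED_OF_SOUND_M_S = 343.0   # in air at roughly 20 degrees C
HEAD_RADIUS_M = 0.0875       # an often-used average; real heads vary


def woodworth_itd(azimuth_deg: float,
                  head_radius_m: float = HEAD_RADIUS_M,
                  speed_m_s: float = SPEED_OF_SOUND_M_S) -> float:
    """Interaural time difference in seconds; positive means the right ear hears first.

    Azimuth is measured clockwise from straight ahead (positive = to the right).
    Rear directions fold onto the front, because ITD alone cannot tell front
    from back; that ambiguity is one reason the outer-ear cues matter.
    """
    lateral = math.asin(math.sin(math.radians(azimuth_deg)))  # folded into [-90, 90] deg
    return (head_radius_m / speed_m_s) * (lateral + math.sin(lateral))


def render_itd_only(mono, azimuth_deg: float, sample_rate: int = 48_000):
    """Return (left, right) sample lists with the farther ear delayed by the ITD."""
    itd = woodworth_itd(azimuth_deg)
    delay = round(abs(itd) * sample_rate)       # ITD rounded to whole samples
    near = list(mono) + [0.0] * delay           # pad so both ears have equal length
    far = [0.0] * delay + list(mono)
    if itd >= 0.0:                              # source on the right: left ear is farther
        return far, near
    return near, far


if __name__ == "__main__":
    for az in (0, 30, 60, 90):
        print(f"azimuth {az:3d} deg -> ITD {woodworth_itd(az) * 1e6:6.1f} microseconds")
```

With these assumed values, a sound directly to one side arrives at the far ear roughly 656 microseconds late, about 31 samples at 48 kHz. Differences that tiny are part of the "loads of data" the brain uses to place a sound.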

So, if we’ve had binaural audio since the 70s, why aren’t we listening to everything in binaural format now? Because binaural sound requires headphones to work – it won’t translate to a speaker system. Ambisonics will work with a speaker system… but consumers didn’t warm up to the format. So, the two technologies remained obscure, while everybody embraced surround sound for 3D audio.

Lots of speakers means great positional audio, right? Except surround sound doesn’t account for the vertical axis. No height. It’s not a sphere of sound – it’s more like a hula hoop.
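
The "hula hoop" limitation is easy to see in a simplified surround panner. This Python sketch assumes a hypothetical five-speaker ring: center, front left/right at ±30°, and surround left/right at ±110°. That loosely follows common 5.1 placement without the LFE channel. The sketch uses constant-power pairwise panning between adjacent speakers. The speaker labels and the `pan_on_ring` function are illustrative assumptions. The function accepts an elevation argument only to show that a flat ring has nowhere to put it.

```python
import math

# Hypothetical 5-speaker ring; azimuths in degrees, counterclockwise from front (positive = left).
SPEAKERS = {"C": 0.0, "L": 30.0, "Ls": 110.0, "Rs": -110.0, "R": -30.0}


def pan_on_ring(azimuth_deg: float, elevation_deg: float = 0.0) -> dict:
    """Constant-power pan between the two ring speakers that bracket azimuth_deg.

    elevation_deg is accepted but never used: every speaker sits at ear height,
    so a sound overhead collapses onto the same horizontal position.
    """
    ring = sorted((angle % 360.0, name) for name, angle in SPEAKERS.items())
    gains = {name: 0.0 for name in SPEAKERS}
    target = azimuth_deg % 360.0
    for i, (start, first) in enumerate(ring):
        end, second = ring[(i + 1) % len(ring)]
        span = (end - start) % 360.0          # angular gap to the next speaker
        offset = (target - start) % 360.0     # how far into that gap the source sits
        if offset <= span:
            frac = offset / span
            # cos^2 + sin^2 = 1, so total acoustic power stays constant across the pan.
            gains[first] = math.cos(frac * math.pi / 2.0)
            gains[second] = math.sin(frac * math.pi / 2.0)
            break
    return gains


if __name__ == "__main__":
    print(pan_on_ring(15.0))                      # split evenly between C and L
    print(pan_on_ring(15.0, elevation_deg=80.0))  # identical: the height is lost
```

Any direction around the listener can be reached, but only around the rim of the hoop. A sound above the listener's head comes out of exactly the same speakers as one at ear level.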

As we all know, video games have included all of these positional audio technologies… and yet only surround sound gained any long-term traction. Which is weird. Gamers like wearing headphones, so binaural audio should have been a perfect fit. And actually, it was… in the 1990s. And then it dropped off a cliff.

Let's take a look at why that happened:

Aureal Semiconductor blew everybody’s minds in the nineties by releasing the A3D sound middleware for their Vortex PC sound card chipsets. The A3D middleware enabled Head-Related Transfer Functions for game audio, which essentially delivered a form of simulated binaural sound. Maximum PC Magazine called it one of the 100 greatest PC innovations of all time. But then in 1998, Creative Labs slapped Aureal Semiconductor with a patent infringement lawsuit. The legal fees bankrupted Aureal, Creative Labs bought the company in September 2000, then quietly buried the A3D technology... and the years went by.

So now we come to the thing that changed everything: Virtual Reality! VR is all about presence. The VR world has to surround us in a genuine, believable way – so positional audio becomes hugely important. Both the binaural and ambisonic formats are enjoying a renaissance right now, as game audio experts deploy these formats to spatialize their sound content for VR. We all understand the importance of good positional audio for the sound design content of the game… but what about the music?

Over my next three articles, I'll be exploring the role of music in VR through the examination of three important questions for VR game music composers:

Do we compose our music in 3D or 2D?

Do we structure our music to be Diegetic or Non-Diegetic?

Do we focus our music on enhancing player Comfort or Performance?

The next article will focus on the difference between 3D and 2D audio strategies for music implementation in VR games. In the meantime, please feel free to leave your comments in the space below!

This lecture presented ideas for creating a musical score that complements an immersive VR experience. Composer Winifred Phillips shared tips from several of her VR projects. Beginning with a historical overview of positional audio technologies, Phillips addressed several important problems facing composers in VR. Topics included 3D versus 2D music implementation and the role of spatialized audio in a musical score for VR. The use of diegetic and non-diegetic music was explored, including methods that blur the distinction between the two categories. The discussion also included an examination of the VIMS phenomenon (Visually Induced Motion Sickness) and the role of music in alleviating its symptoms. Phillips' talk offered techniques for composers and audio directors looking to utilize music in the most advantageous way within a VR project.

Takeaway: Through examples from several VR games, Phillips provided an analysis of music composition strategies that help music integrate successfully in a VR environment. The talk included concrete examples and practical advice that audience members can apply to their own games.

Intended Audience: This session provided composers and audio directors with strategies for designing music for VR. It included an overview of the history of positional sound and the VIMS problem (useful knowledge for designers). The talk was intended to be approachable for all levels (advanced composers may better appreciate the specific composition techniques discussed).

Winifred Phillips is an award-winning video game music composer whose most recent projects are the triple-A first person shooter Homefront: The Revolution and the Dragon Front VR game for Oculus Rift. Her credits include games in five of the most famous and popular franchises in gaming: Assassin’s Creed, LittleBigPlanet, Total War, God of War, and The Sims. She is the author of the award-winning bestseller A COMPOSER'S GUIDE TO GAME MUSIC, published by the MIT Press. As a VR game music expert, she writes frequently on the future of music in virtual reality games.

Follow her on Twitter @winphillips.
