Interactive music systems are increasingly important in games. In this article, I discuss two music system prototypes that were revealed at GDC 2016. I also discuss a music system from one of my own released projects: the Spore Hero game (EA).

As a speaker in the audio track of the Game Developers Conference this year, I enjoyed taking in a number of GDC audio sessions -- including a couple of presentations that focused on the future of interactive music in games. I've explored this topic before at length in my book (A Composer's Guide to Game Music), and it was great to see that the game audio community continues to push the boundaries and innovate in this area! Interactive music is a worthwhile subject for discussion, and will undoubtedly be increasingly important in the future as dynamic music systems become more prevalent in game projects. With that in mind, in this blog I'd like to share my personal takeaway from two sessions that described very different approaches to musical interactivity. After that, we'll discuss one of my experiences with interactive music for the video game Spore Hero from Electronic Arts (pictured above).

Baldur Baldursson (pictured left) is the audio director for Icelandic game development studio CCP Games, responsible for the EVE Online MMORPG. Together with Professor Kjartan Olafsson of the Iceland Academy of Arts, Baldursson presented a talk at GDC 2016 on a new system to provide "Intelligent Music For Games."

Baldursson began the presentation by explaining why an intelligent music system for games can be a necessity. "We basically want an intelligent music system because we can't (or maybe shouldn't really) precompose all of the elements," Baldursson explains. He describes the conundrum of creating a musical score for a game whose story is still fluid and changeable, and then asserts, "I think we should find ways of making this better."

Baldursson shared during the course of the talk that the problem of adapting a linear art form (music) to a nonlinear format (interactivity) forces game audio professionals to look for technological solutions to artistic problems. A dynamic music system can best address the issue when it retains the ability to "create the material according to the atmosphere of the game," Baldursson says. "It should evolve according to the actual progression of the game in real time. It should be possible to control various parameters of music simultaneously."

With these goals in mind, Baldursson teamed up with Professor Olafsson (pictured right), who had designed an interactive music system as part of his thesis at Sibelius Academy in Finland in 1988. Olafsson's music system, dubbed CALMUS (or CALculated MUSic), is the product of over twenty years of research and experimentation. "The idea," says Olafsson, "was to make a composing program that could use musical elements and material (as we are doing with pencil and paper), and use this new tool to make experimental music." To this end, Olafsson studied other interactive music systems, such as the one developed by the team at Electronic Arts for the Spore video game (described in greater detail in this Rolling Stone article). After these studies and experiments, Olafsson refined the CALMUS system until "in the end we had this program that could compose music for orchestra, for chamber music, electronic music, and now today we are focusing on realtime composing."

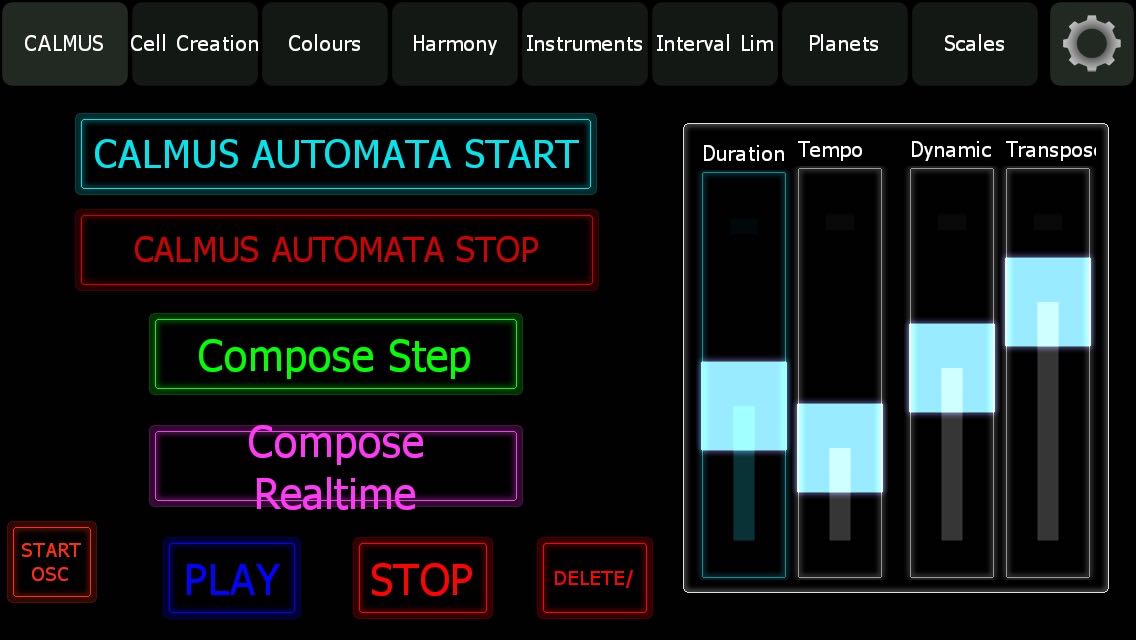

In order to fully exploit the capabilities of the CALMUS system for realtime interactive composition, a game composer must first use the CALMUS application (pictured left) to define the best set of parameters to be used by the system to determine musical events such as themes, harmonies, scales, melodies, textures, tempos, etc. "The algorithms we are using, they take care of putting together tones for harmonies and for melodies," Olafsson says, "and the artificial intelligence system makes it possible for the system to go on, to compose music by itself, but as we are doing it, the composer using the system is defining the framework."
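To make the idea of a composer-defined framework a little more concrete, here is a minimal sketch in Python. It is not the CALMUS algorithm, which the talk did not describe at this level of detail. It simply assumes that the composer fixes a scale, a register and a maximum melodic step, and that a very simple generator (a random walk) then picks notes inside those limits. The `Framework` class, its field names and the `compose` function are all hypothetical.

```python
import random
from dataclasses import dataclass
from typing import Optional


@dataclass
class Framework:
    """Composer-defined limits. All field names here are hypothetical."""
    scale: tuple = (0, 2, 3, 5, 7, 8, 10)  # natural minor, as semitone offsets
    root: int = 57       # MIDI note number for A3
    octaves: int = 2     # how many octaves the melody may span
    max_step: int = 2    # largest move between notes, in scale degrees


def compose(framework: Framework, length: int, seed: Optional[int] = None) -> list:
    """Random walk over the allowed pitches; returns MIDI note numbers."""
    rng = random.Random(seed)
    # Every pitch the framework allows, in ascending order.
    pitches = [
        framework.root + 12 * octave + offset
        for octave in range(framework.octaves)
        for offset in framework.scale
    ]
    index = rng.randrange(len(pitches))
    melody = []
    for _ in range(length):
        melody.append(pitches[index])
        step = rng.randint(-framework.max_step, framework.max_step)
        # Clamp so the walk never leaves the composer's register.
        index = min(max(index + step, 0), len(pitches) - 1)
    return melody


if __name__ == "__main__":
    print(compose(Framework(), length=8, seed=2016))
```

The point of the sketch is the division of labor: the generator makes the note-to-note choices, but it can never step outside the limits the composer wrote into the framework.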

As a part of ongoing testing of the CALMUS software, Olafsson designed a performance-art experiment called "Calmus Waves," in which the movements of dancers were motion-tracked (using the accelerometers, gyroscopes and compasses built into iPhones strapped to the dancers' bodies). This data was used during the dance performance to define the best parameters for musical events (such as melodies, harmonies, etc.) in the CALMUS software. While the dancers moved, the software recorded their movements and translated them into musical algorithms.

These algorithms were then instantaneously translated into sheet music appearing on computer tablets for the musicians who were executing the music live during the dance performance. The result was a reversal of the normal course of creative inception -- instead of the dancers moving in accordance with the shapes and activity of the music, the musicians were playing in accordance with the shapes and activity of the dance. Here's a video that shows this performance in action:

Uses of this generative music technology for video games are still in experimental stages. During the GDC talk, Baldur Baldursson described how his audio team integrated the CALMUS system into prototypes of the EVE Online game, using the audio middleware Wwise from Audiokinetic. "CALMUS feeds the MIDI events into Wwise," Baldursson explains, "which hosts the instruments. Currently the system runs outside Wwise but ideally we're going to have it as a plugin so that we can use it with other games we're making."
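For readers who haven't worked with MIDI directly, here is a small Python sketch of what "feeding MIDI events" to an instrument host can look like at the byte level. The note-on and note-off message layout follows the standard MIDI 1.0 channel voice format. Everything else is an assumption for illustration: the `send` callback is a hypothetical stand-in for whatever transport delivers the bytes to the host, and nothing here represents the Wwise API or the way CCP's prototype was actually wired up.

```python
NOTE_ON = 0x90   # status byte for note-on, channel 0
NOTE_OFF = 0x80  # status byte for note-off, channel 0


def _check(value: int, upper: int, label: str) -> int:
    """Reject values outside the range a MIDI message can carry."""
    if not 0 <= value <= upper:
        raise ValueError(f"{label} must be between 0 and {upper}")
    return value


def note_on(note: int, velocity: int = 64, channel: int = 0) -> bytes:
    """Build a three-byte note-on message."""
    return bytes([
        NOTE_ON | _check(channel, 15, "channel"),
        _check(note, 127, "note"),
        _check(velocity, 127, "velocity"),
    ])


def note_off(note: int, channel: int = 0) -> bytes:
    """Build a three-byte note-off message with release velocity 0."""
    return bytes([
        NOTE_OFF | _check(channel, 15, "channel"),
        _check(note, 127, "note"),
        0,
    ])


def play_chord(notes, send, velocity: int = 64) -> None:
    """Hand one note-on per chord tone to a (hypothetical) transport callback."""
    for note in notes:
        send(note_on(note, velocity))


if __name__ == "__main__":
    outbox = []
    play_chord([57, 60, 64], outbox.append)  # an A minor triad
    print([message.hex() for message in outbox])
```

Because the generator only emits small messages like these, the instruments and their sound can live entirely on the host side, which matches the split Baldursson describes.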

Here's a video showing the prototype of the CALMUS system operating within EVE Online:

The "Intelligent Music for Games" talk was a fascinating exploration of a MIDI-based generative music system. The entire talk is available for viewing by subscribers to the GDC Vault.

In linear media (such as films), the narrative is written ahead of time. With the story fully conceived, the musical score can emotionally prepare the listener for events that have not yet occurred. But how do we achieve the same awesome results in nonlinear media, when the story is fluid, and there is no way to predict future events? In the talk, "Precognitive Interactive Music: Yes You Can!," Microsoft's senior technical audio director Robert Ridihalgh is joined by former Microsoft audio director Paul Lipson. Together, they explore ways to structure music so that it reacts so quickly to in-game changes that it seems to anticipate what's to come.

"We've come up with a modular approach to build the musical content on the fly," says Ridihalgh (pictured left). This modular approach to interactive music has been dubbed Project Hindemith (named for the famous German composer Paul Hindemith). The system converts linear music into interactive components in a number of ways. First, a selection of linear loops is composed. The instrumentation in these loops is subsequently broken down into several submixes -- each representing a different level of energy and excitement. The music system is then able to switch between these submixes as the player progresses and activates triggering points corresponding to the different submix recordings. This collection of loops and triggers results in music that reacts to gameplay in emotionally satisfying ways -- and while these ideas are hardly new, Project Hindemith introduces novelty in the way in which the system utilizes musical transitions.
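The loops-and-submixes portion of this is the familiar part, and it can be sketched in a few lines of Python. This is only an illustration under my own assumptions, not Project Hindemith's code: the submix names, the intensity thresholds and the `LoopPlayer` class are all hypothetical, and a real system would also have to handle the audio crossfade itself.

```python
from bisect import bisect_right

# Hypothetical submix names, ordered from least to most energetic.
SUBMIXES = ["ambient", "low", "medium", "high"]
# Hypothetical intensity values at which the next submix takes over.
THRESHOLDS = [0.25, 0.5, 0.75]


def submix_for(intensity: float) -> str:
    """Pick the submix whose energy level matches a 0.0 to 1.0 intensity."""
    return SUBMIXES[bisect_right(THRESHOLDS, intensity)]


class LoopPlayer:
    """Tracks which submix of the current loop should be audible."""

    def __init__(self) -> None:
        self.current = SUBMIXES[0]

    def on_trigger(self, intensity: float) -> bool:
        """Called when the player hits a trigger point; True means 'switch now'."""
        target = submix_for(intensity)
        changed = target != self.current
        self.current = target
        return changed


if __name__ == "__main__":
    player = LoopPlayer()
    for value in (0.1, 0.3, 0.35, 0.9):
        switched = player.on_trigger(value)
        print(value, player.current, "switch" if switched else "stay")
```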

The ultimate goal of the Hindemith system is to build drama in a satisfying way towards a predetermined "event" - which can be any in-game occurrence that the development team would like to carry extra emotional weight. To make this work, the Hindemith system focuses special attention on the moments occurring shortly before the player would encounter and activate the "event."

In preparing music for the Hindemith system, the video game composer is asked to pore through the musical composition looking for short, declarative segments that may include musical flourishes or escalations. The video game music composer is not asked to create these short musical segments from scratch, but to isolate them from music that was composed in a traditionally linear way.

"Content is extracted from pre-existing cues," says Lipson (pictured right). "Write a beautiful piece of music. Write a linear idea. And then pull from that the content that you need that can then be stitched together."

Copied and isolated from the larger composition, these short chunks of music are now referred to as "Hindebits" in the system. To prepare these short "Hindebits" for use in the interactive music matrix, the composer processes the music chunks into many variations, each one representing a small change in tempo and/or pitch. The Hindemith system then calculates how many of these Hindebits will be required to bridge the gap between the player's current position and the position of the event trigger (towards which these Hindebits are emotionally building). The system is able to string the Hindebits together by calculating their length and extrapolating the remaining time before the player triggers the event.
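The timing arithmetic described here can be illustrated with a short Python sketch. To be clear about the assumptions: the presenters did not share their actual scheduling logic, so the greedy longest-first strategy below is my own stand-in, and the `Hindebit` class, `time_to_event` and `plan_bridge` are hypothetical names. The sketch estimates the time remaining from the player's distance and speed, then queues bits whose lengths add up to no more than that time.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Hindebit:
    """A short musical chunk with a known length (hypothetical structure)."""
    name: str
    seconds: float


def time_to_event(distance: float, speed: float) -> float:
    """Extrapolate the remaining time from the player's current speed."""
    if speed <= 0:
        return math.inf  # the player is not approaching the event
    return distance / speed


def plan_bridge(bits, remaining: float) -> list:
    """Greedily queue bits, longest first, without overshooting the event."""
    if not math.isfinite(remaining):
        return []
    plan = []
    for bit in sorted(bits, key=lambda b: b.seconds, reverse=True):
        if bit.seconds <= 0:
            continue  # skip malformed bits so the loop always terminates
        while bit.seconds <= remaining:
            plan.append(bit)
            remaining -= bit.seconds
    return plan


if __name__ == "__main__":
    bits = [Hindebit("swell", 4.0), Hindebit("rise", 2.0), Hindebit("hit", 1.0)]
    plan = plan_bridge(bits, time_to_event(distance=70.0, speed=10.0))
    print([bit.name for bit in plan])
```

In a live game the estimate would be recomputed as the player speeds up or slows down, and the queue would be rebuilt from whatever has not yet played.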

"It allows us to react to changes in the amount of time that we're anticipating it's going to take a player to get to a particular event," says Ridihalgh. "And on top of that, because it's dynamically changing and it's built up of these bits, we're able to fight repetition because it's different each time."

The system also allows for the creation and utilization of Hindebits that would be activated if the player were to retreat from the event, rather than triggering it. In this way, the musical drama can swell and recede in accordance with the player's decisions, and without requiring the composer to create tons of short musical snippets from scratch. "Let the composers create their vision and then be able to take that vision and put it into a system like this," says Ridihalgh. "This is really a holistic system we've come up with. There's a guidance spec for composers, and a guidance spec for developers... it does work. It can really add to gameplay and how the music supports the entire story of the game."

While Project Hindemith is also in early stages and hasn't been implemented fully in a released game, Lipson and Ridihalgh did mention in their presentation that a simplified version of Project Hindemith was used to enable musical interactivity for the Sunset Overdrive video game (pictured left). We may be able to hear some of this interactivity in the way that the music builds during boss battles - so here is a video containing all of Sunset Overdrive's boss fights. It's a pretty long video, but I was especially able to notice the interactive musical transitions during the first boss fight in this video, which is fought against a creature called "Fizzie."

The "Precognitive Interactive Music: Yes You Can!" talk offered some interesting new ideas pertaining to musical interactivity for game audio implementation. The entire talk is available for viewing by subscribers to the GDC Vault.

We've been talking about some very early prototypes of interactive music systems that haven't yet debuted in released game titles, so let's take a quick look at an example of how musical interactivity works in practical applications. I've worked on quite a few projects featuring interactive music systems, and one of the more interesting of these was the Spore Hero video game from Electronic Arts. The Spore Hero soundtrack featured multiple systems of musical interactivity, as well as special musical minigames that tied the music directly to the gameplay mechanics. One of my favorite interactive music moments was right at the very beginning, during the game's opening menu system. It's a simple interactive music system, and the music happens to be the main theme of the game. While simple, the interactive system is quite satisfying in its execution, and it shows that sometimes musical interactivity can be very straightforward and streamlined, while still providing pleasing reactions to the choices of players.

In the following video, you'll see the Spore Hero main menu system in action. There are three basic musical components to this system -- the Main Menu music, the Battle Menu music, and the Sporepedia Menu music. All three of these tracks present very different executions of the same melodic content for the Spore Hero Main Theme, with different instrumentation and atmosphere. For instance, the Main Menu is by far the most dramatic in its orchestral treatment, while the Sporepedia Menu is quietly ambient and the Battle Menu is jaunty and primitive. Transitions from one of these tracks to another are seamless because they are playing simultaneously in a Vertical Layering system (which we've discussed in previous blogs). The shifts in emotion and intensity between the menus are directly attributable to the emotive swells and dips emanating from the interactive music system. Here's a video that shows this system in action:
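For anyone curious about the mechanics behind Vertical Layering, here is a minimal Python sketch of the general technique. It is not the code that shipped in Spore Hero. The layer identifiers, the `VerticalMixer` class and the linear one-second fade are assumptions made for illustration. The key idea is that all three tracks keep playing in sync, and a menu change only moves their volume levels.

```python
# Hypothetical identifiers for the three synchronized menu tracks.
LAYERS = ("main_menu", "battle_menu", "sporepedia_menu")


class VerticalMixer:
    """Keeps every layer running and fades gains toward the selected one."""

    def __init__(self, active: str = "main_menu", fade_seconds: float = 1.0) -> None:
        self.active = active
        self.fade_seconds = fade_seconds
        self.gains = {name: 1.0 if name == active else 0.0 for name in LAYERS}

    def select(self, name: str) -> None:
        """Called when the player opens a different menu."""
        if name not in self.gains:
            raise KeyError(name)
        self.active = name

    def update(self, dt: float) -> dict:
        """Advance the fade by dt seconds and return the current gains."""
        step = dt / self.fade_seconds
        for name, gain in self.gains.items():
            target = 1.0 if name == self.active else 0.0
            if gain < target:
                gain = min(target, gain + step)
            else:
                gain = max(target, gain - step)
            self.gains[name] = gain
        return dict(self.gains)


if __name__ == "__main__":
    mixer = VerticalMixer()
    mixer.select("sporepedia_menu")
    for _ in range(4):
        print(mixer.update(0.25))
```

Since no track is ever stopped or restarted, the melody stays aligned across all three versions, which is why the transitions can feel seamless.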

On a related note, since Disney's The Jungle Book movie is currently riding high as a number one box office blockbuster, I thought I'd share this video in which the same Spore Hero Main Theme that I composed for the game was subsequently featured prominently in an official trailer in which director Jon Favreau talks about his popular Jungle Book movie.

I think this use of the music that I originally composed for Spore Hero, now heard in the trailer for Disney's The Jungle Book, also demonstrates that interactive music can be written with the kind of emotional contours associated with linear composition for movie soundtracks:

While the systems described in the presentations from this year's Game Developers Conference are prototypes, they offer an interesting glimpse into what ambitious game audio teams will be implementing for musical interactivity in the future. I hope you've enjoyed this blog that explored a few interactive music systems, and please let me know what you think in the comments section below!

Winifred Phillips is an award-winning video game music composer. Her credits include five of the most famous and popular franchises in video gaming: Assassin’s Creed, LittleBigPlanet, Total War, God of War, and The Sims. She is the author of the award-winning bestseller A COMPOSER'S GUIDE TO GAME MUSIC, published by the Massachusetts Institute of Technology Press. Follow her on Twitter @winphillips.
