Technical sound designer Damian Kastbauer breaks down the current generation's innovations in sound design from a technical perspective, outlining what current games can do aurally, and speaks to other developers to find out more.

It's a great time in game audio these days. As we move forward in the current console generation, several emerging examples of best practices in audio implementation have been exposed through articles, demonstrations, and video examples.

Even though in some ways it feels like the race towards next gen has just begun, some of the forward-thinking frontrunners in the burgeoning field of Technical Sound Design have been establishing innovative techniques and pulling off inspirational audio since the starting gun was fired over four years ago with the release of the Xbox 360.

It's a good feeling to know that there are people out there doing the deep thinking in order to bring you some of the richest audio experiences in games available today. In some ways, everyone working in game audio is trying to solve a lot of the same problems.

Whether you're implementing a dynamic mixing system, interactive music, or a living, breathing ambient system, the chances are good that your colleagues are slaving away trying to solve similar problems to support their own titles.

In trying to unravel the mystery of what makes things tick, I'll be taking a deeper look at our current generation of game sound and singling out several pioneers and outspoken individuals who are leaving a trail of interactive sonic goodness (and publicly available information) in their wake. Stick around for the harrowing saga of the technical sound designer in today's multi-platform maelstrom.

Reverb is one area that has been gaining ground since the early days of EAX on the PC platform, and more recently thanks to its omnipresence in audio middleware toolsets.

It has become standard practice to enable reverb within a single game level and apply a single preset algorithm to a subset of the sound mix. Many developers have taken this a step further and created reverb regions that call different reverb presets based on the area in which the player is currently located. This allows the reverb to change based on predetermined locations using predefined reverb settings.

Furthermore, these presets have been extended to areas outside of the player region, so that sounds coming from a different region can use the region and settings of their origin in order to get their reverberant information. Each of these scenarios is valid in an industry where you must carefully balance all of your resources, and where features must play to the strengths of your game design.
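
The region lookup described above can be sketched roughly as follows. This is only an illustration, not how any particular engine or middleware implements it: the `ReverbRegion` type, the preset names, and the axis-aligned box test are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReverbRegion:
    """Hypothetical axis-aligned box tagged with a reverb preset name."""
    name: str
    preset: str
    min_corner: tuple
    max_corner: tuple

    def contains(self, point):
        # A point is inside when every coordinate lies between the two corners.
        return all(lo <= p <= hi
                   for lo, p, hi in zip(self.min_corner, point, self.max_corner))


def preset_for(point, regions, default="outdoor"):
    """Return the preset of the first region containing the point."""
    for region in regions:
        if region.contains(point):
            return region.preset
    return default


# Made-up level layout for illustration only.
REGIONS = [
    ReverbRegion("cave", "large_cave", (0, 0, 0), (10, 5, 10)),
    ReverbRegion("hall", "stone_hall", (20, 0, 0), (40, 8, 15)),
]

player = (5, 1, 5)
gunshot_source = (30, 2, 7)

# Sounds at the player use the player's region...
player_preset = preset_for(player, REGIONS)
# ...while a sound in another region uses the preset of its own origin.
source_preset = preset_for(gunshot_source, REGIONS)
```

Looking up the preset by the sound's position rather than the listener's is what lets a distant gunshot carry the character of the room it was fired in.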

While preset reverb and reverb regions have become standard and are a welcome addition to a sound designer's toolbox, there is still the potential to push further into realtime by calculating the reverb of a sound at runtime, either through the calculation of geometry at the time a sound is played or through the use of convolution reverb.

Leading the charge in 2007 with Crackdown, Realtime Worlds set out to bring the idea of realtime convolution reverb to the front line.

"When we heard the results of our complex Reverb/Reflections/Convolution or 'Audio-Shader' system in Crackdown, we knew that we could make our gunfights sound like that, only in realtime! Because we are simulating true reflections on every 3D voice in the game, with the right content, we could immerse the player in a way never before heard."- Raymond Usher, to Team Xbox

Crackdown

So, what is realtime Reverb using ray tracing and convolution in the context of a per-voice implementation? Here's a quick definition of ray tracing as it applies to physics calculation:

"In physics, ray tracing is a method for calculating the path of waves or particles through a system with regions of varying propagation velocity, absorption characteristics, and reflecting surfaces. Under these circumstances, wavefronts may bend, change direction, or reflect off surfaces, complicating analysis.

Ray tracing solves the problem by repeatedly advancing idealized narrow beams called rays through the medium by discrete amounts. Simple problems can be analyzed by propagating a few rays using simple mathematics. More detailed analysis can be performed by using a computer to propagate many rays." - Wikipedia

On the other side of the coin you have the concept of convolution: "In audio signal processing, convolution reverb is a process for digitally simulating the reverberation of a physical or virtual space. It is based on the mathematical convolution operation, and uses a pre-recorded audio sample of the impulse response of the space being modeled.

To apply the reverberation effect, the impulse-response recording is first stored in a digital signal-processing system. This is then convolved with the incoming audio signal to be processed." - Wikipedia

What you end up with is a pre-recorded impulse response of a space being modified (or convolved) by the ray-traced calculations of the surrounding physical spaces. This allows the sound to communicate a greater sense of location and dynamics in realtime: a sound is triggered from a point in 3D space and reflected off the geometry of the immediate surrounding area.
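
For readers who have not seen the convolution operation itself, here is a minimal sketch of it in plain Python. It is a direct, unoptimized form written for clarity and is not Realtime Worlds' implementation; production convolution reverbs generally rely on much faster FFT-based methods. The toy impulse response is invented for the example.

```python
def convolve(dry, impulse_response):
    """Direct-form discrete convolution of a dry signal with an impulse response."""
    out = [0.0] * (len(dry) + len(impulse_response) - 1)
    for i, sample in enumerate(dry):
        for j, tap in enumerate(impulse_response):
            # Each input sample launches a scaled copy of the impulse response.
            out[i + j] += sample * tap
    return out


# Toy impulse response (an assumption): direct sound, then two quieter reflections.
ir = [1.0, 0.0, 0.5, 0.0, 0.25]

click = [1.0]              # a single-sample click
wet = convolve(click, ir)  # a click simply reproduces the impulse response
```

Feeding a single click through the function returns the impulse response unchanged, which is exactly why a recorded clap or starter pistol in a real room can stand in for that room's reverb.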

You can hear the results of their effort in every gunshot, explosion, physics object, and car radio as you travel through the concrete jungle of Crackdown's Pacific City. It's worth noting that Ruffian Games' Crackdown 2 will be hitting shelves soon, as will Realtime Worlds' new MMO All Points Bulletin.

With a future for convolution reverb implied by recent news of Audiokinetic's Wwise toolset, let's hope the idea of realtime reverb continues to play an integral part in the next steps towards runtime spatialization.

Listen, the snow is falling... In addition to that, my computer is humming, traffic is driving by outside, birds are intermittently chirping, not to mention the clacking of my "silent" keyboard. Life is full of sound. We've all spent time basking in the endless variation and myriad ways in which the world around us conspires to astound and delight with the magic of its soundscape.

Whether it is the total randomness of each footstep, or the consistency of our chirping cell phones, the sound of the world lends a sense of space to your daily life and helps ground you in the moment.

We are taking steps in every console generation toward true elemental randomization, positional significance, and orchestrated and dynamic ambient sounds. Some of the lessons we have learned along the way are being applied in ways that empower the sound designer to make artistic choices in how these sounds are translated into the technical world of game environments.

We are always moving the ball forward in our never-ending attempts at simulating the world around us... or the world that exists only in our minds.

The world of Oblivion can be bustling with movement and life or devoid of presence, depending on the circumstances. The feeling of "aliveness" is in no small part shaped by the rich dynamic ambient textures that have been carefully orchestrated by the Bethesda Softworks sound team. Audio Designer Marc Lambert provided some background on their ambient system in a developer diary shortly before launch:

"The team has put together a truly stunning landscape, complete with day/night cycles and dynamic weather. Covering so much ground -- literally, in this case -- with full audio detail would require a systematic approach, and this is where I really got a lot of help from our programmers and the Elder Scrolls Construction Set [in order to] specify a set of sounds for a defined geographic region of the game, give them time restrictions as well as weather parameters." - Marc Lambert, Bethesda Softworks Newsletter

In a game where you can spend countless hours collecting herbs and mixing potions in the forest, or dungeon crawling while leveling up your character, one of the keys to extending the experience is the idea of non-repetitive activity. Introducing dynamic ambiance can help offset some of the grind the player experiences when tackling the more repetitive and unavoidable tasks.

"[The ambient sound] emphasizes what I think is another strong point in the audio of the game -- contrast. The creepy quiet, distant moans and rumbles are a claustrophobic experience compared to the feeling of space and fresh air upon emerging from the dungeon's entrance into a clear, sunny day. The game's innumerable subterranean spaces got their sound treatment by hand as opposed to a system-wide method." - Marc Lambert, Bethesda Softworks Newsletter

It should come as no surprise that ambiance can be used to great effect in communicating the idea of space. When you combine the use of abstracted soundscapes and level-based tools to apply these sound ideas appropriately, the strengths of dynamics and interactivity can be leveraged to create a constantly changing tapestry that naturally reacts to the environment and parameters.
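
The kind of rule Lambert describes (a set of sounds for a geographic region, restricted by time of day and weather) might be modeled along these lines. This is a hypothetical sketch and not the Elder Scrolls Construction Set's actual data format; the `AmbientRule` fields, sound names, and hours are all assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AmbientRule:
    """Hypothetical rule: one sound, one region, a time window, allowed weather."""
    sound: str
    region: str
    start_hour: int
    end_hour: int
    weather: frozenset

    def active(self, region, hour, weather):
        if region != self.region or weather not in self.weather:
            return False
        if self.start_hour <= self.end_hour:
            return self.start_hour <= hour < self.end_hour
        # The window wraps past midnight (for example 20:00 to 05:00).
        return hour >= self.start_hour or hour < self.end_hour


def active_sounds(rules, region, hour, weather):
    """List the sounds whose rules match the current game state."""
    return [r.sound for r in rules if r.active(region, hour, weather)]


# Made-up rule set for illustration only.
RULES = [
    AmbientRule("birdsong", "forest", 6, 19, frozenset({"clear", "cloudy"})),
    AmbientRule("crickets", "forest", 20, 5, frozenset({"clear"})),
    AmbientRule("rain_on_leaves", "forest", 0, 24, frozenset({"rain"})),
]

noon_clear = active_sounds(RULES, "forest", 12, "clear")
```

Because the rules are plain data, a sound designer can add or retune them without touching the code that evaluates them.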

Similarly, in Fable II, the sound designers were able to "paint ambient layers" directly onto their maps. In a video development diary, Lionhead audio director Russel Shaw explains:

"I designed a system whereby we could paint ambient layers onto the actual Fable II maps. So that as you're running through a forest for instance, we painted down a forest theme, and the blending from one ambiance to another is quite important, so the technology was lain down first of all." - Russel Shaw, posted by Kotaku

Fable II
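
Shaw does not say how the blending between painted ambiances was done, so the following is only a sketch of one common audio technique that could serve: an equal-power crossfade. The `blend` value stands in for how far the player has moved from one painted layer into the next; that parameter and the layer names are assumptions.

```python
import math


def equal_power_gains(blend):
    """Gains for two layers at a blend position between 0.0 and 1.0.

    0.0 means fully in the first layer and 1.0 fully in the second. The two
    gains always satisfy a*a + b*b == 1, so perceived loudness stays roughly
    constant through the transition.
    """
    t = min(max(blend, 0.0), 1.0)
    angle = t * math.pi / 2.0
    return math.cos(angle), math.sin(angle)


# Halfway between a hypothetical forest layer and a hypothetical village layer.
forest_gain, village_gain = equal_power_gains(0.5)
```

A plain linear crossfade would dip in loudness at the midpoint, which is why the equal-power curve is often preferred for ambience transitions.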

In what could be seen as another trend in the current console cycle, enabling the sound designers to handle every aspect of sound and the way it is used by the game is just now becoming common. The ability to implement with little to no programmer involvement outside of the initial system design, setup, and toolset creation is directly in contrast to what was previously a symbiotic relationship requiring a higher level of communication between all parties.

In the past, it was not uncommon to create sound assets and deliver them with a set of instructions to a programmer. A step removed from the original content creator, the sounds would need to be hand coded into the level at the appropriate location and any parametric or transition information hard coded deep within the engine.

It is clearly a benefit to the scope of any discipline to be able to create, implement, and execute a clear vision without a handoff between departments to accomplish the task. In this way I feel like we are gaining in the art of audio implementation and sound integration -- by putting creative tools in the hands of the interactive-minded sound designers and implementation specialists who are helping to pave the way for these streamlined workflows.

As we continue to move closer towards realistically representing a model of reality in games, so should our worlds react and be influenced by sound and its effect on these worlds. In Crysis, developer Crytek has made tremendous leaps towards providing the player with a realistic sandbox in which to interact with the simulated world around them. In a presentation at the Game Developers Conference in 2008 Tomas Neumann and Christian Schilling explained their reasoning:

"Ambient sound effects were created by marking areas across the map for ambient sounds, with certain areas overlapping or being inside each other, with levels of priority based on the player's location. 'Nature should react to the player,' said Schilling, and so the ambiance also required dynamic behavior, with bird sounds ending when gunshots are fired." - Gamasutra

In a game where everything is tailored towards immersing the player in a living, breathing world, this addition was a masterstroke of understatement from the team and brings a level of interactivity that hadn't been previously experienced.

Audio Lead Christian Schilling went on to explain the basic concept and provide additional background when contacted:

"Sneaking through nature means you hear birds, insects, animals, wind, water, materials. So everything -- the close and the distant sounds of the ambiance. Firing your gun means you hear birds flapping away, and silence.

"Silence of course means, here, wind, water, materials, but also -- and this was the key I believe -- distant sounds (distant animals and other noises). We left the close mosquito sounds in as well, which fly in every now and then -- because we thought they don't care about gun shots.

"So, after firing your gun, you do hear close noises like soft wind through the leaves or some random crumbling bark of some tree next to you (the close environment), all rather close and crispy, but also the distant layer of the ambiance, warm in the middle frequencies, which may be distant wind, the ocean, distant animals -- [it doesn't] matter what animals, just distant enough to not know what they are -- plus other distant sounds that could foreshadow upcoming events.

"In Crysis we had several enemy camps here and there in the levels, so you would maybe hear somebody dropping a pan or shutting a door in the distance, roughly coming from the direction of the camp, so you could follow that noise and find the camp.

"It was a fairly large amount of work, but we thought, 'If the player chooses the intelligent way to play -- slowly observing and planning before attacking -- he would get the benefits of this design.'"

In this way, they have chosen to encourage a sense of involvement with the environment by giving the ambient soundscape an awareness of the sounds the player is making. The level of detail they attained is commendable, and has proven to be a forward thinking attempt at further simulating reality through creative audio implementation.
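
The behavior Schilling describes can be approximated with a small state tracker. This is a hypothetical sketch and not Crytek's system: the layer names, the on/off gains, and the ten-second quiet period are all assumptions chosen for the example.

```python
class ReactiveAmbience:
    """Hypothetical ambience that silences nearby birds after a gunshot."""

    def __init__(self, quiet_seconds=10.0):
        self.quiet_seconds = quiet_seconds
        self.time_since_shot = float("inf")  # no shot fired yet

    def on_gunshot(self):
        self.time_since_shot = 0.0

    def update(self, dt):
        """Advance the timer by dt seconds of game time."""
        self.time_since_shot += dt

    def layer_gains(self):
        startled = self.time_since_shot < self.quiet_seconds
        return {
            "close_birds": 0.0 if startled else 1.0,
            "close_insects": 1.0,         # mosquitoes ignore gunshots
            "wind_water_materials": 1.0,  # the "silence" that remains
            "distant": 1.0,               # distant layer keeps playing
        }


ambience = ReactiveAmbience(quiet_seconds=10.0)
before = ambience.layer_gains()
ambience.on_gunshot()
after = ambience.layer_gains()
```

A shipping system would presumably fade the layers and trigger a one-shot of birds flapping away rather than hard-switching gains, but the core idea is the same: the ambience listens to what the player does.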

If we really are stretching to replicate a level of perceived reality with video games, then we must give consideration to every aspect of an activity and attempt to model it realistically in order to convey information about what the gameplay is trying to tell us. When we can effectively model and communicate the realistic sounds of the actions portrayed on screen, then we can step closer towards blurring the line between the player and their interactions.

What we are starting to see pop up more frequently in audio implementation is an attempt to harness the values of the underlying simulation and use them to take sound to a level of subtlety and fidelity that was previously either very difficult or impossible to achieve due to memory budget or CPU constraints.

It's not uncommon for someone in game audio to comment and expound on the "tiny detail" that they enabled with sound to enhance the gameplay in ways that may not be obvious to the player. While previously encumbered by RAM allocation, streaming budgets, and voice limitations, we are now actively working to maximize the additional resources available to us on each platform. Part of utilizing these resources is the ability to access runtime features and parameters to modify the existing sample based content using custom toolsets and audio middleware to interface with the game engine.

Ghostbusters

In the Wii version of Ghostbusters, the Gl33k audio team handled the content creation and implementation. One of the ways they were able to leverage real-time parameter control (RTPC) functionality was by changing the mix based on various states:

"The 'in to goggle' sound causes a previously unheard channel to rise to full volume. This allowed us to create much more dramatic flair without bothering any programming staff." The PKE Meter was "Driven by the RTPC, which also slightly drives the volume of the ambient bus.

"Ghost vox were handled using switch groups, since states would often change, but animations did not. Many of the states and sounds associated with them that we wanted to happen and come across, did not actually have any specific animations to drive them, so we actually ended up going in and hooking up state changes in code to drive whatever type of voice FX we wanted for the creature. This helped give them some more variety without having to use up memory for specific state animations." - Jimi Barker, Ghostbusters and Wwise, on Vimeo

In Namco Bandai's Cook or Be Cooked, says Barker via email, "I tied [RTPC] in with the cooking times, so when a steak sizzles, it actually sounds more realistic than fading in a loop over time. This allowed me to actually change the state of the sound needed over time to give a more realistic representation of the food cooking as its visual state changed. It's totally subtle, and most people will never notice it, but there's actually a pretty complicated process going on behind that curtain.

"I (had) roughly four states per cookable object that went from beginning, all the way through burned. There were loops for each of those states that fed into each other. These were also modified with one-shots -- for example, flipping an object or moving it to the oven. We tried to provide as much variation as we could fit into the game, so almost every sound has a random container accompanied with it."

Similarly, with the FMOD Designer toolset on Nihilistic Software's Conan, the developers were able to use the distance parameter to adjust DSP settings based on the proximity of an object to the player. In one example, a loop was positioned at the top of a large waterfall far across a valley with a shallow LPF that gradually (over the course of 100 meters) released the high frequencies.

As the player approaches, the filter gradually opens up on the way toward two additional waterfall sources placed underneath a bridge directly in front of the waterfall. The additional sources had a smaller rolloff with a steeper LPF applied and were meant to add diversity to the "global" sound.

Combined, the shifting textures and frequencies of the three sounds feel massive as you battle your way across the bridge, adding a sense of audio drama to the scenario, which you can view here.
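
A distance-driven low-pass filter like the one on the far waterfall can be sketched as a simple mapping from distance to cutoff frequency. This is not FMOD Designer's API or the actual Conan data; the function name and the cutoff values are assumptions, with only the 100-meter range taken from the description above.

```python
def lpf_cutoff_hz(distance_m, near_hz=20000.0, far_hz=2000.0, range_m=100.0):
    """Map listener distance to a low-pass cutoff frequency in Hz.

    The cutoff is interpolated on a logarithmic scale so the filter seems to
    open at an even rate as the player approaches. All default values are
    illustrative assumptions.
    """
    t = min(max(distance_m / range_m, 0.0), 1.0)
    return near_hz * (far_hz / near_hz) ** t


# Cutoff as the player walks from across the valley up to the waterfall.
cutoffs = [lpf_cutoff_hz(d) for d in (100, 75, 50, 25, 0)]
```

Interpolating in the logarithmic domain matters because pitch and brightness are heard in ratios; a linear ramp in Hz would open most of the audible range in the last few meters.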

Whereas parameter values have always existed behind the screen, they have not always been as readily available to be harnessed by audio. The fact that we are at a place in the art of interactive sound design where we can make subtle sound changes based on gameplay in an attempt to better immerse the player is a testament to the power of current generation audio engines and the features exposed from within toolsets.
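
As one illustration of a gameplay value driving sound, Barker's cooking example (roughly four looping states per cookable object, from beginning through burned) could be sketched as a lookup from cooking progress to the loop that should be playing. This is not the Wwise API or the shipped game's data; the thresholds and loop names are hypothetical.

```python
from bisect import bisect_right

# Hypothetical cooking-progress thresholds at which each state begins
# (0.0 = just placed on the heat, 1.0 = burned) and the loop for each state.
STATE_STARTS = [0.0, 0.25, 0.6, 1.0]
STATE_LOOPS = ["sizzle_raw", "sizzle_cooking", "sizzle_done", "sizzle_burned"]


def loop_for_progress(progress):
    """Pick the loop for the current cooking progress."""
    index = bisect_right(STATE_STARTS, progress) - 1
    return STATE_LOOPS[max(index, 0)]


# Sample the mapping over the life of one steak.
timeline = [loop_for_progress(p) for p in (0.0, 0.3, 0.7, 1.2)]
```

In middleware the same idea is usually authored as a parameter curve or a set of states rather than written in code, which is what allows the sound designer to tune it without programmer involvement.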

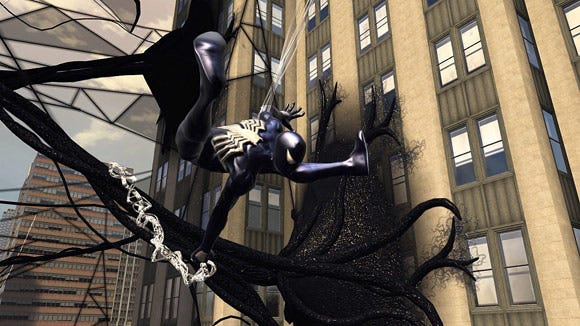

In 2008's Spider-Man: Web of Shadows, Shaba Games lead sound designer Brad Meyer was able to use the player character's good/evil affinity, in addition to the dynamic "mood" of each level, to determine the sound palette used, as well as the sound of the effects, using the Wwise toolset.

By tying the transition between Spider-Man and Venom to a switch/state in Wwise, a DSP modification of the sounds triggered could be applied. The change could be easily auditioned with the flip of a switch within the Wwise toolset, allowing for prototyping in parallel and outside the confines of the game engine and gameplay iteration. This ability to mock up features is a key component in the current generation, where iteration and polish allow for the development of robust audio systems and highly specialized sound design.

Spider-Man: Web of Shadows

"To explain what I [ended up doing] on the implementation side... was drop the pitch of Spider-Man's sounds by a couple semitones when he switched to the Black Suit, and also engaged a parametric EQ to boost some of the low-mid frequencies. The combination of these two effects made the Black Suit sound stronger and more powerful, and Red Suit quicker and more graceful.

"The effect was rather subtle, in part because it happens so often I didn't want to fatigue the player's ears with all this extra low frequency information as Black Suit, but I think it works if nothing else on a subliminal level." - Brad Meyer, via email

It makes sense that with a powerful prototyping toolset at the sound designer's disposal, the ability to try out various concepts in realtime without the aid of a fully developed game engine can be a thing of beauty.

By enabling the rapid iteration of audio ideas and techniques during development, we can continue to reach for the best possible solution to a given problem, or put differently, we can work hard towards making sure that the sound played back at runtime best represents the given action in-game.
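
To make Meyer's Black Suit treatment concrete, here is a sketch of the arithmetic behind a pitch drop in semitones and an EQ boost in decibels. It is not the Wwise configuration he used; the settings table is hypothetical, with -2 semitones standing in for "a couple" and the 3 dB boost being an arbitrary illustrative value.

```python
def semitones_to_rate(semitones):
    """Playback-rate multiplier for a pitch shift in equal-tempered semitones."""
    return 2.0 ** (semitones / 12.0)


def decibels_to_gain(db):
    """Linear amplitude multiplier for a gain change in decibels."""
    return 10.0 ** (db / 20.0)


# Hypothetical per-suit settings, selected by a switch/state in the game.
SUIT_SETTINGS = {
    "red": {"pitch_semitones": 0.0, "low_mid_boost_db": 0.0},
    "black": {"pitch_semitones": -2.0, "low_mid_boost_db": 3.0},
}


def playback_settings(suit):
    """Convert a suit's authored settings into multipliers a mixer could apply."""
    settings = SUIT_SETTINGS[suit]
    return {
        "rate": semitones_to_rate(settings["pitch_semitones"]),
        "low_mid_gain": decibels_to_gain(settings["low_mid_boost_db"]),
    }


black = playback_settings("black")
```

A two-semitone drop works out to a playback rate of roughly 0.89, small enough to read as "heavier" without sounding like a different character.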

In the field of Technical Sound Design there is a vast array of potential at the fingertips of anyone working in game audio today. Under the surface and accessed directly through toolsets, the features available help bring sample-based audio closer towards interactivity. In what can sometimes be a veiled art, information on implementation techniques has at times been difficult to come by.

We are truly standing on the shoulders of the giants who have helped bring these ideas out in the open for people to learn from. It is my hope that by taking the time to highlight some of the stunning examples of interactive audio, we can all continue to innovate and drive game audio well into the next generation.
