This article focuses on the unique technical and aesthetic challenges involved in making our audio-reactive action game, Suara. Sound and music played a critical role in the overall design of the game as gameplay reacts dynamically to the game's score.

The Soundscape of Suara: An Audio-Reactive Action Game

by Greg Dixon and Nicholas Gulezian

Suara is a 3D action arcade game that takes place on the surface of audio-reactive geometric worlds. Fight waves of enemies, defeat bosses, and bring life back to the Geoverse.

Suara’s sound effects and epic synthesized music soundtrack evoke an abstract, surreal, and alien sonic landscape. The music is written with a focus upon the player’s continual exploration and their struggle against evil.

Our team consisted of 9 programmers, 2 designers, and 1 composer/sound designer. Suara was developed in our own custom C++ engine, where we used DirectX for graphics, Audiokinetic Wwise for audio, and Bullet for physics. Because the team was programmer-heavy, we could dedicate 3 members to graphics; their custom code and shaders gave Suara its look without any artists on the team.

This post will focus upon the unique creative and technical challenges involved in making an audio-reactive action game. Sound and music played a critical role in the game’s overall design. Suara has a unique soundscape where the line between music and sound design is distinctly blurred. Creating a soundscape where sound effects and music relate in a cohesive, aesthetically pleasing manner is difficult, and along the way we also ran into several limitations in designing the music system.

The game’s enemies, environments, and in-game animations use Wwise middleware along with code to make gameplay react dynamically to the musical score’s tempo changes. In particular, the mechanics of Suara's enemies are driven by the tempo of the background music. To achieve this, we first apply tempo metadata to each of the game’s musical segments in Wwise. Using Wwise’s callback functionality, we then send tempo data and rhythmic beat timing data to the game’s engine, which is designed to react to these tempo changes in real time. During most portions of gameplay, Suara’s mechanics are directly driven by the music’s tempo. Suara also uses Wwise metering plug-ins to monitor the game’s musical dynamics and tie them to animations, colors, and light.
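As a rough illustration of the arithmetic behind this, a tempo in beats per minute converts to a beat length of 60 / BPM seconds. The sketch below is written in Python rather than the engine's C++ and does not use the Wwise API; `beat_seconds`, `bar_seconds`, and the `Enemy` class are hypothetical names, and `on_tempo` stands in for whatever handler receives the tempo reported by the middleware.

```python
def beat_seconds(bpm: float) -> float:
    """Length of one beat in seconds at the given tempo."""
    return 60.0 / bpm


def bar_seconds(bpm: float, beats_per_bar: int = 4) -> float:
    """Length of one measure in seconds (4 beats per bar assumed by default)."""
    return beats_per_bar * beat_seconds(bpm)


class Enemy:
    """Hypothetical enemy whose movement step always lasts one beat."""

    def __init__(self) -> None:
        self.step_seconds = None  # unknown until the first tempo report

    def on_tempo(self, bpm: float) -> None:
        # Called whenever the audio side reports a new tempo.
        self.step_seconds = beat_seconds(bpm)
```

With this mapping, a faster track directly shortens every enemy step, which matches the behavior described above.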

Some of Suara’s levels feature background music that has a steady, consistent tempo, while other levels feature gradually increasing or decreasing tempi. When the background music gets faster, enemies move and attack faster, and gameplay generally gets more difficult. This flexible approach to our music’s tempo is reflected in the game world’s graphics as well; the music and visuals coexist to give the player a feeling of shifting, malleable space and time.

One limitation we encountered in Wwise was that PCM audio music assets could only be assigned a single tempo marking in their metadata. This tempo metadata is what drives the callbacks Wwise uses to communicate with the game engine. For this reason, to make tempo changes work, we segmented each music track at every tempo change. To create steadily increasing or decreasing tempo shifts, the segments are concatenated so that each flows into the next without any pause in between.
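To make the segmentation concrete, here is a minimal sketch of how a gapless chain of single-tempo segments lines up in time. The `Segment` type, the segment names, the tempi, and the bar counts are all invented for illustration (the actual values in Suara's Wwise project are not given here), and 4 beats per bar is assumed.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    name: str   # hypothetical naming scheme with the BPM in the name
    bpm: float  # the single tempo marking this segment carries
    bars: int   # segment length in measures


def segment_start_times(segments, beats_per_bar=4):
    """Return (start time of each segment, total length), in seconds.

    Segments are played back to back, so each one starts exactly
    where the previous one ends.
    """
    starts = []
    t = 0.0
    for seg in segments:
        starts.append(t)
        t += seg.bars * beats_per_bar * 60.0 / seg.bpm
    return starts, t


# An accelerating track: every segment is slightly faster than the last.
TRACK = [
    Segment("music_120bpm", 120, 2),
    Segment("music_125bpm", 125, 2),
    Segment("music_130bpm", 130, 2),
]
```

Because each segment covers the same number of bars at a higher tempo, later segments are shorter in seconds, which is what produces the gradual speed-up.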

The following video example shows a music track where tempo gradually increases over time. One can see how the track is segmented in Wwise by watching the small arrow as it moves from segment to segment, along with the beats per minute that we use in the name of each music segment.

The overall movement cycle for enemies is based upon measures (bar lengths) or beats. In designing Suara’s music system, we opted to limit tempo changes so that they only happen on the downbeat of a new measure. This simplified the game’s mechanics: tempo changes never arrive too quickly, and the tempo never updates in the midst of an enemy’s four-beat movement cycle.
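The text does not say how this rule is enforced (it may simply follow from how the music is segmented). One possible engine-side way to express the same constraint is to hold a requested tempo until the next downbeat. The `TempoGate` class below is a hypothetical sketch of that idea, assuming 4 beats per bar.

```python
class TempoGate:
    """Holds a requested tempo change until the next downbeat."""

    def __init__(self, bpm, beats_per_bar=4):
        self.bpm = bpm
        self.beats_per_bar = beats_per_bar
        self.beat_in_bar = 0      # 0 means the next beat is a downbeat
        self.pending_bpm = None   # tempo waiting for the next downbeat

    def request_tempo(self, bpm):
        self.pending_bpm = bpm

    def on_beat(self):
        """Call once per beat; returns the tempo in force for that beat."""
        if self.beat_in_bar == 0 and self.pending_bpm is not None:
            self.bpm = self.pending_bpm
            self.pending_bpm = None
        active = self.bpm
        self.beat_in_bar = (self.beat_in_bar + 1) % self.beats_per_bar
        return active
```

A tempo requested on beat 2 of a measure therefore leaves beats 2, 3, and 4 untouched, so a four-beat enemy cycle always completes at one tempo.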

Our cube character, the Bruiser, moves according to an overall beat structure: it turns on the beat, and it jumps and lands on the beat. Each jump is also preceded by a preparatory period that lasts one beat. The sound design for the Bruiser is made up mostly of the sounds of musical instruments, including large thumping tom-toms, tympani rolls, and tympani glissandi, augmented by some FM sound synthesis applied to the Bruiser’s landing.

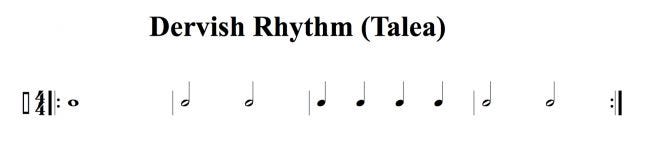

Our shooting enemy, the Dervish, has a basic shot cycle comprising 4 measures consisting of a whole note, two half notes, four quarter notes, and two half notes (a total of 9 rhythmic units).

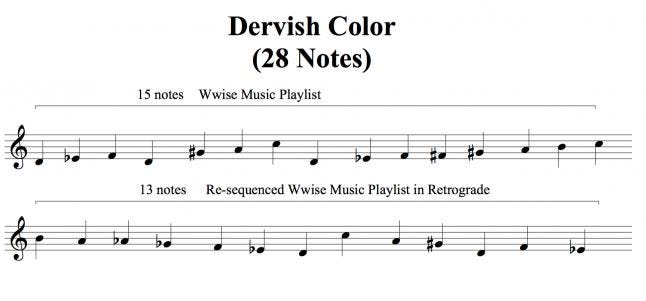
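The shot cycle described above can be written out as durations in beats. This is only a sketch: it assumes 4/4 time and that a shot fires at the start of each rhythmic unit, and the names `SHOT_CYCLE_BEATS` and `shot_onsets` are made up for the example.

```python
# Whole note, two half notes, four quarter notes, two half notes,
# expressed as lengths in quarter-note beats.
SHOT_CYCLE_BEATS = [4, 2, 2, 1, 1, 1, 1, 2, 2]


def shot_onsets(durations):
    """Beat offset at which each rhythmic unit starts within the cycle."""
    onsets = []
    t = 0
    for d in durations:
        onsets.append(t)
        t += d
    return onsets


ONSETS = shot_onsets(SHOT_CYCLE_BEATS)  # [0, 4, 6, 8, 9, 10, 11, 12, 14]
```

The durations add up to 16 beats, which is exactly four measures of 4/4, so the cycle always restarts on a downbeat.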

Each Dervish shot plays back a single musical note made using a synthetic clarinet-like instrument. The Dervish shots are placed into a Wwise music playlist container that features the following sequence:

The Wwise music playlist container holds 15 notes and is played back by step. We use Wwise’s ability to reverse the order of playback when the end of the music playlist is reached. The retrograde version of the sequence comprises 13 notes, since the first and last notes of the sequence are not repeated, so reversing the order extends the sequence to a total of 28 notes. These 28 notes are played back rhythmically based upon the 9 rhythmic units of the shot cycle. Our technique mimics the isorhythmic motets by composers that range back to the 13th century. The repeated pitch sequence (color) of 28 notes is not evenly divisible by the rhythmic sequence (talea) of 9 units, and therefore this algorithmic music applied to the Dervish shot creates a rich and multifaceted set of melodies and rhythms that do not repeat for very long stretches of time. Here is an example of a single Dervish firing at the player. Listen to the shot sound and how it generates a rich and varied melody that plays on top of the background music.

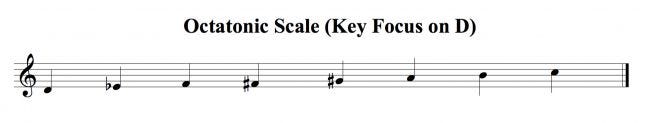
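The counting behind the 28-note color and the long repeat period can be checked with a few lines. This sketch models the forward-then-reverse playback as a plain list operation, not as a Wwise call, and uses note indices 0 to 14 in place of the actual pitches, which are not listed here.

```python
from math import gcd


def ping_pong(seq):
    """Forward pass followed by the reverse pass, without repeating
    the first and last elements."""
    return list(seq) + list(seq[-2:0:-1])


def realign_after(color_len, talea_len):
    """Number of notes before pitch and rhythm cycles line up again
    (the least common multiple of the two lengths)."""
    return color_len * talea_len // gcd(color_len, talea_len)


COLOR = ping_pong(list(range(15)))  # 15 forward + 13 backward = 28 notes
TALEA_LEN = 9                       # rhythmic units per shot cycle
PERIOD_NOTES = realign_after(len(COLOR), TALEA_LEN)  # 252 notes
PERIOD_CYCLES = PERIOD_NOTES // TALEA_LEN            # 28 shot cycles
```

Since 28 and 9 share no common factor, the melody and rhythm only realign after 252 notes, that is, 28 shot cycles or 112 measures at 4 measures per cycle.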

Almost all of the background music for Suara is composed using a single octatonic (8-note) scale with the note D as the central key focus. The octatonic scale is rich with both melodic and harmonic potential; like diatonic scales, it can be used to create bright and regal music as well as dark and dissonant music. The symmetrical nature of the scale means that it’s not governed by the dominant-to-tonic tension and release inherent to diatonic scales. This exotic scale complements the strange, abstract, surreal atmosphere of the game, and its symmetry exhibits a sense of stasis that complements each of Suara’s unique geometric levels.
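For readers less familiar with the scale, the symmetry can be shown with pitch classes (C = 0, D = 2, and so on). An octatonic scale alternates whole and half steps; which of the two orderings Suara uses on D is not stated, so this sketch builds both. The function names are hypothetical.

```python
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def octatonic(root_pc, first_step):
    """Eight pitch classes alternating half (1) and whole (2) steps.

    first_step is 1 for half-whole or 2 for whole-half ordering.
    """
    if first_step not in (1, 2):
        raise ValueError("first_step must be 1 or 2")
    steps = [first_step, 3 - first_step] * 4
    pcs = [root_pc]
    for s in steps[:-1]:  # the final step just returns to the root
        pcs.append((pcs[-1] + s) % 12)
    return pcs


def transpose(pcs, semitones):
    return [(pc + semitones) % 12 for pc in pcs]


D_HALF_WHOLE = octatonic(2, 1)
D_WHOLE_HALF = octatonic(2, 2)
```

Transposing either scale by a minor third (3 semitones) yields the same set of notes, which is the symmetry that removes the usual dominant-to-tonic pull.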

The Dervish’s melody is based upon this exact musical scale, also with a focus on the note D. When developing the game, the composer wanted to keep things flexible for the game designers so they could place the Dervish enemy into the game at any point in time. If the game’s background music were to incorporate other types of scales, then the Dervish’s shot melodies would have to modulate to accommodate these changes of key. This seemed like a difficult and time-consuming feat outside of the scope of our project, so rather than going that route, all background music tracks are limited to one scale. By composing the background music for the game with care and within these firm limitations, the Dervish’s melodies can complement the musical soundtrack at any given moment.

While the game’s reliance upon 4/4 rhythmic time (a simple meter) is a limitation, it’s still possible to write music using a compound meter based upon a 4-beat structure, i.e. 12/8. This allows the composer to create more rhythmic contrast by subdividing the game’s beat by either 2 or 3. For example, Suara’s demo level exhibits a 12/8 time signature.

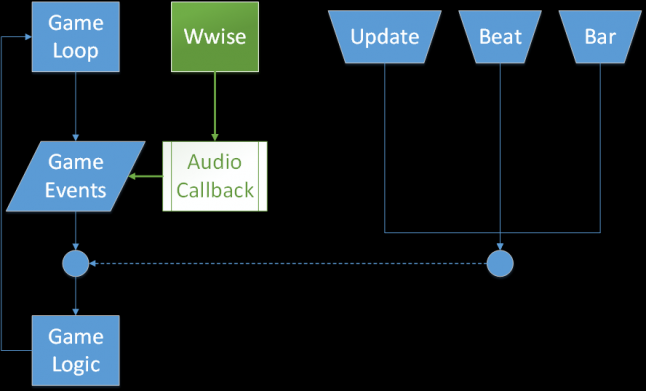

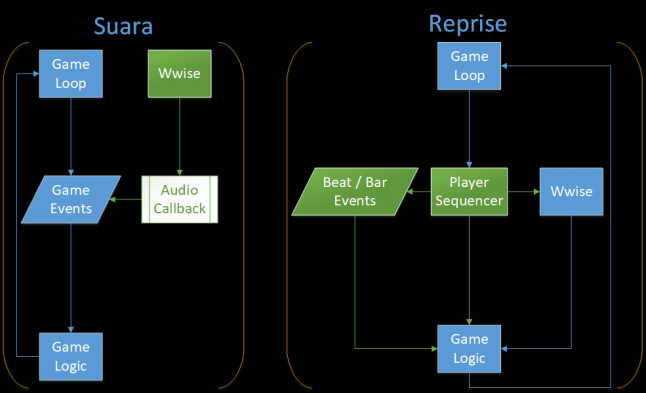

To get all of this working in engine, we had to create a custom system. This diagram shows a very high-level overview of how our engine was structured.

We have our normal game loop where events occur each frame, and each event executes its respective game logic to make the gameplay work. Using specific callbacks from Wwise, we were able to intercept information about the music, such as when beat events and bar events occur. These events alongside our normal Update event were the 3 main driving events in our engine.

Once we had this information, we were able to use it to sync gameplay with the music playing at that moment. All of the enemy movements and attacks were based on beats and bars. Since we had the duration of both beats and bars, we were able to make sure all of the animations started and stopped at specific moments, and lasted a specific duration. We created a very flexible event and tweening system, which allowed us to not only change parameters over time, but also to have sequences of functions over time for animations.
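A minimal sketch of the idea, assuming three named events and a linear tween: the real engine is custom C++ and its event and tweening APIs are not shown here, so `EventBus`, `Tween`, and the `Hopper` enemy are hypothetical stand-ins.

```python
from collections import defaultdict


class EventBus:
    """Tiny publish/subscribe hub for "update", "beat", and "bar" events."""

    def __init__(self):
        self._handlers = defaultdict(list)

    def subscribe(self, name, handler):
        self._handlers[name].append(handler)

    def emit(self, name, *args):
        for handler in self._handlers[name]:
            handler(*args)


class Tween:
    """Linearly interpolates from start to end over a fixed duration."""

    def __init__(self, start, end, duration):
        self.start, self.end, self.duration = start, end, duration
        self.elapsed = 0.0

    def update(self, dt):
        self.elapsed = min(self.elapsed + dt, self.duration)
        t = self.elapsed / self.duration
        return self.start + (self.end - self.start) * t

    @property
    def done(self):
        return self.elapsed >= self.duration


class Hopper:
    """Hypothetical enemy: every beat starts a rise lasting exactly one beat."""

    def __init__(self, bus):
        self.height = 0.0
        self.tween = None
        bus.subscribe("beat", self.on_beat)
        bus.subscribe("update", self.on_update)

    def on_beat(self, beat_duration):
        # The beat event carries its own duration, so the animation
        # automatically stretches or shrinks with the tempo.
        self.tween = Tween(0.0, 1.0, beat_duration)

    def on_update(self, dt):
        if self.tween is not None:
            self.height = self.tween.update(dt)
```

The beat event decides when an animation starts and how long it lasts, while the per-frame update only advances whatever is already in flight.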

Working on a game like Suara caused us to develop a different mindset when implementing game logic. Instead of everything happening each frame on Update as usual, we started asking ourselves: What happens each beat? What happens each bar? What data do we need to know on Update? What is restricted to tempo? These questions really helped get the programmers and designers to think in parallel with the music of the game, so we could all be on the same page. The music directly changes the gameplay, so we had to make the logic work with whatever audio data was thrown at it.

Once these main systems were implemented, it was easy to simply test with new music and see how the game played differently. We had to keep in mind that since the music directly changes the game, the overall level design was kept to world shapes and hand-crafted waves of enemies; none of the other gameplay logic could be hand-placed or hard-coded. We took a little more liberty with the boss sequences, but overall the gameplay itself was based on the music.

Player agency in music/rhythm games is an important element that operates across a very wide gamut of design, ranging from strict rhythmic accuracy (Crypt of the NecroDancer / Patapon) to totally free player agency (Suara / Ape Out), where imprecise or freely timed player inputs do not directly decrease the player’s chances of winning.

Most music/rhythm games operate on a fundamental beat or tempo principle that guides the player with audible feedback about whether they are doing the right or wrong rhythmic pattern to progress and win. This audible feedback is often dependent on how closely the player matches their actions to the game’s rhythmic structure determined by factors such as game audio synchronization and the music’s tempo, beat, or rhythmic patterns.

Suara was at first intended to be a music/rhythm game that involved two conflicting elements: a game world where enemies are directly driven by music with a rigid (albeit malleable) tempo, juxtaposed against player mechanics governed ultimately by free agency (i.e. an action game). The player’s timing and agency follow completely different laws, outside of the gameplay music’s basic rhythm or tempo.

Our initial approach to the game’s design embraced the player’s free agency, which ultimately led to flattening out the design of the player’s attacks; no matter when the player attacked, they dealt the same amount of damage. As the game evolved, the player’s mechanics were given more attention and new systems were brought in to make gameplay more engaging, fun, and challenging. These new systems included giving the player a bonus to the strength of their attack when they attacked accurately on the beat, as well as further strength bonuses for consistently (and with rhythmic accuracy) hitting consecutive musical beats.

Rhythmic attack bonuses accumulate until the player has matched four beats in a row, and then the count starts again.
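The bonus rule can be sketched as a small streak counter. The numbers here are placeholders, not Suara's tuning: the timing window, base damage, and linear bonus are assumptions, as is the choice to reset the streak on an off-beat attack. `BeatCombo` is a hypothetical name.

```python
class BeatCombo:
    """Tracks on-beat attack streaks, capped at four in a row."""

    def __init__(self, base_damage=10, window=0.08, max_streak=4):
        self.base_damage = base_damage
        self.window = window          # seconds of tolerance around a beat
        self.max_streak = max_streak
        self.streak = 0

    def attack(self, offset_from_beat):
        """offset_from_beat: signed seconds between attack and nearest beat."""
        if abs(offset_from_beat) <= self.window:
            self.streak += 1
            damage = self.base_damage + self.streak
            if self.streak == self.max_streak:
                self.streak = 0       # after four in a row, start again
            return damage
        # Off-beat attacks are never penalized: they deal base damage.
        self.streak = 0
        return self.base_damage
```

Five perfectly timed attacks in a row would deal 11, 12, 13, 14, and then 11 again under these placeholder values, while a free attack always deals the base 10.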

The game evolved to where it may be impossible to beat the game without using these rhythmic accuracy bonuses augmenting the strength of the player’s attacks. Ultimately, to make the game’s design more fun, we ended up utilizing timed player actions, which are at the heart of so many music/rhythm games. However, from a gameplay standpoint the player is never penalized for attacking freely; their actions are simply strengthened when they are performed accurately along with the background music’s beat.

Our approach to designing the player’s attacks was inspired by the music and sound design principles from Ape Out (Devolver Digital, 2017). Ape Out immediately appealed to us because we saw that our game faced some of the same music and sound design challenges. Both Ape Out and Suara attempt to fuse music/rhythm games with action/arcade games, and both use musical sounds for sound effects. Ape Out’s background music incorporates a raucous, jazz, big-band musical style. When the player (a brutal and angry gorilla) attacks and punches out enemies, the sound effects use musical sounds: drum fills and cymbal crashes or flourishes that punctuate the player’s landing of vicious attacks. Ape Out’s percussive musical “sound effects” work brilliantly juxtaposed against the game’s jazz big-band background music. The free, punctuated, accented approach to musical rhythm highlighted by the player’s mechanics in Ape Out also mirrors the drummer’s freedom in the cymbal flourishes and drum fills that permeate big-band jazz.

Inspired by Ape Out (as well as cartoons like Batman & Robin, etc.), in Suara we also chose to use cymbal crashes as musical sound effects enhancing player attacks. We opted to use cymbal crashes (along with some sonic sweetening) when the player lands an attack on the beat. When the player attacks with rhythmic accuracy and hits the beats (up to four in a row), each subsequent player attack in that beat sequence is given more weight with a heavier, sustained, crash cymbal sound to indicate that the player is rhythmically “on a roll.”

By tying cymbal crashes and stronger player attacks to strong musical beats, we were able to make things make sense rhythmically and musically. These features also serve to compel the player to sonically collaborate with the game’s soundtrack through their addition of cymbal hits or punctuations. Due to the dynamic nature of the game soundtrack’s tempo, the player has to learn or get to know the sound and rhythm of the game’s soundtrack in order for them to improve at the game. In Suara, due to our use of an essentially linear soundtrack, the player effectively gets better at the game by learning the repeated structure and sonic signature of the background music. Subsequent plays of this linear music soundtrack help the player gain a closer understanding of the music’s tempo and structure.

A profound take-away from working on this project came when we began working on our new project, Reprise (an audio-reactive FPS game), and thinking about how we wanted our new music system to work. Reprise has a very different and unique set of musical requirements that forced us to think outside of the box of the groundwork laid out by Suara. With new technical demands, we knew that we had to rethink how we would approach music synchronization for Reprise. The following is a demo video of the beta version of Reprise:

This opened us up to the idea of using the game engine (Unreal) to control the music synchronization instead of Wwise. There are unique reasons based upon the game’s design for why we opted to synchronize our audio system with the engine instead of Wwise. Here is a diagram of how the two scenarios work.

With Suara, our engine was set up to let Wwise drive all of the music sync. We got all of our information from Wwise, and the engine used it to sync gameplay. With Reprise, we are controlling the sync on our own. Since we are allowing the player to customize their own music, there is a whole system in place to keep track of that data, and this system internally creates its own beat and bar events for sync throughout the engine. These events tell Wwise when to play all of the correct music that the player has set up. In addition to the sync, we are also receiving metering information from Wwise, which after being run through an algorithm tells the game when the weapons should be shooting. So while the main drive this time around is within the engine, we are still querying Wwise for very useful runtime information used in gameplay.
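To illustrate the engine-driven direction in general terms, the sketch below generates beat and bar events from frame time alone. It is not Reprise's system: `BeatClock` is a hypothetical class, it assumes a fixed tempo and 4 beats per bar, and it simply returns the events where a real engine would dispatch them and tell the audio middleware what to play.

```python
class BeatClock:
    """Generates beat and bar events from elapsed time."""

    def __init__(self, bpm, beats_per_bar=4):
        self.beat_len = 60.0 / bpm
        self.beats_per_bar = beats_per_bar
        self.time = 0.0
        self.next_beat_time = 0.0  # the first beat falls at time zero
        self.beat_index = 0

    def update(self, dt):
        """Advance by dt seconds; return the events that became due."""
        events = []
        self.time += dt
        while self.next_beat_time <= self.time:
            if self.beat_index % self.beats_per_bar == 0:
                events.append("bar")   # a downbeat also starts a new bar
            events.append("beat")
            self.beat_index += 1
            self.next_beat_time += self.beat_len
        return events
```

A production version would likely derive time from the audio clock rather than summed frame deltas to avoid drift, but the ownership is the point: here the engine decides when a beat happens and the audio follows.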

When using the game audio middleware, callbacks must be made to communicate with the engine to keep it in sync with the audio. This can be a powerful tool given to the composer who is now directly driving the game with their music. However, this can also be dangerous; if the composer makes a mistake (say, incorrect tempo metadata) then the game could potentially be difficult to play or be practically broken. This puts a lot of pressure on the composer to do great work with attention to detail. On the other hand, using the game engine to drive music synchronization may potentially put many creative synchronization decisions outside of the hands of the game composer. For this reason, it is imperative that there is a talented audio programmer who is willing to help the composer with game synchronization, or composers in this situation must familiarize themselves with the game engine’s music synchronization system and know how to harness its controls.

We would like to thank GameSoundCon for initially inspiring us to put this research together for a talk we presented at GameSoundCon 2017. Thanks to DigiPen Institute of Technology for helping to facilitate this project and our teammates on team DandyLion for helping make this project a success. We invite you to download a free full version of Suara along with the original soundtrack.
