Featured Blog | This community-written post highlights the best of what the game industry has to offer.

This blog post will discuss the use of biometrics in the gaming industry. It will also discuss my research, ‘Enhancing the Player Experience Using Live Biometric Data to Adapt a Mood in a Virtual Reality Game Environment’.

In the gaming industry, player experience is one of the most important elements of a game. If it is not up to par, even strong visuals and gameplay can be undermined. Player experience is often defined as the quality of the interactions between the game and the player, which ultimately determines how much the player enjoys the game. What is often forgotten is that players simply want a pleasant experience. The primary goal of a game developer should therefore be to deliver a satisfying player experience.

I am working on a master's thesis about player experience: I am trying to discover whether the player experience can be enhanced by using live biometric data to adapt the mood of a virtual reality (VR) game environment. This technique could offer a unique gameplay experience while improving the player experience. Biometric measurements could also introduce personalization to games and improve their accessibility. Before we dive into my research, I think it is interesting to briefly discuss how biometrics are currently used in the gaming industry.

A non-intrusive way to track player experience is to take biometric measurements through a controller. A research team at Stanford University modified an Xbox 360 controller to include biometric sensors that gather the player's biometric data, such as heart rate, breathing rate, depth of breath, and movement. The Xbox 360 controller is not the only controller with biometric sensors; Sony has patented a controller with biometric sensors as well.

Biometric data is mainly used in research to test specific game features, events, or social interactions. It can also be used to manipulate in-game data, although this technique is still in development. Live biometric data can be unreliable: a player's heart rate can go up because of emotional investment, but also because of physical activity. There are examples of biometric data being used to control a 2D character, as in Snake or Flappy Bird, or a 3D character, as in World of Warcraft, Portal 2, and racing games.

Portal 2 controlled by biometric measurement by Graz Brain-Computer Interface Lab

Another approach is to dynamically modify game elements, such as the difficulty level, based on biometric data, producing adaptive gameplay built around the player's skill. This requires models that link the player's emotions and biometric data to their skill level.
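
As a rough illustration of the difficulty-adjustment idea, the sketch below uses a simple rule instead of a learned model: it assumes arousal has already been normalized to the range 0 to 1 and nudges difficulty to keep that arousal inside a target band. The `DifficultyController` class, the band limits, and the step size are hypothetical choices, not taken from any of the cited work.

```python
from dataclasses import dataclass


@dataclass
class DifficultyController:
    """Hypothetical arousal-driven difficulty adjuster (a rule, not a trained model)."""

    level: float = 0.5   # current difficulty, 0.0 (easiest) .. 1.0 (hardest)
    low: float = 0.3     # below this arousal the player is assumed under-stimulated
    high: float = 0.7    # above this arousal the player is assumed overwhelmed
    step: float = 0.1    # how much difficulty changes per update

    def update(self, arousal: float) -> float:
        """Nudge difficulty to keep normalized arousal (0..1) inside [low, high]."""
        if arousal > self.high:
            self.level -= self.step   # too stressed: make the game easier
        elif arousal < self.low:
            self.level += self.step   # under-stimulated: make the game harder
        # Keep the difficulty inside its valid range
        self.level = min(1.0, max(0.0, self.level))
        return self.level
```

A real system would replace the fixed band with the kind of model described above, one that links emotions and biometric data to the player's skill.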

A different and more exciting approach to using biometric measurements is described in the research paper ‘A framework for the manipulation of video game elements using the player’s biometric data’ by Seixas (2016). The sensors used include ECG sensors, EDA sensors, and an accelerometer. Seixas managed to adjust four different factors based on the measurements: movement speed, damage taken, mood, and attacking. Movement speed is driven by valence: a stronger positive emotion means faster movement, and a stronger negative emotion means slower movement. The player's arousal level influences the damage taken from enemies: a higher stress level means enemies hit harder.
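
The two mappings described above can be sketched as simple scaling functions. This is not Seixas's implementation: it assumes valence is normalized to [-1, 1] and arousal to [0, 1], and both the linear form and the `sensitivity` parameters are illustrative assumptions.

```python
def movement_speed(base_speed: float, valence: float, sensitivity: float = 0.5) -> float:
    """Scale movement speed by valence in [-1, 1]: positive -> faster, negative -> slower."""
    valence = max(-1.0, min(1.0, valence))  # clamp to the assumed range
    return base_speed * (1.0 + sensitivity * valence)


def damage_taken(base_damage: float, arousal: float, sensitivity: float = 1.0) -> float:
    """Scale incoming enemy damage by arousal in [0, 1]: more stress -> harder hits."""
    arousal = max(0.0, min(1.0, arousal))  # clamp to the assumed range
    return base_damage * (1.0 + sensitivity * arousal)
```

With these defaults, a base speed of 4.0 becomes 6.0 at full positive valence and 2.0 at full negative valence, and a base damage of 10.0 becomes 15.0 at half arousal.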

Seixas uses the circumplex model, which places emotions on a valence axis and an arousal axis, to adjust the mood of the game. When the player was happy or engaged, the game played upbeat music and the world became bright; when the player was calm or scared, the game became darker and the music scarier.
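
Because the circumplex model uses two axes, a mood can be picked by checking which quadrant a (valence, arousal) reading falls into. The sketch below assumes both values are centred on 0. The quadrant labels follow common circumplex usage, and the preset table follows the description above; mapping the sad quadrant to the dark preset is an assumption, because it is not mentioned. `Quadrant`, `MOOD_PRESETS`, and `classify` are hypothetical names.

```python
from enum import Enum


class Quadrant(Enum):
    HAPPY = "happy/excited"      # positive valence, high arousal
    CALM = "calm/relaxed"        # positive valence, low arousal
    SCARED = "scared/stressed"   # negative valence, high arousal
    SAD = "sad/bored"            # negative valence, low arousal


# (world look, music) per quadrant: happy -> bright and upbeat, calm or scared -> dark.
# SAD -> dark is an assumption; the description does not cover that quadrant.
MOOD_PRESETS = {
    Quadrant.HAPPY: ("bright", "upbeat"),
    Quadrant.CALM: ("dark", "scary"),
    Quadrant.SCARED: ("dark", "scary"),
    Quadrant.SAD: ("dark", "scary"),
}


def classify(valence: float, arousal: float) -> Quadrant:
    """Place a (valence, arousal) point, each centred on 0, in a circumplex quadrant."""
    if valence >= 0:
        return Quadrant.HAPPY if arousal >= 0 else Quadrant.CALM
    return Quadrant.SCARED if arousal >= 0 else Quadrant.SAD
```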

The shooting mechanic was changed to use a threshold on the biometric data: the player attacks once the threshold is exceeded. The player can still move and aim with the assigned keyboard keys, but there is no attack button, since crossing the threshold triggers the attack.
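
A threshold-triggered attack can be sketched as an edge detector, so that a signal that stays above the threshold fires once instead of on every sample. Whether Seixas fires on the rising edge or continuously is not stated; the rising-edge behavior and the `ThresholdTrigger` class are assumptions.

```python
class ThresholdTrigger:
    """Fires an attack when a biometric signal rises above a threshold (rising edge)."""

    def __init__(self, threshold: float):
        self.threshold = threshold
        self._above = False  # was the previous sample already above the threshold?

    def update(self, sample: float) -> bool:
        """Return True only on the sample where the signal first exceeds the threshold."""
        above = sample > self.threshold
        fired = above and not self._above
        self._above = above
        return fired
```

For example, with a threshold of 0.5, the samples `[0.2, 0.6, 0.7, 0.3, 0.8]` produce `[False, True, False, False, True]`: one attack per crossing.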

Biometric mood-changing done by Seixas

For my master's thesis, I needed a VR game environment that would evoke emotions in the participant. These emotions would be visible as stress spikes in the biometric data; however, a spike does not show whether the emotion was positive or negative.

To understand which emotions the environments evoke, I created a survey. The first part of the survey held a couple of general questions about age and gender. The second part consisted of 15 different mood boards. For each mood board, participants rated the pleasantness of the environment on a scale from one to five, with one being highly unpleasant and five being highly pleasant. The participants also noted which emotions came closest to those evoked by the picture.
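
Summarizing the survey per mood board amounts to averaging the one-to-five pleasantness ratings and counting how often each emotion was picked. The function below is a hypothetical helper, and the response format it expects (one rating and one list of emotions per participant) is an assumption about how the answers might be stored.

```python
from collections import Counter
from statistics import mean


def summarize_board(
    ratings: list[int], emotions: list[list[str]]
) -> tuple[float, list[tuple[str, int]]]:
    """Summarize one mood board: mean pleasantness (1-5) and emotion counts, most common first."""
    if any(r < 1 or r > 5 for r in ratings):
        raise ValueError("ratings must be on the 1-5 scale")
    # Flatten every participant's chosen emotions and count them
    counts = Counter(e for picked in emotions for e in picked)
    return mean(ratings), counts.most_common()
```

For example, `summarize_board([2, 4], [["scared"], ["scared", "calm"]])` returns a mean of 3 and the counts `[("scared", 2), ("calm", 1)]`. An environment that scores both low and high ratings, with a mix of positive and negative emotions, is the kind of candidate the next paragraph looks for.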

Graph that shows the emotions for each mood board

The VR environment had to evoke both negative and positive feelings so that the mood switch could be triggered multiple times. That would be hard to achieve with environments that participants mainly perceived as calm. This left only the cornfield environment: eight of the 20 participants mentioned that a cornfield is scary at night and peaceful during the day. To add gameplay, the VR environment became a corn maze instead of a plain cornfield.

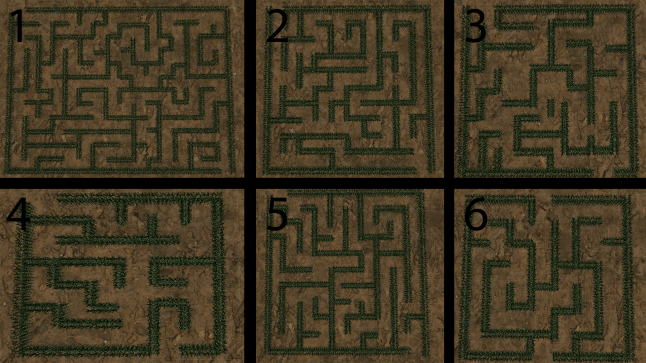

Initially, six mazes of different sizes were created. Two people playtested them to get a general idea of how long it takes to reach the end. Based on these times, the fifth maze was chosen for the VR environment, since it made the experience neither too long nor too short.

Six different versions of the corn maze

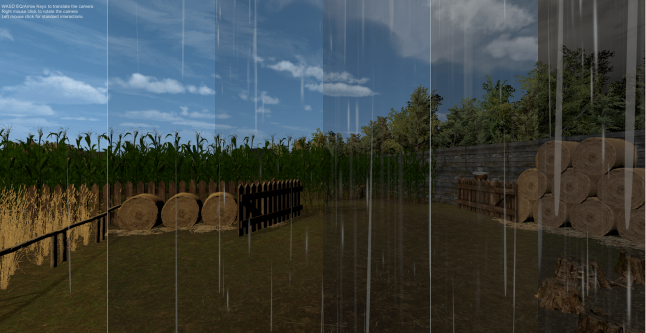

The VR environment consisted of a fenced-off starting area, the maze, and the end area, and was decorated like a farm. I used the Empatica E4 to measure the participants' skin temperature and galvanic skin response (GSR). Because the E4 needs some time to establish a baseline, the gate to the maze was closed at the start and only opened once the calibration was done.
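
The calibration step before the gate opens can be sketched as collecting a fixed window of skin-conductance samples and computing a baseline from it. The 4 Hz default is assumed to match the E4's EDA sampling rate, while the 60-second window, the mean-and-standard-deviation baseline, and the `BaselineCalibrator` class are hypothetical choices, not the plug-in's actual behavior.

```python
from statistics import mean, pstdev


class BaselineCalibrator:
    """Hypothetical helper that collects an initial window of EDA samples before play starts."""

    def __init__(self, sample_rate_hz: float = 4.0, duration_s: float = 60.0):
        self.required = int(sample_rate_hz * duration_s)  # samples needed for the baseline
        self.samples: list[float] = []

    def add(self, eda_microsiemens: float) -> None:
        """Store a sample until the calibration window is full; later samples are ignored."""
        if not self.is_done():
            self.samples.append(eda_microsiemens)

    def is_done(self) -> bool:
        """True once enough samples exist; in the game, this is when the gate would open."""
        return len(self.samples) >= self.required

    def baseline(self) -> tuple[float, float]:
        """Mean and population standard deviation of the calibration window."""
        if not self.is_done():
            raise RuntimeError("calibration not finished")
        return mean(self.samples), pstdev(self.samples)
```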

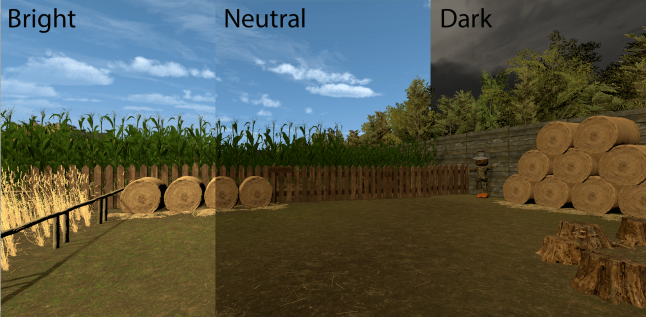

The mood change consisted of several aspects: a weather system, a skybox change, a lighting change, and a sound change. The weather system consisted of rain, wind, clouds, and thunder. Two skyboxes were used, one bright and one dark; depending on the participant's mood, the bright skybox slowly faded into the dark one. Next, three lighting sets were created, each with its own post-processing effect: one happy and light, one neutral, and one dark and scary. When the participant felt more stressed, the background music became scarier, the rain got louder, and the thunder started. All of these changes were faded in over time, depending on the participant's mood. This concluded the creation of the VR environment.
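
The fading described above can be sketched by driving every layer from a single mood value between 0 (relaxed) and 1 (stressed). The sketch assumes linear blends: the skybox blend and rain volume follow the mood value directly, the three lighting sets are cross-faded from happy to neutral to dark, and thunder switches on above a threshold. `MoodState`, `fade_toward`, `mood_state`, the 0.7 threshold, and the linear shapes are all assumptions; in the game, the resulting numbers would drive the corresponding skybox, lighting, and audio settings.

```python
from dataclasses import dataclass


@dataclass
class MoodState:
    skybox_dark_blend: float                      # 0 = bright skybox, 1 = dark skybox
    lighting_weights: tuple[float, float, float]  # (happy, neutral, dark), sums to 1
    rain_volume: float                            # 0..1
    thunder: bool


def fade_toward(current: float, target: float, rate_per_s: float, dt: float) -> float:
    """Move current toward target by at most rate_per_s * dt (a linear fade)."""
    step = rate_per_s * dt
    if abs(target - current) <= step:
        return target
    return current + step if target > current else current - step


def mood_state(mood: float, thunder_threshold: float = 0.7) -> MoodState:
    """Map a mood value in [0, 1] (0 = relaxed, 1 = stressed) onto the environment layers."""
    m = max(0.0, min(1.0, mood))
    # Cross-fade three lighting presets: happy at 0, neutral at 0.5, dark at 1
    if m <= 0.5:
        t = m / 0.5
        weights = (1.0 - t, t, 0.0)
    else:
        t = (m - 0.5) / 0.5
        weights = (0.0, 1.0 - t, t)
    return MoodState(
        skybox_dark_blend=m,
        lighting_weights=weights,
        rain_volume=m,
        thunder=m >= thunder_threshold,
    )
```

Calling `fade_toward` every frame moves the displayed mood gradually toward the latest target, which produces slow fades instead of abrupt jumps.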

All different rain states of the maze

All different lighting states of the maze

The main source of data for my master's thesis was the pre- and post-experience surveys. These surveys gathered data about how the participants felt before and after the experience, and about their player experience.

The data measured by the Empatica E4 was not saved; the priority lay in getting the Empatica plug-in to work with Unity. The plug-in was created by Boode W. and uses five rules to determine whether the data shows a possible stress moment.

The five rules:

1. The period between the stimulus and the response, called the latency, lies between one and five seconds and is around three seconds on average.
2. There is a drop in skin temperature after the peak.
3. The time between the onset of the stimulus and the peak is called the rising time; it is affected by the latency.
4. A steeper slope to the peak indicates a more intense stress moment.
5. The recovery time, the time it takes to go from the peak to 50% recovery, lies between one and ten seconds.
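
The rules above can be read as a set of checks on one candidate skin-conductance response. The sketch below is a simplified reading, not Boode's plug-in code: it assumes the response features have already been extracted from the signal, treats rules 1, 2, and 5 as pass/fail checks, and uses rules 3 and 4 only to compute an intensity score. `ScrCandidate`, its fields, and both functions are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ScrCandidate:
    """Features of one skin-conductance response (SCR) candidate; times in seconds."""
    latency_s: float        # stimulus -> start of the response (rule 1)
    rise_time_s: float      # stimulus onset -> peak, so it includes the latency (rule 3)
    amplitude_us: float     # rise from response start to peak, in microsiemens
    recovery_half_s: float  # peak -> 50% recovery (rule 5)
    temp_drop_c: float      # skin-temperature change after the peak; negative = drop (rule 2)


def is_stress_moment(c: ScrCandidate) -> bool:
    """Apply rules 1, 2, and 5 as simple pass/fail checks."""
    latency_ok = 1.0 <= c.latency_s <= 5.0
    temp_dropped = c.temp_drop_c < 0.0
    recovery_ok = 1.0 <= c.recovery_half_s <= 10.0
    return latency_ok and temp_dropped and recovery_ok


def intensity(c: ScrCandidate) -> float:
    """Rules 3 and 4: slope to the peak (amplitude / rising time); steeper = more intense."""
    return c.amplitude_us / c.rise_time_s if c.rise_time_s > 0 else 0.0
```

For example, a candidate with a 3 s latency, a 4 s rising time, a 0.8 µS amplitude, a 5 s half-recovery, and a small temperature drop passes the checks with an intensity of 0.2 µS/s.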

When a signal was classified as a stress moment, the Python plug-in signaled Unity, which used that signal to adjust the mood-changing value.
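
Each stress signal has to be turned into a change in the mood-changing value. One way to do that, sketched here in Python for consistency with the other examples, is an accumulator that jumps up on each signal and slowly decays in between, so the mood can switch back and be triggered again. The `MoodValue` class, the bump size, the decay rate, and the decay itself are assumptions; the post does not describe how the value was updated.

```python
class MoodValue:
    """Hypothetical mood accumulator: stress signals push it up, calm time lets it decay."""

    def __init__(self, bump: float = 0.25, decay_per_s: float = 0.02):
        self.value = 0.0                # 0 = relaxed environment, 1 = fully dark and stormy
        self.bump = bump                # added per detected stress moment
        self.decay_per_s = decay_per_s  # how fast the environment relaxes again

    def on_stress_signal(self) -> None:
        """Called whenever the plug-in reports a stress moment."""
        self.value = min(1.0, self.value + self.bump)

    def tick(self, dt: float) -> None:
        """Called every frame with the elapsed seconds; slowly relaxes the mood value."""
        self.value = max(0.0, self.value - self.decay_per_s * dt)
```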

For this study, a reasonable number of participants for a proof of concept would be 20 per group, meaning that around 40 participants were needed. It was unnecessary to limit the participants to a specific demographic, such as a particular age group; seeing how different demographics reacted to the experience was part of the research. The choice of participants was also heavily limited by a COVID-19 lockdown.

The Empatica E4 by Empatica

I am very interested to see what results this research will provide and whether it can actually make a difference in player experience. If it does, I see a couple of interesting use cases for integrating biometrics into games. Once my thesis is done, I will write a follow-up article in which I discuss the research findings and share the thesis so you can read it yourself.

Thank you for taking the time to read through my article, and I hope you find it just as interesting as I do!
