

During game development, we’re a little in the dark about how players might experience and respond to the projects we’re working on. That’s where UX research comes into play: it casts a light in the darkness, so we can look before we leap and make measured decisions up front rather than deal with unexpected reactions later. And if something isn’t going as expected, UX research can help us right the ship and get back on track.

Hello everyone! We are Maria Amirkhanyan and Anna Dedyukhina, UX researchers at MY.GAMES. In this article, we’ll explain why UX research should be carried out in game development and what benefits it brings, look at how conducting it differs across development stages, describe in detail the research methods we generally use, and walk through a number of cases where these approaches have been applied. At MY.GAMES we’re driven by product success, and our focus is on creating games that become leaders in their categories. UX research helps us achieve this: its results allow us to improve the user experience, respond to the needs of our audience, and solve problems quickly.

To properly understand UX research, we’ll first need to define UX itself. UX, or “User Experience”, is the complete experience a user has when interacting with a product. In the realm of video games, players are the users, and the game is the product.

UX is often discussed in tandem with UI (User Interface), but UX is actually a broader concept: UI primarily concerns the interface, while UX involves core gameplay, meta gameplay, all the mechanics, setting, the conceptual goal of a game, the complexity of the game, and so on — in general, absolutely everything with which the player can interact.

As hinted, UX touches a large number of gameplay aspects: the convenience of gameplay and interface, understanding of the game’s rules, the difficulty curve, an intuitive sense of control, and much more. Each of these aspects also has its own idiosyncrasies across different genres and audiences.

Nice design, but users endure a really bad UX

There are many factors that influence player comfort, and we conduct UX research to try and understand them all.

It’s important to mention that UX research isn’t about detecting and dealing with bugs or anything directly involving the technical components of a game. Instead, UX research is about actual player perception of a game, its mechanics, and interface.

UX research comes in handy during development because, without it, we’d never really know how real players think, what they’ll do, or why they behave the way they do. UX research allows us to make informed development decisions and save time and money. (After all, it’s more convenient to know in advance how to do things right than to redo everything a thousand times later.)

UX research is also valuable throughout a game’s lifecycle. Over time, any game tends to lose part of its audience. The most active users may describe their reasons for leaving, but others rarely leave feedback, so we can only guess at their motivation.

Here’s a brief description of the UX research process:

We select a player that matches the target audience of our product.

We ask them to play our game the way they usually would. (Otherwise, users tend to test the game and try to “break” it, but we’re interested in their usual behavior.)

At this point, we observe the player and their emotions. Tracking emotions is difficult, so we sometimes use tools such as AI-based facial expression analysis, or psychophysiological equipment such as electroencephalography (EEG) or galvanic skin response (GSR) sensors. EEG allows us to determine emotions more accurately, while GSR helps identify how tense or excited a player is. Unlike EEG, however, GSR cannot tell whether that excitement is positive or negative, so when we see a reaction, we find out the reason by interviewing the player. Sometimes we also use an eye-tracking system.

We request players fill out questionnaires.

We ask questions (many questions). We’ll give specific examples in the second article in the series.

That’s it, finished!

In this illustration by UX Lab’s Ekaterina Lisovskaya, opossums demonstrate the research process

UX research often allows us to find problems that aren’t obvious to developers. A good example of such a problem that made it into the final game is the mini-map in Cyberpunk 2077. The mini-map is too zoomed in and cannot be zoomed out. When a player is driving toward a marker, they often fail to make a turn in time, because the map doesn’t zoom out while they’re moving, so upcoming turns appear on it too late. As a result, players crash and get upset. Of course, you can compensate by watching the mini-map non-stop, but then you’d miss all the beauty of Night City.

https://www.youtube.com/watch?v=ZtgnAabn15k

Because of the mini-map, players constantly miss turns; bad physics and pedestrians jumping under the wheels only make the situation worse.

Could this feature have been implemented better? Sure, and it has been. For instance, in GTA V, the map zooms in and out based on whether a player is inside a vehicle or going at it on foot. This allows players to plan and anticipate their movements without any problem, since all turns are easily visible on the mini-map in advance.

In GTA V, the scale of the mini-map changes depending on the speed of transport
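To make the GTA V idea concrete, here is a minimal sketch of speed-dependent mini-map zoom. It assumes a simple linear mapping from speed to zoom plus an easing step so the view doesn’t jump; the function names and default values (`min_zoom`, `max_zoom`, `full_zoom_speed_kmh`, `rate`) are hypothetical and are not taken from either game.

```python
def minimap_zoom(speed_kmh: float,
                 min_zoom: float = 1.0,
                 max_zoom: float = 3.0,
                 full_zoom_speed_kmh: float = 120.0) -> float:
    """Return a mini-map zoom-out factor that grows with vehicle speed.

    1.0 is the closest (on-foot) view; larger values show more of the map.
    All default values are hypothetical tuning parameters.
    """
    # Clamp speed into [0, full_zoom_speed_kmh] so the factor stays in range.
    t = max(0.0, min(speed_kmh, full_zoom_speed_kmh)) / full_zoom_speed_kmh
    # Linear interpolation between the on-foot view and the widest view.
    return min_zoom + (max_zoom - min_zoom) * t


def smooth_zoom(current: float, target: float, dt: float, rate: float = 2.0) -> float:
    """Ease the displayed zoom toward the target so the map doesn't jump.

    dt is the frame time in seconds; each call covers a fraction of the
    remaining distance, and never overshoots the target.
    """
    alpha = min(1.0, rate * dt)
    return current + (target - current) * alpha


# Example: zoom targets at a few speeds.
for speed in (0.0, 60.0, 200.0):
    print(speed, minimap_zoom(speed))
```

Each frame, a game loop would call `minimap_zoom` with the current speed and pass the result to `smooth_zoom` as the target. A linear curve is only one option; a playtest is exactly the place to check whether players can still see upcoming turns in time.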

In terms of UX, we can steer clear of issues like the aforementioned Cyberpunk 2077 mini-map quirk by following a few rules during the development stage:

Meticulously observing player behavior

Taking into account the original purpose of a mechanic

Keeping in mind the kind of behavior we expect from players

Checking if the game provides the necessary conditions for a player to be able to perform the desired actions

This problem could’ve been avoided if the developers had done a competent UX study, but they probably didn’t. There could be various reasons for this: perhaps there wasn’t enough time or budget, other problems were prioritized instead, or there simply wasn’t time to fix both the technical problems and the UX problems.

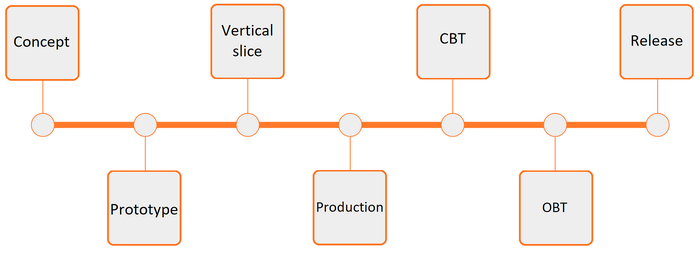

In the gaming world, the development timeline usually looks like this: concept, prototype, vertical slice, production, closed and open beta tests, and release.

During each of these stages, we can conduct UX research, although the form it takes can be different depending on the point a project is at in the timeline. Let’s take a look at each of the stages above in terms of conducting UX research.

Concept. Any product begins development with an idea and a concept. We think about the genre, the content, what we expect from the game, and we also create documentation, prepare art, and take into account the type of monetization.

Can we already research something at this early stage? Yes! We can test our hypotheses about the audience and study what players like or dislike. We can decide which features should be added to the game (and which shouldn’t). We can also choose a setting and try to understand whether our target audience will like it (for example, casual players are unlikely to enjoy the gloomy setting of Darkest Dungeon).

Prototype. Before this stage, we could perhaps only “chat” with players and discuss ideas, but with a prototype, users can actually try features out. You can also give them a task to see how they’ll solve it, for example: “Let’s say you need to upgrade your character. How would you do it?”

With a more developed visualization, it will be easier for a potential player to imagine a feature. The better testers understand how a feature works, the more reliable the results of the study. At this stage, we continue to test our ideas, discuss what we want to add (and in what format), and judge if we’re moving in the right direction.

Vertical Slice. Finally, the project is playable with some typical gameplay! This is the “golden stage”. We can collect feedback from our vertical slice, understand what the gameplay feels like, how features function, the convenient and inconvenient things for players, and so on. At this time, we’ll also start active game playtests.

Production. This is the longest stage of development. Levels and locations are added and the world grows, and all of this can (and should) be tested. Of course, adding one small detail is probably not a good enough reason to run a test, but big things are definitely worth checking with players. You can test some features locally or run large quarterly tests during this phase.

Closed and open beta tests. By this time, the game has a lot of content and only requires polishing. You can finally give game access to a large number of users. We’ll combine our methods: we conduct detailed tests with some players, while others play on their own, then fill out a questionnaire. At this stage, you can understand how the audience is evaluating the gameplay, visual appearance, and the game as a whole.

It’s also worth thinking about features according to criteria of importance and implementation quality. For example, if a feature is not good enough, and it’s not particularly important to the players, then you should ask yourself: “Do I need to keep it?”. If a feature is important, but the quality of the implementation is low, then it definitely needs to be worked on first.
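The importance/quality reasoning above works like a simple two-by-two grid. The sketch below assumes both dimensions are scored on a 1–5 scale (for instance, importance from a player survey and quality from playtest ratings) and uses a hypothetical cutoff of 3; the feature names and scores are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Feature:
    name: str
    importance: float  # how much players care, e.g. a 1-5 survey average
    quality: float     # how well it is implemented, e.g. a 1-5 playtest rating


def triage(feature: Feature, threshold: float = 3.0) -> str:
    """Place a feature in one of four importance/quality quadrants."""
    important = feature.importance >= threshold
    good = feature.quality >= threshold
    if important and not good:
        return "fix first"         # matters to players, but poorly implemented
    if not important and not good:
        return "consider cutting"  # "Do I need to keep it?"
    if important and good:
        return "keep"              # working as intended
    return "low priority"          # polished, but players care little


# Hypothetical features and scores, for illustration only.
features = [
    Feature("crafting", importance=4.2, quality=2.1),
    Feature("photo mode", importance=1.8, quality=2.5),
    Feature("combat", importance=4.6, quality=4.0),
]
# Sort so that features needing work come first.
rank = {"fix first": 0, "keep": 1, "low priority": 2, "consider cutting": 3}
for f in sorted(features, key=lambda feat: rank[triage(feat)]):
    print(f"{f.name}: {triage(f)}")
```

A fixed cutoff is the simplest choice; a team might instead compare each feature against the median score of all features tested.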

Release. It might seem like you can relax after release, but in fact, work is just beginning! Next up is game localization, support, operations, updates, patches, DLC, retention efforts, and so much more.

Many of the methods described below will be familiar to anyone who has done UX research in general. However, it’s important to note the specifics that distinguish UX research on video games from research on banking applications or online stores.

Each of these methods deserves a lengthy article of its own, but in this post we’ll briefly outline the main approaches: how to use them, and what to consider whether you’re conducting research for the first time or the n-th time.

Playtests are the main research tool in the gaming context; they allow us to learn about a complete gaming experience, and evaluate both the individual features and the overall impression of a full-fledged product.

As part of a playtest, we invite people to play our project or prototype and ask them to share their impressions. Meanwhile we observe the playtesting process, ask questions, and identify important problems and insights. Unlike a usability test, we don’t interfere with the game process in order to avoid distracting the participant, and we ask our questions after the session or during pauses (for example, while a screen is loading).

Playtests allow you to detect problems with basic mechanics, interfaces, meta gameplay, visual quality and authenticity, general impressions and many specific things that interest the team.

Playtests can differ in terms of format and the presence (or absence) of a moderator:

Format:

We can have a remote format (via Zoom, Discord, and so on) where participants can share their screen using the app or stream with YouTube or Twitch. The advantage here is that the respondent can play in a familiar environment, and moreover, the geography of possible participants greatly expands — we can collect data from foreign players or residents of remote places. The main disadvantage is that it’s more difficult to establish contact between moderator and participant, which also makes it hard to detect non-verbal signals.

We can also do an offline format where a participant plays right next to us in the office. The advantage is that we can closely observe the process, right down to how they use the controller. The disadvantage is that a participant tends to be more stressed and may feel uncomfortable in an unfamiliar environment, or play differently when using someone else’s equipment.

Moderation:

With a moderated format, a moderator observes the game and can communicate with the participant in real time. This is a plus because it increases our chances of collecting the most in-depth information and picking up on unexpected insights. The disadvantage is that it’s time-consuming and quite expensive.

An unmoderated format is conducted on third-party platforms. The respondent independently goes through the game and records their screen, giving comments along the way. At the end, they fill out questionnaires and answer open questions. The advantage: this takes little time and it’s possible to cover many people. The disadvantage: it’s more difficult to gain certain insights, because there is no way to ask the player something directly during the playtest.

Case study — HAWK: Freedom Squadron

With HAWK: Freedom Squadron, the team received feedback that their game was boring and inferior to competitors; this was despite the fact that their sales figures suggested the opposite.

To make sure this feedback wasn’t just one person’s unfounded opinion, the developers decided to playtest HAWK alongside two competing games, specifically to evaluate player engagement at the start of each experience. Players spent 15 minutes in each game, discussed their experience, and completed the GEQ and MDT questionnaires. The order of the games was randomized so that the sequence wouldn’t influence players’ perception.

In the end, the initial comment was confirmed: the game was indeed inferior to both competitors in terms of emotional engagement. After three months of improvements and changes, the team retested the updated version and found that it had reached top levels of engagement; retention rates had increased as well.
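The HAWK study randomized the order of games. A common alternative with a small number of games is counterbalancing with a Latin square, which guarantees that each game appears in each position equally often across participants. A minimal sketch of that swapped-in technique, with “Competitor A” and “Competitor B” as placeholder names:

```python
def latin_square_orders(games: list[str]) -> list[list[str]]:
    """Build a cyclic Latin square: each game appears once in every position."""
    n = len(games)
    # Row i is the game list rotated left by i places.
    return [[games[(i + j) % n] for j in range(n)] for i in range(n)]


def order_for_participant(participant_index: int, games: list[str]) -> list[str]:
    """Assign participants to rows in turn so positions stay balanced."""
    rows = latin_square_orders(games)
    return rows[participant_index % len(rows)]


games = ["HAWK", "Competitor A", "Competitor B"]
for p in range(6):
    print(p, order_for_participant(p, games))
```

A cyclic square balances position only. With an odd number of games it does not balance which game immediately precedes which, so fully random orders or a larger balanced design may be preferable when carry-over effects are a concern.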

An interview is a study which involves direct communication with a player in a dialogue format in order to gain a deeper understanding of their motivations, impressions, and thoughts. An interview is often conducted as part of another research method (for example, during a playtest, we always start with an interview about the player, and we end with a discussion of the game).

During an interview, you can ask any questions of interest to developers: how do users buy games? What do they expect from the project based on marketing materials? What do they think about certain mechanics and meta gameplay elements?

Interviews can be conducted both online and offline, depending on the capabilities and objectives of the study. Sometimes you can even ask questions via quick messages: doing so makes it easier to find a convenient time and gives the participant a chance to answer whenever they can. On the other hand, this practice lengthens the process and reduces data reliability, since there’s a higher chance of getting a “well-weighed” and “desirable” answer rather than a genuine first impression.

Case study — a multiplayer FPS

With this case, the team was facing reduced returning player conversion, and the developers wanted to understand how to fix their problem.

Resolving questions like these requires deep discussion with participants; you need to find out their experiences, desires, and problems with the game. Because of this, we decided to conduct interviews and focus groups in tandem with questionnaires to assess the characteristics and needs of different groups.

Different types of players were invited: beginners who had played the game and left (as well as beginners who are still actively playing), and experienced players who had recently left the game (as well as those who had returned to the game after a break).

From each of the surveyed groups, we ended up with data on their needs, barriers, and attitudes towards various aspects and features of the game. Based on those results, it became clearer what motivated players to return to the game, as well as what discouraged them from doing so. Additionally, studying newcomers to this game helped highlight the strengths and weaknesses of the project, the shortcomings of the tutorials, and the complexity of some systems.

During a diary study, respondents play or interact with a product over several days. This is useful, for example, if we want to explore issues with player retention after the first day, or player impressions of a quest line that takes a few hours to complete.

Diary studies can, of course, be carried out both offline and online. In most cases, an online format is preferred. This type of research is also divided into several types:

Unmoderated: participants play and record each session. At the end of each session or day, they complete a questionnaire that gives researchers feedback, so the experience on different days can be compared. As with other unmoderated methods, the main disadvantage is the inability to ask flexible follow-up questions.

Moderated: a moderator observes the process and discusses all important issues during the playtests. You can get a lot of insights, but it’s long and very expensive.

Hybrid: a moderated session (or sessions) is conducted and supplemented with unmoderated playtests with questionnaires. For example, we communicate with the players and observe the first hour of the game, and the rest of the week the participants play independently and leave feedback in questionnaires. Or they always play on their own, but at the end we have a call and discuss all the questions we have. This option is useful, as you can collect most of the data without the participation of the researcher, and then ask important questions to get some insights.
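In an unmoderated or hybrid diary study, comparing days typically comes down to aggregating each day’s questionnaire answers. A minimal sketch, assuming a single 1–5 rating per participant per day; the entries are hypothetical.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical diary entries: (participant, day, enjoyment rating on a 1-5 scale).
entries = [
    ("p1", 1, 4), ("p2", 1, 5), ("p3", 1, 4),
    ("p1", 2, 3), ("p2", 2, 4), ("p3", 2, 3),
    ("p1", 3, 3), ("p2", 3, 3),  # p3 skipped the day-3 questionnaire
]


def daily_means(rows):
    """Average the questionnaire rating for each study day."""
    by_day = defaultdict(list)
    for _participant, day, rating in rows:
        by_day[day].append(rating)
    return {day: float(mean(ratings)) for day, ratings in sorted(by_day.items())}


def day_over_day_change(means):
    """Difference between each day's mean and the previous day's mean."""
    days = sorted(means)
    return {day: means[day] - means[prev] for prev, day in zip(days, days[1:])}


means = daily_means(entries)
print(means)
print(day_over_day_change(means))
```

Participant p3 skipped day 3, so the day-3 mean covers a different set of people; restricting the comparison to participants who answered every day avoids that distortion.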

Case study — Pathfinder: Wrath of the Righteous

For Pathfinder, during the beta stage, it was important to understand the target audience’s attitude towards the new game, if they liked the prologue, and whether the main mechanics and features were clear.

We quickly understood that we couldn’t capture such impressions in a single playtest; players needed to play for quite a long time. As a result, we decided to conduct diary studies in which respondents played for two to three hours a day over three days, in order to complete the prologue and form a comprehensive impression of the game.

We invited the target audience to take part in the test: Pathfinder players (of both the computer games and the tabletop RPG), as well as CRPG lovers.

In the end, we learned that players didn’t understand character presets and abilities. During the prologue, they learned the mechanics from huge, hard-to-understand blocks of text, and although the most important skills were highlighted in the character traits interface, that didn’t help much, because the interface itself was too confusing. Based on these results, the team significantly redesigned the interface elements to make them more intuitive and understandable, which improved the player experience and helped retain engagement.

A usability test (or UT) is an experience assessment tool that allows you to evaluate the feasibility of key scenarios of player behavior, the clarity and convenience of the interface, and other hypotheses. You can test interfaces and prototypes, websites, and other related products.

It’s important to note that you can test the interface outside the game — for instance, with a separate prototype, since the focus of the method is precisely the interface, and not the accompanying gameplay.

Tests assume specific hypotheses and tasks that our players perform. For example, if it’s important for us to check navigation through menu sections and character leveling, we can give the player a task like “Imagine that you need to level up your hero. How would you do it?”

Upon completion of the task (successful or unsuccessful), the player is asked whether or not they succeeded, how difficult it was, and we also make more targeted queries that highlight specific pain points and behaviors.

It’s worth noting that playtests often include elements of UT, where a moderator, after a free game session, gives specific tasks to test hypotheses and scenarios. In terms of format, UTs, like playtests, can be conducted both offline and online, moderated and unmoderated.

Case study — a news website

When developing the site, the team wondered if the current news feed was convenient. In order to understand how users interact with the news and what difficulties they experience, they decided to conduct a UT with an eye tracker (a tool for recording a respondent’s eye movement, identifying the direction and duration of gaze fixation on particular interface elements) to understand how their users read the news.

They found that the existing “double” news feed (news items with text headlines on the left and news items with large photos in the center) didn’t work well: users either ignored the central part or were forced to mentally “jump” from one block to the other. Taking this into account, the team decided on a complete redesign of the news section, creating a single feed with filters so that all the news was in one place and uninteresting items could be hidden.
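Eye-tracking software typically exports a list of fixations (screen position and duration), and an analysis like this one usually aggregates them over areas of interest (AOIs) drawn around interface elements. A minimal sketch, assuming fixations have already been detected and using hypothetical AOI rectangles for the two parts of a “double” feed; the switch count is only a rough proxy for the mental “jumping” between blocks.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Fixation:
    x: float            # gaze position in screen pixels
    y: float
    duration_ms: float  # how long the gaze rested there


# Hypothetical areas of interest (AOIs): name -> (left, top, right, bottom) in pixels.
AOIS = {
    "headline list (left)": (0, 100, 400, 1000),
    "photo feed (center)": (400, 100, 1400, 1000),
}


def aoi_of(f: Fixation, aois: dict) -> Optional[str]:
    """Name of the AOI containing the fixation, or None if it is outside all AOIs."""
    for name, (left, top, right, bottom) in aois.items():
        if left <= f.x < right and top <= f.y < bottom:
            return name
    return None


def dwell_time_by_aoi(fixations: list[Fixation], aois: dict) -> dict[str, float]:
    """Total fixation time spent inside each AOI."""
    totals = {name: 0.0 for name in aois}
    for f in fixations:
        name = aoi_of(f, aois)
        if name is not None:
            totals[name] += f.duration_ms
    return totals


def aoi_switches(fixations: list[Fixation], aois: dict) -> int:
    """How often consecutive in-AOI fixations jump from one AOI to another."""
    labels = [n for n in (aoi_of(f, aois) for f in fixations) if n is not None]
    return sum(1 for a, b in zip(labels, labels[1:]) if a != b)
```

Low dwell time on one AOI would match the “ignored the central part” finding, while a high switch count would match the “jumping” between blocks.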

Questionnaires allow you to collect specific data on important issues, summarize impressions, and compare them at different stages of the game. They are the main tool of quantitative research, but also an important part of qualitative research. (We’ve already mentioned them several times when discussing other methods, especially unmoderated approaches.)

In addition to custom questionnaires, you can also use standardized tools:

The Microsoft Desirability Tool (MDT)

The Game Experience Questionnaire (GEQ)

The Presence Involvement Flow Framework (PIFF)

The Core Elements of Gaming Experience Questionnaire (CEGEQ)

A single study can use one questionnaire or a combination of several. For example, we like to use MDT: it allows you to assess the overall impression of a game through a list of adjectives.

In addition, we also utilize GEQ, which operationalizes and measures the most important characteristics of the game in a universal format:

Sensory and Imaginative Immersion (the game universe)

Competence (ease of performing gaming tasks)

Flow (dynamics, immersion in the game)

Tension

Challenge (motivation to act)

Negative displays of emotion

Positive displays of emotion

These results can then be compared between games (or over time) to detect changes and improvements.
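Comparing GEQ results usually means averaging item responses into component scores and then comparing those scores across games or builds. A minimal sketch, assuming responses on a 0–4 scale and component scores computed as item means, which is how the GEQ is commonly scored (check the official scoring guide). The item-to-component key below is a hypothetical placeholder, not the real GEQ key, and only three components are shown.

```python
from statistics import mean

# Hypothetical item-to-component key for illustration; the real GEQ key assigns
# different (and more) items to each of its components.
COMPONENTS = {
    "Competence": [1, 2],
    "Flow": [3, 4],
    "Tension": [5, 6],
}


def component_scores(responses: dict[int, int], components: dict = COMPONENTS) -> dict[str, float]:
    """Average the item responses (0 = not at all ... 4 = extremely) per component."""
    return {
        name: float(mean([responses[item] for item in items]))
        for name, items in components.items()
    }


def score_change(before: dict[str, float], after: dict[str, float]) -> dict[str, float]:
    """Per-component difference, e.g. between two builds or two games."""
    return {name: after[name] - before[name] for name in before}


# Hypothetical responses from one player to two builds of the same game.
build_1 = component_scores({1: 2, 2: 3, 3: 1, 4: 2, 5: 3, 6: 3})
build_2 = component_scores({1: 3, 2: 3, 3: 3, 4: 2, 5: 1, 6: 2})
print(score_change(build_1, build_2))
```

In a real study, component scores would be averaged across participants before comparing, and the same approach covers before/after measurements such as the Athanor case below.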

Case study — Athanor

With the mobile card game Athanor, the team wanted to understand how the game was perceived and whether it needed work. Of course, such a complex question couldn’t be answered with simple questionnaires alone, so the method included a playtest with eye-tracking and interview elements. GEQ was measured both before and after the game session in order to compare the expected and perceived results.

We invited mobile gamers who were fans of a range of genres:

Fans of farm games

Fans of construction games

Fans of battlers

Fans of card games

According to the GEQ results, the game turned out to be more difficult than it seemed at first sight. There were also differences in perception between the groups: the game was too difficult for “farmers”, unsuitable for “builders”, but quite interesting and challenging enough for “battlers”. Meanwhile, the main target audience, fans of card games, considered Athanor too boring.

Before conducting research, any team should ask themselves some questions: “What do we want to know? Do we need general feedback on the pace of the game, on the clarity of the meta game play, on ease of controls, or on how our players navigate the menu? Or are we trying to understand if players like the balance update in the new patch?”

Depending on the goals and hypotheses of the study, as well as its audience, the approach to tests and the duration of the study may be different.

When beginning any research, we formulate some hypotheses: questions and assumptions about how the player perceives the current version of the product and how they will interact with it. It’s important to collect hypotheses from everyone involved: developers, designers, support, and business folks. After gathering all the hypotheses, you need to identify the common and most important ones that can fit into one study. If the hypotheses turn out to be numerous and about very different things, it makes sense to carry out separate studies.

Here are some examples of hypotheses:

“Players cannot find how to upgrade their character”

“Players don’t understand who is an ally and who is an enemy on the map”

“Players don’t like the dialogue of the characters”

The more specific the hypothesis, the more accurate the questions we’ll ask players, and the better the final results of the study.

Regardless of the method chosen, our research needs a scenario, that is, a document that describes the expectations of respondent behavior and what questions will be asked. A scenario isn’t a fixed template, but an auxiliary document for the session with the respondent, which helps you not miss any important points.

The classic scenario usually includes the following parts:

● The lead-in: introduce yourself to the respondent and outline the rules of the study. Make clear that the test will be recorded, elaborate on NDA restrictions, possible difficulties with prototypes, the importance of candid answers, and so on.

● Getting to know the respondent: establish contact with the respondent and clarify some data with them. At this stage, it’s extremely important to win over the respondent, and let them freely express their opinion. At the same time, this stage is an ideal time to make sure that the respondent has been correctly selected: do they have relevant experience? Are they familiar with relevant games or terminology needed to participate?

● Tasks/questions/topics: the main content of the study. An interview scenario may list key topics and questions for discussion; a playtest scenario lists the set of tasks and hypotheses to be tested.

● Wrap-up: draw conclusions, collect additional feedback, and say goodbye to the respondent.
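A scenario is a document rather than code, but its structure can be sketched as data in order to check one thing mechanically: that every hypothesis is probed by at least one task or question. The `Scenario` class and `uncovered_hypotheses` helper are hypothetical; the hypotheses are the examples given earlier, and the task wording is illustrative.

```python
from dataclasses import dataclass, field


@dataclass
class Scenario:
    lead_in: list[str]
    get_to_know: list[str]
    # Each task or question maps to the hypotheses it is meant to probe.
    tasks: dict[str, list[str]] = field(default_factory=dict)
    wrap_up: list[str] = field(default_factory=list)


def uncovered_hypotheses(scenario: Scenario, hypotheses: list[str]) -> list[str]:
    """Return hypotheses that no task or question in the scenario addresses."""
    covered = {h for probes in scenario.tasks.values() for h in probes}
    return [h for h in hypotheses if h not in covered]


hypotheses = [
    "Players cannot find how to upgrade their character",
    "Players don't understand who is an ally and who is an enemy on the map",
    "Players don't like the dialogue of the characters",
]
scenario = Scenario(
    lead_in=["Introduce yourself", "Recording and NDA", "Prototype may be unstable"],
    get_to_know=["Which games do you play most often?"],
    tasks={
        "Imagine that you need to level up your hero. How would you do it?": [hypotheses[0]],
        "Which units on the map are on your side? How can you tell?": [hypotheses[1]],
    },
    wrap_up=["Anything else you'd like to tell us?", "Thank you and goodbye"],
)
print(uncovered_hypotheses(scenario, hypotheses))
```

Here the check flags the dialogue hypothesis, which no task probes yet.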

The team also needs to decide how much the player should play in order to answer questions of interest.

On average, a first-session test for a mobile game lasts 10–20 minutes, while for more complex PC games it can take up to 1.5–2 hours; within these limits, a single playtest is usually enough.

If the game is multiplayer, it’s important to think through the playtest process. It’s necessary to gather enough people for the game session, and think about replacements or solutions in case someone is unable to start the game or simply doesn’t show up.

It’s better to gather all participants in one space (for example, in a general chat or channel) in order to promptly communicate and solve problems. We like to use Discord for group tests — it’s convenient for communicating with everyone at once and you can separate people into different groups.

If you realize that it will take more than two hours to test all the hypotheses, then you should think about conducting diary research, or dividing the research by features.
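When the hypotheses don’t fit into about two hours, dividing the research by features is essentially a packing problem. A minimal sketch using first-fit decreasing, assuming the team can estimate how many minutes each feature needs; the feature names and durations are hypothetical.

```python
def plan_sessions(feature_minutes: dict[str, int], cap_minutes: int = 120) -> list[list[str]]:
    """Greedily pack features into sessions that each stay within cap_minutes.

    First-fit decreasing: place the longest features first, each into the
    first session that still has room, opening a new session when none does.
    """
    sessions: list[list[str]] = []
    remaining: list[int] = []  # minutes still free in each session
    for name, minutes in sorted(feature_minutes.items(), key=lambda kv: -kv[1]):
        if minutes > cap_minutes:
            raise ValueError(f"{name} alone needs {minutes} min; consider a diary study")
        for i, free in enumerate(remaining):
            if minutes <= free:
                sessions[i].append(name)
                remaining[i] -= minutes
                break
        else:
            # No existing session has room, so open a new one.
            sessions.append([name])
            remaining.append(cap_minutes - minutes)
    return sessions


print(plan_sessions({"onboarding": 40, "crafting": 50, "pvp": 90, "guilds": 30}))
```

A feature that alone exceeds the cap raises an error, which mirrors the advice above: that is the point at which a diary study becomes the better fit.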

At the start of each study, the hottest topic is the audience: who should we invite to the test? How many participants should there be? How much will it cost?

Depending on goals, the degree of prototype readiness, the desires, and capabilities of the team, it’s possible to conduct tests with external respondents or colleagues, friends and acquaintances (friends & family). Conducting research with friends and family is faster and cheaper to organize because we don’t have to pay our respondents.

Meanwhile, developers and designers can provide useful feedback on technical details and share insights from their professional experience. However, it’s important to remember that such feedback doesn’t always reflect the impressions of an inexperienced player. Additionally, the team should be prepared for internal research to produce lower scores and harsher feedback. For external research, we invite real players: the potential target audience could be players of other games if we’re evaluating the new-player experience, or our current players if experienced users’ impressions matter. Selection requirements differ from project to project and should reflect the target audience, for example:

Location

Gender

Age

Platform

Frequency of game sessions

Experience in specific genres or games (or absence of experience)

When conducting playtests, it’s also important to follow technical requirements — if a game or prototype only runs on high-end devices, then you need to look for people with the relevant equipment. You also need to make sure that a respondent has a fast and stable Internet connection for the test.

The number of respondents depends primarily on the design of the study, finances, and the structure of the target audience. If a team wants to evaluate some indicators or features and compare them later, it needs to conduct a quantitative study with at least 60 people.
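The 60-respondent minimum is a practical rule of thumb. One way to see what such a sample buys is the textbook margin of error for a proportion (normal approximation, 95% confidence), a standard formula swapped in here for illustration rather than the method behind the threshold. With 60 respondents and a 50/50 split, the margin is roughly ±13 percentage points.

```python
import math


def margin_of_error(n: int, p: float = 0.5, z: float = 1.96) -> float:
    """Half-width of an approximate 95% confidence interval for a proportion.

    Uses the normal approximation; p = 0.5 gives the widest (worst-case) interval.
    """
    return z * math.sqrt(p * (1 - p) / n)


for n in (15, 30, 60, 120):
    print(n, f"±{margin_of_error(n) * 100:.1f} percentage points")
```

Because the margin shrinks with the square root of n, halving it requires roughly four times as many respondents.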

If it’s important to understand the reasons for player behavior, their motivation and thoughts, then a qualitative study is needed. Each specific target group should include at least four or five people in order to obtain valid data.
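The four-to-five-per-group guideline matches a widely cited model of usability problem discovery by Nielsen and Landauer, in which each participant independently encounters a given problem with probability p. The sketch below uses their often-quoted average of p = 0.31; that value is an assumption that varies by product and task, and the model is swapped in as an illustration, not as the basis for the guideline above. With five participants, it predicts that roughly 84% of problems are seen at least once.

```python
def share_of_problems_found(n_users: int, p: float = 0.31) -> float:
    """Expected share of problems seen at least once by n_users.

    Assumes each user independently hits each problem with probability p.
    """
    return 1 - (1 - p) ** n_users


for n in range(1, 7):
    print(n, f"{share_of_problems_found(n):.0%}")
```

The curve flattens quickly, which is why several small groups (one per target segment) tend to reveal more than one large group.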

Correct selection of the target audience for the game test is one of the most important parts of preparation: for example, in Elden Ring and other souls-like games, the target audience is quite “hardcore” — these gamers like challenges and like to go through games using trial and error. If we decide to test a game’s onboarding process, and we pick only casual players, we’ll surely get negative feedback and lots of misunderstandings, because the first mini-boss will easily kill the player to show how difficult the upcoming player path will be.

But this doesn’t mean that we should only invite hardcore respondents who are used to these types of games. That would also distort the data about the opening section, since experienced players might not notice excessive complexity or missing information, being already accustomed to games like this.

Case study: a multiplayer FPS

The team wanted to test the onboarding process for both newcomers and returning players after a significant interface remake.

When selecting criteria for participants, it was important for us to pick people with relevant interests and with (or without) relevant experience. The team also prioritized its target audience, choosing mainly male players from the two geographical markets where the game is most popular.

We settled on two groups of male players aged 18–35.

Group 1 consisted of eight newcomers (four from each market). These were gamers who actively play online PC shooters (at least 5 hours per week) and had never played the multiplayer FPS we were testing.

Group 2 consisted of eight returning players (again, four from each market). These players had last played the game more than 8 months before the test, so they had not been exposed to the update. Additionally, all of them had reached at least rank 20 (which requires a significant time investment and implies extensive experience with the game).
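Screening criteria like these can be written down as explicit rules, which makes them easier to apply consistently across recruitment platforms. A minimal sketch that encodes the two groups above and fills a quota of four people per group per market; the `Candidate` fields and the market names are hypothetical placeholders.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Candidate:
    gender: str
    age: int
    market: str                    # one of the two target markets
    shooter_hours_per_week: float  # time spent on online PC shooters
    played_our_game: bool
    months_since_last_session: Optional[float] = None
    max_rank: int = 0


MARKETS = {"Market A", "Market B"}  # placeholders for the two real markets


def assign_group(c: Candidate) -> Optional[str]:
    """Return 'newcomer', 'returning', or None if the candidate is screened out."""
    if c.gender != "male" or not 18 <= c.age <= 35 or c.market not in MARKETS:
        return None
    if not c.played_our_game:
        # Group 1: active PC shooter players who have never tried the game.
        return "newcomer" if c.shooter_hours_per_week >= 5 else None
    # Group 2: experienced players who left before the interface update.
    if (c.months_since_last_session is not None
            and c.months_since_last_session > 8 and c.max_rank >= 20):
        return "returning"
    return None


def fill_quotas(candidates: list[Candidate], per_market: int = 4) -> list[tuple[str, Candidate]]:
    """Accept candidates in order until each (group, market) quota is full."""
    counts: dict[tuple[str, str], int] = {}
    accepted = []
    for c in candidates:
        group = assign_group(c)
        if group is None:
            continue
        key = (group, c.market)
        if counts.get(key, 0) < per_market:
            counts[key] = counts.get(key, 0) + 1
            accepted.append((group, c))
    return accepted
```

Accepting candidates in arrival order is the simplest policy; a real screener would also keep a few qualified backups in case someone doesn’t show up.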

Depending on the capabilities of the company, research can be carried out by professionals within the company (UX researchers), trained team members (e.g., designers) or external agencies.

In terms of budget, most of the money is often spent simply on recruiting respondents. The more participants we need, and especially the more specific their requirements, the more expensive and difficult the project becomes.

Here are some estimates based on our experience (a project with simple recruitment, 2022 prices):

Internal research:

Payment for one English-speaking (USA, Europe) respondent per hour: $60. (Recruitment platforms typically charge 50% as a service fee.)

Payment for one respondent from Asia (Japan, Korea, China): about $90–100

Agencies:

USA/Great Britain: $13,000–23,000

Japan/Korea: $25,000–40,000
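For internal research, the per-respondent figures above translate into a quick budget estimate. A minimal sketch, assuming the platform’s 50% fee is charged on top of respondents’ pay and that the Asia figure is also an hourly rate (the list doesn’t say so explicitly); the study size and session length are hypothetical. For 10 respondents in 1.5-hour sessions at $60/hour, that’s $900 in incentives plus $450 in fees, or $1,350.

```python
def internal_recruitment_cost(respondents: int, hours_each: float,
                              hourly_rate: float = 60.0,
                              platform_fee: float = 0.5) -> float:
    """Respondent incentives plus a recruitment platform's service fee.

    Assumes the fee is charged as a percentage on top of the incentives.
    """
    incentives = respondents * hours_each * hourly_rate
    return incentives * (1 + platform_fee)


# Hypothetical study: 10 English-speaking respondents, 1.5-hour sessions.
print(internal_recruitment_cost(10, 1.5))
# Same study with respondents from Japan/Korea/China, taking $95/hour as a midpoint.
print(internal_recruitment_cost(10, 1.5, hourly_rate=95.0))
```

Setting `platform_fee=0.0` approximates recruiting respondents yourself, at the cost of the staff time described below.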

If the budget is tight, you can do the following:

Search for respondents yourself: in this case, you won’t have to pay a commission fee to the platforms, but the process will be slow and will require a dedicated employee (or even several)

Invite friends and coworkers as respondents: friends and family tests have their limitations, but are always much cheaper

Reduce the scale of the study: ask yourself whether you really need to research everything at once with a two-hour playtest, or whether the key hypothesis can be tested in a shorter session. Consider whether it’s important to cover all target audience groups right now; maybe you should concentrate on core or potential players.

During development, testing and improving a game is always worth it. Plus, after release, we can get clear analytics to track what’s happening with players and understand why they churn.

But despite the benefits of UX research, it’s still not a magic bullet. Research can help save time and budget, optimize development, and facilitate decision making, but all the information received must still pass through the team’s expertise filter.

When starting your research, it’s always important to know the questions you’re aiming to answer and how your results will be used. Only good, thought-out preparation (on the part of both researchers and the product team) will allow you to choose the right method and respondents, as well as obtain relevant insights.
