In this first article in a new series, college professor and user researcher Ben Lewis-Evans takes a look at different methods of game user research, offering up a handy guide to different ways you can collect useful information about your game.

April 24, 2012

Author: by Ben Lewis-Evans

This article, and its forthcoming followup, is intended to give a rough idea to developers of several different methods that can be used in games user research.

However, many, many books have been written on research methodology and I cannot cover everything. Therefore these two articles cannot be taken as completely comprehensive.

In the first of the articles I will be covering a few general points about Games User Research and then discussing three methods in some detail: focus groups, heuristic evaluation, and questionnaires.

So, before getting really started, what is games user research in the first place? Well, let's start by comparing it with Quality Assurance (QA). QA is a well-established part of software development, and is often carried out by professionals within a development team. These folks are, generally speaking, aimed at finding bugs and making sure the game runs smoothly.

However, because those working on a project usually carry out QA, it means they have an investment in it, and are familiar with it. This can cause problems when it comes to evaluating the usability and experience of a game. What seems obvious and fun to someone that has been working on a project may be completely alien and frustrating to a new user.

This is where games user research methods come in, a field that is all about the user and their experience of the game -- in particular, the big question, "is it fun?"

To really (over) simplify things, it could be said that QA and test is about the software, and how it functions when dealing with users, and game user research is about the user and how they function when dealing with the software. Notice I say it is just about how the user functions when dealing with the software, and that it is NOT about testing the user (more on that later).

So how is this done? And what is fun, anyway? Well, there has been plenty written on fun already, so let us just say that fun has quite a few dimensions. Fun can be something that is easy to use, but it can also come along with a struggle and a challenge. It can arise from an engaging experience, a compelling experience, or a relaxing one.

All this means fun is a subjective variable, which changes from person to person, and situation to situation. However, due to the emotional component of fun, what can be said is that if your players are having fun, then it is likely they can tell you about it -- if only you know how to ask.

Okay -- I'll get to that. But before I get into the fine details, please bear with me as I outline some general principles to keep in mind:

Get the right players

Whatever methods you choose, make sure you get the right sample. For most methods, this means getting representative users, aka the people that you expect will play your game.

If you have the time, perhaps there is some advantage to getting as wide and large a user group as possible (if you really think that everyone will want to play your game), but given that you are likely to be constrained by time (and money) it is generally best to concentrate on getting as close a sample of the target type of player you are after as possible.

The game is being tested, NOT the user

Secondly, whenever doing this type of research, make sure it is clear to the user that they are not being tested. The research is about improving the game, not the user, so the user shouldn't be made to feel inadequate if they can or cannot do something. In principle it is all valuable information.

This can be hard to do sometimes, as you are the ones designing the game, and it can be uncomfortable to hear others criticising it. But try your best to not be defensive or judgemental (i.e. avoid thinking the problem exists between the keyboard and chair.)

What do you want to know?

Whenever doing research, you should be clear about what you want to know. You will be playing the game yourselves as you work on it, and you should have design documents, so you know how things are supposed to work. So don't just plonk people down with the game and come up with questions on the fly. Work out what areas you think might be problems and know what you want to ask about before it's time to test.

Please note: I am not saying you go out there with preconceptions and already "know" the answers you want; rather I am just making the point that you should be prepared and know what you're looking for. Otherwise, you get a mass of data that may not be of any use to you at all. At the same time, be open to surprises. You never know what might pop up.

Test early and test often

The next point is considered one of the most important by user researchers, and that is to test as early as you feel it is possible to do so. This can be difficult, as it feels bad letting your baby out into the hands of users before it is 100 percent complete. But really, the sooner you can test the better -- test with paper prototypes, for instance!

The primary reason for this is that it is much easier to change the game if you find an issue early in development rather than late. Once you have made the changes, you should test again. That said, it is also a good idea to make sure that the product isn't too buggy; you want to test the experience of playing the game, not the experience of crashing due to bugs.

One extreme example of this approach is the Rapid Iterative Testing and Evaluation (RITE) method, developed by games user researchers at Microsoft. In this method a test is run (usually via behavioural observation -- which will be covered in the second article in this series), and changes are made to the game as soon as problems or issues are detected, before the next participant arrives. This can occur perhaps even after just one user has been tested.

Listen to and act on problems, but not necessarily the solutions

When dealing with your users, you should be open, and listen to the problems they are raising. You can also listen to the solutions they give to those problems -- but they are likely to be less useful to you. You are the game developers, and you know what is possible with the technology, time, and resources you have. The users won't. So observe, do the research, and treat it seriously when it reveals issues, but take suggestions from users as to how to solve the issues with a grain of salt.

Games user research is just another source of data

Often when articles such as this appear online, there is much gnashing of teeth and angst about taking the art out of game design and instituting "design by committee". I can understand this worry; however, much like QA, user research is just another tool to improve your game. It shouldn't dominate your design or suppress your artistic talent; rather, if done correctly, it should augment your talent and give you new insight.

Are you still with me? Great. Here, then, are the research methods that I will cover, in varying degrees of depth, in the rest of this document: focus groups, heuristic evaluation, and questionnaires/surveys.

Focus Groups

This method can be something of a dirty word (and is definitely part of that whole "design by committee" problem). So let us deal with it first and get it out of the way.

You are probably familiar with focus groups, even if you've never seen them used in person. Basically this is where you get a bunch of people, have them play your game, and then put them in a room to talk about it. They can be free to talk about what they like and what they didn't like, but you also have a facilitator in the room who can ask specific questions of interest.

This process can also be used quite early on in development, where instead of getting people to play the game, you give a presentation or talk about ideas for the game and get feedback on that.

The advantages of focus groups are that they involve a lot of people, so you can get more feedback. They can also be somewhat efficient, as everyone is together in one place, and the facilitator can ask follow-up questions. So if someone mentions they like or dislike something in particular, you can gain a bit more detail on why.

At the same time, if you are not careful, focus groups can get away from you and end up wasting far too much time. To avoid this you really need a good facilitator to run the focus group. The facilitator has to be strong enough to guide the conversation to areas that are useful, but must not dominate the discussion.

Probably the biggest risk with a focus group, and one of the reasons why focus groups are not often used, is that just one or two members of the focus group can dominate the discussion. Due to group pressure, you may not hear from other people who have valuable insight into the game. Focus groups also have a tendency to get more into discussing solutions for issues rather than just the issues themselves. This is not what you want.

Finally, focus groups are a subjective method, and all you have to go on is what people say -- and as much as we like to judge people on the "attitudes" they hold, and think that they predict behaviour (they usually don't), we all know that what people say is not always what they actually do.

Pros

More people can mean more feedback (although see cons...)

Gets everyone together in one place

Allows for follow-up questions

Can be useful when discussing concepts

Cons

A good facilitator is required

Strong voices may take over and reduce feedback overall

Too many solutions, not enough issues

What people say is not always (or even often) what they do

Heuristic Evaluation

Heuristic evaluation is where you get an expert (or experts) in games user research, get them to play your game, and then they evaluate it on a set of criteria (heuristics). Kind of like a scientific game review... Kind of.

To do this, the expert(s) will use a list of heuristics, which are essentially rules or mental models, and give you feedback on whether your game fits these heuristics, and where problems might come up. These heuristics can vary, but here is a selection of some possible heuristics listed in a 2009 article [PDF link] by Christina Koeffel and colleagues:

Are clear goals provided?

Are the player rewards meaningful?

Does the player feel in control?

Is the game balanced?

Is the first playthrough and first impression good?

Is there a good story?

Does the game continue to progress well?

Is the game consistent and responsive?

Is it clear why a player failed?

Are there variable difficulty levels?

Are the game and the outcome fair?

Is the game replayable?

Is the AI visible, consistent, yet somewhat unpredictable?

Is the game too frustrating?

Is the learning curve too steep or too long?

Emotional impact?

Not too much boring repetition?

Can players recognize important elements on screen?

The article itself lists over 29 of these heuristics, and goes into much more detail than I have provided here, so I recommend reading it if you have the time.

The good thing about heuristics, in my opinion, is that even if you aren't an expert, they can provide a list of things for you to think of when you look at your game. For instance, does it provide enough feedback to players that their actions are affecting the world? Does it force players to hold the controller in an awkward fashion? And so on. Again, this may seem like common sense stuff, but it really is amazing how often so-called "common sense" is not common at all.

Now, one obvious advantage to heuristic evaluation is that you only need to use a small number of experts (just one in some cases), and being experts they know what they are talking about. This also leads to the problem that you do need experts, and where do you find those? Plus have you found the right one(s)? The types of heuristics used can vary by expert, and of course should fit your type of game -- so this is obviously important.

Also sometimes experts can be a bit too expert and miss stuff that might be a problem for novices. This is because as we become more experienced at something we no longer need to consciously consider everything that we perceive and do, whereas someone who is still learning is still thinking about what they are doing all the time. This is why generally if you want to see something done well you watch an expert, but if you want to learn how to do something it is often best to ask a novice.

Pros

Smaller number of people needed

Relatively fast turn around

Experts are expert

Cons

Where do you find experts?

Did you find the right one?

Experts can be too expert

Questionnaires

I am sure you know what a questionnaire is, but do you know how to design and use one correctly? Mountains of books have been written on this, but hopefully I can make at least some important points clear.

First of all, when do you use questionnaires? Well, they are usually used to evaluate subjective views about your game, particularly value judgements. This may vary from specifically asking players about their favorite weapon to open-ended questions asking for general comments on the experience.

Questionnaires can be given to players during the game, which means the experience is fresh, but this risks interrupting the flow of the game (if possible, find natural down times to ask). They can also be used after a gameplay session is over. The big advantage of questionnaires is that they can be given to many people, and as such you can end up with lots of nice data to examine (in theory).

Before I go into the detail of constructing your own questionnaire, there are some pre-existing questionnaires out there aimed at evaluating the fun of gameplay experiences.

Examples of such questionnaires are the Game Experience Questionnaire (GEQ) for examining gameplay experiences and the affect grid [PDF link] or the manikin system [PDF link] for rating emotions. These pre-existing questionnaires can be great, because they are usually well-written and reliable. However, they also have a tendency to be more academic in nature, and care should also be taken that they fit your game, so modify them if necessary.

So how do you go about designing your own questionnaire? Here are four steps that I hope can help you.

Step 1. Work out what you want to know

As stated already, all of these methods require that you know what information you are after. But this is extra important when it comes to questionnaire design. You usually don't get any chance to follow up on people's answers, so you want them to be as clear as possible, and for the information you gain to be what you are after (and neither too little nor too much!)

So, brainstorm, make lists, do whatever is best for you in terms of getting down what you want to know. Then cut it down to only what you really, really need to know. Be focused!

A mistake that novice researchers often make is to ask for everything simply because the opportunity is there. However what this results in is a mess of data that will take forever to be analyzed, and may not produce a meaningful result. You don't really want your questionnaire taking more than 15 to 20 minutes to answer (this is not a target, by the way, but a maximum).

Step 2. Design the content

The design part is the meat of the process and can be further broken down into a few things to consider.

Questions or statements? Do you want people to answer questions, or rate statements? This is pretty straightforward but still important. Basically, questions are good for gaining information, for example:

How challenging was the Horrible Bog Beast?

Very easy   1   2   3   4   5   6   7   Very hard

Questions can also be worded in the form of an instruction. For example, "Rank the levels you just played in order from 1-6, where 1 is the one you enjoyed the most, and 6 is the one you enjoyed the least." It's effectively just like asking a question about the ratings for each level individually.
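To illustrate how answers to a ranking instruction like this might be combined across players, here is a minimal Python sketch. The level names, the sample answers, and the `mean_ranks` helper are all hypothetical, and averaging ranks is only one simple way to summarize them, not a method prescribed by this article.

```python
from statistics import mean

def mean_ranks(rankings):
    """Average the rank each level received across all players.

    `rankings` is a list of dicts mapping level name -> rank,
    where 1 means "enjoyed the most".
    Returns (level, mean rank) pairs, most enjoyed first.
    """
    levels = rankings[0].keys()
    averages = {level: mean(r[level] for r in rankings) for level in levels}
    return sorted(averages.items(), key=lambda item: item[1])

# Hypothetical answers from three players ranking three levels.
responses = [
    {"Swamp": 1, "Castle": 2, "Caves": 3},
    {"Swamp": 2, "Castle": 1, "Caves": 3},
    {"Swamp": 1, "Castle": 3, "Caves": 2},
]

ordered = mean_ranks(responses)
for level, avg in ordered:
    print(f"{level}: mean rank {avg:.2f}")
```

A lower mean rank means the level was generally enjoyed more. With only a handful of players, treat the ordering as a hint rather than a firm result.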

On the other hand, getting people to rate statements is more typically used to assess value judgments or agreement with ideas, so an example would be something like:

The Horrible Bog Beast is an interesting enemy to encounter.

Strongly agree   1   2   3   4   5   6   7   Strongly disagree

Either questions or statements are fine; however, use them where they are best, and don't switch between the two types too often.

Language use. It is incredibly important that you use clear, everyday language. Avoid jargon. This is vital because you want to be sure that the people you are testing understand what you are asking. Many people will just answer anyway, even if they don't understand a question (be honest -- I am sure you have done it) and the data you get may not be useful at all. So be blunt, be direct, and ask for exactly what you want to know.

When you offer alternative answers to your questions (for example if they have to select from a list of consoles they own), these should be relatively exhaustive. So in other words there shouldn't be any alternatives that you haven't thought of. Adding an "other" where additional options can be filled in can help here, but it is best if this "other" option isn't used too often.

You should also be careful not to ask what is essentially the same question, but in a different way. Remember the goal is to keep the number of questions down. Also avoid asking questions that are phrased negatively. So "I like the jumping mechanic" and a gradient from agree to disagree rather than "I don't like the jumping mechanic". In the case of negatively-phrased questions, people filling your questionnaire have to select "agree" to disagree, and "disagree" to agree. It seems silly, but this can confuse people.

Also avoid leading, double-barreled, and loaded questions. A leading question is one where the person filling it in is led or biased to give a certain answer, e.g. "This game was fun. How fun was it?" This example is, of course, over the top, but you would be surprised how often leading questions can slip into questionnaires, so just try and keep your wording direct, and neutral. Don't assume. Ask!

Double (or multiple) barreled questions ask about more than one thing at a time. Here's an example from outside the world of games -- from a national referendum held in New Zealand:

"Should there be a reform of our justice system placing greater emphasis on the needs of victims, providing restitution and compensation for them and imposing minimum sentences and hard labour for all serious violent offences?" YES/NO

As you can see, there are at least six questions here: should there be a reform, should it place greater emphasis on the needs of victims, should it provide restitution and compensation, should it impose minimum sentences, should it impose hard labour, and should it be for all serious violent offences? But you only get to say yes or no once. As I say, this question was put to the whole of New Zealand, and really ruined my first ever experience of voting.

Loaded questions are ones that make moral judgments or make assumptions that are unfounded; an example of this type of question also comes from a New Zealand referendum where it was asked:

"Should a smack as part of good parental correction be a criminal offence in New Zealand?" YES/NO

This question is loaded in that it uses a moral term like "good". It is also ill-defined, as it uses the term "good parental correction" (whatever that is), and finally it is misleading in that if you are anti-smacking you have to say yes (agree) and if you are pro-smacking you have to say no (disagree).

Closed or open? Questions can be either open, as in the player can say whatever they like, or closed, where there are only a few limited options to select.

Open questions allow for richer data to be collected, as they let players give as much feedback as they want. However, players can also give as little as they want, and without direction the answers are often vague.

One way to get around this if you do use open questions is to make sure you have time to read them before the end of the session, in case you want to ask for clarification on any points people bring up. However this is difficult if the questionnaire is being given remotely, such as when it is being used via email or online.

Closed questions on the other hand let you have much tighter control on the answers your participants give, and come in several flavors (scales):

Dichotomous: This is for simple either/or questions, so it is usually used to collect answers like yes/no or true/false. It is nice, direct, and precise. However, it is also not very data rich. So generally speaking such questions are used just to collect demographics, or when you really want to force a choice between two options.

A dichotomous choice in Diablo III

Continuous: This is where you ask people to give ratings along a continuum or sliding scale (like when you are doing face customization in games). The big advantage of a continuous scale is that it gives a lot of resolution. However that much resolution is hardly ever needed (is there really a difference between a rating of 96.43 and a 93.21?).

A (semi) continuous scale in Saints Row: The Third

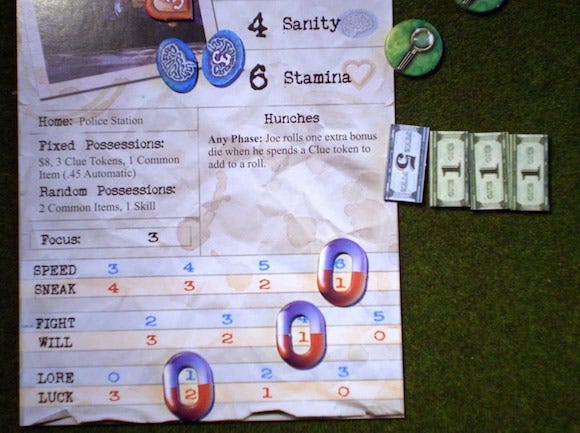

Interval: This is probably the type of scale we are most familiar with. Here, you give a rating along some sort of scale with each step being separate/discrete from the other (rather than continuous).

This does lower the resolution of the scale when compared to a continuous scale, but it is much clearer in terms of allowing for comparison of results. In my opinion, a 1-7 scale is probably the best way to go (over say a 1-5, or something larger) as it allows for some good resolution, while not being too broad. Others may disagree.

Several interval scales in Arkham Horror

If an interval scale is used, then it can also be broken down further into several types: numeric/categorical, Likert, or semantic.

A numeric scale is simple; it just asks for a number, and is used often to rank things.

You have probably seen Likert scales many, many times. They are usually used when you want to see how much someone agrees or disagrees with a certain statement, e.g. 1-7 where 1 is strongly disagree and 7 is strongly agree.

Semantic scales are when you want to simply ask for a rating related to something or a value judgment, e.g. 1-7 where 1 is poor and 7 is good.

Each of these types of scales can be used where necessary, although generally speaking, it is best not to mix scale types too much. Otherwise people filling in the questionnaire might get confused and provide a rating that they think is using an agree to disagree dimension but is actually asking for poor to good.

Finally, with interval scales, you can either make them unipolar, where you ask for varying levels of one variable, e.g. 1-7 where 1 is not very exciting and 7 is very exciting, or bipolar, where you contrast two different variables, e.g. 1-7 where 1 is very boring and 7 is very exciting. Unipolar scales zoom in on one area a bit better, but bipolar scales give people filling in the questionnaire more room to express their opinions. As before, try not to mix these styles up within the same questionnaire too often, or at all.
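One way to keep an eye on the "don't mix scale types" advice is to write the questionnaire down as data and check it automatically. The sketch below is a hypothetical illustration in Python: the `Question` class, its field names, and the `scale_mix_warnings` helper are assumptions made for this example, not part of any survey tool.

```python
from collections import Counter
from dataclasses import dataclass

@dataclass
class Question:
    text: str
    scale_type: str   # "numeric", "likert", or "semantic"
    low_label: str    # label shown at the lowest point
    high_label: str   # label shown at the highest point
    points: int = 7   # number of steps on the scale

def scale_mix_warnings(questions):
    """Return warnings if the questionnaire mixes scale types or lengths."""
    warnings = []
    types = Counter(q.scale_type for q in questions)
    if len(types) > 1:
        warnings.append(f"Mixed scale types: {dict(types)}")
    if len({q.points for q in questions}) > 1:
        warnings.append("Scales use different numbers of points")
    return warnings

# Hypothetical draft questionnaire.
draft = [
    Question("The Horrible Bog Beast is an interesting enemy to encounter.",
             "likert", "Strongly disagree", "Strongly agree"),
    Question("How would you rate the jumping mechanic?",
             "semantic", "Poor", "Good"),
]

warnings = scale_mix_warnings(draft)
for warning in warnings:
    print(warning)
```

A warning here is not necessarily an error, since mixing is sometimes justified. It is simply a prompt to double-check that respondents will not confuse an agree/disagree scale with a poor/good one.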

Step 3. Put it together

You have your questions, so now's the time to put it together. First, work out what medium you are going to use. Will it be collected via a computer (say, through a web interface), or via paper? Collecting via a computer is preferable if possible, as it means that there will be less data entry later on; however, sometimes the portability and ease of paper can win out.

Give yourself plenty of time to do this, and if you are planning on using a computer-based survey, there are many companies online that offer this kind of service. In particular I hear that SurveyMonkey is a popular choice, although I have not used it myself.

You should next consider what order to put your questions in. Generally speaking I advise putting the easy questions at the start. This gets people rolling, and as long as your questionnaire isn't too long, they should be more prepared to tackle the bigger questions you want to ask later.

Then try and cluster the remaining questions in a sensible fashion, such as by subject, or what they refer to within the game. Don't ask a bunch of questions about the weapons, then move on to the boss, and then go back to the weapons.

Also check to see if answering some questions excludes other questions or could cause new questions to be asked later on. If so, make sure the questionnaire actually skips or adds those questions. In other words, if you ask "Do you own an Xbox" yes/no, make sure later on the people who answered "no" aren't asked to list the top ten Xbox games that they own.
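For a computer-based questionnaire, this kind of question skipping can be expressed as a simple condition attached to each question. The following Python sketch is only an illustration under assumed names: the question ids, the `show_if` field, and the `visible_questions` helper are hypothetical, and real survey services typically offer their own built-in way of doing this.

```python
# Hypothetical questionnaire: a question may carry a "show_if" condition
# naming an earlier question id and the answer that makes it relevant.
QUESTIONS = [
    {"id": "owns_xbox", "text": "Do you own an Xbox?"},
    {"id": "top_xbox_games",
     "text": "List the top ten Xbox games that you own.",
     "show_if": {"owns_xbox": "yes"}},
    {"id": "favorite_weapon", "text": "Which weapon was your favorite?"},
]

def visible_questions(questions, answers):
    """Return the ids of questions that should be shown given the answers so far."""
    visible = []
    for question in questions:
        condition = question.get("show_if", {})
        # Show the question only if every required earlier answer matches.
        if all(answers.get(qid) == wanted for qid, wanted in condition.items()):
            visible.append(question["id"])
    return visible

print(visible_questions(QUESTIONS, {"owns_xbox": "no"}))
print(visible_questions(QUESTIONS, {"owns_xbox": "yes"}))
```

Someone who answers "no" never sees the Xbox games question, while someone who answers "yes" sees all three.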

Step 4. Test it

No plan survives its first contact with the players, so first test your questionnaire yourself. If you are using a computer-based questionnaire, make sure the data that comes out the end looks okay. Give it to a few other people (preferably people who are not too familiar with the game, or are not designers) and ask them to fill it in (with you outside of the room so you can't help them), and then ask for their comments on the clarity of the questions. Essentially, user test it, and then change anything that might be wrong (and then test again...) This is a great deal of work, I know, but once you have a good questionnaire, you can possibly use it again in the future (or at least cannibalize it for juicy parts).
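Checking that "the data that comes out the end looks okay" can be partly automated. Below is a minimal Python sketch that scans an exported CSV for missing or out-of-range ratings. The column names, the sample data, and the `find_bad_ratings` helper are hypothetical, and the sketch assumes every rating column uses a 1-7 scale.

```python
import csv
import io

def find_bad_ratings(rows, rating_columns, low=1, high=7):
    """Return (row number, column, problem) for every unusable rating."""
    problems = []
    for number, row in enumerate(rows, start=1):
        for column in rating_columns:
            raw = (row.get(column) or "").strip()
            if raw == "":
                problems.append((number, column, "missing"))
                continue
            try:
                value = int(raw)
            except ValueError:
                problems.append((number, column, f"not a number: {raw!r}"))
                continue
            if not low <= value <= high:
                problems.append((number, column, f"out of range: {value}"))
    return problems

# Hypothetical export from a pilot run with three testers.
sample = (
    "player,bog_beast_difficulty,bog_beast_interest\n"
    "p1,5,6\n"
    "p2,,4\n"
    "p3,9,seven\n"
)

rows = list(csv.DictReader(io.StringIO(sample)))
problems = find_bad_ratings(rows, ["bog_beast_difficulty", "bog_beast_interest"])
for problem in problems:
    print(problem)
```

Running a check like this on pilot data from a few testers can reveal problems with the form itself, such as a question that people keep skipping, before the questionnaire goes out to real players.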

Pros and Cons

There is much more that could be said, but perhaps this is already too much for a "primer" -- so I will move on. The best thing about a questionnaire is that you are asking the same questions to everyone, so you get consistent quantifiable data that you can compare between people.

However they lack follow-up, in that you can't ask people why they selected a certain rating, they are not objective, and you do need quite a few people if you want to draw completely solid conclusions from them. That said, even when testing with just a few people, providing a questionnaire can give you some ideas about what those individuals thought.

Pros

Consistent

Quantifiable

Relatively quick to administer

Can be used on a large scale

Cons

Can lack follow-up

Not objective

Really at their best with large sample sizes

It can take a while to put together a good questionnaire
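As a small illustration of what "consistent, quantifiable data" can look like in practice, the Python sketch below summarizes the answers to a single 1-7 question. The ratings are invented, the `summarize` helper is hypothetical, and reporting the median plus the full spread of answers is just one reasonable choice among several.

```python
from collections import Counter
from statistics import median

def summarize(ratings, points=7):
    """Summarize answers to one interval-scale question."""
    counts = Counter(ratings)
    return {
        "n": len(ratings),
        "median": median(ratings),
        # How many players chose each point on the scale, from 1 upward.
        "distribution": [counts.get(p, 0) for p in range(1, points + 1)],
    }

# Hypothetical answers to "How challenging was the Horrible Bog Beast?"
bog_beast_ratings = [5, 6, 6, 7, 4, 6, 2]

summary = summarize(bog_beast_ratings)
print(summary)
```

Looking at the whole distribution, rather than a single average, makes it easier to spot a split opinion, for example a boss that half the players find trivial and the other half find impossible.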

Hopefully that is more than enough for this first article. While again this article is in no way comprehensive, I hope it has proven to be useful. In the upcoming part of this rough primer, I will be covering interviews, observational methods, game metrics and biometrics.

Finally, if you are interested in games user research or work in the area, then please consider checking out the IGDA Special Interest Group for Games User Research (GUR-SIG) on LinkedIn (just search for the group). It is a great place to get together and discuss GUR with others working in or around the industry.
