Featured Blog | This community-written post highlights the best of what the game industry has to offer.

Balancing-optimizing a game's design to minimize the entry barrier for new players and maximize retention / engagement is an issue of growing importance. Balancing is especially important in PVP games, where player experiences are highly interdependent.

One issue of increasing importance to game developers is understanding how elements of a game’s design, like the rules of play, can be manipulated to optimize a particular feature of a game’s return (output), such as player enjoyment.

Old school

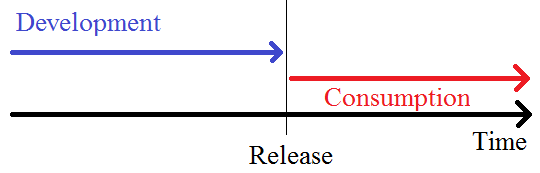

In the past (over a decade ago), this question was typically asked as an afterthought, post release. The production process was fairly linear, offering little opportunity to internalize player feedback and incorporate it into the game’s design during production. That is, there was no period in which the development of the game coincided with the players’ consumption of it.

The process essentially entailed making a game, hoping that you had correctly guessed what your target audience wanted, and then observing whether it succeeded or failed ex post: a one-way street that cannot be iterated upon unless you are making a sequel or a series in the same genre. A naïve simplification of the “old school” development process can be summarized as follows:

You develop the game, run some front-end marketing to get the name out and convert users to purchase (or, if F2P, to play), engage users with a rewarding experience, and, hopefully, retain them long enough to cash in on additional exposure from “referrals” by current players and media content.

The development feedback loop: new school

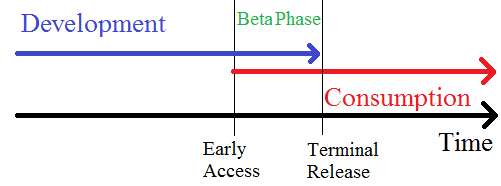

When games are released to the public with early but preliminary access to an unfinished product in the alpha and beta phases of development, there is an important period in which the production timeline (specifically, development) intersects with the players’ consumption timeline.

This phase provides an important opportunity: data from player consumption (playing the game) can be internalized in production and development decisions to shape future consumption. Despite the way the diagram has been drawn, this phase sometimes continues for the entire life of the game. Because the industry norm is increasingly the continuous beta release model, many elements of a game can now be altered in real time during production, giving developers more flexibility to change some (but not all) parameters of the game environment in an effort to give players exactly what they want.

This may be as easy as changing a few lines of code to control, externally, how resources are distributed on a particular PVP map to increase interaction. On the other hand, it may be much more difficult to alter the core rules of the game (e.g., stat/attribute systems, the terrain generation engine, etc.) without being highly invasive to the user experience. This highlights an important trade-off faced by developers who use player analytics to optimize gameplay: more precision in the data usually comes at the cost of added computational complexity and, more importantly, invasiveness into a player’s experience, so it should be approached with some caution (but that is a topic for another blog post).
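As a rough illustration of controlling a map’s resource distribution “externally,” here is a minimal sketch, assuming the tunables live in a JSON document the server can reload without shipping a client patch. The config keys (`map`, `resource_spawn_weights`) and the functions `load_spawn_weights` and `pick_spawn_zone` are hypothetical, not taken from any particular engine.

```python
import json
import random

# Hypothetical live-tunable config. In a real game this document might be
# fetched from a config service so designers can change it without a patch.
LIVE_CONFIG = """
{
  "map": "canyon",
  "resource_spawn_weights": {"north": 1.0, "center": 3.0, "south": 1.0}
}
"""


def load_spawn_weights(raw_config: str) -> dict[str, float]:
    """Parse the tunables and return a zone -> relative spawn weight mapping."""
    weights = json.loads(raw_config)["resource_spawn_weights"]
    if any(w < 0 for w in weights.values()) or sum(weights.values()) == 0:
        raise ValueError("weights must be non-negative and not all zero")
    return weights


def pick_spawn_zone(weights: dict[str, float], rng: random.Random) -> str:
    """Choose a zone with probability proportional to its weight."""
    zones = list(weights)
    return rng.choices(zones, weights=[weights[z] for z in zones], k=1)[0]


weights = load_spawn_weights(LIVE_CONFIG)
rng = random.Random(7)
counts = {zone: 0 for zone in weights}
for _ in range(10_000):
    counts[pick_spawn_zone(weights, rng)] += 1
print(counts)  # "center" should receive roughly 3/5 of the spawns
```

Raising the `center` weight concentrates resources where players are more likely to meet, which is the kind of small, interaction-increasing tweak described above; the numbers are purely illustrative.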

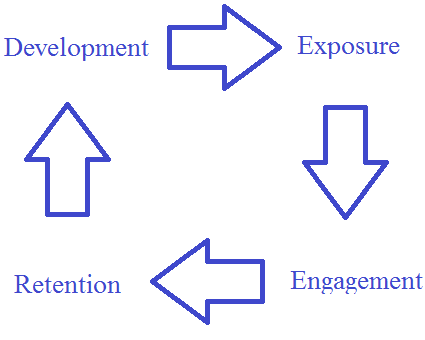

Savvy developers have correctly identified that the continuous beta release model affords them a cyclical feedback loop between development, exposure, conversion, and retention. When new information about player preferences and behaviors becomes available, it can be internalized and conditioned upon (often referred to as a Bayesian approach) to alter the variable aspects of development in a more precise and deliberate way, further improving exposure, engagement, and retention. The process of making a game therefore never really reaches a terminal state. (Again, this is a naïve simplification of the process; I’ve omitted some steps, such as conversion to monetization.)
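To make “conditioning on new information” concrete, here is a minimal sketch of a Beta-Binomial update, one common Bayesian model for a rate such as the win rate of a particular loadout. The class name `BetaBelief`, the uniform prior, and the match batches are illustrative assumptions, not figures from this post.

```python
from dataclasses import dataclass


@dataclass
class BetaBelief:
    """Beta(alpha, beta) belief about an unknown win rate."""
    alpha: float = 1.0  # prior pseudo-count of wins (uniform prior)
    beta: float = 1.0   # prior pseudo-count of losses

    def update(self, wins: int, losses: int) -> "BetaBelief":
        """Condition on newly observed matches (conjugate Beta-Binomial update)."""
        return BetaBelief(self.alpha + wins, self.beta + losses)

    @property
    def mean(self) -> float:
        """Posterior mean win rate."""
        return self.alpha / (self.alpha + self.beta)


# Each beta patch cycle folds new (hypothetical) match data into the belief.
belief = BetaBelief()
for wins, losses in [(60, 40), (55, 45), (58, 42)]:
    belief = belief.update(wins, losses)
    print(f"estimated win rate: {belief.mean:.3f}")
```

In a symmetric matchup, a posterior mean that stays well above 0.5 after several cycles would flag the loadout as a candidate for tuning in the next turn of the feedback loop.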

The remainder of this article focuses on one particular way design optimization can take advantage of this feedback loop: balancing.

Why balancing is important

Players (and people in general) tend to exhibit some preference for fairness (or equity). A well-balanced game acknowledges this preference in order to maximize exposure, engagement, and retention, effectively reducing the barrier to entry for newer players and maintaining (at least some illusion of) automated fairness throughout gameplay. A first experience with a game that offers no equitable chance at success is sure to deter entry and hurt retention, and it is this first-play experience that tends to reveal whether your game is balanced in a way that actually lowers the barrier to entry for newer players.

For non-social games, balancing may be as simple as making sure that NPCs don’t put too much of a hurting on a player (or vice versa!). With social games played with and against (many) real, non-AI people, however, balancing becomes much more complicated: one must account for players’ heterogeneous preferences for social interaction, which depend jointly on deterministic elements of the game environment (which devs can control) and on unpredictable human actions between players (which devs cannot). Although this may seem like a daunting task, a few key rules of thumb can help balance the experience offered to your PVPers.

Equal opportunity doesn’t have to mean less variation

Many academic studies provide strong evidence that players (and people in general) have a preference for equity and fairness. While balancing ensures some degree of fairness, it is maintaining the illusion of fairness that is key to reducing the barrier to entry for newer players. “All men are created equal…” may overstate the rule of thumb to follow when starting everybody off on a level playing field. You don’t want to go overboard: giving everyone the same weapons, appearance, or capabilities can make for very boring interaction, especially for veteran players who want to differentiate themselves as such. This highlights another important trade-off faced by developers: preserving equity between players vs. creating a compelling experience for your target audience.

Starting players off with equal potential across capabilities doesn’t mean that we cannot allow players to self-select to create variation early on, provided that:

1) the choices of the variants are equally available to all players, and/or
2) variants are randomized across all players using equivalent probability distributions, so that all players face the same fundamental uncertainty.

We turn to issues related first to 1) and then to 2).

Avoid pay-for-power

A wise man once said (no reference, sorry), if it looks like a duck and quacks like a duck, then it’s probably not a horse. An important element of balancing is limiting players’ ability to create inequities purely by spending money, which creates asymmetries in the choices available to players with different budgets and violates condition 1) above. Even schemes that are not explicitly pay-for-power but seem like it (experience multipliers, etc.) should be avoided for the same reason. This point may be of second-order importance if your design objective is primarily revenue maximization through optimal monetization mechanisms, with little regard for the actual gameplay experience, but don’t ignore how interrelated these optimization goals are. If your goal is to retain n00bs and convert them into thoroughly engaged veterans, this is an important balance-monetization trade-off. My own philosophy of game design is to focus first and foremost on designing an engaging and fun experience. When monetary objectives are prioritized, design typically suffers from lost degrees of freedom. If a game is engaging, the money will come, and monetization should be a small, secondary concern. Stick to selling aesthetics, where real variation that doesn’t create gameplay inequities can easily be monetized. We now return to point 2) above.

Masking inequities with randomization and leveling the playing field with variation

If we (you and I) flip a fair coin and I let you call it in the air (the loser pays the winner $1), then before the coin is flipped, you and I both face the same fundamental probability ex ante. In expectation, we each break even, since (probabilistically) you win half the time and I win the other half. However, after the coin is flipped just once, there is a clear winner and a clear loser ex post (after the fact): someone had to pay the other $1, and that is what is observed. Many may say that the coin flip is not a balanced (equitable) way to allocate, because after the fact someone gained and someone lost. However, there was no inequity before the fact: we both faced the exact same coin flip in expectation.
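The coin-flip argument can be checked numerically. The sketch below assumes a fair coin and a $1 stake, and the helper names `expected_payoff` and `flip` are illustrative. It shows that each player’s ex ante expected payoff is zero, while every individual flip still produces a winner and a loser ex post.

```python
import random

STAKE = 1  # dollars paid by the loser to the winner


def expected_payoff(p_win: float = 0.5, stake: int = STAKE) -> float:
    """Ex ante expected payoff for one player before the coin is flipped."""
    return p_win * stake - (1 - p_win) * stake


def flip(rng: random.Random) -> tuple[int, int]:
    """Ex post payoffs (you, me) for a single fair flip."""
    you_win = rng.random() < 0.5
    return (STAKE, -STAKE) if you_win else (-STAKE, STAKE)


rng = random.Random(42)
single = flip(rng)
print("one flip:", single)  # always one winner and one loser

n = 100_000
your_total = sum(flip(rng)[0] for _ in range(n))
print("ex ante expected payoff:", expected_payoff())
print("average payoff over many flips:", your_total / n)
```

The average over many flips drifts toward the ex ante value of zero, even though no single flip ever ends in a tie.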

Some games use a “luck” attribute to layer randomization on top of outcomes: rather than explicitly paying for a consumable or an attribute, you pay for the likelihood that an attribute fluctuates in a helpful but somewhat random fashion. If you purchase a “luck” booster, your hits become 20% more likely to do damage. Some gamers are risk averse enough to make this purchase (since it resolves some uncertainty, like an insurance policy), but beware the effect it has on other players, who feel the wrath of the inequity. There are cases where variations of “luck” can be implemented to allow “beneficial inequities” to arise (from a balancing perspective). For example, randomizing damage with luck allows for chance moments where a weaker player can deal a death blow to a more advanced player, similar to the blue turtle shell phenomenon discussed in a later section (although the blue shell is more systematic than random). If you’re forced to explicitly allocate advantages to players exogenously (where the players don’t determine the allocation), try to use randomization to mask the inequities that result ex post.
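Here is a minimal sketch of the luck mechanic described above. It assumes that “20% more likely” means a 20% relative increase in hit chance (the post doesn’t specify), and all numbers and function names are hypothetical. It also shows how randomized critical hits give a weaker player a small but real chance at a death blow.

```python
import random


def hit_probability(hit_chance: float, luck_multiplier: float = 1.0) -> float:
    """Chance that an attack lands; a luck booster scales it, capped at 1."""
    return min(1.0, hit_chance * luck_multiplier)


def death_blow_probability(damage: float, target_hp: float, hit_chance: float,
                           crit_chance: float, crit_multiplier: float,
                           luck_multiplier: float = 1.0) -> float:
    """Exact chance that a single attack kills the target outright."""
    p_hit = hit_probability(hit_chance, luck_multiplier)
    if damage >= target_hp:
        return p_hit                # any landed hit is lethal
    if damage * crit_multiplier >= target_hp:
        return p_hit * crit_chance  # only a landed critical hit is lethal
    return 0.0


def attack(damage: float, hit_chance: float, crit_chance: float,
           crit_multiplier: float, luck_multiplier: float,
           rng: random.Random) -> float:
    """Simulate one randomized attack and return the damage dealt."""
    if rng.random() >= hit_probability(hit_chance, luck_multiplier):
        return 0.0  # missed
    return damage * crit_multiplier if rng.random() < crit_chance else damage


# Hypothetical matchup: a new player (30 damage) attacks a veteran with 100 HP.
args = dict(damage=30, hit_chance=0.5, crit_chance=0.05, crit_multiplier=4.0)
p_plain = death_blow_probability(target_hp=100, **args)
p_lucky = death_blow_probability(target_hp=100, luck_multiplier=1.2, **args)
print(f"death-blow chance without booster: {p_plain:.3f}")
print(f"death-blow chance with booster:    {p_lucky:.3f}")

rng = random.Random(1)
trials = 200_000
kills = sum(attack(rng=rng, luck_multiplier=1.2, **args) >= 100
            for _ in range(trials))
print(f"simulated with booster: {kills / trials:.3f}")
```

The same booster that gives its buyer a higher kill chance is exactly what the opponent experiences as inequity, which is why the text above urges caution with purchasable luck.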

More experienced players in PVP games have an advantage over newer players in knowing the details of particular map locations and other static (non-changing) features of a game. Randomizing or varying as many of these elements as possible lets developers level the playing field in this regard. Spawning players in random locations, or randomly among a varied set of predetermined locations, removes the advantage of players who expect to respawn in a systematic fashion, an advantage that a first-time player would not have.
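A minimal sketch of spawning randomly among predetermined locations follows. The coordinates in `SPAWN_POINTS` and the function `choose_spawn` are hypothetical; the point is only that every player, new or veteran, draws from the same uniform distribution over free spawn points.

```python
import random

# Hypothetical predetermined spawn points for one map, as (x, y) coordinates.
SPAWN_POINTS = [(10, 12), (85, 20), (50, 90), (15, 70), (78, 65)]


def choose_spawn(occupied: set[tuple[int, int]],
                 rng: random.Random) -> tuple[int, int]:
    """Pick uniformly among free predetermined spawn points.

    Every player faces the same distribution, so memorizing a fixed
    spawn order gives no advantage.
    """
    free = [p for p in SPAWN_POINTS if p not in occupied]
    if not free:
        raise RuntimeError("no free spawn points")
    return rng.choice(free)


rng = random.Random()
print(choose_spawn(occupied={(10, 12)}, rng=rng))
```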

In many PVP games, developers can match players using sets of predetermined criteria. When it comes to balancing, the easiest heuristic to use (given network restrictions) is to match players from a queue into a game randomly. A commonly applied refinement of random matching assigns players to a particular server (or set of servers) according to geographic location. However, in some instances it could also be optimal to match particular players with one another based on experience or even skill (assuming we have adequate metrics for either), or to use matching to filter out socially toxic players who would otherwise drive others away. As a newbie, you definitely don’t want to be thrown into a match with experienced veterans who will eat you alive. Early on, it is important to give newly exposed players the opportunity to win. If players are generally risk averse, then losing tends to “hurt” psychologically more than an equal win “helps.” Thus, matching randomly, on the condition that only low-level players are selected to play together, can help alleviate this sting, especially if a tutorial mode is an instructional prerequisite to playing PVP (see Team Fortress 2).
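The constrained random matching described above can be sketched as follows, assuming each queued player has a level and a region (standing in for geographic server selection). The class `QueuedPlayer`, the `bracket` cutoffs, and `make_matches` are hypothetical names chosen for illustration.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class QueuedPlayer:
    name: str
    level: int
    region: str


def bracket(level: int) -> str:
    """Hypothetical experience brackets; the cutoffs are illustrative only."""
    if level <= 5:
        return "newcomer"
    if level <= 20:
        return "intermediate"
    return "veteran"


def make_matches(queue: list[QueuedPlayer], match_size: int,
                 rng: random.Random) -> list[list[QueuedPlayer]]:
    """Match players randomly, but only within the same region and bracket.

    Players left over in a pool stay in the queue for the next round.
    """
    pools: dict[tuple[str, str], list[QueuedPlayer]] = {}
    for player in queue:
        key = (player.region, bracket(player.level))
        pools.setdefault(key, []).append(player)
    matches = []
    for pool in pools.values():
        rng.shuffle(pool)  # random matching inside each pool
        for start in range(0, len(pool) - match_size + 1, match_size):
            matches.append(pool[start:start + match_size])
    return matches


queue = [QueuedPlayer(f"p{i}", level, "NA")
         for i, level in enumerate([1, 2, 3, 4, 30, 35, 40, 45, 12])]
matches = make_matches(queue, match_size=2, rng=random.Random(3))
for match in matches:
    print([(p.name, p.level) for p in match])
```

Newcomers only ever meet other newcomers, so the matching is still random but no longer throws a level-1 player in with level-40 veterans; the lone level-12 player simply waits for the next round.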

Using concave return functions: lessons from economic growth theory

This last point may be the most technical, but it is generally the most important to understand. It builds on important foundations in the economic literature on production and growth, and although the idea can be applied in many dimensions of game design, we focus here on attribute-mechanism design.

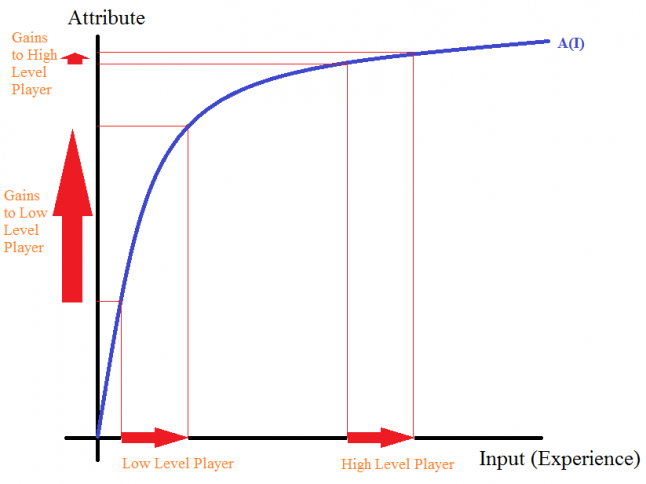

A basic attribute mechanism defines how a character’s attributes scale as a player progresses. That is, a player’s attributes (e.g., strength, speed, magic ability) are some mathematical function of the experience and investment that player has accumulated with respect to a particular attribute. I will argue that using an attribute function that is concave in its inputs (e.g., experience) provides a general rule of thumb for keeping the barrier to entry low and giving new players a high rate of growth relative to experienced players, which fosters engagement and retention early on. The figure above shows a basic input-output relationship, formalized as the blue function, in which the dependent variable (the attribute) is a function of the input (experience; we consider the simple case of a single input). The attribute function has a particular concave (down) shape, and this shape has two important implications for balancing.

First, for an equal gain in experience, a low-level player’s attribute increases more than a high-level player’s does. That is, the less experienced player’s attribute grows faster and the more experienced player’s attribute grows slower. As all players grow and invest, they all face a decline in their attribute’s rate of growth (known as diminishing marginal returns).

Second, a concave attribute structure exhibits a “catching-up effect”: players who have accumulated less experience grow at a faster rate than those who have accumulated more and, on average, catch up to the more experienced players, whose attribute sets grow more slowly.
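Both implications can be seen numerically. This minimal sketch assumes a square-root attribute function, `attribute = scale * sqrt(experience)`; the post doesn’t name a specific function, and any increasing, concave function (a logarithm, for instance) behaves qualitatively the same way. The XP values are hypothetical.

```python
import math


def attribute(experience: float, scale: float = 10.0) -> float:
    """Illustrative concave attribute function: increasing, diminishing returns."""
    return scale * math.sqrt(experience)


newbie_xp, veteran_xp = 100.0, 2_500.0
gain = 400.0  # both players earn the same amount of experience

newbie_growth = attribute(newbie_xp + gain) - attribute(newbie_xp)
veteran_growth = attribute(veteran_xp + gain) - attribute(veteran_xp)
print(f"newbie +{newbie_growth:.1f}, veteran +{veteran_growth:.1f}")
# newbie +123.6, veteran +38.5

# Catch-up: the attribute gap shrinks as both keep earning equal experience.
gaps = []
for extra in (0, 1_000, 10_000, 100_000):
    gap = attribute(veteran_xp + extra) - attribute(newbie_xp + extra)
    gaps.append(gap)
    print(f"after +{extra:,} XP each, gap = {gap:.1f}")
```

Because the slope of a concave function keeps falling, the same XP gain is worth more to the player lower on the curve, and the gap between the two players narrows with every equal increment.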

If attributes take the form of a discrete leveling system, then increasing the amount of experience required for each successive level-up works analogously (assuming an equal boost in potential attributes per level-up).
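For the discrete version, here is a minimal sketch assuming, hypothetically, that the cumulative experience needed to reach level L is `BASE_XP * (L - 1) ** 2`, so each level costs more than the last. Level as a function of experience is then a concave, square-root-like step function. `BASE_XP`, `total_xp_for_level`, and `level_for_xp` are illustrative names.

```python
import math

BASE_XP = 100  # hypothetical tuning constant


def total_xp_for_level(level: int) -> int:
    """Cumulative experience needed to reach `level` (level 1 needs 0 XP)."""
    return BASE_XP * (level - 1) ** 2


def level_for_xp(xp: int) -> int:
    """Highest level whose cumulative requirement has been met."""
    return 1 + math.isqrt(xp // BASE_XP)


# The same 900 XP gain is worth fewer levels the higher you already are.
gain = 900
for xp in (0, 1_600, 8_100):
    before, after = level_for_xp(xp), level_for_xp(xp + gain)
    print(f"{xp:>5} XP: level {before} -> {after} (+{after - before})")
```

Here the step from level L to L + 1 costs `BASE_XP * (2L - 1)` experience, which grows linearly with L; that rising cost is what produces the same catch-up effect as the continuous concave function.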

We have applied this idea to designing an attribute structure that engenders equality in the long term by allowing asymmetric growth in the attribute set in the short term, but the idea goes much further: structuring mechanisms that provide equity at the outset and growth opportunities for new players makes for a balanced game environment, in which less experienced players grow in ability toward more experienced players (holding all other factors equal).

Back to old school: the blue turtle shell phenomenon

Perhaps the most noteworthy early implementation of this idea outside of attribute-system design is the blue turtle shell in the Mario Kart racing series, where it debuted in Mario Kart 64. The blue turtle shell often appeared from a “question mark box” for players in disadvantaged positions (usually last place). The shell would seek out and destroy the current race leader, along with anyone who got in the way, so its end result was to give a relative advantage to the player in last place (analogous to having the least experience in my attributes example) and a relative disadvantage to the player in the lead. I’m not advocating for creating such inequities in the rules structure in general, but the point of the blue shell phenomenon is that it resolved the problem of having no room to grow for a less skilled or less experienced player by giving them an advantage, somewhat at random. If all players face the same likelihood of winding up in last place, then they all face the same likelihood of finding the blue turtle shell ex ante (just as the coin flip was fair in expectation). Structuring a mechanism that allows for the catch-up effect, whether through some form of constrained systematic randomization or an explicit attribute design system, helps reduce the entry barrier for newer players and gives them higher-than-average growth prospects that foster engagement and retention.
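A position-dependent item table in the spirit of the blue shell can be sketched as follows. The item names and weights are entirely hypothetical and are not Nintendo’s actual odds; the sketch only shows a catch-up item that becomes more likely the further behind a racer is.

```python
import random

ITEMS = ["banana", "green_shell", "catch_up_shell"]


def item_weights(position: int, num_racers: int) -> list[float]:
    """Hypothetical item odds that shift toward a catch-up item near last place.

    `trailing` runs from 0.0 for the leader to 1.0 for last place.
    """
    trailing = (position - 1) / (num_racers - 1)
    return [
        1.0 - 0.5 * trailing,   # banana: more common up front
        1.0,                    # green shell: same weight for everyone
        0.05 + 1.0 * trailing,  # catch-up item: rare for the leader
    ]


def roll_item(position: int, num_racers: int, rng: random.Random) -> str:
    """Draw an item from a box using the position-dependent weights."""
    return rng.choices(ITEMS, weights=item_weights(position, num_racers), k=1)[0]


def catch_up_probability(position: int, num_racers: int) -> float:
    """Probability that an item box yields the catch-up item."""
    weights = item_weights(position, num_racers)
    return weights[2] / sum(weights)


for pos in (1, 4, 8):
    print(f"position {pos}: P(catch-up item) = {catch_up_probability(pos, 8):.2f}")
print(roll_item(8, 8, random.Random(5)))
```

The table itself treats every racer identically; only position changes the odds. So if every racer is equally likely to end up in any position, every racer faces the same ex ante chance of drawing the catch-up item, which mirrors the coin-flip argument.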

In conclusion

Collecting and applying analytics to user data is the first prerequisite for adequately monitoring how much inequity or imbalance your design creates. At what level are players churning out at the highest rate? Why? Measuring choices of attribute investments, wins/losses, kills/deaths, etc. can also provide very useful criteria for constrained matching of players beforehand. Once you’ve designed your game, iterating on and refining your attribute system to weed out mechanics that generate player frustration, inequity, or other imbalances is a crucial next step, and will be the topic of a future post.
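A minimal sketch of the first question, “At what level are players churning out at the highest rate?”, follows. It assumes each player record is `(highest_level_reached, has_churned)`, where “churned” would in practice mean something like no session within some cutoff window; the record format, the function `churn_by_level`, and the sample data are all hypothetical.

```python
from collections import Counter


def churn_by_level(players: list[tuple[int, bool]]) -> dict[int, float]:
    """Per-level churn (hazard) rate: churned at level L / players who reached L.

    Each record is (highest_level_reached, has_churned). How "churned" is
    defined (e.g., no session in 30 days) is an assumption left to the team.
    """
    churned_at = Counter(level for level, churned in players if churned)
    max_level = max(level for level, _ in players)
    rates = {}
    for level in range(1, max_level + 1):
        reached = sum(1 for top, _ in players if top >= level)
        if reached:
            rates[level] = churned_at[level] / reached
    return rates


# Hypothetical sample of nine players.
players = [(1, True), (1, True), (1, True), (2, False), (2, True),
           (3, False), (3, True), (4, False), (5, False)]
rates = churn_by_level(players)
worst = max(rates, key=rates.get)
print(f"highest churn at level {worst}: {rates[worst]:.0%}")
```

Dividing by the number of players who reached each level, rather than by the whole population, keeps later levels from looking artificially safe just because fewer players ever get there.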

Balancing gameplay for PVP has been an issue for as long as people have been playing games with (and against) one another (even board games suffer from this dilemma). While there is no exact formula for balance-optimizing your PVP game, I hope this post has made you think more about the important trade-offs faced in development, as well as the importance of employing randomization, designing attribute systems that are concave in their inputs, and preserving equity in the choice structure at the outset. All of these design elements imply long-run catch-up, minimizing the barrier to entry for new users and creating a high-growth, stimulating experience early on.

Dr. Levkoff is a behavioral scientist and the chief economist at Nerd Kingdom. He also holds joint appointments as a lecturer in both the Department of Economics and the Graduate School of International Relations & Pacific Studies at UC San Diego.
