
Epic acquires photogrammetry tool, Microsoft's HoloLens Army deal, and a brief overview of "assisted creation" techniques and tools

The latest issue of the Rendered newsletter covers Epic's acquisition of a major photogrammetry tool and Microsoft's HoloLens Army deal, and gives a brief overview of "assisted creation" techniques and tools.

Epic Games has acquired Capturing Reality, makers of the (best-in-class) photogrammetry software RealityCapture. This move is… interesting, maybe even more so than the fact that they just raised a billion dollars.

Many of Epic’s acquisitions have focused on horizontal integration, buying up middleware companies like RAD Game Tools, Kamu, and Quixel (amongst others) to bolster Unreal’s feature set. As far as I know, this is the first time they’ve bought a company that makes standalone software aimed more at vertical integration: an attempt to own the whole photoreal capture stack.

Unity made a similar acquisition recently with their purchase of PlasticSCM, a source control tool used by many major game studios.

One way to read this is that both companies are starting to peek outside their usual wheelhouse, snatching up companies that sit below and above their engines in a project's lifecycle (Epic's own move to build out the Epic Games Store sits at the top of that stack).

Epic maintains that RealityCapture will still be available as a standalone product:

Epic plans to integrate Capturing Reality’s powerful photogrammetry software into the Unreal Engine ecosystem, making it even easier for developers to upload images and create photorealistic 3D models in instants. Capturing Reality will continue support and development for partners across industries like gaming, visual effects, film, surveying, architecture, engineering, construction and cultural heritage. This also includes companies that do not use Unreal Engine.

Again, this is similar to PlasticSCM: Epic can build platform affinity over time, providing a route from RealityCapture to Unreal even for people who used RealityCapture and weren’t Unreal users in the first place.

This also touches on a bit of photogrammetry's dirty laundry: generating geometry is [relatively] easy, but finding a distribution platform to share it with others is nigh impossible without game engine knowledge. Unreal 5 + Nanite, which is built to render very high-polygon meshes like photogrammetry scans, is a perfect match.

For more thoughts on this, I recommend checking out this article by Mosaic51, which gives a good overview of the wider photogrammetry ecosystem, or this video from GameFromScratch, which is a great intro to the most common photogrammetry tools.

Microsoft will be fulfilling an order for 120,000 AR headsets for the U.S. Army based on its Integrated Visual Augmentation System (IVAS) design. The modified design upgrades the capabilities of the HoloLens 2 for the needs of soldiers in the field.

File this a bit under “Rendered #2 Follow Up” (along with the NYPD's robot police dog with a LiDAR sensor attached to its head).

The thing that bums me out most about this news is that it will likely never be brought up again. It won’t make press conferences, it won’t be in the video montages where Microsoft sells its vision of a better future, it won’t be listed as a use case on the HoloLens website, etc. The official Microsoft blog post even glosses over the sheer size of the deal, making it sound like little more than a few emails going back and forth.

The size of the deal is notable: the stated 120,000 is more than double the total number of HoloLens headsets sold. Since HoloLens is the only real MR headset in circulation (sorry, Magic Leap), this effectively means that the market for these headsets is now warfare. Not medical applications, not factory work, but bald-faced, violent warfare.

How Quaint

There’s no lead article for this, but it’s a trend I’ve been tracking for a bit and thought was worth calling out. People are starting to feel out the edges of what easy-access ML (Machine Learning) can mean for creativity, and are starting to sketch out a few options.

The first “generation” of creations, like the image above, relied largely on applying some technique directly to another piece of art (or a picture, or whatever) and seeing what came out. The above is an example of “style transfer”, and many similar ideas appeared, all basically treating the direct output of the process as the end goal.

What’s been emerging recently, however, is what I think of as the second generation of this sort of tech, defined mostly by how it sits alongside other tools. In other words, the technique is no longer an end in and of itself, but a means to another end.

This manifests in a few ways. One is that major tools are simply getting ML functionality baked into them. Unity has a new tool called “ArtEngine” that promises “AI Assisted Artistry” through a suite of tools that help you develop game art assets (though it’s worth pointing out that AI is not necessarily ML!). Nuke recently announced that its latest version would also have ML-powered tools.

The Nuke example is close to what the next video shows: ML-based tools (like EbSynth) being sort of “hacked” to assist traditional video workflow tasks like removal and rotoscoping.

And lastly, this great thread on Twitter by James Shedden goes through using EbSynth as part of a workflow in a way that makes it almost invisible (but also crucial!). He does an initial “style sketch” for the piece, and then creates a 3D model in Blender that acts as the target for a style transfer frame. Those are all then comped together for the final output!

Beautiful! I think tools like this will slowly worm their way into most (if not all) visual media creation tools, as the upsides are immense. They are definitely not without downsides (many introduce unwanted artifacts that need to be cleaned up), but what’s cool is that they’re adding a new tool to creators’ toolboxes. Not a tool whose output is the end product, as in the “first generation”, but one that helps people find new modes of artistic expression.

Or’s back! Read on for the latest!

In every issue, Or Fleisher digs through arXiv and random GitHub Pages sites to surface state-of-the-art research likely to affect the future of volumetric capture, rendering, and 3D storytelling.

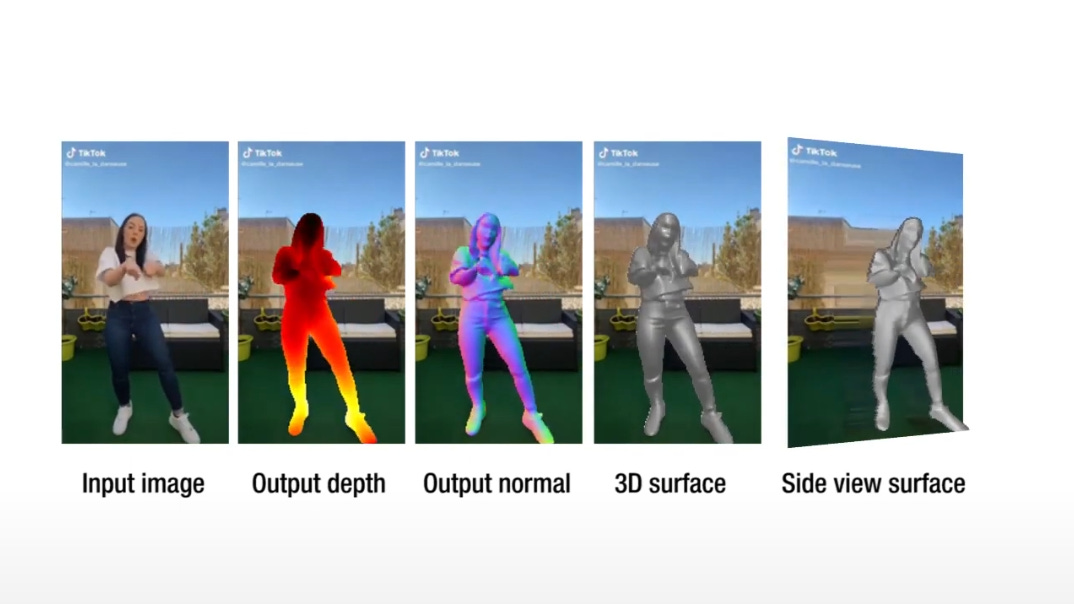

3D reconstruction of humans from monocular video has always been challenging. This paper presents a model architecture for predicting the depth and surface normals of humans from RGB video input. The results are quite impressive, and alongside the code the authors also released the TikTok-based dataset of humans used to train the model.

Why it matters

We have seen 3D human reconstruction from a single view improve over the past couple of years, and this is another step toward better fine shape detail (and the ability to reconstruct clothing).
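Depth and surface normals are closely linked: if you treat a depth map as a height field, the normal at each pixel falls out of the local depth gradient. The sketch below is not the paper's method (the paper predicts normals with a network); it is a minimal illustration that assumes an orthographic view and unit pixel spacing, with a hypothetical `normals_from_depth` helper working on a plain list-of-rows depth map.

```python
import math


def normals_from_depth(depth):
    """Estimate per-pixel unit normals from a depth map (list of rows).

    Assumes an orthographic view with unit pixel spacing, so the surface is the
    height field z = depth[y][x] and its normal is (-dz/dx, -dz/dy, 1), normalized.
    Border pixels, where central differences are undefined, default to (0, 0, 1).
    """
    height, width = len(depth), len(depth[0])
    normals = [[(0.0, 0.0, 1.0)] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            # Central differences approximate the depth gradient.
            dzdx = (depth[y][x + 1] - depth[y][x - 1]) / 2.0
            dzdy = (depth[y + 1][x] - depth[y - 1][x]) / 2.0
            nx, ny, nz = -dzdx, -dzdy, 1.0
            length = math.sqrt(nx * nx + ny * ny + nz * nz)
            normals[y][x] = (nx / length, ny / length, nz / length)
    return normals


ramp = [[float(x) for x in range(3)] for _ in range(3)]  # depth rises along x
print(normals_from_depth(ramp)[1][1])  # roughly (-0.707, 0, 0.707): tilted away from +x
```

Real cameras are perspective, so a fuller version would back-project pixels to 3D first and take cross products of neighboring points; the orthographic shortcut just keeps the relationship visible. It also hints at why predicting normals alongside depth helps: small depth errors turn into large normal errors, which is exactly where fine detail like clothing wrinkles lives.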

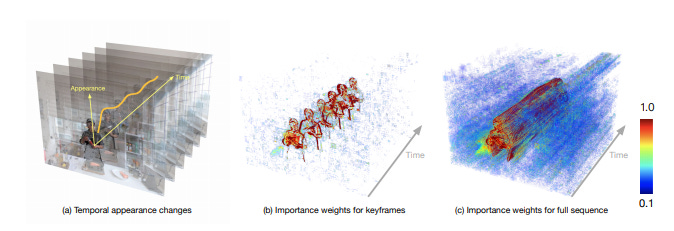

Neural radiance fields are the hot new research topic on the block. Simply put, NeRFs are an implicit way to represent 3D information inside a neural network, which can then be used to synthesize novel views of the scene. This paper takes that approach to the next level by allowing the model to learn temporal data and generate new views of the scene over time.

Why this matters

Neural radiance fields have proven able to cope with changing lighting, transparency, and fine detail. This paper, however, is the first demonstration of using that idea to encode multiview data over time.
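To make "synthesize novel views" a bit more concrete: a trained NeRF is queried for a density σ and a color c at points sampled along each camera ray, and those samples are alpha-composited into a pixel using C = Σ T_i (1 − exp(−σ_i δ_i)) c_i, where T_i = exp(−Σ_{j<i} σ_j δ_j) and δ_i is the spacing between samples. The minimal sketch below shows only that compositing step; the network query is replaced by plain lists of densities and colors (an assumption for illustration), and `composite_ray` is a hypothetical name, not code from the paper.

```python
import math


def composite_ray(sigmas, colors, deltas):
    """Volume-render one ray from samples ordered near to far.

    sigmas: density at each sample; colors: (r, g, b) at each sample;
    deltas: distance to the next sample. Returns (rgb, leftover transmittance).
    """
    rgb = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light reaching this sample unoccluded
    for sigma, color, delta in zip(sigmas, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this ray segment
        weight = transmittance * alpha
        for k in range(3):
            rgb[k] += weight * color[k]
        transmittance *= 1.0 - alpha  # equivalent to exp(-sum of sigma*delta so far)
    return rgb, transmittance


# A half-transparent red sample in front of an effectively opaque green one.
rgb, leftover = composite_ray(
    sigmas=[math.log(2.0), 1e9],
    colors=[(1.0, 0.0, 0.0), (0.0, 1.0, 0.0)],
    deltas=[1.0, 1.0],
)
# rgb is approximately [0.5, 0.5, 0.0]; leftover is approximately 0.0
```

For a time-varying scene, the network would typically also take time as an input when producing σ and c, but the compositing step itself stays the same, which is part of why extending NeRFs over time is such a natural next step.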

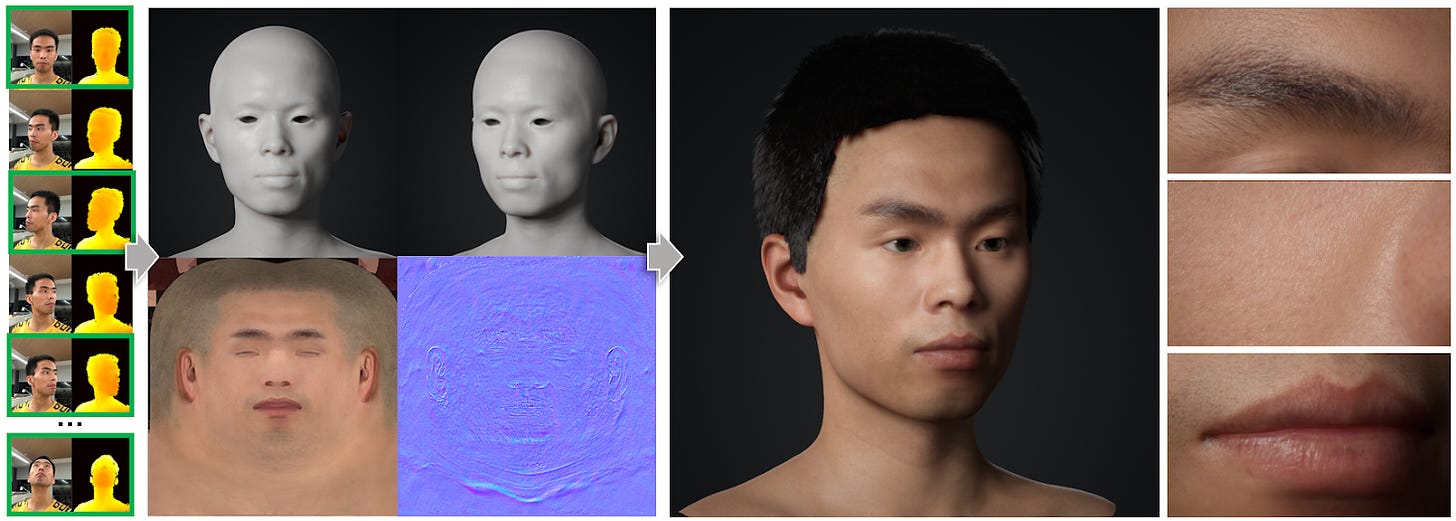

This paper proposes a network architecture that takes in a couple of RGB and depth inputs and produces a detailed, textured face reconstruction. The results border on the uncanny, but the method proves quite capable at tasks such as synthesizing and fusing textures, normal maps, and fine-detail representations.

Why this matters

Front-facing RGB-D cameras are becoming increasingly common in mobile devices, and this paper shows stunning results using only a few selfies from such devices. The ability to reconstruct high-quality 3D facial assets is promising.
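For a sense of what the "D" in an RGB-D selfie gives you: with a pinhole camera model, every pixel with a valid depth reading can be lifted straight to a 3D point, which is the kind of raw geometry a reconstruction network then has to clean up and fuse. The sketch below is generic and not the paper's pipeline; the `backproject` helper and the toy intrinsics (`fx`, `fy`, `cx`, `cy`) are hypothetical, and real values would come from the device's camera calibration.

```python
def backproject(depth, fx, fy, cx, cy):
    """Lift a depth map (list of rows) to camera-space 3D points.

    Standard pinhole model: x = (u - cx) * z / fx, y = (v - cy) * z / fy.
    fx, fy are focal lengths in pixels; (cx, cy) is the principal point.
    Depth is in whatever unit the sensor reports; values <= 0 are treated
    as missing and skipped.
    """
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue
            points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points


# Tiny 2x2 depth map with two valid pixels and toy intrinsics.
print(backproject([[0.0, 2.0], [1.0, 0.0]], fx=1.0, fy=1.0, cx=0.0, cy=0.0))
# [(2.0, 0.0, 2.0), (0.0, 1.0, 1.0)]
```

Points like these are sparse and noisy on phone sensors, which is why results built from "only a few selfies" are impressive.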

Everest Pipkin made an appearance in Rendered #2 with their Anti-Capitalist software license, and is back again with a gold mine of tools for game development and software creation worth checking out.

Meshlete is an open-source library for generating meshlets

“Meshlets are small chunks of 3D geometry consisting some small number of vertices and triangles…Splitting the geometry processing to meshlets instead of processing the mesh as a whole has various benefits and has better fit with batch-based geometry processing (vs post-transform cache model) in modern GPU architectures as well.”

Meshlets are a hot topic right now in 3D graphics and rendering, and having an open-source library to generate and play with them is great!
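To show what "splitting the geometry into meshlets" means in practice, here is a deliberately naive sketch: walk the triangle list in order and keep adding triangles to the current meshlet until it would exceed a vertex or triangle budget, then start a new one. This is not Meshlete's algorithm or API (real libraries cluster triangles for spatial locality and culling); `build_meshlets` is a hypothetical helper, and the 64-vertex / 124-triangle defaults are assumptions in the range commonly suggested for mesh-shader pipelines.

```python
def build_meshlets(triangles, max_vertices=64, max_triangles=124):
    """Greedily split an indexed triangle list into meshlets.

    triangles: list of (a, b, c) global vertex indices, consumed in order.
    Each meshlet is (vertex_list, local_triangles), where local_triangles
    index into that meshlet's own vertex_list.
    Assumes max_vertices >= 3 so any single triangle fits.
    """
    meshlets = []
    local_index = {}  # global vertex index -> index within the current meshlet
    local_triangles = []
    for tri in triangles:
        unique = list(dict.fromkeys(tri))  # dedupe while keeping order
        new_vertices = [v for v in unique if v not in local_index]
        full = (len(local_index) + len(new_vertices) > max_vertices
                or len(local_triangles) + 1 > max_triangles)
        if local_triangles and full:
            # Close the current meshlet and start a fresh one for this triangle.
            meshlets.append((list(local_index), local_triangles))
            local_index, local_triangles = {}, []
            new_vertices = unique
        for v in new_vertices:
            local_index[v] = len(local_index)
        local_triangles.append(tuple(local_index[v] for v in tri))
    if local_triangles:
        meshlets.append((list(local_index), local_triangles))
    return meshlets


# A strip of four triangles, with a tiny vertex budget to force a split.
strip = [(0, 1, 2), (2, 1, 3), (2, 3, 4), (4, 3, 5)]
print(build_meshlets(strip, max_vertices=4))
# [([0, 1, 2, 3], [(0, 1, 2), (2, 1, 3)]), ([2, 3, 4, 5], [(0, 1, 2), (2, 1, 3)])]
```

Note that vertices 2 and 3 appear in both meshlets: shared vertices get duplicated across meshlet boundaries, which is one reason smarter clustering (keeping meshlets compact so fewer vertices straddle boundaries) matters.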

This is a SUPER interesting podcast that goes in depth on the creation of the latest Microsoft Flight Simulator game. Jörg talks at length about their tech stack, and how MFS is essentially doing “sensor fusion” across the entire planet, combining photogrammetry, satellite imagery, predictive modeling, tidal patterns, etc. This game flies (hah) under the radar due to its niche market, but the work being done here is truly groundbreaking and widely applicable to anyone building 3D worlds (or the world, in MFS’s case) at scale.

PlayStation VR getting revamped for PS5

Balenciaga “Afterworld” Unreal Featurette

HTC Vive Pro Lip and Body Trackers

“Hex” is SideFX’s YouTube show highlighting Houdini creators

Inside Facebook Reality Labs: The Next Era of Human-Computer Interaction (also, one-fifth of Facebook is working on AR/VR)

Waymo offers license for its LiDAR Sensors

Thanks so much for reading the newsletter! If you enjoy reading Rendered, please share it around! We thrive off of our community of readers, so the larger we can grow that pool of people, the better.
