My article with a big general guideline about game controls design.

This is a repost from my blog.

For the last several years, my job as a designer has been closely tied to designing complex game controls. Surprisingly, it was quite hard to find good general guidelines. I had to solve some pretty complicated design challenges and study a lot of different sources before I was able to develop the principles that I currently use in my job. I think they’re worth sharing and might be useful for anyone dealing with controls design tasks.

The topic overall is, perhaps, too big for just one article, so I’ll also point to other resources with more details where possible.

So, I would define the three main principles:

Accessibility – the game controls should be easy to learn and use, and take into account human physical and cognitive limitations.

Intent Communication – the game controls should communicate the player’s intent in a way the player expects and create a feeling of full control.

Expression Space – the game controls should give the player enough expression space for mastery, and keep a sufficient level of variety.

Let’s look at these principles in more detail.

If we want our controls to be easy to use, the first thing we need to take into account is the limitations of our hands.

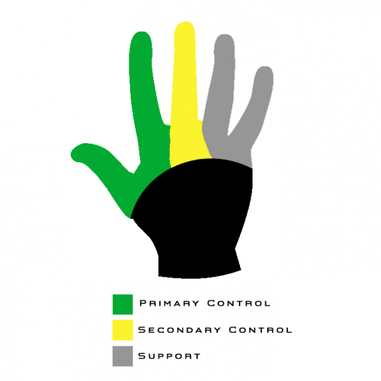

There are three main “finger groups” that we need to keep in mind during controls design:

Primary control – thumb & index fingers. Flexible and precise, usable for primary actions (shoot, jump, etc.).

Secondary control – middle finger. Flexible but not so precise, usable for primary hold actions (aiming mode, [w] for walking, etc.).

Support – ring & pinkie fingers. Weak and not very flexible, can be used for secondary actions.

Let’s apply our knowledge of hand limitations to a more practical task: designing the game controls layout. To do that, we can use Fitts’s Law, which for our case can be formulated this way: “the shorter the distance to the button and the bigger the button, the more accessible the button is.”

Combining Fitts’s Law with our knowledge of hand limitations, we can formulate the basic principle for controls layout design:

The most frequent actions should be in the most accessible places and match the primary control group of the player’s hand.
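
To put rough numbers on “closer and bigger is more accessible,” here is a minimal sketch using the Shannon formulation of Fitts’s Law, where the index of difficulty is log2(distance / width + 1). The function name and the millimetre values are illustrative assumptions, not measurements from any real controller.

```python
import math


def index_of_difficulty(distance: float, width: float) -> float:
    """Fitts's law index of difficulty in bits (Shannon formulation).

    distance: how far the finger travels to reach the button.
    width: size of the button along the direction of travel.
    Lower values mean the button is easier (faster) to hit.
    """
    if distance < 0 or width <= 0:
        raise ValueError("distance must be >= 0 and width must be > 0")
    return math.log2(distance / width + 1)


# Hypothetical values in millimetres from the finger's resting position.
near_big = index_of_difficulty(distance=10, width=10)
far_small = index_of_difficulty(distance=70, width=10)
print(near_big, far_small)  # 1.0 3.0
```

In this toy comparison the far button costs three times as many bits as the near one, which is the intuition behind putting frequent actions under the resting fingers.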

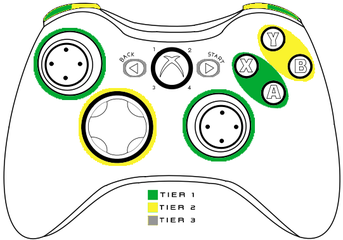

For the gamepad, it might look like this (as an example, we can use the very common Xbox 360 Controller):

| Tier 1 | Tier 2 | Tier 3 |
|---|---|---|
| A/X buttons | B/Y | Start/Back |
| Sticks | DPAD | |

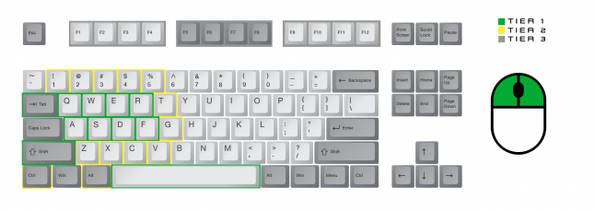

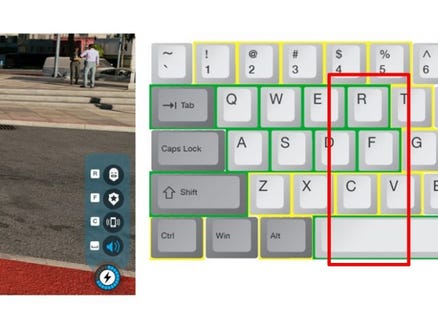

For the keyboard, I would divide them this way (it was also my internal guideline for the Watch Dogs 2 PC controls layout):

| Tier 1 | Tier 2 | Tier 3 |
|---|---|---|
| WASD + Q/E/R/F | Numerical keys 1-5 | F1-F12 |
| Space/Shift/Tab | Z/X/C/V/T/G | Right side of the keyboard according to Fitts’s Law (the shortest finger trajectories) |
| LMB/RMB/MMB | Ctrl/Alt | Any action that requires moving the hand |
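
One way to turn the tiers into a first-draft layout is to sort actions by how often they are used and hand out inputs from the most accessible tier down. This is only a sketch: the function name, the usage counts, and the shortened tier lists are hypothetical, and a real layout would still be adjusted by hand.

```python
def assign_by_frequency(action_frequency, tiers):
    """Give the most frequent actions the most accessible free inputs.

    action_frequency: dict of action name -> how often it is used (any unit).
    tiers: list of lists of input names, most accessible tier first.
    Returns a dict of action name -> input name.
    """
    slots = [key for tier in tiers for key in tier]
    ranked = sorted(action_frequency, key=action_frequency.get, reverse=True)
    if len(ranked) > len(slots):
        raise ValueError("more actions than available inputs")
    return dict(zip(ranked, slots))


# Shortened, hypothetical tiers picked from the keyboard table above.
keyboard_tiers = [
    ["LMB", "Space", "E"],  # Tier 1
    ["C", "T"],             # Tier 2
    ["F1"],                 # Tier 3
]
# Made-up usage counts per play session.
usage = {"shoot": 120, "jump": 45, "interact": 30, "crouch": 12, "open_map": 2}

layout = assign_by_frequency(usage, keyboard_tiers)
print(layout)
```

With these numbers, “shoot” lands on LMB and “open_map” ends up on a Tier 2 key. Genre conventions can still override such a purely frequency-based draft.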

As a small practical example, we can look at the keyboard hacking interface for Watch Dogs 2 PC.

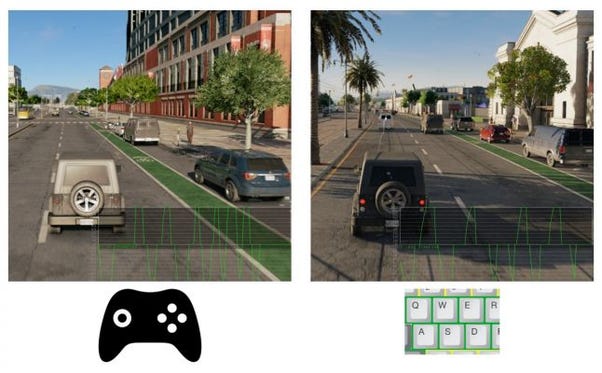

Here’s how it looks with the gamepad: all the buttons can be accessed with the right-hand thumb, and to activate the “hacking mode” the player holds the LB button with the left-hand index finger. This layout allows the player to hack while moving the character, as fast contextual actions.

On the keyboard, hacking actions are in the places with the fastest access for the thumb and index fingers (R, F, C, Space), and “hacking mode” can be activated with the mouse (which makes hacking more precise than with the gamepad controls). The final playtest result for this control scheme was 4.7 out of 5.

There’s always a human attention limit on the number of simultaneous actions, even if they’re physically possible (for example, it’s extremely hard to simultaneously drive the car, control the acceleration level, and also aim and shoot, even if your gamepad physically allows this).

Of course, there are different types of actions, with different attention demands. I would divide them this way:

Primary actions – require active decision-making; these are the main “Verbs”/basic mechanics that the player uses. Might include more than one basic input (aim & shoot, moving & jumping, etc.). Require constant attention from the player.

State change – actions that switch control modes (hold button to aim, hold the button to run, etc.). Slightly increase the overall level of required attention. Very often, “hold” actions.

Contextual actions – appear from time to time in the context of the primary “Verbs” (reload the weapon, interact, use a special ability, etc.). Require short-term periods of high attention from the player.

From practice and numerous observations, the maximum limit of simultaneous actions (for each hand) is:

One primary action

One state change

One contextual action

So, with both hands, the player can simultaneously control approximately two primary actions, two state changes, and two contextual actions. We should also keep in mind that one hand is always primary, so actions that require more precision should be assigned to the primary hand.

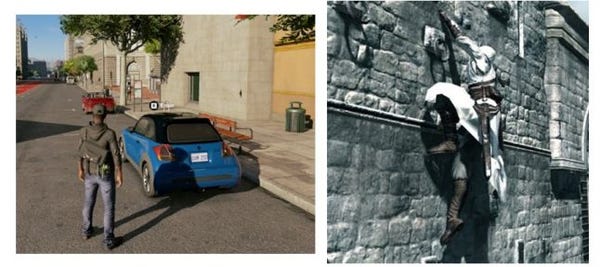

Let’s get back to our GTA 5 example.

Here, we have:

Car steering (primary action)

Accelerate/Brake (primary action)

Shooting mode (state change)

Aiming & Shooting (primary action)

As we can notice, in this episode, the player has to use three primary actions simultaneously: control the position of the car, its acceleration, and also shoot. Technically, it’s possible to do this with the gamepad, but the attention limit doesn’t allow the player to do it effectively, and time pressure and speed make it even worse. It’s possible that in this specific mission, such complicated controls were designed deliberately, but it’s also a good example of what can happen if your game controls require too much attention from the player.
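
The attention limits can be treated as a simple budget check. The sketch below assumes the per-hand limits listed earlier (one primary action, one state change, one contextual action) and flags any action type that goes over budget. The function name and data format are made up for illustration.

```python
from collections import Counter

# Per-hand limits from the list above; two hands double them.
PER_HAND_LIMIT = {"primary": 1, "state_change": 1, "contextual": 1}


def over_budget(simultaneous_actions, hands=2):
    """Return {action_type: excess} for every type that exceeds the limit.

    simultaneous_actions: iterable of (name, action_type) pairs that the
    player has to handle at the same time.
    """
    counts = Counter(kind for _, kind in simultaneous_actions)
    return {
        kind: counts[kind] - PER_HAND_LIMIT[kind] * hands
        for kind in counts
        if counts[kind] > PER_HAND_LIMIT[kind] * hands
    }


# The driving-and-shooting situation described above.
drive_by = [
    ("car steering", "primary"),
    ("accelerate/brake", "primary"),
    ("shooting mode", "state_change"),
    ("aiming & shooting", "primary"),
]
print(over_budget(drive_by))  # {'primary': 1}
```

The check reports one primary action too many, which matches the overload described in the example.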

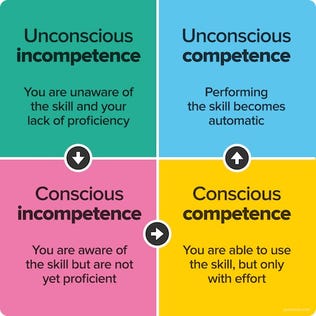

Is there a way to overcome these limitations? Actually, the answer is “yes.” We can move player actions to an automated state. If the player repeatedly performs a certain activity, it eventually turns into a habit and becomes automated. Automated activities require much less attention from the player to perform. Still, we should remember that automation can’t help us with the physical limitations of the hand.

A good example of automated activity can be classical WASD. Technically, it’s four actions that require three fingers to operate. But we perceive them as one control for moving, and have a common habit, learned in many games.

But how can we help players automate their actions? There are two ways: Grouping and Standard Conventions.

People learn and memorize by making patterns. To decrease memory load and improve learning, divide controls into logical groups:

Similar actions should be in one group – all move actions are in one group, all combat actions in another group, etc. Grouped actions that are related to one basic mechanic (combat, driving, navigation, etc.) are much easier to move to automated state (WASD).

Groups should take into account hand limitations – they should match accessibility tiers.

Groups should be consistent if you have more than one layout – similar actions in different layouts should be on the same button (e.g., “Sprint” on [Shift] in the On Foot layout and “Nitro” on [Shift] in the Driving layout).

The two biggest groups are the player’s two hands – if you have two important actions (or groups of actions) that the player should use simultaneously, divide them between the two hands; it will make memorization easier.
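
The consistency rule is easy to check automatically once bindings live in data. The following sketch assumes each layout is a plain dict and that the designer has tagged “similar” actions with a shared group name; all names and bindings here are hypothetical.

```python
def inconsistent_groups(layouts, action_group):
    """Find action groups that are bound to different buttons across layouts.

    layouts: dict of layout name -> dict of action -> button.
    action_group: dict of action -> group name, e.g. "Sprint" and "Nitro"
        both map to "boost". Untagged actions form their own group.
    Returns {group: set of buttons} for groups that use more than one button.
    """
    buttons_by_group = {}
    for bindings in layouts.values():
        for action, button in bindings.items():
            group = action_group.get(action, action)
            buttons_by_group.setdefault(group, set()).add(button)
    return {g: b for g, b in buttons_by_group.items() if len(b) > 1}


layouts = {
    "on_foot": {"Sprint": "Shift", "Interact": "E"},
    "driving": {"Nitro": "Shift", "Exit vehicle": "F"},
}
groups = {
    "Sprint": "boost", "Nitro": "boost",
    "Interact": "use", "Exit vehicle": "use",
}
conflicts = inconsistent_groups(layouts, groups)
print(conflicts)
```

Here the “boost” group passes because both layouts use [Shift], while the “use” group is flagged because it sits on E in one layout and on F in the other.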

Another way to improve learning is using standard conventions that are common to the genre. In many cases, players’ standard conventions have priority over the physical accessibility of the button.

If you design an innovative mechanic, you might not have a standard convention for it. In that case, use mental models from real life. These mental models should have spatial and physical similarity with the player’s actions on the input device (trigger to shoot, an upper button for [up], a lower button for [down], etc.).

One example of this approach is the hacking panel design for the mouse & keyboard controls in Watch Dogs 2 PC.

The hacking mechanic in Watch Dogs 2 was quite innovative, and there was no good standard convention for it, so we made the order of hacking actions in the panel spatially similar to the physical keyboard buttons. Playtests showed that even though this control scheme was non-standard at first, people could learn it pretty quickly and use it very effectively.

Also, it’s useful to remember that people are imperfect and might have very different ideas about how to interact with your game, so playtest, playtest, playtest! Look for player insights and find out what players really do.

Let’s move to probably the most important part of the game controls – communication of the player’s intent.

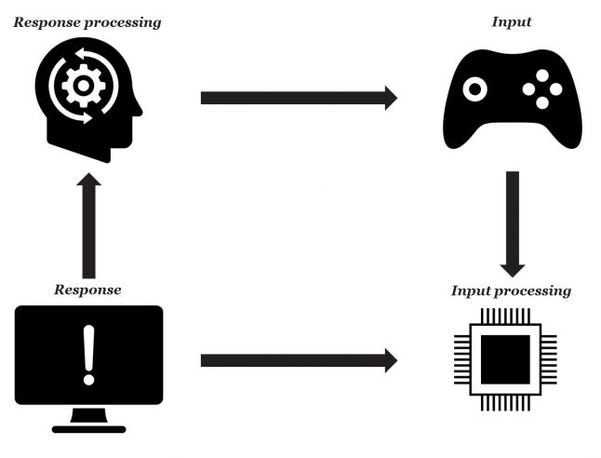

Here’s how the player’s action cycle looks in general:

The player sends the input signal, then this signal is processed by the system, the player sees the response on the screen, processes this response, and sends a new input signal. All this creates an ongoing correction cycle (you can find out more about the theory behind that in Donald Norman’s “Human action cycle”).

Good controls should not break the correction cycle and should not frustrate the player at any of the interaction stages.

On the input stage, the next thing after accessibility (which we already covered) is the concept of affordance (or affordances). Affordances display the state of the system and allow the player to learn what to do without a trial-and-error process.

There are two main ways to use affordances in games:

HUD – external UI elements that communicate what can be done with the object (control reminders, crosshair state, etc.). External HUD can even be a part of the game’s narrative (Animus in Assassin’s Creed, for example).

Game World – using the “Form Follows Function” principle, where the form of the game object communicates what can be done with or by this object. It can be level design (“climbing” points on the building, barrels with an “explosives” symbol, etc.), or the character animations/items (the smaller character is more agile, the bigger weapon is slower, etc.).

The main principle here: any interaction in the game should have an affordance.

If you want to know more details on this topic, I would highly recommend reading “Beyond the HUD,” a Master of Science thesis by Erik Fagerholt and Magnus Lorentzon.

After the player has sent the input signal, the system should process it. Here, we have a contradiction: players usually expect the system to do approximately what they want, but the controller sends precise input to the system.

The player’s hands are analog and not very precise, and sending the raw input value might lead to frustration. In other words, the player wants to communicate intention, not the raw signal. In most cases, to avoid frustrating the player and to communicate intent properly, we need to filter the raw input signal.

There are two main ways to do it:

Curves – the filtered signal strength depends on time/speed.

Control Assists – predict the player intention and help to perform desired actions (autoaim, climbing assists, etc.).

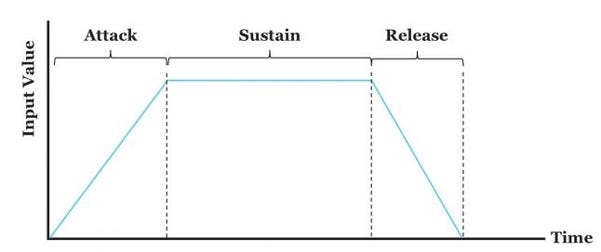

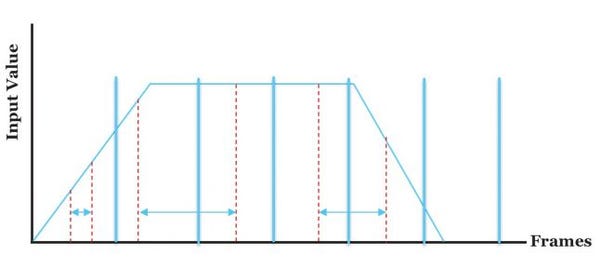

One of the most common curve examples is the ADSR curve.

It contains four stages:

Attack – time from the moment the button was pressed to the maximum input value.

Decay – the time during which the signal drops from a short-term peak at the end of the attack down to the stable sustain value. Usually, it’s not used in games.

Sustain – maximum input value while the button is pressed.

Release – time from the moment the button was released to the minimum input value.

Using such a curve makes character or car movement much more natural and fits very well with the classical animation principles.
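
As an illustration, here is a minimal attack/sustain/release envelope for a single button press, with decay left out since games rarely use it. The linear ramps and the default timings are assumptions picked for readability, not values from any shipped game.

```python
def envelope(t, press_time, release_time=None, attack=0.2, release=0.1):
    """Input strength in [0, 1] at time t (seconds) for one button press.

    Attack: linear ramp from 0 to 1 over `attack` seconds after the press.
    Sustain: stay at the maximum while the button is held.
    Release: linear ramp back to 0 over `release` seconds after letting go,
    starting from whatever level the press had reached.
    release_time=None means the button is still held at time t.
    """
    if t < press_time:
        return 0.0
    end_of_hold = t if release_time is None else min(t, release_time)
    held = end_of_hold - press_time
    level = min(held / attack, 1.0)  # attack, capped at the sustain level
    if release_time is None or t <= release_time:
        return level
    since_release = t - release_time
    return max(level * (1.0 - since_release / release), 0.0)


# A long hold reaches full strength; a short tap peaks at half strength.
print(envelope(0.5, press_time=0.0))                    # full strength
print(envelope(0.1, press_time=0.0, release_time=0.1))  # tap peak
```

Because a short tap never finishes the attack ramp, it produces a weaker signal than a long hold, which is how a time-based curve can read “intensity” from a digital button.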

Let’s look at the Watch Dogs 2 PC example.

On the left, you can see the gamepad input curve. To mimic this signal for the keyboard, we used an input curve that takes into account how long the steering button was pressed, which allowed us to turn the digital input from the keyboard into an analog signal. The curve is quite fast, with a very short delay time (the game has pretty arcade-style driving), but even such a subtle mechanism makes control of the car much more natural and smoother.

The keyboard buttons are not analog, but our hands and fingers are! The player can press the keyboard buttons with different force (and therefore, for a different time), so adding such a curve allows us to measure how intensely the player presses the steering buttons on the keyboard.

Different players have very different control skill levels, and in most cases, this skill is far from that of pro gamers. Nevertheless, people want to win and express their intent successfully, and that’s where control assists can help us.

There is another contradiction, though: regardless of skill level, players want to have a feeling of mastery and don’t want to feel like they’re cheating. To solve this contradiction, a control assist should work within the possible boundaries of the player’s mistake. A good assist still requires some skill from the player to perform an action but corrects mistakes related to the uncertain nature of the player’s hand and input device (people cannot press the button with perfect timing, cannot control the aiming perfectly, and so on). As long as the assist fits the player’s expectations of how the system should approximately react to the input, it will not feel like “cheating.”

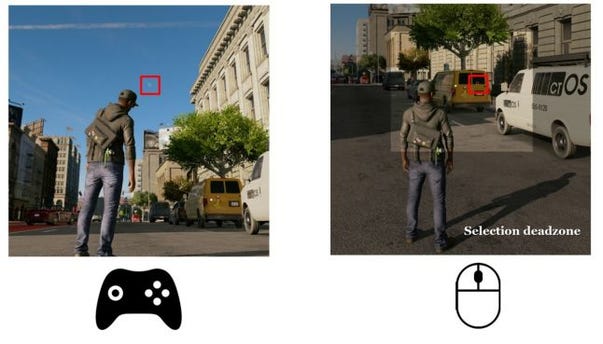

The starting point here is the input device: the more precise the input, the weaker the assist should be. Let’s look at a more specific example.

There’s an aim assist for hacking in Watch Dogs 2, which selects different objects for hacking using a quite complicated algorithm. With the gamepad, it works for the whole screen (you can automatically select an object nearby while looking in a completely different direction). It feels OK with the gamepad, as camera control with the stick is not very precise, and the player needs to select objects for hacking fast.

With the mouse, such automatic selection was quite annoying for the player, as it often selected different objects than the player expected (players expected better precision and less help). To solve this, we modified the boundaries of possible mistake and decreased the hacking assist selection zone for the mouse. Playtests showed that it was the right decision, and hacking became much more comfortable with the mouse & keyboard.
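
To make the idea of a device-dependent selection zone concrete, here is a deliberately simplified sketch: pick the target nearest to the crosshair, but only inside a radius that depends on the input device. This is not the actual Watch Dogs 2 algorithm (which is described above as much more complicated), and the radii are invented numbers.

```python
import math

# Hypothetical assist radii in normalised screen units; not values from the game.
ASSIST_RADIUS = {"gamepad": 1.0, "mouse": 0.15}


def pick_target(crosshair, targets, device):
    """Return the name of the target closest to the crosshair, or None.

    crosshair: (x, y) in normalised screen coordinates.
    targets: dict of target name -> (x, y).
    Only targets inside the device's assist radius are considered.
    """
    best_name, best_dist = None, ASSIST_RADIUS[device]
    for name, pos in targets.items():
        dist = math.dist(crosshair, pos)
        if dist <= best_dist:
            best_name, best_dist = name, dist
    return best_name


targets = {"camera": (0.52, 0.50), "car": (0.90, 0.70)}
print(pick_target((0.1, 0.1), targets, "gamepad"))  # camera
print(pick_target((0.1, 0.1), targets, "mouse"))    # None
```

With the wide gamepad radius, the assist grabs the nearest object even when the player is aiming far away from it. With the narrow mouse radius, nothing is selected until the cursor is actually close to a target.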

The main metric for any game controls that defines the player’s comfort is Responsiveness. It provides the key point for any good controls – predictability.

To achieve good responsiveness, we need to be aware of three main topics:

Perception window – human perception limitation.

Technical limitations – what increases response time.

Feedback – how the game indicates the consequences of the player’s actions and builds expectations from these actions.

The average time required for the player to perceive the state of the game world and react to it is around 240 ms.

It consists of three stages:

Perceptual Processor – 100 ms [50-200 ms]. At this stage, the player should recognize that something has changed.

Cognitive Processor – 70 ms [30-100 ms]. At this stage, the player should process the information from the previous stage and decide what to do next.

Motor Processor – 70 ms [25-170 ms]. At this stage, the player should send the input signal to do some action.

If you want to know more detail on this topic (and know more about other human limitations), look for “Human processor model.”

As we can see, for the game controls to be comfortable, the system’s response should fit within the perceptual stage, which means a response time of no more than 100 ms.

Achieving a response time under 100 ms might not be as easy as it seems. There are some technical setbacks on the way:

There’s always a 1-2 frame delay from the hardware.

Complex signal filtering adds delay.

Long animations and non-interruptible actions might not fit into the 100 ms perception window and create the feeling of “lag.”

V-Sync adds a 1-2 frame delay.

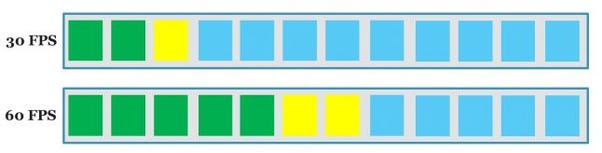

There’s also one very important point: for the player, it’s not milliseconds, it’s frames! For a human perception window of ~80-100 ms, the maximum comfortable lag on the screen is around:

2-3 frames max for 30 FPS.

5-7 frames max for 60 FPS.
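
The conversion from a perception window in milliseconds to a frame budget is simple division. The helper below is a hypothetical sketch of that arithmetic, using the 80-100 ms window mentioned above.

```python
def frames_in_window(window_ms, fps):
    """How many frames fit into a perception window of `window_ms` milliseconds."""
    frame_ms = 1000.0 / fps
    return window_ms / frame_ms


for fps in (30, 60):
    low = frames_in_window(80, fps)
    high = frames_in_window(100, fps)
    print(f"{fps} FPS: {low:.1f} to {high:.1f} frames")
# 30 FPS: 2.4 to 3.0 frames
# 60 FPS: 4.8 to 6.0 frames
```

Straight division lands roughly in line with the rounded figures above. The important point is that the same millisecond window buys about twice as many frames at 60 FPS as at 30 FPS.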

The actual biggest difference between 30 and 60 FPS is not visual; it’s the level of responsiveness of the controls. Of course, you will notice some visual differences in the 30-45-60 FPS range, but the higher the framerate, the more subtle the difference. The feel of the controls at higher FPS, though, might be so much better that some players might not want to go back to a lower framerate.

But even a higher framerate does not solve all the responsiveness issues: the player sees the system response on the screen every frame but can press the button for less than the duration of a frame.

That was our case for Watch Dogs 2 driving with the keyboard. Originally, driving input from the keyboard was updated every frame, but even with a carefully tuned curve, it still was not responsive enough. Players tried to use very short presses (shorter than one frame) to correct the car position. As a result, we lost part of the player’s input, which was especially uncomfortable at low FPS.

As a solution, we started to gather more detailed input data: even if the button was pressed for 30-50% of the frame time, the game counts it and transforms it into the final (weaker) signal during the screen update.
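
A rough sketch of the idea, assuming the platform delivers timestamped key events rather than a single key state per frame: accumulate how long the button was held inside the frame and use that fraction as the signal strength. The function and event format are hypothetical, not the game’s actual implementation.

```python
def held_fraction(events, frame_start, frame_end, down_at_start=False):
    """Fraction of the frame during which the button was held.

    events: list of (timestamp, is_down) pairs inside the frame, sorted by time.
    down_at_start: whether the button was already down when the frame began.
    Timestamps and frame bounds share one unit (milliseconds here).
    """
    held = 0.0
    is_down, since = down_at_start, frame_start
    for timestamp, now_down in events:
        if is_down and not now_down:
            held += timestamp - since  # close an interval of holding
        elif not is_down and now_down:
            since = timestamp          # open a new interval
        is_down = now_down
    if is_down:
        held += frame_end - since      # still held when the frame ends
    return held / (frame_end - frame_start)


# A 12 ms tap inside a 40 ms frame: the key is up at both frame boundaries,
# so sampling the key state once per frame would miss it entirely.
tap = [(10, True), (22, False)]
steer = held_fraction(tap, frame_start=0, frame_end=40)
print(steer)
```

In this example the tap covers 30% of the frame, so the steering signal for that frame is weaker than a full press instead of being dropped.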

We also started using this approach for other game controls, which allowed them to be responsive enough regardless of screen frame rate.

Feedback builds a mental model of any interactive object in the player’s head; it answers the “what is it?” question. Depending on the feedback, responsiveness expectations for different actions/objects may vary.

Usually, the higher the accuracy needed to complete the action, the faster the response the player expects. For actions that may require high precision, like shooting or jumping, the player will expect a faster response (as was the case with Watch Dogs 2 keyboard driving).

Visual feedback includes animation, visual effects, and HUD. Usually, it’s the main feedback channel.

Animation plays the main role in building the physical properties of the object, especially the sense of weight. Animation has a long and rich history, and there is a set of well-established animation principles (look for “12 basic principles of animation”) that can also be used for games. What’s interesting about classical animation is that the principles related to character movement fit really well with the principles of good controls design. For example, the A(D)SR curve is a clear implementation of the “slow in, slow out” principle; principles like “anticipation” and “exaggeration” might be very important in communicating the character’s actions and the results of these actions.

Also, when you’re tuning your controls, believable movement arcs are one of the key components in creating a great perception of the controls. Complex objects like a human body or a car do not move in a straight line, and the player has a preconception about it. In Watch Dogs 2 PC keyboard driving, the default steering sensitivity that felt right was the same sensitivity that produced the most believable motion curves.

Combined with animation, visual effects help to communicate interaction between objects. Games with rich visual effects are also often called “juicy,” when even small player actions generate a lot of response from the game, making it feel more alive. In many cases, visual effects are a clear extension of the “anticipation” and “exaggeration” principles of animation, and enhance such actions (like preparing for the attack or particle effects from the hit).

Players find actions more fun and believable when they are clearly communicated and shown, exaggerated, and enhanced by rich visual effects.

Speaking of HUD design, it’s a huge topic by itself, too big for one article, so I would highly recommend reading the “Beyond the HUD” thesis; it’s still relevant today and contains a lot of useful information. What I’d also like to add regarding the HUD is that it can be used to compensate for some features of human perception that cannot be translated into the game. It might be hard to navigate a 3D environment on a 2D screen, so we usually have a minimap; it’s hard to know where the sound came from if the enemy is shooting at you, so we have direction indicators, and so on.

Audio feedback usually serves as secondary and supportive feedback channel for visual feedback (especially, for the cases where the player may have doubts based only on the visual shape of the object).

Let’s look at one such case:

In Watch Dogs 2, there are two similar cars that actually belong to completely different classes. Both look like some small city cars, but when you try to drive the car on the left, you’ll hear the sound of the engine, you’ll feel different handling and acceleration – which will allow you to clearly define this car as a “sport” class.

Another important type of audio feedback is so-called “barks,” generic AI dialogue lines that indicate the status and actions of NPCs (“Taking cover!”, “Searching,” etc.). It can be a big topic by itself, so I recommend the GDC lecture about barks.

Some games go further and make audio feedback almost equal to the standard visual channel. One of the great examples is “Overwatch,” and I highly recommend looking at Blizzard’s GDC 2016 presentation about “Overwatch” sound design.

The main type of tactile feedback is usually gamepad rumble, but we should remember that the shape and type of the control device also matter. If you’re making a multi-platform (or multi-input) game, keep in mind that the responsiveness expectation for the same actions might be completely different for mouse & keyboard, touchscreen, and gamepad (and even for different types of gamepads; and there’s also the Steam Controller). It may lead to big changes in playstyle: for example, mouse & keyboard players might have a more aggressive playstyle in games with shooting mechanics. On the other hand, gamepad players might use more complex action combinations due to the better controls grouping on the device.

Lastly, I’d like to look a bit at feedback as an instrument of long-term player learning. There’s a great talk from the Game UX Summit 2016 by Anne McLaughlin about using signal detection theory in game feedback design, which I highly recommend.

Games communicate meaning through interaction. That’s the biggest difference between games and any other media or form of art. We’re not selling an experience directly, but tools that let players get this experience by expressing themselves. And game controls are the primary expression tool.

We’re slowly going towards gameplay design area and its relation to the game controls, and the first thing to remember here is that the player wants to communicate intent through controls, not to learn how to use controls.

We can imagine game controls as a language, where “move,” “jump,” “shoot,” etc. are verbs, and control combinations are sentences. To successfully communicate with game systems, the player should learn this language. If the language is unclear (verbs are not familiar to the player), has a lot of synonyms with very little difference in meaning (mechanic duplicates), and has hard spelling and sentence structure (verbs are hard to execute and cannot be combined into patterns), the player will have to spend a lot of effort learning the language instead of interacting with the game.

I would recommend Jonathan Morin’s lecture on this topic if you want to know more.

Mastery is one of the key psychological needs in the PENS framework, and the game controls contribute the most to the feeling of mastery. “Easy to learn, hard to master” – we have heard this phrase many times, and it usually means a game with controls that are simple and familiar enough to clearly communicate the player’s intent, but with a lot of room for mastery. From the Accessibility section, we know how to make game controls “easy to learn.” Now, we need to make them “hard to master.”

Let’s look at some examples of how we can do this:

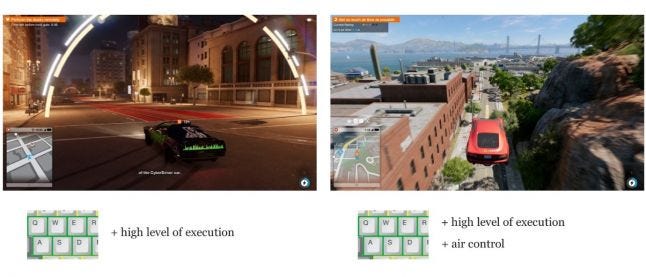

The most direct way (speed/reaction challenges, time pressure, etc. – everything that requires a higher level of execution of the primary actions).

On the left, it’s just usual free roam driving, without any significant demand on the player’s skill. On the right, the basic controls are the same, but the player is required to do complex stunts and evade the police.

The same input actions but in different control states ([WASD] for ground controls, the same [WASD] for air controls, etc.).

In addition to complex stunts (on the left), we can add air control to expand the space for player mastery (on the right).

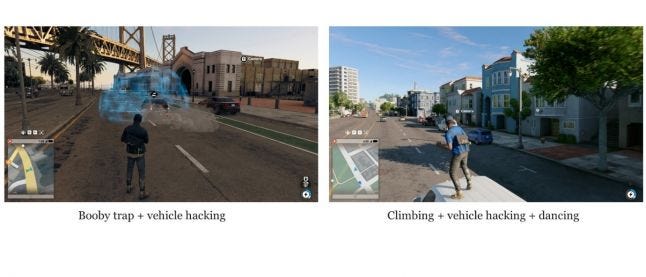

Input patterns that give basic controls a new dimension. For example, in Watch Dogs 2, you can place explosives on a car, hack its steering, and make a moving booby trap (on the left). Or, you can climb onto the vehicle’s roof, hack its steering, and then dance on the roof while driving through the streets of San Francisco (not the most useful input pattern, but you see the room for expression, right?).

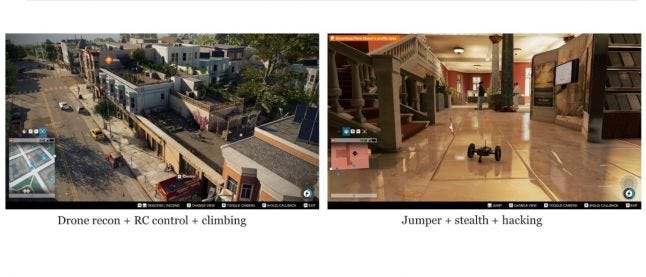

By changing goals and context, we change the very set of necessary control skills. For example, the level on the left requires a combination of skills: drone recon to find the right way, then the player needs to find a scissor lift and hack it to get to the roof, and then, finally, climb all the way up. All these are basic controls, but when they are put in a different context and combined in an interesting way, they give the player a fresh experience. In contrast, the level on the right requires a completely different set of skills: stealthy usage of the Jumper drone, which is able to do some “physical” hacking. Basic skills again, but the stealth context delivers a fresh experience.

“Game Feel: A Game Designer’s Guide to Virtual Sensation,” Steve Swink

“Beyond the HUD,” Master of Science Thesis, Erik Fagerholt, Magnus Lorentzon

“Level Up! The Guide to Great Video Game Design”, Scott Rogers

(GDC 2009) Aarf! Arf Arf Arf: Talking to the Player with Barks

(GDC 2016) Overwatch – The Elusive Goal: Play by Sound

(GDC 2010) Great Expectations: Empowering Player Expression

(Game UX Summit 2016) Human Factors Psychology Tools for Game Studies
