
Featured Blog | This community-written post highlights the best of what the game industry has to offer.

In training game design students, a major obstacle was proving to prospective employers that they are capable of creating good designs. This article summarizes two years of research into training designers to prototype and test their designs on their own.

(This is a repost from http://www.hilmyworks.com/how-design-students-are-taught-to-prototype-on-their-own/)

I am a lecturer teaching Game Design at KDU UC Malaysia, and about four years ago I took on the task of training game design students. The School provided various forms of training for design students. These included student projects with artists and programmers, and classes on subjects such as World Creation, Level Design and Systems Analysis. Even so, there was one issue in training designers that I had to address.

The Problem Statement

There are three problems I found with training design students:

Group projects could fail in ways that a designer couldn't control. The designer could do good work and the game could still fail due to ineffective management, lack of resources or team conflict. The opposite could also be true: a good game may not be the result of a good designer, but of intervention from the other members. The success of a team game project is not a reliable way to measure the ability of the designer.

Regardless of what a University student studies, a Degree graduate is supposed to graduate alone. It simply will not do to have a design graduate who says 'I'll need a programmer and an artist to prove my design will work'. As a former IGDA Chapter Coordinator, I have met designers who cite that exact statement as the reason they couldn't find work. That's not an acceptable situation for a game design graduate.

I have noticed that the local game dev students I've taught are generally inexperienced presenters. In his GDC 2015 talk on designing design teams, Richard Carrillo clarified what I had realized over time. Only a subset of game designers, whom Carrillo terms Salespersons, can sell ideas to others, and the rest will struggle. Designers are required to be articulate, since they have to explain their work to their fellow developers. Design students therefore have to learn to communicate at a higher level than their classmates. They will have to get there eventually. In the meantime, though, some students may have a good design in mind but be unable to communicate it properly, and so be unable to prove their design works.

In summary, I would have students who must graduate alone. They must show expertise in creating work that can only be proven by others working on it, and they may be unable to communicate what they can do.

I had been aware of this issue since 2012, and so went looking for solutions.

Two Possible Solutions

There were two implementation paths that seemed potentially useful: 1) Prototyping + Analyzing Feedback and 2) A More Efficient GDD Process.

1) Prototyping and analyzing feedback. The simplest approach is the "design-test-iterate" mantra mentioned by Kim Swift of Portal fame. Ideally, design ideas should be tested and improved based on test analysis. The keyword here is validation: confirming that what was conceived actually works. So the question is: what is the most effective way for a designer to validate his or her design?

One developer I posed my problem to back in 2013 was Chris Natsuume of Boomzap. His answer was simple: any designer who wants to design a game should have tried to make it. It doesn't matter whether it's done in a simple game engine or as a paper prototype. Either way, they should have taken the step to see whether or not the design works. This fits his company's approach (see "Quick and Dirty Prototyping: A Success Story" by Paraluman Cruz), and he has stated on Reddit that his approach to ideas is to prototype them.

A useful example of this was Blizzard's development process in creating Hearthstone ("Pen, Paper and Envelopes: The Making of Hearthstone" by Mark Serrels). It is a clear case of designers working alone. With no artists or programmers available for a time, they produced a working, playable prototype on their own.

But the newest members of Team 5 weren't prepared for what came next; no idea how far Eric and Ben had taken it. It subverted almost every idea Team 5 had about the traditional process of game design.

Eric pointed to a computer in the corner of the room, running a flash version of Hearthstone. In everyone's absence Eric and Ben Brode had been busy. They had essentially prototyped and then built everything Hearthstone was going to be in Flash.

In short: the game was already finished.

"We pretty much pointed at the computer and said — 'the game is done'," laughs Eric. "Just remake that game over there."

It was the end result of that rapid, brutally efficient iteration process.

This was a strong indicator to me that a valuable ability for a designer is implementing their own designs in order to demonstrate them to others. Handily, it also solves the communication problem: the best way to convince others your game design works is to show it working.

Another key element I was advised to implement was feedback analysis. Again, while asking around in 2013 at Casual Connect Asia, a game dev conference held in Singapore, I was told that companies also look for designers who can adapt a design to feedback. That means collecting feedback, analyzing what the feedback means, devising a design solution and implementing it in the game.

Researching this, I found interesting examples: both Shigeru Miyamoto and Sid Meier design based on feedback.

At GDC 2009, Satoru Iwata gave a talk on Miyamoto's design process. One method he highlighted was called "Random Employee Kidnapping": Miyamoto would randomly pick employees to play a prototype and watch how they played "Over The Shoulder" ("Nintendo's Development Secrets, Cloud Gaming & new Killer NIC - GDC '09" by Anand Lal Shimpi & Derek Wilson).

In Polygon's article on the development of XCOM ("The Making of XCOM's Jake Solomon" by Russ Pitts), it was revealed that not only did Sid Meier work on his designs based on feedback...

One of the lessons Jake Solomon learned over the years from Sid Meier is that, as a designer, you can't ignore the feedback. Feedback is fact. If someone has a feeling about your game, that feeling is irrefutable. It is something you must take into account. And if enough people have the same feeling, then there's a problem and it must be addressed.

...but he also created prototypes on weekends to solve design issues. By himself.

"[Meier] said, 'Look, OK. We are both programmers,'" Solomon recalls. "It was a Friday. He says, 'Let's go home over the weekend. I'm going to write a prototype. You write a prototype. We'll get back together. We'll play each other's prototypes and we'll see what we think.'"

Meier and Solomon, both workaholics, spent their respective weekends writing XCOM strategy game prototypes and then compared notes the following Monday. The decision: They would do it Solomon's way. The team immediately began working on turning Solomon's real-time prototype into the final version that exists in the game — with a few additions stolen from Meier's turn-based version.

I would be remiss not to teach design students how Shigeru Miyamoto and Sid Meier do game design. Since they do a lot of prototyping and testing, that is what I needed to introduce.

2) A more efficient Game Design Document (GDD) process. Back in the early 2000s, I consulted design books and other developers on how to teach game development, and a common piece of advice was to create Game Design Documents (GDDs). When I was a Producer, the GDD was essential to the process, from answering RFPs (Requests For Proposals) to maintaining a Master Document of what development should work on.

Even back then, there was an issue with 'wastage': designs that were detailed and implemented but did not make it into the final game. In company discussions, there was interest in figuring out how to reduce 'wastage' in order to minimize wasted development work.

In teaching the current game design students, another problem cropped up: design documents were not being read. Even my game design lecturer colleagues agree that GDDs often go unread by the development team members themselves. Chris Natsuume also cited this, recommending prototypes over written design docs. Yet, since companies ask for GDDs to assess designers, the process is maintained.

However, when researching Miyamoto and Meier, another fact came up: they do not rely on GDDs.

"IGDA Leadership: Firaxis' Caudill on doing it 'Sid's Way'" by Chris Remo.

"There is absolutely no design document whatsoever" when it comes to Meier's work, Caudill said. "The game design document lives in Sid's brain. The publisher would say, 'Can we have the document?' and I'd say, 'Well, I'd have to chop Sid's head off.'"

As a result, Firaxis goes to great lengths to ensure the in-progress prototype is never broken, because when it is, it slows down Meier's ongoing design process, which proceeds at an uncommonly rapid pace.

G4TV's Live Blog: GDC 2009 Keynote - Nintendo President Satoru Iwata

9:23 BL: 2. Personal Communication - Miyamoto never creates a design document. Instead, he gathers a small team and talks it through with them.

9:24 BL: 3. Prototype - Miyamoto prefers to create a prototype with a very small team (sometimes just 1 programmer) to produce a playable prototype with a "core element of fun". Miyamoto has multiple projects in this stage at one time.

Obviously there are documented examples of paper specifications by Miyamoto, but the GDD does not seem to be the primary process these developers use to create a fun game. I have to admit that after relying on GDDs for almost a decade, and seeing how they are used in development and business, the idea of planning out a game design without a GDD was a mindbender.

But facts are facts, and my job is to teach game design students how designers create games. If certain successful designers who have made a difference in the industry favor a particular workflow, I need to teach it.

From my research, it was clear why the GDD did not seem necessary: a prototype answers many of the questions that would otherwise be written into the GDD. An intern documented Senior Designer Kent Kuné's prototype demonstration ("On Prototyping and Coding Your Own Ideas" by Adriaan de Jongh) and detailed why prototyping solved various communication issues.

"But Kent decided to make a prototype of the main character to get a practical view on his vision, as well as showing (rather than telling) the team what was in his mind. He understood that 1) his words fell inherently short when he had to accurately express his vision to the rest of the studio. As the game designer, he asked them to do things with his ideas without understanding how they were doing it. (Making beautiful things with code, art, and audio is a mysterious craft to many). And on top of that, he knew that 2) his vision of the game was very likely to change the moment he would see it in front of him.

Kent's prototype showed his vision of the playable character extremely well. By programming the prototype himself, he was able to make a thousand tiny choices with his vision of the character in mind, without ever having to put his vision to words. And, because he had created the prototype, he could now talk about and show the rest of the team everything he coded, from the general structure to the tiniest detail: how his vision changed over the course of creating the prototype; how he iterated on the acceleration; how quickly the head rotated relative to the body; where the pivot points were located; how the feedback of the gun worked; and many other things."

Not only would a prototype help other developers on the team understand implementation specs that would be difficult to put into words, it also offers an opportunity for design students with communication difficulties. They can just let the prototype speak for itself.

I was willing to reduce the importance of GDDs in my classes, as other design lecturers already cover documentation for game development in theirs. Teaching a variety of approaches would also benefit students. However, I couldn't ignore the GDD's role in tracking the work that needs to be done on a game. There has to be a minimum guide that keeps a designer's progress on track, so that planning and execution can be compared. I therefore wondered whether there was such a thing as a minimum design document. It would be a small document format that is fast enough for a designer to adapt as needed, yet contains enough detail to track what is being developed for the game.

With both solutions in mind, I started looking for design methodologies that would tell me how to implement the two solutions.

It turns out there's one method that covers both really well in one process.

The Cerny Method

I had read snippets about Mark Cerny's work in Wired ("Exclusive: The American Who Designed the Playstation 4 and Remade Sony" by Cade Metz). I had also heard of the legendary "70-row-long Spreadsheet" ("Naughty Dog Designer Maps Out Uncharted 2 Development" by Shaun McInnis), which was cited as the only design document they had. It wasn't until I needed to solve the problems above that I found the Cerny Method to be the solution I needed. "Method", as Mark Cerny calls it (http://www.slideshare.net/holtt/cerny-method), provides two Preproduction Deliverables:

1) A Publishable First-Playable after rapid prototyping, as a proof of concept.

2) A Macro Design Document before any Micro Design Doc work is done, as confirmation of what's needed in the game.

Method made it clear that Production and Preproduction are two separate phases, and that only certainty about what to develop will move the development team from Preproduction to Production. I therefore surmised that a prototyping designer should clarify what works and what doesn't by experimenting with prototypes. While experimenting, the designer should maintain a Macro Design Document of the prototype's features to track changes during a Design-Test-Iterate process. This continues until the designer can produce the two finalized Preproduction Deliverables, which should crystallize the working, fun features of the game.

In theory, that's how I thought it should work. The next step is to run this with the students and confirm how well it works.

So back in 2013, after interviewing developers and conducting preliminary research, I started an implementation run with the design students.

Class methodology

This was the process I came up with:

- Identify a pillar feature of a genre, and figure out how to implement it easily in a simple game engine.

- Guide students on implementing the pillar feature, but let them embellish it however they will.

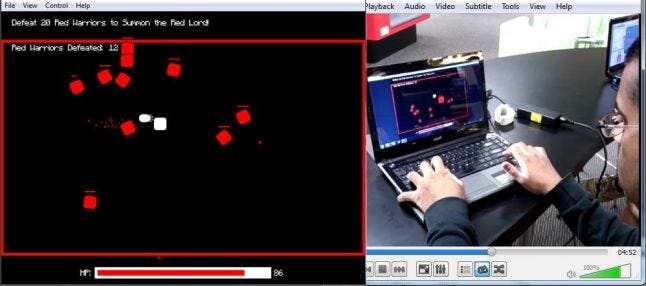

- Follow the Method: have students produce weekly playable builds and video-test them. Tests are recorded on handheld mobile phones, the grungiest method there is. This teaches students they don't need sophisticated software to do good testing, and it also emulates Miyamoto's "Over The Shoulder" testing practice.

- Students show videos of their testing and demonstrate - based on evidence - what was working and not working with their current build. Here, the lecturer can determine - by comparing the recorded videos and the students' notes - whether or not the students were making good assessments on how the players are reacting to their games.

- Lecturers and students discuss the best way to respond to the reactions. Students retain the option to design as they wish, but they are required to use subsequent testing sessions to prove their designs are successful.

- Students go and iterate their build, and the cycle repeats.

The number of iterations ranges from 5 to 10 weeks, depending on the complexity of the game. To make this work, students simply cannot plan a game that will take several weeks to flesh out: they have to make it work every week. This fits the Agile Methodology mindset, where they work toward deliverable features and kill features they cannot deliver.

The tool of choice is Stencyl (http://www.stencyl.com/), a 2D visual-scripting game engine that is easy for non-programmers to learn and outputs SWF files, allowing easy distribution.

Results from 2013-2014:

I started implementing and iterating on the Method adaptation in mid-2013.

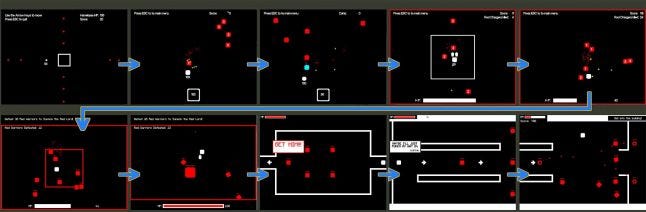

Top left: early iteration of a platformer. Top right: later iteration of the same platformer, with a text aid to help players understand what to do (as Usability Testing proved it was necessary). Bottom: testers trying out the game in a Usability Testing session.

That session showed results. The designers were forced to keep their implementations simple as they needed to show the designs were playable weekly. Since the designs were playable, testers were able to point out what was wrong with the designs easily. Since feedback came early, the designers were able to respond and tweak their designs to generate more positive feedback.

The real treasure from the first year of this process was identifying the most basic mistakes a designer can make. By requiring Usability Testing, the designers learned very quickly that teaching players how to play matters more than showing how cool the game is, because players give up on games they can't understand. Usability Testing became a humbling process in which they saw players fail to understand how the game mechanics worked. Since this confusion was recorded on video, they couldn't ignore it during review. Once the problem was established, the designers could be directed to investigate ways to fix it in the next iteration.

Recorded video also documented moments of delight. In the image above, bottom left, the tester was exclaiming how much they liked the way the character spun around while jumping. The assets were in a rough state: the 'spin' was simply three frames of black 'eye' dots moving in front of a rectangle. From this, the designer learned that he could create delight for the player with very simple steps. This is handy, as it provides evidence that making players happy need not be an elaborate affair.

The first few semesters of trying Method established standards for what designers should and should not implement in their prototypes, and I was able to teach those standards to future classes.

This page, http://gamedev.kdu.edu.my/404/, contains work from design students who used the Method in 2014. It is not a 'Page Not Found' page: as college humor goes, that's the name the students voted for. The page was made in 2015 for a promotional exercise in a class by fellow staff member and game designer Johann Lim, with assistance from Program Leader Yee I-Van. The students scrounged through their older game files to build their promotional pages.

The games are of varying quality, but since they were made using Method, all of them are proven playable. Since they were built by solo designers, each stands as an example of what an individual designer can do.

Take the first game: Ponch (http://gamedev.kdu.edu.my/404/ponch/2015_ponch.htm). The version on the site is the 10th iteration. That version is the designated Publishable First-Playable, a.k.a. the version the designer can share with others so that they understand what the game is about.

If the designer is asked 'How did you design this game?', the designer can show 10 iterations of builds, from the base mechanics test to the final iteration. With these builds ready, the designer can easily show how he or she evolved the design into its final publishable form. Since each build is playable, any prospective employer can check and understand the state of each iteration by playing it.

(The fact that Stencyl publishes small SWFs is one of the reasons this tool was chosen: it allows the design student to store and share a large set of playable builds. A design student can easily carry the set, provide a link, or even embed the files in websites. Minimizing the friction of sharing playable concepts made communication easier.)

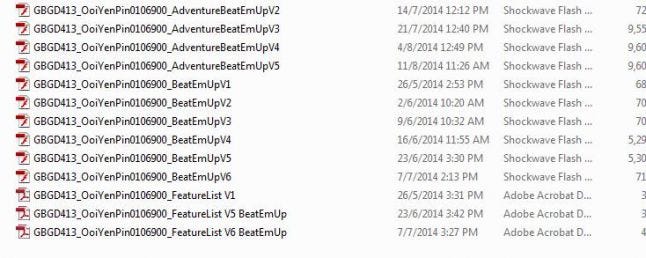

Each change was tracked in a Feature List document (above). It indicated which features were planned and which features the designer worked on, improved or cancelled. The key behind this document is that the designer should not waste time on details. He or she should only indicate what they're working on (yellow), what is done (green) and what was cancelled (red). The design student above added disabled (grey) for features that were implemented but switched off in order to deliver that week's build.
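The Feature List itself is a colour-coded spreadsheet. The same bookkeeping can be modelled in Python as a rough sketch only, not the tool the students used. Each feature keeps a per-iteration history of the four colour states described above. The names `State`, `Feature`, `mark` and `state_at` are hypothetical. The sample entries loosely follow the enemy-variant example that follows and are not copied from the real sheet.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class State(Enum):
    """The Feature List colour codes."""
    WORKING = "yellow"     # being worked on this iteration
    DONE = "green"         # implemented and kept
    CANCELLED = "red"      # killed
    DISABLED = "grey"      # implemented, but switched off to ship this week's build


@dataclass
class Feature:
    name: str
    priority: str                                            # e.g. "Must-Have" or "Good-to-Have"
    history: dict[int, State] = field(default_factory=dict)  # iteration -> state logged

    def mark(self, iteration: int, state: State) -> None:
        """Record the feature's state for one iteration."""
        self.history[iteration] = state

    def state_at(self, iteration: int) -> State | None:
        """State logged at, or most recently before, `iteration`; None if not started yet."""
        logged = [i for i in self.history if i <= iteration]
        return self.history[max(logged)] if logged else None


# Hypothetical entries, loosely following the enemy-variant example.
enemies = Feature("Enemy variants", "Good-to-Have")
enemies.mark(3, State.DONE)        # working in Iteration 3
enemies.mark(4, State.DISABLED)    # switched off in Iteration 4
enemies.mark(6, State.CANCELLED)   # killed for good in Iteration 6

for week in range(1, 8):
    state = enemies.state_at(week)
    print(week, state.value if state else "-")
```

One simplification is assumed here: a week with no new entry inherits the last logged colour. A real sheet may colour every cell explicitly.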

By marking the state of the features, I was able to see how the design student thought about building the game. I could see which features they obsessed over and which they could clear easily. I could also see which they gave up on after long periods of implementation, shown as long yellow bars ending before the final week. For example:

Three features in the Good-to-Have section that had been developed to completion (Coin Pickups, Score and BGM) were disabled when the designer tried a different approach in Iteration 6. Of those three, only one feature was brought back.

The designer tried multiple variants of enemies (Good-To-Have). He made them work in Iteration 3, disabled them in Iteration 4, and killed them for good in Iteration 6. Note that the base enemy was completed and confirmed in Iteration 1 (Must-Have). Even so, some experiments did not pan out for design, even though they were technically complete.

When he tried newer enemies in Iteration 6 & 7 (Good-To-Have: Bosses, Red Warrior Behavior, Red Lord Behavior), he cleared and killed the features a lot faster.

The designer wanted a special attack. He worked on it four weeks straight, killed it, brought it back to work on it again, and for the Final Publishable version, killed it. He clearly wanted it, but after working so long, he had to make a call to nix it in order to make the final delivery work.
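Spotting 'long yellow bars' that end in red can be done by eye on the sheet, but a hypothetical helper makes the idea concrete. The sketch assumes each feature's row is a list with one colour string per iteration, with `""` meaning no entry that week. `longest_run`, `given_up_after_long_effort` and the three-week threshold are illustrative choices. The sample log is invented and is not Ponch's actual data.

```python
from __future__ import annotations


def longest_run(row: list[str], colour: str) -> int:
    """Length of the longest consecutive run of `colour` in one feature's row."""
    best = current = 0
    for cell in row:
        current = current + 1 if cell == colour else 0
        best = max(best, current)
    return best


def given_up_after_long_effort(log: dict[str, list[str]], min_weeks: int = 3) -> list[str]:
    """Features whose last recorded state is red after >= `min_weeks` consecutive yellow weeks."""
    flagged = []
    for name, row in log.items():
        recorded = [cell for cell in row if cell]  # skip blank cells (no entry that week)
        if recorded and recorded[-1] == "red" and longest_run(row, "yellow") >= min_weeks:
            flagged.append(name)
    return flagged


# Hypothetical log: one colour per iteration, "" = no entry. Not the real Ponch data.
log = {
    "Special attack": ["", "yellow", "yellow", "yellow", "yellow", "red", "yellow", "yellow", "yellow", "red"],
    "Bosses": ["", "", "", "", "", "yellow", "red", "", "", ""],
    "Base enemy": ["green"] * 10,
}
print(given_up_after_long_effort(log))  # ['Special attack']
```

Lowering `min_weeks` to 1 also flags features that were cleared and killed quickly. The threshold is what separates long, costly experiments from fast ones.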

This is a designer learning by experience how to do Production. He is learning every week the value of every feature: how appealing the feature is vs how difficult it is to make. He has to make a call when to kill a feature no matter how much he wanted it. He disabled certain features - even though they were complete - in order to submit a playable build in one of the mid-iterations.

The situations described above could easily arise in Production with a team, where features might be worked on for weeks before being killed, leading to expensive wastage. Here, the designer takes responsibility for clarifying whether or not the feature he came up with is truly necessary for the game. The process teaches designers to thoroughly test their proposed features before passing them to a Production team.

This approximates Sid Meier's "Finding the Fun" (http://www.gamasutra.com/view/news/114402/Analysis_Sid_Meiers_Key_Design_Lessons.php), the process in which the designer analyzes their design to determine the fun within it.

Good games can rarely be created in a vacuum, which is why many designers advocate an iterative design process, during which a simple prototype of the game is built very early and then iterated on repeatedly until the game becomes a shippable product.

Sid called this process "finding the fun," and the probability of success is often directly related to the number of times a team can turn the crank on the loop of developing an idea, play-testing the results, and then adjusting based on feedback.

At the end, the designer has a set of green features: the ones present in his or her final iteration. By compiling the green features into a specification sheet, the designer has what I understand a Macro Design Document to be. It is a set of design elements that have been tested and proven to work together to provide compelling gameplay.
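The compilation step is mechanical enough to sketch. This assumes the final iteration's row of the Feature List can be exported as `(feature, priority, colour)` tuples, which is a hypothetical export format. The function below keeps only the green entries and groups them by priority into a plain-text spec sheet. The feature names are illustrative and are not the actual Ponch list.

```python
from __future__ import annotations

from collections import defaultdict


def macro_design_document(final_iteration: list[tuple[str, str, str]]) -> str:
    """Plain-text spec sheet of the features that are green in the final iteration.

    Each entry is (feature, priority, colour); only colour == "green" is kept.
    Priorities appear in the order they are first seen.
    """
    sections: dict[str, list[str]] = defaultdict(list)
    for feature, priority, colour in final_iteration:
        if colour == "green":
            sections[priority].append(feature)
    lines = []
    for priority, features in sections.items():
        lines.append(f"{priority}:")
        lines.extend(f"  - {name}" for name in features)
    return "\n".join(lines)


# Illustrative final-iteration export (hypothetical feature names).
final = [
    ("Player movement", "Must-Have", "green"),
    ("Base enemy", "Must-Have", "green"),
    ("Special attack", "Good-to-Have", "red"),
    ("Double jump", "Good-to-Have", "green"),
    ("Wall jump", "Good-to-Have", "grey"),
]
print(macro_design_document(final))
```

Red, grey and yellow entries are dropped deliberately. Only features that are present and working in the final build belong in the Macro Design Document.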

These features have been tested, and the final build can show whether or not the game is fun as a whole. There is therefore strong assurance that development on the features listed in the final Feature List will not be wasted.

Furthermore, six out of ten iterations went through Usability Testing. This means the designer has an archive of test results to demonstrate the basis of their design decisions. This is more powerful than argumentation; the designer would be able to show a link between a problem in design, a solution implemented and a result from testing the next iteration.

In other words, suppose other team members argue over whether a feature is necessary. Instead of debating with the designer, they can play previous builds that have the feature, or watch the test videos to see why the feature didn't work.
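One way to keep that problem, solution and result link explicit is to record it per decision. The sketch below is a hypothetical structure and is not part of the original method. Each `DesignDecision` ties a problem seen in one iteration's test video to the change made and to the later test that checked it. `trace` lets a teammate pull up every decision that mentions a feature. The example entry paraphrases the platformer text-aid case from the image caption above. Its iteration numbers, result wording and file names are invented for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class DesignDecision:
    """Problem seen in testing -> change made -> result of testing a later build."""
    problem: str                      # what a usability-test video showed going wrong
    found_in: int                     # iteration whose test exposed the problem
    solution: str                     # design change made in response
    verified_in: int                  # later iteration whose test checked the change
    result: str                       # what that later test showed
    evidence: tuple[str, ...] = ()    # hypothetical build/video file names

    def __post_init__(self) -> None:
        # Evidence for a change must come from a later build than the one that exposed the problem.
        if self.verified_in <= self.found_in:
            raise ValueError("verified_in must be a later iteration than found_in")


def trace(decisions: list[DesignDecision], keyword: str) -> list[DesignDecision]:
    """Decisions whose problem or solution mentions `keyword` (case-insensitive)."""
    kw = keyword.lower()
    return [d for d in decisions if kw in d.problem.lower() or kw in d.solution.lower()]


decisions = [
    DesignDecision(
        problem="Testers did not understand what to do in the platformer",
        found_in=1,
        solution="Added on-screen text hints",
        verified_in=2,
        result="Testers progressed without asking for help",
        evidence=("build_01.swf", "test_01.mp4", "build_02.swf", "test_02.mp4"),
    ),
]
for d in trace(decisions, "text"):
    print(d.found_in, "->", d.verified_in, ":", d.solution)
```

The check in `__post_init__` encodes the point made above. Proof that a change worked comes from testing the next iteration, not the build that showed the problem.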

If the request is for a new feature, the designer can be allocated time for more iterations. My design students average 3-8 hours of development time per iteration, which translates to roughly 0.5-1 working day. The team can decide whether to allocate more iteration time to refine more features, and whether to add extra members to speed up the development of an iteration.
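For planning, the per-iteration figure turns into a simple range estimate. The sketch assumes an eight-hour working day, which the post does not state. It also assumes, optimistically, that extra team members split an iteration's hours evenly with no coordination overhead. `extra_iteration_days` is a hypothetical helper. Under these assumptions, 3-8 hours per iteration comes to about 0.4-1 day, close to the 0.5-1 day figure above.

```python
from __future__ import annotations


def extra_iteration_days(iterations: int, hours_low: float = 3, hours_high: float = 8,
                         hours_per_day: float = 8, developers: int = 1) -> tuple[float, float]:
    """Return (best case, worst case) working days for `iterations` more iterations.

    Assumes `hours_per_day` hours per working day and that `developers` people
    split each iteration's hours evenly (coordination cost is ignored).
    """
    if iterations < 0 or developers < 1 or hours_per_day <= 0:
        raise ValueError("need iterations >= 0, developers >= 1 and hours_per_day > 0")
    capacity = hours_per_day * developers          # team hours available per working day
    return iterations * hours_low / capacity, iterations * hours_high / capacity


print(extra_iteration_days(1))                # (0.375, 1.0)
print(extra_iteration_days(4))                # (1.5, 4.0)
print(extra_iteration_days(4, developers=2))  # (0.75, 2.0)
```

Dividing by `developers` is the optimistic part. In practice, adding people to an iteration rarely scales linearly, so the two-developer figure is a lower bound.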

The designer therefore has four deliverables for demonstrating good design:

A Publishable First-Playable that communicates what the game is about, that can be played without assistance.

A Feature List, a.k.a. Macro Design Document, indicating the critical features within the game.

A Development Log within the Feature List showing the evolution of design, indicating how the designer thought about the design of the game.

An archive of weekly builds and test videos, demonstrating proof of what worked and what didn't in each iteration.

Does it solve the problem?

The three original problems were:

Game design graduates need to show proof their designs would lead to a good game.

Game design graduates need to show they can do work without needing others.

Game design graduates need to communicate their design ideas when they are inexperienced in communication.

This is how this implementation of Method solved these issues.

By creating prototypes and conducting usability testing, the design students can demonstrate to others how their designs work, and prove they work with a Publishable First-Playable build.

By learning to prototype and conduct testing by themselves, design students can test design ideas and analyze the results, even to the point of creating working versions of their designs. They can do this on their own, so studios don't need to allocate extra resources for the designers to do this work.

The collection of deliverables (Final build, iteration builds, test videos and a development log) will allow others to direct questions on specific parts of the project, and since the design student personally worked on the project, they can provide specific answers to specific questions. More importantly, playable builds and test videos communicate plenty of information and all the design student has to do is to append explanations to them upon request.

These are the results of researching the prototyping process for individual design students up to the end of 2014.
