In this technical piece, the team behind idiosyncratic MMO success EVE Online discusses precisely why sharing a single world between all of its players makes sense.

August 9, 2010

Author: CCP Team

[In this much-referenced technical piece originally published in Game Developer magazine late last year, the team behind idiosyncratic MMO success EVE Online discusses precisely why sharing a single world between all of its players makes sense.]

Most of the larger massively multiplayer online games use separate instances, or shards, of the game's universe in order to manage player populations and server issues. We feel that a single shard should be the natural choice of any MMO developer, and that's what we do with EVE Online.

When you ask the question "Why a single-sharded architecture?" it's also informative to look at the deeper question: "Why have shards?" There are two main reasons why a developer chooses a sharded implementation of a game -- lack of content and technical challenges. These are actually inter-related.

Most current MMOs take place in environments essentially limited by strong physical constraints: avatars moving across earth-like landscapes or within enclosures like buildings. Furthermore, within these specific environments, players are confronted with a multitude of scripted activities such as quests and NPC encounters that only take place there.

The most limiting physical constraint concerns avatar density. This is both a technical problem and a usability problem. Players do not want to constantly navigate an overcrowded environment. In order to keep avatar density within reasonable limits, you either need a very large playing field or a limitation on the number of players in a given field. Both of these options are restricted by the amount of content you can design, and since content is the biggest cost in modern games, this quickly becomes a financial limitation.

The obvious solution is to have procedurally-generated content, such that you can essentially have a playing field as large as you want. The drawback with that approach is that you will most likely never reach the same artistic level displayed in hand-crafted environments, and scripted activities might become repetitive and lack context.

The real solution to this problem is to embrace the notion that in an MMO, just like in any other social network, players are the content. Once that is accepted as a fundamental design guideline, it becomes easier to navigate the challenges involved in creating and maintaining a single shard architecture and actually gives the advantage to that design model.

Looking more closely at this assumption, we can identify two types of content generated by people: material content, which we describe as persistent user-created assets within the world, and social content, here considered as persistent patterns of social interactions.

The first one is easy to comprehend. However it is implemented, persistent player-created content can populate large playing fields and make the world more "meaningful" for large groups of other players. This is the case in RTS games, where the backdrop may be relatively bland and automatically generated.

In EVE, for example, a lot of the high-end gameplay revolves around conquest and control of territory in unregulated areas of the map. By choosing where to place primary space stations, players shape the topography of the strategic battlefield. In selecting the position of those stations' supporting starbases and the configuration of their offensive and defensive systems, they shape the tactical context in which critical battles occur.

The second type of content, social content, is the most potent, but also requires careful design. The field of social interaction encompasses a very wide range of activities and concepts:

Pure socialization, such as chat and messaging

Combat between players or cooperative combat against the environment. This scales all the way from 1v1 combat to conflicts between factions numbering thousands of players

Economic activities

With socialization, the main "content" is the social tapestry that materializes in buddy lists, membership of player associations, or guilds and forums. For all of these, a single shard adds to the richness of the content because players don't need to be split between servers; they can discuss issues and share experiences that arise in the shared world that are relevant to the whole player base rather than a specific server -- essentially giving them a shared history as a whole society rather than a disjointed one based on smaller server populations.

Furthermore, gaining fame becomes much more rewarding due to the size of the audience, thus strengthening the impetus to do so. The technical challenges to creating a single shard communication infrastructure should not be underestimated and we address them later in this article.

As part of CCP's efforts to nurture the development of the functioning society formed by EVE's player base, a democratically-elected player council was formed to act as representatives of player interests in the development process.

The single-sharded nature of the game enables the formation of a single coherent society and makes it much more likely that the elected players will form a representative cross-section of the interests of the electorate. Because everyone is sharing a single server, and thus a single social context, the community has a common baseline for discussion and debate, and famous figures are more likely to be known to the entire player base rather than just fragments thereof.

We have also seen the benefits of single-sharding with combat, in the form of increased complexity of conflicts in terms of both space and time. This heightened complexity results in a variety of roles within a conflict and less routine in waging it. In EVE we have had wars involving tens of thousands of players, pitched against each other for several years.

Whether a player contributes as a grunt on the front, a middle-man in logistics or as a long term strategic planner is up to them. The size and longevity of such conflicts clearly sets them apart as true content. Instead of being ephemeral and soon-to-be-forgotten skirmishes, these have become epic stories that fascinate players and build up reputation and true in-game power. Again, providing for the sheer scale of these encounters involves technical challenges, both on the server and client side.

For instance, one of the pivotal wars in EVE (referred to without apparent irony as "The Great War") is generally agreed to have started in late 2005 when an old, established alliance of player corporations decided to eliminate a new but growing power for political and ideological reasons. The conflict snowballed, expanding its theatre of war, ebbing and flowing as some groups surrendered or collapsed and fresh ones joined the fray.

The organizations directly involved in the fighting at this stage contained, between them, just over 30,000 player characters. And, just as many groups were drawn into the fighting by the opportunity to settle old debts from previous wars, events from these three years of continuous warfare will likely fuel future conflicts.

Finally, there is the economy. Even though many people don't realize it, the economy is truly the pinnacle of social interaction. This is of course assuming that it is player-driven rather than being dictated by the designers. It is only in a truly player-driven economic environment that price fluctuations of items and commodities realistically start to reflect the sum total of the socio-economic landscape of the world. The market becomes a mirror of the activities of all participants in the game and it acts to change players' actions by its reflection.

Such a player-driven system doesn't strictly require a single shard to function, but it is catalyzed by the extended size inherent to single-sharding. A small economy will be manipulated by a few strong players and exposed to large fluctuations and instabilities. The larger the economy gets, the more resilient it becomes. Once beyond those instabilities, it truly starts to reflect the macro-economic landscape of the game-world, becoming an all-pervading, autonomous, and ever-changing mass of social content that no designer could ever think of hand-crafting.

In line with our design goal to have a single shard in EVE, we quickly decided to go with procedurally generated content for the physical landscape of the game's universe. Fortunately, space lends itself rather well to that. The natural distance scales in a typical galaxy make aggregation points emerge naturally, so that the whole logic of a solar system can easily be run within one process space. For clients this goes down to an even finer granularity, as they only need to physically simulate their closest surroundings.

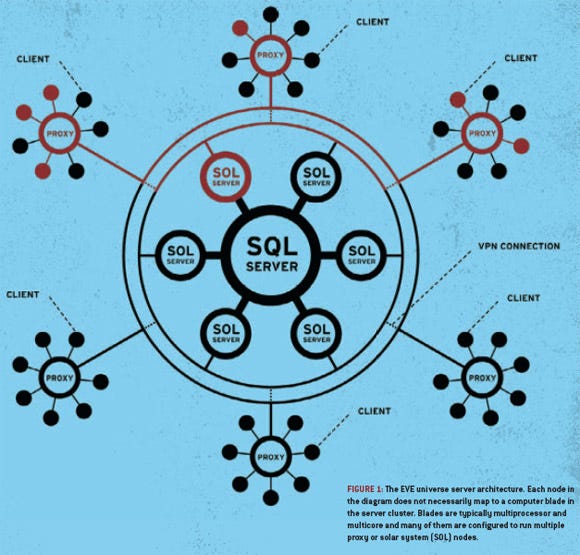

But apart from that, the whole back-end logic abstracts the notion of servers, such that requests within specific game logic (either on client or back-end) are transparently mapped to different nodes depending on context. We have a distributed logic running on top of a cluster of nodes. All data manipulated by these nodes is read and written to a single database that binds the whole world together (see Figure 1).
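The idea of mapping a request to a node "depending on context" can be pictured with a small sketch. This is not CCP's code: the `Cluster` class, the node names, and the numeric system id are all hypothetical, and a modulo over the node list stands in for whatever placement logic the real cluster uses.

```python
from dataclasses import dataclass, field

JITA_ID = 1001  # hypothetical id, used only for illustration


@dataclass
class Cluster:
    """Hypothetical registry mapping a context (a solar system id) to a node."""
    nodes: list
    # Explicit placements, e.g. a busy system pinned to a particular node.
    pinned: dict = field(default_factory=dict)

    def node_for(self, solar_system_id):
        # Pinned systems win; everything else is spread deterministically.
        if solar_system_id in self.pinned:
            return self.pinned[solar_system_id]
        return self.nodes[solar_system_id % len(self.nodes)]

    def call(self, solar_system_id, method, *args):
        # A real cluster would send this over the network; the sketch only
        # reports where the call would be dispatched.
        return (self.node_for(solar_system_id), method, args)


cluster = Cluster(nodes=["sol-01", "sol-02", "sol-03"],
                  pinned={JITA_ID: "sol-jita"})
print(cluster.call(JITA_ID, "warp_to", "station"))
print(cluster.call(7, "warp_to", "gate"))
```

The caller only names the solar system it cares about; which process actually runs the logic is decided inside `call`, which is the sense in which the notion of a server is abstracted away.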

Some might argue that a single solar system thus acts as a kind of shard, but that is not correct. Any player from the global player base can enter any solar system, and all economic activities will have immediate repercussions on the whole economy.

Furthermore, all the social structures mentioned above are truly global and transparently cross system boundaries. The solar system does introduce "crowding" problems, but these are problems that cannot really be solved except by game design.

For all practical purposes, the whole cluster acts and feels like a single process space. But this is not to say that this approach hasn't had its challenges. Here are a few.

As we noted earlier, player density can be a real challenge from a technical perspective as well as a game usability perspective. In EVE, we run into two general kinds of harmful player clustering which cause design headaches. The first is what used to be called the "Yulai problem."

A few smart players determined that the Yulai solar system was particularly well connected to the various areas of the star cluster, and started selling their wares there in bulk. Those few canny marketeers on their own weren't a problem, but it didn't stay that way for long -- as more buyers visited the system, more sellers set up shop there.

As more sellers flocked in it became more desirable to buy there. Pretty soon it seemed like the entire playerbase was shopping in Yulai, and that system's population made up a noticeable percentage of the total online playerbase at any given time.

In an effort to curb the growth of this trade hub before it started causing serious server issues, we made some changes to the map by shifting jump routes around to lessen the appeal of Yulai. Within a few months the "Yulai problem" became the "Jita problem," as players figured out the new best location and moved all their business there instead.

The formation of such hubs seems to be an inherent human phenomenon. While we still have regular design discussions about effective ways to distribute market activity more evenly without distorting the market itself, it is ultimately something that we solved with hardware and software.

The second clustering issue is almost the polar opposite to the problem of market hubs -- that of huge battles for strategic objectives. Whereas hubs are permanent and predictable fixtures, fleet-sized combat tends to be transitory and unpredictable. In our case at least, the emergent gameplay that delivers such value regularly compels huge political power blocs numbering thousands of players to make spirited attempts to beat the life out of their rivals.

Without warning, a particular system's population will shoot up from maybe half a dozen players to over 1,200, generating a huge spike in server load before rapidly dropping back down to its original level. The abstract design solution to this is to spread the combat out across multiple systems simultaneously, but this is directly opposed to the ageless military principle of "hit them with everything we've got." It is an ongoing challenge to figure out how to implement this in practice in such a way that it's actually beneficial for military commanders to split their forces under the majority of common circumstances.

A large-scale single-sharded environment is not without its unique challenges on the code side either. Here are several of the problems we faced in our implementation.

Running out of memory. Over the years, maximum memory usage on the nodes that run EVE's solar systems (Sol nodes) has been steadily increasing with the increased population and expansions to the game. In particular, solar systems such as Jita have been pushing the process memory usage up. When EVE launched, it ran on 32-bit Windows Server 2003, giving us a virtual memory limit of 2GB for each process. Later we upgraded to a 64-bit OS and this increased the limit to 3GB. But sometimes even this would not be sufficient, so we decided it was time to make the server processes 64-bit.

Initially we were slightly worried, as the physics simulation has to stay in sync on the client and the server. We were uncertain whether it would drift apart due to different code being generated for the complex mathematics involved. Eventually careful testing revealed that this would not be a problem and that the algorithms were numerically stable in such a mixed environment.

The release of the 64-bit binaries was without incident. In fact, performance increased quite a bit, due to the better optimization possible with a larger number of registers in 64-bit mode. Baseline memory consumption did rise, of course, because all pointers were now twice the size. However, we were finally free from the virtual memory constraint of 3GB. Now each process can allocate as much as it wants and never run out of address space.

As for physical memory, it turns out that the 4GB of physical memory on each machine -- which typically runs two Sol node processes -- is quite sufficient (as seen in Figure 1). Most of the allocated virtual memory lies dormant and there is little paging. We still haven't seen a node die because of page thrashing.

Programming asynchronous systems and distributing execution. Running logic on top of a cluster of distributed nodes means that a typical function call might cross process boundaries and even go across the public internet before returning. This calls for a lot of asynchronous programming, which is notoriously cumbersome to implement. This is where Stackless Python comes to the rescue.

At CCP we firmly believe that we need to make programming as simple and intuitive for the game programmer as possible. We decided early on that in order to create a complex game such as EVE we would need some kind of multi-threaded programming. We also recognized that using scripting for game-level code was necessary simply to get things done. We were very fortunate to come across Stackless Python at a very early stage in development and ended up using it for all game logic.

Stackless Python is a variation of the Python programming language that introduces the concept of "tasklets" and "channels." A tasklet is a thread of execution that is independent of OS threads, and tasklets communicate and synchronize with the help of channels. They consume no more memory than their execution stack and don't require kernel mode switching, which makes them very fast.

With impunity, a programmer can create a new tasklet to fork off any processing he or she needs to. Tasklets in EVE use cooperative scheduling, meaning that we only switch to another tasklet at known switching points. This virtually eliminates the need for the complex locking and synchronization that multithreaded programming so often requires.
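To make cooperative scheduling concrete, here is a minimal sketch in plain Python. It does not use Stackless itself: generators stand in for tasklets, each `yield` is a known switching point, and the `Channel` class is a simplified buffered queue rather than a real Stackless channel. All names here are illustrative assumptions.

```python
from collections import deque


class Channel:
    """Tiny stand-in for a channel: a FIFO that tasklets poll cooperatively."""

    def __init__(self):
        self.items = deque()

    def send(self, value):
        self.items.append(value)

    def receive(self):
        # Switch away (yield) until another tasklet has sent something.
        while not self.items:
            yield
        return self.items.popleft()


def run(tasklets):
    """Round-robin scheduler: resume each generator until all have finished."""
    queue = deque(tasklets)
    while queue:
        task = queue.popleft()
        try:
            next(task)          # run until the task's next explicit yield
        except StopIteration:
            continue            # task finished; drop it
        queue.append(task)


def producer(channel, count):
    for i in range(count):
        channel.send(i)
        yield                   # known switching point


def consumer(channel, count, results):
    for _ in range(count):
        value = yield from channel.receive()
        # No lock needed: no other tasklet can run until the next yield.
        results.append(value * 2)


results = []
ch = Channel()
run([consumer(ch, 3, results), producer(ch, 3)])
print(results)  # [0, 2, 4]
```

Because a switch can only happen at a `yield`, the consumer can update shared state without locking, which is the property the paragraph above describes.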

A particular case where this programming model shines is with I/O. Efficient I/O makes use of a nonblocking interface to the OS. On Windows, this often takes the form of sockets and I/O completion ports: A thread starts an I/O operation and then polls an I/O completion port to see when it is done. But from the programmer's perspective this is extremely complicated and error prone. A programmer just wants to send() and recv() and not worry about event loops and such things.

To facilitate this, we present the Python programmer with a blocking I/O interface that is in fact only tasklet-blocking. When a tasklet executes a socket.recv() function we issue an asynchronous I/O request to WinSock. We then suspend the tasklet, allowing another tasklet to run. Later, when we notice that an I/O request has completed, we prepare the result for the blocked tasklet and make it runnable again.

This way we can program discrete pieces of game logic with their own straightforward execution paths that just behave as you would expect, while behind the scenes we suspend and reschedule their execution, making good use of the operating system's resources.
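The suspend-and-resume pattern can be sketched in standard Python. This is an illustration under stated assumptions, not StacklessIO: generators stand in for tasklets, and the readiness-based `selectors` module is swapped in for WinSock completion ports. The helper names `recv`, `run`, `reader`, and `writer` are hypothetical.

```python
import selectors
import socket
from collections import deque


def recv(sock, nbytes):
    """Looks blocking to the caller, but only suspends the calling tasklet."""
    yield sock                  # ask the scheduler to wake us when readable
    return sock.recv(nbytes)


def run(tasklets):
    selector = selectors.DefaultSelector()
    runnable = deque(tasklets)
    waiting = 0
    while runnable or waiting:
        while not runnable:
            # Every tasklet is waiting on I/O: sleep until a socket is ready.
            for key, _events in selector.select():
                selector.unregister(key.fileobj)
                runnable.append(key.data)   # make the tasklet runnable again
                waiting -= 1
        task = runnable.popleft()
        try:
            wanted = next(task)
        except StopIteration:
            continue
        if wanted is None:
            runnable.append(task)           # plain cooperative switch
        else:
            selector.register(wanted, selectors.EVENT_READ, data=task)
            waiting += 1
    selector.close()


def reader(sock, log):
    log.append("reader waits")
    data = yield from recv(sock, 1024)      # reads like a blocking call
    log.append(data)


def writer(sock, log):
    log.append("writer sends")
    sock.sendall(b"ping")
    yield


a, b = socket.socketpair()
log = []
run([reader(a, log), writer(b, log)])
a.close()
b.close()
print(log)
```

The `reader` body is written as straight-line code, yet the writer runs while the reader is suspended, so the log records the reader waiting, the writer sending, and only then the received bytes.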

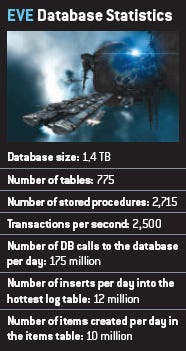

Designing and maintaining a real-time database. We chose a relational database to be at the center of the server architecture. The key focus with our database design is to keep things simple, as we strongly believe that simple is incredibly powerful, and easier to maintain. All important DB usage goes through stored procedures to make things as efficient as possible. There is not a single trigger or a cascading foreign key in the database, simply because we don't want complex magic happening behind the scenes. We want things to be visible from the source code, whether it is a child record delete or an update of related records.

We also have strict coding guidelines for DB code that we have generated over the years. We have in-house experts reviewing DB code check-ins and forcing developers to fix code not done correctly. Monitoring the database usage is extremely important, and we store all kinds of statistics for that purpose in the database as well. We track how often each stored procedure is called per day, how often an index is scanned, and so on.
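As a rough picture of per-day call tracking, the sketch below counts calls on the application side with a decorator. This is an assumption made for illustration: the article describes statistics kept in the database itself, and `tracked`, `journal_insert`, and `suspicious` are hypothetical names.

```python
from collections import Counter
from datetime import date
from functools import wraps

# (day, procedure name) -> number of calls; a real system would persist this.
call_counts = Counter()


def tracked(proc):
    """Count each call to a wrapped 'stored procedure' under today's date."""
    @wraps(proc)
    def wrapper(*args, **kwargs):
        call_counts[(date.today(), proc.__name__)] += 1
        return proc(*args, **kwargs)
    return wrapper


@tracked
def journal_insert(character_id, amount):   # hypothetical procedure name
    return (character_id, amount)


def suspicious(day, limit):
    """Names of procedures called more than `limit` times on `day`."""
    return sorted(name for (d, name), n in call_counts.items()
                  if d == day and n > limit)


for _ in range(5):
    journal_insert(1, 100)
print(suspicious(date.today(), limit=3))
```

Comparing one day's counts against a threshold, or against the previous day, is the kind of check that would flag a procedure whose call volume has suddenly jumped.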

Looking at such statistics usually tells us right away if a developer has committed code that has sent the database into a frenzy. We are running a real-time game on a huge scale, which means speed is top priority, and we can use tricks that would not be allowed in other settings, such as the operations of a bank (although recent events in Iceland might suggest otherwise).

One example of this would be when we use "read uncommitted" when loading a solar system configuration, knowing there won't be any inserts or updates to the data we are reading so we can allow ourselves to read with no locking. Another trick would be that we most often only need to allow the user to filter data within a day. For example, we do not need to allow a user to select all records from the player journal between 14:00 and 15:35; it is sufficient to allow filtering by only a date. In that case we simply need to keep track of the clustered key at 00:00 every day and use that in our queries. This means we don't need to index on date/time columns in every nook and cranny, making things faster and slimmer.
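The day-boundary trick can be sketched with SQLite from the Python standard library. The table names, columns, and sample rows are invented for illustration and are not EVE's schema; `journal_id` plays the role of the clustered key, and `day_start` records the first key of each day.

```python
import sqlite3

db = sqlite3.connect(":memory:")
db.executescript("""
    -- journal_id plays the role of the ever-increasing clustered key.
    CREATE TABLE journal (journal_id INTEGER PRIMARY KEY,
                          created TEXT, amount INTEGER);
    -- One row per day: the first journal_id written on or after 00:00.
    CREATE TABLE day_start (day TEXT PRIMARY KEY, first_id INTEGER);
""")

rows = [  # made-up sample data
    ("2009-11-01 09:15", 10),
    ("2009-11-01 23:59", 20),
    ("2009-11-02 00:03", 30),
    ("2009-11-02 14:30", 40),
    ("2009-11-03 08:00", 50),
]
db.executemany("INSERT INTO journal (created, amount) VALUES (?, ?)", rows)

# A midnight job would normally record the current key; here it is derived.
db.execute("""
    INSERT INTO day_start (day, first_id)
    SELECT substr(created, 1, 10), MIN(journal_id)
    FROM journal GROUP BY substr(created, 1, 10)
""")


def journal_for_day(day, next_day):
    """Fetch one day's rows by key range only; `created` is never searched."""
    return db.execute("""
        SELECT journal_id, amount FROM journal
        WHERE journal_id >= (SELECT first_id FROM day_start WHERE day = ?)
          AND journal_id <  (SELECT first_id FROM day_start WHERE day = ?)
        ORDER BY journal_id
    """, (day, next_day)).fetchall()


print(journal_for_day("2009-11-02", "2009-11-03"))  # [(3, 30), (4, 40)]
```

The query touches only the primary key, so no index on the timestamp column is needed. The sketch assumes both boundary rows exist; a production version would also have to handle the current day, which has no upper boundary yet.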

While having only one database creates performance challenges, it also makes some things easier. Having a distributed database system, where one database stores all characters and other databases store each shard, brings all kinds of complexities that we don't have to deal with. There is no need to replicate data between databases, call multiple databases, or move characters and belongings between sharded databases.

With the performance demands above, maintaining excellent performance of the database hardware can be quite a task. It is the central point of our virtual world, so any latency or slowness at this level reflects across the entire universe of EVE.

Ensuring that the database servers have headroom in all of the key performance areas is critical -- the main bottleneck that we have had to overcome is I/O performance of database storage.

Over time, we have successively moved away from traditional fiber channel disk array storage to much faster solid state storage devices. Initially this was done for our hottest tables only, but we have recently moved the entire database to solid state drives. This approach has helped us to maintain an environment of virtually no database lag, and we still have a huge ability to scale up.

An area that has required constant attention and work from our operations team is how much we can break down a heavily laden area of the game world into chunks, and spread this load over multiple nodes. Currently at this level we can allocate a maximum of one server node to power an entire solar system, and one server node runs mostly on a single CPU core, splitting off networking and other asynchronous operations to another core.

The design headaches that occur around the "Jita problem" mentioned above are specifically where we start to run into this limitation. When thousands of players go into the Jita system, or engage in fleet battles, we have often had trouble finding enough CPU power to handle the immense amount of processing required to keep the game simulation running lag-free. In the server room we keep Jita running on its own dedicated machine -- the biggest, meanest blade server that we can get our hands on.
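The constraint described here, that a solar system runs on exactly one node while a busy system may get a machine to itself, can be illustrated with a simple placement sketch. The greedy least-loaded policy, the function name `place_systems`, the node names, and the load figures are all assumptions made for illustration, not CCP's allocation logic.

```python
import heapq


def place_systems(expected_load, node_names, dedicated=()):
    """Assign each solar system to exactly one node (a system is never split).

    expected_load: dict of system name -> estimated load (made-up units).
    dedicated: systems that each get a node to themselves.
    """
    nodes = list(node_names)
    placement = {}
    for system in dedicated:
        placement[system] = nodes.pop()     # reserve a whole node
    # Min-heap of (current load, node) for the remaining shared nodes.
    heap = [(0, name) for name in nodes]
    heapq.heapify(heap)
    shared = sorted((s for s in expected_load if s not in placement),
                    key=expected_load.get, reverse=True)
    for system in shared:
        load, name = heapq.heappop(heap)    # least-loaded node so far
        placement[system] = name
        heapq.heappush(heap, (load + expected_load[system], name))
    return placement


loads = {"Jita": 1200, "A": 300, "B": 200, "C": 150, "D": 100}
placement = place_systems(loads, ["blade-1", "blade-2", "blade-3"],
                          dedicated=("Jita",))
print(placement)
```

No placement policy can help once a single system needs more than one node can deliver, which is why the limit shows up first in Jita and in large fleet battles.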

Software-based improvements like StacklessIO and 64-bit server code (see "running out of memory" above) really made a huge difference to our capacity in this area. Last year saw a three-pronged assault in our "War on Lag," where we rolled out StacklessIO, EVE64, and some top of the line server hardware almost simultaneously.

The result was that our capacity in Jita went from around 600 players to 1,200 players -- a 100% improvement in capacity in under 6 months. Work continues in this fashion in order to again double this number, because we know that we will need to.

As we have seen, running an MMO in a single shard introduces strains on system architecture, low-level runtime, databases, and operations, and it even affects the game design level. Moreover, as the number of players grows, the strains will show up in different, sometimes unexpected places.

As such, the development of a single shard game is a never-ending task, constantly needing innovations and clever solutions to keep it growing.

But pushing the limits is also a source of innovation, and leads to discoveries that are both enjoyable from a professional point of view, and also add new dimensions to the player's experience. So the answer to the question "Why a single shard?" could simply be "Because it's challenging and rewarding for everybody" -- and that is what gaming is all about, isn't it?
