
Featured Blog | This community-written post highlights the best of what the game industry has to offer.

Aaron Davidson, server lead and founding developer at Jawfish Games, discusses the pain points and complexities the company faced while creating and maintaining its platform, which supports real-time multiplayer gaming across iOS, Android, and the Web.

Eighteen months ago, Jawfish was a concept scrawled on a napkin. In that short time, we’ve become a competitive casual gaming company rivaling major industry players, and we set out to build a platform unlike anything another gaming company had built before. Other casual game companies have talked up their forays into the real-time, multiplayer gaming space, but few offerings have actually appeared. Why? Because the technology is significantly more difficult to build than that of traditional casual games.

Building a platform to support real-time, multiplayer gaming for simultaneous play across iOS, Android and the Web has not been easy; it has, however, been extremely rewarding and insightful. I’ve been building games for 20 years, but a few things still caught us off guard. Here’s what we learned:

Running in the cloud is full of surprises

We’ve been quite satisfied running all of our systems on AWS, but it has not been drama-free. If you’re a full-stack developer used to running on dedicated hardware, the transition to the cloud can be difficult.

When performance issues crop up, we’re left wondering what could possibly be going on under the hood. As a cloud consumer, you get very little visibility into what is happening on your host machine and network. Sometimes your instances start misbehaving, and if you push new code to production frequently, it can take a while to figure out whether the cause was your changeset or a bad neighbor (another tenant sharing the same physical host).
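One signal that can help separate "our changeset" from "a bad neighbor" on a Linux VM is CPU steal time: time during which this guest had work to do but the hypervisor was serving other guests. The kernel reports it as the eighth counter on the aggregate `cpu` line of /proc/stat, and tools such as `top` and `vmstat` show it as `st`. The sketch below is our illustration, not Jawfish's tooling; the class name `CpuSample` is hypothetical, and it only computes a percentage from two samples you supply.

```java
import java.util.Arrays;

/**
 * Hypothetical helper: CPU steal time from two samples of the aggregate "cpu"
 * line in Linux /proc/stat. Counters after the "cpu" label are, in order:
 * user nice system idle iowait irq softirq steal guest guest_nice.
 */
final class CpuSample {
    final long total; // user + nice + system + idle + iowait + irq + softirq + steal
    final long steal; // time this VM was runnable while the hypervisor served other guests

    private CpuSample(long total, long steal) {
        this.total = total;
        this.steal = steal;
    }

    static CpuSample parse(String cpuLine) {
        String[] parts = cpuLine.trim().split("\\s+");
        if (parts.length < 9 || !parts[0].equals("cpu")) {
            throw new IllegalArgumentException("not an aggregate cpu line: " + cpuLine);
        }
        // Use only the first eight counters; guest time is already counted in user/nice.
        long[] counters = Arrays.stream(parts, 1, 9).mapToLong(Long::parseLong).toArray();
        return new CpuSample(Arrays.stream(counters).sum(), counters[7]);
    }

    /** Percentage of CPU time stolen between two samples (0 if no time elapsed). */
    static double stealPercent(CpuSample before, CpuSample after) {
        long elapsed = after.total - before.total;
        if (elapsed <= 0) return 0.0;
        return 100.0 * (after.steal - before.steal) / elapsed;
    }
}
```

On a live host you would read the first line of /proc/stat twice, a few seconds apart (for example with `Files.readAllLines(Path.of("/proc/stat")).get(0)`), and pay attention when steal stays elevated while your own traffic is flat. A high reading is a hint, not proof, that moving to a fresh instance may help.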

When we first released, we were too quick to lay the blame on AWS. We saw a strangely itinerant lag that affected about 15% of concurrent users for up to an hour at a time. Rolling some of our services onto new instances would solve the problem for a while, but then it would recur. It turned out that some connections from China were dropping (not surprising) and the call to Java’s socket.close() was blocking for up to an hour (extremely surprising). That blocked call let an internal queue fill up, which slowed down everyone else connected to that service. We fixed the queuing and became more defensive around socket closing, but many hours were wasted blaming AWS, cycling instances and rolling servers.
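The post does not say how the queuing and socket-closing fixes were implemented. As a minimal sketch of one common way to get similar protection, assume each connection has its own bounded outbound queue and a separate writer thread (not shown) that drains it. Game logic then never blocks on a client: a full queue marks the client as dead, and the actual `socket.close()` runs on a background pool, so even a close that hangs for an hour only ties up a closer thread. `ClientConnection` and its methods are hypothetical names.

```java
import java.io.IOException;
import java.net.Socket;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.atomic.AtomicBoolean;

/**
 * Hypothetical per-connection wrapper. Game logic only calls send(), which
 * never blocks; a separate writer thread (not shown) would drain
 * pollOutbound() and write to the socket.
 */
final class ClientConnection {
    // Daemon threads, so a close() that hangs cannot keep the JVM alive.
    private static final ExecutorService CLOSER = Executors.newCachedThreadPool(r -> {
        Thread t = new Thread(r, "socket-closer");
        t.setDaemon(true);
        return t;
    });

    private final Socket socket;
    private final BlockingQueue<byte[]> outbound;
    private final AtomicBoolean closed = new AtomicBoolean(false);

    ClientConnection(Socket socket, int maxQueuedMessages) {
        this.socket = socket;
        this.outbound = new ArrayBlockingQueue<>(maxQueuedMessages);
    }

    /** Enqueues without blocking; a full queue means the client has stalled, so drop it. */
    boolean send(byte[] message) {
        if (closed.get()) return false;
        if (outbound.offer(message)) return true;
        closeAsync();
        return false;
    }

    /** For the writer thread: the next pending message, or null if there is none. */
    byte[] pollOutbound() {
        return outbound.poll();
    }

    /** Marks the connection closed now and performs the real close in the background. */
    void closeAsync() {
        if (!closed.compareAndSet(false, true)) return; // only the first caller schedules a close
        outbound.clear();
        CLOSER.execute(() -> {
            try {
                socket.close();
            } catch (IOException ignored) {
                // The peer is already gone; nothing useful to do.
            }
        });
    }

    boolean isClosed() { return closed.get(); }

    int queuedMessages() { return outbound.size(); }
}
```

The queue bound is a tuning knob: too small and brief network hiccups disconnect healthy players, too large and a dead client holds memory longer before it is detected.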

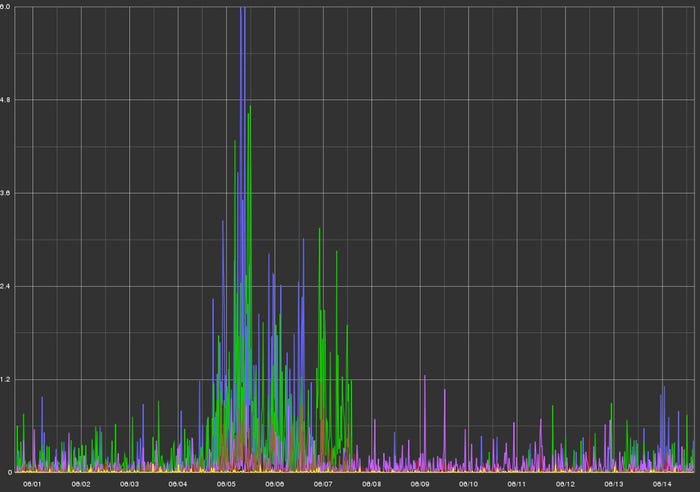

On the flip side, we recently deployed a large update to one of our games, and that very same day performance issues started to crop up. Every few minutes, our services would spike massively in load: the CPU would shoot to 100% and the load average would climb into the fifties, even though the tangible load on the system (number of users, games in play, etc.) remained constant. Because this coincided with our deployment, we blamed the new code. It took several days of scouring our recent code changes and several fruitless debugging sessions before we finally tried rolling our services over to new EC2 instances of exactly the same type and configuration. The problem magically went away.

Three days of mysterious server load spikes, resolved by just moving to new instances

That said, most of the time things run smoothly. The flexibility of the cloud makes it ridiculously easy to scale our clusters up and down, in and out, as each game requires. It’s an absolute godsend for performance testing: we can spin up a huge performance-testing cluster for a few hours, simulate 100,000 concurrent players hammering on the game system, then tear it down when done. Self-hosting that kind of performance test would require a massive budget, and the hardware would sit idle most of the time.

Invest early in tools to visualize and manage your system

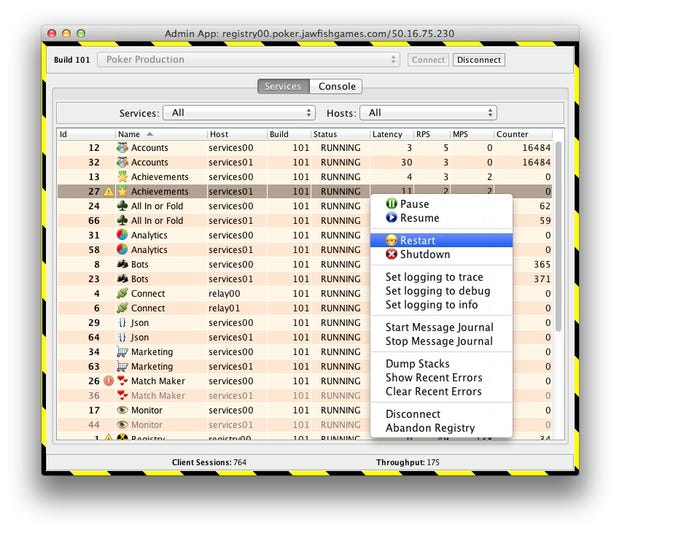

When we projected the number of service types and redundant service processes we wanted to run, it was clear that ssh’ing around (invoking shell scripts and digging through log files) wasn’t going to be much fun. The ability to manipulate our cluster from one place and to spot potential issues at a glance was not just a cool feature, but a step on the shortest path to a successful release and the live-ops that followed.

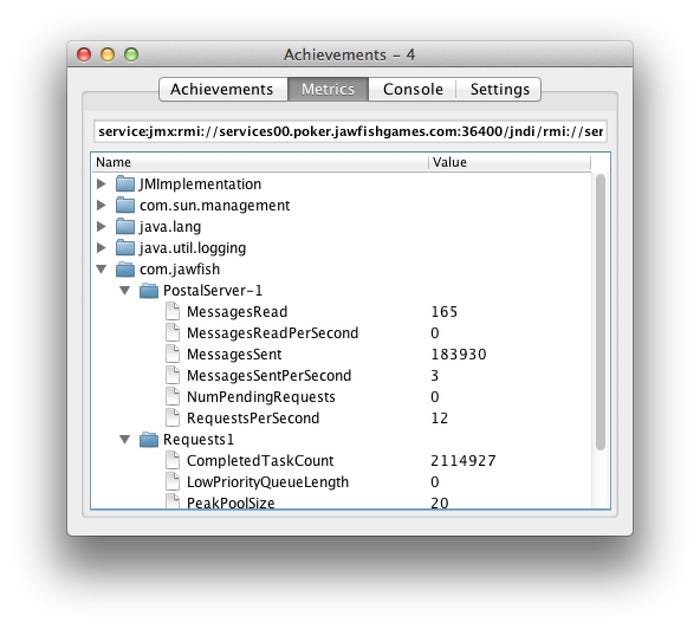

We developed custom GUI tools for cluster administration that pay huge dividends in productivity today. We can visually browse and manage the entire game system, and color-coding our real-time performance metrics helps us quickly spot potential issues. Once you have tools like these, you won’t know how you lived without them.

Our Admin App lets us view and manage a game cluster. The police tape lets us know we’re connected to a production system, and not a development system.
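The color-coding of real-time metrics mentioned above can be as simple as per-metric warning and critical thresholds, with each service shown in the color of its worst metric so problems surface at a glance in a cluster view. The sketch below assumes that scheme; `HealthColor`, `MetricThresholds` and `ServiceHealth` are hypothetical names, and the thresholds you feed it are up to you rather than Jawfish's actual configuration.

```java
import java.util.Map;

/** Traffic-light status; declaration order matters (GREEN < YELLOW < RED). */
enum HealthColor { GREEN, YELLOW, RED }

/** Hypothetical per-metric thresholds: at or above warn is YELLOW, at or above critical is RED. */
final class MetricThresholds {
    private final double warn;
    private final double critical;

    MetricThresholds(double warn, double critical) {
        if (warn > critical) throw new IllegalArgumentException("warn must not exceed critical");
        this.warn = warn;
        this.critical = critical;
    }

    HealthColor classify(double value) {
        if (value >= critical) return HealthColor.RED;
        if (value >= warn) return HealthColor.YELLOW;
        return HealthColor.GREEN;
    }
}

final class ServiceHealth {
    /** A service takes the color of its worst metric; the same rule can roll services up into a cluster. */
    static HealthColor worst(Map<String, Double> metrics, Map<String, MetricThresholds> thresholds) {
        HealthColor worst = HealthColor.GREEN;
        for (Map.Entry<String, Double> e : metrics.entrySet()) {
            MetricThresholds t = thresholds.get(e.getKey());
            if (t == null) continue; // metrics without thresholds stay uncolored
            HealthColor c = t.classify(e.getValue());
            if (c.compareTo(worst) > 0) worst = c;
        }
        return worst;
    }
}
```

For example, `new MetricThresholds(70, 90)` applied to a CPU-percentage metric classifies 75 as YELLOW and 95 as RED.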

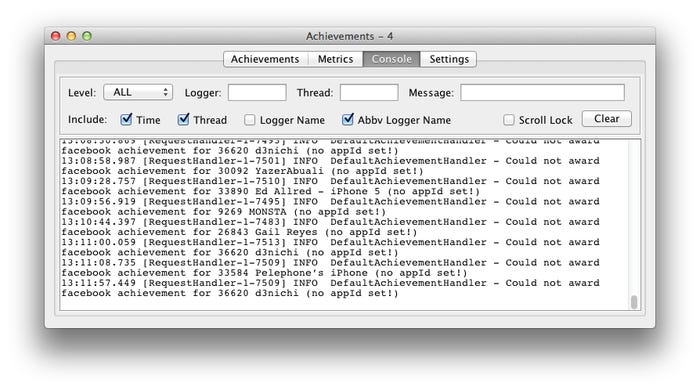

Browse the real-time metrics exported by the service

View the service log files in real time

We also hooked up three wall-mounted television sets to Raspberry Pis in our office. Each Raspberry Pi drives a rotating dashboard of statistics and charts for all to see. It’s not only a visual log for us; it also presents the data to visitors in an aesthetically pleasing way they can interpret.

Our Raspberry Pi-powered dashboards

Mostly Stateless, FTW

For most services, it’s a no-brainer to use a stateless data model. Most of our live data is stored in a Couchbase cluster, which provides reliable, low-latency, consistent NoSQL operations. Whether we’re querying user account data or updating a player’s achievement progress, most services can quickly fetch a document by a unique key, modify it, then write it back. It can be tempting to contort the entire system into a purely stateless one, but ultimately we decided to keep a stateful model for the parts that are critically real-time. Real-time multiplayer games generate many concurrent writes to the same game state. Optimistic locking degenerates very quickly under these conditions, because every writer that loses a race must re-read and retry, even when the round trip to the database is only 1 or 2 milliseconds. The tradeoff is that if an EC2 instance goes belly up, the in-progress games hosted on that instance are lost. However, because the rest of the system is stateless, nothing else is impacted and players can quickly be placed into fresh games on other hosts.
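To see why optimistic locking breaks down under write-heavy load, here is a minimal sketch of the fetch-modify-write cycle with a CAS (compare-and-swap) check. `CasStore` is a hypothetical in-memory stand-in for a document store that stamps each write with a new CAS value; it is not the Couchbase SDK API, and `OptimisticUpdate` is likewise an illustrative name. Every writer that loses the race must re-read and retry, so wasted round trips grow with the number of writers hitting the same document.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicLong;
import java.util.function.UnaryOperator;

/**
 * Hypothetical in-memory document store with CAS semantics: every write
 * stamps a new CAS value, and replace() succeeds only if the caller's CAS
 * still matches the stored one.
 */
final class CasStore {
    static final class Doc {
        final String value;
        final long cas;
        Doc(String value, long cas) { this.value = value; this.cas = cas; }
    }

    private final ConcurrentHashMap<String, Doc> docs = new ConcurrentHashMap<>();
    private final AtomicLong nextCas = new AtomicLong();

    Doc get(String key) { return docs.get(key); }

    void insert(String key, String value) {
        docs.put(key, new Doc(value, nextCas.incrementAndGet()));
    }

    /** Returns false if another writer changed the document since it was read. */
    boolean replace(String key, String value, long expectedCas) {
        Doc current = docs.get(key);
        if (current == null || current.cas != expectedCas) return false;
        // Atomic swap of the map entry; Doc uses identity equality, so a concurrent write makes this fail.
        return docs.replace(key, current, new Doc(value, nextCas.incrementAndGet()));
    }
}

final class OptimisticUpdate {
    /** Fetch, modify, write back; on a CAS conflict, re-read and retry. Returns the number of conflicts hit. */
    static int update(CasStore store, String key, UnaryOperator<String> modify) {
        int conflicts = 0;
        while (true) {
            CasStore.Doc doc = store.get(key);
            if (doc == null) throw new IllegalStateException("no document for key " + key);
            if (store.replace(key, modify.apply(doc.value), doc.cas)) return conflicts;
            conflicts++; // another writer won the race; the work above was wasted
        }
    }
}
```

Running `update` from many threads against one key still yields a correct final value, but the returned conflict counts climb as contention rises. The stateful approach described above sidesteps this by routing every write for a given game to the single process that hosts it, so no compare-and-swap round trip is needed.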

We’re quite proud of the Jawfish platform we’ve built. It provides a full-featured and performant base for writing scalable multiplayer games for any platform. We can quickly write new games as services, and reuse the existing platform services for each title. Cloud hosting gives us immense flexibility, but real-time games can be sensitive to the noisy cloud environment.

Aaron Davidson is Jawfish Games’ server lead and founding developer. He holds an MSc in Computer Science, specializing in artificial intelligence for poker. Prior to Jawfish, he was the lead server engineer at Full Tilt Poker, one of the largest online poker sites in the world, which at its peak hosted over 200,000 concurrent players.
