Player-facing testing has rapidly shifted from bug-finding to little more than an early release model. In this article, indie developer Robert Hoischen explains how his studio set up a gamified bug-testing system that puts its player base to work.

The indie elephant in the room, Minecraft, has changed the perception of what a "beta" truly entails in the mind of the average gamer. No longer do people hearing the word "beta" expect the buggy, frustrating, horribly broken, and not-fun-at-all experience they should. Instead it is now viewed as a supposedly incomplete early development milestone with a high degree of playability and quite a bit of polish. "Who does the actual beta testing of these milestones, then?" you ask. Most probably not the people coming to an indie-game dev forum stating that they are qualified beta testers because they've "beta-tested Minecraft."

Let us get some definitions clear-cut first. A beta version is a feature-complete, but not yet bug-fixed, nor polished version of a given working package in development. That might be a single little feature in a game, or a full software suite. As the example above already has shown, this definition is far from being universal, but herein thou shalt see it as the undeniable, utmost truth. With this definition, Notch's brilliant creation was released in no more (and no less) than well-polished milestones, probably internally alpha and beta tested prior to the release to the self-proclaimed "beta testers."

How have these recent changes in mentality affected the beta testing requirements of future game-related products? The answer to that question very much depends on target audience, but in general, the freely available workhorses out there drift increasingly towards the "I just play around" category.

While Blizzard Entertainment has found a perfect use for these people, they could become a potentially harmful ingredient in any small- or medium-scale testing team. Overestimating how much (quality) work will be done per unit time and tester quickly leads to frustration and delays, causing, in turn, loss of trust and ultimately, sales.

Many indie teams seem to have solved this issue by not planning much at all and/or testing everything by themselves -- a rather workable, but far from optimal, solution.

This blissful ignorance comes as no big surprise -- there is a lot of work involved in setting up proper testing infrastructure, finding the right people to do the testing, and communicating the ever-changing aims of the tests. This leads us to the gist of this write-up: POMMS-based beta testing.

The acronym stands for Project-Oriented Modular Motivational System and was incubated by the author as a tool to bring structure and clarity into the chaos that was called beta testing at our little indie game dev studio Camshaft Software, currently working on Automation: The Car Company Tycoon Game. This system incorporates modern game design elements into the testing process itself, a concept usually connected with the buzzword "gamification." The POMMS-based approach to testing attacks some of the biggest challenges in beta testing: the ability to plan ahead, tester coordination, tester motivation, testing focus, and tester flexibility and individuality.

The system itself is quickly explained: Well-defined subprojects are outlined, and a specific amount of "points" is awarded for every task a tester completes towards realizing the subproject goals. This score is the so-called tester power level, and when it's over 9000, your subprojects are either too long or the amount of work per point too small.

Depending on its contents, a meaningful subproject could be anywhere from 4 to 16 weeks long, with a single point being the equivalent of around 15 minutes of work. Within any given subproject, each tester has to reach a certain minimum score to continue participating in beta testing. The highest-scoring testers are awarded stars that are cumulative across subprojects. It is up to the developers to decide what the rewards for attaining these stars are. For example, they could give testers special privileges, a place in the software's credits, special forum statuses, etc.

If a tester achieved more than the minimum score in a subproject, but didn't reach star rankings, the final score is carried over to the next subproject. This carryover does not count towards reaching the next subproject's minimum score, but towards the placement in the star rankings. This rewards testers who continually deliver solid work over several subprojects, as they will grab a star eventually, and by the end of the project, they will not feel like they have been anonymous testing machines after all.
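The end-of-subproject bookkeeping described above can be sketched in a few lines of Python. This is only an illustration, not the tooling we actually use: the `Tester` class, the `close_subproject` function, and the `star_slots` parameter are hypothetical names, and the sketch assumes that carryover accumulates across subprojects, that earning a star resets it, and that a tester who misses the minimum loses it.

```python
from dataclasses import dataclass


@dataclass
class Tester:
    name: str            # assumed to be unique within the team
    score: int = 0       # points earned in the current subproject
    carryover: int = 0   # points carried in from earlier subprojects
    stars: int = 0       # cumulative across subprojects
    active: bool = True


def close_subproject(testers, minimum_score, star_slots=3):
    """Apply the end-of-subproject rules, then reset scores for the next one."""
    # Only points earned in this subproject count towards the minimum.
    qualified = [t for t in testers if t.active and t.score >= minimum_score]
    # Star rankings use this subproject's points plus any carryover.
    ranked = sorted(qualified, key=lambda t: t.score + t.carryover, reverse=True)
    star_names = {t.name for t in ranked[:star_slots]}

    for t in testers:
        if not t.active:
            continue
        if t.name in star_names:
            t.stars += 1
            t.carryover = 0          # assumption: a star uses up the carryover
        elif t.score > minimum_score:
            t.carryover += t.score   # assumption: carryover accumulates
        elif t.score < minimum_score:
            t.active = False         # minimum not reached
            t.carryover = 0          # assumption: carryover is lost
        t.score = 0


testers = [
    Tester("A", score=40),
    Tester("B", score=30),
    Tester("C", score=12, carryover=25),
    Tester("D", score=5),
]
close_subproject(testers, minimum_score=10, star_slots=1)
```

With a single star slot, tester A takes the star, while C's modest 12 points plus 25 carried over put C ahead of B in the ranking for the next subproject. That is exactly the "steady work pays off eventually" effect the carryover is meant to produce.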

Each subproject focuses on a specific aspect of the software, while attempting to not narrow down activities too far. A good example of this would be the addition of V8 engines to the Engine Designer in Automation. Within eight weeks' time, this subproject encompasses checking 2D/3D artwork, playtesting and balancing scenarios, gathering real-life engine data, polishing up entries in the bug-tracking tool, checking all new strings in the game, and last but not least: good old bug reporting.

At the start of each subproject, a subproject-specific scorecard is defined. Every tester has a single post with this scorecard in the beta forum's "Hall of Heroes" thread, which each individual continually updates as work progresses. The following is an example of such a scorecard in the V8 subproject mentioned above.

Tester ID: PaLaDiN1337 (4*)

Tester Status: ACTIVE

Current Score: 17 (+0)

Scenario Testing: (V0.88) S1, S2, S4, S5, S7, S8, S11

Tracker Entries: ID28804129, ID28796925, ID28786633

Engine Data: "Ford 4.6L Modular V8 3V"

Engines Tested: "Ford 5.4L Modular 'Triton' V8 DOHC"

Forum Excellence: "Proof-reading helps, 2p"

Achievements: 3x "Bug-tracker clean-up, 1p"

Keeping it simple is the name of the game. Tested a scenario? Get a point. Researched engine data? Get a point. Posted a bug report? Get a point. The system needs to be painless to administer, simple to use, and easy to understand, with progress readily visible.
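As a quick check of how such a scorecard adds up, here is a minimal Python sketch that tallies the example above. The data layout and the `tally` function are made up for illustration, and the sketch assumes that every listed item is worth one point unless the entry states its own value (such as "2p").

```python
# Items worth one point each (assumption: this also holds for "Engines Tested").
one_point_items = {
    "Scenario Testing": ["S1", "S2", "S4", "S5", "S7", "S8", "S11"],
    "Tracker Entries": ["ID28804129", "ID28796925", "ID28786633"],
    "Engine Data": ["Ford 4.6L Modular V8 3V"],
    "Engines Tested": ["Ford 5.4L Modular 'Triton' V8 DOHC"],
}

# Entries that state their own value: (description, points each, count).
explicit_awards = [
    ("Proof-reading helps", 2, 1),    # Forum Excellence
    ("Bug-tracker clean-up", 1, 3),   # Achievements
]


def tally(one_point_items, explicit_awards):
    """Sum one point per listed item plus all explicitly valued awards."""
    item_points = sum(len(items) for items in one_point_items.values())
    award_points = sum(points * count for _, points, count in explicit_awards)
    return item_points + award_points


total = tally(one_point_items, explicit_awards)
print(total)  # 17
```

Under that assumption the tally matches the "Current Score: 17" shown on the card, which is the kind of at-a-glance verification that keeps administration painless.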

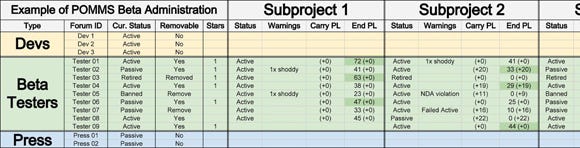

At the end of each subproject, the Hall of Heroes thread is locked and scores are transferred to a spreadsheet that lists all testers, their current status, and their power levels, along with carry-over scores -- all displayed as a timeline throughout different subprojects.

Not all activities can be planned for, or set up well in advance, and some tasks may pop up as development chugs along. For exactly this purpose there is the Quest Board -- a subproject-specific, continually updated section attached to the subproject announcement post in the Hall of Heroes thread. It allows for quick shifts in testing focus if required, and makes the testing system dynamic if need be. Here, testers will find non-standard tasks that yield achievements and points when completed. These tasks could be things like "clean up this spreadsheet for us," or "find some information on this topic," or maybe even "find additional testers that don't suck."

Testers do have a life -- or at least sometimes pretend to -- so more often than not, they may need to either take a break from testing or even retire. To accommodate this, there are passivity rules that allow for taking breaks, but quickly get rid of people that either never get started working or decided to not care anymore without notifying the development leads.

The "Rule of Thirds" works well not only in photography but also here: testers who are new to the team, returning from passive status, or coming off a failed active status need to achieve at least one-third of the subproject's minimum score within the first third of the subproject to avoid being expelled. Administering this rule is rather trivial, too: it amounts to changing a single flag in a spreadsheet and checking the scorecards of all candidates in question one-third into the subproject.
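For those who prefer to automate the check, a minimal Python sketch of the Rule of Thirds could look like the following. The status labels, function names, and the tuple layout for testers are hypothetical; the only things taken from the rule itself are the one-third score threshold and the one-third checkpoint.

```python
from datetime import date, timedelta

# Hypothetical status labels for testers who are subject to the rule.
PROBATION_STATUSES = {"new", "returning", "failed"}


def checkpoint_date(start, weeks):
    """Date one-third of the way into a subproject (rounded down to whole days)."""
    return start + timedelta(days=(weeks * 7) // 3)


def expelled_at_checkpoint(testers, minimum_score):
    """Names of probationary testers with less than one-third of the minimum.

    `testers` is a list of (name, status, score) tuples.
    """
    return [
        name
        for name, status, score in testers
        if status in PROBATION_STATUSES and 3 * score < minimum_score
    ]


testers = [
    ("a", "new", 3),        # 3 of 10 is below one-third
    ("b", "new", 4),        # 4 of 10 clears one-third
    ("c", "active", 0),     # regular testers are not checked here
    ("d", "returning", 0),
]
check_day = checkpoint_date(date(2012, 1, 2), weeks=8)
expelled = expelled_at_checkpoint(testers, minimum_score=10)
```

Comparing `3 * score` against the minimum avoids fractional thresholds when the minimum score is not divisible by three.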

The key benefits of POMMS to the developers:

Guiding: Developers can gauge and plan how much testing will be done on average.

Rewarding: The system creates a positive working environment for both devs and testers.

Flexible: The communication and the creation of new tasks is simple and efficient.

Manageable: The system is easy to administer and user participation is self-regulating.

The key benefits of POMMS to the testers:

Guiding: There is no guessing what things to do next, only options.

Rewarding: There are clear measures of progress and the tester's value to the team.

Flexible: There are always tasks that fit all kinds of tester preferences.

Manageable: The system is easy to understand and the scorecard simple to maintain.

A potential concern with POMMS as presented above would be that testers are rewarded only for quantity, but not quality. This is averted by having very high minimum requirements placed on what counts as a point. For instance, a bug report must be filed in the bug-tracking tool and contain at least a proper title, meaningful tags, and a detailed description of how to reproduce it.

The quality of the work done by the testers is only controlled indirectly -- evidence of shoddy work will often surface sooner rather than later. This system also reinforces the much needed trust between the testers and the developers, where direct control would only hurt the system as people start to be afraid of making errors instead of posting what they find. We all make mistakes; it is the density of mistakes that matters, not their absolute number.

Another important issue is the scalability of the system. Switching between testing systems is a difficult and tedious prospect, and once a system such as POMMS is set up, the developers have to be certain that it is not outgrown any time soon.

To date, our implementation has shown a very favorable, almost logarithmic scalability for small numbers of testers up to about 50. As an estimate, managing 100 testers is about twice as much work as having only 10 testers, although many more than 100 testers would not be easily manageable without automated tools.
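To see why "twice the work for ten times the testers" fits a roughly logarithmic trend, consider this small Python sketch. It assumes, purely for illustration, that administrative effort is proportional to the logarithm of the team size; `relative_effort` is a made-up helper, not a measured model.

```python
import math


def relative_effort(n_testers, baseline=10):
    """Effort relative to a baseline team, assuming effort grows with log(n)."""
    return math.log(n_testers) / math.log(baseline)


log_ratio = relative_effort(100)   # about 2: twice the work of a 10-tester team
linear_ratio = 100 / 10            # 10: what strictly linear scaling would cost
```

Under linear scaling the same growth in team size would mean ten times the administrative work, which is the difference that makes the system attractive for small teams.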

Many testers battling for the very same points can lead to pretty stiff competition. This is specifically the case for focused bug reporting, which always works on a first-come, first-served basis. At first glance, this seems to be a good thing for the developers, as progress is rapid, but ultimately may harm the overall quality of the testing environment. This problem can generally be circumvented by always providing rewarding alternative testing activities via the quest board for less competition-minded people.

One easy-to-miss point is the immense importance of system fairness. Basically, your freshly implemented POMMS beta testing behaves like an MMO, and the testers are its players. Even the tiniest imbalance or unfairness will surface, multiplied by 10k in magnitude, to then be thrown into the developers' faces in a way that would suggest the tester's life has just been destroyed. At times it is scarily similar to the average MMO forum.

The crux is to make sure the scoring and reporting system is 110 percent fair. Make no exceptions to rules, because if you do, everyone else will cry for exceptions too. Initially, your testers will be on your side, but that can change very quickly if you don't handle the set-up of the system and its maintenance with great care. Always be transparent with any changes to rules or structures, and explain the why and when. Show appreciation for the work people do and accept that things can go wrong on both sides. Even minor direct and personal acknowledgement and appreciation from the devs toward individual testers can work wonders for motivation and the testing climate.

In the six months since our first implementation, the conclusion that can be drawn so far is very positive. About 90 percent of the testers very much like working within the framework of POMMS, while 10 percent mainly complained about its competitive nature and left. This is unfortunate, and probably due to several mistakes that were made during the setup phase, before the rules were fair to both competitive and non-competitive testers.

Comparing the amount of work that is done by the testing team now to how much was done during the time we ran an unstructured beta, we have effectively multiplied productivity by 10. Efforts are coordinated without much shouting, and the general atmosphere is positive albeit a bit competitive at times.

In summary, the testing structure described here offers huge advantages for small- and medium-scale software productions, with only a few complications. It does not require a lot of manpower once set up, and it has already proven very efficient in its first implementation.
