Featured Blog | This community-written post highlights the best of what the game industry has to offer.

I previously covered Jeffrey Lin's GDC talk on Riot's research-informed management of toxic player behavior in League of Legends. As a follow-up, Jeff graciously took part in an extended Q&A session to discuss his work. Some very cool stuff.

This and other posts can be viewed at Motivate Play.

As part of our coverage of this year's Game Developers Conference, I offered a summary of a talk by Jeffrey Lin (Lead Designer of Social Systems for Riot Games) on research-informed design and his team's work managing toxic player behavior in League of Legends. Recently, Jeff graciously took part in an extended Q&A session with me, in which he further discussed his history as an academic and gamer, the infrastructure of Riot's research teams, the influence of previous literature on their research, and the results and implications of some of their work. Very cool stuff. Below, the interview in full.

JC: Could you tell us a little about your background as both a researcher and player of games?

JL: I love games. It didn’t matter whether it was the Atari, NES, or SNES; I used to play every game I could get my hands on for every console (or PC) with my younger brother. Some of my favorite games were the quirkier titles like E.V.O: Search for Eden and Legend of the Mystical Ninjas 2 on SNES. I also admittedly spent years of my life in Ultima Online, Everquest, and World of Warcraft, where we played at an extremely high level, competing for world firsts. More recently, I played Warcraft 3 competitively, and I love playing MOBAs like League of Legends.

I remember the exact moment I was drawn into a career in research. I was in a college class taught by Dr. James T. Enns, and he was showing us visual illusions like the “rotating snakes” and illustrating how subtle flaws in our visual systems create these perceptual illusions; but, importantly, how such flaws teach us so much about how our brain works. I was enraptured and pursued a career investigating visual systems and mapping out how our visual perception of the world is represented in our brain.

At Riot Games, my research focus varies a lot because it’s all about collaboration with other research teams on a variety of topics from sociology and social psychology to anthropology, cognitive neuroscience, and behavioral economics. Some of the questions we are interested in include: How do we measure the tolerance levels (for negative behaviors) for every individual player? How do online culture and language evolve over time, and how can we influence their evolution? How do we identify what types of behavioral interventions work best for different categories of players?

JC: Many of our readers are both players and academics themselves. Could you share with us your experience of transitioning from the academy to industry? What was that process like? What are the major differences you've noted in researching at a university vs. researching for a professional gaming company?

JL: During my PhD, I followed the work of Dr. Daphne Bavelier, who studied the surprising effects of video games and how some types of games could enhance cognitive abilities, such as visual search and multiple-object tracking. One of her students joined my lab at the University of Washington and we spent many late hours playing games, brainstorming, and developing experiments to study the effects of video games on cognition. In 2009-2010, I decided to apply for the Penny Arcade Scholarship and was lucky enough to win a scholarship that funded several studies on video games for the rest of my PhD. Around this time, Mike Ambinder from Valve Software gave a talk at the University of Washington and we struck up a friendship. After several lunchtime debates on the future of gaming, he offered me a job as an experimental psychologist.

I was never your classic scientist archetype. My research interests and papers ranged from visual attention and how attention is captured across space and time, to how multiple-object tracking is represented in the brain, to memory and how different ways of presenting imagery could influence familiarity with a particular brand. As a scientist, I wanted to solve the most difficult problems, and it didn’t matter what field the problems originated from; that was my idea of a dream job.

In many ways, the transition into research for a professional game studio was fluid because the focus transitioned from exploratory research to advance the field, to exploratory research to creatively solve problems. Some scientists might focus on a particular technique or topic, and thoroughly investigate it with a series of concise, robust experiments; however, in industry, you focus on a problem and then decide what relevant research tools might be applicable in deriving a solution.

It’s vital for a scientist to be pragmatic; at a game studio, competition is fierce and there is always a research group at another studio trying to invent the next groundbreaking algorithm or feature. One of the most valuable skills I’ve learned is the ability to call it quits: to recognize a bad idea, scrap it, and start over. Just as valuable is the ability to recognize when there is enough research to move on and simply launch the product instead of continuously pushing for the next 1% of improvement.

JC: How many people comprise the research group at Riot? What sort of variety of research backgrounds - in terms of areas and methodologies - do you have on the team? Does Riot typically have specialized teams working on distinct research questions / game issues?

JL: Riot’s research team is a pretty amorphous bunch—we’re spread across a large number of teams. For example, on the player behavior team we have PhDs in Cognitive Neuroscience and Brain & Cognitive Sciences and Masters in Bioinformatics and Aeronautics. However, a team that collaborates closely with us is the User Research Team and they have people (like Davin Pavlas) who have PhDs in Applied Experimental / Human Factors Psychology. Depending on the problem, we loop in different teams and different technologies; for example, across our core members we have expertise in psychometrics, qualitative methods like surveys and focus groups, eye-tracking, and even functional neuroimaging. For some of the more sophisticated player behavior features, we’ll rely on both Davin’s team and a Predictive Analytics team to build some models to inform the features.

JC: What is the general process for going from experimentation to implementing a new design feature? How and to what extent do the researchers interface with other groups, such as production staff, designers, and QA/testing? How much input do people outside of the team have regarding research experiment design and execution?

JL: On a day-to-day basis, we have an overwhelming amount of data that represent the general player behavior ‘health’ of League of Legends. These data and reports allow us to have a solid pulse on the current state of the game and quickly isolate problems that are remaining, or that crop up over time. For example, early last year we noticed that there was a high number of Leavers in low level matches; through a few simple studies, we hypothesized that players were simply unaware that leaving matches was debilitating to your team in League of Legends. The solution to that problem was pretty simple—when players tried to leave a match, we popped up a message that highlighted the fact that League was a team sport and players that leave could be penalized. We saw an 8% drop in leaving rates at low levels after that inclusion.

In a more complex example, we’ve recently started focusing on the problems around Champion Select in League of Legends. For those that aren’t familiar with League, players are placed into teams with up to four other strangers, who then have to negotiate and cooperate on a cohesive strategy (such as who wants to play which champions) all in the 90 seconds before a match begins. Numerous studies from psychology have shown that this is a recipe for disaster because time pressure creates a more hostile environment and it is naturally quite difficult for five strangers to come together, negotiate, and agree on a team strategy. What we noticed was that a significant number of games start off on a bad note because of arguments that originated from Champion Select.

Before we ever began prototyping potential solutions, we spent weeks researching the problem space. For example: What are the variables that correlate most highly with hostile Champion Select lobbies? We worked with Davin’s User Research Team to map out player expectations in Champion Select, and what players currently enjoyed (or hated) about it. We even visited MIT and Harvard to share our data and lessons learned, and to seek their advice and insights on the problem of Champion Select and on what the latest research suggests for encouraging cooperative behaviors.

On the player behavior team, we have enough inquisitive minds that everyone is involved with the research process—it doesn’t matter if you are an Engineer, Designer, Researcher or Artist. One of the things I love about being a member of this team is the fact that I learn something new every day; having the passion and desire to always learn is one of our most powerful traits at Riot. On the player behavior team for example, one of the exercises we do every week is spend some time as a team going over a classic psychological study and thinking about how it could be applied to game development.

JC: The findings you shared with us all at GDC last month were quite remarkable, in terms of their effects on player behavior as well as the simplicity of the various interventions tested. I'm curious - did any particular theories inform your simple, but powerful designs? For instance, you noted that some classic priming studies (like that of Bargh) were insightful in coming up with the Optimus study. Did your hypotheses regarding shielding players or providing more detailed feedback on bans similarly draw upon any specific literature or theorists? It seems there would be some relevant work within fields like social psychology, cognitive science, institutional analysis, and behavioral economics.

JL: There are some obvious cases of classic literature inspiring experiments and features on the player behavior team at Riot; for example, “Optimus Priming” was a classic priming study that applied psychological priming on a pretty epic scale: over 30 million players. The results have been powerful. Who would have thought that presenting a single sentence, phrased appropriately on the loading screen, could shape player behavior in a game and reduce something like verbal abuse by as much as 5%? Scientists have known about results like this in the lab; however, the industry is still catching up. I can’t wait until industry is not only caught up, but leading scientific forays in the future. There are simply some phenomena that you cannot study in the lab but can study in online games and communities. I know there are insights here that can make games more fun, but also trigger breakthroughs in many other fields.

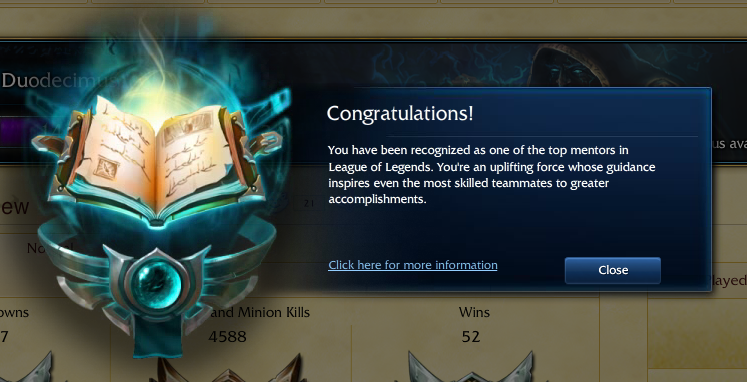

The Honor Initiative is a feature that allows players to praise each other and positively reinforce sportsmanlike behaviors; Honor was inspired by numerous studies on positive reinforcement and how it can be leveraged to effectively shape behaviors. It was also inspired by a real-life study conducted by the Royal Canadian Mounted Police detachment in Richmond, Canada, where I lived most of my childhood. The superintendent at the time challenged the conventions of the policing system. Instead of having a reactive police force, he encouraged his staff to venture out and seek positive behaviors, then reward the citizens with a positive ticket. One example of a positive ticket granted free entry to a movie at the local theatre. Surprisingly, youth recidivism fell from 60% to 8% and youth crime was cut by 50%, while the program cost a tenth as much as the traditional policing system. We’ve seen similar results with our Honor Initiative, and have recently re-focused much of our development efforts on more systems that focus on positive reinforcement, thereby showing players what sportsmanlike behavior looks like instead of focusing on punishment.

That said, a large number of our experiments are exploratory. With scientists from so many different fields, we brainstorm ideas that are informed by our prior fields, but have rarely been tried before on a large scale. For example, we recently tried a feature in League of Legends where some negative players were placed into a Restricted Chat Mode. In this mode, players play League of Legends with limited chat resources: a set number of messages per game that increases over the course of a match. Theoretically, we were interested in forcing negative players into a situation where they had to constantly weigh their decisions against resource allocation: do they continue to use their chat messages for negative behaviors, or do they opt to use their limited resources for cooperative play because they ultimately want to win the match? We’re still digging into the results of this experiment, but it’s been promising. One of the surprises we’ve seen is that a large number of players are self-aware, and recognize that they have outbursts of rage and negative behaviors, but they need a bit of help. Thousands of players started asking the player behavior team to allow a manual opt-in to Restricted Chat Mode to help with their own behavioral outbursts.
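
[Editor's sketch] To make the Restricted Chat Mode mechanic concrete, here is a minimal Python illustration of a message budget that grows over the course of a match. It is not Riot's implementation: the class name `RestrictedChatBudget`, the starting allowance of three messages, and the rate of one extra message every five minutes are all assumptions chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class RestrictedChatBudget:
    """Chat allowance that grows as the match goes on.

    Every number here is a hypothetical placeholder, not a value from Riot.
    """

    initial_messages: int = 3       # assumed starting allowance
    minutes_per_extra: float = 5.0  # assumed: one more message every 5 minutes
    used: int = 0                   # messages already spent this match

    def allowance(self, minutes_elapsed: float) -> int:
        """Total messages unlocked so far in the match."""
        return self.initial_messages + int(minutes_elapsed // self.minutes_per_extra)

    def remaining(self, minutes_elapsed: float) -> int:
        """Messages the player can still send right now."""
        return max(0, self.allowance(minutes_elapsed) - self.used)

    def try_send(self, minutes_elapsed: float) -> bool:
        """Spend one message if any are left; return whether it was sent."""
        if self.remaining(minutes_elapsed) == 0:
            return False
        self.used += 1
        return True
```

Under these assumed numbers, a player who spends all three starting messages in the first minute cannot chat again until the five-minute mark, which is the trade-off between venting and coordinating described above.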

One of our latest features is called Behavior Alerts — it’s a system in the game that detects patterns and spikes of negative behaviors and then delivers immediate feedback to the player; this particular experiment was inspired by B.F. Skinner’s work on reinforcement schedules, feedback loops and the shaping of behaviors, but also Solomon Asch’s classic experiments on conformity. The feature is going live within the next patch or two and we’re very curious to see how this shapes player behavior in League of Legends — we truly believe that for many players in online games, feedback is the key to more sportsmanlike play. Players aren’t inherently bad; sometimes they just need a nudge in the right direction.

JC: As covered in your GDC presentation, the Tribunal system has been quite the success in reforming players and reducing repeat offenses. I was wondering if you could tell us a bit more about the conceptual origins of the Tribunal system. How did the concept originate and how did it progress into what we see now? Was it informed by previous research or was its development largely a matter of exploration / intuition?

JL: The Tribunal was originally the brainchild of Steve “Pendragon” Mescon, and Tom “Zileas” Cadwell. They were inspired by crowdsourcing research and how effective and accurate the “wisdom of the crowd” could be. When you think about a system like this, it makes sense. Every voter in the Tribunal brings a different background and life experience and makes decisions according to their mental framework; given enough voters, it’s as if the system made a decision informed by thousands of informed opinions which are themselves formulated from thousands of different data points — you can see why the resulting verdicts tend to be pretty accurate.
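
[Editor's sketch] The "wisdom of the crowd" intuition can be illustrated with a simple majority-vote calculation. This is a textbook simplification, not a description of how the Tribunal actually aggregates votes: it assumes every voter judges independently and is correct with the same probability, and the function name `majority_accuracy` is invented for this example.

```python
from math import comb


def majority_accuracy(n_voters: int, p_correct: float) -> float:
    """Probability that a strict majority of voters reaches the right verdict.

    Assumes independent voters who are each correct with probability
    p_correct (a simplification made only for this illustration).
    """
    if n_voters < 1 or n_voters % 2 == 0:
        raise ValueError("use a positive, odd number of voters to avoid ties")
    needed = n_voters // 2 + 1  # smallest number of votes that forms a majority
    # Sum the binomial probabilities of every outcome with a correct majority.
    return sum(
        comb(n_voters, k) * p_correct**k * (1 - p_correct) ** (n_voters - k)
        for k in range(needed, n_voters + 1)
    )


if __name__ == "__main__":
    for n in (1, 11, 101):
        print(n, round(majority_accuracy(n, 0.6), 3))
```

With voters who are individually right only somewhat more often than not, the majority verdict becomes more reliable as the number of voters grows, which matches the reasoning in the answer above. Real voters are not fully independent, so the effect in practice would be weaker than this model suggests.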

When the player behavior team formed and adopted the Tribunal, one of the first exercises we did was infuse the system with classic lessons from the psychology of feedback. For example, we knew that clarity and speed of feedback play critical roles in shaping behaviors — so we took a pretty big risk and implemented Reform Cards. These were ‘report cards’ that we sent to every player that was banned, and they highlighted the exact chat logs, item builds, and game evidence that led to the player’s ban. From this feature alone, we saw 7.8% more players improve their behaviors after receiving a Reform Card.

Early in the Tribunal’s lifespan, we used to reward players with in-game League of Legends currency for completing cases; however, one could argue that this was the wrong motivator for the task at hand. We ran a quick experiment that removed the currency reward for 30 days and saw a noticeable 10% drop in Tribunal voters. However, we then introduced a feature called “Justice Reviews” that provided players who vote in the Tribunal with feedback about their specific contributions to the community; for example, how many toxic players they removed from the community and how many toxic games they prevented. We saw an astounding 99% increase in Tribunal voters since Justice Reviews went live. In many ways, this series of experiments really explored the motivations of players when they visit the Tribunal — it wasn’t about currency (which otherwise could be called an extrinsic, tangible reward). Instead, players that visited the Tribunal wanted to make a difference in the community; in other words, they were driven by intrinsic motivations.

JC: Going back to the Optimus study for a moment - the handful of results you covered at GDC were quite compelling. Is your team prepared to maybe share any additional findings regarding the various types, locations & font coloring of feedback provided to players? Additionally, you noted that some of the results observed may perhaps be due to a spotlight effect. Could you comment a bit more on that? Any thoughts on how the team might further investigate that possibility?

JL: We’re not quite ready to talk more about Optimus just yet! However, we have seen spotlighting in effect for previous features. For example, when we launch new Tribunal features, we typically see a spike in report rates — this is a classic spotlighting effect where players have an increased awareness of the Tribunal and the ability to report negative behaviors and therefore report more negative behaviors for a brief period of time.

JC: The inherent value of your work - both in terms of improving the player experience and contributing to the company's bottom line (which are likely not conflicting goals) - seems obvious. In your opinion, are there any other developers tapping into research-informed design in a similar manner? How do you see the role of in-house researchers expanding or changing in the next five years? Do you think developers will increasingly come to value the contribution researchers can bring to game design?

JL: Some of the most interesting discoveries in scientific history happen when people cross over into a new field; I believe it’s only a matter of time before more scientists, economists, educators, and those from other, more varied disciplines cross over into this industry. In fact, there are already strong research teams at Microsoft and Valve Software.

But I think we’ll see a new hybrid of developers in the future. At Riot, most of our scientists aren’t in “scientist” roles; all the scientists on my team are Game Designers and hardcore gamers, and scientists on other teams are engineers or managers, for example. Being a former scientist doesn’t define role or capability; it just equipped us with a large toolkit that allows us to tackle a variety of critical problems. In this new generation, more and more scientists will also be hardcore gamers, and it’s going to be exciting to see how games evolve as industry and academia learn how to co-exist as one team.
