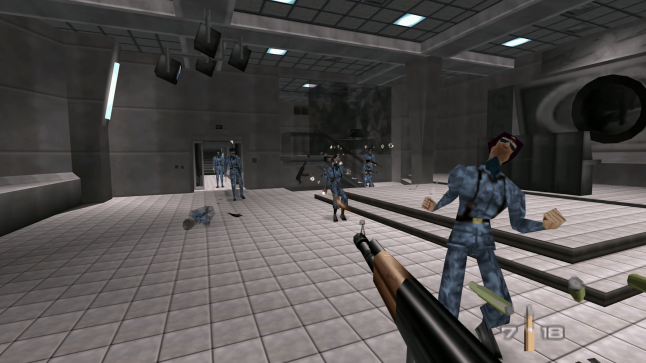

One of the most influential FPS titles of all time, GoldenEye defied expectations and set the standard for NPC AI for future generations.

AI and Games is a crowdfunded series about research and applications of artificial intelligence in video games. If you like my work please consider supporting the show over on Patreon for early-access and behind-the-scenes updates.

Note: All screenshots and video footage in this article and the video above are taken from an emulated copy of the game. Specifically, the GEPD edition of the 1964 emulator plus the GLideN64 video plugin.

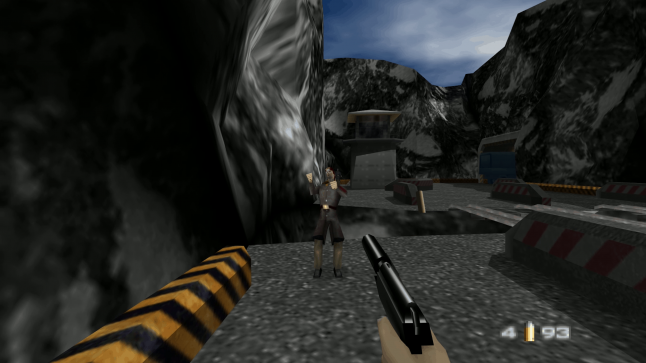

GoldenEye 007: one of the most influential games of all time. A title that defined a generation of console gaming and paved the way forward for first-person shooters in the console market. In this article I'm winding the clock back over 20 years to learn the secrets of how one of the Nintendo 64's most beloved titles built friendly and enemy AI that is still held in high regard today.

Upon its release in 1997, GoldenEye 007 not only defined a generation, but defied all expectations. It was a game that Rare, Nintendo and even the Bond franchise holders MGM had little faith in. Released two years after the launch of the film it's based on and one year after the console's release to market, it seemed doomed to failure, only to become the third best-selling game of all time on the platform, behind only Super Mario 64 and Mario Kart 64, selling over eight million copies. It also earned Rare a BAFTA award for developer of the year in 1998.

It's a game that carries a tremendous legacy: defining the standards of what we would come to expect from first-person shooters for generations to come, specifically in AI behaviour. It had AI characters with patrol patterns, enemies that call for reinforcements, civilians that run scared, smooth navigation and pathfinding, a rich collection of animations, emergent dynamic properties of play and so much more. It not only defined a generation, but also influenced the games that succeeded it, from Half-Life to Crysis, Far Cry and more.

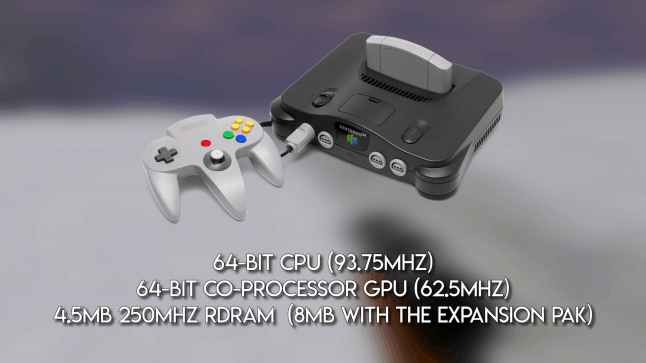

I'm interested not just in how the AI was designed, but in how the hell they even got it to work. If you consider many of the AI techniques I've explored, such as finite state machines, navigation meshes, behaviour trees, planning technologies and machine learning, none of these were established practices in the games industry when GoldenEye was released. On top of that, the Nintendo 64 is pushing 25 years old and, compared to modern machines, had minimal CPU and memory resources. How do you build AI and gameplay systems to run so effectively on hardware that was so tightly constrained?

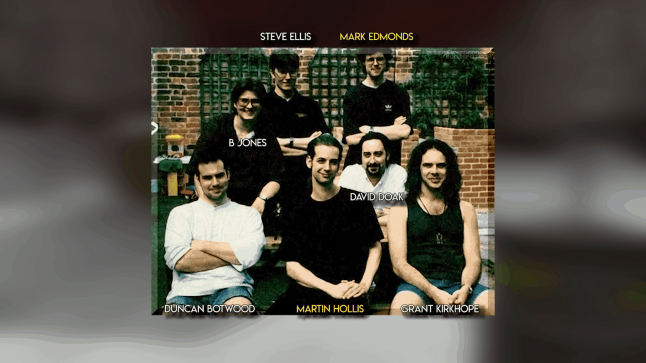

To find out the truth, I reached out to the ultimate undercover informant: Dr David Doak. David was a pivotal member of the development team for GoldenEye 007 and - perhaps fittingly for this series - is immortalised as the non-player character players must meet in Facility, the second level of the game. During our interview we explored NPC AI, alert behaviours, sensor systems, navigation tools, performance balancing and much more. So let's get started in finding out how it works.

Winding back to the beginning, much of what GoldenEye became was devised by two people: Martin Hollis, the producer and director of the game, and Mark Edmonds, who was the gameplay and engine programmer. Hollis had originally been inspired by the likes of Virtua Cop, where enemies would jump into frame, take shots at the player and then back off, hide or react to being shot in a dynamic fashion. However, Hollis wanted the AI to be more engaging and reactive, transcending the standard set by DOOM in 1993.

"The important thing is to show the player the AI. There’s no point having sophisticated AI that the player doesn’t notice.

Your NPC’s can be insightfully discussing the meaning of life, but the player won’t notice if the game requires that they swing around a corner and fill the bad guys with bullets. So the intelligence has to be evident. The game mechanics have to showcase the AI. The level setup has to showcase the AI. And it all has to make an actual difference in actual gameplay."

[Martin Hollis, 2004 European Developers Forum.]

This resulted in guards and patrolling enemies, which forced a more tactical approach to entering environments. It resulted in vision and audio sensors that enabled AI to react to player behaviour, or remain unaware if stealth was employed. And it resulted in friendly and civilian characters that would react to the player's presence and either cooperate or run away.

When David Doak joined the project, a lot of the core engine components for 3D movement, rendering and simple AI behaviours were already established by Edmonds. However, over the course of the final two years of the project, character movement, much of the AI behaviour and other core gameplay systems were established. A lot of this work moved the AI away from being player-centric: establishing patrol patterns, moving into alarm states, using control terminals, urinals and much more.

To achieve this, Mark Edmonds built an entire scripting system within the C-written codebase, which was constantly being added to and updated as Doak and other members of the team experimented with ideas. Quite often a new idea would be proposed to Edmonds, who would ponder the feasibility of it all, then go away and have a working version of it in-engine a day later. This scripting system allowed developers to link together numerous precompiled actions into sequences of intelligent behaviour based on very specific contexts. These behaviours would spool off their own threads for execution, release resources as soon as possible once the next atomic behaviour was executed, then wait until it was possible to update once more. These scripted behaviours are not just for the friendly and enemy characters, but also for systems such as timed doors and gates, and the opening and closing cinematics for each level that take control of the player and other characters in the world.
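To make the idea of chained atomic actions that yield between updates more concrete, here is a minimal sketch in Python. It is not Rare's implementation: the original was written in C, and the script names, action names and round-robin scheduler below are all assumptions made for illustration. Generators stand in for the lightweight threads described above, with each `yield` handing control back until the next update.

```python
from collections import deque

def patrol_script(guard):
    """Hypothetical guard script: one atomic action per update, then yield."""
    for pad in ("pad_a", "pad_b"):
        guard["log"].append(f"walk to {pad}")
        yield  # give control back until the next update
    guard["log"].append("use terminal")
    yield

def timed_door_script(door, open_ticks=2):
    """Hypothetical non-character script: a door that closes after a delay."""
    door["log"].append("open")
    for _ in range(open_ticks):
        yield  # nothing to do this update, just wait
    door["log"].append("close")
    yield

def run_scripts(scripts, max_ticks=100):
    """Round-robin scheduler: advance every live script by one step per tick."""
    queue = deque(scripts)
    ticks = 0
    while queue and ticks < max_ticks:
        for _ in range(len(queue)):
            script = queue.popleft()
            try:
                next(script)          # run one atomic action
                queue.append(script)  # still alive, schedule it again
            except StopIteration:
                pass                  # script finished, drop it
        ticks += 1
    return ticks

guard = {"log": []}
door = {"log": []}
ticks = run_scripts([patrol_script(guard), timed_door_script(door)])
print(guard["log"], door["log"], ticks)
```

The same scheduler drives both a character and a door, which mirrors the point that scripts were used for more than just NPCs.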

If you dig into the GoldenEye Editor originally developed by modder Mitchell "SubDrag" Kleiman, these AI behaviours have been reverse engineered into editable Action Blocks for you to build your own behaviours. Enemy guards can react to a variety of different sensory inputs, ranging from being shot, to spotting the player or another guard being shot while in line of sight, as well as whether the guard can hear gunshots happening nearby. In addition, NPCs can opt to surrender based on probability but, more critically, on whether the player is aiming at them, with behaviours shifting in the event the AI knows the player isn't targeting them anymore. As I'll explain in a minute, the visual and audio tests required for these behaviours run on tightly constrained and stripped-down processes that still achieve impressive results while running on the N64's limited hardware.

Based on these conditions, guards can execute a variety of different behaviours, including side-stepping, rolling to the side, firing from one knee, firing while walking or running, and even throwing grenades. Much of the rationale for these actions is driven by where the player is relative to them and their current behaviour.

It is, for all intents and purposes, a rather simple Finite State Machine, whereby the AI stays in a given state, executing a behaviour, until an event in-game forces a transition to another one. This same principle was later employed in Half-Life, which came out the year after GoldenEye in 1998, and the team at Rare were aware of the impact their game had on Valve's critically acclaimed shooter, given Valve told them so themselves:

“My favourite moment was meeting the original Valve guys at ECTS, a UK trade show, in 1998 and them joking that GoldenEye had forced them to redo a bunch of stuff on Half-Life. They went on to do all right.”

- David Doak, GamesRadar, 2018.
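As a rough illustration of the finite state machine idea described above, the following Python sketch uses a transition table keyed on the current state and an incoming event. The state and event names are hypothetical, loosely based on the reactions mentioned in this article (hearing gunshots, spotting the player, surrendering while being aimed at); they are not taken from the game's code.

```python
# Hypothetical states and events, chosen for illustration only.
TRANSITIONS = {
    ("patrol", "heard_gunshot"): "investigate",
    ("patrol", "saw_player"): "attack",
    ("patrol", "was_shot"): "attack",
    ("investigate", "saw_player"): "attack",
    ("investigate", "nothing_found"): "patrol",
    ("attack", "player_aiming_at_me"): "surrender",
    ("surrender", "player_looked_away"): "attack",
}

class Guard:
    def __init__(self):
        self.state = "patrol"

    def handle(self, event):
        """Move to a new state if the event matters in the current state."""
        # Events with no entry for the current state are ignored.
        self.state = TRANSITIONS.get((self.state, event), self.state)
        return self.state

guard = Guard()
history = [guard.handle(e) for e in (
    "heard_gunshot", "saw_player", "nothing_found",
    "player_aiming_at_me", "player_looked_away",
)]
print(history)
```

The guard stays in its current state until an event it cares about arrives, which is all a simple state machine needs.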

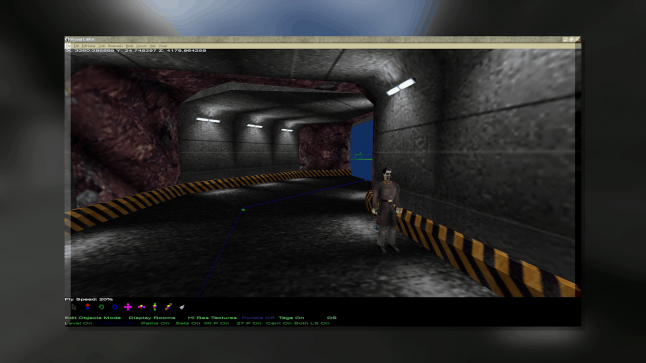

Now the two most critical elements of the GoldenEye engine for controlling the AI are the camera and what's known as the STAN system. For ease of rendering, levels are broken up into what are known as 'rooms', with even large open levels such as the Severnaya surface broken up into smaller chunks. This helped maintain performance as a form of occlusion culling, whereby only rooms in the camera's view frustum would be rendered. But in turn, AI - for the most part - would not execute their primary behaviours unless they've been rendered by the camera at some point. This helps keep execution costs down, but also lends itself to some interesting opportunities which I'll come back to later.

But the key system that intersects with the room and rendering behaviour is what was known as the STANs - which is just a four-letter abbreviation of 'Stand'. Developed by Martin Hollis, STANs are polygonal meshes in a given room that are flagged for use as a STAN and know which room or hall they are placed within. An AI character standing on top of a given STAN would only be able to see the player if the STAN the player was standing on was in the current or an adjacent room, and if a simple validator confirmed that the two STANs could 'see' each other. This allowed for a more cost-efficient way to run sight tests, rather than running 3D ray traces that would prove a lot more expensive on the hardware. This works remarkably well, but does break in certain circumstances. A notable example is the guards on the dam, who can't see you until you approach their walkways. This is because the STANs at the top of the ladder can only see those at the bottom, meaning you can mess around as much as you like in proximity, but they won't notice. The second noticeable instance is the spiral path in the caverns, where the NPCs see the path in front of them rather than what's on the other side of the chasm.
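The two-step sight test described above (a room check, then a STAN-to-STAN check) can be sketched as follows. This is only an illustration in Python: the room names, the `ROOM_NEIGHBOURS` table and the idea of storing a precomputed set of visible STANs on each STAN are assumptions, since the article does not say how the validator was actually implemented.

```python
from dataclasses import dataclass, field

@dataclass
class Stan:
    name: str
    room: str
    # Assumption: each STAN stores the names of the STANs it can 'see'.
    sees: set = field(default_factory=set)

# Hypothetical room adjacency for a tiny three-room level.
ROOM_NEIGHBOURS = {
    "lab": {"corridor"},
    "corridor": {"lab", "storage"},
    "storage": {"corridor"},
}

def rooms_close_enough(room_a, room_b):
    """True if both rooms are the same or directly adjacent."""
    return room_a == room_b or room_b in ROOM_NEIGHBOURS.get(room_a, set())

def npc_can_see_player(npc_stan, player_stan):
    """Cheap sight test: room gate first, then a set lookup. No ray casts."""
    if not rooms_close_enough(npc_stan.room, player_stan.room):
        return False
    return player_stan.name in npc_stan.sees

lab_floor = Stan("lab_floor", "lab",
                 sees={"lab_door", "corridor_mid", "storage_floor"})
lab_door = Stan("lab_door", "lab")
corridor_mid = Stan("corridor_mid", "corridor")
corridor_end = Stan("corridor_end", "corridor")
storage_floor = Stan("storage_floor", "storage")

print(npc_can_see_player(lab_floor, corridor_mid))   # adjacent room, flagged
print(npc_can_see_player(lab_floor, corridor_end))   # adjacent room, not flagged
print(npc_can_see_player(lab_floor, storage_floor))  # flagged, but room too far
```

A scheme like this trades accuracy for speed, which is consistent with the blind spots on the dam and in the caverns: if two STANs are never flagged as visible to each other, no amount of player movement between them will be noticed.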

Now with these STANs placed all across each map, there is an opportunity to build a navigation system on top of these polygons dotted across the map. In modern games we typically use navigation meshes, which provide a full polygonal surface that dictates where characters can move across a map. Nav meshes didn't exist in their current form back in 1997, so for GoldenEye the team took the STAN system and added atop it what were referred to as PADs. PADs would sit atop STANs but also be linked to one another, effectively creating a navigation graph through the map. Characters know what room they're in and can then run a search for a PAD in a destination room if they're reacting to a nearby commotion, or move across the STANs while in their current room to items of interest.
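To illustrate how linked PADs form a navigation graph, here is a small Python sketch that searches from a character's current PAD to any PAD in a destination room. The article only says that characters "run a search", so the use of breadth-first search here is an assumption, as are the PAD names, links and room labels.

```python
from collections import deque

# Hypothetical PAD graph: which PADs link to which, and the room each sits in.
PAD_LINKS = {
    "p1": ["p2"],
    "p2": ["p1", "p3", "p5"],
    "p3": ["p2", "p4"],
    "p4": ["p3"],
    "p5": ["p2"],
}
PAD_ROOM = {"p1": "lab", "p2": "lab", "p3": "hall", "p4": "armoury", "p5": "store"}

def path_to_room(start_pad, target_room):
    """Breadth-first search for the nearest PAD (by link count) in target_room."""
    queue = deque([start_pad])
    came_from = {start_pad: None}
    while queue:
        pad = queue.popleft()
        if PAD_ROOM[pad] == target_room:
            # Walk the parent links back to the start to rebuild the route.
            path = []
            while pad is not None:
                path.append(pad)
                pad = came_from[pad]
            return path[::-1]
        for neighbour in PAD_LINKS[pad]:
            if neighbour not in came_from:
                came_from[neighbour] = pad
                queue.append(neighbour)
    return None  # no PAD in that room is reachable

print(path_to_room("p1", "armoury"))
print(path_to_room("p1", "roof"))
```

Because the graph only contains hand-placed PADs rather than every walkable polygon, a search like this stays small, which matters on constrained hardware.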

This is topped off by the audio sensors. These run a simple proximity check based on characters being within a certain radius of a sound occurring. But it's also reliant on the type of weapon and the rate at which it's being fired. While a silenced PP7 doesn't attract attention, a standard PP7 is loud, and the KF7 Soviet is even louder, with continuous fire leading to the activation radius reaching its maximum level. Only the rocket launcher and the tank cannon are deemed louder.
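A hearing check of this kind might look something like the Python sketch below. Every number in it is invented: only the ordering (silenced PP7 silent, PP7 loud, KF7 Soviet louder, sustained fire growing up to a cap, rocket launcher louder still) follows the description above. The weapon keys, `GROWTH_PER_SHOT` and `SUSTAINED_FIRE_CAP` are hypothetical names.

```python
import math

# Hypothetical base radii in arbitrary world units.
BASE_RADIUS = {
    "pp7_silenced": 0.0,
    "pp7": 10.0,
    "kf7_soviet": 15.0,
    "rocket_launcher": 30.0,
}
GROWTH_PER_SHOT = 2.5      # assumed growth for each extra consecutive shot
SUSTAINED_FIRE_CAP = 25.0  # assumed ceiling for ordinary guns

def noise_radius(weapon, consecutive_shots=1):
    """Radius within which guards can hear this weapon being fired."""
    base = BASE_RADIUS[weapon]
    if base == 0.0:
        return 0.0  # silenced weapons never attract attention
    grown = base + GROWTH_PER_SHOT * (consecutive_shots - 1)
    # Sustained fire grows the radius up to the cap, never below the base.
    return min(grown, max(base, SUSTAINED_FIRE_CAP))

def can_hear(npc_pos, sound_pos, weapon, consecutive_shots=1):
    """Simple proximity test: is the NPC inside the noise radius?"""
    radius = noise_radius(weapon, consecutive_shots)
    return radius > 0.0 and math.dist(npc_pos, sound_pos) <= radius

print(can_hear((0, 0), (12, 0), "pp7"))
print(can_hear((0, 0), (12, 0), "kf7_soviet"))
print(noise_radius("kf7_soviet", consecutive_shots=10))
```

The check is a single distance comparison per guard, so it costs very little even with many NPCs in a level.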

All of these AI tools and systems are very impressive. They show an intentionality of design that sought clever and effective means to address specific elements the team wanted to see in the game. But there are still many other elements of how GoldenEye works that required individual bespoke behaviours to be crafted, almost on a per-level basis. NPCs did not actively hide behind cover, so levels such as the Silo and the Train required unique scripts to be created for them to stand near specific PADs on the map and react differently depending on whether the cover was a half-sized or full-sized object.

The NPCs are not aware of one another in the map. Hence, for levels where you have to protect Natalya Simonova, the team not only had to ensure that she could move around the level, but also had to build custom behaviours to ensure enemies don't shoot at her en masse and also aim at the player. Having characters like the scientists freeze at gunpoint and then run away, or even Boris Grishenko, who would do as he's instructed until you stopped pointing the gun at him, are more bespoke behaviours that needed to be built for that specific context. This means that many of the principal characters from the story have different custom AI behaviours written to solve specific elements of a given level, with Alec Trevelyan having unique behaviours built for him in all six levels he appears in: Facility, Statue, Train, Control, Caverns and Cradle.

Plus there were issues of level design and pacing that needed to be addressed. The Severnaya exterior levels proved problematic for balancing the AI positioning, so custom level scripts spawn in enemies beyond the fog range that run straight towards the player. In addition, if enemies are too far away, they simply disable themselves and may later reactivate.

Arguably my favourite feature is how GoldenEye is built to punish louder and more aggressive gunplay if the player isn't progressing through the level fast enough. Remember how I said earlier that NPCs don't activate their AI behaviour if they haven't been rendered yet? Well, in the event an NPC can hear a sound but has not been rendered, it will spawn a clone of itself to investigate the source, and will continue to do so until either the disturbance has ceased or the camera has finally rendered the character. This is particularly noticeable in levels such as the Archives, where you can easily get trapped in the interrogation room at the start of the level by continually spawning clones.
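The clone-spawning rule can be summarised in a few lines of Python. This is a sketch of the logic as described in the paragraph above, not the game's code: the `Npc` fields, the `tick` function and the one-clone-per-update pacing are all assumptions (the article does not say how often clones were spawned).

```python
from dataclasses import dataclass

@dataclass
class Npc:
    name: str
    rendered: bool = False        # has the camera ever drawn this NPC?
    within_earshot: bool = False  # is it inside the current noise radius?

def tick(npc, noise_active, clones):
    """One update for a guard; returns a label for the action taken."""
    if npc.rendered:
        return "run_normal_ai"  # once seen, the guard runs its own behaviour
    if noise_active and npc.within_earshot:
        # Unseen guard hears noise: send a copy to investigate instead.
        clones.append(f"{npc.name}_clone_{len(clones) + 1}")
        return "spawn_clone"
    return "idle"  # unseen and undisturbed guards do nothing

guard = Npc("guard_7", within_earshot=True)
clones = []
actions = [tick(guard, True, clones), tick(guard, True, clones)]
guard.rendered = True  # the player finally walks in and sees the guard
actions.append(tick(guard, True, clones))
print(actions, clones)
```

As long as the player keeps making noise without ever bringing the original guard on screen, the middle branch keeps firing, which matches the experience of being swamped in the Archives interrogation room.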

In order to better pace the overall experience - as well as accommodate the lack of aiming fidelity when using the Nintendo 64 controller - the transitions and durations of animations were tweaked heavily throughout testing to give players a better chance of prioritising targets using either auto or precision aim mechanics.

With GoldenEye released, this rendering and AI toolchain was put to good use in later titles, with Rare's spiritual successor Perfect Dark employing the same systems with new additions. As mentioned before, GoldenEye NPCs only go into cover if a custom behaviour was created for them to do so, but in Perfect Dark enemies are capable of calculating cover positions, including dynamic ones created by anti-grav props. Plus, with the team having experimented with vertex-shaded lighting, vision sensors are impeded by lower visibility. This same process was later adopted at Free Radical Design for the TimeSplitters franchise - given the studio was founded by several of the GoldenEye development team - and required the STAN/PAD system to be rebuilt to better support verticality in level design.

While over 20 years old, GoldenEye set the standard that Half-Life and many other shooter franchises have sought to replicate to this day. I hope having watched this video you can appreciate the depth of the underlying systems and the level of dedication and craft required to elevate the project to what it became in the end.
