In the continuing saga of the development of the game that made Blizzard's name, Patrick Wyatt discusses the first-ever online multiplayer match of the RTS, as well as explaining exactly how the developer finally got the funds to pursue the game that would make its name.

November 12, 2012

Author: Patrick Wyatt

ArenaNet co-founder and former Blizzard employee Patrick Wyatt recounts the chaotic, spectacular dev process behind the original Warcraft. This is Part 3 of the story, crossposted from his blog. You can visit Wyatt's blog to read Part 1 and Part 2.

The first-ever multiplayer game of Warcraft was a crushing victory, an abject defeat, and a tie, all at once. Wait, how is that possible? Well, therein lies a tale. This tale grew organically during the writing to include game AI, the economics of the game business, fog of war and more. Read on if you have lots of free time!

After six months of development that started in September 1993, Warcraft: Orcs vs. Humans, the first product in what would eventually become the Warcraft series, was finally turning into a game instead of an extended tech demo.

For several months I was the only full-time employee on the project, which limited the rate of development. I was fortunate to be assisted by other staff members, including Ron Millar, Stu Rose, and others, who did design work on the project. And several artists contributed prototype artwork when they found time in between milestones on other projects.

The team was thinly staffed because the development of Warcraft was self-funded by the company from revenues received for developing titles for game publishers like Interplay and Sunsoft, and the company coffers were not deep.

At that time we were developing four 16-bit console titles: The Lost Vikings 2 (the sequel to our critically-acclaimed but low-selling, side-scrolling "run-and-jump" puzzle game), Blackthorne (a side-scrolling "run-and-jump" game where the lead character gets busy with a shotgun), Justice League Task Force (a Street Fighter clone set in the DC Comics universe), and Death and Return of Superman (a side-scrolling beat 'em-up based on the DC Universe comic series of the same name).

With the money received for developing these games and other odd jobs the company was able to pay initial development costs.

For most of the history of the game industry, independent game development studios -- which is to say studios that weren't owned by a retail game publishing company -- usually funded their projects by signing contracts with those publishing companies. Publishers would "advance" money for the development of the project. In addition to advances for development, publishers were responsible for publicity, marketing, manufacturing, retail distribution, customer support and so forth.

Back in the early '90s, there were many more retail game publishers than exist today, but the increasing cost of game development and especially of game publishing led to massive industry consolidation due to bankruptcies and acquisitions. When you think of a retail game publisher today you'll probably think of Activision Blizzard, EA, or Ubisoft, instead of the myriad mid-sized companies that existed 20 years ago.

As in all industries, the terms of contracts are drawn up to be heavily in the favor of the people with the money. This is the other golden rule: "he who has the money makes the rules". While in theory these agreements are structured so that the game developer is rewarded when a game sells well, as in the record and movie industries publishers capture the vast majority of profits, with developers receiving enough money to survive to sign another agreement -- if they're lucky.

When I mentioned "advances" paid by the publisher, the more correct term is "advances against royalties", where the developer is effectively receiving a forgivable loan to be repaid from royalties for game sales. It sounds great: develop a game; get paid for each copy sold. But the mechanics work out such that the vast majority of game titles never earn enough money to recoup (pay for) the advances. Since development studios often had to give up the rights to their title and sequels, these agreements are often thinly disguised work-for-hire agreements.

To aim for better deal terms, a common strategy employed by development studios was to self-fund an initial game prototype, then use the prototype to "pitch" a development deal to publishers. The longer a developer was able to self-fund game creation the better the eventual contract terms.

Perhaps the best example of this strategy is Valve Software, where Gabe Newell used the wealth he earned at Microsoft to fund the development of Half-Life and thereby gain a measure of control over the launch schedule for the game -- releasing the game only when it was a high-quality product instead of rushing it out the door to meet quarterly revenue goals as Sierra Entertainment (the game's publisher) desired. More importantly, Gabe's financial wherewithal enabled Valve to obtain ownership of the online distribution rights for Half-Life just as digital downloads were becoming a viable strategy for selling games, and led to that studio's later -- vast -- successes.

The downside to self-funding a prototype is the risk that the developer takes in the event that the game project is not signed by a publisher -- oftentimes resulting in the death of the studio.

The company I worked for -- at that time named Silicon & Synapse -- was self-funding Warcraft, along with another project called Games People Play, which would include crossword puzzles, Boggle and similar games found on the shelves at airport bookstores to entertain stranded travelers.

By developing two games that targeted radically different audiences, the company owners hoped to create multiple sources of revenue that would be more economically stable compared to betting all the company's prospects on the core entertainment market (that is, "hardcore" gamers like you 'n' me).

Of course spreading bets across diverse game genres also has risks, inasmuch as a company brand can be diluted by creating products that don't meet the desires of its audiences. One of the great strengths of the Blizzard brand today is that users will buy its games sight-unseen because they believe in the company's vision and reputation. That reputation would have been more difficult to establish had the company released both lower-budget casual titles and high-budget triple-A+ games, as did Sierra Entertainment, which is now out of business after repeated struggles to find an audience.

In any event, creating Games People Play turned out to be a misstep because developing a casual entertainment product was so demoralizing for the lead programmer that the project never matured and was later canceled. Or perhaps it wasn't a mistake, because the combination of Warcraft and Games People Play convinced Davidson & Associates, at that time the second largest educational software company in the world, to purchase Silicon & Synapse.

Davidson & Associates, started by Jan Davidson and later joined by her husband Bob, was a diversified educational software company whose growth was predicated on the success of a title named Math Blaster, in which a player answers math problems to blow up incoming asteroids before they destroy the player's ship. It was a clever conjunction of education and entertainment, and the company reaped massive rewards from its release.

As an educational title, Math Blaster may have had some value when used properly, but I had occasion to see it used in folly. My high school journalism class would write articles for our school newspaper in a computer room shared with the remedial education class; my fellow journalism students and I watched in horror as remedial 12th graders played Math Blaster using calculators.

As asteroids containing expressions like "3 + 5" and "2 x 3" approached, those students would rapidly punch the equations into calculators then enter the results to destroy those asteroids. Arguably they were learning something -- considering they outsmarted their teachers -- but I'm not sure it was the best use of their time given their rapidly approaching entry into the workforce.

With good stewardship and aggressive leadership Davidson & Associates expanded into game manufacturing (creating and packing the retail box), game distribution (shipping boxes to retailers and intermediate distributors), and direct-to-school learning materials distribution.

They saw an opportunity to expand into the entertainment business, but their early efforts at creating entertainment titles internally convinced them that it would make better sense to purchase an experienced game development studio rather than continuing to develop their own games with a staff more knowledgeable about early learning than swords and sorcery.

And so, at a stroke, the cash-flow problems that prevented the growth of the Warcraft development team were solved by the company's acquisition; with the deep pockets of Davidson backing the effort it was now possible for Silicon & Synapse (renamed Blizzard in the aftermath of the sale) to focus on its own titles instead of pursuing marginally-profitable deals with other game publishers. And they were very marginal -- even after creating two top-rated games in 1993, which led to the company being named "Nintendo Developer of the Year", the company didn't receive any royalties.

With a stack of cash from the acquisition to hire new employees and enable existing staff to jump on board the project, the development of Warcraft accelerated dramatically.

The approach to designing and building games at Blizzard during its early years could best be described as "organic". It was a chaotic process that occurred during formal design meetings but more frequently during impromptu hallway gatherings or over meals.

Some features came from design documents, whereas others were added by individual programmers at whim. Some game art was planned, scheduled, and executed methodically, whereas other work was created late at night because an artist had a great idea or simply wanted to try something different. Other elements were similarly ad-libbed; the story and lore for Warcraft came together only in the last several months prior to launch.

While the process was unpredictable, the results were spectacular. Because the team was composed of computer game fanatics, our games evolved over the course of their development to become something that gamers would want to play and play and play. And Warcraft, our first original game for the IBM PC, exemplified the best (and sometimes the worst) of that process, ultimately resulting in a game that -- at least for its day -- was exemplary.

As biologists know, the process of evolution has false starts where entire branches of the evolutionary tree are wiped out, and so it was with our development efforts. Because we didn't have a spec to measure against, we instead experimented and culled the things that didn't work. I'd like to say that this was a measured, conscious process in each case, but many times it arose from accidents, arguments, and personality conflicts.

One event I remember in particular was related to the creation of game units. During the early phase of development, units were conjured into existence using "cheat" commands typed into the console because there was no other user interface mechanism to build them. As we considered how best to create units, various ideas were proposed.

Ron Millar, an artist who did much of the ideation and design for early Blizzard games, proposed that players would build farmhouses, and -- as in the game Populous -- those farms would periodically spawn basic worker units, known as (Human) peasants and (Orc) peons. The player would be able to use those units directly for gold mining, lumber harvesting and building construction, but they wouldn't be much good as fighters.

Those "peons" not otherwise occupied could be directed by the player to attend military training in barracks, where they'd disappear from the map for a while and eventually emerge as skilled combatants. Other training areas would be used for the creation of more advanced military units like catapult teams and wizards.

The idea was not fully fleshed out, which was one of the common flaws of our design process: its end result lacked the formality to document how an idea should be implemented. So the idea was kicked around and argued back and forth through the informal design team (that is, most of the company) before we started coding (programming) the implementation.

Before we started working on the code, Ron left to attend a trade show (probably Winter CES -- the Consumer Electronics Show), along with Allen Adham, the company's president. And during their absence an event occurred which set the direction for the entire Warcraft series, an event that I call the "Warcraft design coup".

Stu Rose, another early artist/designer to join the company (employee number six, I believe), came late one afternoon to my office to make a case for a different approach. Stu felt that the unit creation mechanism Ron proposed had too many as-yet-unsolved implementation complexities, and moreover that it was antithetical to the type of control we should be giving players in a real-time strategy (RTS) game.

In this then-new RTS genre, the demands on players were much greater than in other genres and players' attention could not be focused in one place for long because of the many competing demands: plan the build/upgrade tree, drive economic activity, create units, place buildings, scout the map, oversee combat and micromanage individual unit skills. In an RTS, the most limited resource is player attention; so adding to the cognitive burden with an indirect unit creation mechanism would add to the attention deficit and increase the game's difficulty.

To build "grunts", the basic fighting unit, it would be necessary to corral idle peasants or those working on lower priority tasks to give them training, unnecessarily (in Stu's view) adding to the game's difficulty.

I was a ready audience for his proposal, as I had similar (though less well thought-out) concerns and didn't feel that unit creation was an area where we needed to make bold changes. Dune II, the game from which the design of Warcraft was derived, had a far simpler mechanism for unit creation: just click a button on the user-interface panel of a factory building and the unit would pop out a short time later. It wasn't novel -- the idea was copied from even earlier games -- but it just worked.

Stu argued that we should take this approach, and in lieu of more debate just get it done now. So over the next couple of days and late nights, I banged out the game and user interface code necessary to implement unit creation, and the design decision became a fait accompli. By the time Ron and Allen returned, the game was marginally playable in single player mode, excepting that the enemy/computer AI was still months away from being developed.

Warcraft was now an actual game that was simple to play and -- more importantly -- fun. We never looked back.

In June 1994, after 10 months of development, the game engine was nearly ready for multiplayer. It was with a growing sense of excitement that I integrated the code changes that would make it possible to play the first-ever multiplayer game of Warcraft. While I had been busy building the core game logic (event loop, unit-dispatcher, pathfinding, tactical unit-AI, status bar, in-game user-interface, high-level network code) to play, other programmers had been working on related components required to create a multiplayer game.

Jesse McReynolds, a graduate of Caltech, had finished coding a low-level network library to send IPX packets over a local-area network. The code was written based on knowledge gleaned from the source code to Doom, which had been recently open-sourced by John Carmack at id software. While the IPX interface layer was only several hundred lines of C code, it was the portion of the code that interfaced with the network card driver to ensure that messages created on one game client would be sent to the other player.

And Bob Fitch, who was earning his master's degree from Cal State Fullerton, developed the initial "glue screens" that enabled players to create and join multiplayer games. My office was next to Bob's, which was mighty convenient, since it was necessary for us to collaborate closely to integrate his game join-or-create logic to my game-event loop.

After incorporating the changes, I compiled the game client and copied it to a network drive while Bob raced back to his office to join the game. In what was a minor miracle, the code we'd written actually worked, and we were able to start playing the very first multiplayer game of Warcraft.

As we started the game I felt a greater sense of excitement than I'd ever known playing any other game. Part of the thrill was in knowing that I had helped to write the code, but even more so were two factors that created a sense of terror: playing against a human opponent instead of a mere computer AI, and more especially, not knowing what he was up to because of the fog of war.

One of the ideas drawn from earlier games was that of hiding enemy units from sight of the opposing player. A black graphic overlay hid areas of the game map unless a friendly unit explored the area, which was designed to mimic the imperfect information known by a general about enemy operations and troop movements during real battles.

Empire, a multiplayer turn-based strategy game written almost seventeen years before by the brilliant Walter Bright (creator of the "D" programming language), used fog of war for that same purpose. Once an area of the map was "discovered" (uncovered) it would remain visible forever afterwards, so an important consideration when playing was to explore enough of the map early in the game so as to receive advance warning of enemy troop movements before their incursions could cause damage to critical infrastructure or economic capability.
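For readers who like to see the mechanism, here is a minimal Python sketch of "explore once, visible forever" fog of war. It is an illustration only, not code from Warcraft or Empire: the grid representation, the square sight area, and the names `make_fog`, `reveal`, and `render` are all assumptions made for this example.

```python
def make_fog(width, height):
    """Return a grid where False means 'still hidden under the black overlay'."""
    return [[False] * width for _ in range(height)]


def reveal(explored, unit_x, unit_y, sight):
    """Mark every tile within `sight` tiles of a friendly unit as explored.

    A square sight area is assumed here for simplicity. Tiles are never
    reset to False, so explored areas stay visible for the rest of the game.
    """
    height = len(explored)
    width = len(explored[0])
    for y in range(max(0, unit_y - sight), min(height, unit_y + sight + 1)):
        for x in range(max(0, unit_x - sight), min(width, unit_x + sight + 1)):
            explored[y][x] = True


def render(explored):
    """Draw the map as text: '#' is unexplored, '.' is explored."""
    return ["".join("." if tile else "#" for tile in row) for row in explored]


if __name__ == "__main__":
    fog = make_fog(5, 5)
    reveal(fog, 1, 1, sight=1)  # a scout starts near one corner...
    reveal(fog, 3, 3, sight=1)  # ...and later walks toward the other
    print("\n".join(render(fog)))
```

The tiles uncovered by the scout's first position remain uncovered after it moves on, which is why early exploration pays off as advance warning later in the game.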

The psychological terror created by not knowing what the enemy is doing has been the demise of many generals throughout history, and adding this element to the RTS genre is a great way to add to the excitement (and fear) level. Thank Walter and the folks at Westwood who created Dune II for their savvy!

As many gamers know, computer-controlled "Artificial Intelligence" (AI) players in strategy games are often weak. It's common for human players to discover exploits that the computer AI is not programmed to defend against that can be used to destroy the AI with little difficulty, so computer AI players usually rely upon a numeric troop advantage, positional advantage, or "asymmetric rules" in order to give players a good challenge.

In most Warcraft missions, the enemy computer players are given entire cities and armies to start with when battling human players. Moreover, Warcraft contains several asymmetric rules that make it easier for the AI player to compete, though most players would perhaps call these rules outright cheating.

One rule we created to help the computer AI was to reduce the amount of gold removed from gold mines to prevent them from being mined-out. When a human player's workers emerge from a gold mine, those workers remove 100 units of ore from the mine and deliver it back to the player's town hall on each trip, and eventually the gold mine is exhausted by these mining efforts. However, when an AI-controlled worker makes the same trip, the worker only removes eight units of ore from the mine, while still delivering 100 units into the AI treasury.

This asymmetric rule actually makes the game more fun in two respects: it prevents humans from "turtling", which is to say building an unassailable defense and using their superior strategic skills to overcome the computer AI. Turtling is a doomed strategy against computer AIs, because the human player's gold mines will run dry long before those of the computer.

Secondarily, when the human player eventually does destroy the computer encampment, there will still be gold left for the player to harvest, which makes the game run faster and is more fun than grinding out a victory with limited resources.
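The gold-mine rule described above can be sketched in a few lines of Python. The numbers 100 and 8 come from the description; everything else is assumed for illustration, including the class and function names, the 10,000-unit starting ore, and the choice to credit a full 100 gold even on the final partial load. This is not the shipped Warcraft code.

```python
from dataclasses import dataclass

GOLD_DELIVERED_PER_TRIP = 100  # credited to the treasury on every trip
HUMAN_ORE_REMOVED = 100        # ore a human player's worker takes from the mine
AI_ORE_REMOVED = 8             # ore an AI worker takes from the mine


@dataclass
class GoldMine:
    ore: int


@dataclass
class Player:
    is_ai: bool
    gold: int = 0


def mine_trip(mine, player):
    """Perform one worker round trip; return False if the mine is exhausted."""
    if mine.ore <= 0:
        return False
    removed = AI_ORE_REMOVED if player.is_ai else HUMAN_ORE_REMOVED
    mine.ore = max(0, mine.ore - removed)
    # Assumption: the delivery is always 100, even on a final partial load.
    player.gold += GOLD_DELIVERED_PER_TRIP
    return True


def trips_until_empty(starting_ore, is_ai):
    """Return (number of trips, total gold delivered) before the mine runs dry."""
    mine = GoldMine(starting_ore)
    player = Player(is_ai)
    trips = 0
    while mine_trip(mine, player):
        trips += 1
    return trips, player.gold


if __name__ == "__main__":
    print("human:", trips_until_empty(10_000, is_ai=False))
    print("AI:   ", trips_until_empty(10_000, is_ai=True))
```

With the assumed 10,000-unit mine, the human empties it in 100 trips while the AI gets 1,250 trips out of an identical mine, which is why out-waiting the computer does not work.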

Most players are aware of a more serious violation of the spirit of fair competition: the computer AI cheats because it can see through the fog of war; the AI knows exactly what the player is doing from moment to moment. In practice, this wasn't a huge advantage for the computer, and merely served to prevent it from appearing completely stupid.

Interestingly, with the long popularity of StarCraft (over 14 years since launch and still played), a group of AI programmers has risen to the challenge of building non-cheating AIs. Aided by a library called BWAPI, these programmers write code that can inject commands directly into the StarCraft engine to play the game. Programmers enter their AIs in competitions with each other to determine the victor. While these BWAPI AI players are good, the best of them are handily beaten by skilled human opponents.

As a person who had played many (many, many) strategy games before developing Warcraft, I was well aware of the limitations of computer AIs of that era. While I had battled against many computer AIs -- sometimes losing, many times winning -- I was never scared by AI intelligence, even when battling the terrible Russian offensive in the game Eastern Front by Chris Crawford, which I played on a friend's Atari 800 until eventually the audio cassette tape (!) that contained the game was so old it could no longer be read.

These games were fun, exciting, and most certainly challenging, but not scary. But something changed when I played the first multiplayer game of Warcraft.

The knowledge that I was competing against an able human player -- not just in terms of skill and strategy, but also in terms of speed of command -- but was prevented from seeing his actions by the fog of war was both electrifying and terrifying. In my entire career I have never felt as excited about a single game as I was during that first experience playing Warcraft, where it was impossible to know whether I was winning or losing.

As a massive adrenaline rush spiked in my bloodstream, I did my best to efficiently harvest gold and lumber, build farms and barracks, develop an offensive capability, explore the map, and -- most importantly -- crush Bob's armies before he could do the same to mine.

This was no test-game to verify the functionality of the engine; I know he felt the same desire to claim bragging rights over who won the first-ever multiplayer game of Warcraft. Moreover, when we had played Doom together at Blizzard, I had won some renown because, after a particularly fierce game, Bob had become so angry at me for killing him so frequently with a rocket launcher that he had vowed never to play me again. I knew he'd be looking for payback.

As our armies met in battle, we redoubled our efforts to build more units and threw them into the fray. Once I discovered his encampment and attacked, I felt more hopeful. Bob's strategy seemed disorganized and it appeared I would be able to crush his forces, but I wanted to leave nothing to chance so I continued at a frenzied pace, attacking his units and buildings wherever I could find them.

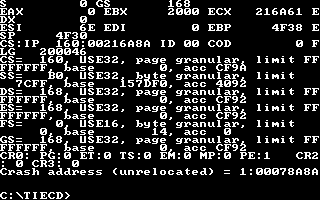

And then... crash.

As any programmer knows, the likelihood of new code working properly the first time is close to zero, and so it should be no surprise that the game crashed. The game's graphics scrolled off the top of the monitor and were replaced with the blocky text of the DOS4GW "crash screen" so familiar to gamers in the era before Windows gaming. Now we have the far more sophisticated Windows Error Reporting dialog, which enables the player to submit the crash report, though occasionally players see the dreaded Blue Screen of Death, which is remarkably similar to those of old.

After the crash, I leaped up from my chair and ran into Bob's office, yelling "That was awesooooommmme!" immediately followed by "...and I was kicking your ass!" So I was surprised to hear Bob's immediate rebuttal: to the contrary, he had been destroying me.

It took a few minutes for our jangled nerves to return to normal, but in short order we determined that not only did we have a crash bug but also a game-state synchronization problem, which I termed a "sync bug": the two computers were showing entirely different battles that, while they started identically, diverged into two entirely different universes.

Someone who hasn't worked on programming network code might assume that the two Warcraft game clients would send the entire game state back and forth each turn as the game is played. That is, each turn the computers would send the positions and actions for every game unit. In a slow-paced game with only a few board positions, like Chess or Checkers, this would certainly be possible, but in a game like Warcraft, with up to 600 units in action at once, it was impossible to send that volume of information over the network.

We anticipated that many gamers would play Warcraft with 2400 baud modems, which could only transmit a few hundred characters per second. Younger gamers who never used a modem should take the time to read up on the technology, which was little removed from smoke signals, and only slightly more advanced than banging rocks together. Remember, this was before Amazon, Google, and Netflix -- we're talking the dark ages, man.

Having previously "ported" Battle Chess from DOS to Windows, I was familiar with how multiplayer games could communicate using modems. I knew that because of the limited bandwidth available via a modem it would have been impossible to send the entire game state over the network, so my solution was to send only each player's commands and have both players execute those commands simultaneously.
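A back-of-the-envelope calculation shows how lopsided the trade-off was. Only the 2400 baud modem and the 600-unit limit come from the text; the other figures in this Python sketch (bytes per unit, turns per second, command size, command rate, ten bits on the wire per byte) are assumptions chosen to give an order-of-magnitude feel, not measurements from Warcraft.

```python
MODEM_BITS_PER_SECOND = 2400   # treating "2400 baud" as 2400 bits per second
BITS_PER_BYTE_ON_WIRE = 10     # assumed: 8 data bits plus start and stop bits
MAX_UNITS = 600                # unit limit mentioned in the article

BYTES_PER_UNIT = 8             # assumed: position, action, target, health
TURNS_PER_SECOND = 10          # assumed simulation rate
BYTES_PER_COMMAND = 6          # assumed: opcode plus a unit id and coordinates
COMMANDS_PER_SECOND = 5        # assumed pace of a fast human player


def modem_bytes_per_second():
    """Raw modem throughput in bytes per second."""
    return MODEM_BITS_PER_SECOND // BITS_PER_BYTE_ON_WIRE


def full_state_bytes_per_second():
    """Cost of sending every unit's state on every turn."""
    return MAX_UNITS * BYTES_PER_UNIT * TURNS_PER_SECOND


def command_bytes_per_second():
    """Cost of sending only the commands a player issues."""
    return BYTES_PER_COMMAND * COMMANDS_PER_SECOND


if __name__ == "__main__":
    print("modem capacity:", modem_bytes_per_second(), "bytes/s")
    print("full state:    ", full_state_bytes_per_second(), "bytes/s")
    print("commands only: ", command_bytes_per_second(), "bytes/s")
```

Under these assumptions the full game state needs about 200 times what the modem can carry, while a stream of commands uses a small fraction of the line.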

I knew that this solution would work because computers are great at doing exactly what they're told. Unfortunately, it turned out that many times we humans who program them are not so good at telling computers exactly the right thing to do, and that is a major source of bugs. When two computers are supposed to be doing the same thing, but disagree because of a bug, well, that's a problem.

A sync bug arises when the two computers simulating the game each choose different answers to the same question, and from there diverge further and further over time. As in time-travel movies like Back to the Future, small changes made by the time traveler while in the past lead to entirely different futures; so it was that games of Warcraft would similarly diverge. On my computer my Elvish archer would see your Orcish peon and attack, whereas on your computer the peon would fail to notice the attack and wander off to harvest lumber. With no mechanism to discover or rectify these types of disagreements, our two games would soon be entirely different.

So it was that the first game of Warcraft was a draw, but at the same time it was a giant win for the game team -- it was hella fun! Other team members in the office played multiplayer soon afterwards and discovered it was like Blue Sky, the pure crystal meth manufactured by Walter White in Breaking Bad. Once people got a taste for multiplayer Warcraft, nothing else was as good. Even with regular game crashes, we knew we were on to something big.

All we needed to do was get the game done.

Tragically, we soon made an even worse discovery: not only did we have numerous sync bugs, but there were also many different causes for those sync bugs. If all the sync bugs were for similar reasons, we could have endeavored to fix the singular root cause. Instead, it turned out there were numerous different types of problems, each of which caused a different type of sync bug, and each of which therefore necessitated its own fix.

When developing Warcraft, I had designed a solution to minimize the amount of data that needed to be transmitted over the network by only sending the commands that each player initiated, like "select unit 5", "move to 650, 1224", and "attack unit 53". Many programmers have independently designed this same system; it's an obvious solution to the problem of trying to synchronize two computers without sending the entire game state between them every single game turn.
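The command-only approach can be sketched as follows. This is a toy Python model, not the Warcraft implementation: the `Unit` and `Game` classes, the tuple command format, and the rule that each turn's commands are applied in player order are all assumptions for the example. The point it shows is that two clients that start from the same state and apply the same commands in the same order end up in the same state without ever exchanging that state.

```python
from dataclasses import dataclass, field


@dataclass
class Unit:
    x: int
    y: int
    hp: int = 10


@dataclass
class Game:
    units: dict                                    # unit id -> Unit
    selected: dict = field(default_factory=dict)   # player id -> selected unit id

    def apply(self, player, command):
        """Apply one command such as ("select", 5) or ("move", 650, 1224)."""
        kind, *args = command
        if kind == "select":
            self.selected[player] = args[0]
        elif kind == "move":
            unit = self.units[self.selected[player]]
            unit.x, unit.y = args
        elif kind == "attack":
            self.units[args[0]].hp -= 1
        else:
            raise ValueError(f"unknown command: {kind}")


def new_game():
    """Both clients build the identical starting state locally."""
    return Game(units={5: Unit(0, 0), 53: Unit(10, 10)})


def run_turns(game, turns):
    """Apply each turn's (player, command) pairs in a fixed player order.

    The sort is stable, so each player's own commands keep their order.
    """
    for commands in turns:
        for player, command in sorted(commands, key=lambda pc: pc[0]):
            game.apply(player, command)
    return game


if __name__ == "__main__":
    turns = [
        [(1, ("select", 5)), (1, ("move", 650, 1224))],
        [(1, ("attack", 53))],
    ]
    client_a = run_turns(new_game(), turns)
    client_b = run_turns(new_game(), turns)
    print("in sync:", client_a == client_b)
```

Only the short command tuples would need to cross the wire. The catch is that every rule in `apply` must behave identically on both machines, every time.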

These days, there are probably several patents retroactively trying to claim credit for this approach. Over time, I've come to believe that software should not be patentable; most any idea in software is something that a moderately experienced programmer could invent, and the definition of patents requires that patents be non-obvious. 'Nuff said.

I hadn't yet implemented a mechanism to verify synchronization between the two computers, so any bug in the game code that caused those computers to make different choices would cause the game to "bifurcate" -- that is, split it into two loosely-coupled universes that would continue to communicate but diverge with increasing rapidity over time.

Creating systems designed to detect de-synchronization issues was clearly the next task on my long list of things to do to ship the game!
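One common way to detect this kind of divergence is for each computer to checksum its game state every turn and compare the results. The Python sketch below illustrates that general idea only; it is an assumption about how such a check could look, not a description of what eventually shipped in Warcraft. The state layout, the function names, and the deliberately planted bug are all hypothetical.

```python
import zlib


def state_checksum(units):
    """CRC32 over a canonical text form of the state.

    `units` maps unit id -> (x, y, hp). Sorting by id makes the result
    independent of dictionary insertion order.
    """
    canonical = ";".join(
        f"{uid}:{x},{y},{hp}" for uid, (x, y, hp) in sorted(units.items())
    )
    return zlib.crc32(canonical.encode("ascii"))


def simulate(turns, bug_on_turn=None):
    """Run a toy simulation and record one checksum per turn.

    Unit 1 walks one tile east per turn. If `bug_on_turn` is set, this
    client wrongly moves it two tiles on that turn, standing in for any
    bug that makes one machine choose a different answer.
    """
    units = {1: (0, 0, 10), 2: (5, 5, 10)}
    checksums = []
    for turn in range(turns):
        x, y, hp = units[1]
        step = 2 if turn == bug_on_turn else 1
        units[1] = (x + step, y, hp)
        checksums.append(state_checksum(units))
    return checksums


def first_desync_turn(checksums_a, checksums_b):
    """Return the first turn whose checksums differ, or None if all match."""
    for turn, (a, b) in enumerate(zip(checksums_a, checksums_b)):
        if a != b:
            return turn
    return None


if __name__ == "__main__":
    mine = simulate(6)
    yours = simulate(6, bug_on_turn=3)
    print("first desync at turn:", first_desync_turn(mine, yours))
```

A check like this only says when the two universes split, not why, but knowing the exact turn narrows the search for the offending code considerably.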

You know the ending to this story: Warcraft "eventually" shipped only five months later. It seemed an eternity, because we worked so many hours each day, encountered so many obstacles, overcame so many challenges, and created something we cared for so passionately. I'll continue to explore those remaining months in future blog articles, but so much was packed into that time that it's impossible to squeeze those recollections into this already too-long post!
