What puts the F.E.A.R into game technologists? This Gamasutra interview with a Monolith duo discusses F.E.A.R. 2: Project Origin's tech underpinnings, from workflow to AI and beyond.

Seattle-based, now Warner Bros-owned Monolith Productions, whose history dates all the way back to 1997's FPS Blood, has had a long tradition of delivering immersive and well-regarded first-person experiences -- this generation coming up with both of the Condemned games, and F.E.A.R., one of the most successful attempts to blend horror and first-person shooting yet released.

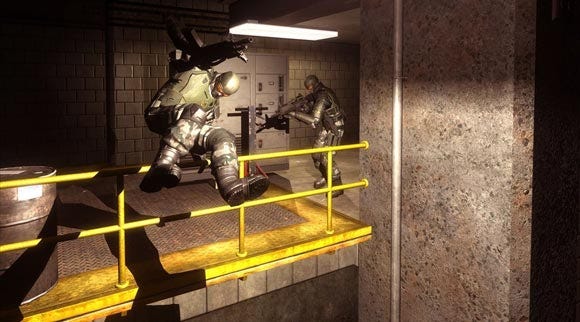

Now, the developers are working on F.E.A.R. 2: Project Origin, which begins about thirty minutes before the original game's ending. Players will again battle the paranormal manifestations of a wrathful and powerful psychic girl, taking on the role of a special forces agent who will have the same superhuman reflexes seen in the first F.E.A.R. The game is scheduled to release for PC, Xbox 360, and PlayStation 3 next February in the U.S. and Europe.

To find out more about the game's technological basis, we spoke to John O'Rorke, engine architect and principal software engineer for Monolith, and Matthew Rice, senior software engineer, AI, in a two-part interview.

What follows is a detailed look at the decision-making process at Monolith when it comes to tech; how features are decided on and become implemented, as well as a frank discussion of the future of current-gen engine technology.

It also examines the current state of game AI, taking in a discussion of the features of the latest iteration of Monolith's AI technology in F.E.A.R. 2, and what the future holds.

It sounds like there are a lot of pretty high level features; coming on from the prior game, what were your priorities? Was the engine improvement driven by the demand of what was going into F.E.A.R. 2? And, if not, what drove them?

JO: For F.E.A.R. 2, we really wanted to just crank up the destruction, and the overall visuals, and the environments, because that's really what made F.E.A.R. 1 unique and special. So we just wanted to take that and bump it to the next level, and so, it was dictated by the needs of the project.

We focused on "How do we get five to 10 times as many objects in spaces? How do we do some really cool new lighting stuff? How can we make the environments feel more real? And also make it run off of a console, with limited memory, and stream everything, and all that other fun stuff?"

Obviously, F.E.A.R. is a horror-based game, so atmosphere is very paramount for it. But to execute on horror, a multidisciplinary approach is required, because obviously the tech has to be ready to support these things, but art and design have a big say in what's scary, and how it's going to look. So how do you balance those disciplines when updating an engine, and picking and choosing what features to concentrate on?

JO: That's a really good question; that's something that a lot of people tend to overlook when analyzing technology. It really doesn't matter what the cool new feature is unless there's an adequate workflow for it. Technology development is expensive, but not nearly as expensive as content creation.

And so, there have been techniques, and research, and prototypes that have been done, that we've had to scrap just because there's no way in the world that we could create a workflow that would allow our artists to populate that and still stay within timeframe and budget -- but, I say that, though there are also a lot that have panned out, and resulted in some pretty cool techniques.

One of the things that we did for F.E.A.R. 2, for example, was textured volumetric rendering, and we were able to come up with a nice workflow for that, that was pushed by engineering -- just something that the graphics engineer and I were playing around with, and we came up with a technique, and we prototyped it, it turned out really well. We pushed it to the artists, and then they started incorporating it into a lot of spaces. They really help pull out the atmosphere.

And then there's always the converse, of course, where the artists say, "Well, what if we can do this," and then there's back and forth about, "Oh, okay, here's what you're really trying to do; here's a solution that we can put in there for that, and see how you'd like it to work for your workflow," and so it's very much a two-way street in terms of ideas and stuff like that.

F.E.A.R., obviously, and F.E.A.R. 2 are on this technology, but what other games from the studio have been running on this generation of your engine?

JO: It went F.E.A.R. -- F.E.A.R. was our kind-of new technology; new renderer, physics, and all sorts of stuff like that; Condemned was basically our migration onto the consoles, with the 360 launch title; Condemned 2 was an increment on the console technology and move onto the PS3; and F.E.A.R. 2 is a further refinement of all the graphics and performance, and a couple of new features that we're working on, too.

When you're talking about your engine development, obviously you guys have game projects going; your flow is that as a project gets towards wrapping, you start working on the engine improvements that are going to go into the next title. So, I'm assuming that's why you are able to incrementally list the upgrades that happen per each game title you just mentioned.

JO: Yeah, it's very much a leap-frogging of systems; it takes longer than a game project to rewrite all of these systems, so we pick the biggest systems, or what we really want to hone for the title, or what needs major extensions to support the game, and rewrite those for that title. Then those are usually pretty solid, so we switch to a different set of systems, and so on.

Obviously, graphics are paramount; you talked about how with Condemned 2, you moved onto the PS3, and then with the new version you're upgrading to the point where you are now. We're pretty far into the 360 at this point, and even the PS3 has at this point been out for a couple years; how do you see the progression of this generation? Is there a lot of room to improve the visuals, first of all?

JO: I wouldn't say "a lot". I think there's maybe 20 percent more improvement to visuals to be had; even internally, we've got some pretty good looking visuals, but we've identified areas where we can change underlying technology to allow the artist to push even more for future stuff. And we're making really good use of the hardware, so it's just a matter of finding new ways and clever ways to eke out that last 10 to 30 percent of the hardware.

I don't think you're going to see a radical change of visuals, except in the artistic arenas, as the games continue to look more and more alike due to similar horsepower, and limiting factors such as content creation time; games are probably going to try to use artistic styles and things like overlays, tints, and other effects to just stand out visually from each other.

Is there a place in the current generation where you see a lot of room for improvement, from an engine perspective, that's not visuals?

JO: Multi-threading. There's a lot of processing horsepower in there, but it's still taking a lot of time to figure out how to start using that, and that's something that's going to be an ongoing challenge for a lot of game companies.

And future technologies will increase the core counts dramatically; then you have to find ways to make the technology work across that. And that will, I think, open up some interesting opportunities, once we make it past that initial hurdle.

As that becomes available, what is the primary benefit of it, from a game engine perspective?

JO: Basically, it's going to allow for the games to continue scaling with Moore's Law. PCs have really stagnated, in terms of processing power. So, you're going to see continual rises in the number of physical objects, the amount of complexity within the scene; hopefully it will offer a lot more procedural and robust effects.

And, generally, the progression of technology in games is: there's one game that does a new effect in a really smoke-and-mirrors kind of way; like, "Oh, we've got fire! (But you can only view it from this angle, because it's a sprite...)" And then someone will build up on that, and make it a little bit more robust and versatile; like, "Oh, now you can have fire anywhere in a level! (But it's still just a sprite...)" And then, over the course of several more iterations, it will become fully robust and operate in all situations, and so on. And that's generally the evolution pipeline of new features and technologies.

By having more horsepower, it basically allows us to continue moving more and more stuff through that pipeline, such as fluid dynamics, or dynamic fire, and other things like that; increased physics. But unless we can overcome how to use all of these processors, we're going to quickly stagnate, and not be able to produce more and more features.

Do you guys use middleware? To what extent do you use middleware?

JO: We use Havok for our physics; we use Scaleform for our interface; we use Bink for video; and we use GameSpy for matchmaking on PC and PS3.

What are your feelings about integrating middleware solutions with your technology?

JO: I don't have a solid rule; I think it's a case-by-case basis. How much would it cost to develop internally? How much is it to license? What's the risk and stability of the company that we're licensing from? What's the pain of integration? And what's the value-add and opportunity cost? So, it's a very case-by-case basis.

In situations like Havok, it's kind of a no-brainer; it takes a lot of specialization to create a full physics simulation, and a lot of maintenance on that system, which can be very difficult to come by. And the pricing is pretty reasonable, and they've been around for a while; they've got a pretty competitive product, and good support, so, we went with them.

We've turned down other packages, though, because we were concerned that the company may not be around in three years, they may not be able to provide us updates in a reasonable manner, their pricing was ridiculous compared to the engineering and maintenance that would occur, or their solutions just weren't that complete.

Some developers have a very philosophical bent on whether or not to use middleware; it sounds like you're very practically driven, though.

JO: Yeah. I mean, at the end of the day, it's "What's going to make the best game, and best utilize our resources?" Because if we have to spend three engineers doing a mediocre physics simulation, then have to have those three guys constantly maintaining and optimizing it, or we could have those three guys implement something that is really cool and unique, and then just implement physics -- not that that's trivial, by any means, but it's not three engineers' time -- then the game is going to be better. So, yeah, we try to be pretty practical, here.

And the same thing, when it comes to deciding what features you're going to update, it must be driven very much by practical considerations, as much as it is driven by a blue sky of what you'd like to see. How do you prioritize?

JO: One: Where do we want to be as a company; what do we want to own? And we have to have that in order to deal with larger types of investments, such as, for example, if we really want to own AI, we need a large AI staff, and we need to do years and years of development which is impractical for a single game title. So we need to have an understanding of long-term priorities, and road maps.

And then we try to identify games, and make sure that our priorities are in line with that, and meet the priorities of the game -- which systems are causing us the most pain -- and come up with, basically, a list, and do what we can; match the resources to them. So it's pretty practically-based, but there are a lot of factors that go into deciding it.

All of the titles that you've been developing recently, and also in terms of your company's history, for the most part seem to be first person titles. How much of that is a priority in terms of your technology, and how much of that is just a priority in terms of your company's overall core competency and what people like to make?

JO: I'd say primarily core competency. There are some differences in technology between, say, first and third person games, but not a huge amount, so we are looking to expand out in the future a little bit more. But, you know, we've made pretty good first person shooters; that's what people keep paying us to make. So, we haven't really had to break the mold too much, in terms of that, and, you know, if you're good at something, stick with it...

The biggest chunk of features on the list you provided about the engine is graphics-oriented.

JO: The main reason for that is that it tended to be what people care about and see. There's a huge number of underlying system things, like memory management, performance tracking, streaming, threading, etcetera, but people generally don't see those, and therefore, you know, out of sight, out of mind.

Do you guys do any data-mining on gameplay, to find out how users are interacting with the game -- either from a playtest perspective, or even in the retail product?

JO: We do. We do in the play test; we don't do any in the retail right now. It's something we've been doing over the past year or two, and it's a really great trend in the industry. There's a huge amount of information to be had, and some of it is so obvious once you have the data sitting right in front of you, but can be so difficult to spot when you're just not looking for it.

Tracking things like player death, when they use health packs, where they shoot, damage. We track a whole bunch of other stuff that is recorded during playtesting, and then we can analyze that information.

We have an on-site play test lab, and they do a lot of really interesting stuff, where they record the people, they ask them questions, have them fill out their excitement level at different stages, and stuff like that; and over time, you get very, very clear graphs of fun. It's turning fun into a relatively objective data point. And we've been able to use that to really make the game quite a bit better.
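As a rough illustration of the kind of playtest tracking O'Rorke describes, here is a minimal Python sketch that tallies logged events by level area. The event names, area names, and the `count_by_area` helper are hypothetical, chosen only for illustration; they are not Monolith's tooling.

```python
from collections import Counter

# Hypothetical playtest log: (event_type, level_area) pairs.
EVENTS = [
    ("death", "lobby"),
    ("death", "atrium"),
    ("health_pack", "atrium"),
    ("death", "atrium"),
    ("shot", "lobby"),
]

def count_by_area(events, event_type):
    """Count how often one event type occurred in each level area."""
    return Counter(area for kind, area in events if kind == event_type)

# Areas with unusually many deaths are candidates for a closer look.
deaths = count_by_area(EVENTS, "death")
```

Sorting such a counter with `most_common()` is one simple way to surface the spots where players struggle.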

It's kind of funny, but I think that's true; it sounds a little weird when you say that -- "turning fun into an objective data point" -- but I think there's definitely an element of that. It's becoming clear that fun is something that you craft, so of course there's logical improvements to it. I mean, the initial spark of inspiration, or great ideas, drive the creativity of a product, but it's the ability to actually tweak and improve it that can turn it into something really special.

JO: And I still think there's a key difference in the type of stimulus you can receive from a game, whether that's intellectual creativity, or adrenaline-pumping excitement, but once you get down to that, there's obviously some very clear cut ways to measure that, and to determine if you're succeeding in them.

What do you see? What is "an improvement" to AI? What is your expectation of where AI is going, and what is achievable within this generation?

MR: I think you've seen a couple different things that have happened in this generation, and they'll continue on. In terms of smaller, tactical scale AI, you've seen mild improvements in terms of the way they plan, and the way they challenge you in combat. But even more than that, they look better while navigating, and they look better while moving through the space. Navigation has increased across the board, and the way they interact with the environment has improved across the board.

Globally, across the entire games industry, you've seen more AI in games. Games like Assassin's Creed. We're getting to that level where you're moving through large throngs of crowds. You just didn't see that before; in previous generations, you'd step into a nightclub and it'd be barren, devoid, but now you're actually seeing fully populated cityscapes.

So there have been big improvements in AI, in general, this past generation. There's only so close that you can get before you get to the uncanny valley, in terms of games, and I think we're approaching that right now. So, there will be a big leap at some point soon, when things such as the great small-scale tactical AI that a lot of games currently have, gets integrated with the crowd AI that you're seeing.

And you're also seeing other things, like people are experimenting with AI spawning, and spawning dynamically based on the player's condition and health, to try and tempo the game differently every time you play it.

You're talking about stuff like what Left 4 Dead is attempting.

MR: Yeah, exactly. We've talked about that here at the office, and that's some place where we see an aspect of AI developing.

More than just governing the way characters behave, and the way NPCs behave in the game, AI could be more impressively used, or maybe more effectively used, in things like that.

MR: In terms of making an individual AI encounter better? To some extent, we're reaching the same problems that we're having in graphics. Every generation, the technology gets better, right? We're supposed to be able to push more polygons; we're supposed to have more shaders on the screen. The problem with that being that you now have to have artists create X amount more content. So I think we're going to start seeing, in terms of graphics, you're starting to see a slowdown. Between the PS1 and the PS2, there was a huge leap, I feel, in graphical fidelity; and then less so between the PS2 and the PS3.

And I think we're kind of reaching that same point with AI. I mean, we can make the AI incredibly much more complicated, but it still requires animators to create thousands of animations, versus hundreds of animations; it requires the character artists to create far more detailed maps, and when you create far more detailed character maps, players expect full facial animation, which requires even more artist content.

And then the AI needs to know more about the world, in order to behave that much better, so that means that the level designers spend a lot more time carpeting a level with AI hints. So, one of the big events that I think we'll see soon is a lot more automation in the way that AI is placed in the game. Which doesn't necessarily mean a direct influence on the way the player perceives it, but it'll be much easier for the game makers to make the game, which means that they'll be able to focus more time on improving the AI in other areas.

Is some sort of proceduralization really what is required to stay in step?

MR: Oh, yeah. I definitely feel that. Left 4 Dead is, obviously, leading the charge on that. For instance, an example is the way nav meshes are made. AI moves throughout the world based on where these nav meshes are placed; and it used to be, four or five years ago, that everyone placed nav meshes manually. You had to have a level designer go in there and put each little polygon into the world, and that polygon represented a space that the AI knew about, and could navigate through.

Now we're seeing these things happen more dynamically. Basically, there are companies out there that are developing tools that they're using internally, in which the level designer doesn't have to add a nav mesh at all; it just carpets the area with a nav mesh for any given AI size.
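To make the idea of generating navigation data "for any given AI size" concrete, here is a minimal Python sketch. It is a simplification under stated assumptions: it uses a grid of cells rather than the polygon nav meshes Rice mentions, and the map format, `GRID`, and `walkable_cells` are hypothetical names, not any particular tool's API.

```python
# Hypothetical level layout: '.' is open floor, '#' is an obstacle.
GRID = [
    ".....",
    ".....",
    ".....",
    ".....",
    "....#",
]

def walkable_cells(grid, radius):
    """Return the cells where an agent needing `radius` cells of clearance fits.

    A cell qualifies only if every cell within `radius` of it (in each
    direction) is inside the map and free of obstacles.
    """
    rows, cols = len(grid), len(grid[0])
    result = set()
    for r in range(rows):
        for c in range(cols):
            fits = True
            for dr in range(-radius, radius + 1):
                for dc in range(-radius, radius + 1):
                    rr, cc = r + dr, c + dc
                    inside = 0 <= rr < rows and 0 <= cc < cols
                    if not inside or grid[rr][cc] == "#":
                        fits = False
            if fits:
                result.add((r, c))
    return result

small_agent = walkable_cells(GRID, 0)  # every open cell
large_agent = walkable_cells(GRID, 1)  # only cells with one cell of clearance
```

Running the same pass with a larger radius yields a smaller walkable set, which is the sense in which one level can be covered automatically for several agent sizes.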

Same thing with animation: a huge part of what makes the AI look smart is the animations, and it used to be that animation systems were, you can play one single animation, and then it would play the next single animation; and if you wanted to play one animation that blended nicely into another animation, you either had to line the animations up perfectly, or you had to have some cheap generic blend.

Now we're seeing animation systems come online, both middleware packages and solutions that companies are making proprietary internally, that are much more complicated; they will actually take into account what the AI is doing, like whether or not he's attempting to lean into the turn, or whether or not he's slowing down from a massive run, and choose animations appropriately. So, that's a form of automation that, to have that same effect, would have taken a character artist and an animation engineer a great amount of time for each individual character, because of the large number of animations that we have.

So there's automation coming. I don't know if we'll see automation in terms of the behavioral aspects of AI, in terms of what the AI decides to do; more automation in "the AI has decided to do these things, and it can now do these things better".

Something that was discussed is destructibility of the environments. In F.E.A.R. 2, how destructible are the environments, and how does that impact the AI's behavior?

MR: We fill the space with a lot of particles. We blow apart geometry, in terms of like, holes being blown out of walls, corners being taken out of pillars and whatnot, as well as different destruction levels for different prefabs in the world. For example, a car will have many different states that it will go through.

What you won't see in this game, and what you won't see in a lot of games, is a lot of physical pieces that fly around the environment and are very large. We certainly have bottles and cans and stuff that fly around, that don't really impact the AI.

So, you notice in a lot of games -- Gears 2 -- it's actually pretty sparse in the environment. That's because -- well, I imagine there are a lot of reasons, but one of the reasons is because the AI traditionally has a difficult time of dealing, intelligently, with a car that has just moved ten feet and is now in its nav mesh. And, again, these are things that have solutions that are coming online; both middleware is providing it as well as we internally are developing, working on systems so that the AI will intelligently handle such problems.

Returning to dynamic systems, as in Left 4 Dead, what about dynamic difficulty systems?

MR: In things like Left 4 Dead, and other titles, you will see the AI, if they see the player struggling with combat, they will perhaps spawn fewer of the AIs; perhaps spawn fewer of the difficult AIs, like you'll see fewer tanks, perhaps. I've actually seen, on Left 4 Dead servers, in playing, all of a sudden, when I'm playing in advanced, it kicks me down to normal because I'm not performing very well.

So those are the things that I think we'll see. Because the goal is to not punish the player; everyone agrees that no player wants to be punished. It's fun to get defeated every once in a while, and change your tactics up, but it's unfun to just be like water breaking against rocks the whole time.

Is it more challenging to implement?

MR: Not necessarily. You just really need to keep heuristics across gameplay sections, of how the player's doing. I think what you'll see is the difficulty levels, perhaps the three different difficulty levels that you're used to seeing, will still be there, but they'll just be hidden behind the scenes.

As a designer, we'll still have the hit points for this character, and the accuracy of this character, whether or not they play it on easy, medium, or hard. All that you'll see is, it'll be completely opaque to the player what difficulty level he's playing on. The game will dynamically switch between hard, easy, and medium, depending on what he's doing.

And, really... you never know what a game's difficulty levels are between developers. And, there are harder sections of the game than the beginning of the game, so what may be fine for you at the beginning, is not fine for you later in the game.

Or maybe there's a particularly poorly designed area in the game that is a lot more difficult than the rest of it; in which case, it would be nice to go, you know, "You've died three times in this area; we're going to kick you down to medium for let's say the next few minutes, and maybe we'll bump you back up to hard after a little bit." But don't tell the player about it. The point is that the player just wants to feel successful, and that he's moving through the content.
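The hidden switching Rice describes could be sketched roughly as follows in Python. This is an illustration under assumptions, not F.E.A.R. 2's logic: the thresholds (three deaths to step down, two clean sections to step back up), the use of cleared sections instead of elapsed minutes, and the `HiddenDifficulty` class are all hypothetical.

```python
LEVELS = ["easy", "medium", "hard"]

class HiddenDifficulty:
    """Tracks how the player is doing and quietly adjusts the active level."""

    def __init__(self, chosen="hard", deaths_to_drop=3, sections_to_raise=2):
        self.ceiling = LEVELS.index(chosen)  # never exceed the chosen level
        self.current = self.ceiling
        self.deaths_to_drop = deaths_to_drop
        self.sections_to_raise = sections_to_raise
        self.deaths_in_section = 0
        self.clean_streak = 0

    @property
    def level(self):
        return LEVELS[self.current]

    def on_player_death(self):
        self.deaths_in_section += 1
        self.clean_streak = 0
        # Step down one level after every `deaths_to_drop` deaths in a section.
        if self.deaths_in_section % self.deaths_to_drop == 0 and self.current > 0:
            self.current -= 1

    def on_section_cleared(self):
        # A section cleared without dying counts toward stepping back up.
        if self.deaths_in_section == 0:
            self.clean_streak += 1
            if (self.clean_streak >= self.sections_to_raise
                    and self.current < self.ceiling):
                self.current += 1
                self.clean_streak = 0
        self.deaths_in_section = 0
```

Nothing here is surfaced to the player; the rest of the game would simply read `level` when it looks up hit points or accuracy.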

Do you find that, beyond things that are obvious -- we talked about hit points, accuracy -- there are untapped difficulty managing solutions?

MR: One thing is grenades. If a player is hunkered down in a particular location, we'll have the AI throw more grenades. That's one area where we could temper down; the AI could throw fewer grenades if you are in an easy setting, so you'll have more time to hunker down.

In a game like F.E.A.R. 2, when you activate slow-mo, there's a certain ratio of how fast the player moves versus how fast the AI moves. That's something that could be adjusted as well. Maybe if you're on easy, the player moves faster relative to how the AI moves, so you're more successful.

Another thing is penalties for death. If you have a checkpoint saved, and you have half your health, you progress forward. If you die, maybe if you're on an easier difficulty level, we will refill your health for you when you reload that checkpoint -- even though you only had half your health when the checkpoint happened.
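The three knobs mentioned here (grenade frequency, the slow-mo speed ratio, and health on checkpoint reload) could be gathered into a per-level tuning table. The Python sketch below is only an illustration; every number, field name, and the `health_after_reload` helper are made up for the example and are not values from the game.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Tuning:
    grenade_interval_s: float      # minimum seconds between AI grenade throws
    slowmo_ai_time_scale: float    # AI speed relative to the player in slow-mo
    refill_health_on_reload: bool  # top up health when a checkpoint is reloaded

# Hypothetical values: easier levels mean fewer grenades, slower AI in
# slow-mo, and a health refill after dying.
TUNING = {
    "easy": Tuning(20.0, 0.25, True),
    "medium": Tuning(12.0, 0.35, False),
    "hard": Tuning(6.0, 0.50, False),
}

def health_after_reload(level, checkpoint_health, max_health=100):
    """Health the player gets when reloading a checkpoint at this level."""
    if TUNING[level].refill_health_on_reload:
        return max_health
    return checkpoint_health
```

With this table, a player who saved at half health would come back at full health on easy and at half health on hard.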

I was wondering about AI squad behavior as well.

MR: We have a system where what we call "activity stats" basically looks at the environment, looks at where the player is, looks at where the AI is, and decides, "You know, it would be really beneficial for the enemy AI if enemy A would go to this cover node, and enemy B would go to this cover node, and we'll try to flank him." So it's like a way of coordinating the AI to fight against the player effectively.
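One way to picture this kind of coordination is a small coordinator that hands out cover nodes so that no two enemies claim the same one and later picks are pushed toward a different side of the player. The Python sketch below is a guess at the general shape, not Monolith's system; the scoring formula, `spread_weight`, and all names are assumptions.

```python
import math

def bearing(origin, point):
    """Angle of `point` as seen from `origin`, in radians."""
    return math.atan2(point[1] - origin[1], point[0] - origin[0])

def angular_gap(a, b):
    """Smallest absolute difference between two angles."""
    diff = abs(a - b) % (2 * math.pi)
    return min(diff, 2 * math.pi - diff)

def assign_cover(player, enemies, nodes, spread_weight=4.0):
    """Greedily give each enemy an unclaimed cover node.

    The score is travel distance, reduced when the node lies at a
    different bearing from the player than nodes already claimed, which
    nudges the squad toward flanking positions.
    """
    claimed = {}  # node name -> bearing from the player
    orders = {}
    for enemy, pos in enemies.items():
        best, best_score = None, math.inf
        for node, node_pos in nodes.items():
            if node in claimed:
                continue
            score = math.dist(pos, node_pos)
            if claimed:
                angle = bearing(player, node_pos)
                gap = min(angular_gap(angle, other) for other in claimed.values())
                score -= spread_weight * gap
            if score < best_score:
                best, best_score = node, score
        if best is not None:
            orders[enemy] = best
            claimed[best] = bearing(player, nodes[best])
    return orders

orders = assign_cover(
    player=(0, 0),
    enemies={"A": (6, 0), "B": (6, 2)},
    nodes={"left": (-5, 0), "right": (5, 0), "right2": (5, 1)},
)
```

In this example the first enemy takes the nearest node and the second is sent to the far side of the player, even though a closer node is free.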

Is that engrossing for the player? Is that how you look at it, when it comes to making these cooperative AI? It seems to be the general gist; it presents more challenge, and it makes the game feel more realistic, because the enemies are working together.

MR: Yeah, well, you definitely want that sensation. I mean, you definitely don't want a guy standing out in the middle of the open, and you want the feeling of "These guys are working against you." Particularly, we have callouts, which are just the things that the AI says, and it's great when you see a guy get damaged, and he says, you know, "I'm down!" and the other guy yells, "I've got your back!" and he will move in to attempt to cover him. It just feels like you're fighting against a more intelligent enemy.

Can you tell me what the "goal-oriented action planning system" is?

MR: Right, G.O.A.P.S.! That's the way that the AI decides what behaviors to use. A standard -- one of the more standard methodologies for choosing behaviors in games is what's called a "state machine"; basically it's just a linear chain of actions that the AI designer has said, "You will do these things in this situation," and he goes ahead and does those things. It doesn't necessarily allow the AI to be very flexible.

So we have this goal-oriented system that involves two aspects: There is a list of goals that the AI wants to accomplish -- a typical goal would be "kill enemy"; another one would be, "get to cover"; a whole bunch of those things. The goals don't actually do anything aside from make the AI decide that he wants to do these things. There is a flat list of actions that the AI will choose from, and they will solve for these actions backwards.

For instance, let's say that the AI wanted to kill the enemy. That would mean that there are a whole bunch of actions that satisfy the requirement for there being a dead enemy; let's say, "Attack with ranged weapon", right? He has a ranged weapon. That's a pretty easy chain right there, like, "I want to kill somebody; this action kills somebody; I'll go ahead and do this action."

Where the power comes from is the fact that those actions themselves can have conditions that they need to have met. So, "attack with ranged weapon" may have conditions that say, "I have to have a weapon, and I have to have it loaded. Go find me more actions that satisfy those requirements." The AI just decided at this point that he's going to attack with a ranged weapon; he now has to figure out how he can get a ranged weapon, and how he can get it loaded. So, at that point, he may find another action, which is "go to this weapon", and then he may find another action which is "reload your weapon".

So, that whole chain that I just described to you, of him doing three things in a row -- which is going to pick up a weapon, loading a weapon, and then going to attack the player -- that was not a directed thing that the level designer, nor that the AI engineer had to program; it was just the fact that we have these aggregate actions that the planner can pick from at will. Does that make sense?
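The backward chaining Rice walks through can be sketched in a few lines of Python. This is a minimal illustration assuming actions only ever add facts to the world state; the action names follow his examples, but the `Action` type, the fact names, and the `plan` function are hypothetical and are not the game's planner.

```python
from typing import NamedTuple

class Action(NamedTuple):
    name: str
    preconditions: frozenset  # facts that must hold before the action runs
    effects: frozenset        # facts the action makes true

# Hypothetical flat list of small, reusable actions.
ACTIONS = [
    Action("attack_with_ranged_weapon",
           frozenset({"has_weapon", "weapon_loaded"}),
           frozenset({"enemy_dead"})),
    Action("reload_weapon", frozenset({"has_weapon"}), frozenset({"weapon_loaded"})),
    Action("go_to_weapon", frozenset(), frozenset({"has_weapon"})),
]

def plan(goal, state, actions, depth=8):
    """Return action names that achieve `goal` from `state`, or None."""
    unmet = goal - state
    if not unmet:
        return []
    if depth == 0:
        return None
    fact = min(unmet)  # pick one unmet fact to solve for
    by_name = {a.name: a for a in actions}
    for action in actions:
        if fact not in action.effects:
            continue
        # Work backwards: first satisfy this action's own preconditions.
        prefix = plan(action.preconditions, state, actions, depth - 1)
        if prefix is None:
            continue
        steps = prefix + [action.name]
        after = set(state)
        for name in steps:
            after |= by_name[name].effects
        # Then solve whatever part of the goal is still unmet.
        rest = plan(goal, frozenset(after), actions, depth - 1)
        if rest is not None:
            return steps + rest
    return None

unarmed = plan(frozenset({"enemy_dead"}), frozenset(), ACTIONS)
armed = plan(frozenset({"enemy_dead"}),
             frozenset({"has_weapon", "weapon_loaded"}), ACTIONS)
```

Nobody scripts the three-step chain for the unarmed case; it falls out of the preconditions, while an armed character gets the one-step plan from the same action list.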

Yeah, it does, totally. How complicated can those actions get? I mean, obviously, some of it's limited by the nature of the game -- obviously, guns, grenades, cover.

MR: Yeah. The actions themselves, the individual actions that the AI can take, we try to keep them pretty small and pretty atomic, this way they can be used by other goals, let's say. I mean, the "go-to node" action can be used by a lot of different goals; it satisfies a lot of different things. And you're talking about how complex an actual chain can be?

Obviously, it makes a lot more sense for, as you said, the individual actions to be as granular as possible, but how complicated can these overarching goals get?

MR: More often than not, they are not complicated. That's the baseline. I mean, more often than not, he has a weapon, so all he needs to do is he needs to get to cover, and he needs to shoot at you from cover.

It's the unique situations that we have that have more complex chains -- like, you know, we didn't have to code anything in order for him to pick up a weapon, so if the AI happens, let's say, to throw an incendiary grenade at the player: As part of the "I'm on fire" behavior, he drops his weapon. We didn't have to go in there and code anything for him to go pick up his weapon; he just knows to go pick up his weapon because he wants to kill the enemy, and that's something in that plan.

There are more complicated behaviors, from an architectural standpoint, that don't necessarily seem complicated from the player's point of view. So we may have a complicated chain of like four or five different actions that happen in a row, but from the player's point of view, it's really just him displacing to another cover node, patting down an ally who had been on fire, or reacting to a shock grenade. They can get complicated behind the player's back, but it doesn't necessarily look that complicated to them.

That's the best outcome, actually, to a player, isn't it? That's kind of the weird thing about AI. You're probably doing your best job when the player doesn't notice it.

MR: Oh yeah. I mean, if the player notices the AI, there's two cases: Either he's done something great, or he ran against the wall for the last three seconds. And, generally, good AI is not noticed; great AI is noticed, but that's far more rare.

I think we've made some substantial improvements from F.E.A.R. 1 to F.E.A.R. 2 -- that does not necessarily mean that the AI is any more difficult to kill, it just means that the environment is richer, and the player is more engaged in the combat. Because, I feel, we've made the AI seem a little bit more realistic. He's not more difficult, he's just more realistic.

You do, to an extent, have some companion-type AI characters who fight alongside you in games, and that's also been a little bit touchy in general. There are a lot of complicated issues there, whether it's how effective they are, how effective they aren't -- intentionally, or accidentally.

MR: Yeah. I mean, one of the main problems is that it's difficult for the AI system to understand the verbs of the player, or player intent, you know? There are some games -- I feel like Rainbow Six does it particularly well, and we do too -- if you narrow the focus of the engagement, so that pretty much the only thing you can do is stand on one side of the environment and fight the AI on the other side... then it becomes very successful, because the AI really knows what the player is trying to do: he's trying to kill the bad guys.

Halo does not have this problem, but one example of a problem that other games have is, when an AI tries to jump into a Warthog, it's simple stuff like, "Does the player want to jump into the driver's seat? Or does he want me to jump out of the driver's seat so that I can jump on the turret?" You know, it's just this complicated thing where the AI is trying to magically figure out what the player is trying to do.

Multiplayer players have problems with that, too! (laughs)

MR: Yeah, exactly. It's a problem overall. Hopefully it's mitigated with multiplayer by voice chat -- and perhaps that's something that we'll see. Like I said, I think Rainbow Six and those do it kind-of well, and I think that's because the actions that the companion AI does are directed by the player. Like, the player explicitly tells the AI: "Go break down this door!" It's not up to the AI to decide whether or not that happens. So, if there's some direction that the player can give the AI, I think that the problems get a lot smaller.

This article was first published December 18, 2008. It has been updated in 2024 for formatting.
