I spent the last two months teaching a bot to create illustrations for Magic: The Gathering cards designed by another bot. How I built Urza's Dream Engine to generate art for RoboRosewater cards and the tournament that humans played with automated cards.

[This post originally appeared on my blog]

Urza’s Dream Engine is a neural network project I’ve been working on for a few months. It’s a bot that creates art in the style of Magic: The Gathering cards. It began as an effort to create art to go with the cards created by the amazing Twitter bot, RoboRosewater, and grew into its own beast. It was my first foray into machine learning as well as my first project focused on producing still images.

You can see some of the output on the site for the project here: http://andymakes.com/urzasdreamengine/

Although Urza’s Dream Engine grew a life of its own, it culminated in a booster draft, held at Babycastles in NYC on Nov 18th 2017, using the RoboRosewater cards paired with my bot’s art. This was a game played by humans, but designed and illustrated by machines. In this post I’ll go through my process step by step starting from the initial idea to the Babycastles event.

The Urza’s Dream Engine site has downloads for the images, cards, and print-and-play booster packs used at the Babycastles event.

This whole project started because I was enamored with a Twitter bot called @RoboRosewater. I certainly wasn’t the only one; as of this writing, the account has 23,500 followers. RoboRosewater is a neural network bot created by Chaz and Reed that has been running since 2015. Each day it posts a new Magic: The Gathering card generated by a machine learning algorithm. Basically, it is a program that has been trained on all of the existing MTG cards (roughly 30,000 over the course of the game’s two-decade run) and which now attempts to create new ones in the same vein. The bot itself only generates the text for these cards.

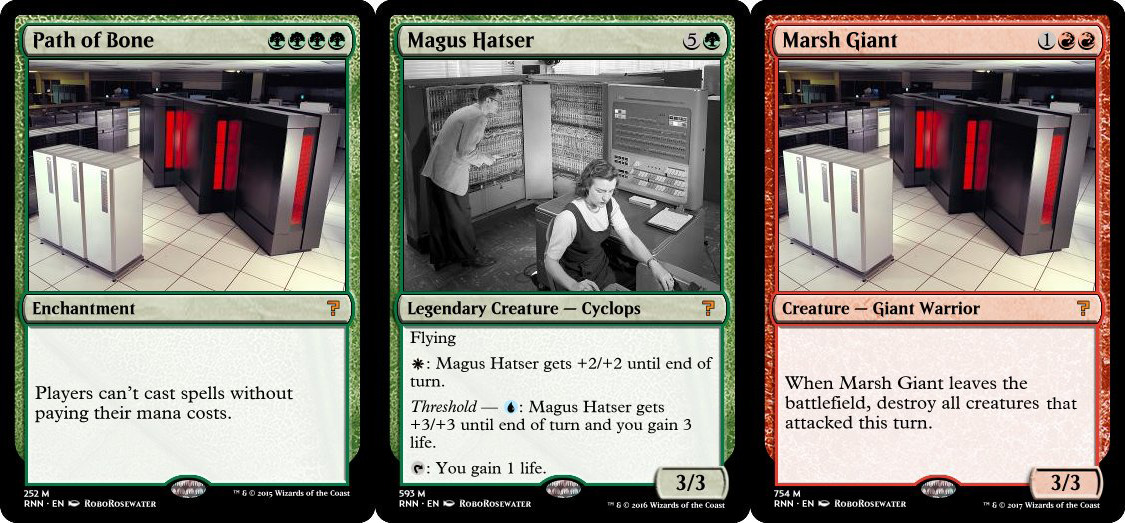

Most of the cards it makes are borderline nonsense (but still very fun), but a surprising amount of the output is actually playable. Maybe broken in the sense that the card is too strong or too weak, but actually legal within the (fairly complex) rules of the game. Even these legal cards are still alien, like a glimpse into a bizarre alternate reality of the game. Some are amusingly pointless (like a card that states that players must pay the cost of the spells they cast). Others staple three seemingly unrelated abilities together. And some have genuinely interesting effects that as far as I know have never been used in the game’s long run. If you like Magic or bots I highly recommend following this account.

Given my interest in exploring unusual parts of the game in my Weird: MTG series, holding a RoboRosewater booster draft seemed like a natural fit. I had something of a convenient problem though: the cards posted on the RoboRosewater account use stock images of computers as their art. They basically all looked the same, and trying to play with them would be hugely difficult as card art is an important identifying feature, especially when playing with a new set. Imagine having to read 20 identical cards on a table every time you are thinking about your next move.

I say a “convenient” problem because I am usually keen to try a new project, and the challenge of creating illustrations for these cards sounded exciting. The text was generated via automation, so I knew the images should be as well. Early on I thought about trying to create an openFrameworks sketch that would cut up and recombine the existing art from the game, but it didn’t feel quite right. The cards were created by a neural network so the art should be as well. I’d never messed around with neural networks myself, but I was as blown away by Google’s Deep Dream algorithm as anybody else, so now seemed like the time to learn!

I’m a total script kiddie when it comes to machine learning. People get their PhDs creating and working with these techniques. I’m a game designer who likes to tinker. As a result, I tried setting up a lot of different neural nets on my laptop (non-GPU enhanced, but I wasn’t going to let that stop me). With most, I ran into some roadblock after 5 or 6 hours that couldn’t be solved by importing a new Python library, and I’d move on to the next one. Finally I had success with a Tensorflow implementation of Deep Convolutional Generative Adversarial Networks by Taehoon Kim. I followed the instructions on the GitHub page and trained the bot on some sample images of faces. After tweaking some of the source code to work on my machine, I produced a set of my own slightly-off faces and I was off to the races.

Part of how neural networks function is that before they can attempt to replicate a style, they must first train on an existing dataset to learn what the thing is. This dataset is no trivial thing. In order to work well, the data must be ordered (being of similar type and presented in the same way) and there must be a lot of it. The sample set that came with Kim’s Tensorflow implementation contained 200,000 images of celebrities–all from the neck up, facing the camera and cropped to be the same size.

Fortunately, the sheer volume of data associated with Magic: The Gathering is huge, probably rivaled only by major sports like baseball. It is the first & longest running collectible card game. It first launched in 1993, and Wizards of the Coast, the company that produces it, has released multiple new sets every year since then. This means that there are currently over 30,000 unique Magic cards. This pool, while small in terms of what would be best for neural net generated art, is still larger than any other game I could hope for. Of course, the art in the game is also not nearly as uniform as the celebrity data set I described above, but I didn’t need perfect output. I was ok with things getting weird and I wanted to see what the bot could do.

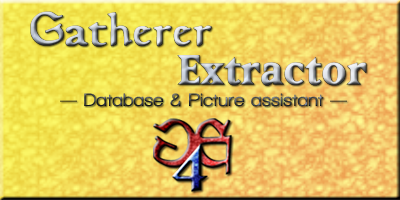

First things first, I needed to get that data. Luckily, I am not the first person who wanted to scrape info from all existing MTG cards. There is a piece of software called Gatherer Extractor that will download text and images within certain parameters from Wizards’ official MTG online card database, Gatherer. This software, released for free by MTG Salvation user chaudakh, prepares an Excel spreadsheet file with all of the requested information and can also download the card scans (the image showing the full card). To my delight it also had a check-box to crop the card image to just the art, saving me a Python or openFrameworks script to do the same thing.

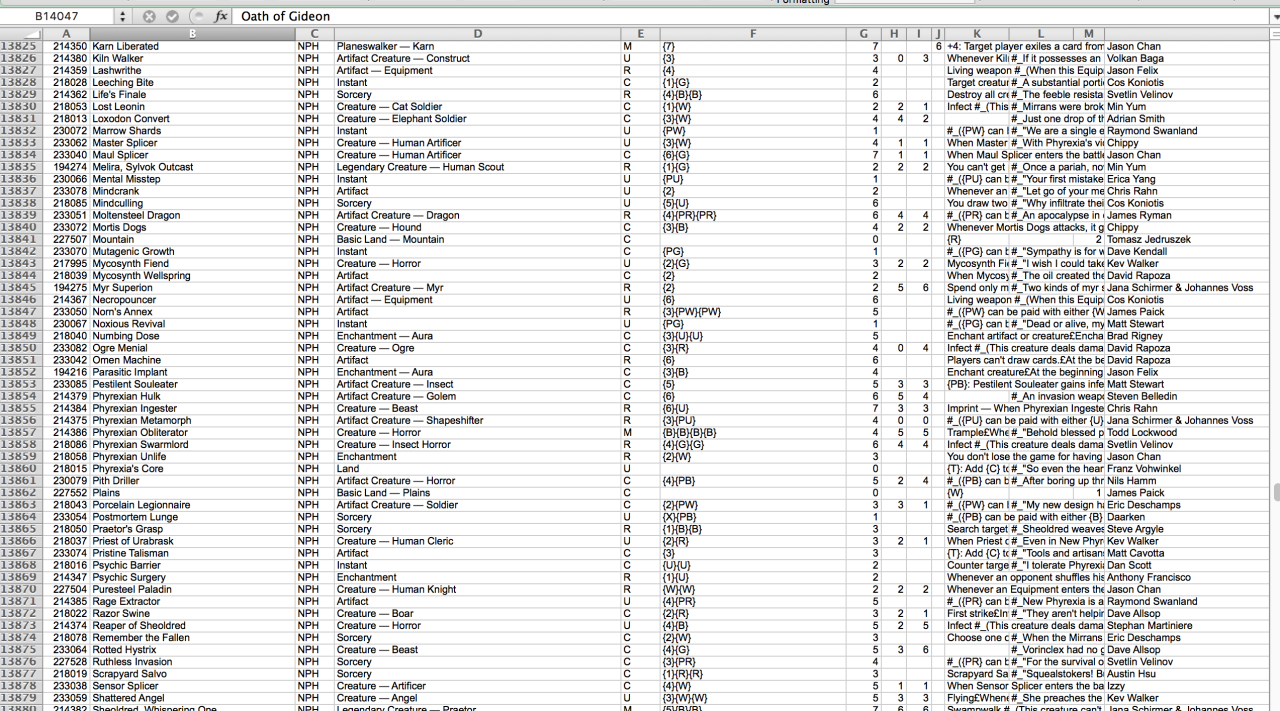

I tested Gatherer Extractor with a single expansion set, and after seeing that it did what I wanted, I set it to work pulling the card text and image of every card in the game. The resulting spreadsheet makes my computer chug a little bit, but it had everything, cross-referenced with the image associated with each card.

Card art in Magic: The Gathering is dependent on a lot of things, but there are some game factors that are frequent indicators of aspects of the illustration. Card color is a big one. Red cards tend to lean toward warm colors in their palette, blue cards toward cool colors, etc. Creatures also tend to look different than artifacts, which look different than sorcery spells (or so I thought–more on that in a bit).

Initially I had hoped to have the bot simply train on all of the card text and art so that it could learn the difference between, say, a red creature and a white enchantment itself, but I soon realized that this was more than I could do with my setup (or at least with my experience level). It became clear that the best course of action was to sort the art beforehand by whatever metric I wanted to use, and then have the bot train only on those images. To do this, I built a simple Python script that would let me set some parameters (for instance “blue creatures” or “enchantments”) and would then scan the spreadsheet to find all cards that met the criteria. For each card that fulfilled the search query, the image associated with that card was copied to a new folder to use as the training set.
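The script itself is not shown in this post, so what follows is only a minimal sketch of the idea, assuming the spreadsheet has been exported to CSV. The column names (`color`, `type`, `image_file`) and the function names are hypothetical and do not reflect Gatherer Extractor's actual output format.

```python
import csv
import shutil
from pathlib import Path


def matches(card, color=None, card_type=None):
    """Return True if a card row satisfies every filter that was given."""
    if color is not None and card["color"].strip().lower() != color.lower():
        return False
    # Type lines look like "Creature - Goblin", so a substring check is enough here.
    if card_type is not None and card_type.lower() not in card["type"].lower():
        return False
    return True


def build_training_set(csv_path, image_dir, out_dir, color=None, card_type=None):
    """Copy the art for every matching card into out_dir; return the count copied."""
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    copied = 0
    with open(csv_path, newline="", encoding="utf-8") as f:
        for card in csv.DictReader(f):
            src = Path(image_dir) / card["image_file"]
            # Skip rows whose image is missing rather than failing the whole run.
            if matches(card, color, card_type) and src.is_file():
                shutil.copy(src, out_dir / src.name)
                copied += 1
    return copied
```

A call such as `build_training_set("cards.csv", "art", "training/blue_creatures", color="blue", card_type="creature")` would then fill one folder per query, which matches the "sort first, train second" approach described above.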

The first training set I tried was all creatures with the “goblin” creature type. This resulted in a little under 400 images for the bot to train on. After a few hours the results were still rubbish. After more experimentation I would learn that I had made two errors: 400 images was a minuscule dataset, and a few hours was not nearly enough time to train, even with a good data set.

I switched gears and tried white creature cards, figuring that color was the major indicator of the palette used in the art and that creatures would at least all have a main subject in the art (as opposed to sorceries or enchantments). I let it run for four days this time. As it worked, it would spit out a sample image every hour or so. I was able to watch a formless mess turn into something more discernible. (The sample images are produced in an 8x8 grid, so each sheet contains 64 separate images produced by the bot).

This bird-fish giant was the first image produced by Urza’s Dream Engine (then unnamed) that I really loved. It’s still one of my favorites.

At this point, I knew things were working. I messed around with the settings for other groups, but as far as the code I was using, very little changed. The biggest modification was that I shifted from breaking up the images by card type and started using color as the only criterion for sorting. Contrary to my initial assumption, I realized that nearly every card in Magic has a central figure in the art, not just the creature cards. In order to illustrate the magical effect of a spell or enchantment, the art shows a central figure either reaping the benefits of or being victimized by the spell in question. Since there was little artistic difference between them, there was no reason to make smaller training sets.

These three cards all represent spells in the game, but the art could just as easily go with a creature, since each one focuses on a character.

The next two months or so were spent with the bot quietly running in the background of my laptop (and absolutely destroying my battery life when unplugged). I worked on other projects, most notably finishing up my sequencer toy Bleep Space with musician Dan Friel. During this time I occasionally posted the images the bot created on this Tumblr and on Twitter. The images resonated with a lot of folks and I always enjoyed hearing what people saw in them. The images are abstract enough that while conveying the general vibe of MTG art, they function a bit like a Rorschach test, allowing for many interpretations.

In order to make the images a bit more human-readable, I used a few Photoshop actions that I could run as a batch. The main one I used took the 8x8 grid of images that the machine learning bot spat out and cut the image into 64 individual images. These images were then blown up slightly to be a bit easier to see and so that they would eventually fit nicely onto the RoboRosewater card frames.
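The slicing here was done with Photoshop actions, not code. For anyone who would rather script it, the only real work is computing where each tile sits on the sheet. The sketch below does just that arithmetic, and the 512x512 sheet size is an assumption chosen for illustration. The boxes use the `(left, upper, right, lower)` convention, which is what Pillow's `Image.crop` accepts, so they could be passed to it directly.

```python
def grid_boxes(sheet_width, sheet_height, rows=8, cols=8):
    """Return (left, upper, right, lower) pixel boxes for each tile, row by row."""
    tile_w = sheet_width // cols
    tile_h = sheet_height // rows
    boxes = []
    for row in range(rows):
        for col in range(cols):
            left = col * tile_w
            upper = row * tile_h
            boxes.append((left, upper, left + tile_w, upper + tile_h))
    return boxes


# Hypothetical 512x512 sample sheet holding an 8x8 grid of generated images.
boxes = grid_boxes(512, 512)
print(len(boxes))  # 64
print(boxes[0])    # (0, 0, 64, 64)
print(boxes[-1])   # (448, 448, 512, 512)
```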

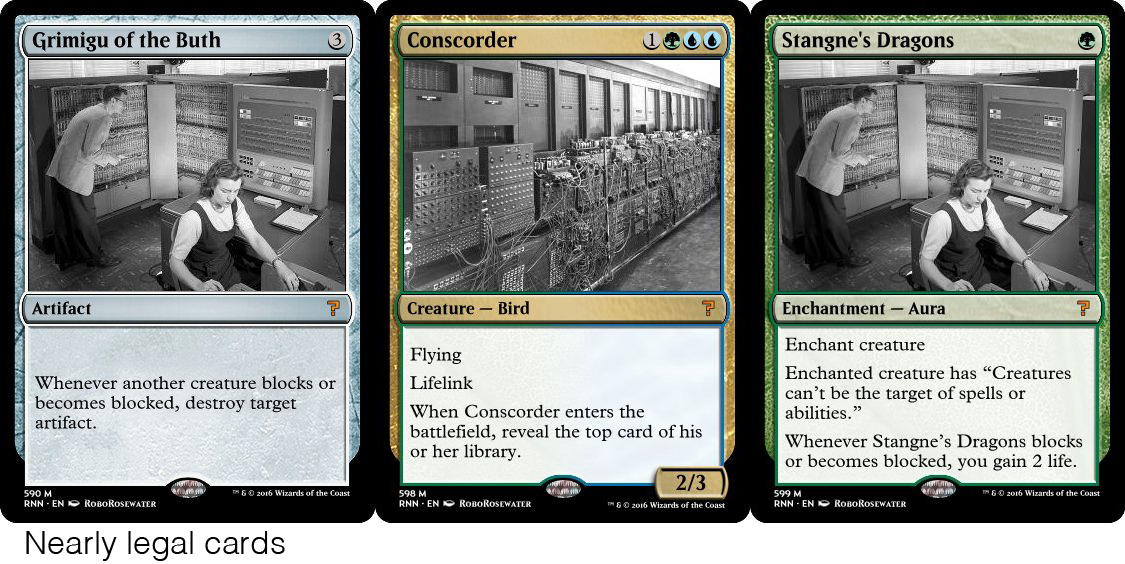

Around this time, the images were featured in an article on Waypoint (The games culture wing of Vice) by Cameron Kunzelman. As I noted in the article, one of the things I was enjoying most about the bot was that it was generating images that seemed to have some of the flowing style of Rebecca Guay, my favorite illustrator for MTG.

On top are Guay’s illustrations for Bitterblossom and Regenerate. On the bottom are two of the pieces created by Urza’s Dream Engine.

I was collecting and displaying the images, but I still wanted to do something with them. My background is in interaction, and that hasn’t changed. My original plan was to pair my images with RoboRosewater cards and hold a booster draft with them, and that was still what I wanted to do. My previous Weird MTG events had been much simpler (a booster draft using cards from Legends and Arabian Nights, a tournament only allowing cards from 1994, and a cube draft made of “rare” cards that were worth 25 cents or less). Most of these had involved printing proxies (printed cards used in place of real cards that are too valuable to actually play with), so I had a tool chain in place for that, but the scale of this one was much bigger, and it involved creating the card images before printing them.

A booster draft is a specific type of Magic tournament that involves creating a deck on the fly. The typical tournament type, called “constructed,” involves players building a deck at home with cards they own and bringing it to the tournament. Booster drafts are a type of tournament, known as “sealed,” where players come empty-handed and are given unopened MTG products to build their decks with. The “draft” part of “booster draft” comes from how players build their decks at these tournaments. The players sit in a circle, and each player opens a booster pack (containing 15 cards), selects one card to add to their pool, and passes the remaining cards to the player next to them. This process continues until each player has 15 cards, at which point the players open their next pack. Each player starts with three booster packs, so at the end each player has 45 cards to use in building their deck (not all of the cards need to be used). Because they drafted cards from the packs going around, though, the card pool for the tournament is actually much larger, and players can exercise significant strategy in building their pool of 45 cards.
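The pick-and-pass procedure is easy to check with a toy simulation. This sketch makes two simplifying assumptions that are not part of the real format: players pick at random instead of choosing, and packs always pass in the same direction. The function name and the card labels are made up for illustration.

```python
import random


def run_draft(num_players, packs_per_player=3, pack_size=15, seed=None):
    """Simulate a booster draft with random picks; return each player's pool."""
    rng = random.Random(seed)
    pools = [[] for _ in range(num_players)]
    for round_no in range(packs_per_player):
        # Every player opens a fresh pack. Cards are labelled (round, opener, slot).
        packs = [[(round_no, opener, slot) for slot in range(pack_size)]
                 for opener in range(num_players)]
        for _ in range(pack_size):
            # Each player takes one card from the pack currently in front of them.
            for player, pack in enumerate(packs):
                pools[player].append(pack.pop(rng.randrange(len(pack))))
            # Pass the remaining cards to the next player around the table.
            packs = packs[-1:] + packs[:-1]
    return pools


pools = run_draft(num_players=8, seed=1)
print([len(pool) for pool in pools])  # [45, 45, 45, 45, 45, 45, 45, 45]
```

Each pool ends at 45 cards, but with eight players every pool contains cards from packs opened by all eight seats, which is why the effective card pool is so much larger than three packs.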

Being more interested in playing than actual deck-building (and being pretty awful at deck-building), booster draft has always been my favorite format. It also lends itself well to an event that uses cards that nobody owns or could possibly own. It did, however, mean that I had to construct booster packs for players.

The first thing I needed to do was create the pool of cards to draw from. To do this I went through every image that @RoboRosewater has ever posted (roughly 830 at the time). To get started, I sorted the images into four categories. Here are my criteria and a ballpark value on how many cards posted by the bot fell into each category:

Legal: Cards that could be played as written. I’d say roughly 40% of the cards posted by RoboRosewater were legal.

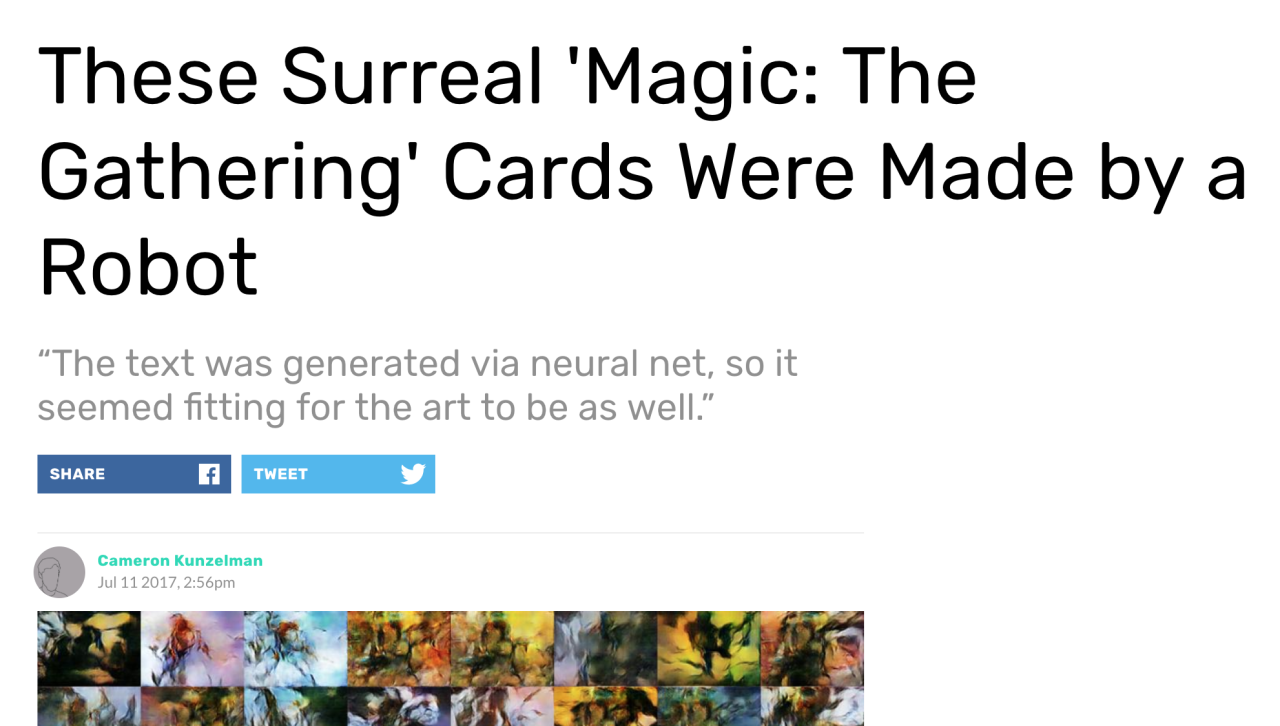

Legal but Functionless: Cards that could be played but effectively did nothing. While these are fun to see printed, they essentially represented dead cards in a booster pack. This category was small, making up only about 5% of the cards. (Yes, there are niche cases where these cards would do something, but the use case is so narrow as to effectively be 0 in a sealed format.)

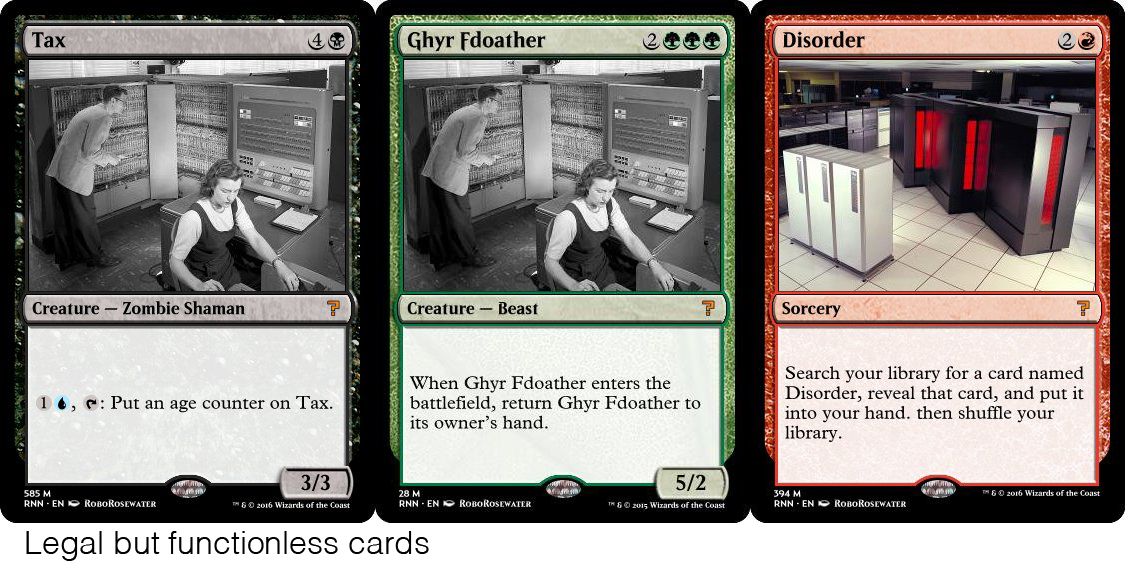

Nearly Legal: Cards that were not technically legal within the rules of the game, but which had only one obvious interpretation. These had to be things that could be fixed with a slight edit that would not be dependent on me making design decisions for the card. I was pretty strict about not editorializing, so anything that could potentially be interpreted multiple ways was pushed to the next category. The result was that this also made up about 5% of the total cards.

Illegal: Cards that simply did not work within the rules of the game. These cards tend to be collections of Magic terms that do not work in any comprehensible way in the game (they also tend to come from shorter training periods, as indicated by the art on the card). The longer the text on the card, the more likely it was to veer into this territory. This was the biggest category, with about 50% of the posted cards landing here. While these cards can be great fun to see–the close-but-no-cigar nature of their wording exists in the same vein as videos of robots falling down–they wouldn’t work for this event.

Power level did not factor into the decision making at all (besides avoiding cards that basically did nothing). My goal was not to create a balanced environment, and in fact, I think that trying to “correct” for the weird power levels created by the bot would have undermined the authenticity of the event. All of the cards in the “legal” or “nearly legal” categories were initially included, even cards like Teferi’s Curse that threatened to stall out games.

A quick note: it may seem at first glance that having less than half of the cards be legal suggests some kind of failing on the part of RoboRosewater and its creators, but this simply isn’t true. Magic: The Gathering is a deeply complex game with many accumulated rules and abilities on cards over the years. The fact that RoboRosewater can create any cards that are legal is astounding. The fact that it can do it on a regular basis is all the more impressive. I would be over the moon if I made a similar project for any game that was able to succeed 40% of the time. I certainly did not include even 10% of the total output of Urza’s Dream Engine on my website for it. Furthermore, the purpose of the bot is to create interesting cards. They were never meant to be played, so my metrics for what would work for this event are not the same ones the bot’s creators were using.

After sorting the cards into these groups, I broke them down by color. Booster drafts work best when each color is represented somewhat equally. I found that black was the weakest with only 40 viable cards, while white and green were overrepresented with around 60 each. Although I was reluctant to apply my own design decisions to the game, I decided that removing some white and green cards in order to keep some color balance was a good idea. I bumped a few of the cards that were in the “nearly legal” category from those colors and pulled a few more that were borderline functionless. This was the extent of my own design decisions for the draft.

The result was a 308 card set. Definitely a large set, but not so big that players in a draft won’t see some of the same cards go around more than once. Because the cards generated have no rarity level (typically common, uncommon, rare & mythic), all cards were equally likely to show up in a given booster pack.

Now that I had all of the RoboRosewater cards sorted by color (or type in the case of artifacts and land), it was time to combine them with the images that Urza’s Dream Engine had created. Once again, I wanted to maintain automation’s control over the output. My purpose in this process was to curate and facilitate that automation. I wanted a program to randomly pair cards with art of the same type.

To do this, I built an openFrameworks application that would accept an input card image folder and an input art image folder. Once given these folders (for example, red RoboRosewater cards & red card images produced by Urza’s Dream Engine), it would randomly select from the two pools, combine the images and save the output as a new image. As it did this, it would remove both the card and image from the pool to guarantee that there would be no repeats.
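The tool described above was an openFrameworks (C++) application and its source is not shown here. The pairing logic on its own is small enough to sketch in Python: shuffling both pools and zipping them together uses every card and every image at most once, which has the same effect as removing each from the pool after use. The function and file names are hypothetical, and the step that composites the art onto the card frame is left out.

```python
import random


def pair_cards_with_art(card_files, art_files, seed=None):
    """Randomly pair each card with one piece of art, never reusing either."""
    rng = random.Random(seed)
    cards = list(card_files)
    art = list(art_files)
    if len(art) < len(cards):
        raise ValueError("need at least one piece of art per card")
    rng.shuffle(cards)
    rng.shuffle(art)
    # zip stops at the shorter list, so any leftover art simply goes unused.
    return list(zip(cards, art))


# Hypothetical file names for one color's worth of cards and generated art.
red_cards = [f"red_card_{i}.png" for i in range(5)]
red_art = [f"red_art_{i}.png" for i in range(8)]
for card, image in pair_cards_with_art(red_cards, red_art, seed=42):
    print(card, "<-", image)
```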

As I mentioned earlier, the abstract images produced by Urza’s Dream Engine lend themselves to multiple interpretations. This wound up being a serious boon when paired with the cards. Between RoboRosewater’s liberal use of abilities and fascinating names, and my own bot’s hazy images, nearly every pairing felt like it made sense, even though it was random beyond the color of the card. Although I knew the power of the human mind to seek connections and narrative is an amazing thing, I was pleasantly surprised by just how right everything felt.

Luckily, this was not my first Weird MTG event that involved making proxy boosters. I’ve created a tool for myself that does exactly this which is available for free. This tool draws cards from input folders and creates PDFs of print-and-play ready booster packs. The printable cards include a small number in the bottom corner to identify what pack they belong to. The purpose of this is to preserve the randomness generated by the program, including the rule that no pack contain duplicates of the same card.
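The booster tool's code is not reproduced in this post. As a rough illustration of the two rules it enforces (every card equally likely, no duplicates within a pack), here is a sketch that leans on `random.sample`, which draws without replacement. The function name, card names, and the choice to number packs from 1 are assumptions, and PDF layout is omitted.

```python
import random


def build_packs(card_pool, num_packs, pack_size=15, seed=None):
    """Return {pack_id: [cards]} with no card repeated inside a single pack."""
    rng = random.Random(seed)
    # De-duplicate and sort so that a given seed always yields the same packs.
    pool = sorted(set(card_pool))
    packs = {}
    for pack_id in range(1, num_packs + 1):
        # sample() picks without replacement, so a pack cannot contain repeats;
        # the same card may still appear in different packs.
        packs[pack_id] = rng.sample(pool, pack_size)
    return packs


# A 308-card set and 72 packs, matching the numbers used for this event.
card_set = [f"card_{i:03d}" for i in range(308)]
packs = build_packs(card_set, num_packs=72, seed=7)
print(len(packs))                           # 72
print(sum(len(p) for p in packs.values()))  # 1080
```

The `pack_id` key plays the role of the small number printed in the corner of each card: it records which pack a card was dealt into so the packs can be reassembled after cutting.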

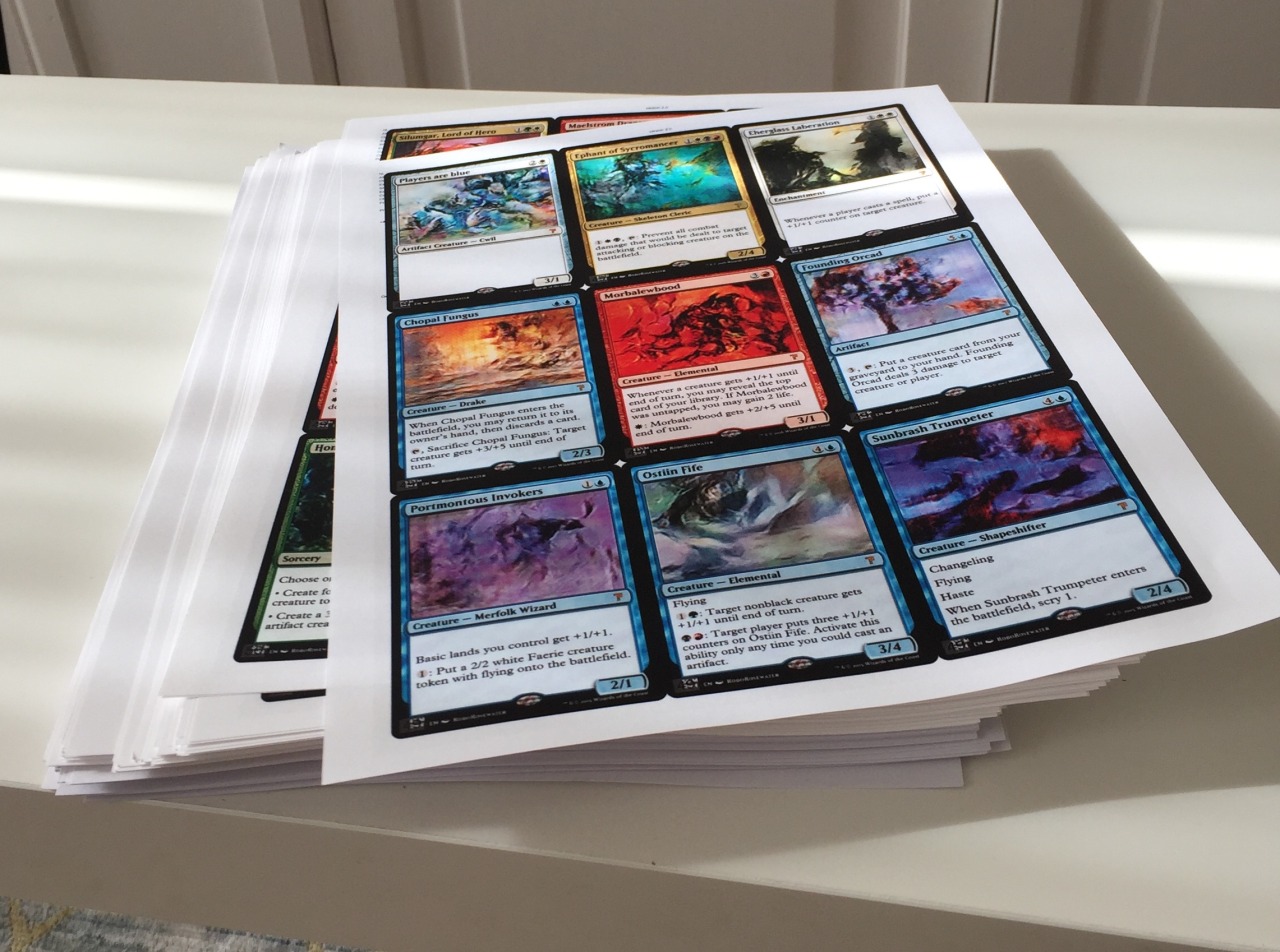

I printed a total of 72 packs. Enough for 24 players. The resulting stack had some real heft to it.

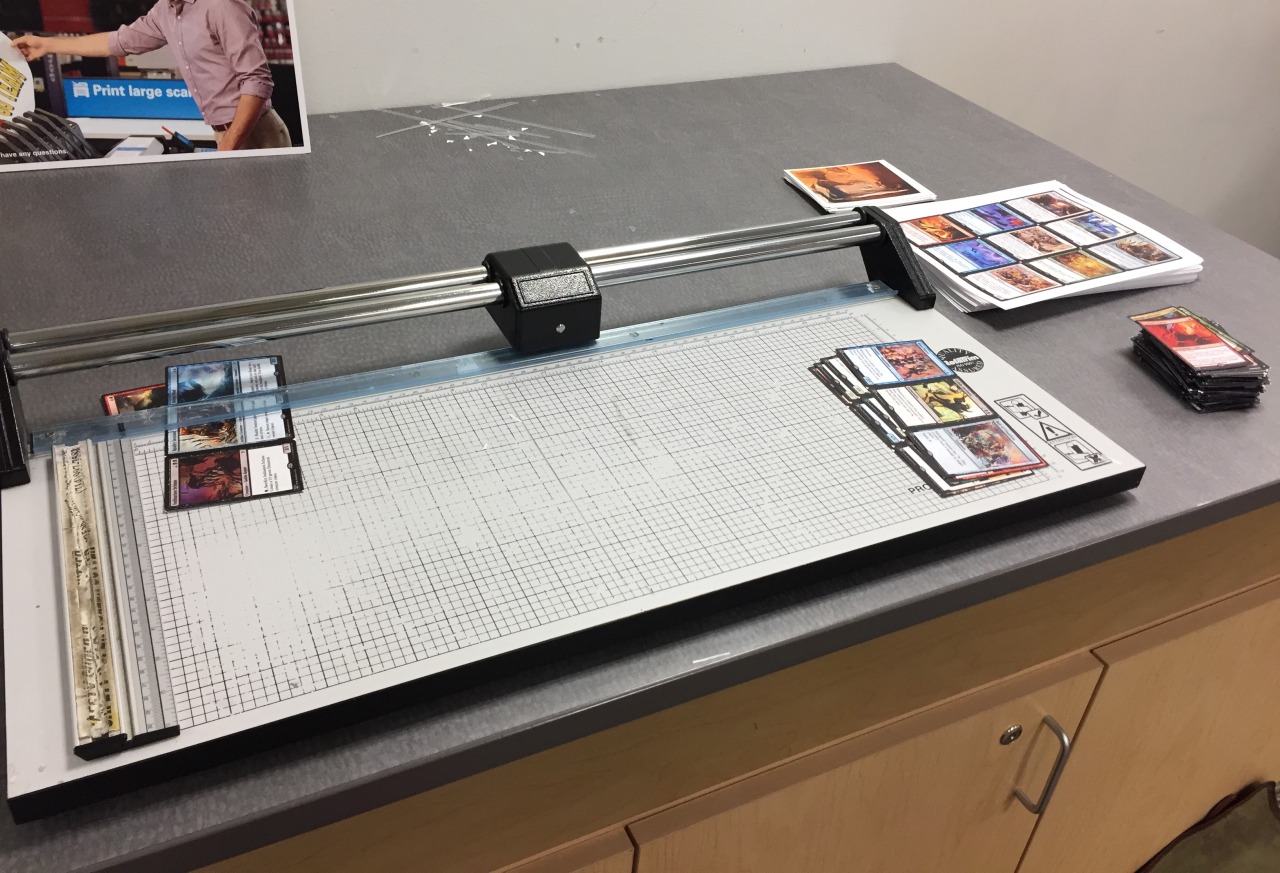

I took the whole thing to Kinkos and got to work at the paper cutter. It took a little over an hour to cut everything out.

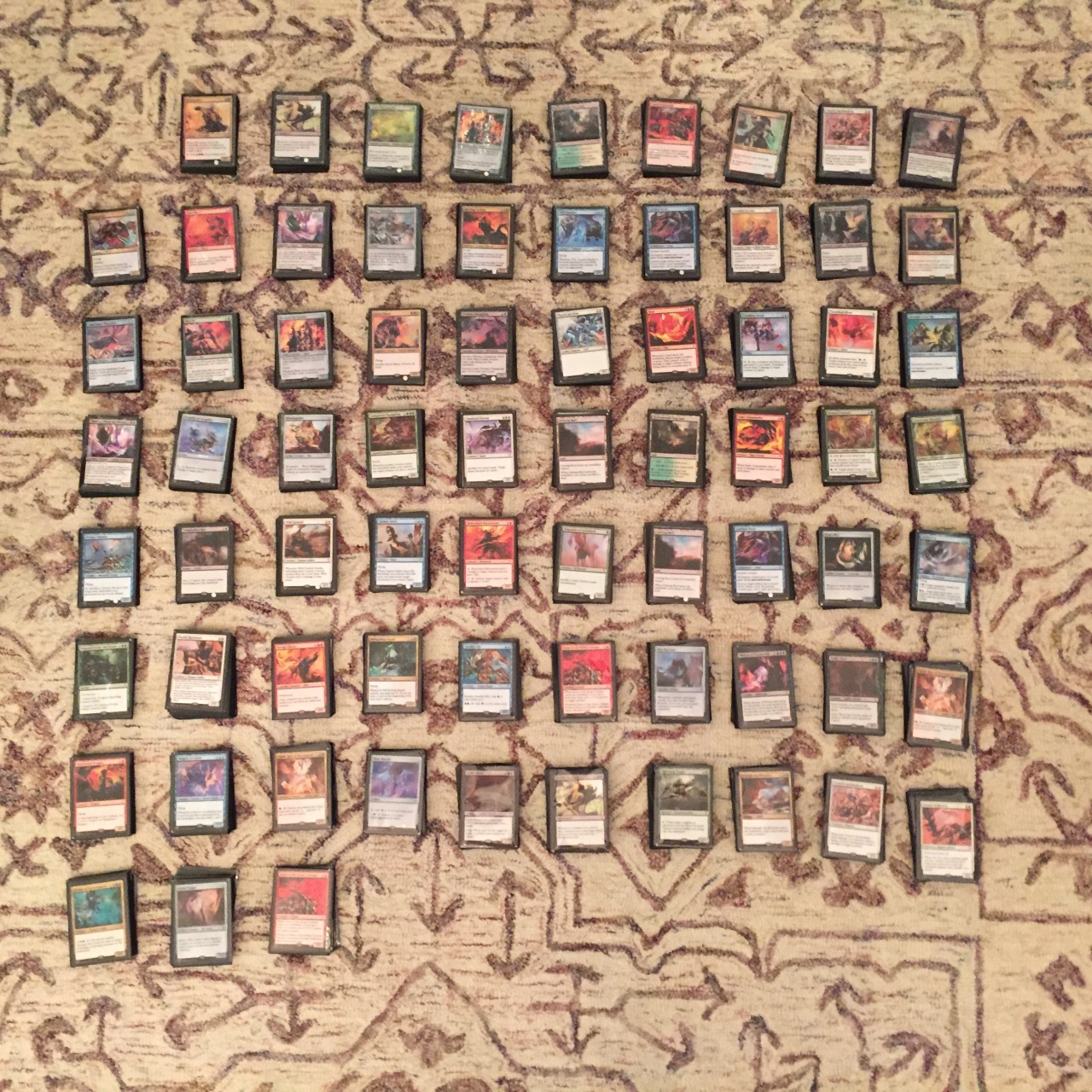

Once I got home, my partner Jane and I watched shows while sleeving cards. Because these proxy cards are printed on paper, they do not have the weight to be played with by themselves. To get around this, they must be put in a protective card sleeve using a real card as a backing to give them the necessary weight. These sleeves are very common in the game, and are typically used to allow players to use valuable cards without worrying about scuffing them or having something spilled on them. I buy them in bulk.

Once they were sleeved (at the expense of nearly every common Magic card I own), they were ready to be sorted into their packs. This is where the little ID number at the bottom of the card comes in handy. Because I am usually cutting multiple sheets at a time, the order gets a little shuffled: each card winds up next to the card that was in the same location on the sheet above or below it rather than next to its neighbors on its own sheet. I started by grouping everything by the tens place of the ID (0-9, 10-19, etc.).

Once they were in more manageable piles, I got to work placing them into their actual packs. At the end I had 72 stacks of 15 cards for 1080 total cards, enough for 24 players to draft. After counting each pile to make sure it had the right number, I put them in baggies that would act as surrogate wrappers. I packed up a bunch of basic lands along with them (for players to use when building their decks) and the setup for the event was complete.

In the week leading up to the event, I posted about it a lot. I was having great fun creating sample packs that not only showed the Urza’s Dream Engine art, but also asked players to think about the RoboRosewater cards in a game context rather than just as abstract individual cards. I also made sure to tag RoboRosewater in these posts. I had previously attempted to reach out to the developers of the bot, both on Twitter and on MTG Salvation, the forum where it had originally been posted. There was no info on either about who actually made the bot, and I wanted to be sure that I could credit them and that I had their blessing.

Luckily, a few days before the event at Babycastles, I received a Twitter DM from the RoboRosewater account asking if there would be any pictures of the event. I was thrilled to hear from them, and honestly a bit starstruck. I was happy to know that they approved of the event and was glad for the opportunity to ask about how they wanted to be credited. I want to give a huge thanks to Chaz and Reed for making the bot that got the ball rolling on this project.

As a prize to give away at the Babycastles event, I ordered two custom playmats from Inked Gaming with a collage of some of the images generated by Urza’s Dream Engine. It’s a small thing, but it was fun to see them, and I hope they’ll get some use from the folks who won them.

The event itself was fantastic. I knew the cards would be interesting to play, but I wasn’t sure they would be fun. It turns out that as weird as the draft environment was, it was also very playable. The set offered multiple viable strategies both for deck building and playing. There are a few leftover packs and I’m looking forward to doing it again. A photographer, Lippe, took some lovely pictures of the event.

Magic is a game that I love, and this was a fascinating way to interact with it. It was also my first glimpse into machine learning and image generation as a whole. I’m very pleased with the result both of Urza’s Dream Engine and the resulting booster draft. Finally I want to extend another thanks to Chaz and Reed for creating RoboRosewater and giving me a tool to build around.
