
Featured Blog | This community-written post highlights the best of what the game industry has to offer.

Throwing--it’s one of the first things you do when you try out VR. It's also one of the most inconsistent experiences. My baby-tossing game demanded better. So here's how I made throwing better for a game where throwing is a central mechanic.

Throwing--it’s one of the first things you do when you try out VR. You take that virtual coffee cup and chuck it. The cup, donut or ball goes off spinning crazily. Before you know it you’re trying to hurl potted plants at the tutorial-bot.

Some of your throws go hilariously far. Some go pathetically low. One or two actually ding the beleaguered NPC. Maybe our first lesson in VR is that it's actually kind of hard to throw accurately. When I had this experience, I assumed it was because I’m bad at VR. We accept that mastering a control scheme is part of a game’s learning curve. But when the same throwing motion yields wildly different results, you've got a recipe for frustration.

A hard overhand pitch falls short…

…while a flick of the wrist might send things flying.

Over the summer, I’ve been working on a casual action game called Rescuties. It’s a VR game about throwing — and catching — babies and other cute animals.

In VR, if the world reacts to us in a way that contradicts our intuition, we’re either breaking immersion or frustrating the player. As a game that’s all about throwing, Rescuties couldn’t rely on a mechanic that was inherently frustrating.

The frustration is built into the design of these games. In a nutshell, it’s hard to throw in VR because you can’t sense the weight of the virtual object. Approaches vary — but most games have tried to respect the physics of the virtual object you’re holding as faithfully as they can. You grab an object, apply some virtual momentum to it in the game, and off it goes.

Here’s the problem: there’s a disconnect between what you feel in your real-life hand and what’s going on in the virtual world. When you pick up a virtual object, the center of mass of that object and the center of mass you feel in your hand have some real separation. Your muscles are getting bad info.

If you were just pushing your hands in this or that direction when you threw, this separation wouldn’t matter much. But you bend your arm and rotate your wrist when you throw. (The key to a good throw?--“It’s all in the wrist!”) As you rotate your real hand, you end up applying excessive momentum to the virtual object as if you were flinging it with a little spoon.

This is a very unforgiving phenomenon. In many VR games, you can “pick up” an object up to a foot away from your hand. With a press of a button, the object is attached to your hand, but stays at that fixed distance, turning your hand into a catapult. The difference between three inches and twelve inches can mean a flick of the wrist will send an object across the room or barely nudge it forward.
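The catapult effect falls out of basic rigid-body kinematics: a point held at offset r from a hand rotating with angular velocity ω moves at v = v_hand + ω × r, so the rotational contribution grows linearly with the offset. The minimal sketch below assumes the object is rigidly attached to the hand and uses made-up inputs (a pure 10 rad/s wrist flick with no arm translation); it illustrates the physics and is not code from Rescuties.

```python
# Velocity of a point rigidly attached to a rotating hand: v = v_hand + omega x r.
# All inputs below are illustrative assumptions, not measurements from any game.


def cross(a, b):
    """Cross product of two 3D vectors given as tuples."""
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def point_velocity(v_hand, omega, offset):
    """Linear velocity (m/s) of a point held `offset` metres from the hand pivot."""
    w_x_r = cross(omega, offset)
    return tuple(v + w for v, w in zip(v_hand, w_x_r))


def speed(v):
    """Magnitude of a 3D vector."""
    return sum(c * c for c in v) ** 0.5


INCH = 0.0254  # metres per inch

v_hand = (0.0, 0.0, 0.0)   # hypothetical: the hand itself is not translating
omega = (10.0, 0.0, 0.0)   # hypothetical: 10 rad/s wrist flick about the x axis

near = point_velocity(v_hand, omega, (0.0, 3 * INCH, 0.0))
far = point_velocity(v_hand, omega, (0.0, 12 * INCH, 0.0))
print(f"3-inch grip:  {speed(near):.2f} m/s")
print(f"12-inch grip: {speed(far):.2f} m/s")
```

With these assumed inputs, the 12-inch grip moves four times as fast as the 3-inch grip (about 3.05 m/s versus 0.76 m/s) for the identical wrist motion.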

In Rescuties, you’re catching fast-moving babies, and you’re in a rush to send them to safety. The old approach resulted in overly finicky controls and a frustrating, inconsistent experience. Why can’t I just throw the way it feels like I should be able to?

The crux of a more successful throwing strategy is to prioritize how the controls feel to the user over what the game’s physics engine suggests.

Rather than measuring throwing velocity from the virtual object you’re holding, you measure it from the *physical* object you’re holding, i.e., the HTC Vive or Oculus Touch controller. That’s the weight and momentum you feel in your hand. That’s the weight and momentum your muscle memory--the physical skill and instinct you've spent a lifetime developing--is responding to.

The way you bridge the physical weight to the virtual is to use the center of mass of the physical controller to determine in-game velocity. First, find where that real center-of-mass point is in-game. The controllers are telling you where they are in game-space; it's up to you to peek under the headset and try to calibrate just where the center-of-mass is. Then you track that point relative to the controller, calculating its velocity as it changes position.
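A sketch of the tracking step, assuming the engine reports each controller's position and orientation (as a unit quaternion) once per frame. `COM_OFFSET` is a hypothetical calibration value and `ComVelocityTracker` is an illustrative name, not any engine's or SDK's API; a real game would read poses from its VR SDK.

```python
# Sketch: velocity of the controller's physical center of mass, sampled once per frame.
# COM_OFFSET is a hypothetical calibration value (metres, controller-local frame);
# the real offset has to be measured for each controller model.

COM_OFFSET = (0.0, -0.02, 0.05)  # hypothetical, not a measured Vive/Touch value


def cross(a, b):
    """Cross product of two 3D vectors."""
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def rotate(q, v):
    """Rotate vector v by unit quaternion q = (w, x, y, z)."""
    w, qv = q[0], q[1:]
    t = tuple(2.0 * c for c in cross(qv, v))
    qt = cross(qv, t)
    return tuple(v[i] + w * t[i] + qt[i] for i in range(3))


class ComVelocityTracker:
    """Finite-difference velocity of a point fixed in the controller's frame."""

    def __init__(self, offset=COM_OFFSET):
        self.offset = offset
        self.prev_point = None
        self.velocity = (0.0, 0.0, 0.0)

    def update(self, position, rotation, dt):
        """Feed this frame's controller pose; return the tracked point's velocity."""
        # Transform the local center-of-mass offset into world space.
        r = rotate(rotation, self.offset)
        point = tuple(p + c for p, c in zip(position, r))
        if self.prev_point is not None and dt > 0:
            self.velocity = tuple((a - b) / dt for a, b in zip(point, self.prev_point))
        self.prev_point = point
        return self.velocity
```

Because the offset is rotated along with the controller every frame, a pure wrist rotation still moves the tracked point, scaled by the length of the offset. The velocity here is a plain frame-to-frame difference, so it still carries all of the hardware noise; it only fixes *where* the velocity is measured.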

Once I made that change, my testers performed much better at Rescuties — but I was still seeing and feeling a lot of inconsistency.

(See this article for an interesting discussion on trying to convey virtual weight to players — an opposite approach that skips leveraging our physical sense of the controllers’ weight in favor of visual cues showing the player how virtual objects behave.)

When precisely does the player intend to throw an object?

In real life, as we throw something, our fingers loosen, the object starts leaving our grasp and our fingertips continue to push it in the direction we want it to go until it’s out of reach completely. Maybe we roll the object through our fingers or spin it in that last fraction of a second.

In lieu of that tactile feedback, most VR games use the trigger under the index finger. It's better than a button -- in Rescuties, you'll see that squeezing the trigger at 20% makes your VR glove close 20% of the way, 100% makes a closed fist, etc. -- but you're not going to be able to feel the object leaving your grasp and rolling through your fingers. The opening-of-the-fingers described above is simply the (possibly gradual) release of the trigger. I found that I wanted to detect that throw-signal from the player as soon as the player starts uncurling their fingers. Throws are detected when the trigger pressure eases up--not all the way to 0% or by the slightest amount, but by an amount set experimentally.

The chart above plots trigger pressure over time for one grab-hold-throw cycle. In this case, the user is not pressing the trigger all the way to 100% -- a common occurrence, since the HTC Vive controllers' triggers travel to ~80% and then you have to squeeze significantly harder to make them click up to 100%. First the trigger is squeezed to pick up an object. Then pressure holds more or less steady as the player grips the object and winds up for a throw; here you’ll see the kind of noise you get from the trigger sensor. Finally, the player releases the trigger as the object is thrown or dropped.

Sensor noise, and even the player's heartbeat, can make the trigger strength jitter. That calls for a threshold approach to detecting player actions. Specifically, the game detects a drop when the trigger pressure falls (for example) 20% below the peak trigger pressure detected since the player picked up the object. The threshold has to be large enough that the player never accidentally drops a baby -- a value I found through trial and error with my testers. Similarly, if you detect a grab at too low a pressure, you won’t have the headroom to detect a reliable throw/release. You’ll get what I got during one of my many failed iterations: freaky, super-rapid grab-drop-grab-drop behavior.
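The peak-relative threshold can be written as a small state machine. This is a sketch that assumes trigger values normalized to 0.0..1.0 and reads "20% less than the peak" as "80% of the peak value"; `GRAB_THRESHOLD` and the class name `TriggerGrip` are hypothetical, and the real numbers in Rescuties were tuned by hand.

```python
# Sketch of peak-relative release detection for an analog trigger (values 0.0..1.0).
# GRAB_THRESHOLD and RELEASE_FRACTION are hypothetical placeholders, not tuned values.

GRAB_THRESHOLD = 0.5    # hypothetical: trigger value that counts as a grab
RELEASE_FRACTION = 0.2  # release once pressure drops 20% below the peak since the grab


class TriggerGrip:
    def __init__(self, grab_threshold=GRAB_THRESHOLD, release_fraction=RELEASE_FRACTION):
        self.grab_threshold = grab_threshold
        self.release_fraction = release_fraction
        self.holding = False
        self.peak = 0.0

    def update(self, trigger):
        """Feed one trigger sample; return 'grab', 'release', or None."""
        if not self.holding:
            if trigger >= self.grab_threshold:
                self.holding = True
                self.peak = trigger
                return "grab"
            return None
        # While holding, remember the strongest squeeze seen so far.
        self.peak = max(self.peak, trigger)
        if trigger <= self.peak * (1.0 - self.release_fraction):
            self.holding = False
            self.peak = 0.0
            return "release"
        return None


# Hypothetical trigger trace: squeeze, noisy hold near 80%, then partial release.
samples = [0.0, 0.3, 0.6, 0.78, 0.8, 0.77, 0.79, 0.7, 0.6, 0.2]
grip = TriggerGrip()
events = [grip.update(s) for s in samples]
print(events)
```

The jitter around 0.77 to 0.8 never triggers a release, but easing off to 0.6 (below 80% of the 0.8 peak) does, well before the trigger reaches zero. The gap between the grab threshold and the release point acts as hysteresis; if it is too narrow, sensor jitter can flip the state back and forth, which is one way to reproduce the grab-drop-grab-drop behavior described above.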

Measuring the right velocity and improving the timing mitigate throwing inconsistency quite well. But our source data itself--the velocity measurements coming from the hardware--is quite noisy. The noise is particularly pronounced when the headset or controllers are moving quickly (like, say, when you’re making a throwing motion!).

Dealing with noise calls for smoothing.

I tried smoothing the velocity with a moving average (a simple kind of low-pass filter), but this ends up averaging the slow part of the throw (the wind-up) with the fastest part (the release), at least to some extent. My testers found themselves throwing extra hard, as if they were underwater. (This is what I tend to feel in Rec Room.)
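A quick way to see the underwater feeling is to run a moving (floating) average over an accelerating throw. The sketch below uses a synthetic, noise-free speed ramp from 0 to 9 m/s and a five-sample window; both the numbers and the window size are assumptions chosen for illustration.

```python
from collections import deque


class MovingAverage:
    """Simple moving average over the last `n` samples, a basic low-pass filter."""

    def __init__(self, n):
        self.window = deque(maxlen=n)

    def update(self, value):
        self.window.append(value)
        return sum(self.window) / len(self.window)


# Hypothetical, noise-free speed ramp (m/s), one sample per frame; release is the last frame.
speeds = [float(s) for s in range(10)]

smoother = MovingAverage(5)
smoothed = [smoother.update(s) for s in speeds]
release_estimate = smoothed[-1]
print(f"actual release speed: {speeds[-1]} m/s, smoothed: {release_estimate} m/s")
```

At the moment of release the averaged speed is 7.0 m/s while the hand is actually moving at 9.0 m/s, because the window still contains slower wind-up samples. Players compensate by throwing harder.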

I tried taking the peak of the recently measured velocities, so my testers saw their babies flying at least as fast as they intended, but often not in exactly the direction they intended, because the last measured direction was still subject to the noise.
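The peak-speed variant might look like the sketch below: keep a short history of velocity vectors, take the largest magnitude, and point it along the most recent sample. The function name and the history contents are hypothetical, and the noisy sample is deliberately exaggerated.

```python
import math


def peak_speed_latest_direction(history):
    """Return the latest velocity direction scaled to the peak speed in `history`.

    `history` is a non-empty list of (x, y, z) velocity samples, oldest first.
    """
    peak = max(math.sqrt(sum(c * c for c in v)) for v in history)
    latest = history[-1]
    norm = math.sqrt(sum(c * c for c in latest))
    if norm == 0.0:
        return (0.0, 0.0, 0.0)
    return tuple(c / norm * peak for c in latest)


# Hypothetical samples: a fast forward push, then one noisy sample skewed sideways.
history = [(0.0, 0.0, 5.0), (0.0, 3.0, 0.0)]
print(peak_speed_latest_direction(history))
```

The throw keeps its full 5 m/s but inherits the sideways direction of the last, noisy sample, which is the failure mode described above.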

What you really want to do is take the last few frames of measurements and observe what they suggest — i.e., draw a trendline. A simple linear regression through the measurements gave us a significantly more reliable result. Finally, I could throw babies where I wanted to, when I wanted to!
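One plausible reading of "draw a trendline" is an ordinary least-squares fit of each velocity component against time over the last N frames, evaluated at the most recent frame. The sketch below assumes that reading; the function names, the 90 Hz frame rate, and N = 5 are hypothetical choices, and N is the kind of value you would tune.

```python
def fit_line(ts, ys):
    """Ordinary least-squares fit y = a + b*t; returns (a, b)."""
    n = len(ts)
    mean_t = sum(ts) / n
    mean_y = sum(ys) / n
    sxx = sum((t - mean_t) ** 2 for t in ts)
    sxy = sum((t - mean_t) * (y - mean_y) for t, y in zip(ts, ys))
    b = sxy / sxx if sxx > 0 else 0.0  # a single sample has no slope
    a = mean_y - b * mean_t
    return a, b


def regressed_velocity(times, velocities):
    """Per-axis trendline through recent velocity samples, evaluated at the latest time."""
    t_last = times[-1]
    result = []
    for axis in range(3):
        a, b = fit_line(times, [v[axis] for v in velocities])
        result.append(a + b * t_last)
    return tuple(result)


# Hypothetical samples at 90 Hz: x speeds up steadily, y and z stay constant.
times = [i / 90.0 for i in range(5)]
velocities = [(2.0 * i, 1.0, 4.0) for i in range(5)]
estimate = regressed_velocity(times, velocities)
print(estimate)
```

For this ramp, a plain average of the same five x samples would give 4.0, while the trendline recovers the 8.0 the player is actually producing at release. With noisy samples, the fit also spreads frame-to-frame jitter across all N points instead of trusting the last sample alone.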

- Measure throwing velocity at the point where the user feels the center of mass is, i.e., the controller.
- Detect the throw at the moment the user intends it, i.e., on a partial release of the trigger.
- Make the most of the velocity data you’ve measured: take a regression for a better estimate of what the player intends.

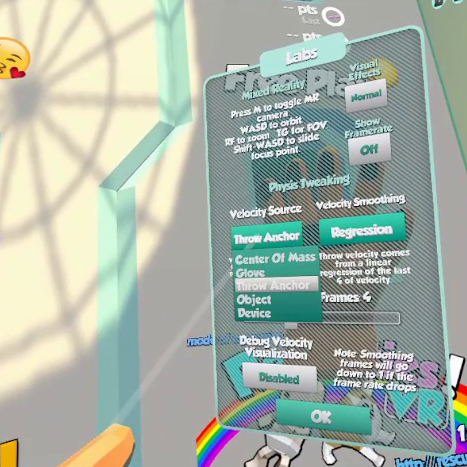

If you’re curious about testing these approaches and/or challenging them, there’s a “Labs” menu in Rescuties where you can toggle these various throwing modes on and off, switch how velocity is measured, and control how many frames of measurements are used in the regression/smoothing.

This problem is by no means solved. VR games handle throwing in remarkably diverse ways, and their respective players have been happy enough. When it came to throwing babies in Rescuties, I wanted the physical expectations of our muscle memory to match the virtual reality of the arcing infants as best I could. It's better than when I started -- but I'd like to make it even better.

So suggestions and criticisms of the above approach are welcome: you can always hit me @bigblueboo or at [email protected].
