

Closed APIs and massive compute requirements are large hurdles for universities.

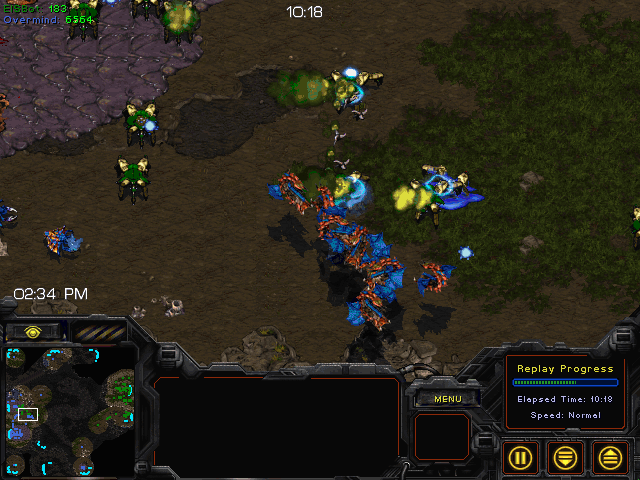

UC Berkeley’s Overmind winning the first StarCraft AI Competition

OpenAI Five is a huge step forward for AI, but it’s also really intimidating for AI researchers. Never before have there been so many open tools for building AI systems, yet it feels like the barrier to entry for academics has actually increased in recent years. Back in 2009, I posted an open call for anyone interested in AI to build the best StarCraft AI possible. Now, it seems like you need access to closed APIs, massive compute power, and historic training data to make advances in AI.

I’m putting this argument out there as a devil’s advocate, hoping that I can be proven wrong. I’d like to see more open competitions, and areas where researchers without massive compute resources can continue to make advances in AI. Here are some of the main issues I’ve seen with recent advances.

OpenAI, to my knowledge, is using an API to build and train bots that is not available to academic researchers. If you’re a grad student who wants to build a bot for Dota 2, you’ll need to wait until the current competition ends and an open source version is eventually made available. For games like Go, which have seen great progress with deep learning and reinforcement learning, this is not a problem. But if you’re a grad student who wants to work with video games that have large and active player bases, your options are extremely limited. The DeepMind API for StarCraft 2 seems like a great option, but you’ll still have other challenges to face.

Dota 2 does provide a scripting interface that lets bots be written in Lua. However, this limited interface does not allow bots to communicate with remote processes or save data about games played.

Workaround: Find games with APIs that you can use. I used the Brood War API (BWAPI) during grad school to write a bot for the original StarCraft. It was a huge hack, and I somehow managed to get it working with Java, but I was extremely lucky that the project was not shut down by Blizzard. It’s great to see the open source community continue to evolve both BWAPI and the Java version.

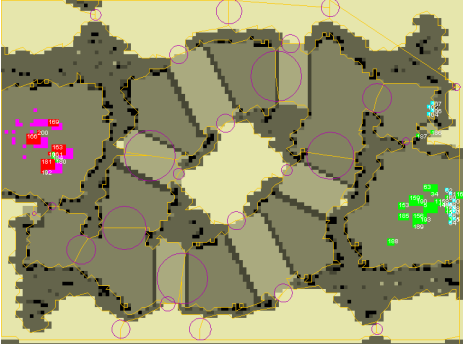

Bot debugging from an early BWAPI-Java prototype.

OpenAI is spending massive amounts of compute power to train bots, and DeepMind’s efforts to train Go bots were also substantial. OpenAI has addressed this issue in a blog post, but it’s still discouraging for academics. It’s unlikely that students will have massive cloud computing resources available for training bots that perform at professional levels.

Workaround: Find sub-problems that you can solve. This is actually something that OpenAI did previously, by focusing on 1v1 matches and then slowly progressing up to 5v5 by relaxing more and more constraints. I included a tech-restricted matchup in the original StarCraft AI competition, but it wasn’t as popular as the full game option, in part because there weren’t human opponents knowledgeable about this version of the game for participants to train against.

One of the sub-problems in the original StarCraft AI Competition.

One of the goals of my dissertation research was to build a StarCraft bot that learns from professional players, but now that some time has passed I realize that a different approach may have been more effective. Instead of showing my bot how to perform, I could have specified a reward function for how the bot should act and then let it train itself. But I didn’t have a good simulation environment, and had to rely on the sparse training data that I could scrape from the web at the time. Sparse data is especially a problem when you are working on sub-problems, such as 1v1 in Dota 2, where professional replays simply don’t exist.
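To make the reward-function idea concrete, here is a minimal sketch of what specifying one might look like. Everything in it is an assumption for illustration: the `GameState` fields, the weights, and the `reward` and `episode_return` helpers are hypothetical and are not taken from BWAPI, OpenAI Five, or my dissertation work.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class GameState:
    """Hypothetical per-step snapshot of a match (illustrative fields only)."""
    own_units: int
    enemy_units: int
    minerals_gathered: int  # cumulative total, so it never decreases
    won: bool = False
    lost: bool = False


def reward(prev: GameState, curr: GameState) -> float:
    """Shaped reward: small signals for progress, a large one for the outcome."""
    if curr.won:
        return 100.0
    if curr.lost:
        return -100.0
    enemy_killed = prev.enemy_units - curr.enemy_units
    own_lost = prev.own_units - curr.own_units
    gathered = curr.minerals_gathered - prev.minerals_gathered
    # Weights are arbitrary placeholders; tuning them is part of the research.
    return 1.0 * enemy_killed - 1.0 * own_lost + 0.01 * gathered


def episode_return(states: Sequence[GameState], discount: float = 0.99) -> float:
    """Discounted sum of rewards over consecutive state pairs in one game."""
    total = 0.0
    for t in range(1, len(states)):
        total += (discount ** (t - 1)) * reward(states[t - 1], states[t])
    return total
```

A self-training loop would play many games in a simulator and adjust the bot’s policy to increase `episode_return`, which is why a good simulation environment matters so much for this approach.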

Workaround: Mine data sets from the web, such as professional replays from sites like TeamLiquid. This won’t work for sub-problems, but you can always bootstrap the replay collection with your own gameplay to get things rolling. I used third-party tools to parse replays and later built an export tool with BWAPI.

Exhibition match at the first StarCraft AI Competition.

I’ve provided a pessimistic viewpoint for academics and hobbyists in game AI. It may seem like you need connections and massive compute power to make advances in game AI. Those resources help, but they don’t guarantee progress without good research direction. Here are some recommendations I have for making AI competitions more open moving forward:

- Open up APIs for bots to play against human opponents
- Provide a corpus of replay data
- Integrate with open source and cloud tooling

It’s now easy to get up and running with deep learning thanks to tools such as Keras, and environments like Colaboratory make it easier to work with other researchers on models.

In a way, my initial call for research on open AI problems failed. It was an open problem, but I didn’t fully encourage participants to be open in their approach to the problem. Now, great tools exist for collaboration and it’s a great time to openly work on AI problems.

Ben Weber is a principal data scientist at Zynga. We are hiring! This post is an opinion based on my experience in academia and does not represent Zynga.
