My thoughts on the challenges DeepMind will face if applied to StarCraft

AlphaGo recently played against 9-dan professional Go player Lee Sedol. The AI won the first three games against the human opponent, achieving victory in the best-of-five tournament. With this challenge accomplished, the DeepMind team is looking for new problems to use as a testbed for the system. Demis Hassabis, co-founder of DeepMind, expressed interest in StarCraft as a challenge.

Building expert-level AI for StarCraft: Brood War remains an unsolved research challenge. The best performing bots achieve a D+ rating on ICCup, which is impressive given the level of play on this system, but still far from the skill of even the B-team members on professional teams. I started the AIIDE StarCraft AI Competition in 2010 with the goal of getting more researchers to evaluate their bots against each other and the challenge of evaluating bots against human players. The competition is now organized as an annual event, with a man-versus-machine exhibition. The best performing bots still have a long way to go to defeat expert players.

The AIIDE 2010 Man-Machine Exhibition match

Before discussing the challenges in StarCraft, I’d like to briefly describe my understanding of how AlphaGo works. The system is powered by DeepMind, which uses a convolutional neural network and a form of Q-learning. AlphaGo extends this by using Monte Carlo tree search to evaluate board states. The neural network is bootstrapped using examples from expert human players, and then trains itself using reinforcement learning. One of the breakthroughs in AlphaGo is in the knowledge representation. The system uses autoencoders to create knowledge representations that significantly outperform hand-crafted solutions. Additional details on DeepMind are available on Google’s publication page.

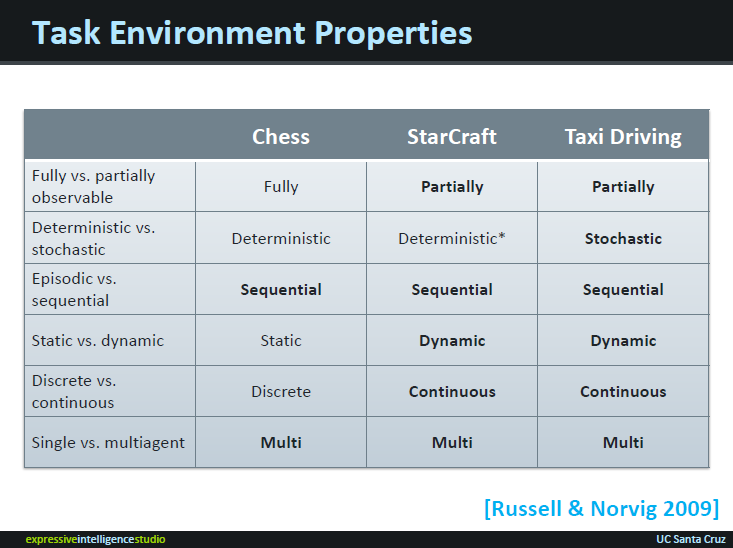

StarCraft is a great testbed for AI, because it presents many of the challenges necessary for performing real-world tasks. As part of my dissertation, I classified StarCraft in terms of Russell and Norvig’s task environment properties. The results from this analysis are shown in the figure below, with real-world properties highlighted in bold. The only difference between StarCraft and real-world activities such as taxi driving is that StarCraft is a deterministic environment. This example lists Chess as a conventional AI challenge, but all of the properties are the same for Chess and Go.

Here are the main challenges I see in applying DeepMind to StarCraft.

Fog-of-War: StarCraft is an imperfect information environment. You can only view areas of the map where you currently have units positioned. This is why the environment is said to be partially observable, while games such as Chess and Go, in which the full game state is visible at all times, are said to be fully observable. To address this challenge, DeepMind will need to reason about the space of actions that the opponent may be performing, at both the strategic and tactical levels. An expert AI needs to be able to predict what build order the opponent is pursuing, as well as identify where the opponent is likely to launch tactical strikes.
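One simple way to picture build-order prediction under fog-of-war is a Bayesian belief update over a handful of candidate openings. The sketch below is only an illustration: the opening names, the prior, and the likelihood numbers are made up for the example, and a real bot would need to learn them from replays and handle far more observations.

```python
from typing import Dict

# Hypothetical prior over opponent openings (illustrative numbers, not real data).
PRIOR: Dict[str, float] = {"rush": 0.2, "tech": 0.3, "expand": 0.5}

# Hypothetical likelihoods P(observation | opening) for things a scout might see.
LIKELIHOOD: Dict[str, Dict[str, float]] = {
    "early_barracks": {"rush": 0.8, "tech": 0.3, "expand": 0.1},
    "second_base": {"rush": 0.05, "tech": 0.2, "expand": 0.9},
}


def update_belief(belief: Dict[str, float], observation: str) -> Dict[str, float]:
    """Apply Bayes' rule: posterior is proportional to prior times likelihood."""
    unnormalized = {
        opening: p * LIKELIHOOD[observation][opening]
        for opening, p in belief.items()
    }
    total = sum(unnormalized.values())
    if total == 0:
        # The observation is impossible under every hypothesis; keep the old belief.
        return dict(belief)
    return {opening: value / total for opening, value in unnormalized.items()}


# A scout spots an early barracks, so the belief shifts toward a rush.
belief = update_belief(PRIOR, "early_barracks")
most_likely = max(belief, key=belief.get)
print(most_likely, round(belief[most_likely], 3))
```

Each new scouting observation can be folded in by calling `update_belief` again on the previous result, so the estimate sharpens as more of the map is revealed.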

Decision Complexity: StarCraft has an enormous state space. Analysis is often done in terms of decision complexity, which is the number of different actions that can be performed at any given time. In StarCraft you can have hundreds of units, which can each perform different tasks, resulting in a huge decision complexity. Human players reduce this decision complexity by following initial build-orders and using squads to group units together. DeepMind will need to develop novel knowledge representations that enable the system to efficiently reason about the possible space of actions, using different levels of abstraction.
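To get a feel for how much squads help, here is a back-of-the-envelope calculation. It assumes, purely for illustration, that every unit (or squad) independently picks one of a fixed number of actions; the figures of 100 units, 10 actions, and 5 squads are made up, and real StarCraft actions also carry targets and positions, which makes the true numbers larger.

```python
def joint_actions(num_groups: int, actions_per_group: int) -> int:
    """Number of joint actions when each group independently picks one action."""
    return actions_per_group ** num_groups


# Illustrative numbers only, not measurements from the game.
units = 100
actions = 10
squads = 5

per_unit = joint_actions(units, actions)    # every unit controlled separately
per_squad = joint_actions(squads, actions)  # units grouped into squads

# Report the order of magnitude (number of digits minus one) of each count.
print("per-unit control: 10^%d joint actions" % (len(str(per_unit)) - 1))
print("squad control:    10^%d joint actions" % (len(str(per_squad)) - 1))
```

Under these assumptions, grouping units into squads shrinks the choice at each decision point from 10^100 joint actions to 10^5, which is the kind of reduction an abstraction has to deliver before search or learning becomes tractable.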

Evolving Meta-game: StarCraft has an evolving meta-game in which new build-orders become popular over time, and then phase out as new counter build-orders are developed. This is a property of StarCraft that also holds true for Go, since strategies in Go have evolved over time. One of the key differences in expert StarCraft gameplay is that there is a rotation of maps used each season, where different maps are better suited for different types of gameplay. For example, when I was a big Brood War spectator in 2010, Flash was able to take advantage of the macro-favored maps that reward early expansion. DeepMind would need to develop capabilities for adapting to new pools of maps, which could be done through bootstrap learning, or by identifying common patterns across maps.

Cheese: In order to do well in StarCraft, you need to be prepared for a wide variety of tactics from your opponent. Some of these techniques are referred to as cheese, because they are all-in approaches that attempt to achieve an easy win. For example, cannon rushes are a common way to try to get a quick victory over an unprepared opponent. An expert player needs to be able to handle a wide variety of exploitative tactics from players in order to consistently win. Generally, high-level players are less likely to use these techniques, but they are commonly used in multiple-game series in order to surprise opponents. Essentially, this means that an AI needs to handle a lot of different edge cases for what an opponent might be doing. In order to overcome this challenge, DeepMind should be trained against opponents with a wide variety of skill levels, in order to make sure the space of possible strategies and tactics is covered.

Simulation Environment: StarCraft is closed source, making it quite challenging to run simulations. One of the techniques used by AlphaGo is reinforcement learning, which involves a huge amount of simulation. In order for DeepMind to overcome this limitation, it’s likely that novel abstractions of the state-space will need to be developed.

Real-time: StarCraft is a real-time strategy game, requiring players to perform a variety of actions in real-time. One of the aspects of high-level StarCraft gameplay is a large number of actions per minute (APM). High APM is necessary in order to maximize the utility of your units. For example, kiting enables players to deal damage to enemy units while minimizing damage to the attacking units. In order for DeepMind to play effectively, the system requires capabilities for performing tasks in real-time with precise timing. One possibility is combining deep learning with other AI techniques, such as behavior trees or finite-state machines.
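As a rough illustration of the finite-state machine idea, kiting can be reduced to two states driven by the weapon cooldown: fire when the weapon is ready, and back away while it reloads. The sketch below is a toy simulation under simplifying assumptions. The names `KiteState`, `next_state`, and `simulate` are hypothetical, and a real bot would also need unit positions, weapon range, and calls into the game API.

```python
from enum import Enum
from typing import List


class KiteState(Enum):
    ATTACK = "attack"
    RETREAT = "retreat"


def next_state(cooldown_frames_left: int) -> KiteState:
    """Attack when the weapon is ready, otherwise move away from the enemy."""
    return KiteState.ATTACK if cooldown_frames_left == 0 else KiteState.RETREAT


def simulate(frames: int, weapon_cooldown: int) -> List[KiteState]:
    """Run the two-state machine for a number of game frames."""
    cooldown = 0
    log = []
    for _ in range(frames):
        state = next_state(cooldown)
        log.append(state)
        if state is KiteState.ATTACK:
            cooldown = weapon_cooldown  # firing starts the reload timer
        else:
            cooldown -= 1  # one frame of reload passes while retreating
    return log


# Hypothetical unit whose weapon needs 3 frames to reload.
log = simulate(frames=8, weapon_cooldown=3)
print([state.value for state in log])
```

In a hybrid design, a learned model could decide when and whom to engage, while a small scripted machine like this one handles the frame-precise timing.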

Applying DeepMind to StarCraft would present a number of interesting research challenges. I think this would be a great challenge for DeepMind to take on, because StarCraft is a testbed with many real-world properties. Here are the main breakthroughs I would expect to see in a version of DeepMind that could defeat Flash or Jaedong:

New mechanisms for handling uncertainty in the world state.

Novel abstractions for reducing the decision complexity of the game.

New bootstrapping methods for tracking the evolving meta-game.

Extensions with other AI techniques for handling real-time actions.

StarCraft may be hard to solve, because the competitive players have mostly moved on to new titles. However, there is still an active competitive community on ICCup that can be used for training.

A great overview on the current state of AI for RTS games by Ontañon et al. is available here.
