

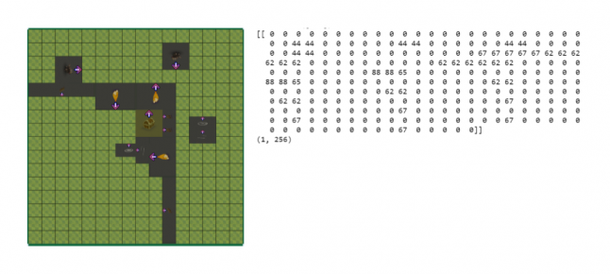

Here we discuss how we generated game levels with artificial neural networks in our game Fantasy Raiders. We also invite you to consider how machine learning can offer game designers new perspectives and inspiration.

Written by Seungback Shin and Sungkuk Park, programmers at Maverick Games

Over the past few years, advances in artificial intelligence have been led by machine learning methods based on representation learning with multiple layers of abstraction, so-called “deep learning”. This research drew public and media attention through Go, the ancient Chinese board game. Although Go’s complexity is often compared to the complexity of life, the machine AlphaGo defeated world champion Lee Sedol using deep reinforcement learning. It’s fascinating that such AI research was applied to a game and that it received so much public attention. It’s also worth mentioning that Demis Hassabis, one of the developers of AlphaGo, was a lead programmer of Theme Park (1994) and an AI lead programmer of Black & White (2001). Games and the recent progress in AI may well be connected.

This is a postmortem: a log of what our team tried in order to generate levels for Fantasy Raiders with various artificial neural network methods. Traditionally, level generation has meant encoding the game developers’ knowledge into algorithms, combined with some probabilistic techniques. In Fantasy Raiders, however, we had a machine learn from our data and generate levels. We believe we have found a clue toward solving the problem of level generation, not a general answer to it. To share what we learned with other game developers, we’re going to cover our research process from beginning to end.

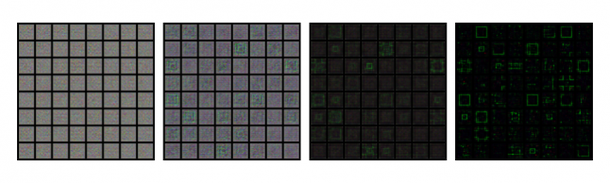

[PIC 1. Level generation using neural network]

As a first step, we needed to be confident that an artificial neural network could learn the levels of Fantasy Raiders. So we started simple: estimating the difficulty of the levels we already had.

Fantasy Raiders is an RPG that aims to keep gameplay engaging by giving each player new levels suited to their skills and tastes. The unit of a level is a self-contained room; The Binding of Isaac (2011) is a similar example. For convenience, we'll use the term “level” to refer to each room in Fantasy Raiders.

The game recommends the next level (room) at an appropriate difficulty based on the player character’s condition and the difficulty of the current level (room). The difficulty of each room is estimated by an algorithm that evaluates the NPCs and props in it.

[PIC 2. The difficulties of the levels recommended in the series of levels]

The easy part was factoring the number of NPCs and their HP (Health Points) into the estimate. Evaluating the interactable props in a room was a much harder problem. That’s why we wanted to find out whether an artificial neural network could replace such a heuristic algorithm.

For a machine to learn, it needs data. To classify levels by difficulty automatically, each data item must be a “level - difficulty” pair. However, labeling each level with an existing algorithm would produce nothing meaningful, because the network could then only learn to reproduce that algorithm.

At first, we considered building a gameplay bot to play each level and rate its difficulty from the result. However, it was nearly impossible to speed up the gameplay enough for reinforcement learning. So we dropped the plan and asked three game designers on our team to rate all the levels we had on a 5-point scale: 20 levels per day for two months, about 1,000 levels in total.
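A hand-written estimator of the kind described above might look like the following minimal sketch. The field names, the weights, and the `estimate_difficulty` function are hypothetical and are not the formula used in Fantasy Raiders. The point is that NPC counts and HP are easy to add up, while interactable props have no obvious weight to plug in.

```python
def estimate_difficulty(npcs, max_score=500.0):
    """Map a room's NPCs to a difficulty from 1 to 5 with a hand-tuned score.

    Each NPC is a dict with hypothetical "hp" and "attack" fields.
    """
    # Hypothetical score: total HP plus a weighted attack term.
    score = sum(n["hp"] + 10 * n["attack"] for n in npcs)
    # Clamp into [0, 1] and spread over the five difficulty classes.
    ratio = min(score / max_score, 1.0)
    return 1 + min(int(ratio * 5), 4)
```

Props such as fences or traps would each need their own hand-picked term in the score, which is where a heuristic like this becomes hard to maintain.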

[PIC 3. An example of Level Difficulty Estimation]

After the data labeling was done, we captured an image of every level in the level editor, whose screen shows an abstracted version of the level. We then trained a model on the resulting “level editor captured image - difficulty” pairs. We used level editor captures because, at low resolution, they are more distinctive than in-game screenshots, which makes them better input data in terms of both training speed and quality. We chose a CNN (Convolutional Neural Network), which is generally preferred over other neural networks for image classification. As a baseline for evaluating its performance, we used a formula written by one of our game designers.

Prediction based on the formula made by a game designer: 42% Accuracy

Prediction based on level editor captured image (CNN): 62% Accuracy

Even with a standard CNN model, the accuracy improved by 20 percentage points. We tried more complicated CNN models several times, but saw no meaningful improvement. The limited amount of data (about 1,000 levels in total) held the results back.

[PIC 4. Difficulty prediction using CNN]

Along the way, inspired by David Silver et al.’s "Mastering the game of Go with deep neural networks and tree search" (2016) and Hwanhee Kim’s "Do Neural Networks dream of procedural content generation?" from Nexon Developers Conference 2016, we felt the need to vary the input data to get better results. According to the paper, AlphaGo’s input in 2016 included, besides the arrangement of black and white stones, how many turns had passed and how many stones had been captured since the start, as well as processed contextual information about ladders. Similarly, in Hwanhee Kim’s research, NPC and terrain information is processed before being used for stage difficulty estimation.

The level editor captures are made up of graphic elements because they must be human-readable. Not all of the information about each NPC, object, or prop is represented in those graphics. So, with the help of our game designers, we reclassified the information that could affect level difficulty. Information values of the same kind are grouped, and each group is stored in one of the R, G, B, or A channels. The more information values we use, the more accuracy we can expect, but level generation also becomes harder. By trial and error, we settled on four major information values for difficulty estimation.

[PIC 5. Level editor captured image (Top, RGBA) and encoded image (Bottom, R,G,B,A). Each image has been calibrated in color with 128 grey to enhance the visibility of image on display.]
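The channel encoding described above can be sketched as follows. This is a minimal illustration, not our production encoder: the four attribute names in `CHANNELS` are hypothetical stand-ins (the article only says that four information groups were kept), and each value is assumed to already fit in the 0-255 range of an image channel.

```python
# Hypothetical attribute groups, one per image channel (R, G, B, A).
CHANNELS = ("kind", "faction", "strength", "interaction")

def encode_level(cells, width, height):
    """Return four height x width planes (R, G, B, A) of 0-255 values."""
    planes = [[[0] * width for _ in range(height)] for _ in CHANNELS]
    for cell in cells:
        x, y = cell["x"], cell["y"]
        for c, name in enumerate(CHANNELS):
            planes[c][y][x] = cell.get(name, 0)
    return planes

def decode_level(planes):
    """Inverse of encode_level: list every tile with a non-zero value."""
    height, width = len(planes[0]), len(planes[0][0])
    cells = []
    for y in range(height):
        for x in range(width):
            values = [planes[c][y][x] for c in range(len(CHANNELS))]
            if any(values):
                cell = {"x": x, "y": y}
                cell.update(zip(CHANNELS, values))
                cells.append(cell)
    return cells
```

Because one pixel stands for one tile, an image like this is far smaller than a screen capture, and a decoder of this shape is what lets a generated image be turned back into a playable level.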

Prediction with encoded images (Logistic Regression - baseline): 61% Accuracy

Prediction with encoded images (CNN): 71% Accuracy

With the new input data, the accuracy improved by 10 percentage points using the same model structure. Moreover, each training image is 64 times smaller than before, so training is faster.

[PIC 6. Encoded image of the in-game levels in Fantasy Raiders]

Through difficulty estimation, we became confident that a neural network can learn the features of our levels. Building on what the machine had learned so far, we moved on to the next stage: level generation.

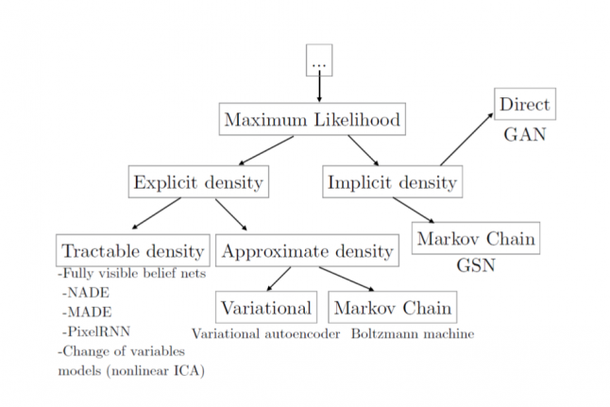

Research on image, voice, and text generation by machine learning has been conducted extensively. Because our model took captured images as input, we started with GAN (Generative Adversarial Networks), a generative model that has been widely adopted and performs well in many cases.

[PIC 7. Taxonomy of Generative Models - Ian Goodfellow (2016), Figure 9. “NIPS 2016 Tutorial: Generative Adversarial Networks”]

Since GAN was introduced in 2014 and combined with CNNs as DCGAN in late 2015, many GAN variants have appeared, and more than ten of them can be used for image generation. (If you’re interested in the variety of things GANs can generate, check out the-gan-zoo.)

[PIC 8. Anime characters generated by GAN - Yanghua JIN, “Various GANs with Chainer”]

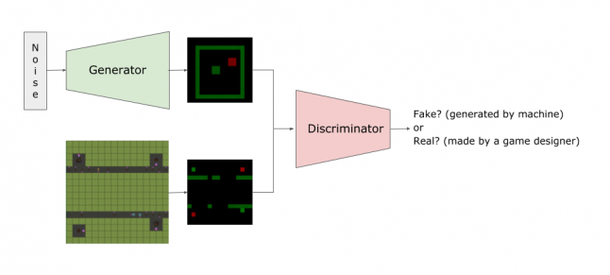

For level generation training, we used encoded images, as we did for difficulty estimation. After training, the generator creates an image, and a decoder turns that image into a level.

[PIC 9. GAN training process for level generation - The Generator and Discriminator are neural networks. The Generator tries to convince the Discriminator that a machine-generated level was made by a game designer, and the Discriminator tries to tell levels made by a game designer apart from machine-generated ones. As this process repeats, the Generator produces levels that look more and more like those made by a game designer.]

[PIC 10. Generating levels after the training is completed.]
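The tug-of-war between the two networks can be written down as two loss functions. The sketch below uses the standard binary cross-entropy form of the GAN objective with the commonly used non-saturating generator loss. It takes the Discriminator's output probabilities as plain lists rather than running real networks, and the function names are ours for illustration. Variants such as DCGAN or DRAGAN change the architecture or add further terms that are not shown here.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Cross-entropy: designer levels should score 1, generated ones 0.

    d_real: Discriminator outputs for designer-made levels.
    d_fake: Discriminator outputs for generated levels.
    """
    real_term = sum(-math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(-math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating loss: low when generated levels are taken for real."""
    return sum(-math.log(p) for p in d_fake) / len(d_fake)
```

Training alternates between lowering `discriminator_loss` by updating the Discriminator and lowering `generator_loss` by updating the Generator.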

What concerned us the most was the limited amount of data: we had barely 1,000 levels in total, whereas even the most basic dataset, MNIST, includes more than 60,000 samples. The first trial with DCGAN failed. Most trials with other recent GAN models that had shown astonishing performance in image generation also failed to generate levels; even when training succeeded, the model generated only a very limited variety of levels.

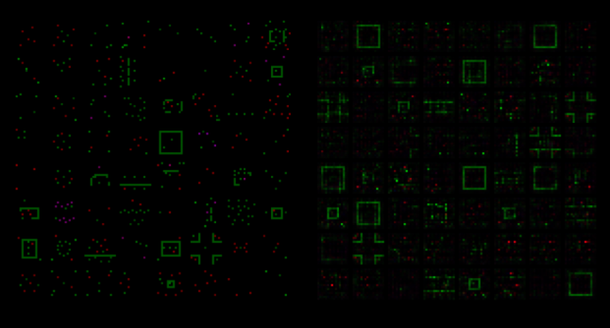

[PIC 11. Example of images from a failed training run - 8 x 8 levels sampled during training. After 5,000 iterations with a batch size of 16 (Left). After 50,000 iterations with a batch size of 16 (Right)]

We almost gave up at that point, thinking the failures were due to the small dataset. However, training succeeded with DRAGAN, a variant designed to make GAN training more stable.

[PIC 12. Level images generated with DRAGAN trained on 1,000 levels. Only the most basic levels were generated at that time.]

Even so, DRAGAN couldn’t generate more complex levels because of the small dataset.

Although 1,000 levels is a small dataset for machine learning, it took several game designers two years to make them. We couldn’t quickly add more by hand. So we used a method that is common in machine learning research, data augmentation: we grew the dataset from 1,000 to 6,000 levels by rotating each level 90, 180, and 270 degrees about the origin and by replacing the types of NPCs, objects, or props.

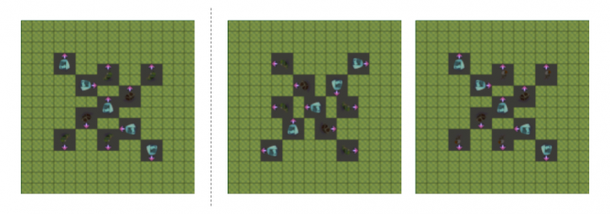

[PIC 13. Example of Data Augmentation - Original version (Left), version rotated 90 degrees clockwise (Middle), version with NPCs replaced (Right)]

After thousands of iterations on the 6,000 levels, the model finally started to generate more complex levels.

[PIC 14. The more the training was repeated, the more complex the generated levels became, approaching the ones made by game designers]
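The two augmentation operations can be sketched on a level stored as a 2D grid of tile identifiers. This is an illustration under that assumption; `augment` and the replacement `mapping` are hypothetical, and the exact mix of rotations and replacements behind our sixfold increase is not reproduced here.

```python
def rotate_cw(grid):
    """Rotate a 2D grid (a list of rows) 90 degrees clockwise."""
    return [list(row) for row in zip(*grid[::-1])]

def replace_tiles(grid, mapping):
    """Swap tile types, for example one NPC type for another."""
    return [[mapping.get(tile, tile) for tile in row] for row in grid]

def augment(grid, mapping):
    """Return the level, its three rotations, and a swapped copy of each."""
    rotations = [grid]
    for _ in range(3):
        rotations.append(rotate_cw(rotations[-1]))
    return rotations + [replace_tiles(g, mapping) for g in rotations]
```

With one replacement mapping this yields up to eight variants per level. Rotation is only safe when the rules of the game do not depend on orientation, and a replacement should only swap types that keep the level playable.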

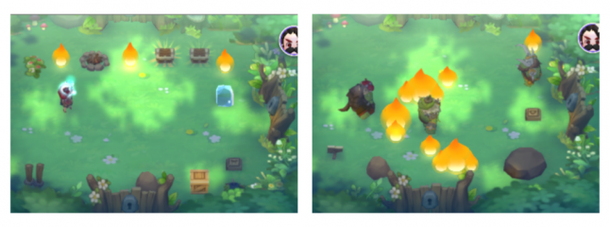

[PIC 15. Level made by a game designer (Left). Level generated by GAN (Right)]

From the early development of Fantasy Raiders, we had been researching generative grammar, hoping that automated level generation would someday replace manual level making. So, once we confirmed that GAN could generate more complex levels, we adopted CGAN, which generates data according to given conditions, to generate levels by difficulty.

As mentioned above, we had only 1,000 levels whose difficulty had been rated manually by our game designers. Because that dataset was too small to produce more complex levels, we multiplied the data with data augmentation. However, the 5,000 additional levels were too many for the designers to rate by hand.

So we adopted a semi-supervised learning method for the case where only part of the data is labeled: the Discriminator judges whether each level was made by game designers or by the machine for all levels, but it does not judge the difficulty of the augmented levels. For more information about this method, see "Improved Techniques for Training GANs".
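One way to express this partial labeling is a loss with two terms, where the difficulty term is simply skipped for unlabeled levels. The sketch below is a simplified illustration with a hypothetical sample layout, working on precomputed probabilities instead of a real network; it is not the exact formulation of the cited paper.

```python
import math

def semi_supervised_loss(samples):
    """Average Discriminator loss over a batch of samples.

    Each sample is a dict with (hypothetical) keys:
      p_real: predicted probability that the level is designer-made
      is_real: True for designer-made or augmented levels, False for generated
      class_probs: predicted probabilities for difficulties 1 to 5
      difficulty: designer label from 1 to 5, or None when unlabeled
    """
    total = 0.0
    for s in samples:
        # Real/fake term: applied to every sample.
        p_correct = s["p_real"] if s["is_real"] else 1.0 - s["p_real"]
        total += -math.log(p_correct)
        # Difficulty term: only where a designer label exists.
        if s["difficulty"] is not None:
            total += -math.log(s["class_probs"][s["difficulty"] - 1])
    return total / len(samples)
```

The augmented levels therefore still teach the Discriminator what a plausible level looks like, even though nobody rated their difficulty.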

[PIC 16. Levels generated by CGAN with the same seed value but varied difficulties.]

Level generation with GAN worked well for learning the formal characteristics of levels. However, it did not learn the contextual information in them.

[PIC 17. A generative model using GAN could learn the formal characteristics of levels, but not their contextual information - A fence made by a game designer (Left). A fence generated by the GAN-based model (Right)]

There may be other reasons, but the most important one was the nature of our input data. Ordinary images are made of values in which a small difference doesn’t change much. Our level editor captures, however, are closer to discrete data, where a small difference in value can make a huge difference. In that sense, our input data (the level captures) was more like a sentence than an image.

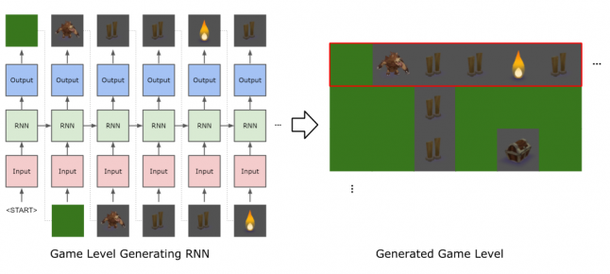

So we turned to RNN, a generative model that is often used for sentence generation, and chose LSTM, one of its variants. (We've recently heard of research that uses GAN to produce discrete values for sentence generation, but we haven’t tried it yet.)

[PIC 18. Level generation using RNN]

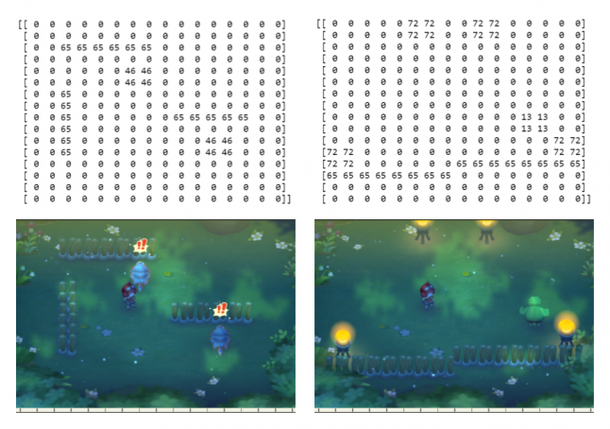

For LSTM, every level must be translated into a string. Because we had already encoded all the levels, the translation was straightforward: we split each encoded image and merged the pieces into a one-dimensional string.

[PIC 19. Translating level to strings for RNN training]
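A minimal sketch of this flattening step, assuming a single-channel grid of tile indices and a hypothetical one-character-per-tile alphabet (`TILE_CHARS`); our actual encoding covers several channels and is not reproduced here.

```python
# Hypothetical alphabet: empty, fence, NPC, prop.
TILE_CHARS = ".#NP"

def level_to_string(grid):
    """Flatten a 2D grid of tile indices row by row, ending each row with '/'."""
    return "".join("".join(TILE_CHARS[t] for t in row) + "/" for row in grid)

def string_to_level(text):
    """Inverse of level_to_string: rebuild the 2D grid from the string."""
    rows = [r for r in text.split("/") if r]
    return [[TILE_CHARS.index(ch) for ch in row] for row in rows]
```

In a row-major string like this, two tiles that are vertical neighbours in the room sit a full row apart in the text, so a sequence model has to remember the row width to keep vertical structures aligned.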

To generate levels by difficulty, we added difficulty information to each level string. After enough training iterations, the LSTM started to generate levels that reflected not only the formal characteristics but also the contextual information.

[PIC 20. Levels generated using RNN - Generated strings (Top). Levels decoded from strings (Bottom)]
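One simple way to attach difficulty to a level string is a prefix token, so that at sampling time the model can be primed with the desired difficulty and asked to continue. The token format `D3|` and the helper names below are assumptions for illustration; the article does not specify how the difficulty was attached.

```python
def add_difficulty(level_text, difficulty):
    """Prefix a level string with a difficulty token such as 'D3|'."""
    if not 1 <= difficulty <= 5:
        raise ValueError("difficulty must be between 1 and 5")
    return f"D{difficulty}|{level_text}"

def split_difficulty(text):
    """Recover (difficulty, level_text) from a prefixed string."""
    token, level_text = text.split("|", 1)
    return int(token[1:]), level_text

def sampling_seed(difficulty):
    """Text to feed a trained model so that it continues with a level."""
    return add_difficulty("", difficulty)
```

During training every string carries its label in the prefix; during generation only the prefix is supplied and the rest is sampled character by character.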

The RNN model generated levels more similar to those made by game designers. However, it struggled to generate levels with a closed fence surrounding the center or a corner, which seems to require a better understanding of space.

At first we suspected the hyperparameters and tried varying them, but that didn’t help at all. The RNN couldn’t generate levels that showed an understanding of two-dimensional space.

While looking for a method that kept the RNN’s strength in contextual information and added a better understanding of space, we found PixelRNN, an image generation method based on RNN. (Later, we moved to PixelCNN, which trains faster.)

PixelRNN takes images, not sentences, as input, but we had already built the whole process for producing encoded images for GAN training.

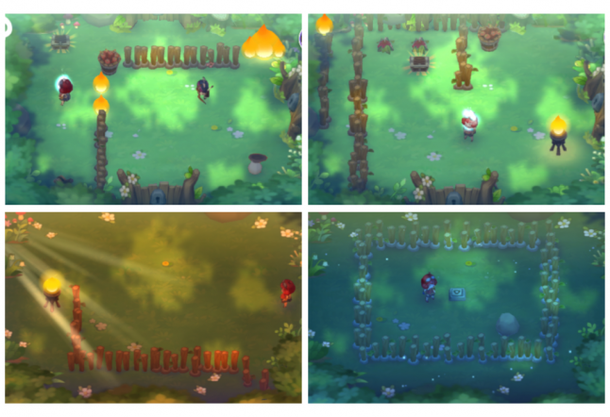

Finally, PixelRNN generated new levels with a two-dimensional understanding of space as well as the other contextual information. The generated levels contained square and crossing fences, which are iconic props in Fantasy Raiders, and they were hardly distinguishable from the ones made by game designers.

[PIC 21. Levels generated by PixelRNN placing fences in various shapes and arrangements.]
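The key difference from a one-dimensional string model is that each tile is generated in raster order while looking at tiles already placed both to the left and above. The toy sampler below illustrates only that ordering: `toy_conditional` is a hand-written stand-in for the trained network, and it looks at just two neighbours, whereas a real PixelRNN or PixelCNN conditions on all previously generated pixels.

```python
import random

def toy_conditional(left, up):
    """Stand-in for the network: probability that this tile is a fence."""
    # A fence is more likely next to another fence, in either direction.
    return 0.05 + 0.4 * left + 0.4 * up

def sample_level(width, height, cond=toy_conditional, seed=0):
    """Fill a grid in raster order, conditioning on tiles already placed."""
    rng = random.Random(seed)
    grid = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            left = grid[y][x - 1] if x > 0 else 0
            up = grid[y - 1][x] if y > 0 else 0
            grid[y][x] = 1 if rng.random() < cond(left, up) else 0
    return grid
```

Because the tile directly above is an explicit input, vertical runs of fence are as easy to continue as horizontal ones, which is what closed or crossing fences require.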

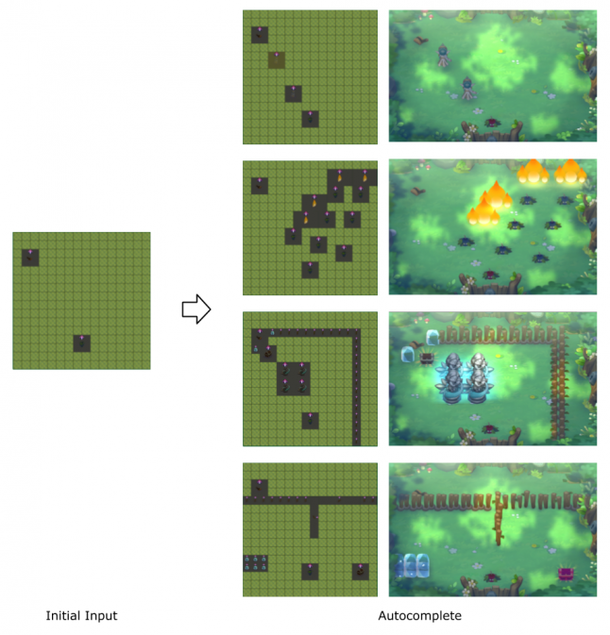

Since we started automated level generation, we've been collecting feedback from game designers on our newly trained neural network models every week. Interestingly, some designers are inspired by the machine-generated levels and try to create new levels from them.

Based on this observation, we thought it would be a good idea to make levels together: human plus machine. Inspired by the Sketch-RNN research, we added a level editor mode that helps a game designer create a new level with the machine learning model.

[PIC 22. If a game designer makes a choice, the machine recommends an NPC, object, or prop for the game designer.]

[PIC 23. If a game designer makes some choices, the machine decides the rest of them for the game designer.]

Fantasy Raiders is being developed in Unity, and so is the level editor. For this reason, a level is serialized to JSON and sent to the server; the server modifies it and sends it back to Unity as JSON.

(Unity provides an SDK, Unity Machine Learning Agents, that lets developers use reinforcement learning in Unity. However, its Python API is limited. We hope Unity extends the SDK's Python API so that it can be used for machine learning methods other than reinforcement learning.)

Now, in the later development phase of Fantasy Raiders, level generation is no longer just a technical issue for engineers like us; it also involves the game designers, who already participate in improving the training results. In getting through all the obstacles, including ones we didn’t mention above, our understanding of our own game helped as much as technical knowledge.

As we said earlier, our trials are not a general answer to level generation with neural networks. We're still not sure that generation with neural networks will replace procedural content generation any time soon. However, it’s hard to deny that it brings a new perspective and inspiration to content generation techniques.

Wish us luck, and we hope to meet you again with new findings!
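The server side of that round trip can be sketched as a function that takes the level as JSON, fills in what the designer left undecided, and returns JSON. The schema (a `cells` grid in which `null` marks an undecided cell) and the `suggest` callback standing in for the trained model are assumptions for illustration, not our actual protocol.

```python
import json

def complete_level(request_json, suggest):
    """Fill every undecided cell of a level received as JSON.

    suggest(cells, x, y) is a stand-in for the trained model and returns
    the tile to place at column x, row y.
    """
    level = json.loads(request_json)
    cells = level["cells"]
    for y, row in enumerate(cells):
        for x, tile in enumerate(row):
            if tile is None:  # JSON null marks a cell the designer left open
                row[x] = suggest(cells, x, y)
    return json.dumps(level)
```

Keeping the exchange in plain JSON means the Unity editor only needs to serialize the level and apply the response, whichever machine learning framework runs on the server.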

[PIC 24. The first level generated by machine learning in Fantasy Raiders.]

Generative Models: A brief introduction to generative models.

Generative Adversarial Nets in TensorFlow: A short and easy introduction to how GAN works.

The Unreasonable Effectiveness of Recurrent Neural Networks: A concise introduction to how RNN works.

RNN models for image generation: Introducing image generation using RNN.

Teaching Machines to Draw: The classic example of applying neural networks to creative fields. It shows how we can approach neural networks not just as a tool for automation but as a source of inspiration.

AlphaGo: A source of inspiration for games and neural networks.

Artificial Intelligence and Games: A different perspective on game AI. For content generation in particular, its reference Procedural Content Generation via Machine Learning (PCGML) covers many studies on generating game content with machine learning.
