How do you perfect your custom-created game tools? Sega and LucasArts veteran and Robotic Arm Software founder Goodman discusses tips and tactics for methodology when you roll your own tools.

In game development, it is often some of the most technical people (programmers) making tools for some of the least technical (everyone else). It's no wonder that many of those "least technical" struggle with the tools that are handed to them.

The tools themselves are designed by the people who understand the underlying systems, but not necessarily those who will be using them.

Usability determines how easy software is to use, how quickly the desired results can be achieved by its users and how error-free those results are. Techniques to test and improve usability have been successfully used in other types of software development, and are slowly making their way into ours.

There are probably tools in your pipeline that could benefit from usability techniques, but as a developer, you'll want to know which tools would benefit the most before investing in them.

In this article, I'll discuss how to find the bottlenecks holding up your production, measure the usability of your tools using proven techniques and streamline your entire development process.

The first step to solve any problem is understanding what the problem is and why it's occurring. We need to know where in the development pipeline our process is being stalled. To do this, we must map the problem-space.

There are many ways to view the development pipeline. Some may view it as a checklist of assets and responsibilities, but this doesn't represent the dependencies between the developers.

Those dependencies create an environment where developers waste time waiting for someone else to finish a task, and keeping everyone busy requires managerial effort.

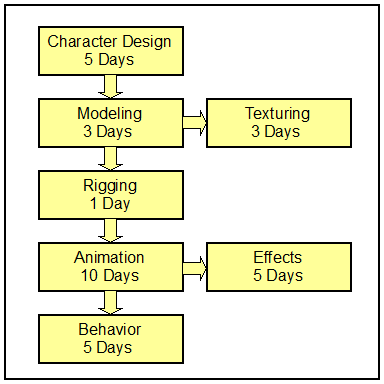

To understand these dependencies, it is more convenient to look at the pipeline as a graph showing workflow between various resources and what each produces. Consider the example workflow for character creation in the figure above.

In this illustration, the arrows point to the dependent task -- the task that depends on the one the arrow is coming from. So, modeling depends on character design, texturing and rigging depend on modeling, and so on.

This represents a very small, incomplete piece of a typical game development pipeline. Your actual pipeline will be much more complex, including more areas of development (level design, UI, gameplay programming, and so on), resources performing multiple processes, and multiple dependencies for a single task. The stages that hold up the development pipeline should be looked at for optimization through tool improvement, as well as other means.

Assuming that each phase of character development needs to be complete before continuing to the next, the first character could be fully implemented in 24 days. We get this by adding up the number of days in the longest pathway (the primary development pipeline), from character design to completion.

Texturing and effects are left out of the calculation, since their pathways diverge from the main one, and the time for completing those pathways is less than or equal to that of the primary one.

Character Design + Modeling + Rigging + Animation + Behavior = 24 Days
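The same sum can be found mechanically by treating the pipeline as a dependency graph and looking for its longest path. The sketch below is only an illustration: the individual stage durations and the placement of the effects stage are assumptions chosen to match the 24-day total and the 10-day longest stage, and the names `DURATION`, `DEPENDS_ON`, `finish_day` and `critical_path` are hypothetical.

```python
from functools import lru_cache

# Assumed durations in days. Only the 24-day total and the 10-day
# longest stage come from the article; the split is made up.
DURATION = {
    "character design": 3,
    "modeling": 5,
    "texturing": 4,
    "rigging": 2,
    "animation": 10,
    "effects": 6,
    "behavior": 4,
}

# Each stage lists the stages it depends on (effects placement is assumed).
DEPENDS_ON = {
    "character design": [],
    "modeling": ["character design"],
    "texturing": ["modeling"],
    "rigging": ["modeling"],
    "animation": ["rigging"],
    "effects": ["rigging"],
    "behavior": ["animation"],
}


@lru_cache(maxsize=None)
def finish_day(stage):
    """Earliest day a stage can finish: its duration after its slowest dependency."""
    start = max((finish_day(dep) for dep in DEPENDS_ON[stage]), default=0)
    return start + DURATION[stage]


def critical_path():
    """Walk back from the last stage to finish, always following the slowest dependency."""
    stage = max(DURATION, key=finish_day)
    path = [stage]
    while DEPENDS_ON[stage]:
        stage = max(DEPENDS_ON[stage], key=finish_day)
        path.append(stage)
    return list(reversed(path))


print(critical_path(), finish_day("behavior"))
```

With these assumed numbers the longest path runs from character design through modeling, rigging and animation to behavior, and texturing and effects finish earlier on their side branches.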

That doesn't mean that it takes 24 calendar days to complete every character. This is an assembly line; four other characters are being worked on while the current one is being finished -- one at each stage in the pipeline. So how many calendar days does it take to complete a new character after the first? Exactly the time it takes for the longest stage -- 10 days.

Ten characters completed in just under six calendar months may sound great. Of course, if just the animation time were cut in half, and no other stage took longer than five days, we could double the character output by creating a new character every five days.
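The assembly-line arithmetic can be sketched in a few lines: the first character takes the sum of all stages, and every character after that arrives one bottleneck-length later. The per-stage durations here are assumptions (only the 24-day total and the 10-day animation stage come from the example), and `days_to_complete` is a hypothetical helper name.

```python
def days_to_complete(num_characters, stage_days):
    """Days to finish a run of identical characters on a full assembly line."""
    if num_characters <= 0:
        return 0
    first_character = sum(stage_days)   # the whole primary pipeline
    bottleneck = max(stage_days)        # the slowest stage paces the rest
    return first_character + (num_characters - 1) * bottleneck


# Assumed split: design, modeling, rigging, animation, behavior.
current = [3, 5, 2, 10, 4]
faster_animation = [3, 5, 2, 5, 4]

print(days_to_complete(10, current))           # 24 + 9 * 10
print(days_to_complete(10, faster_animation))  # 19 + 9 * 5
```

Note that halving animation only doubles the output rate here because no other assumed stage is longer than five days; otherwise the bottleneck would simply move to the next-slowest stage.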

In any assembly line, efficiency is achieved by breaking down tasks into component parts so that every stage of manufacturing takes an equal amount of time. That's also the most efficient model for developing game assets.

Unfortunately, each stage in the pipeline requires unique skills, and it is often difficult to predict the exact timing for each stage. So, work backs up behind one developer, while another sits idly by waiting for the first one to finish.

There are many tactics for getting around this issue, all of which are employed by game companies to one extent or another. Managerial overhead and individual efficiency are often traded for development pipeline bandwidth.

Still, if efficiency can be increased in the areas of the pipeline that cause slowdown, pipeline bandwidth will also increase, with little overhead. One method for achieving this efficiency is through tool improvements.

Once you understand where the bottlenecks in your pipeline are occurring, it's time to decide where improving the tools used in that process can help, and what improvements should be made.

There are a variety of methods for testing usability, including surveys, user interviews, inspection techniques, thinking aloud protocol, and eye tracking.

Some techniques are difficult to implement, or give subjective results that must be interpreted by an expert, while others give a more formal score that just about anyone can use to gauge the usability of a product.

You can use some of the simpler techniques before investing in the more difficult ones. This will give you a really good idea where to concentrate your efforts.

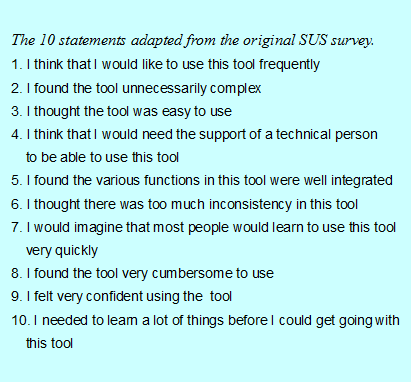

The System Usability Scale (SUS) was developed at Digital Equipment Corporation (DEC) to measure usability. It is probably one of the simplest methods to implement because it is survey-based.

Participants are asked to rate the ten statements shown in the figure above, on a scale from "strongly disagree" to "strongly agree". Each response maps to a value from 0 to 4, where even-numbered items have the score values reversed.

An overall score ranging from 0-100 is derived from the individual values by adding them together and multiplying by 2.5.
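As a rough sketch of that calculation, assume each answer is recorded as a rating from 1 ("strongly disagree") to 5 ("strongly agree"), in questionnaire order. The function name `sus_score` and the input format are my own choices for illustration.

```python
def sus_score(ratings):
    """Score one participant's ten SUS ratings (each 1 to 5) on a 0-100 scale."""
    if len(ratings) != 10:
        raise ValueError("SUS needs exactly ten ratings")
    if any(r not in (1, 2, 3, 4, 5) for r in ratings):
        raise ValueError("each rating must be an integer from 1 to 5")

    total = 0
    for item, rating in enumerate(ratings, start=1):
        if item % 2 == 1:
            total += rating - 1   # odd items: agreement is good
        else:
            total += 5 - rating   # even items: values are reversed
    return total * 2.5


print(sus_score([4, 2, 4, 1, 5, 2, 4, 2, 4, 3]))
```

Averaging the scores of everyone who took the survey gives a single number for the tool.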

Software in the 70-80 range has average usability. If your software ranks higher than that, you may want to look elsewhere for savings. If it scores less than that, there's a good chance you can get significant savings in your development time by investing in usability.

The Single Usability Metric (SUM) is a bit more complex to implement than SUS, since it requires that a user complete three to four common tasks with the software, while a moderator scores how each task is completed. The final score is a combination of task completion rates, task time, error counts and user satisfaction.

Task completion is the ratio of successfully completed tasks to attempts. Error rate is measured by breaking down individual tasks into subtasks, called "error opportunities," and counting how many of those steps are performed without error in each task.

Satisfaction rate is measured with a simple three-question survey given after each task is completed that asks the user to rate how easy the task was, how long the task took, and how satisfied he felt using the tool.

Task time is just the time it takes to complete each task compared to an ideal time, which can be derived using the task times of the users who reported the highest satisfaction.
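To make the four measures concrete, here is a deliberately simplified sketch. The published method standardizes each measure before combining them; this version skips that step and just averages four plain ratios, so treat it as an illustration of the inputs rather than the real scoring. `TaskResult`, its fields, the 1-to-5 satisfaction scale and `task_usability` are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class TaskResult:
    attempts: int             # how many users tried the task
    completions: int          # how many finished it successfully
    error_opportunities: int  # subtasks per attempt where an error can occur
    errors: int               # errors observed across all attempts
    satisfaction: float       # mean survey rating, assumed 1 (worst) to 5 (best)
    mean_time: float          # average seconds taken
    ideal_time: float         # seconds taken by the most satisfied users


def task_usability(t):
    """Average four 0-to-1 ratios into one rough task-level score."""
    completion = t.completions / t.attempts
    error_free = 1 - t.errors / (t.error_opportunities * t.attempts)
    satisfaction = (t.satisfaction - 1) / 4
    time = min(1.0, t.ideal_time / t.mean_time)
    return (completion + error_free + satisfaction + time) / 4


export_level = TaskResult(attempts=10, completions=8, error_opportunities=5,
                          errors=10, satisfaction=4.0,
                          mean_time=120.0, ideal_time=90.0)
print(round(task_usability(export_level), 3))
```

Scoring each task separately like this is what lets you compare features against each other.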

Because SUM measures usability at the task level, you can use this method to determine which features should be looked at for improvement. Combining this info with thinking aloud protocol -- sitting with someone using the tool while they describe what they are thinking -- will give more detail on how to fix issues.

Of course, overall usability should be the goal, and a redesign of the interface based on users' goals will often give much better results than targeting specific functionality.

The simplest tools give the user the ability to edit data. Your favorite text editor gives you at least this much functionality. Not coincidentally, it also has the widest scope, since any type of data that can be represented as text can be edited.

These tools are very unforgiving, since there is no protection of the user from himself. Iteration is the key for their users, since it is easy to make mistakes, and when errors occur at runtime, the bad data may be extremely difficult to find. The users may spend hours looking for the number with the wrong decimal place or the text with the incorrect capitalization.

The first and last stop for many game development tools is one that adds a layer of protection from user errors by limiting the possible input to valid values. This is a major step forward, since developers are able to create game assets that are less prone to error. Designers and artists can begin to feel safe in the knowledge that "at least it will run".
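A minimal sketch of that protective layer might look like the following. The data type, the allowed values and the limits are all invented for illustration; a real tool would take its rules from the game's own data definitions.

```python
from dataclasses import dataclass

# Hypothetical rules for one kind of game data.
VALID_ENEMY_TYPES = ("grunt", "sniper", "boss")
MAX_COUNT = 20


@dataclass(frozen=True)
class SpawnPoint:
    enemy_type: str
    count: int
    delay_seconds: float


def validate_spawn(enemy_type, count, delay_seconds):
    """Return (SpawnPoint or None, list of problems found in the input)."""
    problems = []
    if enemy_type not in VALID_ENEMY_TYPES:
        problems.append(f"unknown enemy type: {enemy_type!r}")
    if not 1 <= count <= MAX_COUNT:
        problems.append(f"count must be between 1 and {MAX_COUNT}")
    if delay_seconds < 0:
        problems.append("delay cannot be negative")
    if problems:
        return None, problems
    return SpawnPoint(enemy_type, count, delay_seconds), problems


print(validate_spawn("Grunt", 3, 1.5))
```

The miscapitalized "Grunt" is rejected in the tool, with a message, instead of surfacing hours later as a runtime failure.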

Still, there is little feedback on what effect the data will have on the game, and therefore there is still a great deal of iteration time necessary to test changes, tweak values, and so on. This is due to the fact that these tools are still based on the implementation model of design.

That is, the data that is edited directly by the user is equivalent to the data used by the underlying game system, and is therefore more complex than the user can fully understand.

Iteration is still necessary, even though the tool has spared the user from typographical mistakes that would have otherwise caused serious errors (crashing). Typos have been eliminated, but errors of logic are still prevalent.

Data abstraction begins to take place in these tools, albeit on a small scale. Anytime a text box is replaced by a drop down list of enumerated values, the internal representation of the data (a number) is being replaced with something better understood by the user.
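As a small sketch of the drop-down case, the tool can show names while the game keeps its numbers. The `Difficulty` values and both helper functions are hypothetical.

```python
from enum import IntEnum


class Difficulty(IntEnum):
    # The numbers are what the game stores; the names are what the user sees.
    EASY = 0
    NORMAL = 1
    HARD = 2


def dropdown_labels(enum_cls):
    """Human-readable labels to fill a drop-down list."""
    return [member.name.replace("_", " ").title() for member in enum_cls]


def value_for_label(enum_cls, label):
    """Translate the chosen label back into the number the game expects."""
    return int(enum_cls[label.upper().replace(" ", "_")])


print(dropdown_labels(Difficulty))
print(value_for_label(Difficulty, "Hard"))
```

The user never types or sees the 2; there is simply no way to enter a difficulty the game does not know about.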

Color pickers, graphs, calendars, progress bars, and just about any graphical representation of data imaginable all serve this purpose. Still, the individual bits of data do not represent a cohesive whole.

To achieve that, we must absorb the individual data points into a larger, more conceptual picture through a kind of abstraction. We hide the actual data behind a facade that represents the objects that the data describes.

Consider a 3D model. The actual data is a list of vertex positions, weighting, UV mapping coordinates, RGBA values, and so on. Editing each of these values directly would offer extreme precision, but would take much longer than using a modeling program to generate the same type of data with much better results.

The modeling program hides the complexity of the data by showing what the data represents instead of the data itself, giving the user the ability to edit the model data in a way that makes a lot more sense.
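In code terms, this kind of facade can be sketched as a class that keeps the raw values private and exposes operations on the object they describe. The `Model` class and its methods are invented for illustration and cover only vertex positions.

```python
class Model:
    """Hides a raw vertex list behind operations on the object as a whole."""

    def __init__(self, vertices):
        self._vertices = [tuple(v) for v in vertices]

    def center(self):
        """Average position of all vertices."""
        n = len(self._vertices)
        return tuple(sum(axis) / n for axis in zip(*self._vertices))

    def move(self, dx, dy, dz):
        """Shift the whole model; the user never edits a single coordinate."""
        self._vertices = [(x + dx, y + dy, z + dz) for x, y, z in self._vertices]

    def scale(self, factor):
        """Grow or shrink the model around its own center."""
        if factor <= 0:
            raise ValueError("scale factor must be positive")
        cx, cy, cz = self.center()
        self._vertices = [
            (cx + (x - cx) * factor, cy + (y - cy) * factor, cz + (z - cz) * factor)
            for x, y, z in self._vertices
        ]

    def export(self):
        """The raw data the game actually consumes."""
        return list(self._vertices)


model = Model([(0, 0, 0), (2, 0, 0)])
model.move(1, 0, 0)
model.scale(2)
print(model.export())
```

The user thinks in terms of "move it" and "make it bigger", and the per-vertex numbers fall out as a by-product.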

When tools abstract the actual data appropriately, iteration times are significantly reduced because both typos and logic errors are all but eliminated. The abstract view of the data gives the user the ability to understand its purpose. The final data still needs to be tested in game, obviously, but the data created from the tool should be sound.

The hard part, of course, is finding the best representation of the data. This is achieved by understanding the users of the tool. The closer that representation is to the way the user understands it, the easier the tool will be to use.

Sometimes, we talk about users in a way that suits our own desires. "I think the player will really like this," is often heard as an excuse to include some feature in a game.

The flip side is just as easily said, and no argument can be won when two developers disagree on this point.

That's because the user is usually not very well defined. Everyone has their own idea who the target audience is, even with tools. That's where user models come in.

User models are concrete, fictional end users that have their own goals, desires, hobbies, families and even names. They aren't necessarily the "average user," but they are representative of a typical user.

A single piece of software may have several user models that need to use the software, but the key is to design an interface so that it satisfies one of those users very well. That user is the primary user model, and the entire team needs to be on board with who the primary user is to avoid arguments like the one above.

At one company I worked for, the lead designer had come up with some ideas for the level design tool. He had done this on his own based on what he felt the goals of the team were.

The tools team, including myself, dismissed his design ideas since we supposedly knew better, having a greater understanding of what the underlying data was that would be edited in the tool. I wish we had understood the user model concept and used it at that time to start a conversation over what the design should be.

Here was a guy with a stake in the success of the tool and a great deal of knowledge of those who would use it, but we didn't have the tools to really communicate effectively. The tools team should have worked closely with him, as a representative of the end users, to create a primary user model, and decide on the real goals of the design team.

As it was, the level editor was never what it should have been, and the level designers spent much of their time struggling with it.

So, how do you come up with user models? The best method is to study the actual users and create a sort of composite of their individual traits. But, where in the wide range of attributes of your real life users should your user model fall?

You should consider that your users will get better with the software as time goes on, but the vast majority will never achieve "expert" status. Your tool needs to address the needs of intermediate users best, so your user model should represent someone at that skill level.

Come up with a name for your user model. A name gives you the ability to discuss the user model with your group effectively.

As an example, let's say we're creating a new level design tool. Most game developers, including designers, are male, so our user model should be a guy. Let's call him "Brad," since it sounds like a good name for a level designer to me.

How old is Brad? Well, in game development, design is a pretty young field, compared to art and programming, so Brad should be pretty young. Let's say the average level designer on our team is 27, so let's go with that.

Where did Brad go to school? What did he study? He probably went to a liberal arts school. He could have a degree in Computer Science, but that would make Brad one of the more technical designers, and we want our tool to target those with less technical experience, so let's say he has a degree in English, instead.

Is Brad married? Probably not, but he might have a girlfriend. How does all of this affect how the tool should be developed? It doesn't directly affect the tool, but it certainly has an effect on Brad's personal goals and that, in turn, affects his goals at work. It doesn't take much effort to fully flesh out the user model, and the effort is rewarded by a deeper understanding of the real-life users.

What are Brad's goals? Why did he get into game development? What does he want to achieve in his current position? Once we know the user model's background, we can start to understand his motivation.

Understanding that can help us understand how to create tools that satisfy his goals. Those goals may include making a really challenging game, not putting in too much overtime, getting a raise, or getting promoted, to name a few.

These are high-level goals that ultimately affect tool-specific ones. Wanting to create a complex puzzle sequence in a level may stem from the user's desire to make a challenging game.

A user that doesn't want to work a lot of overtime may want the tool to be fast and easy to use as a result. Someone who wants a raise or promotion will want a tool that showcases their work and gives them a chance to stand out. Ultimately, understanding what your users want will give you the opportunity to satisfy their needs, making them happy, hardworking employees.

During development of the tool, feedback from individual users can be invaluable, but instead of directly injecting the response to that feedback into the software, check the user's ideas against the user model.

Each individual user will have his or her own unique way of working, but what you're looking for is a method that everyone can use well. Catering features to individual requests may leave you with a hodge-podge of interface components that don't fit into the overall picture.

Target specific users based on user models and focus on their goals. These users should be given exactly what they need. Less means they can't do the job; more means the job becomes overly complex. Every design decision should go through the goal filter: "Does this meet the goals of our user?" Only features that meet this requirement should be included in the design.

When goals supersede features, it is possible to find better ways to achieve success through fewer tasks for the end user. Understanding the goals of the user model also makes it possible to find a way to represent the data in a way that suits that person best.

How you respond to these goals to deliver a better user experience in your tools will be different for each tool. Once you do, your users will achieve much better results in a shorter time frame.

You can find the bottlenecks in your development pipeline and address them using the techniques I've discussed here. There are many others available, and you should try to find something that works well for your team.

If you determine that your tools are not as usable as they should be, you should consider redesigning them using goal-oriented methods.

Understanding your users will allow you to deliver software that meets their needs. Once they have the best tools in hand, they're on their way to higher efficiency and output, which ultimately leads to better games.

A. Cooper (2007). About Face 3: The Essentials of Interaction Design. Wiley.

J. Brooke (1996). SUS: A quick and dirty usability scale. In Usability Evaluation in Industry, pages 189-194. Taylor and Francis.

J. Sauro & E. Kindlund (2005). A Method to Standardize Usability Metrics Into a Single Score. Retrieved December 17, 2008, from the Measuring Usability website: http://www.measuringusability.com/papers/p482-sauro.pdf

---

Title photo by Nick Johnson, used under Creative Commons license.
