Rendering a frame assumes that the user can perceive high detail across the whole screen at once. By exploiting the characteristics of the human eye, we can reduce the rendering load in the peripheral area.

This article describes the current progress of my master’s project, which is due at the end of the academic year. The purpose of the research is to reduce the rendering load by exploiting the characteristics of the human eye. Our eyes can only see high detail in a very small area, while rendering a frame assumes high detail everywhere on the screen. If we knew where the user is looking, we could therefore reduce the rendering load in the areas where the eyes cannot perceive high detail. An eye tracker can send that information to the application, which can then adapt the detail of the rendered frame in real time.

Adapting the detail of a frame is not trivial. Our eyes are very good at picking up patterns, and rendering high-contrast content with too little detail in the peripheral vision can make the eyes perceive movement where there is none. This must be avoided: the reduction of computation in the peripheral vision should go unnoticed by the user.

The human eye has a high concentration of colour receptors, or cones, in its central region, the fovea. The cones provide detail and colour resolution; they are packed most densely at the fovea, and their concentration drops with distance from the center. The peripheral vision relies mostly on rods, which are highly sensitive to light (but do not distinguish colour) and to motion.

To make the most of the concentration of cones at the fovea, the human eye performs rapid, frequent movements (saccades) to focus on relevant objects. These snapshots are then combined into a stable image of the scene. Consequently, a rendering system that uses a gaze-dependent model can reduce computation in the parafoveal and peripheral regions of vision.

Ray tracing is a technique for rendering an image by tracing paths of light through the pixels of the image. It can produce higher-quality images than scanline rendering techniques, but at the expense of more complex computation. Therefore, ray tracing is usually applied only in applications where a frame may take a long time to render.
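For reference, the primary ray through a pixel of a simple pinhole camera can be computed as below. This is a minimal sketch; the camera convention (at the origin, looking down -z, with y up) and the 60° vertical field of view are assumptions, not details taken from the project.

```python
import math


def primary_ray(px, py, width, height, vfov_deg=60.0):
    """Return (origin, direction) of a pinhole-camera ray through the centre
    of pixel (px, py). Assumed camera: at the origin, looking down -z, y up."""
    aspect = width / height
    half_h = math.tan(math.radians(vfov_deg) / 2.0)
    half_w = aspect * half_h
    # Map the pixel centre to normalized device coordinates in [-1, 1].
    ndc_x = (px + 0.5) / width * 2.0 - 1.0
    ndc_y = 1.0 - (py + 0.5) / height * 2.0  # image rows grow downwards
    d = (ndc_x * half_w, ndc_y * half_h, -1.0)
    length = math.sqrt(sum(c * c for c in d))
    return (0.0, 0.0, 0.0), tuple(c / length for c in d)
```

Anti-aliasing works by shooting several such rays per pixel at different sub-pixel positions instead of always through the centre.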

I chose ray tracing over traditional rasterization because ray tracing offers more flexibility for per-pixel manipulation, which is exactly what this project needs. With rasterization I would probably have to render low-, medium- and high-detail parts of the frame separately and composite them correctly. In addition, ray tracing can render a physically correct scene without stacking layers of effects on top of each other, as rasterization does.

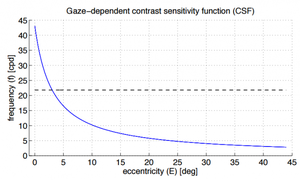

The Contrast Sensitivity Function (CSF) describes the ability of the visual system to perceive differences in luminance as a function of spatial frequency. The gaze-dependent CSF extends it by describing how this sensitivity changes with the viewing angle away from the gaze point (the eccentricity). This information can be used to compress data or to reduce the number of rays shot in regions where the human eye would not be able to notice the difference.
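One widely used parametric form of the gaze-dependent CSF comes from Geisler and Perry (1998); the post does not say which model produced its figures, so treat this as an assumed example. $CT$ is the contrast threshold for spatial frequency $f$ (in cpd) at eccentricity $e$ (in degrees). Setting $CT = 1$, the maximum possible contrast, and solving for $f$ gives the cutoff frequency $f_c(e)$, the finest detail visible at that eccentricity:

$$
CT(f, e) = CT_0 \exp\!\left(\alpha f \,\frac{e + e_2}{e_2}\right)
\qquad\Longrightarrow\qquad
f_c(e) = \frac{e_2 \ln(1/CT_0)}{\alpha\,(e + e_2)}
$$

With the constants usually quoted for this model ($\alpha = 0.106$, $e_2 = 2.3^\circ$, $CT_0 = 1/64$), $f_c(0) = \ln 64 / 0.106 \approx 39$ cpd, and since $f_c(e_2) = f_c(0)/2$, the cutoff halves about $2.3^\circ$ away from the gaze point.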

Figure 1: Gaze-dependent contrast sensitivity function.

Cycles per degree (cpd) is the unit of acuity: it measures how fine a detail you are able to see. As a reference, 20/20 vision corresponds to about 30 cpd. Campbell and Green (1965) found that the maximum resolution of the eye is about 60 cpd. The graph in Figure 1 shows how resolution degrades with the angular distance from the gaze point.
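The 20/20 reference follows from simple arithmetic: 20/20 vision resolves detail of about one arcminute, and one cycle (a light bar plus a dark bar) spans two arcminutes, so 60 / 2 = 30 cycles per degree. To relate cpd to a screen, we need each pixel's eccentricity and the finest frequency the display itself can show. A minimal sketch, assuming a flat screen, pixel pitch and viewing distance in millimetres, and the eye sitting on the screen normal through the gaze point; the function names are hypothetical.

```python
import math


def eccentricity_deg(px, py, gaze_x, gaze_y, pixel_pitch_mm, viewing_distance_mm):
    """Visual angle (degrees) between the gaze point and pixel (px, py).
    Assumes the eye lies on the screen normal through the gaze point."""
    dist_mm = math.hypot(px - gaze_x, py - gaze_y) * pixel_pitch_mm
    return math.degrees(math.atan2(dist_mm, viewing_distance_mm))


def display_nyquist_cpd(pixel_pitch_mm, viewing_distance_mm):
    """Highest spatial frequency (cycles/degree) the display can reproduce
    near the gaze point: one cycle needs at least two pixels."""
    deg_per_pixel = math.degrees(math.atan2(pixel_pitch_mm, viewing_distance_mm))
    return 0.5 / deg_per_pixel
```

For example, a 0.25 mm pixel pitch viewed from 600 mm gives about 21 cpd, below the eye's foveal limit, so near the gaze point the display rather than the eye can be what limits the useful detail.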

Figure 2: A mask computed with the Contrast Sensitivity Function at gaze point (1000, 500). A mask like this will be used to manipulate the rays and reduce computation in the non-white parts.
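A mask in the spirit of Figure 2 could be produced as follows. This is a minimal sketch, assuming the cutoff-frequency model of Geisler and Perry (1998) (not necessarily the model behind Figure 2), a flat screen with the eye on the normal through the gaze point, and hypothetical pixel pitch and viewing distance values. Each pixel stores its visible cutoff frequency, capped at what the display can show, divided by the value at the gaze point, so 1.0 (white) means full detail.

```python
import math

# Constants of the Geisler & Perry (1998) model. Assumption: the post does
# not state which CSF model or constants were used for Figure 2.
ALPHA = 0.106        # spatial-frequency decay constant
E2 = 2.3             # eccentricity (degrees) at which the cutoff halves
CT0 = 1.0 / 64.0     # minimum contrast threshold


def cutoff_cpd(ecc_deg):
    """Highest spatial frequency (cpd) visible at eccentricity ecc_deg."""
    return E2 * math.log(1.0 / CT0) / (ALPHA * (ecc_deg + E2))


def csf_mask(width, height, gaze_x, gaze_y, pixel_pitch_mm, viewing_distance_mm):
    """Return a height x width grid (list of rows) of values in (0, 1].

    1.0 (white) means the pixel needs full detail; lower values mean the eye
    cannot resolve that much detail there. Assumes a flat screen with the eye
    on the screen normal through the gaze point.
    """
    deg_per_px = math.degrees(math.atan2(pixel_pitch_mm, viewing_distance_mm))
    display_cpd = 0.5 / deg_per_px             # finest detail the screen shows
    peak = min(cutoff_cpd(0.0), display_cpd)   # value at the gaze point
    mask = []
    for y in range(height):
        row = []
        for x in range(width):
            dist_mm = math.hypot(x - gaze_x, y - gaze_y) * pixel_pitch_mm
            ecc = math.degrees(math.atan2(dist_mm, viewing_distance_mm))
            row.append(min(cutoff_cpd(ecc), display_cpd) / peak)
        mask.append(row)
    return mask
```

Calling `csf_mask(1920, 1080, 1000, 500, 0.25, 600.0)` would match the gaze point of Figure 2. In pure Python this takes seconds per frame; in practice the mask would be computed on the GPU, or computed once and reused.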

An eye tracker is an adequate tool for gathering the data required to apply the gaze-dependent CSF: we need to know the position of the user's eyes relative to the screen and where the user's gaze is directed. The eye tracker projects near-infrared light onto the user's eyes and captures high-frame-rate images of the resulting reflection patterns. From these images, its algorithms calculate the eye position and the gaze point.
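The tracker reports the eye position and gaze point itself, but the geometry behind the gaze point is simple: it is where the gaze ray meets the screen plane. A minimal sketch, assuming a coordinate frame in millimetres with the screen in the plane z = 0 and the user at positive z; Tobii's SDK uses its own coordinate systems, which are not modelled here, and `gaze_point_on_screen` is a hypothetical helper. The eye's z coordinate is also the viewing distance needed for the eccentricity computation.

```python
def gaze_point_on_screen(eye_pos, gaze_dir):
    """Intersect the gaze ray with the screen plane z = 0.

    eye_pos and gaze_dir are (x, y, z) tuples in millimetres in an assumed
    frame where the screen lies in z = 0 and the user sits at z > 0.
    Returns the (x, y) hit point on the screen plane, or None if the user
    looks away from (or parallel to) the screen.
    """
    ex, ey, ez = eye_pos
    dx, dy, dz = gaze_dir
    if dz >= 0.0:  # the ray never reaches the plane in front of the user
        return None
    t = -ez / dz   # ray parameter where z becomes 0
    return (ex + t * dx, ey + t * dy)
```

Converting the returned millimetres to pixels only requires the pixel pitch and the position of the screen origin in this frame.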

OptiX is NVIDIA's programmable framework for high-performance ray tracing on the GPU. Developers write their own ray programs, which fall into several categories: ray generation, intersection, bounding box, closest hit, any hit, miss and exception. Together, these programs define a user-defined rendering algorithm.
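The way these program types cooperate can be mimicked on the CPU. The Python sketch below is not OptiX code and uses none of its API; it only mirrors the roles: ray generation creates a ray per pixel, the trace call runs the intersection program against each object, and the nearest hit is passed to the closest-hit program, or the miss program runs if nothing is hit. The scene format (spheres as dictionaries) and the orthographic camera are assumptions for illustration.

```python
import math


def intersect_sphere(origin, direction, center, radius):
    """'Intersection program': nearest hit distance t > 0, or None.
    Assumes direction is normalized."""
    oc = [o - c for o, c in zip(origin, center)]
    b = sum(d * v for d, v in zip(direction, oc))
    c = sum(v * v for v in oc) - radius * radius
    disc = b * b - c
    if disc < 0.0:
        return None
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):
        if t > 1e-6:
            return t
    return None


def closest_hit(obj, t):
    """'Closest-hit program': shade the nearest object (flat colour here)."""
    return obj["color"]


def miss(direction):
    """'Miss program': background colour."""
    return (0.0, 0.0, 0.0)


def trace(origin, direction, scene):
    """Plays the role of OptiX's trace call: find the closest intersection
    and dispatch to the closest-hit or miss program."""
    nearest = None
    for obj in scene:
        t = intersect_sphere(origin, direction, obj["center"], obj["radius"])
        if t is not None and (nearest is None or t < nearest[1]):
            nearest = (obj, t)
    if nearest is None:
        return miss(direction)
    return closest_hit(*nearest)


def ray_generation(px, py, width, height, scene):
    """'Ray generation program': one orthographic ray through the pixel
    centre, looking down -z from z = 10."""
    x = (px + 0.5) / width * 2.0 - 1.0
    y = 1.0 - (py + 0.5) / height * 2.0
    return trace((x, y, 10.0), (0.0, 0.0, -1.0), scene)
```

A gaze-dependent renderer changes only the ray generation step: how many rays it shoots per pixel depends on the mask.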

Can we use the characteristics of the human visual system to reduce the computation of ray tracing while still maintaining visual acuity?

To answer this research question, I needed a way to integrate a model of the human visual system into a ray tracing application. For the application, I will use a modified version of a project that ships with the OptiX SDK.

Using the gaze-dependent CSF, I created a mask (Figure 2). The colour of the mask at each pixel determines the number of rays shot through that pixel. I then integrated the Tobii eye tracker into the application, which can now track the user's gaze point and eye position. With this information, the mask can be recomputed, or simply panned, to follow the user's gaze point.
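Panning avoids recomputing the CSF every frame: compute a mask twice the screen size once, centred on its own middle pixel, then cut out the screen-sized window that puts that centre under the current gaze point. A minimal sketch; `mask_fn` is a hypothetical callback giving the mask value for a pixel offset from the gaze point. Panning assumes the falloff depends only on that pixel offset, which is an approximation on a flat screen: the mapping from pixel offset to visual angle changes as the gaze moves away from the screen centre, which is one reason to recompute instead.

```python
def make_big_mask(width, height, mask_fn):
    """Precompute a (2*height) x (2*width) mask whose gaze point is the pixel
    (width, height). mask_fn(dx, dy) (hypothetical) gives the mask value for
    a pixel offset (dx, dy) from the gaze point."""
    return [[mask_fn(x - width, y - height) for x in range(2 * width)]
            for y in range(2 * height)]


def pan_mask(big_mask, width, height, gaze_x, gaze_y):
    """Cut out the width x height window of big_mask that places its centre
    under the gaze point. The gaze is clamped to the screen rectangle."""
    gx = min(max(int(round(gaze_x)), 0), width)
    gy = min(max(int(round(gaze_y)), 0), height)
    x0, y0 = width - gx, height - gy  # window origin inside big_mask
    return [row[x0:x0 + width] for row in big_mask[y0:y0 + height]]
```

Each frame then costs only a copy (or, on the GPU, an offset into a texture lookup) instead of evaluating the CSF for every pixel.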

For the user testing, I will cap the number of rays at 4 or 8 per pixel, which still gives the foveal area some supersampling. Once the rays have been distributed according to the gaze-dependent CSF mask, it will be time for user testing.
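One simple way to turn the mask into a ray count is a linear mapping capped at the 4 or 8 rays mentioned above, with at least one ray everywhere. Both the linear mapping and the uniform random sub-pixel offsets are assumptions, and the function names are hypothetical; a stratified or blue-noise pattern would likely give better anti-aliasing for the same number of rays.

```python
import math
import random


def rays_per_pixel(mask_value, max_rays=8, min_rays=1):
    """Map a mask value in [0, 1] to a ray count: max_rays where the mask is
    white (1.0), decreasing linearly to min_rays in the far periphery."""
    m = min(max(mask_value, 0.0), 1.0)  # clamp out-of-range mask values
    return max(min_rays, math.ceil(m * max_rays))


def subpixel_offsets(n, rng):
    """n sample positions inside a pixel, as (x, y) in [0, 1)^2.
    A single ray goes through the pixel centre (no anti-aliasing)."""
    if n == 1:
        return [(0.5, 0.5)]
    return [(rng.random(), rng.random()) for _ in range(n)]
```

For example, `rays_per_pixel(0.5)` gives 4 rays and `rays_per_pixel(1.0, max_rays=4)` gives 4, so switching between the 4- and 8-ray test configurations is a single parameter.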

The first wave of user testing will help me refine the basic technique. For example, aliased (non-anti-aliased) zones can be noticed in the peripheral area; they should be removed with additional sampling or blurred out with a depth-of-field-like effect.
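The blurring option can be sketched as a blend between the rendered frame and a blurred copy, weighted by the mask, so pixels near the gaze point stay sharp while peripheral pixels are smoothed. This sketch uses a plain 3x3 box blur on a grayscale image rather than a depth-of-field effect, purely to keep the example short; the function names are hypothetical.

```python
def box_blur(img):
    """3x3 box blur of a grayscale image (list of rows). At the borders only
    the neighbours that lie inside the image are averaged."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            total, count = 0.0, 0
            for yy in range(max(0, y - 1), min(h, y + 2)):
                for xx in range(max(0, x - 1), min(w, x + 2)):
                    total += img[yy][xx]
                    count += 1
            row.append(total / count)
        out.append(row)
    return out


def soften_periphery(img, mask):
    """Blend towards the blurred image where the mask is dark, smoothing
    aliasing in under-sampled peripheral pixels; white areas stay sharp."""
    blurred = box_blur(img)
    return [[m * s + (1.0 - m) * b for s, b, m in zip(srow, brow, mrow)]
            for srow, brow, mrow in zip(img, blurred, mask)]
```

Because the blend weight is the same mask that drives the ray count, the pixels that receive the fewest rays are also the ones that are blurred the most.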

Once the first wave has helped me iterate on the approach, I will build another demo: an animation that switches between the standard ray tracing approach and mine at a certain timestamp. The point of this demo is to see whether users notice when the rendering changes.

When that data is collected, I will be able to determine how effective my approach is and start writing my thesis.

Reducing the number of rays used for sampling by exploiting the characteristics of the human eye is expected to give ray tracing applications a significant performance boost. Since most real-time ray tracing applications shoot only one ray per pixel, the approach can also be used to apply anti-aliasing only around the user's gaze point. Not everyone has an eye tracker, but the technique could still be used with ray tracing in virtual reality headsets that integrate one.
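The expected saving can be estimated by counting rays. The sketch below compares the rays shot with a mask against shooting the maximum number everywhere, using a hypothetical linear mapping from mask value to ray count (from 1 up to `max_rays`). Ray count is only a proxy: actual frame time also depends on secondary rays, shading cost and GPU divergence.

```python
import math


def ray_budget(mask, max_rays=8, min_rays=1):
    """Return (foveated_rays, uniform_rays) for one frame.

    mask is a list of rows of values in [0, 1]; each pixel gets
    max(min_rays, ceil(mask * max_rays)) rays (an assumed mapping), while the
    uniform baseline shoots max_rays through every pixel."""
    foveated = 0
    for row in mask:
        for m in row:
            m = min(max(m, 0.0), 1.0)
            foveated += max(min_rays, math.ceil(m * max_rays))
    uniform = len(mask) * len(mask[0]) * max_rays
    return foveated, uniform
```

For a tiny 2x2 mask, `ray_budget([[1.0, 0.5], [0.0, 0.0]])` returns `(14, 32)`: the foveated frame shoots fewer than half the rays of the uniform one.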

Thank you for your reading time.
