
An in-depth look at licensable physics engines, including a series of tests that stress each simulator in difficult situations where physical simulations typically break down, and a close look at how each package would integrate into a large-scale game project.

September 20, 2000

Author: Chris Hecker

As devoted readers of Game Developer, you are all aware of our belief (or hope!) that realistic physics can greatly improve the gameplay in interactive entertainment. You also know that creating a robust and flexible physical simulator is difficult work. Simulators are hard to design, challenging to code, and even more difficult to debug. These challenges cause many programmers and producers to look for external help for their physics development problems.

Lately we have seen the emergence of licensable "physics engines" from companies wishing to fill this need. We feel the market has matured to the point where it is time for us to take a hard look at these products to see how well they work.

We will be looking at three products that offer roughly the same level of technology and features: MathEngine's Dynamics Toolkit 2.0 and Collision Toolkit 1.0, the Havok GDK from Havok, and Ipion's Virtual Physics SDK. Our focus is on rigid body dynamics. We will not review cloth, particle, water, or other kinds of simulation, even though these packages support some of those features.

In the first of a two-part series, we have created a series of tests that stress the capabilities of the simulations in difficult-to-solve situations where physical simulations typically break down. These tests give us a general feel for each engine's implementation of core features such as contact, constraints, and integration, as well as a working knowledge of each API. In the next part, we will take an in-depth look at each package with specific attention paid to how these packages will integrate into a large-scale game project.

First, it's important to point out that the stress tests are not exhaustive, nor directly representative of in-game situations. They are simulations of physical configurations specifically chosen to stress the engines in difficult numerical situations.

Our goal with these tests was to break the simulators. We are not necessarily expecting perfection (although it would be nice!). We chose this approach because users and artists have an annoying tendency to create physical configurations that break physics simulators, so it's better to find weaknesses before licensing than during final play-test.

Though the tests are difficult, they are not unrealistically complex. Artists could create configurations that correspond to these tests without knowing the configurations might be problematic. We did not tune the tests to exacerbate a discovered bug. Rather, we made a good faith programming effort to help each engine simulate the tests successfully, tuning parameters and requesting technical support in some cases.

When creating the score for each test, we evaluated a specific set of factors:

How physically plausible and consistent with our expectations were the results?

How stable were the results? Did the simulation come to rest in a stable state or did the system continually jitter or explode?

Were the results accurate given the design of the situation?

How easy were the scenarios to design and adjust in each SDK?

The grading for each test ranges between 0.0 and 1.0, with 1.0 being perfect in all areas. The grades for each engine are absolute compared to a theoretical ideal, not relative to the other engines. A score of 0.5 is the minimum "acceptable" score given our expectations.

We designed the scenarios to be the same scale and with the same units (where possible) in each package. Scale is very important in a simulation, so we used standard units for measurement and chose a world scale that worked well in all three systems.

The final scores are not presented in an easy-to-compare table. This is a conscious decision, as we believe simple numbers are meaningless without a full understanding of the context. Choosing a physical simulator is not as simple as setting up a race between systems and seeing who wins. There are many other tests that might be more representative of your problem domain, and we encourage you to run them yourself before deciding.

A large cube is dropped on a small cube. This challenge was designed to test the collision detection and response of each system. Masses and sizes that vary by an order of magnitude can cause trouble for contact solvers and collision detectors.
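To make "an order of magnitude" concrete, here is a small sketch (our own helper, not part of any of the SDKs) that computes mass and central moment of inertia for a solid, uniform cube. Every cube in these tests works out to the same density, 5 kg/m^3 (5,000 kg / 1,000 m^3 for the large cube, 625 kg / 125 m^3 for the 5m cube), so the difference between the bodies comes purely from size.

```cpp
// Mass and central moment of inertia of a solid cube with uniform density.
struct CubeProps {
    double mass;     // kg
    double inertia;  // kg*m^2, about any axis through the center
};

// Mass grows with side^3; a uniform cube's central moment of inertia is
// mass * side^2 / 6, so it grows with side^5.
CubeProps cubeProps(double side, double density) {
    const double mass = density * side * side * side;
    return {mass, mass * side * side / 6.0};
}
```

For the 10m and 1m cubes, the mass ratio is 1,000:1 but the inertia ratio is 100,000:1, so the rotational response of the two bodies differs even more than their masses do, and the solver has to handle both in one system.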

The cubes were axially aligned and centered at the origin and translated up along the Y-axis. Both cubes were initially off the ground and dropped at the same time. The large cube was 10mx10mx10m with a mass of 5,000kg and the small cube was 1mx1mx1m with a mass of 5kg. The coefficient of restitution between all objects and the ground plane was set to 0. We expected the two cubes to drop straight on top of each other without bouncing and come to an immediate rest.
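Why should a restitution coefficient of 0 mean no bounce? In the standard impulse formulation, sketched below with hypothetical names, the post-impact relative normal velocity is exactly -e times the pre-impact one, so with e = 0 the bodies leave the impact moving together. Any bounce that remains has to come from some other part of the solver (for example, a penetration-correction step that pushes overlapping bodies apart). We don't know how any of the three engines implements this internally; this is a textbook sketch, not their code.

```cpp
// Normal-direction velocities of two bodies after a frictionless impact.
struct NormalVelocities {
    double v1;
    double v2;
};

// Body 1 lands on body 2. v1 and v2 are velocity components along the contact
// normal, which points from body 2 toward body 1. e is the coefficient of
// restitution (0 = no bounce, 1 = perfectly elastic).
NormalVelocities resolveImpact(double m1, double v1, double m2, double v2, double e) {
    const double vRel = v1 - v2;       // negative when the bodies are approaching
    if (vRel >= 0.0) return {v1, v2};  // already separating: no impulse
    const double w1 = 1.0 / m1;        // inverse masses
    const double w2 = 1.0 / m2;
    const double j = -(1.0 + e) * vRel / (w1 + w2);  // impulse magnitude
    // Applying +j to body 1 and -j to body 2 leaves a relative velocity of -e * vRel.
    return {v1 + j * w1, v2 - j * w2};
}
```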

MathEngine: Even though we tried everything to ensure the coefficients of restitution were 0.0, we could not get rid of the bounce when the boxes hit. In this test, the boxes bounced, then the smaller box shot out from beneath the large box. Score: 0.7

Ipion: The boxes dropped directly on each other without bouncing. However, the top box tipped over then jittered a bit before stabilizing. Score: 0.75

Havok: On contact, the top box slid back and forth in a very unrealistic manner before finally tipping over. Setting the friction even higher didn't help. The objects also went to sleep too fast, freezing as they were visibly moving. Score: 0.6
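Premature sleeping is easy to reproduce with the common style of deactivation heuristic sketched below: a body is frozen once its speeds stay under a threshold for some number of consecutive steps. A box that is just beginning to tip moves slowly, so if the thresholds are too loose or the required count too short, it freezes mid-motion. This is a generic sketch with hypothetical parameters; we don't know the exact criterion Havok uses.

```cpp
// Per-body bookkeeping for a generic sleep (deactivation) heuristic.
struct SleepState {
    int quietSteps = 0;  // consecutive steps spent below the thresholds
    bool asleep = false;
};

// Call once per simulation step. The thresholds and the required number of
// quiet steps are hypothetical tuning parameters, not any engine's defaults.
void updateSleep(SleepState& s, double linearSpeed, double angularSpeed,
                 double linearThreshold, double angularThreshold, int stepsRequired) {
    if (linearSpeed < linearThreshold && angularSpeed < angularThreshold) {
        ++s.quietSteps;
        if (s.quietSteps >= stepsRequired) s.asleep = true;
    } else {
        s.quietSteps = 0;  // any significant motion restarts the count...
        s.asleep = false;  // ...and keeps (or wakes) the body awake
    }
}
```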

A slightly larger cube is dropped on a smaller cube. This was a variation on the first test in that the difference between the cubes' sizes was much smaller. This test was meant to be a "gimme" and should just work.

The cubes were set at the origin and translated up along the Y-axis. Both cubes were initially off the ground and dropped at the same time. The large cube was 5mx5mx5m with a mass of 625kg and the small cube was 4mx4mx4m with a mass of 320kg. The coefficient of restitution between all objects and the ground plane was set to 0. We expected the two cubes to drop straight on top of each other without bouncing and come to an immediate rest.

MathEngine: Again, the initial bounce between the objects caused the top box to tip over. Score: 0.7

Ipion: The boxes dropped and stayed stacked. The system slept early while still jittering slightly. Score: 0.9

Havok: On contact, the boxes slid slightly. They stayed stacked and there was no jitter between them. Score: 0.9

A large cube is constrained to a small cube and both are dropped. This challenge was designed to test the constraint system as well as the collision detection and response of the systems. Again, orders of magnitude uncover numerical problems in constraint solvers and constrained collision and contact resolvers.

The cubes were set at the origin and translated up along the Y-axis. The two boxes were constrained together with a three-degree-of-freedom spherical constraint positioned exactly midway between the two cubes. Both cubes were initially off the ground and dropped at the same time. The large cube was 10mx10mx10m with a mass of 5,000kg and the small cube was 1mx1mx1m with a mass of 5kg. Again, we set the coefficient of restitution between all objects and the ground plane to 0. We expected the two cubes to drop straight on top of each other without bouncing and come to an immediate rest, with the top box balancing on the constraint. The joint should have stayed rigid, always maintaining the separation between the two boxes.

MathEngine: Again, the two boxes bounced on the drop. The constraint held the boxes apart. However, the box tipped over and the system continued to jitter without coming to a rest. Score: 0.4

Ipion: The joint collapsed on drop. The top box fell over and there was some jitter before the system stabilized to sleep. Score: 0.5

Havok: The joint collapsed on drop. The top box fell over and there was some jitter before the system stabilized to sleep. Applying a mouse force allowed the constraint to be completely violated, adding unrealistic energy to the system. Score: 0.4

A slightly larger cube is constrained to a smaller cube and both are dropped. This is a variation on Test 3 in that the difference between the cubes is much smaller. Like Test 2, this was another "gimme" with very similar sizes and masses, making for an easy-to-solve configuration.

The cubes were set at the origin and translated up along the Y-axis. The two boxes were constrained together with a spherical joint positioned exactly midway between the two cubes. Both cubes were initially off the ground and dropped at the same time. The large cube was 5mx5mx5m with a mass of 625kg and the small cube was 4mx4mx4m with a mass of 320kg. The coefficient of restitution between all objects and the ground plane was set to 0. We expected the two cubes to drop straight on top of each other without bouncing and come to an immediate, balanced rest. The constraint joint should have stayed rigid, maintaining the separation between the two boxes.

MathEngine: The boxes bounced, but the test was otherwise perfect. Score: 0.7

Ipion: There was a slight bounce. The top box finally fell over in a plausible manner. Score: 0.8

Havok: There was a slight bounce. The top box finally fell over and the system went to sleep a bit too early. We had to adjust default parameters to get this result. Score: 0.75

A large cube is constrained to a world anchor. A small cube is constrained to the large cube. This challenge was designed to test the constraint system with objects having a great difference in mass, including the infinite mass of the world constraint.

The cubes were set at the origin and translated up along the Y-axis. The two boxes were constrained together with a spherical joint positioned exactly midway between the two cubes. The top large cube was constrained to a world anchor above it by another spherical joint. The large cube was 10mx10mx10m with a mass of 5,000kg and the small cube was 1mx1mx1m with a mass of 5kg. We expected the objects not to move, as the constraints would hold everything exactly in place.

MathEngine: Behaved as expected. Score: 0.95

Ipion: Behaved as expected. Due to the interface, we couldn't exert a strong mouse force on the small object to test stability. Score: 0.95

Havok: Behaved as expected. However, forces applied to the small box made the system slightly unstable. Score: 0.9

A small cube is constrained to a world anchor. A large cube is constrained to the small cube. This challenge was the same as the previous test with the two boxes reversed. It was designed to test the constraint system with objects having a great difference in mass, with the small mass between the large and infinite mass.

The cubes were set at the origin and translated up along the Y-axis. The two boxes were constrained together with a spherical joint positioned exactly midway between the two cubes. The top small cube was constrained to a world anchor above it by another spherical joint. The large cube was 10mx10mx10m with a mass of 5,000kg and the small cube was 1mx1mx1m with a mass of 5kg. We expected the objects not to move, as the constraints would hold everything exactly in place.
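Why should swapping the boxes matter so much? One plausible explanation, assuming an iterative solver that relaxes one joint at a time (we don't know which solvers these engines actually use), is shown in the 1D toy model below. Each joint's correction is split between its two bodies in proportion to their inverse masses, and the world anchor's inverse mass is 0. With the heavy box next to the anchor, the error vanishes in about two sweeps. With the 5kg box in the middle, each sweep removes only about 0.1% of the error, so a solver that stops after a fixed, small number of iterations leaves the joint visibly stretched, which looks springlike.

```cpp
#include <cmath>

// Toy 1D chain: world anchor at y = 0 -> body 1 (rest at y = -1) -> body 2
// (rest at y = -2). Both bodies start 1 unit too low, as if gravity had just
// stretched both joints. Each sweep projects joint 1, then joint 2
// (Gauss-Seidel style). Returns the sweeps needed to get both errors below
// tol, or -1 if maxSweeps is exceeded.
int sweepsToConverge(double m1, double m2, double tol, int maxSweeps) {
    const double w1 = 1.0 / m1, w2 = 1.0 / m2;  // inverse masses; the world's is 0
    double y1 = -2.0, y2 = -3.0;
    for (int sweep = 1; sweep <= maxSweeps; ++sweep) {
        // Joint 1: the anchor cannot move, so body 1 takes the whole correction.
        y1 = -1.0;
        // Joint 2: correction split between the bodies by inverse mass.
        double c2 = (y2 - y1) + 1.0;  // zero when body 2 hangs 1 unit below body 1
        y1 += w1 / (w1 + w2) * c2;
        y2 -= w2 / (w1 + w2) * c2;
        // Measure both joint errors after the sweep.
        const double c1 = y1 + 1.0;
        c2 = (y2 - y1) + 1.0;
        if (std::fabs(c1) < tol && std::fabs(c2) < tol) return sweep;
    }
    return -1;
}
```

In this model, sweepsToConverge(5000.0, 5.0, ...) corresponds to the previous test and sweepsToConverge(5.0, 5000.0, ...) to this one; the second needs thousands of sweeps at a tolerance of 1e-6.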

MathEngine: There was a slight energy addition, but it was very solid. Score: 0.9

Ipion: The constraint was violated in a springlike stretching manner. The system didn't blow up. Score: 0.45

Havok: With the default constraint stiffness parameter, tau, the constraint stretched in a springlike manner, and the system even went to sleep with the constraint violated. When we increased tau, there was only a slight jitter. However, applying any force to the big cube caused the system to gain energy and explode. Score: 0.4
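The tradeoff we saw with tau matches the behavior of a Baumgarte-style error-feedback factor, so here is a scalar toy model under the assumption that tau works that way (the parameter is not well documented, so this is our guess, not Havok's definition). Each step, gravity stretches the joint a little, and the solver then removes the fraction tau of the accumulated error. The steady-state stretch is drift * (1 - tau) / tau, so a small tau leaves a visibly stretched, springlike joint, while in this scalar model any tau above 2 makes the error grow without bound. In real multi-body systems, where joints and contacts are coupled, trouble can start at lower values.

```cpp
// Scalar model of a joint's position error under feedback correction.
// drift: error added each step by external forces (e.g. gravity).
// tau:   assumed fraction of the accumulated error corrected each step.
double jointErrorAfter(int steps, double tau, double drift) {
    double error = 0.0;
    for (int i = 0; i < steps; ++i) {
        error += drift;        // disturbance pulls the joint apart
        error *= (1.0 - tau);  // solver removes the fraction tau of the error
    }
    return error;
}
```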

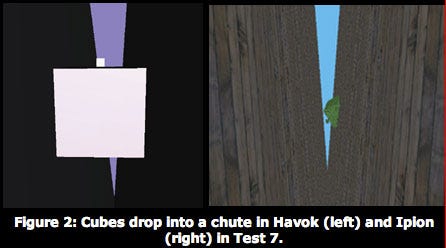

A small cube and large cube are dropped into a gap between two planes that narrows gradually. This challenge tested the collision detection system and the contact resolution system. The gradual slope of the planes caused the contact and collision system to exert large normal forces to stop the cubes from falling.

Two large and narrow boxes used as planes were positioned so they formed a vertical chute that narrowed to a point at the bottom at a 5.625-degree slope, and the two cubes were dropped into the gap. The large cube was 10mx10mx10m with a mass of 5,000kg and the small cube was 1mx1mx1m with a mass of 5kg. We expected the objects to fall into the gap, become wedged between the planes, and not move.
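The shallow slope is what makes this test hard. Assuming each wall is tilted 5.625 degrees from vertical and ignoring friction (both assumptions on our part), vertical equilibrium requires 2 * N * sin(slope) = m * g, so each wall must push with about 5.1 times the wedged cube's weight, roughly 250,000 N for the 5,000kg cube. The helper below is a hypothetical illustration of that arithmetic.

```cpp
#include <cmath>

// Normal force each wall of a frictionless V-shaped chute must exert to hold a
// wedged body of the given mass. slopeDeg is each wall's tilt from vertical.
// Equilibrium of vertical forces: 2 * N * sin(slope) = mass * g.
double wedgeNormalForce(double mass, double slopeDeg, double g = 9.81) {
    const double pi = 3.14159265358979323846;
    const double slope = slopeDeg * pi / 180.0;  // degrees to radians
    return mass * g / (2.0 * std::sin(slope));
}
```

Friction in the real simulation would reduce the required normal force, but the contact solver still has to produce forces several times larger than gravity from a nearly vertical geometry.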

MathEngine: There was an initial bounce, but otherwise it performed well. When the small box was moved with a force, energy was occasionally added. The lack of some basic math functions in the SDK made the test difficult to create. Score: 0.8

Ipion: This was a complete failure. Contacts were missed or incorrect impulses were applied and the boxes fell through the chute. Score: 0.0

Havok: Almost perfect. There was a small amount of bounce. Score: 0.9

One cube is dropped so that it will collide with another cube on collinear edges. This challenge tested the collision detection system. Degenerate collision manifolds can be problematic.

A slightly smaller cube was dropped onto a larger one so that they struck edge to edge. The small cube was 4mx4mx4m with a mass of 320kg and the large cube was 5mx5mx5m with a mass of 625kg. We expected the objects to fall and make contact at the edges without bouncing and then come to an immediate rest. Due to numerical inaccuracies with rotating the cubes to set up the test, we couldn't be totally sure the boxes hit exactly edge to edge.
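Edge-to-edge contact is degenerate because the usual edge-edge contact normal is the cross product of the two edge directions, which vanishes when the edges are parallel. The sketch below shows the kind of check a collision detector needs before trusting that normal; the tolerance is a hypothetical value, and what to do in the parallel case (for example, falling back to a face normal) is left to the detector.

```cpp
struct Vec3 { double x, y, z; };

Vec3 cross(const Vec3& a, const Vec3& b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}

double dot(const Vec3& a, const Vec3& b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Returns true when edges a and b are too close to parallel for cross(a, b) to
// give a usable contact normal. Uses |a x b|^2 = |a|^2 |b|^2 sin^2(angle), so
// relEps is a (hypothetical) tolerance on sin^2 of the angle between the edges.
bool edgesNearlyParallel(const Vec3& a, const Vec3& b, double relEps = 1e-6) {
    const Vec3 c = cross(a, b);
    return dot(c, c) <= relEps * dot(a, a) * dot(b, b);
}
```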

MathEngine: The dropped box slid off to the side in an unrealistic, slippery manner. Score: 0.7

Ipion: Almost perfect, but it went to sleep in a dynamically unstable configuration. A small epsilon gap was visible between the two cubes. Score: 0.95

Havok: Almost perfect; however, there was a small amount of bounce. An epsilon gap was visible between the objects. Score: 0.9

One cube is dropped on another cube of the same size, so that they will collide exactly point-to-point. This challenge tested the collision detection system as another degenerate collision manifold test.

Two identical cubes were dropped so that they struck point-to-point. The cubes were each 4mx4mx4m with a mass of 320kg. We expected the objects to fall and contact at the point without bouncing and then come to an immediate rest. As in the previous test, due to numerical inaccuracies with rotating the cubes to set up the test, we couldn't be completely sure the boxes hit exactly point-to-point.

MathEngine: The dropped box slid off in an unrealistic manner, exactly like in the previous test. Score: 0.7

Ipion: There was a little bounce, and the boxes went to sleep in a dynamically unstable configuration. Score: 0.85

Havok: Almost perfect. The boxes sat there stably, eventually tipping over. However, the default epsilon gap between objects was visible. Score: 0.9

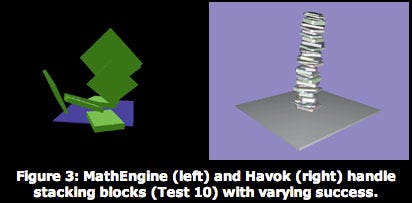

Boxes are dropped to form an uneven stack. This challenge tested the collision detection system with massive numbers of contacts and collisions.

A series of boxes of various sizes were dropped onto each other with initial rotations around the Y-axis, slightly offset from the center. The goal was to try stacks of up to 40 boxes. We expected the objects to fall into a stable stack of blocks that behaved in a believable manner.

MathEngine: When more than 10 to 12 boxes were dropped, the system crashed. Otherwise, the boxes bounced badly, even adding energy. The system was very unstable, though no boxes appeared to interpenetrate. Score: 0.2

Ipion: Stable, but really started slowing as boxes were added to the system. Some boxes eventually fell through each other and the boxes at the bottom jiggled a bit. Parts of the stack slept while others jittered and interpenetrated. Score: 0.6

Havok: Perfectly stable for a while, but with the maximum stack, the boxes started falling through each other and the floor. Score: 0.75

A hinge joint with similar bodies. This test should have been easy to solve. A one-degree-of-freedom hinge constrained two similarly sized bodies.

The cubes were set at the origin and translated up along the Y-axis. The two boxes were constrained together with a hinge joint positioned exactly midway between the two cubes. Both cubes were initially off the ground and dropped at the same time. The large cube was 5mx5mx5m with a mass of 625kg and the small cube was 4mx4mx4m with a mass of 320kg. The coefficient of restitution between all objects and the ground plane was set to 0. We expected the two cubes to drop straight on top of each other without bouncing and come to an immediate, balanced rest. The constraint joint should have stayed rigid, maintaining the separation between the two boxes. We should have been able to move the bodies and the hinge should have only rotated around its axis.

MathEngine: The boxes bounced, but otherwise ran perfectly. The lack of mouse forces in the demo made it slightly hard to test the hinge. Score: 0.7

Ipion: There was a slight bounce. The top box finally fell over in a plausible manner. It was very stable. Score: 0.8

Havok: There was a nonstraight bounce. The top box finally fell over and the system went to sleep a bit too early. Havok doesn't support hinges except through linearly dependent spherical joints. Score: 0.7
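Building a hinge from two spherical joints works because two ball joints placed on the hinge axis pin two points of the body, and the only motion that keeps both points fixed is rotation about the line through them. The sketch below checks this with Rodrigues' rotation formula. It also shows why the joints are "linearly dependent": two ball joints supply six scalar equations, but a hinge removes only five degrees of freedom, so one equation is redundant, which is the kind of situation that can make a constraint solver's system singular.

```cpp
#include <cmath>

struct Vec3 { double x, y, z; };

// Rotate point p about a unit axis k through the origin by angle (radians):
// p*cos + (k x p)*sin + k*(k . p)*(1 - cos)   (Rodrigues' formula)
Vec3 rotate(const Vec3& p, const Vec3& k, double angle) {
    const double c = std::cos(angle), s = std::sin(angle);
    const Vec3 kxp = {k.y * p.z - k.z * p.y, k.z * p.x - k.x * p.z, k.x * p.y - k.y * p.x};
    const double kdp = k.x * p.x + k.y * p.y + k.z * p.z;
    return {p.x * c + kxp.x * s + k.x * kdp * (1.0 - c),
            p.y * c + kxp.y * s + k.y * kdp * (1.0 - c),
            p.z * c + kxp.z * s + k.z * kdp * (1.0 - c)};
}

double distance(const Vec3& a, const Vec3& b) {
    const double dx = a.x - b.x, dy = a.y - b.y, dz = a.z - b.z;
    return std::sqrt(dx * dx + dy * dy + dz * dz);
}

// Hypothetical setup: ball joint 1 at the origin, ball joint 2 one meter along
// the z-axis (the intended hinge axis). Rotating the body about 'axis' through
// joint 1 leaves joint 1 in place; this returns how far joint 2 is dragged.
double secondAnchorDrift(const Vec3& axis, double angle) {
    const Vec3 anchor2 = {0.0, 0.0, 1.0};
    return distance(rotate(anchor2, axis, angle), anchor2);
}
```

Rotation about the z-axis leaves the second anchor where it is, so it is allowed; rotation about any other axis drags it and is resisted by the second joint.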

A hinge joint with differing masses. This was the "hard" version of the previous test. The order of magnitude differences between the bodies can cause numerical trouble.

The cubes were set at the origin and translated up along the Y-axis. The two boxes were constrained together with a one-degree-of-freedom hinge constraint positioned exactly midway between the two cubes. Both cubes were initially off the ground and dropped at the same time. The large cube was 10mx10mx10m with a mass of 5,000kg and the small cube was 1mx1mx1m with a mass of 5kg. The coefficient of restitution between all objects and the ground plane was set to 0. We expected the two cubes to drop straight on top of each other without bouncing and come to an immediate rest, with the top box balancing on the constraint. The joint should have stayed rigid, always maintaining the separation between the two boxes.

MathEngine: The two boxes bounced on the drop. The constraint held the boxes apart. However, the box tipped over and the system continued to jitter without coming to a rest. Score: 0.45

Ipion: The joint collapsed on drop. The top box fell over and jittered. Score: 0.45

Havok: The joint collapsed on drop and the top box fell over. Applying a mouse force allowed the constraint to be completely violated and the system could blow up. Score: 0.4

Space and time limitations prevented us from testing more potential problems. Other things we could have tested include:

Other joint types, such as two-degree-of-freedom rotational joints (which are often problematic) and prismatic joints between bodies with different moments of inertia.

Stiff springs and user-defined forces; jerking the constraints around was not really tested.

Concave collision detection, and collision detection against unions of convex shapes.

Sliding bodies over one another looking for inconsistent normals, especially as the contact manifold collapses from a 2D patch to a 1D line segment and then to a 0D point.

Tunneling of fast-moving objects or objects strongly pulled into the ground (preliminary tests in this area indicated problems with some of the engines).
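Tunneling happens because a discrete simulator checks for overlap only at the end of each step: a body that travels farther than an obstacle's thickness in one step can pass through it without ever overlapping it. Large forces pulling a body into the ground have the same effect, since they produce large per-step displacements. A common mitigation, sketched here with hypothetical helper names, is to substep fast bodies (swept or continuous collision tests are the more thorough alternative).

```cpp
#include <cmath>

// True if a body moving at 'speed' could skip over an obstacle of the given
// thickness in one step of length dt.
bool mayTunnel(double speed, double dt, double thickness) {
    return speed * dt > thickness;
}

// Number of substeps so that each substep moves the body at most
// maxFraction * thickness (maxFraction is a hypothetical safety factor).
int substepsNeeded(double speed, double dt, double thickness, double maxFraction = 1.0) {
    const double travel = speed * dt;
    const double allowed = thickness * maxFraction;
    return travel <= allowed ? 1 : static_cast<int>(std::ceil(travel / allowed));
}
```

For example, a body moving at 100 m/s with a 1/60 s step travels about 1.67m per step, enough to skip a 0.5m-thick wall unless the step is split in four.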

After conducting all of these tests on each system, we were impressed with the general engine stability across the board. With the exception of the box-stacking test on MathEngine, which was likely a memory problem, the simulators were able to handle every test without crashing. We had some difficulties with the access functions in some cases, which we will cover in depth in next month's conclusion. Nevertheless, we were able to get every test running fairly rapidly.

MathEngine is very capable at handling hard constraints. The bouncing problem on collisions hurt its score on most tests. After talking with MathEngine technical support about it, we adjusted the gamma and epsilon values and were able to improve several of the tests. However, this required case-by-case experimentation and never completely eliminated the bounce. The collision detection and resolution worked well; we never saw objects interpenetrating.

The Ipion engine was a solid performer as well, with the exception of Test 7, the narrowing chute. The rigid constraints were not exactly rigid but they were pretty stable. The collision detection and handling were reasonable, excepting the chute and box-stacking tests. The system also slowed down to unacceptable levels as the number of interacting objects increased.

The Havok engine posted generally strong scores. They clearly need to work on their rigid constraint system. The default constraint tau value (which is not well documented) resulted in springlike constraints that didn't perform well in our tests. Increasing tau made the constraint more rigid; however, the system then became unstable. We were also able to see object interpenetration problems when the system was really stressed. That said, the collision system was very robust and fast, handling even fairly large block stacks without slowing much.

So, which package is right for you? These stress tests are only the first part of an answer to that question. Next month we will have an in-depth review of each simulator, covering the complete feature sets, documentation, and API issues.

Note: As we were running these tests, Havok announced that they had purchased Ipion in a consolidation of this newly formed market. They will continue supporting both packages until they can consolidate them into one system.

Last month we put three licensable rigid body physics engines through their paces with a series of stress tests specifically designed to find stability problems. MathEngine's Dynamics Toolkit 2.0 and Collision Toolkit 1.0, the Havok GDK from Havok, and Ipion's Virtual Physics SDK all made their way through the 12 tests with varying levels of success.

This month, we are going to take a broader look at each package. But first, you are probably asking yourself...

Game physics is a complex issue. We are not going to answer the question, "Should I use rigid body physics in my game?" That's a decision you will have to make. However, if (or, we hope, when) you decide to try physics in your game, should you build or buy? That, too, is a complicated question.

Rigid body dynamics is a relatively new technology for the game industry. It is mathematically intense and difficult to implement. This would seem to make it perfect for licensing, because a third party has already done the hard part, and you can just buy the technology from them. This is true to some extent, but it remains to be seen how knowledgeable programmers and designers need to be about physics to use a physics engine. As you saw last month, physics is not a technology you can put in your game and have it work perfectly every time. Current simulators are quite sensitive to initial parameters and tolerances, and require hand-holding and tuning.

We feel that developers using physics simulators to make games in the near future will need to know a fair amount about physics and the underlying simulation algorithms in order for such implementations to be successful. However, we also feel this level of knowledge is less than what's required to implement a physics simulator in the first place, so licensing might be a good idea. You won't know exactly why things are breaking, but you'll be a year ahead of the guy down the street trying to implement his simulator from scratch.

Even after reading this review, anyone who is contemplating licensing a physics engine should arrange for an evaluation period to determine how it might fit into an individual project. With a high-risk piece of core technology, there is no substitute for first-hand experience.

We reviewed each package as if we were going to license the package and either integrate it into an existing game or start a new game with the engine. These are subtly different goals, and we'll point out areas where this distinction is important later on. For this review, we looked at the following factors:

Feature set: What are the elements of each system? Can it be used to simulate a great variety of things, including machines, environments, and so on? Is the collision detector full-featured? How many constraint types are there? How many types of friction? We're focusing on the rigid body dynamics features, not things like cloth, soft bodies, water, or domain-specific subsystems such as cars and airplanes.

Documentation: Is the information included adequate and well presented? Does it address nonintuitive problems that arise? Does it discuss tuning tolerance parameters in detail? Are there clear and instructional sample programs included?

Ease of use: Are the libraries intuitive to program with?

Production: Does it include export and preview tools to simplify production and artist use? Can you save internal object representations on the disk so you don't have to let the engine preprocess them every time you load? Does the library include source code? Is it cross-platform?

Integration: Is it easy to integrate into a production? Is it modular, and are the modules well designed? Do the modules work well together?

Input and feedback: Can the game interact with the objects effectively via forces and impulses? Is useful information available from the simulation, such as object speed, contact state, and so on?

Cost: What is the cost, both in licensing and support?

Technical support: How well do the companies respond to problems?

The most glaringly omitted criteria are stability and performance. We covered stability last month with the stress tests, and as we said in that article, we cannot cover all cases. You have to test situations specific to your game design.

Performance is another complicated and hard-to-review feature. All three engines seem fast in the demos, but we implore you to try them on your representative data sets before making a judgment. Related to performance are memory and resource usage. Does one engine allocate 100MB under certain special circumstances? We don't know.

We haven't covered track records or postmortems. To our knowledge, no hit games have shipped with any of these SDKs. We've talked to a few developers using the engines, but a thorough set of interviews and developer feedback would be very interesting and useful.

Before we get into the reviews, we must note that Ipion was recently purchased by Havok, and the Virtual Physics SDK will eventually be integrated into the Havok GDK. For the time being, we will treat them as separate products and consider them separately here. The packages are presented in no particular order.

Features: Ipion's collision detection system has all the necessary features, including broad and narrow phase algorithms, and functions and utilities for generating collision volumes from meshes. You can apply forces to the objects through actuators, such as motors, springs, and even a simple buoyancy simulation. The system supports a constraint system where you can specify the degrees of freedom between the constrained objects or use built-in joints, such as hinge or ball-and-socket.

Documentation: The manual is a relatively scant 76-page Microsoft Word document. It covers the main features, but it does not go into great depth, making thorough examination of the samples and header files imperative. It would have been very helpful to have all of the classes fully explained and an overall description of the architecture.

Tuning parameters are hardly documented. The examples are numerous, providing a demonstration of the complete functionality of the system, as well as creating a starting point for most situations one would typically encounter in the development of a game. The example code comments are a little sparse. All of the examples are in an example framework, so it's hard to tell how to use the engine on its own.

Ease of use: The library has been engineered as a class hierarchy. Objects need to be allocated and owned by the system. Sometimes the public sections of classes have many "comment enforced" rules. We could not find a clear way to control the simulator step-size. No access is provided to the low-level dynamics algorithms, such as the contact solver.
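Step-size control matters because most games want the physics to advance in identical fixed steps while rendering runs at a variable frame rate. The usual pattern is an accumulator loop like the hypothetical wrapper below; stepPhysics stands in for whatever per-step call an engine exposes, and the cap on steps per frame keeps a slow frame from triggering an ever-growing backlog.

```cpp
#include <functional>

// Fixed-step driver: accumulates real frame time and advances the simulation
// in steps of exactly dt. stepPhysics is a placeholder for the engine's own
// "advance one step" call (hypothetical here).
class FixedStepper {
public:
    FixedStepper(double dt, int maxStepsPerFrame) : dt_(dt), maxSteps_(maxStepsPerFrame) {}

    // Returns the number of physics steps taken for this frame.
    int advance(double frameTime, const std::function<void(double)>& stepPhysics) {
        accumulator_ += frameTime;
        int steps = 0;
        while (accumulator_ >= dt_ && steps < maxSteps_) {
            stepPhysics(dt_);
            accumulator_ -= dt_;
            ++steps;
        }
        if (accumulator_ >= dt_) accumulator_ = 0.0;  // hit the cap: drop the backlog
        return steps;
    }

private:
    double dt_;
    int maxSteps_;
    double accumulator_ = 0.0;
};
```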

Collision detection with random polygons in Ipion's Virtual Physics SDK.

Production: Ipion doesn't include much in the way of production tools. There are no exporters for standard 3D modeling packages, nor any importers for generic 3D model files. Quake 2 .BSP files are supported with sample code. Most of the example programs generate models using custom code. Users would need to create tools in order to let artists try out their models easily. Ipion does provide a tool for creating a custom convex hull for complicated concave models; however, this tool doesn't load any standard file formats, either. There is an example of saving internal collision information to disk, but it is not documented and doesn't appear to be cross-platform-safe or version-controlled. The engine supports PC and PlayStation 2. Ipion has licensed the complete source code to game developers in the past, although it's not clear if the Havok acquisition will affect this stance.
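What would "cross-platform-safe and version-controlled" look like in practice? A minimal sketch, using an entirely hypothetical file layout: a tag and format version up front so stale files are rejected rather than misread, and every value written byte-by-byte in little-endian order so the same file loads on any target. It assumes all platforms use IEEE-754 floats.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical header values. The tag bytes spell "COLL" in the file.
const std::uint32_t kMagic = 0x4C4C4F43;
const std::uint32_t kVersion = 1;

void putU32(std::vector<std::uint8_t>& out, std::uint32_t v) {
    for (int i = 0; i < 4; ++i) out.push_back(static_cast<std::uint8_t>(v >> (8 * i)));
}

std::uint32_t getU32(const std::vector<std::uint8_t>& in, std::size_t pos) {
    std::uint32_t v = 0;
    for (int i = 0; i < 4; ++i) v |= static_cast<std::uint32_t>(in[pos + i]) << (8 * i);
    return v;
}

// Serialize preprocessed hull vertices (x, y, z triples flattened into floats).
std::vector<std::uint8_t> saveHull(const std::vector<float>& vertices) {
    std::vector<std::uint8_t> out;
    putU32(out, kMagic);
    putU32(out, kVersion);
    putU32(out, static_cast<std::uint32_t>(vertices.size()));
    for (float f : vertices) {
        std::uint32_t bits;
        std::memcpy(&bits, &f, sizeof bits);  // assumes IEEE-754 floats everywhere
        putU32(out, bits);
    }
    return out;
}

// Returns false, leaving 'vertices' untouched, on a bad tag, version, or size.
bool loadHull(const std::vector<std::uint8_t>& in, std::vector<float>& vertices) {
    if (in.size() < 12 || getU32(in, 0) != kMagic || getU32(in, 4) != kVersion) return false;
    const std::uint32_t count = getU32(in, 8);
    if (in.size() != 12 + 4 * static_cast<std::size_t>(count)) return false;
    std::vector<float> result(count);
    for (std::uint32_t i = 0; i < count; ++i) {
        const std::uint32_t bits = getU32(in, 12 + 4 * static_cast<std::size_t>(i));
        std::memcpy(&result[i], &bits, sizeof bits);
    }
    vertices = result;
    return true;
}
```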

Integration: As mentioned above, the class hierarchy might make integration with an existing architecture more difficult than with a more procedural API. The classes are well organized and mostly documented. The libraries are composed of two pieces, the physics engine and the surface builder, which allows users to create optimized convex hulls from polygon meshes at run time. If you don't need the surface builder function, you can choose not to include it.

Input and feedback: The engine has a few different ways of physically affecting the simulation, including applying impulses to objects and attaching actuators and controllers (like springs, magnets, and user-defined impulses). Ipion includes a class of "listeners" that allow the game to get information about objects and collisions in the system. With these you can determine if objects are actively being simulated or are "asleep." The collision events are very important for adding things like sound effects to a game. These listeners look very useful, though they are not terribly well documented.
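The listener idea is simple to picture. The sketch below is not Ipion's API (every name in it is hypothetical); it only shows the general shape: the game registers callback objects, the simulator reports each collision with its impulse, and a sound listener turns impact strength into a volume.

```cpp
#include <algorithm>
#include <vector>

// Hypothetical data a simulator might report for each new collision.
struct CollisionEvent {
    int bodyA;
    int bodyB;
    double impulse;  // magnitude of the normal impulse, in N*s
};

// Hypothetical listener interface: the simulator calls the game back,
// so the game doesn't have to poll every body every frame.
class CollisionListener {
public:
    virtual ~CollisionListener() = default;
    virtual void onCollision(const CollisionEvent& e) = 0;
};

// Example listener: map impact strength to a volume in [0, 1].
class ImpactSoundListener : public CollisionListener {
public:
    explicit ImpactSoundListener(double fullVolumeImpulse) : fullVolume_(fullVolumeImpulse) {}
    void onCollision(const CollisionEvent& e) override {
        volumes.push_back(std::min(1.0, e.impulse / fullVolume_));
    }
    std::vector<double> volumes;  // a real game would start playback here instead

private:
    double fullVolume_;
};

// Simulator side: after resolving contacts, notify every registered listener.
void dispatch(const std::vector<CollisionEvent>& events,
              const std::vector<CollisionListener*>& listeners) {
    for (const CollisionEvent& e : events)
        for (CollisionListener* l : listeners) l->onCollision(e);
}
```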

Cost: The base price for the Ipion SDK is $50,000 to $60,000 for a single title. Royalty-based fees can be negotiated on an individual basis.

Technical support: Technical support for the Ipion system is now handled by Havok. They have a web-based forum for answering developer questions as well as telephone and e-mail support. It is not yet clear how support will be handled between the Havok and Ipion packages.

A car built by connecting rigid objects with springs in Ipion's Virtual Physics SDK.

Features: The MathEngine system is composed of two pieces intended to work together. The Dynamics Toolkit simulates rigid bodies, constraints, and contacts. A number of constraints and contact friction types are included, and access to the dynamic simulator is provided at a number of levels. The Collision Toolkit handles collision detection between objects, but it is very basic. It only supports a small set of collision primitives (sphere, box, plane, and cylinder) and their unions. The API is modular (more on this below), so adding a custom collision detector is possible, but it would be a lot of work, which is exactly the work you were hoping to save by licensing a physics engine.
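To give a sense of what primitive-based collision detection involves, here are two of the simplest pair tests plus a union (compound) of spheres. These types and functions are our own illustration, not MathEngine's API. A union touches another shape if any member does; a real detector would also emit a contact for each overlapping member, and box and cylinder pairs are considerably more work.

```cpp
#include <algorithm>
#include <cmath>
#include <vector>

struct Vec3 { double x, y, z; };

static double dot(const Vec3& a, const Vec3& b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
static Vec3 sub(const Vec3& a, const Vec3& b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }

struct Sphere { Vec3 center; double radius; };
struct Plane { Vec3 normal; double offset; };  // dot(normal, p) == offset; unit normal

// Penetration depth: positive means the shapes overlap by that much.
double penetration(const Sphere& s, const Plane& p) {
    return s.radius - (dot(p.normal, s.center) - p.offset);
}

double penetration(const Sphere& a, const Sphere& b) {
    const Vec3 d = sub(a.center, b.center);
    return a.radius + b.radius - std::sqrt(dot(d, d));
}

// Union of spheres against a plane: the deepest member determines overlap.
double penetration(const std::vector<Sphere>& compound, const Plane& p) {
    double deepest = -1e300;
    for (const Sphere& s : compound) deepest = std::max(deepest, penetration(s, p));
    return deepest;
}
```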

Documentation: MathEngine's documentation is the best of the three packages. The manual is very thorough, with both a user's guide and a complete reference to all the classes in the architecture. There is even a glossy foldout of frequently used information. The code is well organized and documented.

The documentation is slightly inconsistent in places; for example, it uses the terms alive/awake and dead/asleep interchangeably. The friction parameters are unintuitive and nonphysical; some guidelines and a bit more documentation on setting them would have helped. The simulator's tolerance values are documented, with some effort made to explain them, and there is a section on optimizing and constructing good simulations. The demonstration programs are easy to read and understand, but they are too basic.
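
The awake/asleep terminology refers to disabling bodies that have come to rest so the simulator can skip them. MathEngine's exact criteria aren't covered here; a common heuristic, sketched below with hypothetical names and tuning values, puts a body to sleep once its linear and angular speeds have stayed below thresholds for a number of consecutive steps, and wakes it as soon as it moves again.

```python
from dataclasses import dataclass

@dataclass
class Body:
    linear_speed: float = 0.0
    angular_speed: float = 0.0
    still_frames: int = 0
    asleep: bool = False

# Hypothetical tuning values; real engines expose their own tolerances.
LINEAR_EPS = 0.01    # m/s
ANGULAR_EPS = 0.01   # rad/s
FRAMES_TO_SLEEP = 30

def update_sleep_state(body: Body) -> None:
    """Call once per step, after integration."""
    if body.linear_speed < LINEAR_EPS and body.angular_speed < ANGULAR_EPS:
        body.still_frames += 1
        if body.still_frames >= FRAMES_TO_SLEEP:
            body.asleep = True
    else:
        # Any significant motion resets the counter and wakes the body.
        body.still_frames = 0
        body.asleep = False

resting = Body()
for _ in range(FRAMES_TO_SLEEP):
    update_sleep_state(resting)

pushed = Body(linear_speed=2.0, still_frames=FRAMES_TO_SLEEP, asleep=True)
update_sleep_state(pushed)
```

Requiring several consecutive quiet frames keeps a body that is momentarily at the top of a bounce from being put to sleep in midair.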

Ease of use: The system is structured as a class hierarchy, but the library is accessed through fairly lightweight standard C functions and data structures. The API is clean and relatively well designed. The dynamics and collision toolkits are completely modular and loosely coupled, so a developer could easily use either toolkit by itself or call the well-documented contact-resolver function directly. In our opinion, this layered approach is the right way to design a physics engine API for licensing. However, MathEngine has not provided a complete interface between the two toolkits, so you have to do extra work to get them to cooperate. The system has no matrix helper functions, and allocating memory for the simulation is error-prone.
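
The benefit of this layering is that the dynamics layer needs only a list of contacts, not any knowledge of how they were found. The sketch below illustrates that boundary with 2D point masses; the names are hypothetical, and the resolver is a deliberately simple velocity-and-position correction, not MathEngine's contact solver. Any function that returns Contact records can be passed to step(), so a game could substitute its own collision detector.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

Vec2 = Tuple[float, float]

@dataclass
class Particle:
    pos: Vec2
    vel: Vec2
    inv_mass: float  # 0 for immovable objects

@dataclass
class Contact:
    body: int      # index of the particle in contact
    normal: Vec2   # unit normal pointing away from the obstacle
    depth: float   # penetration depth

# Collision layer interface: bodies in, contacts out.
CollisionDetector = Callable[[List[Particle]], List[Contact]]

def ground_detector(bodies: List[Particle]) -> List[Contact]:
    """Example detector: the ground is the line y = 0."""
    return [Contact(i, (0.0, 1.0), -b.pos[1])
            for i, b in enumerate(bodies) if b.pos[1] < 0.0]

def resolve_contacts(bodies: List[Particle], contacts: List[Contact],
                     restitution: float = 0.0) -> None:
    """Dynamics layer: cancel approaching normal velocity, then remove penetration."""
    for c in contacts:
        b = bodies[c.body]
        if b.inv_mass == 0.0:
            continue
        vn = b.vel[0] * c.normal[0] + b.vel[1] * c.normal[1]
        if vn < 0.0:
            dv = -(1.0 + restitution) * vn  # velocity change along the normal
            b.vel = (b.vel[0] + dv * c.normal[0], b.vel[1] + dv * c.normal[1])
        b.pos = (b.pos[0] + c.depth * c.normal[0], b.pos[1] + c.depth * c.normal[1])

def step(bodies: List[Particle], detect: CollisionDetector,
         dt: float, gravity: float = -9.8) -> None:
    for b in bodies:
        if b.inv_mass > 0.0:
            b.vel = (b.vel[0], b.vel[1] + gravity * dt)
            b.pos = (b.pos[0] + b.vel[0] * dt, b.pos[1] + b.vel[1] * dt)
    resolve_contacts(bodies, detect(bodies))

bodies = [Particle(pos=(0.0, 0.05), vel=(0.0, -1.0), inv_mass=1.0)]
step(bodies, ground_detector, dt=0.1)
```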

A dynamically controlled object in a scene in MathEngine.

Production: Like the Ipion library, the MathEngine system is designed mainly for coders. You will need to create any tools for importing models or letting artists preview the system. The Collision Toolkit can use RenderWare models, but no other formats. There appears to be no way to write internal preprocessed data to disk. The engine supports the PC and PlayStation 2 platforms. MathEngine does not generally license complete source code, but the company said it would discuss it on a case-by-case basis.

Integration: The layered API makes integration very easy, probably much easier than with the other two engines. If your program already handles collision detection, you can easily use only the dynamics system, or vice versa. MathEngine provides most of the source code for the abstraction layer that communicates with the dynamics and constraint solver.

Input and feedback: There are simple APIs for exerting forces and torques on bodies, but the programmer must apply joint forces and torques manually. Some of the toolkits and subsystems provide callback functions for feedback and others provide "get" functions; for most of the rest, enough sample code is included that you can access the data structures directly.
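
When an engine offers only "add force" and "add torque" calls, applying a force at an arbitrary point on a body, such as a joint force you compute yourself, means deriving the torque as the cross product of the lever arm (the point minus the center of mass) with the force. A minimal accumulator sketch, with hypothetical names:

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]

def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

class RigidBody:
    def __init__(self, center_of_mass: Vec3):
        self.com = center_of_mass
        self.force: Vec3 = (0.0, 0.0, 0.0)
        self.torque: Vec3 = (0.0, 0.0, 0.0)

    def add_force(self, f: Vec3) -> None:
        self.force = tuple(a + b for a, b in zip(self.force, f))

    def add_torque(self, t: Vec3) -> None:
        self.torque = tuple(a + b for a, b in zip(self.torque, t))

    def add_force_at_point(self, f: Vec3, point: Vec3) -> None:
        """An off-center force also produces a torque: r x F."""
        r = tuple(p - c for p, c in zip(point, self.com))
        self.add_force(f)
        self.add_torque(cross(r, f))

    def clear_accumulators(self) -> None:
        """Call after each integration step."""
        self.force = (0.0, 0.0, 0.0)
        self.torque = (0.0, 0.0, 0.0)

def apply_joint_force(a: RigidBody, b: RigidBody, f: Vec3, anchor: Vec3) -> None:
    """Hypothetical helper: equal and opposite forces on both jointed bodies."""
    a.add_force_at_point(f, anchor)
    b.add_force_at_point(tuple(-x for x in f), anchor)

wheel = RigidBody(center_of_mass=(0.0, 0.0, 0.0))
wheel.add_force_at_point((0.0, 1.0, 0.0), point=(1.0, 0.0, 0.0))
# wheel.force == (0.0, 1.0, 0.0); wheel.torque == (0.0, 0.0, 1.0)

left = RigidBody((0.0, 0.0, 0.0))
right = RigidBody((2.0, 0.0, 0.0))
apply_joint_force(left, right, (0.0, 1.0, 0.0), anchor=(1.0, 0.0, 0.0))
```

Applying the joint force to both bodies keeps the pair's net force at zero, as Newton's third law requires.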

Cost: MathEngine has two pricing plans: either a single fee of $50,000 per project, or $5,000 plus a royalty of 50 cents per unit.
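
Choosing between MathEngine's two plans ($50,000 flat, or $5,000 plus 50 cents per unit) comes down to expected sales. Assuming the list prices and no other negotiated terms, setting the royalty plan's total equal to the flat fee gives the break-even unit count n:

```latex
5{,}000 + 0.50\,n = 50{,}000
\quad\Longrightarrow\quad
n = \frac{50{,}000 - 5{,}000}{0.50} = 90{,}000 \text{ units}
```

Below roughly 90,000 units the royalty plan costs less; above that, the flat fee does.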

Technical support: The MathEngine web site has a developers' forum for discussing the toolkits. Support over both e-mail and telephone is very good, although we did not test this support anonymously.

Features: Havok provides an underlying rigid body simulator with a toolkit for higher-level access layered on top. Havok's constraint system is weak compared to those of the other libraries, supporting only spherical joints and point-to-path constraints. The collision subsystem is full-featured, supporting simple collision volumes as well as convex hulls and true concave objects. A number of contact-solver friction types are included. In addition to basic rigid body dynamics, the system simulates soft body objects (such as blobs and cloth), particles, and a simple fluid model; however, we didn't test these features and can't vouch for their completeness.
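
We can't say how Havok implements its point-to-path constraint. One simple way to picture such a constraint is as a projection that moves the constrained point back onto the nearest point of a path after each step; the 2D sketch below, with hypothetical names, does that for a polyline path. A full constraint would also remove the velocity component that carries the point off the path.

```python
from typing import List, Tuple

Vec2 = Tuple[float, float]

def closest_point_on_segment(p: Vec2, a: Vec2, b: Vec2) -> Vec2:
    ab = (b[0] - a[0], b[1] - a[1])
    ap = (p[0] - a[0], p[1] - a[1])
    denom = ab[0] * ab[0] + ab[1] * ab[1]
    # Parameter t along the segment, clamped to [0, 1]; a degenerate
    # segment collapses to its start point.
    t = 0.0 if denom == 0.0 else (ap[0] * ab[0] + ap[1] * ab[1]) / denom
    t = max(0.0, min(1.0, t))
    return (a[0] + t * ab[0], a[1] + t * ab[1])

def project_onto_path(p: Vec2, path: List[Vec2]) -> Vec2:
    """Return the point on the polyline path nearest to p."""
    best, best_d2 = path[0], float("inf")
    for a, b in zip(path, path[1:]):
        q = closest_point_on_segment(p, a, b)
        d2 = (q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2
        if d2 < best_d2:
            best, best_d2 = q, d2
    return best

track = [(0.0, 0.0), (10.0, 0.0), (10.0, 10.0)]
snapped = project_onto_path((4.0, 3.0), track)
around_corner = project_onto_path((12.0, 8.0), track)
```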

Documentation: Havok provides a nice set of documentation, with updates posted on its web site. While not as thorough as MathEngine's, the documents were useful. There were a few minor inconsistencies in function names and terms. There is a wide variety of well-documented examples at various levels of complexity. The underlying simulator API is not as well documented.

Ease of use: The Havok GDK is very easy to use, with the code organized in an easy-to-understand class hierarchy. The system provides helper utilities for common math functions. The underlying simulation API seems slightly more difficult to use and not quite as fully exposed as MathEngine's, but it is more complete overall because of the advanced collision detector.

Production: Because the system provides a plug-in for 3D Studio Max, artists can start using it easily. They can create models with bounding volumes and run them in the simulation without programmer involvement. Another nice feature lets you dump the state of the objects in the simulation to a file at any point, to reload later or to examine for debugging. When you are trying to debug a physics-heavy game, features like this are very useful. The library is available for the PC, Macintosh, and PlayStation 2 platforms. Havok does not license the engine source code, though it will discuss full source-level needs individually.
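
We don't know Havok's dump file format. As a minimal sketch of the idea, assuming a hypothetical JSON layout, a snapshot records each body's position, orientation, and velocities so that a problem case can be written out and reloaded later:

```python
import json
from dataclasses import asdict, dataclass
from typing import List

@dataclass
class BodyState:
    name: str
    position: List[float]
    orientation: List[float]       # quaternion (w, x, y, z)
    linear_velocity: List[float]
    angular_velocity: List[float]

def state_to_json(bodies: List[BodyState]) -> str:
    return json.dumps([asdict(b) for b in bodies], indent=2)

def state_from_json(text: str) -> List[BodyState]:
    return [BodyState(**d) for d in json.loads(text)]

def dump_state(bodies: List[BodyState], path: str) -> None:
    """Write a snapshot to disk, e.g., when a bug is spotted during play."""
    with open(path, "w") as f:
        f.write(state_to_json(bodies))

def load_state(path: str) -> List[BodyState]:
    with open(path) as f:
        return state_from_json(f.read())

snapshot = [BodyState("crate", [1.0, 2.0, 0.5], [1.0, 0.0, 0.0, 0.0],
                      [0.0, -3.2, 0.0], [0.1, 0.0, 0.0])]
restored = state_from_json(state_to_json(snapshot))
```

A text format is slower and larger than a binary one, but it can be read and edited by hand while debugging.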

Dynamically controlled objects in a scene in Havok.


Integration: Havok breaks the package into several libraries. While it may be possible to leave out unused portions, the libraries are very tightly integrated. This makes programming easier at the expense of modularity.

Input and feedback: Input to the simulation is through "action" classes that are called back during integration, and through a complete set of access functions on the bodies. Because the constraint system only supports one type of constraint, there are no constraint actuator functions. The Havok GDK provides a complete set of event callbacks and access functions to determine what is happening inside the simulator.
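
The signatures of Havok's action classes aren't covered here, but the pattern is easy to sketch. An action is an object the integrator calls once per step so it can apply forces. Below, with hypothetical names and a 1D simplification, a damped spring acts between two point masses, followed by a semi-implicit Euler update.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class PointMass:
    x: float          # 1D position, for brevity
    v: float
    mass: float
    force: float = 0.0

class Action:
    """Hypothetical base class: apply() runs once per step, before integration."""
    def apply(self, dt: float) -> None:
        raise NotImplementedError

class DampedSpring(Action):
    def __init__(self, a: PointMass, b: PointMass, rest: float, k: float, c: float):
        self.a, self.b, self.rest, self.k, self.c = a, b, rest, k, c

    def apply(self, dt: float) -> None:
        # dt is unused here but available to time-dependent actions.
        stretch = (self.b.x - self.a.x) - self.rest
        rel_vel = self.b.v - self.a.v
        f = self.k * stretch + self.c * rel_vel  # pulls the masses together when stretched
        self.a.force += f
        self.b.force -= f

def step(bodies: List[PointMass], actions: List[Action], dt: float) -> None:
    for action in actions:
        action.apply(dt)
    for body in bodies:
        # Semi-implicit Euler: update velocity first, then position.
        body.v += body.force / body.mass * dt
        body.x += body.v * dt
        body.force = 0.0

a = PointMass(x=0.0, v=0.0, mass=1.0)
b = PointMass(x=2.0, v=0.0, mass=1.0)
spring = DampedSpring(a, b, rest=1.0, k=10.0, c=2.0)
for _ in range(2000):
    step([a, b], [spring], dt=0.01)
```

After 20 simulated seconds, the separation has settled at the spring's rest length, and the two velocities remain equal and opposite.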

Cost: The Havok 3D Studio Max plug-in is $495 per seat with multi-seat discounts available. The Havok GDK is available for $65,000 to $75,000 per title without royalties. Royalty pricing and other pricing options are available on an individual basis.

Technical support: Like MathEngine, Havok has a developers' forum on its web site for discussing the toolkits. Technical support was very good, but again, we did not request it anonymously.

All three of these packages performed better than we expected. They are clearly up to the task of handling most rigid body simulation needs. But which one is right for you? We arrived at some general conclusions.

While its collision detection system is very fast and flexible, the Ipion Virtual Physics SDK has some problems. It lacks adequate documentation, and the code architecture may make integration with your game application more difficult. It is a product at the end of its life, and unless your needs are very near-term and it is a perfect fit for your code structure, we would hesitate to recommend it. We do hope that Havok can integrate many of Ipion's good features into their architecture.

The Havok GDK is the most full-featured physics engine available. The system handles collision detection of arbitrary geometries and has models for soft bodies, particle systems, and basic fluid dynamics. The documentation is fairly good (though we hope it continues to improve), and the code examples are plentiful and very useful. The availability of a 3D Studio Max tool for artists is a great aid to production. If you need a one-stop, do-everything physics engine, this is the one for you. The only weak points are its constraints (admittedly, a very large weak point, especially if you're interested in simulating articulated figures), library modularity, and occasional collision resolution issues.

Together, MathEngine's Dynamics Toolkit 2.0 and Collision Toolkit 1.0 make a very well-designed SDK. The documentation is the best of the three, and technical support is outstanding. The well-documented architecture makes the engine easy to integrate with existing game projects. Programmers with knowledge of dynamic simulators should be able to dig right down into the core and control the simulation. The dynamic simulator is very good, and the rigid-constraint support is the best of the three systems we looked at. However, the lack of advanced collision detection methods might be a showstopper. (We must note here that as we went to press, we learned that a new beta version of the MathEngine Toolkit had been released that includes collisions with convex objects, among other new features. We didn't have time to check this out thoroughly, but because all of these packages are constantly evolving, we again encourage you to do your homework when weighing your options.) Finally, the simplicity of the demos may make it tough to find specific examples to build on.

As we've said many times, you must review the packages against your specific needs before making a decision. Physics middleware is not yet mature enough to buy a package based simply on a recommendation. All three packages are available for evaluation, and evaluating them yourself is really the only way to make sure you get the package that is right for you and your project.

There are other options beyond the rigid body dynamics simulators we discussed here. Several middleware providers have created tools to aid the development of physical simulations. Here are a couple we haven't looked at closely, so "caveat programmer."

The ReelMotion Animation Tool (www.reelmotion.com) is a simulator for generating animation data for a variety of vehicles such as cars, helicopters, airplanes, and motorcycles. It uses dynamic simulation and sophisticated physical models for the objects to generate the motion data.

Hypermatter from Second Nature (www.hypermatter.net) provides a real-time system for animating soft body objects. The stiffness of an object can be controlled, so objects can range from rigid to very soft while still preserving their original volume. The system contains a number of features common to rigid body simulators, such as constraints. The toolkit is currently available for the PC, and a PlayStation 2 version is in development.

Havok GDK and Ipion's Virtual Physics SDK

www.havok.com

MathEngine's Dynamics Toolkit 2.0 and Collision Toolkit 1.0

www.mathengine.com
