Sponsored by Intel

[In his latest Intel-sponsored Gamasutra column, Orion Granatir offers the key highlights of Intel's entry into the processor-graphics arena: Sandy Bridge (codename for the recently announced 2nd Generation Intel Core processors).

New opportunity is knocking, and its name is Processor Graphics: the integration of graphics functionality into the CPU. Processor graphics will soon be found on computers everywhere, meaning expanded markets for your next-gen video games.

Topics for this article include architecture overview, performance benefits, and tips on what developers should consider when optimizing their games for processor graphics-based machines.]

Processor graphics will soon be found in computers everywhere. Intel calls this new capability Intel HD Graphics; at AMD, it's called AMD Fusion. Both names refer to the integration of graphics functionality into the CPU.

At this year's Intel Developer Forum, the 2nd Generation Intel Core processors (codenamed "Sandy Bridge") were announced. One key feature of this architecture is the tighter integration of graphics into the processor.

One advantage of working at Intel is having early access to leading-edge technology. I'm writing this column on my Sandy Bridge machine. Right now it may be another big dev box on my desk, but this chip will be in mainstream laptops in 2011.

I can't share any numbers, but I can tell you that the processor graphics are pretty good. My machine runs one of my favorite games, BioWare's Mass Effect 2, at a solid frame rate in glorious detail (by the way, my mission was truly suicidal).

The ring architecture that connects the processor cores (x86 cores) is now also connected to the processor graphics. This ring interconnect enables high-speed, low-latency communication between the processor cores, processor graphics, and other integrated components, such as the memory controller and the display.

Basically, the processor cores and graphics engine communicate through a shared cache, creating some interesting possibilities for tight CPU/GPU interaction. We'll explore some of these topics in future articles.

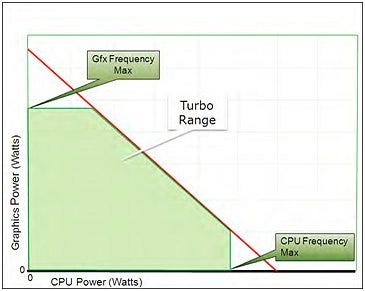

The integration of processor components also brings improvements to Intel Turbo Boost Technology. Intel Turbo Boost Technology enables the processor to adjust the processor core and processor graphics frequencies to increase performance while staying within the allotted power/thermal budget. This means the processor can increase individual core speed or graphics speed as the workload dictates.

Figure: Power Load Line

To learn more about Intel Turbo Boost Technology, check out the white paper: Intel HD Graphics Dynamic Frequency Technology. (And if you want to get this type of content delivered regularly, sign up: Intel Software Dispatch for Visual Adrenaline.)

During performance analysis, it's important to pay attention to dynamic frequencies. A typical game loads both the CPU and the GPU, and the processor finds a good balance between them. However, if you are playing back a captured frame, the CPU workload might be lower than in the live game, so the graphics dynamic frequency might differ and affect your results. The relative cost of the work within the frame should scale together, but the absolute time to complete the frame might be unexpected.

Since Intel Turbo Boost Technology is controlled automatically by the processor, a developer cannot control it directly. However, it's important to understand how it works. Most games I have investigated benefit from the graphics turbo scaling.

The addition of Intel Advanced Vector Extensions (Intel AVX) is another interesting Sandy Bridge feature. AVX extends SIMD (single instruction, multiple data) instructions from 128 bits to 256 bits. For floating-point-intensive applications, a single AVX instruction can operate on eight single-precision floating-point values at a time instead of the four that current 128-bit SIMD provides.

It's important to note other hardware vendors have also announced support for AVX.

Most developers will just use the latest Microsoft Visual Studio compiler or Intel's C/C++ Compiler to take advantage of AVX. But for the clock-counting, bit-shifting tech heads out there, you can learn more at Intel's AVX website (it even includes an emulator).

The best way to work with AVX is through intrinsics, which are supported by both the Microsoft and Intel compilers. Anyone familiar with programming SSE or the PlayStation 3's SPUs will be good "frenemies" with intrinsics. Intrinsics are compiler-specific functions that usually compile down to highly efficient inlined machine instructions.

Since the compiler has a strong understanding of intrinsics, it will often generate faster code than hand-written inline assembly. Intrinsics are the best way to write high-throughput, compute-intensive code on the CPU (intrinsics are an acquired taste, like wine or Remedy Entertainment's Alan Wake).
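To make this concrete, here is a minimal sketch of AVX intrinsics in C++. It is only an illustration, not code from Intel's documentation: the function name `add_arrays` is hypothetical, and it assumes AVX has been enabled at compile time (for example `/arch:AVX` with the Microsoft compiler or `-mavx` with GCC/Clang), which is what defines the `__AVX__` macro.

```cpp
#include <cassert>
#include <cstddef>

#if defined(__AVX__)
#include <immintrin.h>  // AVX intrinsics (_mm256_*)
#endif

// Hypothetical helper: out[i] = a[i] + b[i] for n floats.
// When the build enables AVX, the main loop adds eight floats per
// instruction; otherwise the scalar loop below does all of the work.
void add_arrays(const float* a, const float* b, float* out, std::size_t n) {
    assert(n == 0 || (a != nullptr && b != nullptr && out != nullptr));
    std::size_t i = 0;
#if defined(__AVX__)
    for (; i + 8 <= n; i += 8) {
        __m256 va = _mm256_loadu_ps(a + i);  // unaligned load of 8 floats
        __m256 vb = _mm256_loadu_ps(b + i);
        _mm256_storeu_ps(out + i, _mm256_add_ps(va, vb));
    }
#endif
    // Scalar tail for the last n % 8 elements (or the whole array
    // when AVX is not enabled in this build).
    for (; i < n; ++i) {
        out[i] = a[i] + b[i];
    }
}
```

This sketch decides at compile time whether to use AVX. A shipping game would typically also verify at run time that the CPU and operating system support AVX before taking the 256-bit path; that check is left out here to keep the example short.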

Intel engineers and performance-hungry developers are already exploring ways AVX can benefit game and graphics applications. For example, my coworker Stan Melax just wrote a great article presenting a programming pattern that improves the performance of geometry computations by transposing packed 3D data on the fly.
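The data-layout idea behind that pattern can be sketched in plain C++. This is not the code from Stan's article, and it deliberately uses scalar loops rather than AVX shuffles; the names `Points8`, `transpose_to_soa`, and `squared_lengths` are hypothetical. It only shows why transposing packed x/y/z triples into separate x, y, and z lanes lines the data up so that one operation can be applied to eight points at once.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

// Eight 3D points stored as separate x, y, and z lanes, one lane per point.
// Each array is the size of one 256-bit AVX register of floats.
struct Points8 {
    std::array<float, 8> x, y, z;
};

// Transposes eight packed points (x0 y0 z0 x1 y1 z1 ...) into lane form.
// `packed` must point at 24 floats.
Points8 transpose_to_soa(const float* packed) {
    assert(packed != nullptr);
    Points8 p{};
    for (std::size_t i = 0; i < 8; ++i) {
        p.x[i] = packed[3 * i + 0];
        p.y[i] = packed[3 * i + 1];
        p.z[i] = packed[3 * i + 2];
    }
    return p;
}

// Squared length of each point. Every lane performs the same arithmetic,
// which is the shape of work that a 256-bit SIMD instruction handles well.
std::array<float, 8> squared_lengths(const Points8& p) {
    std::array<float, 8> result{};
    for (std::size_t i = 0; i < 8; ++i) {
        result[i] = p.x[i] * p.x[i] + p.y[i] * p.y[i] + p.z[i] * p.z[i];
    }
    return result;
}
```

In an AVX version, each of the three arrays would live in one 256-bit register and the loop in `squared_lengths` would collapse into a handful of multiply and add intrinsics.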

AVX is interesting, but let's shift our focus to the graphics engine.

Remember, Sandy Bridge is a DirectX 10.1 part. I like the DX11 multithreaded API, so most of my current code uses the DX11 API with the proper DX10.1 "feature level" set for Sandy Bridge. With full DX10.1 support, there are no major surprises when programming for Intel graphics. However, there are a few things to keep in mind.
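As a rough sketch of what "DX11 with a DX10.1 feature level" can look like, the following C++ asks `D3D11CreateDevice` for the best available level from a list that includes 10.1. The wrapper name `query_granted_feature_level` is hypothetical, the Direct3D code is only compiled on Windows, and the choice to return the level as a plain number (0xa100 for 10.1) is an assumption made to keep the example small.

```cpp
#include <cassert>

#ifdef _WIN32
#include <d3d11.h>
#pragma comment(lib, "d3d11.lib")  // MSVC-style link request
#endif

// Hypothetical helper: creates a hardware D3D11 device, reports which
// feature level the runtime granted, then releases the device again.
// Returns the numeric D3D_FEATURE_LEVEL value (0xb000 = 11.0,
// 0xa100 = 10.1, 0xa000 = 10.0), or 0 on failure or on non-Windows builds.
unsigned query_granted_feature_level() {
#ifdef _WIN32
    // Listed from most to least capable; the runtime grants the first
    // level in this list that the hardware and driver support.
    const D3D_FEATURE_LEVEL wanted[] = {
        D3D_FEATURE_LEVEL_11_0,
        D3D_FEATURE_LEVEL_10_1,
        D3D_FEATURE_LEVEL_10_0,
    };
    ID3D11Device* device = nullptr;
    ID3D11DeviceContext* context = nullptr;
    D3D_FEATURE_LEVEL granted = D3D_FEATURE_LEVEL_10_0;

    HRESULT hr = D3D11CreateDevice(
        nullptr,                   // default adapter
        D3D_DRIVER_TYPE_HARDWARE,
        nullptr,                   // no software rasterizer module
        0,                         // no creation flags
        wanted, sizeof(wanted) / sizeof(wanted[0]),
        D3D11_SDK_VERSION,
        &device, &granted, &context);
    if (FAILED(hr)) {
        return 0;
    }
    assert(device != nullptr && context != nullptr);
    context->Release();
    device->Release();
    return static_cast<unsigned>(granted);
#else
    return 0;  // Direct3D is not available on this platform
#endif
}
```

A real application would keep the device and context instead of releasing them, and would branch on the granted level to enable or skip features that need more than DX10.1.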

The memory layout for processor graphics is different than it is for a discrete card. Graphics applications often check the amount of free video memory early in execution. Because Intel HD Graphics devices allocate graphics memory dynamically (based on application requests), you need to know the total amount of memory that is truly available to the graphics device. Memory checks that report only the amount of "local" or "dedicated" graphics memory do not give an appropriate value for Intel HD Graphics devices.

All video memory on Intel HD Graphics and earlier generations, including Intel Graphics Media Accelerator Series 3 and 4, uses Dynamic Video Memory Technology (DVMT). DVMT memory is considered "local memory," so "non-local video memory" will show as zero (0). Do not use that value to determine compatibility with Accelerated Graphics Port (AGP) or PCI Express.

To accurately detect the amount of memory available, you'll need to check the total video memory availability. The Microsoft DirectX SDK (June 2010) includes the VideoMemory sample code and describes five commonly used methods to detect the total amount of video memory.

Applications targeting Microsoft Windows Vista and Microsoft Windows 7 should reference GetVideoMemoryViaDXGI. For Microsoft Windows XP applications, GetVideoMemoryViaWMI is a good starting place. For more information, visit the Microsoft sample code site.
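For orientation, here is a minimal sketch of a DXGI-based check in C++. It is not the SDK's VideoMemory sample. The struct `VideoMemoryInfo` and both function names are hypothetical, the DXGI code is only compiled on Windows, and treating the sum of the three `DXGI_ADAPTER_DESC` memory fields as the "total" is an assumption that you should compare against the sample and the Intel guides.

```cpp
#include <cassert>
#include <cstdint>

#ifdef _WIN32
#include <dxgi.h>
#pragma comment(lib, "dxgi.lib")  // MSVC-style link request
#endif

// Hypothetical container for the three memory sizes DXGI reports, in bytes.
struct VideoMemoryInfo {
    std::uint64_t dedicated_video;   // memory dedicated to the adapter
    std::uint64_t dedicated_system;  // system memory reserved for the adapter
    std::uint64_t shared_system;     // system memory the adapter may share
};

// Assumption: "total" means everything the adapter could use, not only
// the dedicated portion, which is small on processor graphics.
std::uint64_t total_video_memory(const VideoMemoryInfo& m) {
    return m.dedicated_video + m.dedicated_system + m.shared_system;
}

// Fills `out` from the first DXGI adapter. Returns false on failure
// or on non-Windows builds.
bool query_primary_adapter_memory(VideoMemoryInfo* out) {
    assert(out != nullptr);
#ifdef _WIN32
    IDXGIFactory* factory = nullptr;
    if (FAILED(CreateDXGIFactory(__uuidof(IDXGIFactory),
                                 reinterpret_cast<void**>(&factory)))) {
        return false;
    }
    bool ok = false;
    IDXGIAdapter* adapter = nullptr;
    if (SUCCEEDED(factory->EnumAdapters(0, &adapter))) {
        DXGI_ADAPTER_DESC desc = {};
        if (SUCCEEDED(adapter->GetDesc(&desc))) {
            out->dedicated_video = desc.DedicatedVideoMemory;
            out->dedicated_system = desc.DedicatedSystemMemory;
            out->shared_system = desc.SharedSystemMemory;
            ok = true;
        }
        adapter->Release();
    }
    factory->Release();
    return ok;
#else
    return false;  // DXGI is not available on this platform
#endif
}
```

On a processor-graphics machine, a check that looks only at `dedicated_video` would likely under-report what the game can actually use, which is the pitfall described above.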

The best place to get started with Intel processor graphics is to check out the Intel Graphics Developer's Guides. Even though the guides don't yet discuss specifics for Sandy Bridge, you'll find this information useful in learning about Intel Graphics. Once the product introduction is complete for Sandy Bridge, we'll post an updated guide.

With nearly a million PCs shipped each day, the available market for processor graphics is growing quickly. It's worthwhile to understand processor graphics and to validate your games on them. Soon, processor graphics will be everywhere.

[You can check out an archive of Orion Granatir's continuing Gamasutra sponsored column series via his Gamasutra biography page.]
