John Szczepaniak chats with the legendary Steve Wozniak about programming, his vision for the first Apple computers, and performing magic on an apartment floor in Cupertino to create the first Apple variant of the BASIC programming language.

The August 2012 issue of Game Developer Magazine carried an article I wrote titled "A Basic History of BASIC", for which I spoke with the language's surviving co-creator, Dr Thomas Kurtz. I also spoke with pioneering figures David Ahl, author of the million-selling 101 BASIC Computer Games, and Steve Wozniak, co-founder of Apple. Given the cross-pollination between Gamasutra and Game Developer, there's a good chance this BASIC article will feature on Gamasutra at some point. For anyone who has written even one line of BASIC code (that's all of us, right?), it should prove an interesting read, detailing how the language came about and the surprising divergence it underwent.

In the meantime, however, I ended up with a lot of unused material from the Wozniak interview, since it was done via Skype. I've re-added some small bits from the GD Mag article, to flesh out the context of some sections, but otherwise below you'll find the remainder of the interview, wrapped up in a kind of mini-feature, detailing his introduction to computers, his early work on the Apple, creating Integer BASIC by hand, the programming of Little Brick Out, and an interesting anecdote regarding some prototype Apple II hardware which was never released.

It was a real pleasure chatting with Steve Wozniak, who kindly took time out from a busy schedule. Hearing his recollections reinforces my view that the man is a genius - a true intellectual titan. I hope you get as much enjoyment from reading these recollections as I did from hearing them.

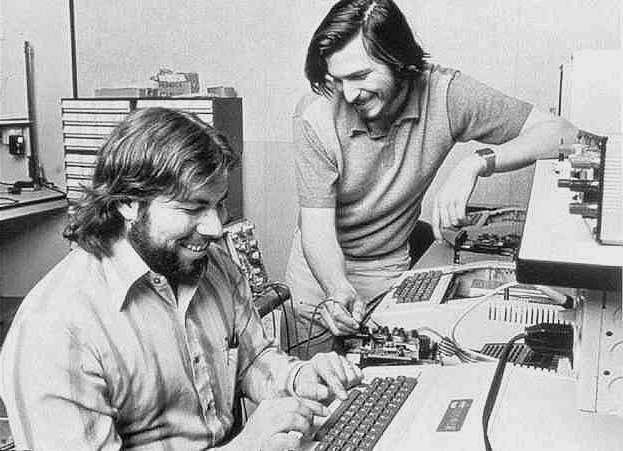

Apologies about the spartan nature of the imagery. The below photo was taken randomly from the internet, while the Little Brick Out image is my own.

World of Wozcraft

BASIC, or Beginner's All-purpose Symbolic Instruction Code, was an important factor in the early home computers that emerged during the mid-1970s. The higher-level language, which was easier to learn than something like assembly language, first came into existence on the 1st of May, 1964, at 4am, having been created by Dr Thomas Kurtz and the late Dr John Kemeny at Dartmouth College in the USA. It's nearly 50 years old, older than the commercial games industry itself. Although it started on university time-share networks, the fact that the language was freely available meant there were numerous efforts to port it to different systems.

Steve Wozniak recalled how he'd been exposed to BASIC as far back as his school days: "I discovered it very briefly, kind of almost for one or two days, back in high school. My electronics teacher had arranged for me to go to a company, outside of school once a week, only myself, and learn to program there. And I taught myself how to program FORTRAN from a manual. Back at high school, one day they brought in terminals that could connect to a faraway computer over phone lines, and let us write programs in BASIC. So I looked at the BASIC, and it was similar to FORTRAN in a lot of ways, and I wrote a couple of short programs and typed them into a teletype. So I had seen BASIC, but only enough to know that it was similar to FORTRAN."

Today there are over 280 variants available for arguably every computer system, and several games consoles too. During the 1970s though, out of a complex maelstrom of variation, there were two prominent companies. One was Digital Equipment Corporation, which started creating versions for its tiered computer systems. To go alongside them, as proof of what the computers could do, David Ahl collected BASIC programs together and published the book 101 BASIC Computer Games, which through various reprints would go on to sell a million copies. The other was the then-named Micro-Soft, where Bill Gates and Paul Allen created Altair BASIC for the MITS Altair computer. In fact Microsoft went on to find tremendous success in producing and licensing versions of BASIC for a whole range of international computers. If you were going to launch new hardware you needed a language alongside it, and BASIC's ease of use meant it was hot stuff. There were other languages, such as DEC's slightly superior FOCAL, but according to Ahl, DEC was unwilling to license it to other computer manufacturers.

In these nascent days, early computers had a lot of shortcomings. "Eventually the manufacturers started to look like they might come up with a formula that could be useful at an affordable price," explained Wozniak. "The first hobby computers were affordable, but they couldn't run any programs, because you had to add thousands of dollars of memory, and you had to get a BASIC that Bill Gates wrote, and you had to get a teletype that cost more than cars, to have an input/output device. So they hadn't discovered the formula of the affordable computer yet."

As Wozniak explained, BASIC was fundamental to this formula of usefulness. You needed a higher level language, since as he put it: "You couldn't solve problems typing machine code in by ones and zeros, toggling switches and lights. That wouldn't work. Bill Gates' BASIC had got his name known in our computer club. We were possibly the only computer club in the world [at that time]. We had about 500 members that met every two weeks, and they had a tape of Bill Gates' BASIC. A paper tape with punch-holes, that you could read into a machine called a teletype. So you'd read this tape through, ba-pa-pa, for 20 minutes, and it gets all the ones and zeros into the memory of your little computer, and you can then run BASIC."

"I had my computer design for the affordable computer," explained Wozniak, "and one of the keys was you need 4K of memory to run a language like BASIC. In my earlier life in high school, I told my dad I was going to own a 4K computer someday, and he said it would cost as much as a house. And I said: I live in an apartment! But I needed 4K to run a language like I had run in high school. I wanted to do that all on my own, and I wanted to own it."

As he revealed, that first computer design was very much a home-made effort: "I had the formula to build affordable hardware, based around a microprocessor and some video terminal that I had built using my home TV, and a keyboard. So I had the whole formula in my head, but the microprocessor I had chosen had no higher level languages. All it had was what was called machine language - ones and zeros. So I had to create a BASIC using those ones and zeros. I sat down, I organised it, I got it into a logical fashion, I reflected for a long time all alone - that's my style - and I said that the world needs BASIC. I wasn't going to sell it for money or anything, I just wanted to develop the computer. But I had to develop a BASIC."

Integers and Floating Point

Wozniak set about creating what would be known as Integer BASIC, which shipped on all early Apple II computers but was short-lived because it did not support floating point numbers. As he explains: "I wrote down a complete syntax chart of the commands that were in the H-P BASIC manual, and I included floating point arithmetic, decimal points, numbers and everything. Then I started thinking it was going to take me a month longer - I could save a month if I left out the floating point." To explain it very loosely, a floating point number is one with a fractional part after the decimal point, for example 3.14159. Without floating point numbers, variables can only hold whole numbers.

However, Wozniak was confident that a good mathematician can work around the limitation of integers: "We made the first handheld scientific calculators at H-P, that's what I was designing, and they would work with transcendental numbers, like SIN and COSIN. And was everything floating point? No! We did all the calculations inside our calculators digitally, for higher accuracy and higher speed, with integers. I said, basically integers can solve anything. I was a mathematician of the type that wanted to solve things with integers. You could always have dollars and cents as separate integer numbers - all you need for games is integers. So I thought, I'll save a month writing my BASIC, and I'll have a chance to be known as the first one to write a BASIC for the 6502 processor. I said: I'll become famous like Bill Gates if I write it the fastest I could. So I stripped out the floating point."
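
The dollars-and-cents idea can be sketched in a few lines. The Python below is an illustration only, not anything from Wozniak's BASIC; the function names `to_cents` and `format_cents` are hypothetical, and it assumes non-negative amounts. Money is kept as one integer count of cents, so no floating point is ever needed.

```python
def to_cents(dollars: int, cents: int) -> int:
    """Combine separate integer dollars and cents into one integer."""
    return dollars * 100 + cents


def format_cents(total: int) -> str:
    """Split a non-negative integer number of cents back into dollars and cents."""
    dollars, cents = divmod(total, 100)
    return f"${dollars}.{cents:02d}"


# Add $3.75 and $1.50 using integer arithmetic only.
total = to_cents(3, 75) + to_cents(1, 50)
print(format_cents(total))  # prints $5.25
```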

What seemed like a good plan led to complications though. "The biggest problem in writing my BASIC was I could not afford what is called a time-share assembler. That's where you take a terminal, call a local phone number, connect to some far away computer, and type your programs in using computer machine language. Well, I couldn't afford that, so what I did was I wrote my programs in machine language on paper, and then I looked at little charts to figure out what ones and zeroes would get created by them, to make them work. And I would write the ones and zeros down in Base-16, or hexadecimal, and I would type that into my own computer that I had built, to test out if things would work. It would take me 40 minutes solid typing to type my entire BASIC in, when it was nearing completion. So writing the BASIC program, I never used a computer. If you go back to Bill Gates or anyone else who wrote a computer language, they used a computer to type their program in. I'm sure I must be the only one who wrote a program completely by hand in my own handwriting - and I still have it! By the time we shipped the Apple II, every bit of code had only ever been written by hand, not typed into a computer."
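
To give a feel for what assembling by hand involves, here is a minimal sketch in Python. It is not Wozniak's process or code: the `OPCODES` table, the `assemble` function and the three-instruction program are made up for illustration, although the three opcode values are the standard 6502 encodings. The lookup table stands in for the "little charts", and the printed result is the kind of hexadecimal listing that would then be typed in by hand.

```python
# A tiny slice of a 6502 opcode chart: (mnemonic, addressing mode) -> opcode byte.
OPCODES = {
    ("LDA", "immediate"): 0xA9,
    ("STA", "absolute"): 0x8D,
    ("RTS", "implied"): 0x60,
}


def assemble(program):
    """Translate (mnemonic, mode, operand) tuples into a list of bytes."""
    output = []
    for mnemonic, mode, operand in program:
        output.append(OPCODES[(mnemonic, mode)])
        if mode == "immediate":
            output.append(operand & 0xFF)
        elif mode == "absolute":
            # The 6502 stores 16-bit addresses low byte first.
            output.append(operand & 0xFF)
            output.append((operand >> 8) & 0xFF)
    return output


program = [
    ("LDA", "immediate", 0x07),   # load the value 7
    ("STA", "absolute", 0x0300),  # store it at $0300 (an arbitrary example address)
    ("RTS", "implied", None),     # return to the caller
]
hex_listing = " ".join(f"{byte:02X}" for byte in assemble(program))
print(hex_listing)  # prints A9 07 8D 00 03 60
```

Because every address is written out as fixed bytes, inserting even one instruction in the middle would shift everything after it, which hints at why changes were so painful without an assembler.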

Of course if you're creating an entire computer language by handwriting it, and then are manually converting the assembly into hexadecimal, it makes implementing even a small change rather troublesome. "I knew floating point very well," he explains. "My BASIC was unfortunate, because if I had typed it into an assembler, I could just add pieces and subtract them at will. So it was hard at the end to go back - and it would have been quite a job to add the floating point back in. In fact when we shipped the Apple II, I wrote a set of floating point routines, and I wrote an article on them for Byte Magazine, and I put them in the ROM of the Apple II. But I didn't go back and incorporate them into the BASIC. One of the reasons for that was because my BASIC was assembled by hand. If I discovered that - oh my gosh - I've got to add a few bytes to this one routine, I had to put a jump out to somewhere else, do what was needed, then jump back in. It was absolute memory locations. So it was extremely clumsy. I would shorten a routine and I would lose 5 bytes, but I wouldn't be able to put any more instructions in there. Everything had to stay exactly where it was, because I had pre-calculated: a branch ahead will be exactly 32 bytes. I'm sorry, I'm not going to go back rewrite my entire code, just because I can shrink it down to 31 bytes."

The result wasn't quite what Wozniak expected though: "It's too bad. I've thought back and that might be my one regret on the Apple II - because eventually we licensed Microsoft BASIC, which was floating point. They walked in the door, they had a BASIC for the 6502 microprocessor, and I was working on a floating point BASIC at the time, and I thought 'Oh my gosh, that'll free me up to work on other things.' So I was happy to go along with licensing it. Eventually all of our revenues were coming from the Apple II, which was shipped with BASIC, with Microsoft's BASIC. So they kind of had us in a tight place for re-negotiating when the license expired."

Even so, he recalls the original with fondness: "Our original Apple II came with Integer BASIC, by me, and you could then buy a board with Applesoft BASIC, to add that to it. And you would have both languages. Or you could buy the Apple II Plus, with Applesoft, and then buy a board that had Integer BASIC. So you still had both BASICs even in the same machine. I liked Integer BASIC. There's a lot of things you could do to save time, but you have to think mathematically. And most people would rather just have the easy world - so it wasn't good for most people. But for my type of person, Integer was great. I would want to do things with integers."

Allen Baum lends a hand

Wozniak wasn't alone when originally creating Integer BASIC, though: he had help from a friend who'd gone to MIT, Allen Baum. "He was an important person, in fact the only other person who wrote part of the code that was in the Apple II; a person that hung around with me and Steve Jobs, the only other person who knew computers when I was in high school, just one thing after another, and he had an influence on Apple products. Allen Baum sent me his Xerox copies of some books from MIT, from when he was going there, so I could read this exciting stuff - otherwise you wouldn't know where to find a book about computer language compilers, and language translators."

"So I had a little bit of experience knowing that they had to use stacks to reorient arithmetic equations/formulas. Our Hewlett-Packard calculators had the human reorient the equations in their own head, to what's called Reverse Polish Notation. So I knew that to make a computer work, you had to take an equation like 3 + 4 x 6, and realise that the 4 x 6 should be done first, and the way you do that is with some stacks. You convert it to Reverse Polish Notation, just like our calculators used."

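The 3 + 4 x 6 example can be worked through in code. The sketch below uses the well-known shunting-yard approach, which is an assumption on my part and not necessarily how Integer BASIC did it; the names `to_rpn` and `eval_rpn` are hypothetical, `*` stands in for `x`, and parentheses are left out to keep it short. One stack holds pending operators during conversion, and a second stack holds values during evaluation.

```python
PRECEDENCE = {"+": 1, "-": 1, "*": 2, "/": 2}


def to_rpn(tokens):
    """Convert infix tokens to Reverse Polish Notation using an operator stack."""
    output = []
    operators = []
    for token in tokens:
        if token in PRECEDENCE:
            # Pop operators that bind at least as tightly before pushing this one.
            while operators and PRECEDENCE[operators[-1]] >= PRECEDENCE[token]:
                output.append(operators.pop())
            operators.append(token)
        else:
            output.append(token)  # operands go straight to the output
    while operators:
        output.append(operators.pop())
    return output


def eval_rpn(rpn):
    """Evaluate an RPN token list with a value stack (integer arithmetic only)."""
    stack = []
    for token in rpn:
        if token in PRECEDENCE:
            right = stack.pop()
            left = stack.pop()
            if token == "+":
                stack.append(left + right)
            elif token == "-":
                stack.append(left - right)
            elif token == "*":
                stack.append(left * right)
            else:
                stack.append(left // right)  # integer division
        else:
            stack.append(int(token))
    return stack.pop()


rpn = to_rpn("3 + 4 * 6".split())
print(" ".join(rpn))  # prints 3 4 6 * +
print(eval_rpn(rpn))  # prints 27
```
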
This proved invaluable when dealing with how the language handled input: "I wrote this thing so that I had a syntax table written out as text, and I looked at the line that a user typed in, and it compared letters to my table, one at a time, worked its way through and found out if it was any valid statement. When they started typing numbers, was it in a place where you could type any number of digits? When it asked for a variable, were they typing something that fit the definition of a variable name? And I would determine if their statement was correct, just by scanning, character by character by character, and if it got hung up it said this one doesn't work. It would go try the next step in my syntax diagram of all of the BASIC statements that I had written."
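
A rough sketch of table-driven syntax checking, in Python, might look like the following. Everything here is hypothetical: the three patterns, the `<var>` and `<num>` placeholders, and the function names are invented for illustration, and it compares word by word where the description above is character by character. The shape is the same though: try one entry, give up as soon as it gets hung up, then try the next.

```python
# Hypothetical syntax table: each entry is a statement pattern written as text.
# <var> matches a single capital letter, <num> matches one or more digits.
SYNTAX_TABLE = [
    "PRINT <var>",
    "GOTO <num>",
    "LET <var> = <num>",
]


def matches(pattern: str, line: str) -> bool:
    """Check one pattern against a line, element by element."""
    pattern_parts = pattern.split()
    line_parts = line.split()
    if len(pattern_parts) != len(line_parts):
        return False
    for expected, actual in zip(pattern_parts, line_parts):
        if expected == "<var>":
            ok = len(actual) == 1 and "A" <= actual <= "Z"
        elif expected == "<num>":
            ok = actual.isdigit()
        else:
            ok = expected == actual
        if not ok:
            return False  # hung up here, so this pattern does not fit
    return True


def find_statement(line: str):
    """Try each table entry in turn; return the first that fits, else None."""
    for pattern in SYNTAX_TABLE:
        if matches(pattern, line):
            return pattern
    return None


print(find_statement("LET X = 10"))  # prints LET <var> = <num>
print(find_statement("PRINT 5"))     # prints None
```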

As had been proven by the success of the 101 BASIC Computer Games book, Wozniak knew games would be the key to success: "I named my BASIC 'Game Basic', from before I started writing it, because it had to play games." Next came the actual creation...

"What I would do is, I would scan across, and if someone typed a statement like PRINT, well PRINT is an operation that you do. So I would put a little token that stood for PRINT, on to the verb stack - it's a verb. And then after it said print, it might say 'A', which is a variable name. That would go on the noun stack. And then when you hit the RETURN at the end of the sentence, it would pull off the first verb, PRINT, and it would know: now you print the first thing that's on the noun stack. And then you toss them off of these little push down stacks, where it's first in last out. In my syntax chart, every time it had a word like PRINT, every place it had a comma, or a parentheses, each one of those turned into one token on its own, that I had to write a tiny routine for. I basically wound up with maybe a hundred of these little tokens for things you do. And each one has a tiny little process program that had to be written, so it was entirely modular."

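The verb stack and noun stack can be mocked up in a few lines of Python. This is a loose sketch under my own assumptions, not a reconstruction of Integer BASIC: the names `HANDLERS`, `do_print` and `run_line` are hypothetical, only PRINT is handled, and output is collected in a list so it is easy to inspect. The point is the modular shape, with one tiny routine per token.

```python
def do_print(nouns, variables, out):
    """Handler for the PRINT token: pop one variable name and emit its value."""
    name = nouns.pop()
    out.append(str(variables[name]))


# One tiny routine per token; only PRINT is sketched here.
HANDLERS = {"PRINT": do_print}


def run_line(line, variables):
    """Scan a statement, fill the verb and noun stacks, then execute at end of line."""
    verbs, nouns, out = [], [], []
    for word in line.split():
        if word in HANDLERS:
            verbs.append(word)  # operations go on the verb stack
        else:
            nouns.append(word)  # variable names go on the noun stack
    # Reaching the end of the line plays the role of pressing RETURN.
    while verbs:
        HANDLERS[verbs.pop()](nouns, variables, out)
    return out


print(run_line("PRINT A", {"A": 42}))  # prints ['42']
```

Adding another statement would mean writing one more small handler and registering it in the table, leaving the scanning loop untouched.
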
Graphics were also important, and it must be noted that earlier variants of BASIC were limited in this regard: "Obviously I made a very different variant of BASIC, because I had graphics and color. So I put in commands like 'COLOR = 7', or 'SET COLOR 7', I forget how I did it. And I would say HLINE for a horizontal line, and then give three numeric co-ordinates; the vertical position, plus where it starts and where it ends. So that was just due to the hardware I had being different."

Games become software - Little Brick Out

Was Little Brick Out, a version of Breakout designed specifically for the Apple II using Integer BASIC, the first program Wozniak made using the new language?

"I would say that was not the first program - I wrote little programs all along as I was developing the BASIC, to know what it could do with color. But, you're right, that was one of the first. Once I put in commands for color and graphics, the first thing I wrote was Breakout - we called it Breakout at first. Then I put in a command that said if you type in command-Z it would automatically start playing, but never miss the ball - it would fake people into thinking they were playing it perfectly."

"I did this on my apartment floor, in Cupertino. I just remember the green rug, and the wires going into the TV set, and I called Steve Jobs over and showed him how I could quickly make one little change in my BASIC program and the bricks would all change color. And I could make another change, and the paddle would get bigger, or I could move the score to a different place. We knew that games would never be the same - I was shaking, because games were software finally. Not only software, but in easy-to-program BASIC. With hardware at Atari it was a very hard job, now I sat down and in half an hour I wrote Breakout with tons of options. I changed the colors of things, I changed the speeds of balls, I changed angles of the ball. I wrote all of this and I realised, if I were doing it in hardware it would have taken me 10 years for what I did me in half an hour in software. That's when I called Steve over, and I was just shaking, because now I realised that games were going to be software. They were going to be a hundred times faster to develop."

Featuring vertical lines of green and orange blocks, Little Brick Out was a recreation of Breakout, which Wozniak had made back at Atari. "I designed everything using very few parts. I was known for that at Hewlett-Packard, so it wasn't like I broke some rules in doing Breakout for Atari. It was just a very nice, tight design - typical Woz design. But when I designed it for Atari, it was not software, it was hardware. So you had to wire signals from chips to chips, that go high, that go low, go high, go low. You'd have to combine them with clocks and counters, and get the pulses at the right time into the TV set, to show up with dots in the right place on the screen. A very, very hard job."

Next Wozniak revealed some of the other neat tricks that could be done on an Apple with his variant of the language. "I added commands in to control it. Two of the most useful things I added were still coming from the microprocessor being a little binary machine. It has memory, and memory has addresses, and I put two commands in, called PEEK and POKE. You take PEEK and a number, and it would peek out the data from that part of RAM and display it as a number. It was just a function call. You could also say POKE, and you could poke a number somewhere in memory, and that wound up very useful in controlling hardware devices that got created, and being able to do things with the BASIC so simply."
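
For readers who never used them, PEEK and POKE can be imitated with a block of bytes. The Python below is a simulation under my own assumptions, not Apple II code: the `memory` array, its 4K size and the address 768 are arbitrary choices for illustration. On real hardware some addresses are wired to devices, which is what made the pair so useful for controlling add-on boards.

```python
MEMORY_SIZE = 4096  # a simulated 4K of RAM; the size is an arbitrary choice here
memory = bytearray(MEMORY_SIZE)


def poke(address: int, value: int) -> None:
    """Store one byte (0-255) at the given address."""
    if not 0 <= value <= 255:
        raise ValueError("value must fit in one byte")
    memory[address] = value


def peek(address: int) -> int:
    """Return the byte stored at the given address as a number."""
    return memory[address]


poke(768, 7)      # 768 is an arbitrary example address
print(peek(768))  # prints 7
```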

Unreleased Apple II phone board

One of the most interesting hardware devices they invented never actually made it to market though: "We had a friend at Apple, John Draper, who was known as Captain Crunch, develop a phone board for the Apple II. We didn't put it out but, 12 years before modems would do this, it could listen to the phone line and know if it got a ring, or a busy, or a dial tone. Those sort of things. It would listen, and the way you told it to listen? Just in BASIC. You poked a number into memory that corresponded to the tone you were looking for, and then you sampled something to see if it came back true or false, with PEEK commands. So you had direct access to the hardware from BASIC. That was probably one of the most useful things ever in a BASIC."

The language offered a tremendous opportunity to the fledgling programmer, as Wozniak explained: "It was really a great language with a great purpose. People have to start somewhere, and I think that's the best starting language you could ever have. Machine language is very good if you get the chance to understand all the little registers and nuances, and instructions in a machine, and what shifting is all about. That's good stuff to learn too, but if you're going to start with a higher level language, you don't want to start at the top of structured languages. It's much better, young people, newcomers, like even myself, learn things a lot better when they learn what every little statement does, and then they have to combine them, and loop them around, and put them into a structure that actually does something, that plays. Then after a while you'll learn why you want a structured programming language - which BASIC is not. But that's a much better starting point."

"People came up with LOGO as a starting language in later years, and that was very good because you typed in a statement and would see something happen right away. In BASIC you sort of had to write a program for a while to actually run it and see it work. I liked the immediacy of LOGO, but here's the trouble. You got a little ways into LOGO, learning some higher level principles of creating lower level commands from the top down, and you couldn't take it very far. Nobody could really take it very far. In BASIC you could build from the bottom up, and take it to the moon. On our Apple II people were writing databases, word processors, I mean everything. An awful lot of it done in BASIC. A lot of games. So I admired the language. It was too bad that Hewlett-Packard and Digital Equipment Corporation varied widely on how they handled strings of characters. Since my BASIC in the end wasn't super compatible with the programs in books. But I actually thought the Hewlett-Packard way was better, but sometimes, but it was different. And that surprised me. I kind of wish BASIC had remained one language."

The future of beginner languages?

Given that it's been nearly 50 years since BASIC was created, does he believe it still has a place in the world? "I think it does. I still recommend it frequently, as the right way to start programming classes. Or at least a simpler language like BASIC - it's probably pretty hard to find the exact, plain old original BASIC in this day of graphics on computers. But yes, I do. Over my time I would write a lot of fun programs. Things like solving puzzles that I bought in stores - I would write programs to do it. And I got in the habit of writing them in three languages, and BASIC wasn't one of them. BASIC was a little more convoluted, but these other languages were also very simple to learn, scripting-type languages. One was LOGO, one was AppleScript by Apple, and one was HyperTalk, part of our HyperCard system. And every time the programs came out, [they were] more English reading, shorter and faster in HyperTalk. So there are differences in the way you can compare languages. But I didn't go back, in those days, and write those types of programs in BASIC. Partly because being an interpreted language it was slow for the sort of things I enjoyed doing, which was solving puzzles and the like."

"But as far as the introduction to computing... To me BASIC and FORTRAN are the same. Either one of those, that's the right way to start, and not a real super structured language where you have to learn so much about the structure. It's better to learn structure from the ground up, the basic atoms. Which is what BASIC is. To learn the structure from the ground up, once you've learned it you will apply it in a structured language. Then you're ready for it. But a lot of people think: Oh, you should just teach, and once you've reached that pinnacle of knowledge, that's the way we should teach programming. It's structured, and think out your data-types to begin with, and don't have this wild pioneer world. I just disagree with that, for beginners."

From the release of the Apple II with Integer BASIC, countless people had their first taste of programming. When Applesoft BASIC was later implemented, some such as Richard Garriott even went on to find success creating games such as Ultima. The launch of the Apple and proliferation of the BASIC language reshaped computers, videogames and how people perceived technology forever.
