Explore the what and whys of Unity's networking options and use NetTest (full source included) to put the latest version of the LLAPI through its paces.

Woah, with the attitude already. Look, I'm all about multiplayer. Have been for a long time. I've gone from being excited to get two people walking on the same ANSI map in LORD2 in the early 90s to hitting the 70,000 concurrent user mark during busy times in Growtopia.

In addition to writing my own junk, I regularly play with Unity and Unreal and am constantly trying to see how they can fit my developing style - mostly I need them because it's becoming a losing battle to try to maintain your own 3D engine if you also want to actually design and release games too.

LLAPI stands for Low Level Application Programming Interface. It's what Unity's HLAPI (High Level API) is built on. I guess both of those can be called part of UNet (Unity networking).

That might be ok for some projects, especially round-based networked games without too many players. When you are targeting MMO level network performance where a server may have to track stats of millions of players and simulate a world for weeks instead of minutes, the first rule is:

I don't want someone else's net code to even think about touching my gameobjects, I just want it to deliver reliable/unreliable packets that I write/read straight bytes to. That's it. I want to handle things like prediction, dead reckoning, and variable serialization, myself!

Both UDP and TCP are internet protocols built on top of IP.

TCP is a bidirectional, socket-based connection that ensures your data is correct and arrives in the right order.

It's like calling Jeff, he picks up the phone and you can both talk until one of you puts the phone down. Oh, and after you say something, you make sure Jeff understood you correctly each time, so that eats up a bit of time.

UDP is a stateless protocol where single packets get sent. You write your message on a rock and throw it at Jeff's window. While it gets there fast, it's possible you'll miss and Jeff will never see it. If dozens of people are throwing rocks, he needs to examine each one carefully to figure out which pile to put it with so the text is attributed to the right thrower. He might read the second rock you threw before the first one.

WebSockets are basically TCP with a special handshake that's done over HTTP first. To continue this already questionable analogy, it's as if you had to call Jeff's mom for permission, and then she gave you his cell number to call.

In theory WebSocket performance could be similar to TCP, but in practice they are much slower and less reliable. I don't really know why, but hey, that's what we get. I suspect this will improve later.

For games, UDP is nice because you can send some of your packets fast with no verification that they were received, and others (more important packets, like dying) with verification, which sort of makes those packets work like TCP.
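To make the "some packets verified, some not" idea concrete, here is a minimal sketch in Python. It is not how UNet does it internally. `LossyChannel`, `Receiver`, `send_unreliable` and `send_reliable` are all names invented for this example, and the "network" is just a seeded random number generator that drops packets.

```python
import random


class LossyChannel:
    """Stand-in for UDP: each packet is dropped with probability loss_rate."""

    def __init__(self, loss_rate, seed=1):
        self.loss_rate = loss_rate
        self.rng = random.Random(seed)  # seeded so runs are repeatable

    def deliver(self, packet):
        """Return the packet if it 'arrives', or None if it was lost."""
        return None if self.rng.random() < self.loss_rate else packet


class Receiver:
    """Keeps the first copy of each sequence number and ignores duplicates."""

    def __init__(self):
        self.by_seq = {}

    def on_packet(self, seq, payload):
        self.by_seq.setdefault(seq, payload)

    def in_order(self):
        """Payloads sorted by sequence number, whatever order they arrived in."""
        return [self.by_seq[seq] for seq in sorted(self.by_seq)]


def send_unreliable(channel, receiver, seq, payload):
    """Fire and forget: one attempt, and the sender never learns the outcome."""
    if channel.deliver((seq, payload)) is not None:
        receiver.on_packet(seq, payload)


def send_reliable(channel, receiver, seq, payload, max_tries=50):
    """Resend until an ack makes it back; returns the number of tries used."""
    for attempt in range(1, max_tries + 1):
        if channel.deliver((seq, payload)) is None:
            continue  # packet lost on the way out, try again
        receiver.on_packet(seq, payload)
        if channel.deliver(("ack", seq)) is not None:
            return attempt  # sender saw the ack, done
        # ack lost: the receiver has the data, but the sender has to resend
    raise TimeoutError(f"packet {seq} never acknowledged")


if __name__ == "__main__":
    channel = LossyChannel(loss_rate=0.3)
    receiver = Receiver()
    events = ["spawn", "move", "died"]
    tries = [send_reliable(channel, receiver, i, e) for i, e in enumerate(events)]
    print(receiver.in_order(), "tries per packet:", tries)
```

The reliable path costs extra round trips (the ack, plus any resends), which is the "eats up a bit of time" part of the phone call analogy. The unreliable path costs nothing extra but may silently lose the rock.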

That said, many games would probably be ok with a straight TCP stream as well, and having a connected state can make things easier, like easily applying SSL to everything and not worrying about unordered packets.

BECAUSE I CAN'T! Browsers won't allow the Unity plugin to run anymore, they just allow straight javascript/WebGL these days. It hurts. I mean, I wrote a cool multiplayer space taxi test for Unity using TCP sockets and now nobody can even play it.

It can read from both sockets (UDP) and WebSockets at the same time and route them so your game can let them play together. But how fast is it, and does it work? This brings us to the second rule of netcode:

Which finally brings us to the point of this post. NetTest is a little utility I wrote that can:

Run as a client, server, or both

If you connect as "Normal client" you can all chat with each other

Run with a GUI or as headless (meaning no GUI, which is what you want for a server usually)

Can open "Stress clients" for testing, a single instance can open 100+ client sockets

When stress mode is turned on, the stress clients will each send one random sentence per second. The server is set to re-broadcast it to ALL clients connected, so this generates a lot of traffic. (300 stress clients cause the server to generate 90K+ packets per second for example)

Server can give interesting stats about packets, payload vs Unity junk % in the packets, bandwidth and server speed statistics

Built in telnet server, any telnet client can log on and get statistics directly or issue commands

Tested on Windows, Linux, WebGL

Supports serializing variables and creating custom packet types, although the stress test only uses strings
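For anyone wondering what "writing straight bytes" for a custom packet type can look like, here is a rough Python sketch. The packet IDs and byte layouts are made up for illustration and are not NetTest's actual wire format.

```python
import struct

# Hypothetical packet type IDs, invented for this sketch.
PACKET_CHAT = 1
PACKET_MOVE = 2


def pack_chat(text):
    """Layout: [type: 1 byte][length: 2 bytes][UTF-8 text], little-endian."""
    data = text.encode("utf-8")
    return struct.pack("<BH", PACKET_CHAT, len(data)) + data


def pack_move(player_id, x, y):
    """Layout: [type: 1 byte][player id: 4 bytes][x: float32][y: float32]."""
    return struct.pack("<BIff", PACKET_MOVE, player_id, x, y)


def unpack(buffer):
    """Read the type byte first, then decode the rest according to it."""
    packet_type = buffer[0]
    if packet_type == PACKET_CHAT:
        (length,) = struct.unpack_from("<H", buffer, 1)
        return ("chat", buffer[3:3 + length].decode("utf-8"))
    if packet_type == PACKET_MOVE:
        player_id, x, y = struct.unpack_from("<Iff", buffer, 1)
        return ("move", player_id, x, y)
    raise ValueError(f"unknown packet type {packet_type}")


if __name__ == "__main__":
    wire = pack_chat("hello")
    print(len(wire), unpack(wire))
```

Leading with a type byte means the receiver can dispatch on one value before touching the rest of the buffer, and a fixed layout keeps the per-packet overhead down to a few bytes.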

Everything is set up to push performance. Things that might mess with the readings, like Unity's packet merging or modifying values based on dropped packets, are disabled. All processing is done as fast as possible, with no throttling or consideration for sleep cycles.

Keep in mind a real game server is also going to be doing tons of other things; this is doing almost nothing CPU-wise except processing packets. Testing things separately like this makes it easier to see issues and know what the baseline is.

These tests are presented 'as is'. Do your own tests using my Unity project if you really want exact info and to check the settings.

My Windows machine is an i7-5960X. The remote Linux box is similar and hosted on a different continent; I get a 200 ms ping to it.

All packets in NetTest are being sent over the 'reliable' channel. No tests involve p2p networking, only client<>server.

Settings:

config.AcksType = ConnectionAcksType.Acks32; //NOTE: Must match server or you'll get a CRC error on connection

config.PingTimeout = 4000; //NOTE: Must match server or you'll get a CRC error on connection

config.DisconnectTimeout = 20000;//NOTE: Must match server or you'll get a CRC error on connection

config.MaxSentMessageQueueSize = 30000;

config.MaxCombinedReliableMessageCount = 1;

config.MaxCombinedReliableMessageSize = 100; //in bytes

config.MinUpdateTimeout = 1;

config.OverflowDropThreshold = 100;

config.NetworkDropThreshold = 100;

config.SendDelay = 0;

Ok, here I'm curious how many bytes LLAPI is going to use with clients doing nothing.

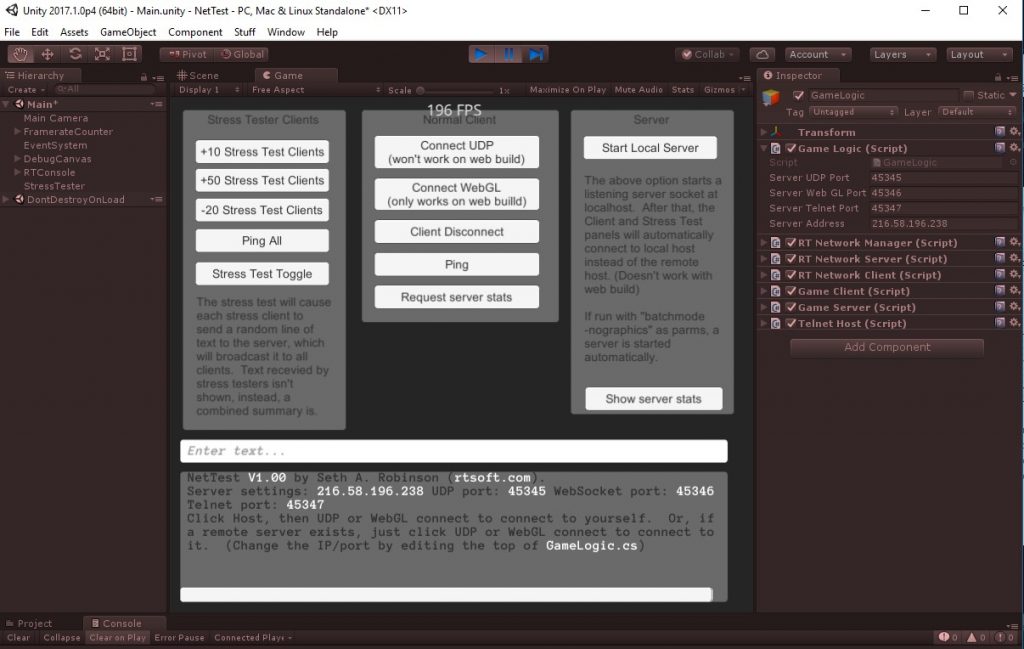

Server is set to localhost. I've got 4 NetTest's running - the one on the bottom right has had "Start local host" clicked, and the three others each have added 100 stress clients.

Results:

Around 30 bytes per stay alive packet per client. (23KB total over 7.74 seconds, sent 2.2 packets per client over 8 seconds, so roughly what I would expect for the 4000 ms ping timeout setting) Are stay alives sent over the reliable channel? Hmm.

Adding and removing test clients is a blocking operation and gets progressively slower as more are added - why does it get slower and cause my whole system to act a bit weird?

While closing the instances looks normal, the instances are actually spending an additional 30 seconds or so to close sockets that were opened

Side note: Having a client ping the host (at localhost) takes about 2 ms. (normally this would be bad, but given that it can't check until the next frame, then once more to get the answer, this seems to match the framerate decent enough)

I'm not going to worry about the socket slowness; this may be related to a Windows 10 resource thing. I'll launch with fewer clients in future tests to help though. The server itself has zero speed/blocking issues with actually connecting/disconnecting, so that's good.
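As a quick sanity check on the stay alive figure in the results above, the arithmetic can be redone from the rounded numbers quoted there. This assumes the 23KB is the total across all 300 stress clients and that KB means 1,000 bytes, neither of which is stated for certain.

```python
def bytes_per_packet(total_bytes, clients, packets_per_client):
    """Average size of one packet, given totals over the sample window."""
    return total_bytes / (clients * packets_per_client)


def seconds_between_packets(sample_seconds, packets_per_client):
    """Average gap between packets for a single client."""
    return sample_seconds / packets_per_client


if __name__ == "__main__":
    # 23KB total, 300 idle clients, 2.2 packets each over 7.74 seconds.
    print(f"{bytes_per_packet(23_000, 300, 2.2):.1f} bytes per packet")        # 34.8
    print(f"{seconds_between_packets(7.74, 2.2):.1f} seconds between packets")  # 3.5
```

With inputs this rounded, roughly 35 bytes and "around 30 bytes" are the same ballpark, and a packet every 3.5 seconds or so per client is in line with the 4000 ms ping timeout.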

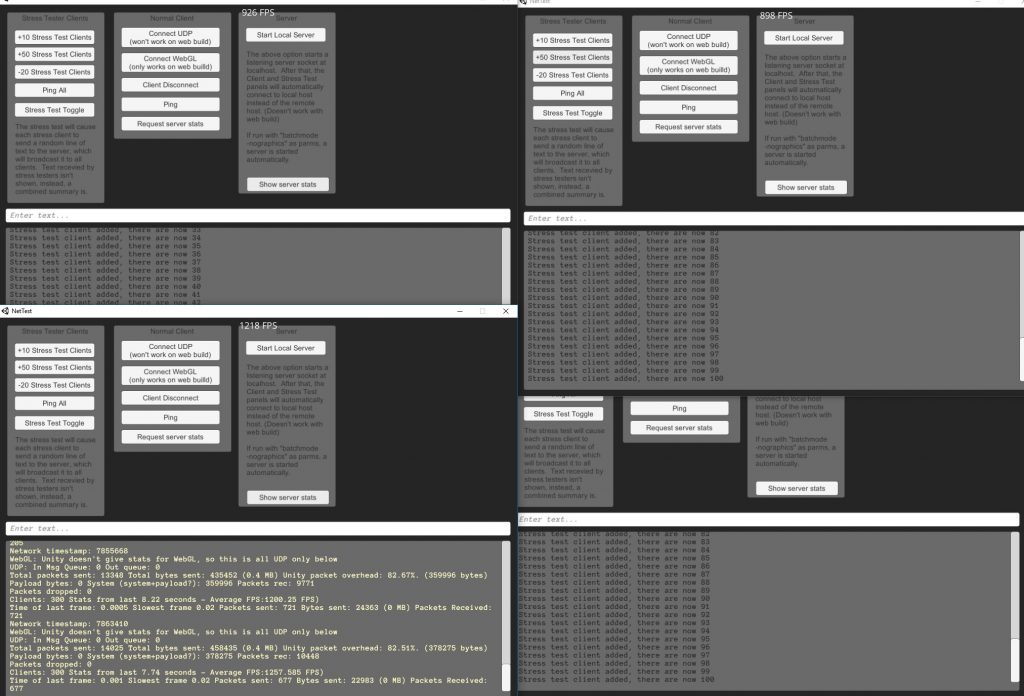

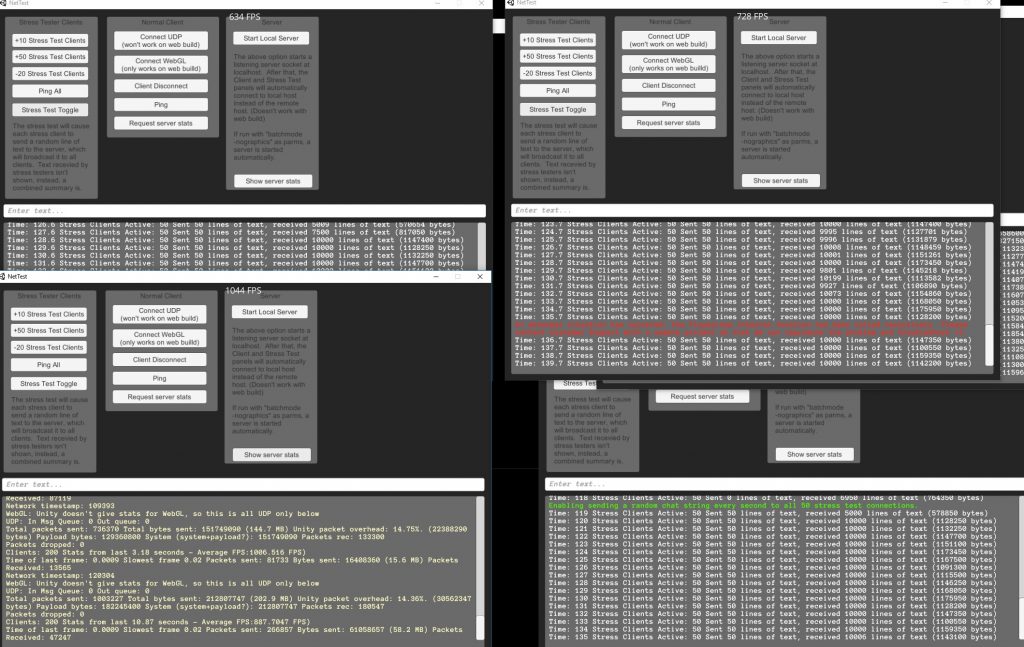

Ok, now we're getting serious. I have 5 instances - 4 have 50 stress clients, 1 is the server. (I could have run the server on one of the stress client instances, but meh)

First I enabled 50 clients in "stress mode" - this should generate 10,000 packets (50*200) per second. The server gets 50 lines of text from clients, then broadcasts each one to each of the 200 clients. Huh, stats show it only sending around 6,000 packets per second, not 10,000. However, we can see all the lines of text are being delivered.

That seemed fine so I upped it to all 200 clients doing the stress test - this should cause the server to send 40,000 packets a second (and receive 200 from the clients).

The stats show only 24,549 packets and 5.3 MB per second being sent. About 15% is unity packet overhead, seems like a lot but ok. Server FPS is good, slowest frame was 0.02 (20 ms) so not bad.

Is Unity combining packets even though I set config.MaxCombinedReliableMessageCount = 1? Oops, I bet that needs to be 0 to do no combining.
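The gap between expected and reported packets is easy to put numbers on. The sketch below just redoes the arithmetic from this test in Python; the function names are made up, and "messages per packet" is only an inference from the totals, not something UNet reports.

```python
def expected_broadcast_packets(senders, clients, messages_per_second=1):
    """Each sender's message is re-broadcast to every connected client."""
    return senders * clients * messages_per_second


def messages_per_packet(expected_messages, observed_packets):
    """Above 1.0 suggests several messages are riding in one packet."""
    return expected_messages / observed_packets


if __name__ == "__main__":
    expected = expected_broadcast_packets(senders=200, clients=200)
    observed = 24_549  # packets per second from the server stats
    print(expected, "messages per second expected")
    print(f"{messages_per_packet(expected, observed):.2f} messages per packet")
    print(f"{5_300_000 / observed:.0f} bytes per packet on average")
```

A ratio of about 1.6 messages per packet fits the suspicion that some combining is still going on, since every line of text was still delivered.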

I also notice 4,346 packets per second being received by the server. 200 for my messages, and I assume the rest are part of the "reliable guarantee" protocol they are doing for UDP where the clients need to confirm they received things ok. Oh, and UNet's keep alives too.

In the screenshot you can see an error appearing of "An abnormal situation has occurred: the PlayerLoop internal function has been called recursively... contact customer support". Uhh... I don't know what that's about. Did I screw up something with threads? It didn't happen on the server, but one of the four client instances.

I let it run at this rate for a while - a few clients were dropped by the server for no reason I could see.

Not entirely stable at these numbers I guess, but it's possible it would be if I ran it on multiple computers. It's a lot of data and ports.

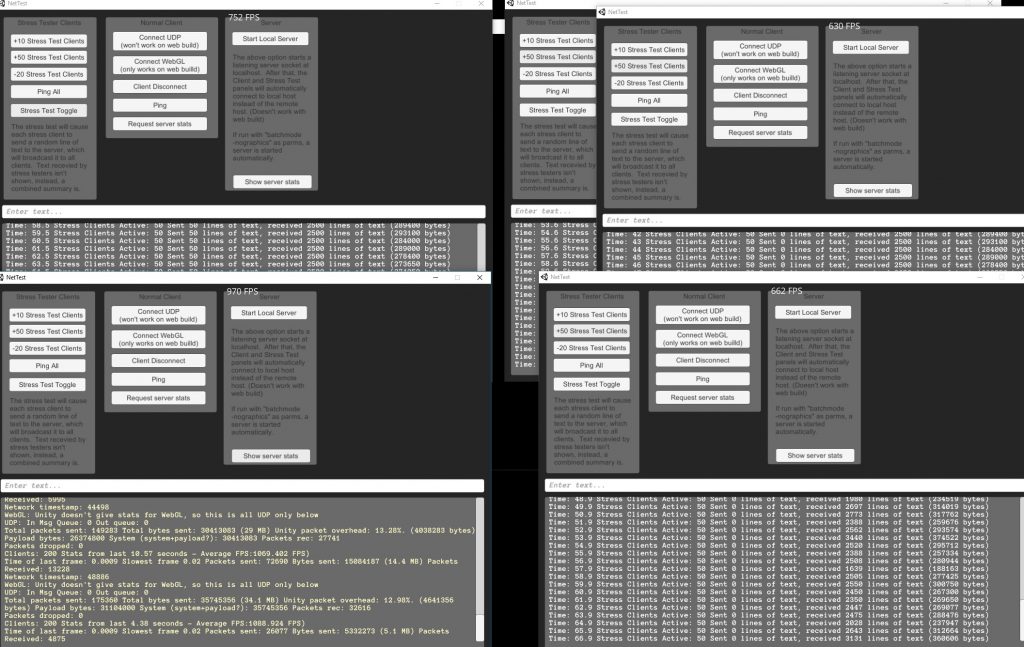

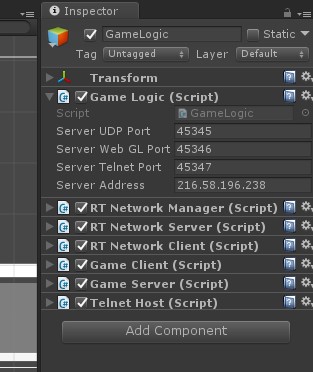

As nice as it is to be able to turn on both the server (localhost) and the client directly from the same app in the Unity editor to test stuff, eventually you'll probably want to get serious and host the server on Linux in a data center somewhere. This test lets us do that. I've changed the destination address to the remote IP. (You can click the GameLogic object to edit them; I was too lazy to make a real GUI way.)

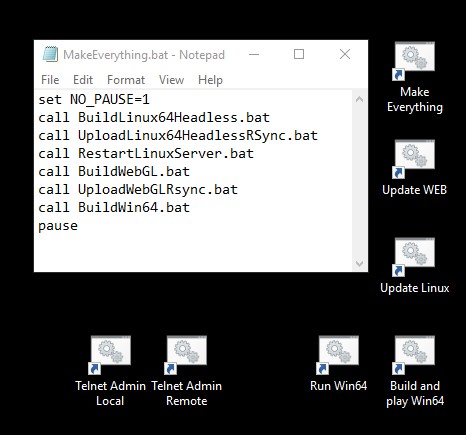

I have a .bat file that builds the Unity project (on Windows) and copies it to the remote server, then triggers a restart. It's run on Linux like this:

cd ~/NetTest

chmod +x ./linux64headless

./linux64headless -batchmode -nographics -logFile log.txt

Here is a screenshot of the 100 clients (using one instance of NetTest) connecting to the Linux server (which is also NetTest).

So let's try to digest this data.

NetTest Client: The 100 clients are self-reporting receiving about 1,150,000 bytes (1.15 MB) of actual payload data per second (10,000 lines of text). Zero packets in the send queue.

Windows system: Windows says 12.1 Mbps, so 1.5 MB per second. Close enough, who knows what else I'm streaming/downloading.

Linux system: iotop shows the server sending 10 Mb per second (1.25 MB per second), so that seems right.

Linux NetTest: (using telnet) it's reporting it's sending 1.22 MB per second (only 3,458 packets per second, yeah, definitely some packet merging happening) The total stats went negative, I ran this a while, uh... I guess I need to be using larger numbers, probably rolled over.

Linux: Server is running at 1100 FPS, so zero problems with speed. You're probably thinking "Hey, it's headless, what do you mean frames per second? What frames?", ok, fine, it's really measuring "update()'s per second", but you know what I mean. Slowest frame out of the 90 second sample time was 0.0137 (13 ms)

I don't have it in the screenshot, but top shows the server is not too power hungry, its process seems to use 3% (no load) to 15% (spamming tons of packets). Keep in mind we aren't actually DOING anything game related yet, but for just the networking, that isn't bad

I tried 200X200 (4x the total bandwidth & packets, so 5 MB a second) but the outgoing packet queue started to fill up and I started dropping connections.
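About those total stats going negative: if the running byte total lives in a signed 32-bit integer (an assumption, the source only says it "probably rolled over"), it does not take long to wrap at these rates. A small Python sketch of the wraparound and the time it takes:

```python
INT32_MAX = 2**31 - 1


def wrap_int32(value):
    """Reinterpret an integer as a signed 32-bit value, the way an unchecked C# int wraps."""
    return (value + 2**31) % 2**32 - 2**31


def seconds_until_overflow(bytes_per_second):
    """How long a byte counter starting at zero lasts before passing INT32_MAX."""
    return INT32_MAX / bytes_per_second


if __name__ == "__main__":
    print(wrap_int32(INT32_MAX + 1))  # wraps around to the most negative value
    minutes = seconds_until_overflow(1_220_000) / 60
    print(f"about {minutes:.0f} minutes at 1.22 MB per second")
```

At 1.22 MB per second that works out to roughly half an hour, so a 64-bit counter (a `long` in C#) is the safer choice for totals on a server meant to run for weeks.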

I'm not going to bother making pics and somehow this post already got way out of control, but here is what seemed to be the case when I played with this earlier:

No WebSocket stats are included in the stuff UNet reports - "the function called has not been supported for web sockets communication" when using things like NetworkTransport.GetOutgoingFullBytesCountForHost on it

WebGL/WebSockets be slow. Real slow. If I pushed the bandwidth to over 18KB per second (per Chrome tab), packets started to get backed up. I don't know if things are getting throttled by the browser or what. The server eventually freaks out when the send queue gets too big and starts throwing resource errors.

When creating stress test clients, a single WebGL tab seems limited to 14 WebSockets max (Chrome at least)

I suspect there could be issues with a naughty WebSocket filling up the queue and not acknowledging what it receives. Your game probably needs to carefully monitor packets sent/received so it can quickly disconnect people who abuse the connection.

WebSocket queue/etc issues don't affect or slow down normal connections, so that's something

Mixing WebSocket and normal socket (UDP) clients works fine, which is a very handy thing to be able to do.
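On the "naughty WebSocket" worry above, one simple approach is to count reliable packets sent versus acknowledged per connection and drop anyone whose backlog gets out of hand. This is only a sketch of the bookkeeping in Python; `ConnectionBacklog`, `find_abusers` and the threshold are hypothetical, and none of it is wired to real UNet calls.

```python
class ConnectionBacklog:
    """Tracks reliable packets sent vs acknowledged for one connection."""

    def __init__(self, max_unacked):
        self.max_unacked = max_unacked
        self.sent = 0
        self.acked = 0

    def on_sent(self, count=1):
        self.sent += count

    def on_acked(self, count=1):
        # Never count more acks than packets actually sent.
        self.acked = min(self.sent, self.acked + count)

    @property
    def unacked(self):
        return self.sent - self.acked

    def should_disconnect(self):
        return self.unacked > self.max_unacked


def find_abusers(connections):
    """Return the ids of connections whose backlog is over their limit."""
    return [cid for cid, backlog in connections.items() if backlog.should_disconnect()]


if __name__ == "__main__":
    connections = {1: ConnectionBacklog(100), 2: ConnectionBacklog(100)}
    connections[1].on_sent(500)
    connections[1].on_acked(490)  # healthy client, keeps up with acks
    connections[2].on_sent(500)
    connections[2].on_acked(10)   # stopped acknowledging
    print(find_abusers(connections))
```

Checking this once per update and disconnecting early keeps one stalled client from filling the send queue to the point where the server starts throwing resource errors.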

All in all, I think directly using LLAPI in Unity 2017.1+ is promising. Previously, I assumed I'd need to write my own C++ server to get decent socket performance (that's how I did the Unity multiplayer space taxi demo) but now I don't think so, if you're careful about C#'s memory stuff. Issues/comments:

How is it ok that Unity's text widget/scroller can only show like 100 lines of text (65,535 vert buffer errors if you have too much text, even though most of it is off screen)? Maybe I'm doing something wrong

Unity's Linux builds don't seem to shut down cleanly if you do a pkill/SIGTERM. OnApplicationQuit() does not get run, which is very bad for Linux servers that could restart processes for reboots or upgrades. (If it's a dedicated server you can work around it, but still!)

WebGL builds can't do GetHostAddresses() so I had to type the real IP in for it to connect (I should try switching to the experimental 4.6 net libs)

To my great surprise, my telnet host continued to run and send answers even after stopping the game in the editor. How could that game object still exist?! Threads and references are confusing. I fixed it by properly closing the telnet host threads on exit.

The Unity linux headless build worked perfectly on my CentOS 7.4 dedicated server, didn't have to change a single thing

It's weird that you'll get CRC errors if PingTimeout/AcksType/DisconnectTimeout don't perfectly match on the client and server. I wonder why there is not an option to just let the server set it on the client during the initial handshake as you want to be able to tweak those things easily server-side. I guess the client could grab that data via HTTP or something before the real connect, but meh.
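I don't know how UNet actually computes its check, but one plausible way a settings mismatch turns into a "CRC error" is that both sides checksum their config and compare the results during the handshake. The Python sketch below only illustrates that idea; `config_checksum` and the way the settings are flattened into a string are invented for the example.

```python
import zlib


def config_checksum(settings):
    """CRC32 over the settings in a fixed key order, so both sides hash the same text."""
    canonical = ";".join(f"{key}={settings[key]}" for key in sorted(settings))
    return zlib.crc32(canonical.encode("ascii"))


if __name__ == "__main__":
    server = {"AcksType": "Acks32", "PingTimeout": 4000, "DisconnectTimeout": 20000}
    matching_client = dict(server)
    tweaked_client = dict(server, PingTimeout=2000)

    print(config_checksum(server) == config_checksum(matching_client))  # True
    print(config_checksum(server) == config_checksum(tweaked_client))   # False
```

Under that assumption, changing a single timeout on one side changes the checksum and the connection is refused, which is why pulling these values from the server before the real connect is tempting.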

Note: I left in my .bat files (don't mock the lowly batch file!), thought they may be useful to somebody. They allow you to build everything for linux/win/webgl, copy to the remote servers and restart the remote server with a single click. Nothing will work without tweaking (they assume you have ssh, rsync etc working from a DOS prompt) but you could probably figure it out.

Source is released under the "do whatever, but be cool and give me an attribution if you use it in something big" license.
