Trending

Opinion: How will Project 2025 impact game developers?

The Heritage Foundation's manifesto for the possible next administration could do great harm to many, including large portions of the game development community.

Featured Blog | This community-written post highlights the best of what the game industry has to offer. Read more like it on the Game Developer Blogs or learn how to Submit Your Own Blog Post

I’m a big fan of artificial intelligence, and recently tried creating a simple game with adaptive enemy AI driven by a genetic algorithm. This blog entry discusses my approach to implementing the GA, as well as some lessons learned.

I’m a big fan of artificial intelligence, and I recently tried creating a simple game with adaptive enemy AI driven by a genetic algorithm. The result is invAIders, an Xbox Live Indie Game that is part Shoot’em Up (SHMUP), and part artificial intelligence experiment. The game pits the player against generations of enemy AI. When the player beats a generation, the genetic algorithm ranks the AI and uses them to create a new generation of enemy to fight the player. This blog entry discusses my approach to implementing the genetic algorithm, as well as some lessons learned in trying to use this technique to create a compelling enemy.

invAIders screenshot

Genetic Algorithm Overview

A genetic algorithm, or GA, is simply an implementation of Darwinian principles (“survival of the fittest”) as a search technique. From this perspective, life or existence is simply a problem solving exercise to determine the most survivable life form (best not to dwell on that too much). Bottom line, each distinct life form represents a possible solution, and the performance of these solutions are compared against each other. The most successful solutions propagate themselves to the next generation, while the unsuccessful solutions do not.

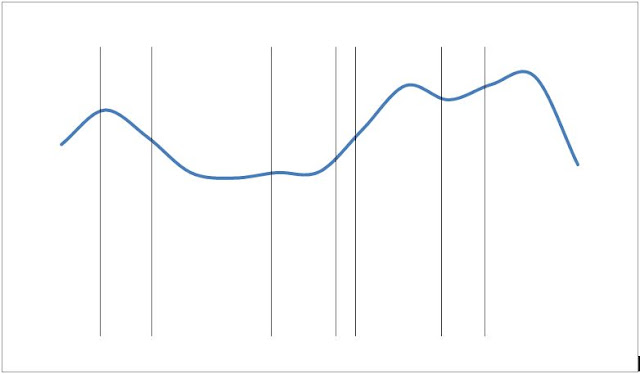

Consider the chart below. The vertical lines represent individuals within a population, and the curved line represents how well any possible individual might perform. This line is known as the fitness function. The intersection of the individuals with the fitness function represents their fitness value. Of course, when you use a GA, it’s usually because the true shape of the fitness function is unknown. We can only guess at it by examining the fitness values produced by the individuals. Each individual performs at a certain level on the fitness function, and those who perform best are selected to serve as the basis for future solutions. Ideally, this selective “breeding” of successful individuals creates localized searches of the solutions space, ultimately arriving at “The Best Solution”.

Individuals plotted against a fitness function

Now, because of the somewhat random nature of GAs, I am obliged to point out that this search technique is not complete. That is to say, a genetic algorithm might not ever get to “The Best Solution”. In fact, a GA is not guaranteed to even explore every location within a given solution space. But in spite of this, genetic algorithms are pretty useful in dealing with complex multivariate problems. As long as you can calculate a fitness value of a possible solution, the GA provides a methodology for searching the solutions space. Plus, they’re fun!

I should really note that this is only a cursory look at GAs, as I am only a cursory geneticist. Much more in depth information can be found throughout the internets via the magic of the Google.

For invAIders, I used the following general process to implement my genetic algorithm.

Define the possible solutions

Create an initial generation

Calculate relative fitness values

Create a new generation

GOTO LINE 3 (Calculate relative fitness values)

Define the Possible Solutions

In this preparatory step, we dissect the possible values which the solution could take and reduce them to their basic parts. Very simply, this is so that individual solution values can be generated procedurally at run time.

For invAIders, the solutions are the AI for the enemy ships. I broke the AI down into two main portions: the spawning parameters, and the orders.

The spawning parameters determine where the enemy shows up. They all come from the top of the screen, but they show up in different locations, and spawn at different speeds. Further, the various ships in a wave (all from the same AI) might be offset slightly, so that each ship appears a little to the left or the right of the preceding ship. All of these values are easily created via a combination of random numbers and parameters.

The same thing is done for the order portion of the AI. To make the ships act in interesting and varied ways, I made the orders out of lists of behaviors. The behaviors simply determine how the enemy will move and shoot. Movement has three possible values, turn left, turn right, or go straight. I added in one additional metavalue for variety – steer toward the player. Similarly, shooting consists of shooting straight (relative to the direction of the enemy), shooting down to the bottom of the screen, or shooting at the player. Each behavior has an associated time value, and once that time is up, the next behavior goes into effect. To create a complete order, approximately 40 of these behaviors are strung together in a list. As with spawning, each of these factors are easily generated using random numbers and parameters.

Create an Initial Generation

Now that the solution space is established, the next step is to create the initial generation. One approach would be to create “hardcoded” seed AI to serve as the initial generation. However, in a fit of “procedural generation” zealotry, I opted to allow the first generation in invAIders to be completely randomly generated at run time. All of the new AI are color coded with a green cockpit, so that they can be easily identified.

After some playtest feedback, I did make a concession and create two “Starter AI” to serve as the first two waves. These AI are mostly randomly generated, though I set specific values for their spawning rate and spawn location offset. The intent was to allow new players to fight AI that was “hobbled”, based on values that I assumed (correctly or not) would make them easier to deal with.

This brings up an interesting point of genetic algorithms. In my implementation, the random generation process for new AI has access to the entire solution space. That is to say, the random generation process could theoretically create any AI, including “The Best Solution”. Now, you could very easily create a generation process in which all values of the solution space are not initially available. To do so, however, you would probably need to know what are good values in your solution, and what are not good. As I said above, I don’t feel that I know exactly what would be good values and what would be bad values. Play testing helped refine the parameter selection process, but part of my goal was to create an opponent that could surprise me, and to that end, I tried to limit how much of my judgment I imposed upon its behavior (too much). Just like how I raise my children (Just joking! Sort of.)

Calculate Relative Fitness Values

Once a generation of individual AI specimen has been created, the next step is to test them against a fitness function. Enter the player. I like to consider that in invAIders, the player is the problem, and the AI is the solution. The fitness test is seeing how well the AI solves the “player problem”. To that end, invAIders measures the fitness value of the AI by how many times a particular AI specimen (or wave) kills the player, and how long, on average, each ship survives. The AIs that kill the player more and last longer are considered to be better solutions.

This creates an interesting reversal in performance assessment. At the end of a generation, the scores of interest are really the scores of the enemy, not of the player. After each generation, we can see the immediate relative performance of the AI in that generation. Each individual AI also has a history associated with it, and their relative performances are compare over their entire history. This provides a bit of added stability to the fitness values, so a new “usurper” AI won’t get just lucky and automatically take top place. Consistency, ideally, is rewarded over luck.

Create a New Generation

Once all the AI have been “racked and stacked” by the fitness function, it’s time to create a new generation of AI. There’s generally three methods of propagating successful specimen: Selection, Mutation, and Cross Over. invAIders uses all three.

Selection is simply selecting the best performing individuals from a population. This is a pretty easy process: simply reintroduce the top performing individuals into the next generation. As mentioned, my implementation uses cumulative rankings, so it is possible that the top performing AI in a given generation may not necessarily be the top overall AI. Again, consistency over luck. I selected the three top AI overall. In the game, I’ve labeled them as “Dominant”, and color code them with red cockpits so that player might have an idea of who they are fighting (and to intimidate them).

Mutation provides a slight twist on Selection. A top performing individual is reintroduced into the new generation, but with a bit of alteration. The level of alteration, or mutation, is up to the genetic algorithm designer. The trick is to balance “innovation” and “proven performance”. A 100% mutation fails to leverage the benefits of the parent. A 0% mutation is the same as selection. I chose to have a random mutation level, where a randomly selected number of things would be changed between parent and child. The mutation process involves creating a copy of the parent AI, and then changing some of the factors that define it. For example, a mutation might replace some of the parent’s orders with new ones, or change some of the spawning values. The intent is to explore the solution space from the vantage point of successful parents. In my GA, the new altered values are generated with the same parameters as when they were newly created. These mutant AI ships are listed as “Mutant”, and have orange cockpits. Each generation has three mutants, two based on the top performing AI, and one based on the second to top performing AI.

Crossovers involve taking two separate individuals and creating a hybrid that uses bits of both. In invAIders, each generation has one crossover AI. This crossover is created from the orders of the top performing AI, and the spawning settings of a randomly selected AI. I have to confess that this seemed to me to be the least productive propagation method from the search perspective, which is weird, because it seems to have worked OK IRL for a while now. I’ve color coded the crossover AI with yellow cockpits and ingeniously labeled them “Crossbreed”

Lastly, I round out the generation with three new, randomly created AI, for a total of ten AI in each generation. Once this is done, the cycle starts anew, and the AI is tested against the player problem again.

Observations

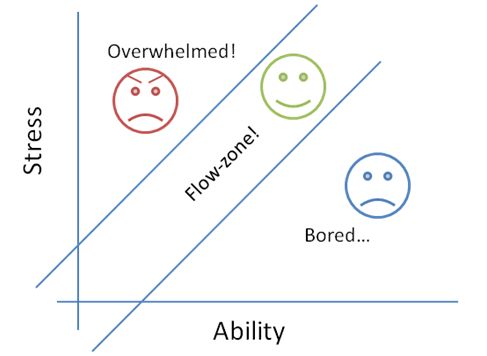

I think that using a genetic algorithm introduces some very interesting balance and game play dynamics. This can be seen in the context of the Flow concept, popularized by Jenova Chen. I like to think of Flow as basically an expression of the Yerkes-Dodson theory of optimal stress expressed over time.

My Interpretation of Flow as a Function of Stress and Ability

The goal of the video game should be to match the players ability to the challenge presented, to put them into the “Flow-zone”. This can be done by increasing the power level of the enemies as the power of the player increases. The problem with a genetic algorithm is that if you artificially increase the power of an AI outside of the genetic algorithm construct (say, by giving it more hit points), then you could end up wrecking the nature of the fitness function and somewhat invalidate the previous search results (in other words, the genetic history). The result would be that the GA produces nothing better than a random AI.

Ideally, the genetic algorithm would start with a poor performing AI, and then work its way up to a high performing AI (see the discussion above about Creating an Initial Generation). As mentioned though, trying to dictate and control the fitness of a given specimen presupposes that you already know what “The Best Solution” is. And if that’s the case, why muck around with a GA?

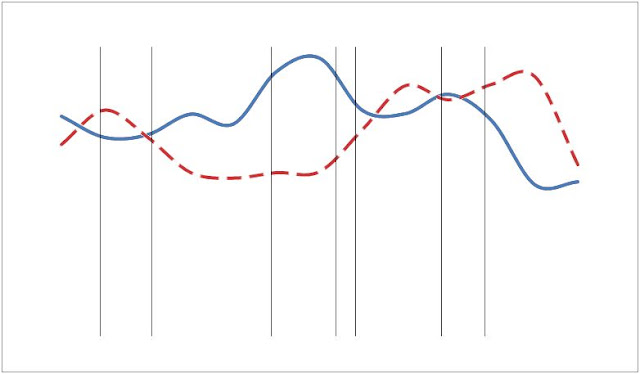

This line of thought vexed me until I applied a different perspective on stress. In addition to the simple mismatch in abilities, stress can also be a function of change. Humans are pretty good at pattern recognition, and fairly good at memorization. We also tend to gravitate towards “comfort zones” of the familiar. If the player fights the same AI again and again, no matter how good the AI is (within reason), they will figure out how to beat it. The beauty of GAs is that once the player learns the pattern and figures out how to beat that dominant AI, that AI goes away, and a new one takes its place. The player has to constantly learn and change to adapt to the new enemy behavior. Its less a matter of getting the right power-up, and more a matter of adapting quickly to a changing enemy.

Now, the same applies to the human player end of the equation. Again, many games feature a string of increasingly powerful weapons to help the player maintain flow. I was concerned that doing so would overwhelmingly disrupt the fitness function. The player might become too powerful, wrecking the GAs ability to adapt, and thus dropping the Stress to Ability ratio into the boredom area. I wanted every AI to be on relatively equal footing against the player, regardless of what power-ups the player might have. The differentiator on the fitness function should be the player’s behavior, not what the player has accumulated. To that end, most of the power ups in invAIders are really “power-sideways-es”. For example, the main weapon has a heat limit, and like many games, if you overheat, you have to wait for it too cool down. Now, you can get a number of multi-shot power-ups, but they also heat up quicker. They are not necessarily better weapons, just different ways to use available resources. The fitness function remains relatively constant.

Well, as constant as a human can be. Humans have an annoying habit of learning. As the player adapts to the changing patterns of AI presented, they are likely to learn and change their own behavior. Accordingly the fitness function results change as well. But ideally, the player is learning the patterns of presented by the AI, and the AI, is changing those patterns in response to the player. Hopefully, this creates a nice feedback loop that helps keep the play in the Flow-zone.

The upshot is that if the fitness function changes, then “The Best Solution” is also changing. To some extent, this realization relieves my fear of “The Best Solution” being created in the new AI random generation process. As long as the stress presented by this AI is not insurmountable, the player will eventually learn to beat it. The fitness function will then change, and something else will become “The Best Solution”. For the genetic algorithm, this is where flexibility and diversity comes into play. The trick is to keep the have a sufficient mix of new and old so that when the player masters one particular artifact of an AI presentation, the GA can substitute in something new.

Changing Fitness Functions

As the player response to the AI adjusts, the flexibility presented by the three new AI introduced each generation increases the chances of finding a solution that the player will not be ready for. This is a very tangible representation of the notion of strength through diversity.

So Does It Work?

Well, now, that is the question, isn’t it? I’d say the jury is still out. I’ll leave this game play video over here so you can take a look. I will say that I believe it accomplishes at least one objective, in that even I am surprised by what the AI does. Which makes me happy. I did make a concession and add in different difficulty levels, acknowledging that players have different innate skill levels, and the genetic algorithm might not be able to compensate for that. The difference between levels is the number of enemy per AI wave: 10 for easy, 20 for normal, and 30 for hard. But the mechanics of the GA remain intact.

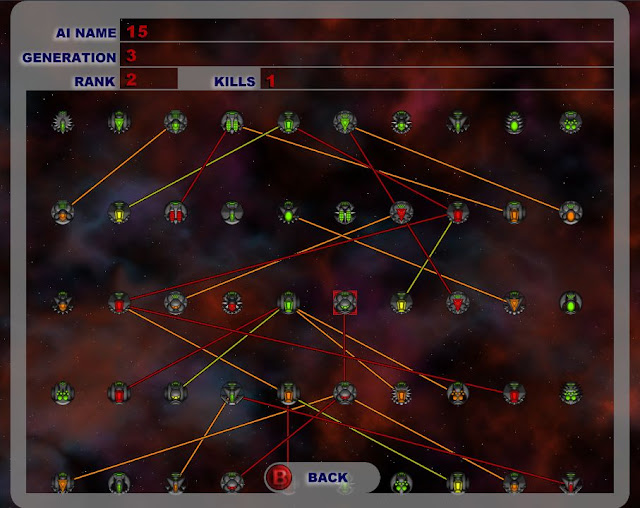

To some extent, the effectiveness of the AI evolution is evidenced in the Genealogy screen, which is accessible at the end of each generation. This display lists the AI one generation per row, and draws in links to show parent / child relationships. The rows are organized so that the highest ranking AI appear in the middle of the row.

The presence of new AI (color coded green) in the middle of the top rows shows that the genetic algorithm is searching for an effective solution, and is rapidly cycling though ineffective ones. Towards the bottom of the chart we begin to see that the AI hones in on effective solutions, and the top ranked AI return as Dominant specimen.

Genealogy Screen

One issue with this implementation is that genetic algorithms are typically slow, long lasting search routines, which iterate over hundreds or thousands of generations, and usually have hundreds or more competing individuals. For sanity sake, invAIders has 10 individuals per generations, and a game might last three to seven generations. Some of the feedback directly relating to the AI suggests this abbreviation is still not enough, and players want to see the results even sooner...

A possible solution is to network the algorithm, which would likely speed up the process significantly. The game is currently an Xbox Live Indie Game, which makes for easy development and deployment, but doesn’t allow for network server support. If I were to put this game out for PC, I would probably want to include some server component which might allow a master database of AI performance. Effectively, it would “cloud-source” (ewww…) the fitness function, and might provide some interesting insight into the system’s macro behaviors. But there are a number added considerations down that path…

A different alternative is to implement this system into a game in which the players regularly kill thousands of the enemy. I mistakenly believed this game would do that, but may have not implemented that part of my own concept properly. It may be possible to make each ship a unique individual, thus shortening the algorithm cycle, but it seems there would be a danger that the resulting generational macro behavior simply look chaotic. Maybe something to consider for future games...

Anyway, thanks for checking this out and reading all way to the bottom! Hopefully it was interesting, maybe even a little informative. :)

Read more about:

Featured BlogsYou May Also Like